Math Is Fun Forum

#1 Re: This is Cool » Miscellany » Today 00:06:27

2510) Glaucoma

Gist

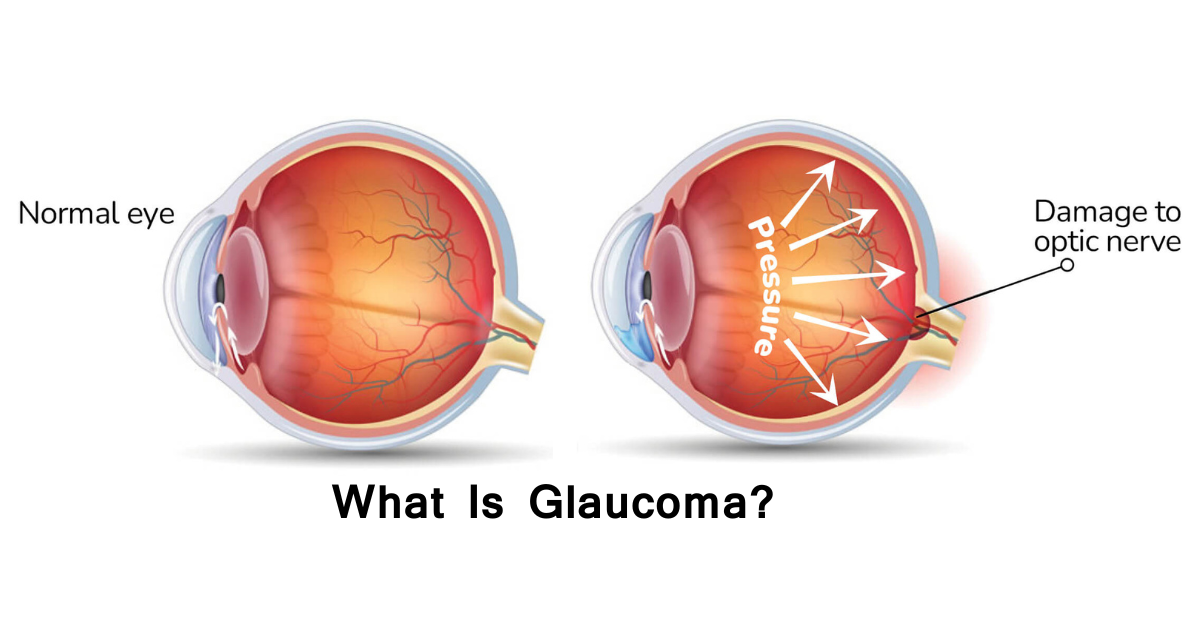

Glaucoma is a group of eye conditions that damage the optic nerve, often due to high eye pressure, potentially causing permanent blindness. Known as the "silent thief of sight," it usually has no early symptoms until significant vision loss occurs. Treatments include medicated eye drops, laser therapy, or surgery to lower pressure.

Glaucoma results from damage to the optic nerve. This damage is often linked to increased pressure inside the eye (intraocular pressure, or IOP) caused by fluid buildup, but it can also occur at normal pressure because of poor blood flow or other factors. Genetics, age, eye injury, and conditions such as diabetes are significant risk factors. The pressure rises when the eye's drainage system, the trabecular meshwork, doesn't work correctly, so fluid (aqueous humor) builds up, presses on the optic nerve, and impairs vision.

Summary

Your eye constantly makes aqueous humor. As new aqueous flows into your eye, the same amount should drain out. The fluid drains out through an area called the drainage angle. This process keeps pressure in the eye (called intraocular pressure or IOP) stable. But if the drainage angle is not working properly, fluid builds up. Pressure inside the eye rises, damaging the optic nerve.

The optic nerve is made of more than a million tiny nerve fibers. It is like an electric cable made up of many small wires. As these nerve fibers die, you will develop blind spots in your vision. You may not notice these blind spots until most of your optic nerve fibers have died. If all of the fibers die, you will become blind.

How Do You Get Glaucoma?

There are two major types of glaucoma.

Open-angle glaucoma

This is the most common type of glaucoma. It develops gradually because the eye does not drain fluid as well as it should (like a clogged drain). As a result, eye pressure builds and starts to damage the optic nerve. This type of glaucoma is painless and causes no vision changes at first.

Some people have optic nerves that are sensitive even to normal eye pressure, which puts them at higher-than-normal risk of glaucoma. For them, regular eye exams are important to find early signs of optic nerve damage.

Angle-closure glaucoma (also called “closed-angle glaucoma” or “narrow-angle glaucoma”)

This type happens when someone’s iris is very close to the drainage angle in their eye. The iris can end up blocking the drainage angle. You can think of it like a piece of paper sliding over a sink drain. When the drainage angle gets completely blocked, eye pressure rises very quickly. This is called an acute attack. It is a true eye emergency, and you should call your ophthalmologist right away or you might go blind.

Here are the signs of an acute angle-closure glaucoma attack:

* Your vision is suddenly blurry

* You have severe eye pain

* You have a headache

* You feel sick to your stomach (nausea)

* You throw up (vomit)

* You see rainbow-colored rings or halos around lights

Many people with angle-closure glaucoma develop it slowly. This is called chronic angle-closure glaucoma. There are no symptoms at first, so they don’t know they have it until the damage is severe or they have an attack.

Angle-closure glaucoma can cause blindness if not treated right away.

Details

Glaucoma is a group of eye diseases that can damage the optic nerve, which transmits visual information from the eye to the brain. Left untreated, glaucoma may cause vision loss. It has been called the "silent thief of sight" because the loss of vision usually occurs slowly over a long period of time. A major risk factor for glaucoma is increased pressure within the eye, known as intraocular pressure (IOP). Glaucoma is also associated with old age, a family history of glaucoma, certain medical conditions, and the use of some medications.[6] The word glaucoma comes from the Ancient Greek γλαυκός (glaukós), meaning 'gleaming, blue-green, gray'.

Of the different types of glaucoma, the most common are open-angle glaucoma and closed-angle glaucoma. Inside the eye, a liquid called aqueous humor, produced by the ciliary body, helps maintain the eye's shape and provides nutrients. The aqueous humor normally drains through the trabecular meshwork. In open-angle glaucoma, this drainage is impeded, so aqueous humor accumulates in the anterior chamber and the pressure inside the eye increases. This elevated pressure can reduce vascular perfusion in the vitreous chamber and can damage the optic nerve and peripheral glial tissues. In closed-angle glaucoma, the drainage of the eye becomes suddenly blocked, leading to a rapid increase in intraocular pressure. This may cause intense eye pain, blurred vision, and nausea. Closed-angle glaucoma is an emergency requiring immediate attention.

If treated early, the progression of glaucoma may be slowed or even stopped. Regular eye examinations, especially if the person is over 40 or has a family history of glaucoma, are essential for early detection. Treatment typically includes prescription of eye drops, medication, laser treatment or surgery. The goal of these treatments is to decrease eye pressure.

Glaucoma is a leading cause of blindness in African Americans, Hispanic Americans, and Asians. Its incidence rises with age, to more than eight percent of Americans over the age of eighty, and closed-angle glaucoma is more common in women.

Additional Information

Glaucoma refers to many diseases involving eye pressure increases that lead to permanent vision loss and blindness. This condition can happen for many reasons, but most are treatable. Knowing the risk factors and getting regular eye exams may help you avoid vision loss.

Overview:

What is glaucoma?

Glaucoma is an umbrella term for eye diseases that make pressure build up inside your eyeball, which can damage delicate, critical parts at the back of your eye. Most of these diseases are progressive, which means they gradually get worse. As they do, they can eventually cause permanent vision loss and blindness. In fact, glaucoma is the second-leading cause of blindness worldwide.

Learning that you have glaucoma or that you’re at risk for it can be hard to process. For most people, vision is the sense they rely on most in their daily routine. It can feel scary to imagine trying to adapt to and live your life after you have severe vision loss. But most forms of glaucoma are treatable, especially when diagnosed early. And with care and careful management, it’s possible to delay — or even prevent — permanent vision loss.

Symptoms and Causes:

What are the symptoms of glaucoma?

In its early stages, glaucoma may not cause any symptoms. That’s why up to half of the people in the United States with glaucoma may not know they have it. And symptoms may not appear until this condition causes irreversible damage.

Some of the more common glaucoma symptoms include:

* Eye pain or pressure

* Headaches

* Red or bloodshot eyes

* Double vision (diplopia)

* Blurred vision

* Gradually developing low vision

* Gradually developing blind spots (scotomas) or visual field defects like tunnel vision

Some types of glaucoma, particularly angle-closure glaucoma, can cause sudden, severe symptoms that need immediate medical attention to prevent permanent vision loss. Emergency glaucoma symptoms include:

* Blood gathering in front of your iris (hyphema)

* Bulging or enlarged eyeballs (buphthalmos)

* Nausea and vomiting that happen with eye pain/pressure

* Rainbow-colored halos around lights

* Sudden appearance or increase in floaters (myodesopsias)

* Sudden vision loss of any kind

* Suddenly seeing flashing lights (photopsias) in your vision

What causes glaucoma?

Glaucoma is caused by damage to your optic nerve. It can occur without any identifiable cause, but many factors can affect the condition. The most important of these risk factors is intraocular pressure (the pressure inside your eye). Your eyes produce a fluid called aqueous humor that nourishes them. This liquid flows through your pupil to the front of your eye. In a healthy eye, the fluid drains through mesh-like canals (the trabecular meshwork) located where your iris and cornea meet at an angle.

With glaucoma, the resistance increases in your drainage canals. The fluid has nowhere to go, so it builds up in your eye. This excess fluid puts pressure on your eye. Eventually, this elevated eye pressure can damage your optic nerve and lead to glaucoma.

What makes the fluid build up varies depending on the type of glaucoma you have.

Types of glaucoma

There are many different types of glaucoma, but they mainly fall under a few specific categories:

* Primary open-angle glaucoma. “Open-angle” means that the drainage angle, where the inside of the sclera (the white of your eye) and the outer edge of your iris meet, is open wide. Aqueous humor flows into the drainage angle so it can drain out of the anterior chamber. This is the most common type of glaucoma.

* Primary angle-closure glaucoma. Aqueous humor is supposed to flow from the posterior chamber behind your iris, through your pupil, and into the anterior chamber. But sometimes, the lens of your eye presses too far forward, blocking fluid from flowing through the pupil opening. The extra fluid in the posterior chamber forces the iris forward, narrowing or closing off the drainage angle.

* Secondary glaucoma. This is when another condition or event increases eye pressure, which leads to glaucoma. Conditions that can cause it include eye injuries, pigmentary dispersion syndrome, uveitis, certain medications (especially corticosteroids and cycloplegics), eye procedures and more.

* Congenital glaucoma. This means you have glaucoma because of changes or differences that happened during fetal development. These include aniridia, Axenfeld-Rieger syndrome, Marfan syndrome, congenital rubella syndrome and neurofibromatosis type 1.

What are the risk factors for glaucoma?

Several risk factors can contribute to glaucoma. They include:

* Age. Most types of glaucoma affect people age 40 and older (congenital types are the biggest exception to this). Experts estimate that 10% of people age 75 and older have glaucoma.

* Race. Black people have a much higher risk of developing primary open-angle glaucoma, especially people of Afro-Caribbean descent. People of African descent are 15 times more likely to have blindness from open-angle glaucoma. People of Asian and Inuit descent have a higher risk of angle-closure glaucoma.

* Sex. Women have a higher risk of angle-closure glaucoma. Experts suspect this is mainly because of sex-linked differences in eye anatomy.

* Refractive errors. People with nearsightedness (myopia) have a higher risk of open-angle glaucoma. People with farsightedness (hyperopia) have a higher risk of angle-closure glaucoma.

* Family history. There’s evidence that a family history of glaucoma, especially a first-degree biological relative (a parent, child or sibling), means you also have a higher risk of developing it. And several conditions that cause secondary glaucoma are genetic, too.

* Chronic conditions. Research links some conditions, like high blood pressure (hypertension) and diabetes, to much higher odds of developing glaucoma.

What are the complications of glaucoma?

Without care to manage it and lower the pressure inside your eye, glaucoma damages your retina and optic nerve to the point where they stop working. That causes glaucoma’s main complication: vision loss and, eventually, total blindness.

The end result is what experts call “absolute glaucoma.” That means you’re totally blind in the affected eye. The affected eye feels hard — and maybe even painful — when you touch it.

Diagnosis and Tests:

How is glaucoma diagnosed?

An eye care specialist can diagnose glaucoma using an eye exam, including several tests that are part of routine eye exams. In fact, eye exams can detect glaucoma long before you have eye damage and the symptoms that follow. Many of these tests involve pupil dilation (mydriasis), so your provider can get a better look inside your eye.

Some of the most helpful glaucoma tests include:

* Visual acuity testing

* Visual field testing

* Depth perception testing

* Tonometry

* Pachymetry

* Slit lamp exam

* Gonioscopy

If your eye care specialist has a reason to suspect damage to your retina and/or optic nerve, they may also use additional types of eye imaging. These include:

* Optical coherence tomography

* Fluorescein angiography

* Ultrasound

* Less commonly, computed tomography (CT) or magnetic resonance imaging (MRI).

#2 Re: Dark Discussions at Cafe Infinity » crème de la crème » Today 00:05:27

2447) Hugo Theorell

Gist:

Life

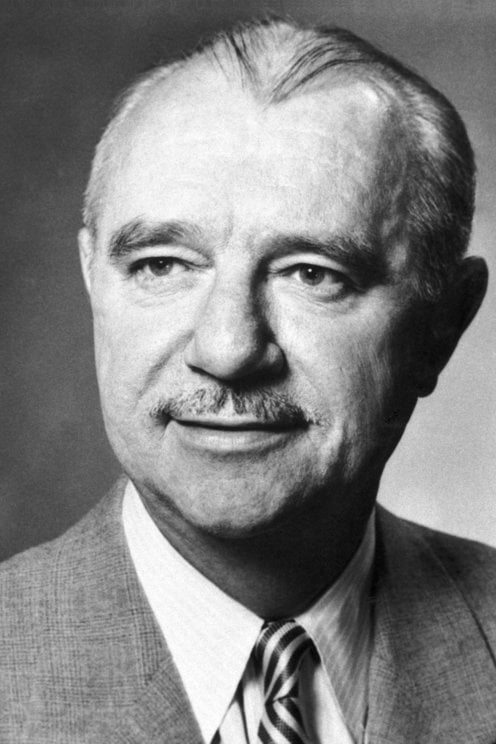

Hugo Theorell was born in Linköping, Sweden. His father worked as a regimental medical officer. Theorell studied medicine at Karolinska Institutet, Stockholm, receiving his degree in 1930. After receiving a Rockefeller Fellowship, he worked with Otto Warburg in Berlin-Dahlem from 1933 to 1935, where he conducted some of his most important work. He became a professor at Sweden's Uppsala University in 1937 and then at Karolinska Institutet in 1959. Theorell was married to concert pianist Margit Alenius and had three sons.

Work

Catalysts are substances that speed up chemical reactions without influencing the end products. Enzymes are catalysts active in biological processes. Hugo Theorell and colleagues investigated how enzymes that promote oxidation reactions are constructed and function. A breakthrough came in 1935 when he demonstrated that a yellow-colored enzyme in yeast had two parts, both of which were crucial to its function. He also explained how iron atoms in many enzymes have an important function in transporting electrons.

Summary

Axel Hugo Teodor Theorell (born July 6, 1903, Linköping, Sweden—died Aug. 15, 1982, Stockholm) was a Swedish biochemist whose study of enzymes that facilitate oxidation reactions in living cells contributed to the understanding of enzyme action and led to the discovery of the ways in which nutrients are used by organisms in the presence of oxygen to produce usable energy. Theorell won the Nobel Prize for Physiology or Medicine in 1955.

While serving as an assistant professor of biochemistry at Uppsala University (1932–33; 1935–36), Theorell was the first to isolate crystalline myoglobin, an oxygen-carrying protein found in red muscle (1932). At the Kaiser Wilhelm Institute (now Max Planck Institute), Berlin (1933–35), he worked with Otto Warburg in isolating from yeast a pure sample of the “old yellow enzyme,” which is instrumental in the oxidative interconversion of sugars by the cell. Theorell found that the enzyme is composed of two parts: a nonprotein coenzyme (the yellow phosphate of riboflavin, or vitamin B2) and a protein apoenzyme. His discovery (1934) that the coenzyme actively facilitates oxidation of the sugar glucose by binding a hydrogen atom at a specific site on the riboflavin molecule marked the first time that the effect of an enzyme was attributed to the chemical activity of specific atoms.

As director of the biochemical department of the Nobel Medical Institute, Stockholm (1937–70), Theorell studied the oxidative enzyme cytochrome c, determining the precise nature of the chemical linkage between the iron-bearing, nonprotein porphyrin portion and the apoenzyme. His investigation of the hydrogen-transfer enzyme alcohol dehydrogenase led to the development of sensitive blood tests that have found wide application in the determination of legal definitions of intoxication. Besides the Nobel Prize, Theorell received a number of awards and honours. He also served as president of the Swedish Royal Academy of Science and of the International Union of Biochemistry.

Details

Axel Hugo Theodor Theorell (6 July 1903 – 15 August 1982) was a Swedish scientist and Nobel Prize laureate in medicine.

Life

He was born in Linköping as the son of Thure Theorell and his wife Armida Bill. Theorell attended secondary school at Katedralskolan in Linköping and passed his examination there on 23 May 1921. In September of that year, he began to study medicine at the Karolinska Institute, and in 1924 he graduated as a Bachelor of Medicine. He then spent three months studying bacteriology at the Pasteur Institute in Paris under Professor Albert Calmette. In 1930 he obtained his M.D. degree with a thesis on the lipids of the blood plasma and was appointed professor in physiological chemistry at the Karolinska Institute.

Theorell, who dedicated his entire career to enzyme research, received the Nobel Prize in Physiology or Medicine in 1955 for discovering oxidoreductase enzymes and their effects. His contribution also included the theory of the toxic effects of sodium fluoride on the cofactors of crucial human enzymes. In 1936 he was appointed head of the newly established Biochemical Department of the Nobel Medical Institute, and he became the first researcher associated with the Institute to be awarded a Nobel Prize. His work led to pioneering progress on alcohol dehydrogenases, enzymes that break down alcohol in the liver and other tissues. He received honorary degrees from universities in France, Belgium, Brazil and the United States. He was a member of the American Academy of Arts and Sciences and the United States National Academy of Sciences, and an International Member of the American Philosophical Society.

Theorell died in Stockholm and is interred in Norra begravningsplatsen (The Northern Cemetery) alongside his wife, Elin Margit Elisabeth (née Alenius) Theorell, a distinguished pianist and harpsichordist who died in 2002.

#3 Jokes » Lemon Jokes - II » Today 00:05:01

- Jai Ganesh

Q: Why did the lemon fail his driving test?

A: It kept peeling out.

* * *

Q: Who goes out on a date with sour grapes?

A: Liz Lemon.

* * *

Q: Why was the lemon feeling depressed?

A: She lost her zest for life.

* * *

Q: What kind of lemon performs for charity?

A: Lemon Aid.

* * *

Q: Why did the lemon go to the doctor?

A: It wasn't peeling well.

* * *

#4 Dark Discussions at Cafe Infinity » Come Quotes - XIX » Today 00:03:58

- Jai Ganesh

Come Quotes - XIX

1. Yeah, Wacko Jacko, where did that come from? Some English tabloid. I have a heart and I have feelings. I feel that when you do that to me. It's not nice. - Michael Jackson

2. Why are we here? Where do we come from? Traditionally, these are questions for philosophy, but philosophy is dead. - Stephen Hawking

3. I've been lucky. Opportunities don't often come along. So, when they do, you have to grab them. - Audrey Hepburn

4. Begin at the beginning and go on till you come to the end; then stop. - Lewis Carroll

5. A slave is one who waits for someone to come and free him. - Ezra Pound

6. Not knowing when the dawn will come I open every door. - Emily Dickinson

7. Change will come slowly, across generations, because old beliefs die hard even when demonstrably false. - E. O. Wilson

8. I realized quickly what Mandela and Tambo meant to ordinary Africans. It was a place where they could come and find a sympathetic ear and a competent ally, a place where they would not be either turned away or cheated, a place where they might actually feel proud to be represented by men of their own skin color. - Nelson Mandela

#5 This is Cool » Barium Chloride » Yesterday 17:49:39

- Jai Ganesh

Barium Chloride

Gist

Barium chloride (BaCl2) is a highly toxic, white, water-soluble inorganic salt that is hygroscopic and imparts a yellow-green color to a flame. Primarily used in industry for manufacturing pigments, case hardening steel, and purifying brine, it is also a common laboratory reagent for testing sulfate ions.

Barium chloride (BaCl2) is used in wastewater treatment, in brine purification for chlorine production, and in the manufacture of pigments, stabilizers, and other barium salts. It is also used in steel hardening, in fireworks to produce a green color, and as a laboratory test for sulfates. Its toxicity limits its applications and makes it uncommon in consumer products, but it remains important in chemical manufacturing and analysis, both for removing sulfates and as a precursor to other compounds.

Summary

Barium chloride appears as white crystals. It is a salt that can be formed by the direct reaction of barium and chlorine, and it is toxic by ingestion.

Barium chloride is a chemical compound of barium. It is used in the laboratory as a test for sulfate ions. In industry, barium chloride is mainly used in the purification of brine solution in caustic chlorine plants and also in the manufacture of heat treatment salts, in the case hardening of steel, in the manufacture of pigments, and in the manufacture of other barium salts. It is also used in fireworks to give a bright green color. Barium is an alkaline earth metal with the symbol Ba and atomic number 56. It never occurs in nature in its pure form because of its reactivity with air; instead, it combines with other elements such as sulfur, or carbon and oxygen, to form barium compounds that may be found as minerals.

Details

Barium chloride is an inorganic compound with the formula BaCl2. It is one of the most common water-soluble salts of barium. Like most other water-soluble barium salts, it is a white powder, highly toxic, and imparts a yellow-green coloration to a flame. It is also hygroscopic, converting to the dihydrate BaCl2·2H2O, which forms colourless crystals with a bitter salty taste. It has limited use in the laboratory and industry.

Uses

Although inexpensive, barium chloride finds limited applications in the laboratory and industry.

Its main laboratory use is as a reagent for the gravimetric determination of sulfates. The sulfate compound being analyzed is dissolved in water and hydrochloric acid is added. When barium chloride solution is added, the sulfate present precipitates as barium sulfate, which is then filtered through ashless filter paper. The paper is burned off in a muffle furnace, the resulting barium sulfate is weighed, and the purity of the sulfate compound is thus calculated.
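The final weighing step is a short stoichiometric calculation: each mole of BaSO4 in the ignited precipitate carries one mole of sulfate. The Python sketch below shows that arithmetic under stated assumptions: the precipitate is pure, fully dried BaSO4, all of the sulfate came from the analyte and precipitated completely, and the molar masses are rounded standard values. The function names `sulfate_mass_from_precipitate` and `sulfate_purity` and the sodium sulfate sample data are hypothetical illustrations, not part of any standard procedure.

```python
# Approximate molar masses in g/mol (rounded standard values; an assumption).
M_BASO4 = 233.39  # barium sulfate, BaSO4
M_SO4 = 96.06     # sulfate ion, SO4(2-)


def sulfate_mass_from_precipitate(baso4_mass_g: float) -> float:
    """Hypothetical helper: grams of sulfate contained in a weighed BaSO4 precipitate.

    One mole of BaSO4 holds one mole of sulfate, so the gravimetric
    factor is M(SO4) / M(BaSO4).
    """
    return baso4_mass_g * M_SO4 / M_BASO4


def sulfate_purity(sample_mass_g: float, baso4_mass_g: float,
                   analyte_molar_mass: float, sulfates_per_unit: int = 1) -> float:
    """Hypothetical helper: percent purity of a sulfate compound.

    Assumes every sulfate ion in the sample belongs to the analyte and was
    precipitated completely as BaSO4.
    """
    moles_baso4 = baso4_mass_g / M_BASO4
    # Divide by the number of sulfate ions per formula unit of the analyte.
    moles_analyte = moles_baso4 / sulfates_per_unit
    analyte_mass_g = moles_analyte * analyte_molar_mass
    return 100.0 * analyte_mass_g / sample_mass_g


if __name__ == "__main__":
    # Hypothetical data: 0.5000 g of impure sodium sulfate (Na2SO4, about
    # 142.04 g/mol) yields 0.8000 g of ignited BaSO4.
    print(f"sulfate in precipitate: {sulfate_mass_from_precipitate(0.8000):.4f} g")
    print(f"Na2SO4 purity: {sulfate_purity(0.5000, 0.8000, 142.04):.1f} %")
```

The `sulfates_per_unit` parameter covers compounds such as aluminium sulfate, whose formula unit contains more than one sulfate ion; for a single-sulfate compound the default of 1 applies.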

In industry, barium chloride is mainly used in the purification of brine solution in caustic chlorine plants and also in the manufacture of heat treatment salts and the case hardening of steel. It is also used to make red pigments such as Lithol red and Red Lake C. Its toxicity limits its applicability.

Toxicity

Barium chloride, along with other water-soluble barium salts, is highly toxic. It irritates the eyes and skin, causing redness and pain, and it damages the kidneys. The fatal dose of barium chloride for a human has been reported to be about 0.8 to 0.9 g. Systemic effects of acute barium chloride toxicity include abdominal pain, diarrhea, nausea, vomiting, cardiac arrhythmia, muscular paralysis, and death. Ba2+ ions compete with K+ ions, making muscle fibers electrically unexcitable and thus causing weakness and paralysis. Sodium sulfate and magnesium sulfate are potential antidotes because they form barium sulfate (BaSO4), which is relatively non-toxic because of its insolubility in water.

Barium chloride is not classified as a human carcinogen.

Additional Information:

What is Barium Chloride (BaCl2)?

Barium Chloride is an inorganic salt that is made up of barium cations (Ba2+) and chloride anions (Cl–). It is also called Barium Muriate or Barium dichloride. It is a white solid which is water-soluble, hygroscopic and gives a slight yellow-green colour to a flame. The chemical formula of Barium Chloride is BaCl2.

Barium salts are used extensively in industry. Barium sulfate, for example, is used in white paints, particularly for outdoor use. Barium chloride itself is poisonous.

Properties of Barium Chloride – BaCl2

Barium chloride is quite soluble in water (as is the case with most ionic salts). It is known to dissociate into barium cations and chloride anions in its dissolved state. At a temperature of 20 °C, the solubility of barium chloride in water is roughly equal to 358 grams per litre. However, the solubility of this compound in water is temperature-dependent. At a temperature of 100 °C, the solubility of barium chloride in water is equal to 594 grams per litre. This compound is also soluble in methanol (however, it is not soluble in ethanol).

Under standard conditions, barium chloride exists as a white crystalline solid. Anhydrous BaCl2 crystallizes in an orthorhombic crystal structure, whereas the dihydrate has a monoclinic crystal structure.

Uses of Barium Chloride (BaCl2)

* Barium chloride is used as a raw material to produce other barium salts.

* It is used in the chlor-alkali industry.

* It is used in the manufacturing of rubber.

* It is widely used in oil refining.

* It is used in the papermaking industry.

* It is used in the hardening of steel.

* It is used to purify brine solutions.

Effects on Health

This compound is toxic when ingested. Magnesium sulfate (MgSO4) and Sodium sulfate (Na2SO4) are probable antidotes as they form BaSO4 (barium sulfate). BaSO4 is comparatively non-toxic due to its insolubility.

Frequently Asked Questions – FAQs:

Q1: What is barium chloride used for?

The primary industrial application of BaCl2 is in the purification of the brine solutions that are used in caustic chlorine plants. This compound is also used in the case-hardening of steel and the production of heat treatment salts. This compound has limited applications due to its high toxicity.

Q2: What is the oxidation number of barium and chlorine in BaCl2?

Barium chloride is made up of barium cations and chloride anions held together by ionic bonds. Barium, a metal, exhibits an oxidation state of +2 in this salt, whereas chlorine, a non-metal, exhibits an oxidation state of -1 in BaCl2.

Q3: Is barium chloride toxic?

Yes, like most other soluble salts of barium, BaCl2 is highly toxic to human beings. Exposure to this compound can cause irritation of the eyes, mucous membranes, and skin. Ingestion or inhalation of barium chloride can also prove fatal. Barium chloride can also negatively impact the central nervous system, the cardiovascular system, and the kidneys.

Q4: How is barium chloride produced industrially?

The industrial production of barium chloride follows a two-step process. First, barium sulfate (usually in the form of the mineral barite) is reacted with carbon at high temperatures to form barium sulfide and carbon monoxide. Then, the barium sulfide is treated with hydrochloric acid to yield barium chloride along with hydrogen sulfide.
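Written as balanced equations, the two steps described in this answer look like this. The first equation is balanced to give carbon monoxide, the product named in the text; treat it as the overall stoichiometry of the reduction rather than a description of every reaction occurring in the furnace.

$$
\begin{aligned}
\mathrm{BaSO_4 + 4\,C} &\longrightarrow \mathrm{BaS + 4\,CO} \\
\mathrm{BaS + 2\,HCl} &\longrightarrow \mathrm{BaCl_2 + H_2S}
\end{aligned}
$$

Checking the first line atom by atom: four oxygen atoms leave the sulfate and end up in four CO molecules, which also account for the four carbon atoms.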

Q5: What are the properties of aqueous solutions of BaCl2?

Aqueous solutions of barium chloride have neutral pH values since they contain the cation of a strong base and the anion of a strong acid. When exposed to sulfates, a white precipitate of barium sulfate is obtained.
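The precipitation behind the sulfate test (and behind the use of sodium or magnesium sulfate as antidotes) can be written as a net ionic equation. The chloride ions and the counter-ions of the sulfate salt take no part in the reaction, so they are left out; the second line shows the full equation for sodium sulfate as one example.

$$
\begin{aligned}
\mathrm{Ba^{2+}(aq) + SO_4^{2-}(aq)} &\longrightarrow \mathrm{BaSO_4(s)} \\
\mathrm{BaCl_2(aq) + Na_2SO_4(aq)} &\longrightarrow \mathrm{BaSO_4(s) + 2\,NaCl(aq)}
\end{aligned}
$$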

#6 Science HQ » Gallstones » Yesterday 16:48:00

- Jai Ganesh

Gallstones

Gist

Gallstones are hardened deposits of digestive fluid (cholesterol or bilirubin) that form in the gallbladder. While often asymptomatic, they can cause sudden, severe right upper abdominal pain (biliary colic), nausea, vomiting, and fever if they block bile ducts. Risk factors include being female, age 40+, obesity, and rapid weight loss. Treatment usually involves surgical gallbladder removal (cholecystectomy).

Gallstones form when bile becomes unbalanced, typically from too much cholesterol or bilirubin, or too few bile salts, causing solid crystals to form that harden into stones, often due to the gallbladder not emptying properly. Key causes and risk factors include diets high in fat/cholesterol, obesity, rapid weight loss, certain medications, diabetes, pregnancy, genetics, and liver/blood disorders that increase bilirubin.

Summary

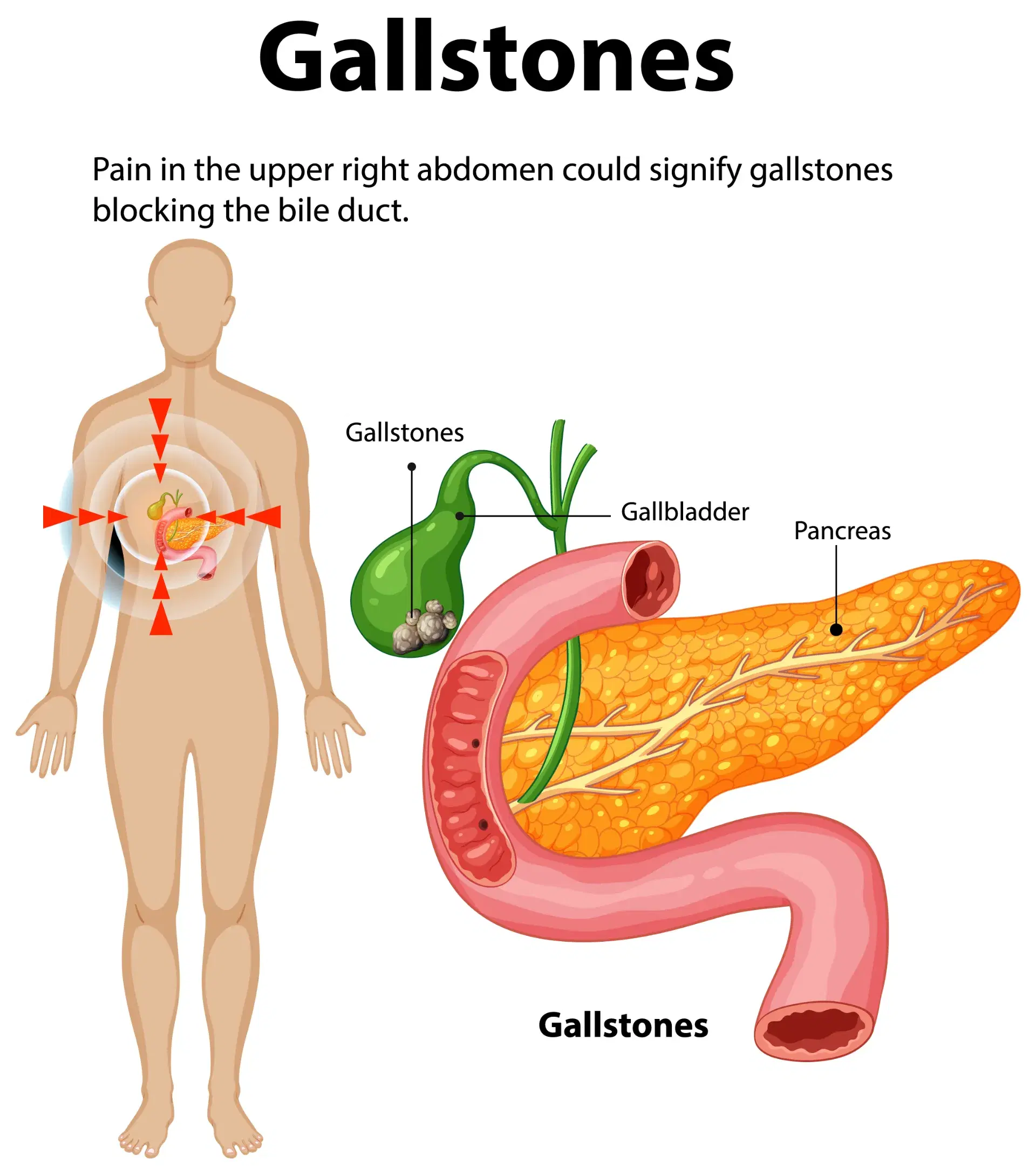

Your gallbladder is a pear-shaped organ under your liver. It stores bile, a fluid made by your liver to digest fat. As your stomach and intestines digest food, your gallbladder releases bile through a tube called the common bile duct. The duct connects your gallbladder and liver to your small intestine.

Your gallbladder is most likely to give you trouble if something blocks the flow of bile through the bile ducts. That is usually a gallstone. Gallstones form when substances in bile harden. Gallstone attacks usually happen after you eat. Signs of a gallstone attack may include nausea, vomiting, or pain in the abdomen, back, or just under the right arm.

Gallstones are most common among older adults, women, overweight people, Native Americans and Mexican Americans.

Gallstones are often found during imaging tests for other health conditions. If you do not have symptoms, you usually do not need treatment. The most common treatment is removal of the gallbladder. Fortunately, you can live without a gallbladder. Bile has other ways to reach your small intestine.

Details

Gallstones are hardened deposits of bile that can form in your gallbladder. Your gallbladder is a small, pear-shaped organ on the right side of your abdomen, just beneath your liver. It stores bile, a digestive fluid produced in your liver. When you eat, your gallbladder contracts and empties bile into your small intestine (duodenum).

Gallstones range in size from as small as a grain of sand to as large as a golf ball. Some people develop just one gallstone, while others develop many gallstones at the same time.

People who experience symptoms from their gallstones usually require gallbladder removal surgery. Gallstones that don't cause any signs and symptoms typically don't need treatment.

Symptoms

Gallstones may cause no signs or symptoms. If a gallstone lodges in a duct and causes a blockage, the resulting signs and symptoms may include:

* Sudden and rapidly intensifying pain in the upper right portion of your abdomen

* Sudden and rapidly intensifying pain in the center of your abdomen, just below your breastbone

* Back pain between your shoulder blades

* Pain in your right shoulder

* Nausea or vomiting

Gallstone pain may last several minutes to a few hours.

When to see a doctor

Make an appointment with your doctor if you have any signs or symptoms that worry you.

Seek immediate care if you develop signs and symptoms of a serious gallstone complication, such as:

* Abdominal pain so intense that you can't sit still or find a comfortable position

* Yellowing of your skin and the whites of your eyes (jaundice)

* High fever with chills

Causes

It's not clear what causes gallstones to form. Doctors think gallstones may result when:

* Your bile contains too much cholesterol. Normally, your bile contains enough chemicals to dissolve the cholesterol excreted by your liver. But if your liver excretes more cholesterol than your bile can dissolve, the excess cholesterol may form into crystals and eventually into stones.

* Your bile contains too much bilirubin. Bilirubin is a chemical that's produced when your body breaks down red blood cells. Certain conditions cause your liver to make too much bilirubin, including liver cirrhosis, biliary tract infections and certain blood disorders. The excess bilirubin contributes to gallstone formation.

* Your gallbladder doesn't empty correctly. If your gallbladder doesn't empty completely or often enough, bile may become very concentrated, contributing to the formation of gallstones.

Types of gallstones

Types of gallstones that can form in the gallbladder include:

* Cholesterol gallstones. The most common type of gallstone, called a cholesterol gallstone, often appears yellow in color. These gallstones are composed mainly of undissolved cholesterol, but may contain other components.

* Pigment gallstones. These dark brown or black stones form when your bile contains too much bilirubin.

Risk factors

Factors that may increase your risk of gallstones include:

* Being female

* Being age 40 or older

* Being Native American

* Being Hispanic of Mexican origin

* Being overweight or obese

* Being sedentary

* Being pregnant

* Eating a high-fat diet

* Eating a high-cholesterol diet

* Eating a low-fiber diet

* Having a family history of gallstones

* Having diabetes

* Having certain blood disorders, such as sickle cell anemia or leukemia

* Losing weight very quickly

* Taking medications that contain estrogen, such as oral contraceptives or hormone therapy drugs

* Having liver disease

Complications

Complications of gallstones may include:

* Inflammation of the gallbladder. A gallstone that becomes lodged in the neck of the gallbladder can cause inflammation of the gallbladder (cholecystitis). Cholecystitis can cause severe pain and fever.

* Blockage of the common bile duct. Gallstones can block the tubes (ducts) through which bile flows from your gallbladder or liver to your small intestine. Severe pain, jaundice and bile duct infection can result.

* Blockage of the pancreatic duct. The pancreatic duct is a tube that runs from the pancreas and connects to the common bile duct just before entering the duodenum. Pancreatic juices, which aid in digestion, flow through the pancreatic duct.

A gallstone can cause a blockage in the pancreatic duct, which can lead to inflammation of the pancreas (pancreatitis). Pancreatitis causes intense, constant abdominal pain and usually requires hospitalization.

* Gallbladder cancer. People with a history of gallstones have an increased risk of gallbladder cancer. But gallbladder cancer is very rare, so even though the risk of cancer is elevated, the likelihood of gallbladder cancer is still very small.

Prevention

You can reduce your risk of gallstones if you:

* Don't skip meals. Try to stick to your usual mealtimes each day. Skipping meals or fasting can increase the risk of gallstones.

* Lose weight slowly. If you need to lose weight, go slowly. Rapid weight loss can increase the risk of gallstones. Aim to lose 1 or 2 pounds (about 0.5 to 1 kilogram) a week.

* Eat more high-fiber foods. Include more fiber-rich foods in your diet, such as fruits, vegetables and whole grains.

* Maintain a healthy weight. Obesity and being overweight increase the risk of gallstones. Work to achieve a healthy weight by reducing the number of calories you eat and increasing the amount of physical activity you get. Once you achieve a healthy weight, work to maintain that weight by continuing your healthy diet and continuing to exercise.

Additional Information

Gallstones (cholelithiasis) are hardened pieces of bile that form in your gallbladder or bile ducts. They’re common, especially in females. Gallstones don’t always cause problems, but they can if they get stuck in your biliary tract and block your bile flow. If your gallstones cause you symptoms, you’ll need treatment to remove them — typically, surgery.

Overview

Gallstones are hardened pieces of bile sediment that can form in your gallbladder.

What are gallstones?

Gallstones are hardened, concentrated pieces of bile that form in your gallbladder or bile ducts. “Gall” means bile, so gallstones are bile stones. Your gallbladder is your bile bladder. It holds and stores bile for later use. Your liver makes bile, and your bile ducts carry it to the different organs in your biliary tract.

Healthcare providers sometimes use the term “cholelithiasis” to describe the condition of having gallstones. “Chole” also means bile, and “lithiasis” means stones forming. Gallstones form when bile sediment collects and crystallizes. Often, the sediment is an excess of one of the main ingredients in bile.

How serious are gallstones?

Gallstones (cholelithiasis) won’t necessarily cause any problems for you. A lot of people have them and never know it. But gallstones can become dangerous if they start to travel through your biliary tract and get stuck somewhere. They can clog up your biliary tract, causing pain and serious complications.

The problem with gallstones is that they grow — slowly, but surely — as bile continues to wash over them and leave another layer of sediment. What begins as a grain of sand can grow big enough to stop the flow of bile, especially if it gets into a narrow space, like a bile duct or the neck of your gallbladder.

How common are gallstones (cholelithiasis)?

At least 10% of U.S. adults have gallstones, and up to 75% of them are female. But only 20% of those diagnosed will ever have symptoms or need treatment for gallstones.

What are the symptoms of gallstones?

Gallstones generally don’t cause symptoms unless they get stuck and create a blockage. This blockage causes symptoms, most commonly upper abdominal pain and nausea. These may come and go, or they may come and stay. You might develop other symptoms if the blockage is severe or lasts a long time, like:

* Sweating.

* Fever.

* Fast heart rate.

* Abdominal swelling and tenderness.

* Yellow tint to your skin and eyes.

* Dark-colored pee and light-colored poop.

What is gallstone pain like?

Typical gallstone pain is sudden and severe and may make you sick to your stomach. This is called a gallstone attack or gallbladder attack. You might feel it most severely after eating, when your gallbladder contracts, creating more pressure in your biliary system. It might wake you from sleep.

Gallstone pain that builds to a peak and then slowly fades is called biliary colic. It comes in episodes that may last minutes to hours. The episode ends when and if the stone moves or the pressure eases. People describe the pain as intense, sharp, stabbing, cramping or squeezing. You might be unable to sit still.

Where is gallstone pain located?

Your biliary system is located in the upper right quadrant of your abdomen, which is under your right ribcage. Most people feel gallstone pain in this region. But sometimes, it can radiate to other areas. Some people feel it in their right arm or shoulder or in their back between their shoulder blades.

Some people feel gallstone pain in the middle of their abdomen or chest. This can be confusing because the feeling might resemble other conditions. Some people mistake gallstone pain for heartburn or indigestion. Others might feel like they’re having a heart attack, which is a different emergency.

Are gallstone symptoms different in females?

No, but females may be more likely to experience referred pain — pain that you feel in a different place from where it started. So, they may be more likely to experience gallstone pain in their arm, shoulder, chest or back.

Females are also more prone to chronic pain, and they may be more likely to dismiss pain that comes and goes, like biliary colic does. It’s important to see a healthcare provider about any severe or recurring pain, even if it goes away. Once you’ve had a gallstone attack, you’re likely to have another.

What triggers gallstone pain?

Gallstone pain means that a gallstone has gotten stuck in your biliary tract and caused a blockage. If it’s a major blockage, you might feel it right away. If it’s only a partial blockage, you might not notice until your gallbladder contracts, creating more pressure in your system. Eating triggers this contraction.

A rich, heavy or fatty meal will trigger a bigger gallbladder contraction. That’s because your small intestine detects the fat content in your meal and tells your gallbladder how much bile it will need to help break it down. Your gallbladder responds by squeezing the needed bile out into your bile ducts.

What are the important warning signs of gallstones?

Biliary colic is the only warning sign of gallstones that you’ll get. It happens when a gallstone causes a temporary blockage, then manages to move out of the way and let bile flow through again. Even though the pain eventually goes away, it’s important to recognize these episodes as the warning that they are.

Once a gallstone has caused a blockage in your biliary tract, it’s likely to keep happening. The same one may be hanging around the same tight spot and continuing to grow. Or you may have more gallstones waiting in the wings. One day, a gallstone might get stuck and stay stuck. This would be an emergency.

#7 Re: Jai Ganesh's Puzzles » General Quiz » Yesterday 15:55:39

Hi,

#10777. What does the term in Biology Hermaphrodite mean?

#10778. What does the term in Biology Herpetology mean?

#8 Re: Jai Ganesh's Puzzles » English language puzzles » Yesterday 15:38:02

Hi,

#5973. What does the adjective (Biology) homozygous mean?

#5974. What does the verb (used with object) hone mean?

#9 Re: Jai Ganesh's Puzzles » Doc, Doc! » Yesterday 15:03:49

Hi,

#2583. What does the medical term IVU (Intravenous Urography) mean?

#10 Re: Jai Ganesh's Puzzles » 10 second questions » Yesterday 14:52:57

Hi,

#9869.

#11 Re: Jai Ganesh's Puzzles » Oral puzzles » Yesterday 14:36:01

Hi,

#6362.

#12 Re: Exercises » Compute the solution: » Yesterday 14:08:43

Hi,

2723.

#13 Re: Dark Discussions at Cafe Infinity » crème de la crème » Yesterday 00:05:46

2446) Vincent du Vigneaud

Gist:

Work

The element sulfur plays an important role in some of the chemical compounds and processes that are the basis of all life. Vincent du Vigneaud studied sulfur-containing compounds, including oxytocin, a hormone that among other things plays a role in sexual intimacy and reproduction in humans and other mammals. In 1953 du Vigneaud succeeded in isolating the substance and determining its chemical composition. It became the first peptide hormone to have its sequence of amino acids determined. He also succeeded in producing oxytocin by artificial means.

Summary

Vincent du Vigneaud (born May 18, 1901, Chicago, Illinois, U.S.—died December 11, 1978, White Plains, New York) was an American biochemist and winner of the Nobel Prize for Chemistry in 1955 for the isolation and synthesis of two pituitary hormones: vasopressin, which acts on the muscles of the blood vessels to cause elevation of blood pressure; and oxytocin, the principal agent causing contraction of the uterus and secretion of milk.

Du Vigneaud studied at the University of Illinois at Urbana-Champaign, took a doctorate from the University of Rochester, New York (1927), and then studied at Johns Hopkins University, Baltimore, the Kaiser Wilhelm Institute, Berlin, and the University of Edinburgh. He headed the biochemistry department of the George Washington University Medical School, Washington, D.C. (1932–38), and was professor and head of the department of biochemistry at the Cornell University Medical College, New York City (1938–67), and professor of chemistry at Cornell University, Ithaca, New York (1967–75).

Du Vigneaud and his staff at Cornell helped identify the chemical structure of the hormone insulin in the late 1930s, and in the early 1940s they established the structure of the sulfur-bearing vitamin biotin. Later that decade, they isolated vasopressin and oxytocin and analyzed both those hormones’ chemical structure. Du Vigneaud found that the oxytocin molecule contains only eight different amino acids (nine amino acids in total, whereby a disulfide bond forms a link between two cysteines), in contrast to the hundreds of amino acids most other proteins contain. In 1953 he was able to synthesize oxytocin, becoming the first to achieve the synthesis of a protein hormone. In 1946 du Vigneaud and his colleagues at Cornell achieved another breakthrough, the synthesis of penicillin.

Details

Vincent du Vigneaud (May 18, 1901 – December 11, 1978) was an American biochemist. He was recipient of the 1955 Nobel Prize in Chemistry "for his work on biochemically important sulphur compounds, especially for the first synthesis of a polypeptide hormone," a reference to his work on the peptide hormone oxytocin.

Biography

Vincent du Vigneaud was born in Chicago in 1901. Of French descent, he was the son of inventor and mechanic Alfred du Vigneaud and Mary Theresa. He studied at Schurz High School and completed his secondary education in 1918. His interest in sulfur began when he entered high school and his new friends invited him to run chemical experiments on explosives using sulfur. During World War I, senior students were made to work on farms, and du Vigneaud worked near Caledonia, Illinois. There he became an expert at milking cows, which inspired him to become a farmer. However, his elder sister, Beatrice, persuaded him to take up chemistry at the University of Illinois at Urbana-Champaign, where he enrolled in the chemical engineering course. He later recalled:

I found during the first year that it was chemistry rather than engineering that appealed to me most. I switched to a major in chemistry, since I was deeply impressed by the senior student's work, especially in organic chemistry. I also found that I was most interested in those aspects of organic chemistry that had to do with medical substances and began to develop an interest in biochemistry.

His interest was aroused by the lectures of Carl Shipp Marvel and Howard B. Lewis, whom he remembered as being "extremely enthusiastic about sulfur." With little support from his family, he found odd jobs to support himself. After receiving his MS in 1924, he joined DuPont.

On June 12, 1924, he married Zella Zon Ford, whom he had met while working as a waiter during his university course. During the fall of 1924, Marvel found him a job as an assistant biochemist at the Philadelphia General Hospital, which also allowed him to teach clinical chemistry at the Graduate School of Medicine, University of Pennsylvania. Marvel would pay for the trip to Pennsylvania in exchange for du Vigneaud's preparation of 10 pounds of cupferron. Resuming his academic career in 1925, du Vigneaud joined the group of John R. Murlin at the University of Rochester for his PhD, graduating in 1927 with the thesis The Sulfur of Insulin.

After a post-doctoral position with John Jacob Abel at Johns Hopkins University Medical School (1927–1928), he traveled to Europe as a National Research Council Fellow in 1928–1929, where he worked with Max Bergmann and Leonidas Zervas at the Kaiser Wilhelm Institute for Leather Research in Dresden, and with George Barger at the University of Edinburgh Medical School. He then returned to the University of Illinois as a professor.

In 1932, he started working at the George Washington University Medical School in Washington, D.C., and in 1938 he moved to Cornell University Medical College in New York City, where he stayed until he became emeritus in 1967. Following retirement, he held a position at Cornell University in Ithaca, New York.

In 1974, du Vigneaud suffered a stroke that forced his retirement. He died in 1978, one year after his wife's death in 1977.

Scientific contributions

Du Vigneaud's career was characterized by an interest in sulfur-containing peptides, proteins, and especially peptide hormones. Even before his Nobel Prize-winning work on elucidating the structures of oxytocin and vasopressin and synthesizing them, he had established a reputation from his research on insulin, biotin, transmethylation, and penicillin.

He also carried out a series of structure-activity relationship studies on oxytocin and vasopressin, perhaps the first of their type for peptides. That work culminated in the publication of a book entitled A Trail of Research in Sulphur Chemistry and Metabolism and Related Fields.

Honours

Du Vigneaud joined Alpha Chi Sigma while at the University of Illinois in 1930. He was elected to the United States National Academy of Sciences and the American Philosophical Society in 1944, and the American Academy of Arts and Sciences in 1948. He received the 1955 Nobel Prize in Chemistry "for his work on biochemically important sulphur compounds, especially for the first synthesis of a polypeptide hormone," a reference to his work on the peptide hormone oxytocin.

#14 Re: This is Cool » Miscellany » Yesterday 00:03:08

2509) Refrigerant

Gist

Refrigerants are chemical fluids (compounds) used in HVAC and refrigeration systems that alternate between liquid and gaseous states to absorb, transport, and release heat, thereby cooling spaces or items. Common types include hydrofluorocarbons (HFCs such as R-134a and R-410A) and, increasingly, eco-friendly natural refrigerants such as propane and CO2.

Refrigerant is the working fluid used in air conditioners, refrigeration, and heat pump systems. It is a chemical compound that moves heat as it transitions between liquid and gas form: it absorbs heat as it vaporizes and releases heat as it condenses.

Summary

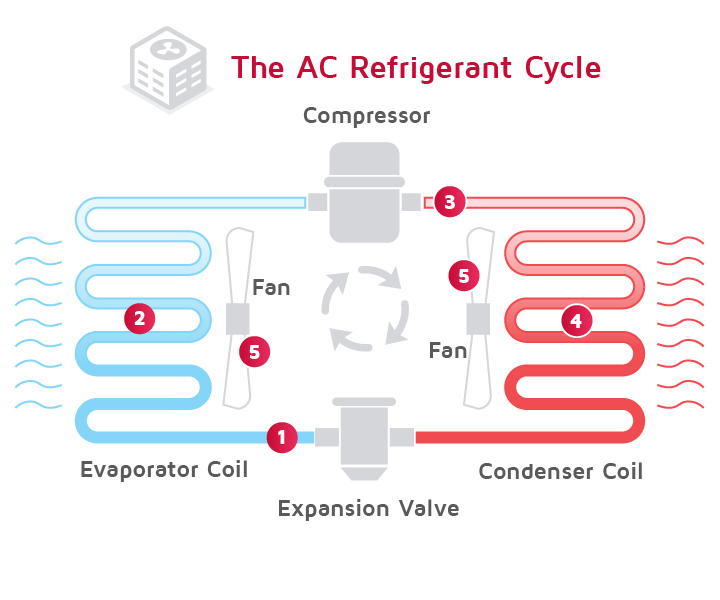

Refrigerants are working fluids that carry heat from a cold environment to a warm environment while circulating between them. For example, the refrigerant in an air conditioner carries heat from a cool indoor environment to a hotter outdoor environment. Similarly, the refrigerant in a kitchen refrigerator carries heat from inside the refrigerator out to the surrounding room. A wide range of fluids are used as refrigerants, with the specific choice depending on the temperature range needed and constraints related to the system involved.

Refrigerants are the basis of vapor compression refrigeration systems. The refrigerant is circulated in a loop between the cold and warm environments. In the low-temperature environment, the refrigerant absorbs heat at low pressure, causing it to evaporate. The gaseous refrigerant then enters a compressor, which raises its pressure and temperature. The pressurized refrigerant circulates through the warm environment, where it releases heat and condenses to liquid form. The high-pressure liquid is then depressurized and returned to the cold environment as a liquid-vapor mixture.
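The loop described above can be sketched as a small state machine. This is a minimal, qualitative sketch under stated assumptions: the `Phase` and `State` types and the `step` and `run_cycle` functions are hypothetical names chosen for illustration, pressures are labelled only "low" or "high", and no real thermodynamic property data is involved.

```python
from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    LIQUID = "liquid"
    MIXTURE = "liquid-vapor mixture"
    VAPOR = "vapor"


@dataclass(frozen=True)
class State:
    """Refrigerant condition as it enters a component (qualitative only)."""
    phase: Phase
    pressure: str   # "low" or "high" (hypothetical labels, no real values)
    entering: str   # the component the refrigerant is about to enter


def step(state: State) -> State:
    """Return the refrigerant's state after it passes through `state.entering`."""
    if state.entering == "evaporator":
        # Absorbs heat from the cold environment at low pressure and evaporates.
        return State(Phase.VAPOR, "low", "compressor")
    if state.entering == "compressor":
        # Compression raises the vapor's pressure (and temperature).
        return State(Phase.VAPOR, "high", "condenser")
    if state.entering == "condenser":
        # Releases heat to the warm environment and condenses to a liquid.
        return State(Phase.LIQUID, "high", "expansion device")
    if state.entering == "expansion device":
        # Depressurization returns a liquid-vapor mixture to the cold side.
        return State(Phase.MIXTURE, "low", "evaporator")
    raise ValueError(f"unknown component: {state.entering}")


def run_cycle(start: State, steps: int) -> list[State]:
    """Follow the loop for a number of steps, returning every state visited."""
    states = [start]
    for _ in range(steps):
        states.append(step(states[-1]))
    return states


if __name__ == "__main__":
    start = State(Phase.MIXTURE, "low", "evaporator")
    for s in run_cycle(start, 4):
        print(f"{s.pressure}-pressure {s.phase.value} -> {s.entering}")
```

Running four steps from the evaporator inlet brings the refrigerant back to the state it started in, which is what it means for the refrigerant to circulate in a closed loop.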

Refrigerants are also used in heat pumps, which work like refrigeration systems. In the winter, a heat pump absorbs heat from the cold outdoor environment and releases it into the warm indoor environment. In summer, the direction of heat transfer is reversed.

Refrigerants include naturally occurring fluids, such as ammonia, carbon dioxide, propane, or isobutane, and synthetic fluids, such as chlorofluorocarbons, hydrochlorofluorocarbons, or hydrofluorocarbons. Many older synthetic refrigerants have been banned to protect the Earth's ozone layer or to limit climate change. Some refrigerants are flammable or toxic, making careful handling and disposal essential.

Refrigerants, while strongly associated with vapor compression systems, are used for many other purposes. These applications include propelling aerosols, polymer foam production, chemical feedstocks, fire suppression, and solvents.

Chillers are refrigeration systems that have a secondary loop which circulates a refrigerating liquid (as opposed to a refrigerant), with vapor compression refrigeration used to chill the secondary liquid.[5] Absorption refrigeration systems operate by absorbing a gas, such as ammonia, into a liquid, such as water.

Details

Refrigerants are crucial for commercial refrigeration systems, responsible for cooling and preserving perishable items. With refrigerant regulations phasing out ozone-depleting refrigerants, such as R-22, many businesses are transitioning to more environmentally friendly options. Understanding the different types of refrigerants available for commercial use can help you make an informed decision when it comes to replacing your refrigerator or freezer.

What Is Refrigerant?

Refrigerant is a cooling agent that absorbs heat from the space being cooled as it circulates through the evaporator, compressor and condenser. It undergoes a continuous cycle of compression and expansion in which it alternates between liquid and gas states, transferring heat efficiently through this thermodynamic process.

How Refrigerant Works

Here is how refrigerant cools the inside of refrigerators and the air for AC units:

1) The refrigerant begins as a high-pressure liquid when it passes through the expansion device in your unit. The sudden drop in pressure makes it expand and cool, and part of it turns into vapor.

2) As this cold refrigerant passes through the copper evaporator coil inside the unit, it absorbs heat from the products inside and evaporates into a gas.

3) The unit’s compressor then pulls the refrigerant gas and the absorbed heat away from the food products, increasing the pressure of the gas.

4) The hot, high-pressure refrigerant then passes through the condenser coils. As it does so, it releases its heat to the surrounding air and condenses back into a liquid.

5) The liquid refrigerant reenters the expansion device and the process begins again.

Types of Refrigerant

Common types of refrigerants include synthetic compounds like hydrofluorocarbons (HFCs), hydrochlorofluorocarbons (HCFCs), and natural compounds like ammonia and carbon dioxide. Each type has its own unique ID number, properties, and environmental impact, making it important for businesses to choose the right refrigerant for their specific needs.

Because of their negative impact on the environment, many refrigerant types are now banned by regulation and are being phased out in favor of more environmentally friendly alternatives. A refrigerant’s environmental impact is measured by two main characteristics: Ozone Depletion Potential (ODP) and Global Warming Potential (GWP). ODP measures a refrigerant's impact on the ozone layer, while GWP assesses its contribution to global warming. Choosing refrigerants with lower ODP and GWP values can help businesses reduce their environmental footprint and comply with regulations aimed at protecting the ozone layer and mitigating climate change.
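GWP is defined relative to carbon dioxide (CO2 itself has a GWP of 1), so a common way to use it is to multiply the mass of refrigerant in a system by its GWP to get a CO2-equivalent. The sketch below assumes that simple definition. The function name is hypothetical, the 2 kg charge is an invented example, and 1430 is the commonly cited IPCC AR4 100-year GWP for R-134a; regulators may use different or newer values. ODP is a separate scale and is not part of this calculation.

```python
def co2_equivalent_tonnes(charge_kg: float, gwp: float) -> float:
    """CO2-equivalent (tonnes) of a refrigerant charge if it were all released.

    GWP is dimensionless and relative to CO2, so kg of refrigerant x GWP
    gives kg of CO2e; dividing by 1000 converts kilograms to tonnes.
    """
    if charge_kg < 0 or gwp < 0:
        raise ValueError("charge and GWP must be non-negative")
    return charge_kg * gwp / 1000.0


if __name__ == "__main__":
    charge = 2.0  # kg, a hypothetical system charge
    # 1430: commonly cited IPCC AR4 100-year GWP for R-134a (check your regulator).
    # 1: GWP of CO2 (R-744) by definition.
    for name, gwp in [("R-134a", 1430), ("R-744 (CO2)", 1)]:
        print(f"{name}: {co2_equivalent_tonnes(charge, gwp):.3f} t CO2e")
```

The same 2 kg charge comes to 2.86 tonnes of CO2e with R-134a but only 0.002 tonnes with CO2, which is why low-GWP refrigerants are favored as regulations tighten.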

Additional Information

When we say “refrigerant” we mean a fluid that can easily boil from a liquid into a vapour and also be condensed from a vapour back into a liquid. This needs to occur again and again, continuously without fail.

Water is one example of a refrigerant. It evaporates and condenses readily, is easy and safe to use, and is used as the refrigerant in absorption chillers. Water isn’t typically used as a refrigerant in common air conditioning units because specially made refrigerants designed for this task perform much more efficiently.

Some of the more common refrigerants on the market are R22, R134A and R410A, although laws and regulations on refrigerants are tightening and many of these will be phased out in the long run. These common refrigerants all have extremely low boiling points compared to water. This allows them to evaporate into a vapour with very little thermal energy applied, which means the refrigerant can extract heat more rapidly.

Let’s look at how refrigerant moves around the system. We’ll start with the compressor, as this is the heart of the system: it forces the refrigerant around each of the components within the refrigeration system. The refrigerant enters as a saturated vapour at low temperature and low pressure. As the compressor pulls the refrigerant in, it rapidly compresses it, forcing the molecules together so that the same number of molecules fits into a smaller volume. The work done by the compressor in squeezing the constantly moving molecules into this smaller space makes them move faster, and faster-moving molecules mean a higher temperature. In other words, the energy put in by the compressor is converted into internal energy within the refrigerant, so the refrigerant increases in internal energy, enthalpy, temperature and pressure. You’ll know this if you’ve ever used a bike pump: the pump becomes hot as the pressure increases.
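The bike-pump effect can be estimated with the textbook formula for reversible adiabatic (isentropic) compression of an ideal gas, T2 = T1 × (p2/p1)^((γ − 1)/γ). This is a sketch under a loud assumption: real refrigerants are not ideal gases and compressors are not perfectly reversible, so it only shows the trend, not a design value. The function name is hypothetical, and γ = 1.4 is the usual value for air.

```python
def isentropic_outlet_temp_k(t_in_k: float, pressure_ratio: float,
                             gamma: float = 1.4) -> float:
    """Ideal-gas outlet temperature (kelvin) for isentropic compression.

    T2 = T1 * (p2 / p1) ** ((gamma - 1) / gamma)
    gamma defaults to 1.4 (air); this ignores real-gas behaviour and losses.
    """
    if t_in_k <= 0 or pressure_ratio <= 0 or gamma <= 1:
        raise ValueError("need T1 > 0 K, pressure ratio > 0 and gamma > 1")
    return t_in_k * pressure_ratio ** ((gamma - 1) / gamma)


if __name__ == "__main__":
    t1 = 293.15  # K, air at 20 C
    t2 = isentropic_outlet_temp_k(t1, 3.0)  # tripling the pressure
    print(f"{t1 - 273.15:.1f} C -> {t2 - 273.15:.1f} C")
```

In this ideal limit, tripling the pressure of 20 °C air raises it to roughly 128 °C. A real pump loses much of that heat to its barrel, which is why it gets warm rather than that hot, but the direction of the effect is the same one the compressor relies on.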

The refrigerant now moves to the condenser, where all the unwanted heat is rejected into the atmosphere. This includes all the heat from the building as well as the heat from the compressor. When the refrigerant enters the condenser, it needs to be at a higher temperature than the ambient air around it in order for heat to transfer; the greater the temperature difference, the easier the heat transfer. The refrigerant enters as a superheated vapour at high pressure and temperature and then passes along the tubes of the condenser. Meanwhile, fans blow air across the condenser (in an air-cooled system) to remove the unwanted energy, much like blowing on a hot spoonful of soup to cool it down. As the air blows across the tubes, it removes heat from the refrigerant, and as the refrigerant gives up its heat it condenses into a liquid. By the time the refrigerant leaves the condenser, it is a completely saturated liquid: still at high pressure, slightly cooler, and lower in both enthalpy and entropy.

The refrigerant then makes its way to the expansion valve, which meters the flow of refrigerant into the evaporator. In this example we’re using a thermal expansion valve, which holds back refrigerant, creating a high-pressure side and a low-pressure side. The valve adjusts to let some refrigerant through, and this flow is part liquid and part vapour. As it passes through, it expands to try to fill the void, and as it expands the refrigerant drops in pressure and temperature, just as a deodorant spray can gets cold when you hold the nozzle down. The refrigerant leaves the expansion valve at low pressure and temperature and heads straight into the evaporator.

The evaporator receives the refrigerant while another fan blows the warm air of the room across the evaporator coil. Because the room air is warmer than the cool refrigerant, heat flows into the refrigerant and boils it completely into a vapour. This is much like heating a pan of water: the heat makes the water evaporate into steam, and the steam carries the heat away. If you were to place your hand over the rising steam, you would find it very hot, although I wouldn’t recommend trying this, as it can cause injury. Remember that refrigerants have low boiling points, so room-temperature air is enough to boil them into a vapour.

The refrigerant leaves the evaporator as a low-temperature, low-pressure vapour. Its temperature changes only slightly, which confuses many people. The reason it doesn’t rise dramatically is that the refrigerant is undergoing a phase change from liquid to vapour: the thermal energy goes into breaking the bonds between the molecules rather than raising the temperature, and it shows up as an increase in enthalpy and entropy instead. The temperature only changes once the fluid is no longer undergoing a phase change.
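Using the pan-of-water comparison from above, a short calculation shows how much energy a phase change can absorb without any rise in temperature. This sketch assumes approximate textbook values for water (specific heat about 4.18 kJ/(kg·K), latent heat of vaporization about 2257 kJ/kg at 100 °C); real refrigerants have different values that come from property tables, and the function names are hypothetical.

```python
CP_WATER = 4.18      # kJ/(kg*K), approximate specific heat of liquid water
H_FG_WATER = 2257.0  # kJ/kg, approximate latent heat of vaporization at 100 C


def sensible_heat_kj(mass_kg: float, cp: float, delta_t_k: float) -> float:
    """Heat that changes temperature without changing phase: Q = m * cp * dT."""
    return mass_kg * cp * delta_t_k


def latent_heat_kj(mass_kg: float, h_fg: float) -> float:
    """Heat absorbed during a phase change at constant temperature: Q = m * h_fg."""
    return mass_kg * h_fg


if __name__ == "__main__":
    warm = sensible_heat_kj(1.0, CP_WATER, 100.0 - 20.0)  # 1 kg, 20 C -> 100 C
    boil = latent_heat_kj(1.0, H_FG_WATER)                # 100 C water -> 100 C steam
    print(f"warming: {warm:.1f} kJ, boiling: {boil:.1f} kJ, ratio: {boil / warm:.1f}")
```

Under these assumptions, boiling 1 kg of water takes nearly seven times as much energy as heating it from 20 °C to 100 °C, and all of that energy is absorbed while the temperature stays at 100 °C. The evaporator relies on the same effect: most of the heat the refrigerant picks up goes into the phase change.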

1. The expansion valve relieves pressure and passes the refrigerant into the evaporator coil as a cold, low-pressure liquid.

2. As the refrigerant warms within the coil, it phases back into a gaseous state.

3. The cool gas is compressed and fed into the condenser coil as a hot, high-pressure gas.

4. As the hot, pressurized refrigerant cools within the condenser coil, it phases back into a warm liquid state.

5. Meanwhile, the air handler within your system distributes the heated or cooled air throughout your home based on your thermostat settings.

#15 Jokes » Lemon Jokes - I » Yesterday 00:02:49

- Jai Ganesh

Q: Why did the lemon stop rolling down the hill?

A: It ran out of juice.

* * *

Q: Why did the lemon go out with a prune?

A: Because she couldn't find a date.

* * *

Q: What do you call a dancing pie?

A: Lemon Merengue.

* * *

Q: Why do lemons wear suntan lotion?

A: Because they peel.

* * *

Q: What did lemon say to lime?

A: Nothing, stupid. Lemons don't talk!

* * *

#16 Dark Discussions at Cafe Infinity » Come Quotes - XVIII » Yesterday 00:02:20

- Jai Ganesh

Come Quotes - XVIII

1. I believe cats to be spirits come to earth. A cat, I am sure, could walk on a cloud without coming through. - Jules Verne

2. Luck has nothing to do with it, because I have spent many, many hours, countless hours, on the court working for my one moment in time, not knowing when it would come. - Serena Williams

3. I'd say it's been my biggest problem all my life... it's money. It takes a lot of money to make these dreams come true. - Walt Disney

4. All of my misfortunes come from having thought too well of my fellows. - Jean-Jacques Rousseau

5. When people see your personality come out, they feel so good, like they actually know who you are. - Usain Bolt

6. Our birth is but a sleep and a forgetting. Not in entire forgetfulness, and not in utter nakedness, but trailing clouds of glory do we come. - William Wordsworth

7. You do things when the opportunities come along. I've had periods in my life when I've had a bundle of ideas come along, and I've had long dry spells. If I get an idea next week, I'll do something. If not, I won't do a darn thing. - Warren Buffett

8. I've been lucky. Opportunities don't often come along. So, when they do, you have to grab them. - Audrey Hepburn

#17 This is Cool » Hydrogen Peroxide » 2026-03-01 18:03:57

- Jai Ganesh

Hydrogen Peroxide

Gist

Hydrogen peroxide (H2O2) is a powerful, versatile oxidizing agent and antiseptic, commonly used at 3% concentration for treating minor skin wounds, mouth irritation, bleaching hair, and disinfecting surfaces. It acts as an antimicrobial by releasing oxygen to break down microorganisms, though it can damage healthy tissue.

Hydrogen peroxide is used as a versatile disinfectant, antiseptic, and bleaching agent for wound cleaning, surface sanitizing, laundry whitening, and hair lightening. Industrially, it bleaches paper, treats wastewater, and aids in chemical synthesis, and its uses extend to food packaging and agriculture. It should be used cautiously on skin to avoid damage.

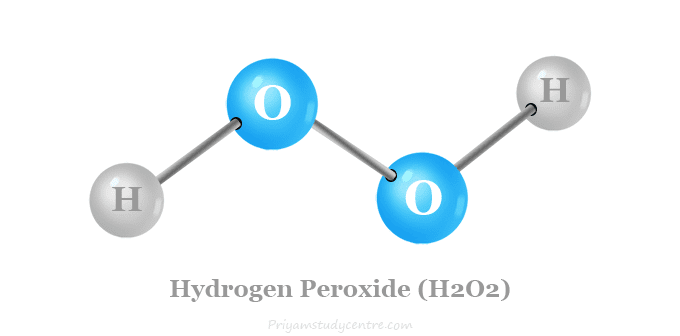

Summary

Hydrogen peroxide is a chemical compound with the formula H2O2. In its pure form, it is a very pale blue liquid; however, at lower concentrations, it appears colorless due to the faintness of the blue coloration. The hydrogen peroxide molecule is asymmetrical and highly polar. Its strong tendency to form hydrogen bond networks results in greater viscosity compared to water. It is used as an oxidizer, bleaching agent, and antiseptic, usually as a dilute solution (3%–6% by weight) in water for consumer use and in higher concentrations for industrial use. Concentrated hydrogen peroxide, or "high-test peroxide", decomposes explosively when heated and has been used as both a monopropellant and an oxidizer in rocketry.

Hydrogen peroxide is a reactive oxygen species and the simplest peroxide, a compound having an oxygen–oxygen single bond. It decomposes slowly into water and elemental oxygen when exposed to light, and rapidly in the presence of organic or reactive compounds. It is typically stored with a stabilizer in a weakly acidic solution in an opaque bottle. Hydrogen peroxide is found in biological systems including the human body. Enzymes that use or decompose hydrogen peroxide are classified as peroxidases.
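The decomposition into water and oxygen follows 2 H2O2 → 2 H2O + O2, so every two molecules of peroxide release one molecule of O2. The sketch below uses that stoichiometry to estimate how much oxygen a 3% household solution could release if it decomposed completely. It assumes rounded standard atomic weights (H = 1.008, O = 15.999) and a concentration by weight; the function name is hypothetical.

```python
# Approximate molar masses in g/mol (rounded standard atomic weights).
M_H = 1.008
M_O = 15.999
M_H2O2 = 2 * M_H + 2 * M_O  # about 34.014 g/mol
M_O2 = 2 * M_O              # about 31.998 g/mol


def oxygen_released_g(solution_g: float, mass_fraction: float) -> float:
    """Grams of O2 released if all the H2O2 in a solution decomposes.

    Based on 2 H2O2 -> 2 H2O + O2 (one mole of O2 per two moles of H2O2).
    mass_fraction is the H2O2 concentration by weight, e.g. 0.03 for 3%.
    """
    if solution_g < 0 or not 0.0 <= mass_fraction <= 1.0:
        raise ValueError("need a non-negative mass and a fraction in [0, 1]")
    h2o2_mol = solution_g * mass_fraction / M_H2O2
    o2_mol = h2o2_mol / 2
    return o2_mol * M_O2


if __name__ == "__main__":
    grams = oxygen_released_g(100.0, 0.03)
    print(f"100 g of 3% solution -> {grams:.2f} g of O2")
```

By this estimate, 100 g of a 3% solution holds 3 g of H2O2 and can release only about 1.4 g of oxygen, which is one reason the dilute consumer product is far less hazardous than high-test peroxide.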

Details

Remember the days when a tumble off your bike inevitably led to a cotton ball dipped in hydrogen peroxide? If you’ve never been subjected to the sting, consider yourself lucky. And rest assured that healthcare experts no longer recommend using hydrogen peroxide for cuts and scrapes.

But it still has a lot of other uses around your home.

We asked family medicine physician Sarah Pickering Beers, MD, to explain how to use hydrogen peroxide safely — and when to leave it on the shelf.

What is hydrogen peroxide?

Hydrogen peroxide is water with an extra oxygen atom (H2O2 instead of H2O). That extra oxygen gives it serious cleaning and germ-killing power.

“The extra oxygen molecule kills bacteria,” Dr. Pickering Beers says. “It’s also what removes color from porous surfaces like fabric.” (In other words, it bleaches them.)

Is hydrogen peroxide safe?

Yes — but with limits. Hydrogen peroxide can be helpful for disinfecting and stain removal.

But don’t use it directly on your skin.

“Hydrogen peroxide has fallen out of favor as a wound cleanser,” Dr. Pickering Beers says. “It irritates the skin and can prevent the wound from healing. Essentially, it can do more harm than good.”

Instead, wash minor wounds with soap and water, pat dry and apply antibiotic ointment and a bandage.

It’s a similar story with acne. In the past, hydrogen peroxide may have been a suggested remedy for pimples, but it’s no longer recommended.

“It’s too irritating for skin and doesn’t stay active long enough to help with acne,” she explains. Opt for acne-fighters like salicylic acid or benzoyl peroxide instead. They penetrate your skin and fight acne-causing bacteria longer — and are gentler on your skin.

What is hydrogen peroxide used for?

Hydrogen peroxide can be used all over your home — from the bathroom to the fridge — as long as you use it safely.

Follow these precautions:

* Keep it out of reach of kids and pets. Hydrogen peroxide can be harmful if swallowed or spilled on skin in large amounts.

* Use gloves and ventilate the space. Peroxide can irritate your skin and eyes. And breathing it in can be harmful.

* If it stops bubbling when you use it, it’s expired. Pour it down the drain and replace it.

* Keep it in its original container or a dark spray bottle. Hydrogen peroxide breaks down over time, especially when exposed to light.

* Stick with 3% medical-grade peroxide. Stronger concentrations, like 35% food-grade peroxide, aren’t safe for home use. “Food-grade peroxide can be toxic if you inhale it or get it on your skin,” Dr. Pickering Beers warns.

With those ground rules covered, let’s clean up.

Cleaning and disinfecting

Hydrogen peroxide kills germs. Use a 50/50 mix of water and peroxide in a spray bottle to disinfect shared objects and surfaces, like:

* Counters

* Cutting boards

* Doorknobs

* Mirrors

* Garbage cans

* Refrigerators

* Sinks and bathtubs

* Toilets

* Toys

Spray, let sit for five minutes and rinse surfaces that touch food.
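Here is a rough worked example of what the 50/50 spray contains. It assumes the 3% household peroxide recommended in the precautions above and treats the volumes as additive, which is a reasonable approximation for dilute solutions in water. On those assumptions the spray comes out to about 1.5% hydrogen peroxide. The function name `diluted_percent` is hypothetical:

```python
def diluted_percent(stock_percent: float, stock_volume: float, water_volume: float) -> float:
    """Approximate concentration (%) after mixing a peroxide stock with water.

    Uses C1 * V1 = C2 * V2 and assumes the volumes simply add, which is
    close enough for dilute aqueous solutions.
    """
    total_volume = stock_volume + water_volume
    if total_volume <= 0:
        raise ValueError("total volume must be positive")
    return stock_percent * stock_volume / total_volume


if __name__ == "__main__":
    # A 50/50 mix: equal volumes (e.g. 1 cup each) of 3% peroxide and water.
    print(diluted_percent(3.0, 1.0, 1.0))  # 1.5
```

The same function gives the strength of any other ratio. Changing only the amount of water shows how quickly the concentration falls.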

Washing produce

Want a cheap, simple way to clean fruits and veggies?

Add 1/4 cup of peroxide to a large bowl or sink full of water. Soak your fruits and veggies, rinse them well and allow them to dry.

This method helps remove germs and pesticides — and may even help your produce last longer.

Removing household stains

Hydrogen peroxide is a natural bleach. It works great on white or off-white surfaces — but test a small area first. Like bleach, it can remove color, so avoid using it on colored fabrics.

Try it on:

* Carpet stains: Spray on white carpet and blot gently.

* Clothing stains: Soak white clothes in a mix of water and 1 cup of peroxide for 30 minutes. Or add peroxide to your washer’s bleach compartment.

* Grout: Spray on white tile grout, let it sit, then scrub.

* Cookware: Sprinkle baking soda on ceramic pots and pans, spray with peroxide, let sit 10 minutes and rinse.

Cleaning beauty tools

Hydrogen peroxide isn’t a skin care product, but you can use it to sanitize tools, like your:

* Nail clippers

* Tweezers

* Eyelash curlers

Nails

Noticing yellow or discolored fingernails? Or did opting for midnight blue during your last mani-pedi leave your nails stained?

Soaking your nails in warm water and 3 tablespoons of peroxide for three minutes can brighten them up.

This method works best on natural nails. Don’t use it if you have cuts or broken skin around your cuticles, and stop if you notice irritation.

Teeth

Hydrogen peroxide is found in many over-the-counter teeth-whitening products. It can help lift stains, but use it with care.

“Talk to your dentist before trying whitening products,” advises Dr. Pickering Beers. “They can make your teeth more sensitive.”

You can also try gargling with diluted peroxide to kill everyday germs in your mouth. Or choose mouthwash that already contains peroxide (and probably tastes better). Just be sure not to swallow it.

You can also try using hydrogen peroxide to clean your toothbrush. Dip it in peroxide for five minutes to kill germs and rinse thoroughly with water. If you choose this method, be sure to change out the peroxide daily — and always replace your toothbrush at least every three to four months.

Bottom line

Hydrogen peroxide is a powerful cleaning solution — but it’s not for your body. So, if you haven’t already, it’s time to move your brown bottle of bubbly stuff from the medicine cabinet and find it a new home with the cleaning supplies.

Additional Information

Hydrogen peroxide is a colorless liquid at room temperature with a bitter taste. Small amounts of gaseous hydrogen peroxide occur naturally in the air. Hydrogen peroxide is unstable, decomposing readily to oxygen and water with release of heat. Although nonflammable, it is a powerful oxidizing agent that can cause spontaneous combustion when it comes in contact with organic material. Hydrogen peroxide is found in many households at low concentrations (3-9%) for medicinal applications and as a clothes and hair bleach. In industry, hydrogen peroxide in higher concentrations is used as a bleach for textiles and paper, as a component of rocket fuels, and for producing foam rubber and organic chemicals.

Hydrogen peroxide, aqueous solution, stabilized, with more than 60% hydrogen peroxide appears as a colorless liquid. Vapors may irritate the eyes and mucous membranes. Under prolonged exposure to fire or heat, containers may violently rupture due to decomposition. Used to bleach textiles and wood pulp, in chemical manufacturing and food processing.

Hydrogen peroxide, aqueous solution, with not less than 20% but not more than 60% hydrogen peroxide (stabilized as necessary) appears as a colorless aqueous solution. Vapors may irritate the eyes and mucous membranes. Contact with most common metals and their compounds may cause violent decomposition, especially at higher concentrations. Contact with combustible materials may result in spontaneous ignition. Prolonged exposure to fire or heat may cause decomposition and rupturing of the container. Used to bleach textiles and wood pulp, in chemical manufacturing and food processing.

Hydrogen peroxide solution is a colorless liquid consisting of hydrogen peroxide dissolved in water. Its vapors are irritating to the eyes and mucous membranes. The material, especially at higher concentrations, can decompose violently on contact with most common metals and their compounds. Contact with combustible materials can result in spontaneous ignition. Under prolonged exposure to fire or heat, containers may violently rupture due to decomposition of the material. It is used to bleach textiles and wood pulp, in chemical manufacturing and food processing.

Hydrogen peroxide, stabilized, appears as a crystalline solid at low temperatures. It has a slightly pungent, irritating odor. It is used in the bleaching and deodorizing of textiles, wood pulp, hair, fur, etc.; as a source of organic and inorganic peroxides; pulp and paper industry; plasticizers; rocket fuel; foam rubber; manufacture of glycerol; antichlor; dyeing; electroplating; antiseptic; laboratory reagent; epoxidation; hydroxylation; oxidation and reduction; viscosity control for starch and cellulose derivatives; refining and cleaning metals; bleaching and oxidizing agent in foods; neutralizing agent in wine distillation; seed disinfectant; substitute for chlorine in water and sewage treatment.

Hydrogen peroxide is the simplest peroxide, with the chemical formula H2O2. It is unstable in the presence of a base or catalyst and is typically stored with a stabilizer in a weakly acidic solution. If heated to its boiling point, it may undergo potentially explosive thermal decomposition. In mammals, hydrogen peroxide is formed during the reduction of oxygen, for example directly through a two-electron transfer reaction. As a natural product of metabolism, it is readily broken down by catalase in normal cells. Because of its potent, broad-spectrum antimicrobial action, hydrogen peroxide is used in both liquid and gas form as an oxidative biocide for preservation, disinfection, and sterilization. It is used in industrial and cosmetic applications as a bleaching agent. The FDA also classifies hydrogen peroxide as generally recognized as safe (GRAS). It is used as an antimicrobial agent in starch and cheese products, and as an oxidizing and reducing agent in products containing dried eggs, dried egg whites, and dried egg yolks.

Hydrogen peroxide is a peroxide and oxidizing agent with disinfectant, antiviral, and antibacterial activities. When it is used as a rinse or gargle or applied topically, hydrogen peroxide exerts its oxidizing activity and produces free radicals. These cause oxidative damage to proteins and membrane lipids. This may inactivate and destroy pathogens and may prevent the spread of infection.

Uses of Hydrogen Peroxide

* Hydrogen peroxide is used in medicine and as a bleaching agent in everyday life. About 30% of industrial peroxide is used as a bleaching agent for textiles, paper pulp, leather, and oils.

* About 33% is used in the manufacture of borax, epoxides, propylene oxide, and other chemicals.

* In environmental science, peroxide is used for pollution control, for example in sewage and waste treatment.

* It is used as a mild antiseptic to prevent infection of small cuts and burns on the skin.

* Hydrogen peroxide is also used in medicine as a mouth rinse, to freshen breath or to clean the mouth.

#18 Science HQ » Kidney Function Test » 2026-03-01 17:23:36

- Jai Ganesh

- Replies: 0

Kidney Function Test

Gist

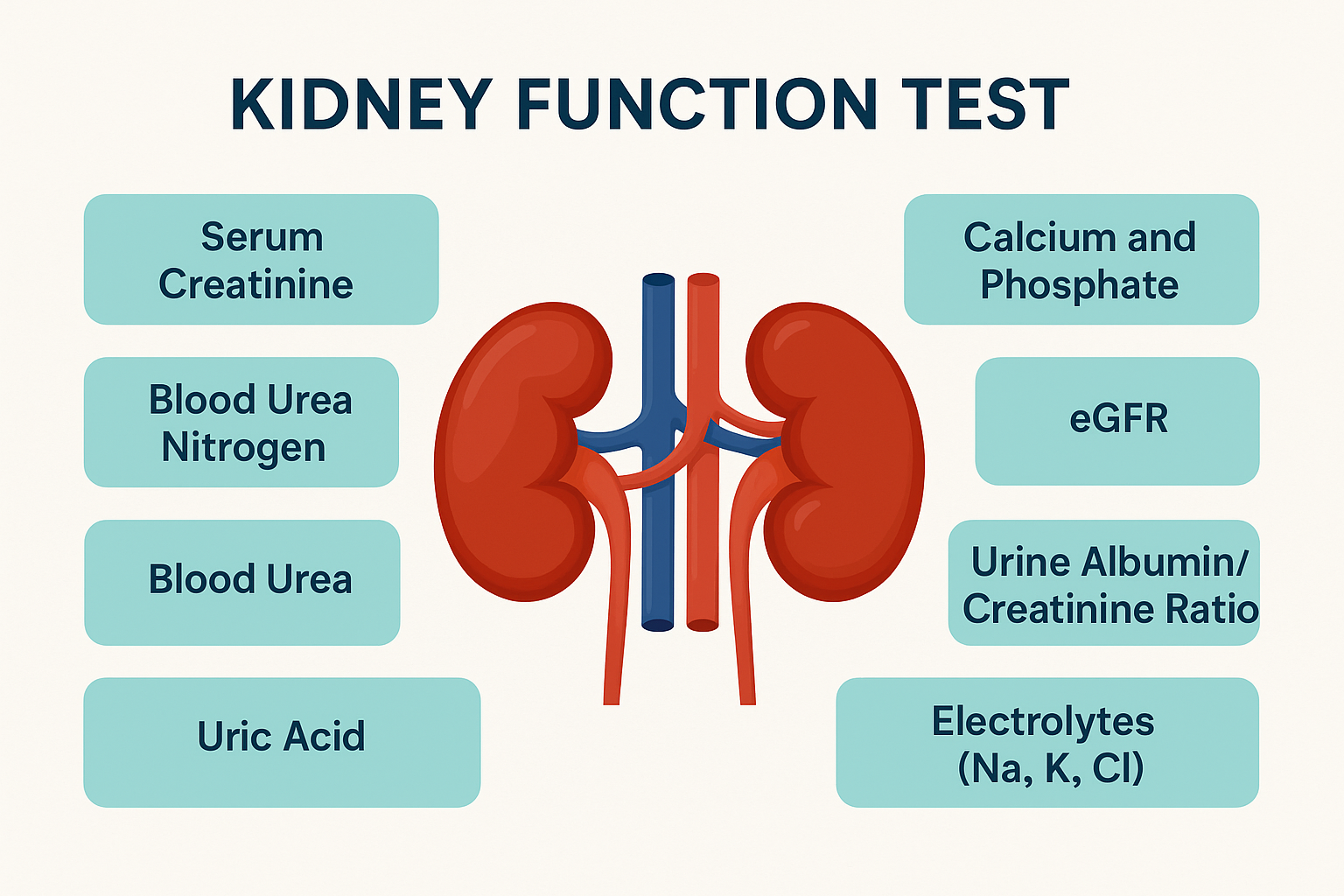

Kidney function tests (KFT) are blood and urine analyses, including eGFR, creatinine, and BUN. They evaluate how efficiently your kidneys filter waste and maintain fluid balance. These tests are vital for detecting early-stage chronic kidney disease (CKD), particularly in people with diabetes or hypertension. A normal eGFR is above 90 mL/min/1.73 m².

The main kidney function tests use blood and urine samples to check how well the kidneys filter waste. They primarily measure creatinine and blood urea nitrogen (BUN) and calculate the estimated glomerular filtration rate (eGFR) from a blood test. Urine tests check for albuminuria (protein in the urine) and other abnormalities. Imaging scans are sometimes used to look for structural problems.
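The post does not say which formula turns a creatinine result into an eGFR. The sketch below assumes the 2021 CKD-EPI creatinine equation, which is one widely used option and is not named in the source. It expects serum creatinine in mg/dL and age in years. The function name `egfr_ckd_epi_2021` is hypothetical, and laboratories may use a different equation:

```python
def egfr_ckd_epi_2021(serum_creatinine_mg_dl: float, age_years: float, female: bool) -> float:
    """Estimated GFR (mL/min/1.73 m^2) from the 2021 CKD-EPI creatinine equation.

    Assumption: this particular equation is chosen for illustration; the
    source text does not specify which formula a lab applies.
    """
    if serum_creatinine_mg_dl <= 0:
        raise ValueError("serum creatinine must be positive")
    # Sex-specific constants of the 2021 CKD-EPI equation.
    kappa = 0.7 if female else 0.9
    alpha = -0.241 if female else -0.302
    ratio = serum_creatinine_mg_dl / kappa
    egfr = (
        142.0
        * min(ratio, 1.0) ** alpha      # applies only when creatinine is below kappa
        * max(ratio, 1.0) ** -1.200     # applies only when creatinine is above kappa
        * 0.9938 ** age_years           # gradual decline with age
    )
    if female:
        egfr *= 1.012
    return egfr


if __name__ == "__main__":
    value = egfr_ckd_epi_2021(1.0, 50, female=False)
    print(f"eGFR about {value:.0f} mL/min/1.73 m^2")
    print("at or above 90" if value >= 90 else "below 90")
```

For a 50-year-old man with a creatinine of 1.0 mg/dL, this comes out just above the 90 mL/min/1.73 m² figure given above. Higher creatinine or older age pushes the estimate down.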

Summary

A kidney function test is any clinical or laboratory procedure designed to evaluate aspects of renal (kidney) capacity and efficiency and to aid in the diagnosis of kidney disorders. Such tests fall into several categories:

* Concentration and dilution tests. The specific gravity of urine is measured at regular intervals after water restriction or a large water intake, which shows how well the kidneys can conserve water.

* Clearance tests. These estimate the filtration rate of the glomeruli, the principal filtering structures of the kidneys (see inulin clearance), and overall renal blood flow (see phenolsulfonphthalein test).

* Visual and physical examination of the urine. This usually records physical characteristics such as colour, total volume, and specific gravity. It also checks for the abnormal presence of pus, hyaline casts (precipitations of pure protein from the kidney tubules), and red and white blood cells. Proteinuria, the presence of protein in the urine, is often the first abnormal finding indicative of kidney disease.

* Determination of the concentration of various substances in the urine, notably glucose, amino acids, phosphate, sodium, and potassium. This helps detect impairment of the specific kidney mechanisms normally responsible for their reabsorption.
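Clearance tests rest on a standard relationship. The clearance C of a substance equals its urine concentration U times the urine flow rate V, divided by its plasma concentration P. The sketch below applies this to a timed urine collection, such as a 24-hour sample. The function name and the example values are hypothetical and illustrative, not real patient data. When the marker is creatinine, the result roughly approximates the glomerular filtration rate:

```python
def clearance_ml_per_min(
    urine_conc: float,
    plasma_conc: float,
    urine_volume_ml: float,
    collection_minutes: float,
) -> float:
    """Renal clearance C = (U * V) / P, where V is urine flow in mL/min.

    urine_conc and plasma_conc must share the same units (e.g. mg/dL)
    so that they cancel and the result is in mL/min.
    """
    if plasma_conc <= 0 or collection_minutes <= 0:
        raise ValueError("plasma concentration and collection time must be positive")
    urine_flow_ml_per_min = urine_volume_ml / collection_minutes
    return urine_conc * urine_flow_ml_per_min / plasma_conc


if __name__ == "__main__":
    # Illustrative values: 1440 mL collected over 24 hours (1440 minutes),
    # urine creatinine 100 mg/dL, plasma creatinine 1.0 mg/dL.
    print(clearance_ml_per_min(100.0, 1.0, 1440.0, 24 * 60))  # 100.0
```

The concentration units cancel, so only the urine volume and collection time determine the mL/min units of the result.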

In addition to clinical and laboratory tests, the use of X-rays and radioisotopes is also valuable in the diagnosis of kidney disorders.

Details

Kidney function tests measure how efficiently your kidneys are working. Most of these tests check how well your kidneys clear waste from your blood. A kidney test may involve a blood test, 24-hour urine sample or both. You usually have your test results the same day or within a few days.

Overview:

What are kidney function tests?

Kidney function tests are urine (pee) and/or blood tests that evaluate how well your kidneys work. Your kidneys support your overall health by getting rid of waste and balancing body fluids and electrolytes. Most kidney function tests measure how well your glomeruli (glo-MARE-yoo-lye) work. Your glomeruli are tiny filters in your kidney that help clean your blood. The tests measure how efficiently glomeruli clear wastes from your blood.

Kidney function tests can make you feel a little anxious. It’s hard for some people to relax for a blood draw, and it can feel weird peeing into a cup and handing it over to a healthcare provider. But they’re an important tool in monitoring your kidney health. Providers understand these feelings and will do their best to make you feel comfortable.

Another name for kidney function tests is renal function tests.

What do your kidneys do?