Math Is Fun Forum


#176 2018-07-17 00:03:39

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

159) Carbon-14 dating

Carbon-14 dating, also called radiocarbon dating, method of age determination that depends upon the decay to nitrogen of radiocarbon (carbon-14). Carbon-14 is continually formed in nature by the interaction of neutrons with nitrogen-14 in the Earth’s atmosphere; the neutrons required for this reaction are produced by cosmic rays interacting with the atmosphere.

Radiocarbon present in molecules of atmospheric carbon dioxide enters the biological carbon cycle: it is absorbed from the air by green plants and then passed on to animals through the food chain. Radiocarbon decays slowly in a living organism, and the amount lost is continually replenished as long as the organism takes in air or food. Once the organism dies, however, it ceases to absorb carbon-14, so that the amount of the radiocarbon in its tissues steadily decreases. Carbon-14 has a half-life of 5,730 ± 40 years—i.e., half the amount of the radioisotope present at any given time will undergo spontaneous disintegration during the succeeding 5,730 years. Because carbon-14 decays at this constant rate, an estimate of the date at which an organism died can be made by measuring the amount of its residual radiocarbon.
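The constant decay rate described above can be turned directly into an age estimate. Here is a minimal Python sketch. It assumes simple exponential decay with the 5,730-year half-life quoted above, and it assumes the organism started with today's carbon-14 level. Real laboratories also apply calibration corrections, which this sketch ignores.

```python
import math

HALF_LIFE_YEARS = 5730.0  # carbon-14 half-life from the text (uncertainty of +/- 40 years ignored)

def fraction_remaining(age_years: float, half_life: float = HALF_LIFE_YEARS) -> float:
    """Fraction of the original carbon-14 left after age_years: (1/2) ** (t / half_life)."""
    return 0.5 ** (age_years / half_life)

def radiocarbon_age(fraction: float, half_life: float = HALF_LIFE_YEARS) -> float:
    """Years since death, solving fraction = (1/2) ** (t / half_life) for t."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    return half_life * math.log(1.0 / fraction) / math.log(2.0)

for frac in (0.5, 0.25, 0.1):
    print(f"{frac:.0%} of original C-14 left -> about {radiocarbon_age(frac):,.0f} years")

# How little carbon-14 is left at the upper end of the method's range:
print(f"left after 50,000 years: {fraction_remaining(50_000):.2%}")
```

After 50,000 years only roughly 0.2 percent of the original carbon-14 remains. This helps explain why the method stops being useful at about that age.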

The carbon-14 method was developed by the American physicist Willard F. Libby about 1946. It has proved to be a versatile technique of dating fossils and archaeological specimens from 500 to 50,000 years old. The method is widely used by Pleistocene geologists, anthropologists, archaeologists, and investigators in related fields.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#177 2018-07-17 03:24:41

- Jai Ganesh


Re: Miscellany

160) Nuclear Power

Nuclear power, electricity generated by power plants that derive their heat from fission in a nuclear reactor. Except for the reactor, which plays the role of a boiler in a fossil-fuel power plant, a nuclear power plant is similar to a large coal-fired power plant, with pumps, valves, steam generators, turbines, electric generators, condensers, and associated equipment.

World Nuclear Power

Nuclear power provides almost 15 percent of the world’s electricity. The first nuclear power plants, which were small demonstration facilities, were built in the 1950s and 1960s. These prototypes provided “proof-of-concept” and laid the groundwork for the development of the higher-power reactors that followed.

The nuclear power industry went through a period of remarkable growth until about 1990, when the portion of electricity generated by nuclear power reached a high of 17 percent. That percentage remained stable through the 1990s and began to decline slowly around the turn of the 21st century. The main reason was that total electricity generation grew faster than nuclear generation, because other sources of energy (particularly coal and natural gas) expanded more quickly to meet the rising demand. This trend appears likely to continue well into the 21st century. The Energy Information Administration (EIA), a statistical arm of the U.S. Department of Energy, has projected that world electricity generation will roughly double between 2005 and 2035 (from more than 15,000 terawatt-hours to 35,000 terawatt-hours) and that generation from all energy sources except petroleum will continue to grow.

In 2012 more than 400 nuclear reactors were in operation in 30 countries around the world, and more than 60 were under construction. The United States has the largest nuclear power industry, with more than 100 reactors; it is followed by France, which has more than 50. Of the top 15 electricity-producing countries in the world, all but two, Italy and Australia, utilize nuclear power to generate some of their electricity. The overwhelming majority of nuclear reactor generating capacity is concentrated in North America, Europe, and Asia. The early period of the nuclear power industry was dominated by North America (the United States and Canada), but in the 1980s that lead was overtaken by Europe. The EIA projects that Asia will have the largest nuclear capacity by 2035, mainly because of an ambitious building program in China.

A typical nuclear power plant has a generating capacity of approximately one gigawatt (GW; one billion watts) of electricity. At this capacity, a power plant that operates about 90 percent of the time (the U.S. industry average) will generate about eight terawatt-hours of electricity per year. The predominant types of power reactors are pressurized water reactors (PWRs) and boiling water reactors (BWRs), both of which are categorized as light water reactors (LWRs) because they use ordinary (light) water as a moderator and coolant. LWRs make up more than 80 percent of the world’s nuclear reactors, and more than three-quarters of the LWRs are PWRs.
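The eight-terawatt-hour figure follows from multiplying capacity by the fraction of the year the plant runs. Here is a short Python check of that arithmetic. It assumes a 365-day year of 8,760 hours.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours (leap years ignored)

def annual_generation_twh(capacity_gw: float, capacity_factor: float) -> float:
    """Energy produced in one year, in terawatt-hours."""
    gwh = capacity_gw * capacity_factor * HOURS_PER_YEAR  # GW x hours = GWh
    return gwh / 1000.0  # 1 TWh = 1,000 GWh

print(f"{annual_generation_twh(1.0, 0.90):.2f} TWh per year")  # 7.88 TWh per year
```

At full output all year, the same plant would produce about 8.8 TWh. Running 90 percent of the time brings it to roughly 7.9 TWh, which rounds to the "about eight" quoted above.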

Issues Affecting Nuclear Power

Countries may have a number of motives for deploying nuclear power plants, including a lack of indigenous energy resources, a desire for energy independence, and a goal to limit greenhouse gas emissions by using a carbon-free source of electricity. The benefits of applying nuclear power to these needs are substantial, but they are tempered by a number of issues that need to be considered. These include the safety of nuclear reactors, their cost, the disposal of radioactive waste, and the potential for the nuclear fuel cycle to be diverted to the development of nuclear weapons. All of these concerns are discussed below.

Safety

The safety of nuclear reactors has become paramount since the Fukushima accident of 2011. The lessons learned from that disaster included the need to (1) adopt risk-informed regulation, (2) strengthen management systems so that decisions made in the event of a severe accident are based on safety and not cost or political repercussions, (3) periodically assess new information on risks posed by natural hazards such as earthquakes and associated tsunamis, and (4) take steps to mitigate the possible consequences of a station blackout.

The four reactors involved in the Fukushima accident were first-generation BWRs designed in the 1960s. Newer Generation III designs, on the other hand, incorporate improved safety systems and rely more on so-called passive safety designs (i.e., directing cooling water by gravity rather than moving it by pumps) in order to keep the plants safe in the event of a severe accident or station blackout. For instance, in the Westinghouse AP1000 design, residual heat would be removed from the reactor by water circulating under the influence of gravity from reservoirs located inside the reactor’s containment structure. Active and passive safety systems are incorporated into the European Pressurized Water Reactor (EPR) as well.

Traditionally, enhanced safety systems have resulted in higher construction costs, but passive safety designs, by requiring the installation of far fewer pumps, valves, and associated piping, may actually yield a cost saving.

Economics

A convenient economic measure used in the power industry is known as the levelized cost of electricity, or LCOE, which is the cost of generating one kilowatt-hour (kWh) of electricity averaged over the lifetime of the power plant. The LCOE is also known as the “busbar cost,” as it represents the cost of the electricity up to the power plant’s busbar, a conducting apparatus that links the plant’s generators and other components to the distribution and transmission equipment that delivers the electricity to the consumer.

The busbar cost of a power plant is determined by 1) capital costs of construction, including finance costs, 2) fuel costs, 3) operation and maintenance (O&M) costs, and 4) decommissioning and waste-disposal costs. For nuclear power plants, busbar costs are dominated by capital costs, which can make up more than 70 percent of the LCOE. Fuel costs, on the other hand, are a relatively small factor in a nuclear plant’s LCOE (less than 20 percent). As a result, the cost of electricity from a nuclear plant is very sensitive to construction costs and interest rates but relatively insensitive to the price of uranium. Indeed, the fuel costs for coal-fired plants tend to be substantially greater than those for nuclear plants. Even though fuel for a nuclear reactor has to be fabricated, the cost of nuclear fuel is substantially less than the cost of fossil fuel per kilowatt-hour of electricity generated. This fuel cost advantage is due to the enormous energy content of each unit of nuclear fuel compared to fossil fuel.
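The four cost components listed above can be combined into an LCOE with the usual discounted-cash-flow formula: the present value of lifetime costs divided by the present value of lifetime output. Below is a minimal Python sketch of that calculation. The plant size, costs, 40-year life, and 7 percent discount rate are hypothetical inputs chosen only for illustration; they are not industry data.

```python
def lcoe_per_kwh(capital, annual_fuel, annual_om, decommissioning,
                 annual_kwh, years, discount_rate):
    """Levelized cost per kWh, split by component.

    Capital is spent in year 0. Fuel, O&M, and output occur in years 1..years.
    Decommissioning is paid in year years + 1. All money is in dollars.
    """
    def pv(amount, year):
        # Present value of an amount paid or produced in a given year.
        return amount / (1.0 + discount_rate) ** year

    run_years = range(1, years + 1)
    costs = {
        "capital": capital,
        "fuel": sum(pv(annual_fuel, t) for t in run_years),
        "o&m": sum(pv(annual_om, t) for t in run_years),
        "decommissioning": pv(decommissioning, years + 1),
    }
    energy = sum(pv(annual_kwh, t) for t in run_years)
    return {name: value / energy for name, value in costs.items()}

# Hypothetical 1 GW plant (1e6 kW) running 90% of the time. Every figure is illustrative only.
annual_kwh = 1.0e6 * 0.90 * 8760
inputs = dict(capital=5.0e9, annual_fuel=0.007 * annual_kwh, annual_om=0.012 * annual_kwh,
              decommissioning=5.0e8, annual_kwh=annual_kwh, years=40)

parts = lcoe_per_kwh(discount_rate=0.07, **inputs)
total = sum(parts.values())
for name, cost in parts.items():
    print(f"{name:>16}: {cost * 100:5.2f} cents/kWh ({cost / total:.0%} of LCOE)")
print(f"{'total':>16}: {total * 100:5.2f} cents/kWh")

# Sensitivity to interest rates: a higher discount rate raises the capital share.
higher = lcoe_per_kwh(discount_rate=0.10, **inputs)
print(f"capital at 10%: {higher['capital'] * 100:.2f} cents/kWh")
```

With inputs like these, the up-front capital term dominates. Raising the discount rate increases that term, because the same construction bill is spread over output whose present value is now smaller. Changing the fuel price, by contrast, moves the total only slightly. This matches the sensitivity described in the paragraph above.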

The O&M costs for nuclear plants tend to be higher than those for fossil-fuel plants because of the complexity of a nuclear plant and the regulatory issues that arise during the plant’s operation. Costs for decommissioning and waste disposal are included in the fees charged by electric utilities. In the United States, nuclear-generated electricity was assessed a fee of $0.001 per kilowatt-hour to pay for a permanent repository of high-level nuclear waste. This seemingly modest fee yielded about $750 million per year for the Nuclear Waste Fund.
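As a rough consistency check, the fee and the fund's annual income together imply how much nuclear electricity was being taxed. Here is a short Python sketch of that arithmetic. It uses the two figures given above plus the roughly 7.9 TWh per year of a 1 GW plant running 90 percent of the time.

```python
FEE_PER_KWH = 0.001          # dollars per kWh (from the text)
ANNUAL_FUND_INCOME = 750e6   # dollars per year (from the text)
TWH_PER_GW_PLANT = 1.0 * 0.90 * 8760 / 1000  # 1 GW running 90% of the year, ~7.9 TWh

implied_twh = ANNUAL_FUND_INCOME / FEE_PER_KWH / 1e9  # kWh -> TWh
equivalent_plants = implied_twh / TWH_PER_GW_PLANT
print(f"about {implied_twh:.0f} TWh per year, "
      f"or roughly {equivalent_plants:.0f} one-gigawatt plants")
```

The result is about 750 TWh per year, or the output of roughly 95 one-gigawatt plants. That is broadly in line with the 100-plus U.S. reactors mentioned earlier in the post.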

At the beginning of the 21st century, electricity from nuclear plants typically cost less than electricity from coal-fired plants. This advantage may not hold for the newer generation of nuclear power plants, however, given the sensitivity of busbar costs to construction costs and interest rates. Another major uncertainty is the possibility of carbon taxes or stricter regulations on carbon dioxide emissions. These measures would almost certainly raise the operating costs of coal plants and thus make nuclear power more competitive.

Radioactive-waste disposal

Spent nuclear reactor fuel and the waste stream generated by fuel reprocessing contain radioactive materials and must be conditioned for permanent disposal. The amount of waste coming out of the nuclear fuel cycle is very small compared with the amount of waste generated by fossil fuel plants. However, nuclear waste is highly radioactive (hence its designation as high-level waste, or HLW), which makes it very dangerous to the public and the environment. Extreme care must be taken to ensure that it is stored safely and securely, preferably deep underground in permanent geologic repositories.

Despite years of research into the science and technology of geologic disposal, no permanent disposal site is in use anywhere in the world. In the last decades of the 20th century, the United States made preparations for constructing a repository for commercial HLW beneath Yucca Mountain, Nevada, but by the turn of the 21st century, this facility had been delayed by legal challenges and political decisions. Pending construction of a long-term repository, U.S. utilities have been storing HLW in so-called dry casks aboveground. Some other countries using nuclear power, such as Finland, Sweden, and France, have made more progress and expect to have HLW repositories operational in the period 2020–25.

Proliferation

The claim has long been made that the development and expansion of commercial nuclear power led to nuclear weapons proliferation, because elements of the nuclear fuel cycle (including uranium enrichment and spent-fuel reprocessing) can also serve as pathways to weapons development. However, the history of nuclear weapons development does not support the notion of a necessary connection between weapons proliferation and commercial nuclear power.

The first pathway to proliferation, uranium enrichment, can lead to a nuclear weapon based on highly enriched uranium (see nuclear weapon: Principles of atomic (fission) weapons). It is considered relatively straightforward for a country to fabricate a weapon with highly enriched uranium, but the impediment historically has been the difficulty of the enrichment process. Since nuclear reactor fuel for LWRs is only slightly enriched (less than 5 percent of the fissile isotope uranium-235) and weapons need a minimum of 20 percent enriched uranium, commercial nuclear power is not a viable pathway to obtaining highly enriched uranium.

The second pathway to proliferation, reprocessing, results in the separation of plutonium from the highly radioactive spent fuel. The plutonium can then be used in a nuclear weapon. However, reprocessing is heavily guarded in those countries where it is conducted, making commercial reprocessing an unlikely pathway for proliferation. Also, it is considered more difficult to construct a weapon with plutonium than with highly enriched uranium.

More than 20 countries have developed nuclear power industries without building nuclear weapons. On the other hand, countries that have built and tested nuclear weapons have not done so by purchasing commercial nuclear reactors, reprocessing the spent fuel, and obtaining plutonium. Instead, some have built facilities for the express purpose of enriching uranium; some have built plutonium production reactors; and some have surreptitiously diverted research reactors to the production of plutonium. All these pathways to nuclear proliferation have been more effective, less expensive, and easier to hide from prying eyes than the commercial nuclear power route. Nevertheless, nuclear proliferation remains a highly sensitive issue, and any country that wishes to launch a commercial nuclear power industry will necessarily draw the close attention of oversight bodies such as the International Atomic Energy Agency.


#178 2018-07-19 00:43:09

- Jai Ganesh


Re: Miscellany

161) Eyeglasses

Eyeglasses, also called glasses or spectacles, lenses set in frames for wearing in front of the eyes to aid vision or to correct such defects of vision as myopia, hyperopia, and astigmatism. In 1268 Roger Bacon made the earliest recorded comment on the use of lenses for optical purposes, but magnifying lenses inserted in frames were used for reading both in Europe and China at this time, and it is a matter of controversy whether the West learned from the East or vice versa. In Europe eyeglasses first appeared in Italy, their introduction being attributed to Alessandro di Spina of Florence. The first portrait to show eyeglasses is that of Hugh of Provence by Tommaso da Modena, painted in 1352. In 1480 Domenico Ghirlandaio painted St. Jerome at a desk from which dangled eyeglasses; as a result, St. Jerome became the patron saint of the spectacle-makers’ guild. The earliest glasses had convex lenses to aid farsightedness. A concave lens for myopia, or nearsightedness, is first evident in the portrait of Pope Leo X painted by Raphael in 1517.

In 1784 Benjamin Franklin invented bifocals, dividing his lenses for distant and near vision, the split parts being held together by the frame. Cemented bifocals were invented in 1884, and the fused and one-piece types followed in 1908 and 1910, respectively. Trifocals and new designs in bifocals were later introduced, including the Franklin bifocal revived in one-piece form.

Originally, lenses were made of transparent quartz and beryl, but increased demand led to the adoption of optical glass, for which Venice and Nürnberg were the chief centres of production. In 1885 Ernst Abbe and Otto Schott demonstrated that incorporating new elements into the glass melt produced many desirable variations in refractive index and dispersive power. In the modern process, glass for lenses is first rolled into plate form. Most lenses are made from clear crown glass of refractive index 1.523. In high myopic corrections, a cosmetic improvement is achieved if the lenses are made of dense flint glass (refractive index 1.69) and coated with a film of magnesium fluoride to reduce surface reflections. Flint glass, or barium crown, which has less dispersive power, is used in fused bifocals. Plastic lenses have become increasingly popular, particularly when the weight of the lenses is a problem, and they are more shatter-resistant than glass ones. In sunglasses, the lenses are tinted to reduce light transmission and avoid glare.
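Why a denser glass helps in strong myopic corrections, and why it is paired with a coating, can be shown with two standard optics formulas. The first is the thin-lens lensmaker's equation: for a plano-concave lens, the power is P = (n − 1)/R. The second is the Fresnel reflectance of an uncoated surface at normal incidence, ((n − 1)/(n + 1))². Here is a minimal Python sketch that applies both formulas. The −10 dioptre power and the 50 mm lens diameter are hypothetical values chosen for illustration, and a real lens design would not use the thin-lens simplification.

```python
import math

def plano_concave_sag_mm(power_diopters: float, n: float, semi_diameter_mm: float) -> float:
    """How much thicker the edge is than the centre for a thin plano-concave lens.

    Thin-lens lensmaker's equation with one flat face: |P| = (n - 1) / R,
    so R = (n - 1) / |P| in metres. A spherical surface of radius R has
    sag R - sqrt(R^2 - h^2) at height h.
    """
    radius_mm = (n - 1.0) / abs(power_diopters) * 1000.0
    h = semi_diameter_mm
    if h >= radius_mm:
        raise ValueError("lens too wide for this curvature")
    return radius_mm - math.sqrt(radius_mm ** 2 - h ** 2)

def surface_reflectance(n: float) -> float:
    """Fraction of light reflected at one uncoated air/glass surface (normal incidence)."""
    return ((n - 1.0) / (n + 1.0)) ** 2

# Hypothetical strong myopic correction: -10 D lens, 50 mm across.
for name, n in (("crown glass", 1.523), ("dense flint", 1.69)):
    sag = plano_concave_sag_mm(-10.0, n, 25.0)
    print(f"{name} (n={n}): edge thicker by ~{sag:.1f} mm, "
          f"{surface_reflectance(n):.1%} reflected per uncoated surface")
```

The higher-index glass needs a flatter curve for the same power, so the edges come out thinner; in this example they are roughly 4.7 mm thicker than the centre instead of roughly 6.4 mm. However, the denser glass also reflects more light at each surface, which is why an anti-reflection film is added.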


#179 2018-07-21 00:09:25

- Jai Ganesh


Re: Miscellany

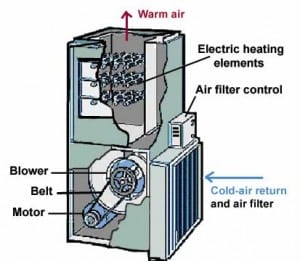

162) Electric furnace

Electric furnace, heating chamber with electricity as the heat source for achieving very high temperatures to melt and alloy metals and refractories. The electricity has no electrochemical effect on the metal but simply heats it.

Modern electric furnaces generally are either arc furnaces or induction furnaces. A third type, the resistance furnace, is still used in the production of silicon carbide and electrolytic aluminum; in this type, the furnace charge (i.e., the material to be heated) serves as the resistance element. In one type of resistance furnace, the heat-producing current is introduced by electrodes buried in the metal. Heat also may be produced by resistance elements lining the interior of the furnace.
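In a resistance furnace, the charge itself acts as the resistor, so the heat released follows Joule's law: P = I²R, with energy E = P·t. The following is a minimal Python sketch of that relationship. The current, resistance, and duration are hypothetical values chosen only to show the arithmetic; they do not describe any particular furnace.

```python
def joule_heating(current_a: float, resistance_ohm: float, seconds: float):
    """Power (W) and energy (J) dissipated in a resistive charge: P = I^2 * R, E = P * t."""
    power_w = current_a ** 2 * resistance_ohm
    return power_w, power_w * seconds

# Hypothetical values: 10,000 A through a charge of 0.005 ohm for one hour.
power, energy = joule_heating(10_000, 0.005, 3600)
print(f"{power / 1e3:.0f} kW, {energy / 3.6e6:.0f} kWh")  # 500 kW, 500 kWh
```

Because power grows with the square of the current, doubling the current quadruples the heating. This is why such furnaces run at very high currents through charges of low resistance.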

Electric furnaces produce roughly two-fifths of the steel made in the United States. They are used by specialty steelmakers to produce almost all the stainless steels, electrical steels, tool steels, and special alloys required by the chemical, automotive, aircraft, machine-tool, transportation, and food-processing industries. Electric furnaces are also used exclusively by mini-mills, which are small plants that melt scrap charges to produce reinforcing bars, merchant bars (e.g., angles and channels), and structural sections.

The German-born British inventor Sir William Siemens first demonstrated the arc furnace in 1879 at the Paris Exposition by melting iron in crucibles. In this furnace, horizontally placed carbon electrodes produced an electric arc above the container of metal. The first commercial arc furnace in the United States was installed in 1906; it had a capacity of four tons and was equipped with two electrodes. Modern furnaces range in heat size (the amount of metal melted in one batch) from a few tons up to 400 tons, and the arcs strike directly into the metal bath from vertically positioned graphite electrodes. Although the three-electrode, three-phase, alternating-current furnace is in general use, single-electrode, direct-current furnaces have been installed more recently.

In the induction furnace, a coil carrying alternating electric current surrounds the container or chamber of metal. Eddy currents are induced in the metal (charge), the circulation of these currents producing extremely high temperatures for melting the metals and for making alloys of exact composition.


#180 2018-07-23 00:44:17

- Jai Ganesh


Re: Miscellany

163) Dyslexia

What is dyslexia?

Dyslexia is a language-based learning disability. Dyslexia refers to a cluster of symptoms that result in people having difficulties with specific language skills, particularly reading. Students with dyslexia often also experience difficulties with other oral and written language skills, such as writing and pronouncing words. Dyslexia affects individuals throughout their lives; however, its impact can change at different stages in a person’s life. It is referred to as a learning disability because dyslexia can make it very difficult for a student to succeed without phonics-based reading instruction that is unavailable in most public schools. When dyslexia is more severe, a student may qualify for special education with specially designed instruction and, as appropriate, accommodations.

What causes dyslexia?

The exact causes of dyslexia are still not completely clear, but anatomical and brain imaging studies show differences in the way the brain of a person with dyslexia develops and functions. Moreover, most people with dyslexia have been found to have difficulty identifying the separate speech sounds within a word and/or learning how letters represent those sounds, a key factor in their reading difficulties. Dyslexia is due neither to a lack of intelligence nor to a lack of desire to learn; with appropriate teaching methods, individuals with dyslexia can learn successfully.

What are the effects of dyslexia?

The impact that dyslexia has is different for each person and depends on the severity of the condition and the effectiveness of instruction or remediation. The core difficulty is with reading words, and this is related to difficulty with processing and manipulating sounds. Some individuals with dyslexia manage to learn early reading and spelling tasks, especially with excellent instruction, but later experience their most challenging problems when more complex language skills are required, such as grammar, understanding textbook material, and writing essays.

People with dyslexia can also have problems with spoken language, even after they have been exposed to good language models in their homes and good language instruction in school. They may find it difficult to express themselves clearly, or to fully comprehend what others mean when they speak. Such language problems are often difficult to recognize, but they can lead to major problems in school, in the workplace, and in relating to other people. The effects of dyslexia can reach well beyond the classroom.

Dyslexia can also affect a person’s self-image. Students with dyslexia often end up feeling less intelligent and less capable than they actually are. After experiencing a great deal of stress due to academic problems, a student may become discouraged about continuing in school.

Are There Other Learning Disabilities Besides Dyslexia?

Dyslexia is one type of learning disability. Other learning disabilities besides dyslexia include the following:

(i) Dyscalculia – a mathematical disability in which a person has unusual difficulty solving arithmetic problems and grasping math concepts.

(ii) Dysgraphia – a condition of impaired handwriting. Impaired handwriting can interfere with learning to spell words in writing and with the speed of writing text. Children with dysgraphia may have only impaired handwriting, only impaired spelling (without reading problems), or both impaired handwriting and impaired spelling.

(iii) Attention Deficit Disorder (ADD) and Attention Deficit Hyperactivity Disorder (ADHD) can and do impact learning, but they are not learning disabilities. An individual can have more than one learning or behavioral disability. In various studies, as many as 50% of those diagnosed with a learning or reading disability have also been diagnosed with ADHD. Although disabilities may co-occur, one is not the cause of the other.

How Common Are Language-Based Learning Disabilities?

Between 15% and 20% of the population has a language-based learning disability. Of the students with specific learning disabilities receiving special education services, 70-80% have deficits in reading. Dyslexia is the most common cause of reading, writing and spelling difficulties. Dyslexia affects males and females nearly equally, as well as people from different ethnic and socio-economic backgrounds.

Can Individuals Who Have Dyslexia Learn To Read?

Yes. If children who have dyslexia receive effective phonological awareness and phonics training in Kindergarten and 1st grade, they will have significantly fewer problems in learning to read at grade level than children who are not identified or helped until 3rd grade. Of the children who are poor readers in 3rd grade, 74% remain poor readers in 9th grade, many because they do not receive appropriate Structured Literacy instruction with the needed intensity or duration. Often they can’t read well as adults either. It is never too late for individuals with dyslexia to learn to read, process, and express information more efficiently. Research shows that programs using Structured Literacy instructional techniques can help children and adults learn to read.

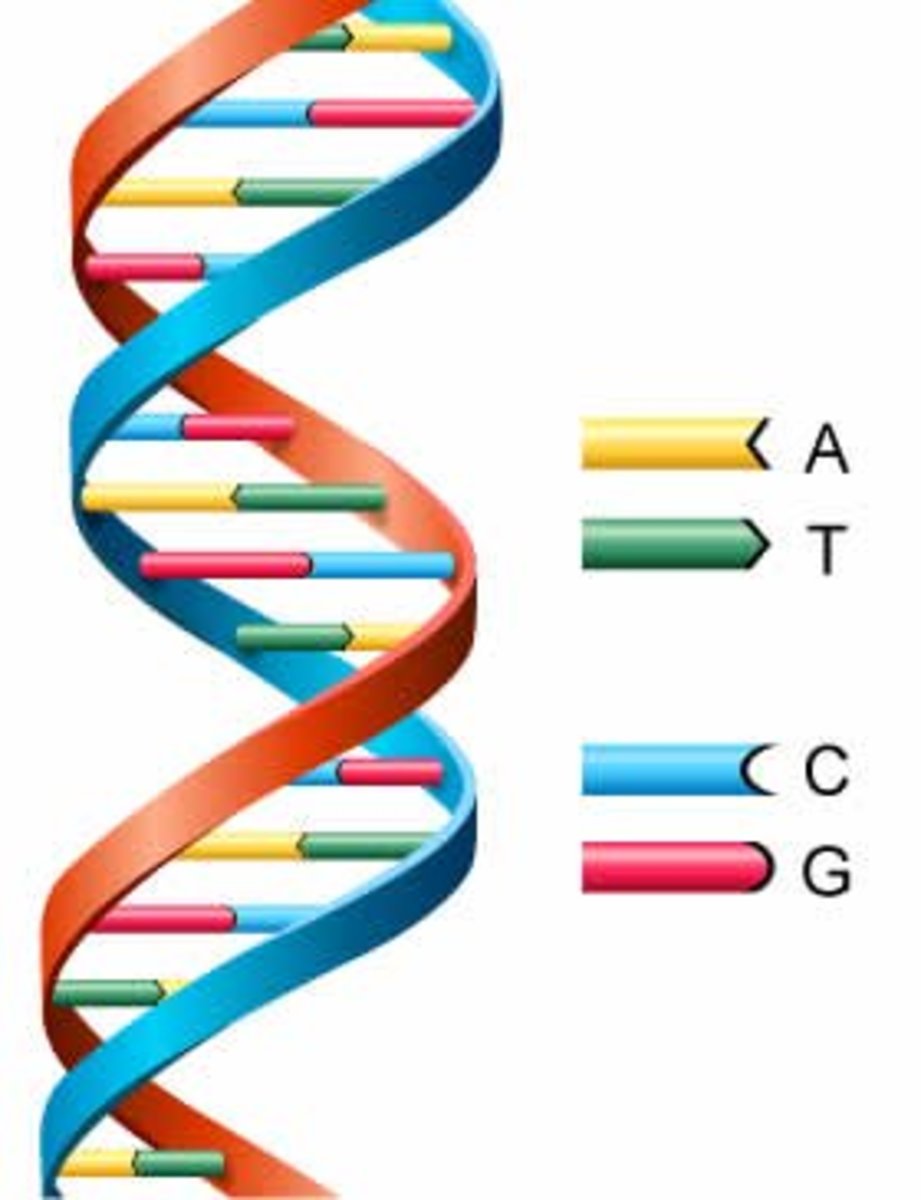

How Do People “Get” Dyslexia?

The causes of dyslexia are neurobiological and genetic. Individuals inherit a genetic predisposition to dyslexia, so chances are that one of the child’s parents, grandparents, aunts, or uncles has dyslexia. Dyslexia is not a disease. With proper diagnosis, appropriate instruction, hard work, and support from family, teachers, friends, and others, individuals who have dyslexia can succeed in school and later as working adults.

--------------------------------------------------------------------------------------------------------------------------------------------------------------

164) Obesity

Obesity, also called corpulence or fatness, excessive accumulation of body fat, usually caused by the consumption of more calories than the body can use. The excess calories are then stored as fat, or adipose tissue. Overweight, if moderate, is not necessarily obesity, particularly in muscular or large-boned individuals.

Defining Obesity

Obesity was traditionally defined as an increase in body weight that was greater than 20 percent of an individual’s ideal body weight—the weight associated with the lowest risk of death, as determined by certain factors, such as age, height, and gender. Based on these factors, overweight could then be defined as a 15–20 percent increase over ideal body weight. However, today the definitions of overweight and obesity are based primarily on measures of height and weight—not morbidity. These measures are used to calculate a number known as body mass index (BMI). This number, which is central to determining whether an individual is clinically defined as obese, parallels fatness but is not a direct measure of body fat. Interpretation of BMI numbers is based on weight status groupings, such as underweight, healthy weight, overweight, and obese, that are adjusted for age and gender. For all adults over age 20, BMI numbers correlate to the same weight status designations; for example, a BMI between 25.0 and 29.9 equates with overweight and 30.0 and above with obesity. Morbid obesity (also known as extreme, or severe, obesity) is defined as a BMI of 40.0 or higher.
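The BMI calculation and the adult cutoffs above can be expressed in a few lines. Here is a minimal Python sketch. BMI is computed in the standard way, as weight in kilograms divided by the square of height in metres. The 25.0, 30.0, and 40.0 cutoffs come from the text. The 18.5 boundary between underweight and healthy weight is the standard WHO/CDC value and is added here as an assumption. The example weight and height are hypothetical.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight in kilograms divided by height in metres squared."""
    return weight_kg / height_m ** 2

def adult_weight_status(bmi_value: float) -> str:
    """Weight status for adults over age 20.

    The 25.0 / 30.0 / 40.0 cutoffs follow the text. The 18.5 underweight cutoff
    is the standard WHO/CDC value (an addition). Using "< 30.0" rather than
    "<= 29.9" avoids a gap for values such as 29.95.
    """
    if bmi_value < 18.5:
        return "underweight"
    if bmi_value < 25.0:
        return "healthy weight"
    if bmi_value < 30.0:
        return "overweight"
    if bmi_value < 40.0:
        return "obese"
    return "morbidly obese"

value = bmi(95.0, 1.75)  # hypothetical adult: 95 kg, 1.75 m
print(f"BMI {value:.1f}: {adult_weight_status(value)}")  # BMI 31.0: obese
```

These cutoffs apply only to adults. As the text notes, BMI parallels fatness but does not measure it directly, so a muscular person can fall in a higher category without excess fat.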

The Obesity Epidemic

Body weight is influenced by the interaction of multiple factors. There is strong evidence of genetic predisposition to fat accumulation, and obesity tends to run in families. However, the rise in obesity in populations worldwide since the 1980s has outpaced the rate at which genetic mutations are normally incorporated into populations on a large scale. In addition, growing numbers of persons in parts of the world where obesity was once rare have also gained excessive weight. According to the World Health Organization (WHO), which considered global obesity an epidemic, in 2014 more than 1.9 billion adults (age 18 or older) worldwide were overweight and 600 million, representing 13 percent of the world’s adult population, were obese.

The prevalence of overweight and obesity varied across countries, across towns and cities within countries, and across populations of men and women. In China and Japan, for instance, the obesity rate for men and women was about 5 percent, but in some cities in China it had climbed to nearly 20 percent. In 2005 it was found that more than 70 percent of Mexican women were obese. WHO survey data released in 2010 revealed that more than half of the people living in countries in the Pacific Islands region were overweight, with some 80 percent of women in American Samoa found to be obese.

Childhood Obesity

Childhood obesity has become a significant problem in many countries. Overweight children often face stigma and suffer from emotional, psychological, and social problems. Obesity can negatively impact a child’s education and future socioeconomic status. In 2004 an estimated nine million American children over age six, including teenagers, were overweight or obese (the terms were typically used interchangeably in describing excess fatness in children). Moreover, during the 1980s and 1990s the prevalence of obesity had doubled among children age 2 to 5 (from 5 percent to 10 percent) and more than doubled among those age 6 to 11 (from 6 percent to 15 percent). By 2008 these numbers had increased again, with nearly 20 percent of children age 2 to 19 in the United States being obese. Estimates for some rural areas of the country indicated that more than 30 percent of school-age children were obese. Similar increases were seen in other parts of the world. In the United Kingdom, for example, the prevalence of obesity among children age 2 to 10 increased from 10 percent in 1995 to 14 percent in 2003. Data from a study conducted there in 2007 indicated that 23 percent of children age 4 to 5 and 32 percent of children age 10 to 11 were overweight or obese. According to WHO data, by 2014 some 41 million children worldwide age 5 or under were overweight or obese.

In 2005 the American Academy of Pediatrics called obesity “the pediatric epidemic of the new millennium.” Overweight and obese children were increasingly diagnosed with high blood pressure, elevated cholesterol, and type II diabetes mellitus—conditions once seen almost exclusively in adults. In addition, overweight children experience broken bones and problems with joints more often than normal-weight children. The long-term consequences of obesity in young people are of great concern to pediatricians and public health experts because obese children are at high risk of becoming obese adults. Experts on longevity have concluded that today’s American youth might “live less healthy and possibly even shorter lives than their parents” if the rising prevalence of obesity is left unchecked.

Curbing the rise in childhood obesity was the aim of the Alliance for a Healthier Generation, a partnership formed in 2005 by the American Heart Association, former U.S. president Bill Clinton, and the children’s television network Nickelodeon. The alliance intended to reach kids through a vigorous public-awareness campaign. Similar projects followed, including American first lady Michelle Obama’s Let’s Move! program, launched in 2010, and campaigns against overweight and obesity were made in other countries as well.

Efforts were also under way to develop more-effective childhood obesity-prevention strategies, including the development of methods capable of predicting infants’ risk of later becoming overweight or obese. One such tool reported in 2012 was found to successfully predict newborn obesity risk by taking into account newborn weight, maternal and paternal BMI, the number of members in the newborn’s household, maternal occupational status, and maternal smoking during pregnancy.

Causes Of Obesity

In European and other Caucasian populations, genome-wide association studies have identified genetic variations in small numbers of persons with childhood-onset morbid obesity or adult morbid obesity. In one study, a chromosomal deletion involving 30 genes was identified in a subset of severely obese individuals whose condition manifested in childhood. Although the deleted segment was found in less than 1 percent of the morbidly obese study population, its loss was believed to contribute to aberrant hormone signaling, namely of leptin and insulin, which regulate appetite and glucose metabolism, respectively. Dysregulation of these hormones is associated with overeating (hyperphagia) and with tissue resistance to insulin, increasing the risk of type II diabetes. The identification of genomic defects in persons affected by morbid obesity has indicated that, at least for some individuals, the condition arises from a genetic cause.

For most persons affected by obesity, however, the causes of their condition are more complex, involving the interaction of multiple factors. Indeed, the rapid rise in obesity worldwide is likely due to major shifts in environmental factors and changes in behaviour rather than a significant change in human genetics. For example, early feeding patterns imposed by an obese mother upon her offspring may play a major role in a cultural, rather than genetic, transmission of obesity from one generation to the next. Likewise, correlations between childhood obesity and practices such as infant birth by cesarean section, which has risen substantially in incidence worldwide, indicate that environment and behaviour may have a much larger influence on the early onset of obesity than previously thought. More generally, the distinctive way of life of a nation and the individual’s behavioral and emotional reaction to it may contribute significantly to widespread obesity. Among affluent populations, an abundant supply of readily available high-calorie foods and beverages, coupled with increasingly sedentary living habits that markedly reduce caloric needs, can easily lead to overeating. The stresses and tensions of modern living also cause some individuals to turn to foods and alcoholic drinks for “relief.” Indeed, researchers have found that the causes of obesity in all countries share distinct similarities: diets rich in sweeteners and saturated fats, lack of exercise, and the availability of inexpensive processed foods.

The root causes of childhood obesity are complex and not fully understood, but it is clear that children become obese when they eat too much and exercise too little. In addition, many children make poor food decisions, choosing unhealthy, sugary snacks instead of healthy fruits and vegetables. Lack of calorie-burning exercise has also played a major role in contributing to childhood obesity. In 2005 a survey found that American children aged 8 to 18 spent an average of about four hours a day watching television and videos and two additional hours playing video games and using computers. Furthermore, maternal consumption of excessive amounts of fat during pregnancy appears to program overeating behaviour in children; for example, children have an increased preference for fatty foods if their mothers ate a high-fat diet during pregnancy. The physiological basis for this appears to be associated with fat-induced changes in the fetal brain. When pregnant rats consume high-fat diets, for instance, brain cells in the developing fetuses produce large quantities of appetite-stimulating proteins called orexigenic peptides. These peptides continue to be produced at high levels following birth and throughout the lifetime of the offspring. As a result, these rats eat more, weigh more, and mature sexually earlier in life compared with rats whose mothers consumed normal levels of fats during pregnancy.

Health Effects Of Obesity

Obesity may be undesirable in an aesthetic sense, especially in parts of the world where slimness is the popular preference, but it is also a serious medical problem. Generally, obese persons have a shorter life expectancy; they suffer earlier, more often, and more severely from a large number of diseases than do their normal-weight counterparts. For example, people who are obese are also frequently affected by diabetes; in fact, worldwide, roughly 90 percent of type II diabetes cases are caused by excess weight.

The association between obesity and the deterioration of cardiovascular health, which manifests in conditions such as diabetes and hypertension (abnormally high blood pressure), places obese persons at risk for accelerated cognitive decline as they age. Investigations of brain size in persons with long-term obesity revealed that increased body fat is associated with the atrophy (wasting away) of brain tissue, particularly in the temporal and frontal lobes of the brain. In fact, both overweight and obesity (that is, a BMI of 25 or higher) are associated with reductions in brain size, which increase the risk of dementia, the most common form of which is Alzheimer disease.
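The threshold of 25 mentioned above refers to body mass index (BMI): weight in kilograms divided by the square of height in metres. Below is a minimal Python sketch, assuming the standard WHO adult cut-offs of 18.5, 25, and 30. The function names and sample weights are illustrative only, and these cut-offs do not apply to children, whose weight status is judged against age- and sex-specific growth percentiles.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight in kilograms divided by height in metres squared."""
    if weight_kg <= 0 or height_m <= 0:
        raise ValueError("weight and height must be positive")
    return weight_kg / height_m ** 2


def adult_category(bmi_value: float) -> str:
    """WHO adult cut-offs (assumption: adults only; children use growth percentiles)."""
    if bmi_value < 18.5:
        return "underweight"
    if bmi_value < 25:
        return "normal weight"
    if bmi_value < 30:
        return "overweight"
    return "obese"


# Hypothetical adults, all 1.75 m tall.
for weight in (60, 80, 100):
    value = bmi(weight, 1.75)
    print(f"{weight} kg: BMI {value:.1f} -> {adult_category(value)}")
```

For a height of 1.75 m, this prints BMIs of 19.6, 26.1, and 32.7, classified as normal weight, overweight, and obese respectively.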

Obese women are often affected by infertility, taking longer to conceive than normal-weight women, and obese women who become pregnant are at an increased risk of miscarriage. Men who are obese are also at increased risk of fertility problems, since excess body fat is associated with decreased testosterone levels. In general, relative to normal-weight individuals, obese individuals are more likely to die prematurely of degenerative diseases of the heart, arteries, and kidneys, and they have an increased risk of developing cancer. Obese individuals also have an increased risk of death from accidents and constitute poor surgical risks. Mental health is affected; behavioral consequences of an obese appearance, ranging from shyness and withdrawal to overly bold self-assertion, may be rooted in neuroses and psychoses.

Treatment Of Obesity

The treatment of obesity has two main objectives: removal of the causative factors, which may be difficult if the causes are of emotional or psychological origin, and removal of surplus fat by reducing food intake. Return to normal body weight by reducing calorie intake is best done under medical supervision. Dietary fads and reducing diets that produce quick results without effort are of doubtful effectiveness in reducing body weight and keeping it down, and most are actually deleterious to health. Weight loss is best achieved through increased physical activity and basic dietary changes, such as lowering total calorie intake by substituting fruits and vegetables for refined carbohydrates.

Several drugs are approved for the treatment of obesity. Two of them are Belviq (lorcaserin hydrochloride) and Qsymia (phentermine and topiramate). Belviq decreases obese individuals’ cravings for carbohydrate-rich foods by selectively activating serotonin 2C (5-HT2C) receptors in the brain, mimicking the effect of serotonin, whose release normally is triggered by carbohydrate intake. Qsymia leverages the weight-loss side effects of topiramate, an antiepileptic drug, and the stimulant properties of phentermine, an existing short-term treatment for obesity. Phentermine previously had been part of fen-phen (fenfluramine-phentermine), an antiobesity combination that was removed from the U.S. market in 1997 because of the high risk for heart valve damage associated with fenfluramine.

#181 2018-07-25 00:28:46

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

165) Lifeboat

Lifeboat, watercraft especially built for rescue missions. There are two types, the relatively simple versions carried on board ships and the larger, more complex craft based on shore. Modern shore-based lifeboats are generally about 40–50 feet (12–15 metres) long and are designed to stay afloat under severe sea conditions. Sturdiness of construction, self-righting ability, reserve buoyancy, and manoeuvrability in surf, especially in reversing direction, are prime characteristics.

As early as the 18th century, attempts were made in France and England to build “unsinkable” lifeboats. After a tragic shipwreck in 1789 at the mouth of the Tyne, a lifeboat was designed and built at Newcastle that would right itself when capsized and would retain its buoyancy when nearly filled with water. Named the “Original,” the double-ended, ten-oared craft remained in service for 40 years and became the prototype for other lifeboats. In 1807 the first practical line-throwing device was invented. In 1890 the first mechanically powered, land-based lifeboat was launched, equipped with a steam engine; in 1904 the gasoline engine was introduced, and a few years later the diesel.

A typical modern land-based lifeboat is either steel-hulled or of double-skin, heavy timber construction; diesel powered; and equipped with radio, radar, and other electronic gear. It is manned by a crew of about seven, most of whom are usually volunteers who can be summoned quickly in an emergency.

#182 2018-07-26 03:12:55

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

166) Rafflesiaceae

Rafflesiaceae, flowering plant family (order Malpighiales) notable for being strictly parasitic upon the roots or stems of other plants and for the remarkable growth forms exhibited as adaptations to this mode of nutrition. Members of the family are endoparasites, meaning that the vegetative organs are so reduced and modified that the plant body exists only as a network of threadlike cellular strands living almost wholly within the tissues of the host plant. There are no green photosynthetic tissues, leaves, roots, or stems in the generally accepted sense, although vestiges of leaves exist in some species as scales. The flowers are well developed, however, and can be extremely large.

The family Rafflesiaceae includes the following three genera, mostly in the Old World subtropics: Rafflesia (about 28 species), Rhizanthes (4 species), and Sapria (1 or 2 species). The taxonomy of the family has been contentious, especially given the difficulty in obtaining specimens to study. The group formerly comprised seven genera, based on morphological similarities, but molecular evidence led to a dramatic reorganization by the Angiosperm Phylogeny Group III (APG III) botanical classification system. The genera Bdallophytum and Cytinus were transferred to the family Cytinaceae (order Malvales), and the genera Apodanthes and Pilostyles were moved to the family Apodanthaceae (order Cucurbitales).

The monster flower genus (Rafflesia) consists of about 28 species native to Southeast Asia, all of which are parasitic upon the roots of Tetrastigma vines (family Vitaceae). The genus includes the giant R. arnoldii, sometimes known as the corpse flower, which produces the largest known individual flower of any plant species in the world and is found in the forested mountains of Sumatra and Borneo. Its fully developed flower appears aboveground as a thick fleshy five-lobed structure weighing up to 11 kg (24 pounds) and measuring almost one metre (about one yard) across. It remains open five to seven days, emitting a fetid odour that attracts carrion-feeding flies, which are believed to be the pollinating agents. The flower’s colour is reddish or purplish brown, sometimes in a mottled pattern, with the reproductive organs in a central cup. The fruit is a berry containing sticky seeds thought to be disseminated by fruit-eating rodents. Other members of the genus have a similar reproductive biology. At least one species (R. magnifica) is listed as critically endangered by the IUCN Red List of Threatened Species.

The flowers of the genus Sapria are similar to those of Rafflesia and also emit a carrion odour. Members of the genus Rhizanthes produce flowers with nectaries, and some species are not malodorous. One species, Rhizanthes lowii, is known to generate heat with its flowers and buds, an adaptation that may aid in attracting pollinators. Species of both Sapria and Rhizanthes are considered rare and are threatened by habitat loss.

Rafflesia, The World's Largest Bloom

* * *

A plant with no leaves, no roots, no stem and the biggest flower in the world sounds like the stuff of comic books or science fiction.

'It is perhaps the largest and most magnificent flower in the world' was how Sir Stamford Raffles described his discovery in 1818 of Rafflesia arnoldii, modestly named after himself and his companion, surgeon-naturalist Dr Joseph Arnold.

This jungle parasite of south-east Asia holds the all-time record for the largest bloom: 106.7 centimetres (3 ft 6 in) in diameter and 11 kilograms (24 lb) in weight, with petal-like lobes an inch thick.

It is one of the rarest plants in the world and on the verge of extinction.

As if size and rarity weren't enough, Rafflesia is also one of the world's most distasteful plants, having evolved to imitate rotting meat or dung.

The flower is basically a pot, flanked by five lurid red-brick and spotted cream 'petals,' advertising a warm welcome to carrion flies hungry for detritus. Yet the plant is now hanging on to a precarious existence in a few pockets of Sumatra, Borneo, Thailand and the Philippines, struggling to survive against marauding humans and its own infernal biology.

Everything seems stacked against Rafflesia. First, its seeds are difficult to germinate. Then it has gambled its life entirely on parasitising just one sort of vine. This is a dangerously cavalier approach to life, because without the vine it's dead.

Having gorged itself on the immoral earnings of parasitism for a few years, the plant eventually breaks out as a flower bud, swells up over several months, and then bursts into flower. But most of the flower buds die before opening, and even in bloom Rafflesia is fighting the clock. Because the flower lasts only a few days, it has to be pollinated quickly by a nearby flower of the opposite sex. The trouble is, male and female flowers are now so rare that it's a miracle to find a pair ready to cross-pollinate each other.

To be fair, though, Rafflesia's lifestyle isn't so ridiculous. After all, few other plants feed so well that they have evolved monstrous flowers.

But now that logging is cutting down tropical forests, the precious vine that Rafflesia depends on is disappearing, and Rafflesia along with it. The years of living dangerously are becoming all too clear.

There are at least 13 species of Rafflesia, but two of them have not been seen since the Second World War and are presumed extinct, and the record-holding Rafflesia arnoldii is facing extinction. To make matters worse, no one has ever cultivated Rafflesia in a garden or laboratory.

Given all these threats to the species, efforts such as establishing research centres and introducing laws to protect one of the largest and rarest flowers in the world, as Malaysia and other Southeast Asian countries did some years ago, are more than welcome.

#183 2018-07-27 00:36:38

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

167) Holography

Holography, means of creating a unique photographic image without the use of a lens. The photographic recording of the image is called a hologram, which appears to be an unrecognizable pattern of stripes and whorls but which—when illuminated by coherent light, as by a laser beam—organizes the light into a three-dimensional representation of the original object.

An ordinary photographic image records the variations in intensity of light reflected from an object, producing dark areas where less light is reflected and light areas where more light is reflected. Holography, however, records not only the intensity of the light but also its phase, that is, the position of each wave within its cycle relative to the others. Recording phase requires coherent light, whose wave fronts are in step with one another. Ordinary light is incoherent: the phase relationships between the multitude of waves in a beam are completely random, so the wave fronts of ordinary light waves are not in step.
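The difference between recording intensity alone and recording phase can be illustrated numerically. The Python sketch below is a simplified model, not a description of any real apparatus. Each light wave at a single point on the plate is represented as a complex number (amplitude and phase). An ordinary photograph is assumed to record only |E|², and a hologram is assumed to record |O + R|², where O is the object wave and R is a coherent reference wave of known amplitude and zero phase. The function names and numerical values are hypothetical.

```python
import cmath
import math


def intensity(field: complex) -> float:
    """Ordinary photographic record: only |E|^2 survives, the phase is lost."""
    return abs(field) ** 2


def hologram_intensity(obj: complex, ref: complex) -> float:
    """Record of the object wave interfering with a coherent reference wave."""
    return abs(obj + ref) ** 2


def recovered_cos_phase(recorded: float, obj_amp: float, ref_amp: float) -> float:
    """Solve |R + O|^2 = R^2 + A^2 + 2RA cos(phi) for cos(phi), with R real and positive."""
    return (recorded - ref_amp ** 2 - obj_amp ** 2) / (2 * ref_amp * obj_amp)


ref = complex(1.0, 0.0)               # hypothetical reference: unit amplitude, zero phase
obj_a = cmath.rect(0.5, 0.0)          # object wave: amplitude 0.5, phase 0
obj_b = cmath.rect(0.5, math.pi / 2)  # same amplitude, phase shifted by 90 degrees

# An ordinary photograph cannot tell the two waves apart...
print(intensity(obj_a), intensity(obj_b))
# ...but the interference record can, and it encodes cos(phi).
for obj in (obj_a, obj_b):
    rec = hologram_intensity(obj, ref)
    print(rec, recovered_cos_phase(rec, abs(obj), abs(ref)))
```

The two object waves give identical photographic records but different interference records. Because only cos φ is recovered, φ is fixed only up to its sign in this simple model.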

Dennis Gabor, a Hungarian-born scientist, invented holography in 1948, for which he received the Nobel Prize for Physics more than 20 years later (1971). Gabor considered the possibility of improving the resolving power of the electron microscope, first by utilizing the electron beam to make a hologram of the object and then by examining this hologram with a beam of coherent light. In Gabor’s original system the hologram was a record of the interference between the light diffracted by the object and a collinear background. This automatically restricts the process to objects with considerable transparent areas. When the hologram is used to form an image, twin images are formed. The light associated with these images propagates in the same direction, and hence in the plane of one image, light from the other image appears as an out-of-focus component. Although a degree of coherence can be obtained by focusing light through a very small pinhole, this technique reduces the light intensity too much for it to serve in holography; therefore, for several years Gabor’s proposal was of only theoretical interest. The development of lasers in the early 1960s suddenly changed the situation. A laser beam has not only a high degree of coherence but high intensity as well.

Of the many kinds of laser, two are of special interest in holography: the continuous-wave (CW) laser and the pulsed laser. The CW laser emits a bright, continuous beam of a single, nearly pure colour. The pulsed laser emits an extremely intense, short flash of light that lasts only about 1/100,000,000 of a second (10 nanoseconds). Two scientists in the United States, Emmett N. Leith and Juris Upatnieks of the University of Michigan, applied the CW laser to holography and achieved great success, opening the way to many research applications.

---------------------------------------------------------------------------------------------------------------------------------------------------

168) Penicillin

Penicillin, one of the first and still one of the most widely used antibiotic agents, derived from the Penicillium mold. In 1928 Scottish bacteriologist Alexander Fleming first observed that colonies of the bacterium Staphylococcus aureus failed to grow in those areas of a culture that had been accidentally contaminated by the green mold Penicillium notatum. He isolated the mold, grew it in a fluid medium, and found that it produced a substance capable of killing many of the common bacteria that infect humans. Australian pathologist Howard Florey and British biochemist Ernst Boris Chain isolated and purified penicillin in the late 1930s, and by 1941 an injectable form of the drug was available for therapeutic use.

The several kinds of penicillin may be divided into two classes: the naturally occurring penicillins (those formed by species of the mold Penicillium during fermentation) and the semisynthetic penicillins (those in which the structure of 6-aminopenicillanic acid, a chemical substance found in all penicillins, is altered in various ways). Because the characteristics of the antibiotic can be changed in this way, different types of penicillin are produced for different therapeutic purposes.

The naturally occurring penicillins, penicillin G (benzylpenicillin) and penicillin V (phenoxymethylpenicillin), are still used clinically. Because of its poor stability in acid, much of penicillin G is broken down as it passes through the stomach; as a result, it must be given by injection (intramuscularly or intravenously), which limits its usefulness. Penicillin V, on the other hand, typically is given orally; it is more resistant to digestive acids than penicillin G. Some of the semisynthetic penicillins are also more acid-stable and thus may be given as oral medication.

All penicillins work in the same way: by inhibiting the bacterial enzymes responsible for cell wall synthesis in replicating microorganisms and by activating other enzymes to break down the protective wall of the microorganism. As a result, they are effective only against microorganisms that are actively replicating and producing cell walls, and for the same reason they do not harm human cells, which lack cell walls.

Some strains of previously susceptible bacteria, such as Staphylococcus, have developed a specific resistance to the naturally occurring penicillins; these bacteria either produce β-lactamase (penicillinase), an enzyme that disrupts the internal structure of penicillin and thus destroys the antimicrobial action of the drug, or they lack cell wall receptors for penicillin, greatly reducing the ability of the drug to enter bacterial cells. This has led to the production of the penicillinase-resistant penicillins (second-generation penicillins). While able to resist the activity of β-lactamase, however, these agents are not as effective against Staphylococcus as the natural penicillins, and they are associated with an increased risk for liver toxicity. Moreover, some strains of Staphylococcus have become resistant to penicillinase-resistant penicillins; an example is methicillin-resistant Staphylococcus aureus (MRSA).

Penicillins are used in the treatment of throat infections, meningitis, syphilis, and various other infections. The chief side effects of penicillin are hypersensitivity reactions, including skin rash, hives, swelling, and anaphylaxis, or allergic shock. The more serious reactions are uncommon. Milder symptoms may be treated with corticosteroids but usually are prevented by switching to alternative antibiotics. Anaphylactic shock, which can occur in previously sensitized individuals within seconds or minutes, may require immediate administration of epinephrine.

#184 2018-07-29 01:36:20

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

169) Windmill

Windmill, device for tapping the energy of the wind by means of sails mounted on a rotating shaft. The sails are mounted at an angle or are given a slight twist so that the force of wind against them is divided into two components, one of which, in the plane of the sails, imparts rotation.
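The force decomposition described above can be written out under a deliberately simplified model. This model is an assumption and is not part of the original text. Treat the wind's push on a flat sail as a single force F acting perpendicular to the sail surface, and let θ be the angle between the sail surface and the plane in which the sails rotate:

```latex
F_{\text{axial}} = F\cos\theta, \qquad F_{\text{rot}} = F\sin\theta
```

Here F_axial pushes along the windshaft and is taken up by its bearings, while F_rot acts in the plane of rotation and turns the sails. At θ = 0 the sail faces the wind squarely, so all of F presses along the shaft and nothing turns. Setting the sail at an angle is what produces a nonzero turning component. In real sails, F itself depends on θ and on the sail's speed, and a twisted sail has a different θ at different distances from the shaft.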

Like waterwheels, windmills were among the original prime movers that replaced human beings as a source of power. The use of windmills was increasingly widespread in Europe from the 12th century until the early 19th century. Their slow decline, because of the development of steam power, lasted for a further 100 years. Their rapid demise began following World War I with the development of the internal-combustion engine and the spread of electric power; from that time on, however, electrical generation by wind power has served as the subject of more and more experiments.

The earliest-known references to windmills are to a Persian millwright in AD 644 and to windmills in Seistan, Persia, in AD 915. These windmills are of the horizontal-mill type, with sails radiating from a vertical axis standing in a fixed building, which has openings for the inlet and outlet of the wind diametrically opposite to each other. Each mill drives a single pair of stones directly, without the use of gears, and the design is derived from the earliest water mills. Persian millwrights, taken prisoner by the forces of Genghis Khan, were sent to China to instruct in the building of windmills; their use for irrigation there has lasted ever since.

The vertical windmill, with sails on a horizontal axis, derives directly from the Roman water mill with its right-angle drive to the stones through a single pair of gears. The earliest form of vertical mill is known as the post mill. It has a boxlike body containing the gearing, millstones, and machinery and carrying the sails. It is mounted on a well-supported wooden post socketed into a horizontal beam on the level of the second floor of the mill body. On this it can be turned so that the sails can be faced into the wind.

The next development was to place the stones and gearing in a fixed tower. This has a movable top, or cap, which carries the sails and can be turned around on a track, or curb, on top of the tower. The earliest-known illustration of a tower mill is dated about 1420. Both post and tower mills were to be found throughout Europe and were also built by settlers in America.

To work efficiently, the sails of a windmill must face squarely into the wind, and in the early mills the post-mill body, or the tower-mill cap, was turned by hand by means of a long tailpole stretching down to the ground. In 1745 Edmund Lee in England invented the automatic fantail. This consists of a set of five to eight smaller vanes mounted on the tailpole or the ladder of a post mill at right angles to the sails and connected by gearing to wheels running on a track around the mill. When the wind veers, it strikes the sides of the vanes and turns them. The vanes in turn drive the track wheels, which rotate the mill body until the sails again face squarely into the wind. The fantail may also be fitted to the caps of tower mills, driving down to a geared rack on the curb.

The sails of a mill are mounted on an axle, or windshaft, inclined upward at an angle of from 5° to 15° to the horizontal. The first mill sails were wooden frames on which sailcloth was spread; each sail was set individually with the mill at rest. The early sails were flat planes inclined at a constant angle to the direction of rotation; later they were built with a twist like that of an airplane propeller.

In 1772 Andrew Meikle, a Scot, invented his spring sail, substituting hinged shutters, like those of a Venetian blind, for sailcloths and controlling them by a connecting bar and a spring on each sail. Each spring had to be adjusted individually with the mill at rest according to the power required; the sails were then, within limits, self-regulating.

In 1789 Stephen Hooper in England used roller blinds instead of shutters and devised a remote control that allowed all the blinds to be adjusted simultaneously while the mill was at work. In 1807 Sir William Cubitt invented his “patent sail,” which combined Meikle’s hinged shutters with Hooper’s remote control. It was operated by a chain from the ground via a rod passing through a hole drilled through the windshaft, in a manner comparable to operating an umbrella. By varying the weights hung on the chain, the sails were made self-regulating.

The annular-sailed wind pump was brought out in the United States by Daniel Halladay in 1854, and its production in steel by Stuart Perry in 1883 led to worldwide adoption, for, although inefficient, it was cheap and reliable. The design consists of a number of small vanes set radially in a wheel. Governing is automatic: of yaw by a tail vane, and of torque by setting the wheel off-centre with respect to the vertical yaw axis. Thus, as the wind increases, the mill turns on its vertical axis, reducing the effective area and therefore the speed.

The most important use of the windmill was for grinding grain. In certain areas its uses in land drainage and water pumping were equally important. The windmill has been used as a source of electrical power since P. La Cour’s mill, built in Denmark in 1890 with patent sails and twin fantails on a steel tower. Interest in the use of windmills for the generation of electric power, on both single-user and commercial scales, revived in the 1970s.

#185 2018-07-30 02:50:10

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

170) The Development of the Tape Recorder

Overview

A number of experimental sound recording devices were designed and developed in the early 1900s in Europe and the United States. Recording and reproducing the sounds were two separate processes, but both were necessary in the development of the tape recorder. Up until the 1920s, especially in the United States, a type of tape recorder using steel tape was designed and produced. In 1928 a coated magnetic tape was invented in Germany. The German engineers refined the magnetic tape in the 1930s and 1940s, developing a recorder called the Magnetophon. This type of machine was introduced to the United States after World War II and contributed to the eventual widespread use of the tape recorder. This unique ability to record sound and play it back would have implications politically, aesthetically, and commercially throughout Europe and the United States during World War II and after. Sound recording and reproduction formed the foundation of many new industries that included radio and film.

Background

Sound recording and reproduction began to interest inventors in the late nineteenth century, when several key technological innovations became available. Recordings required the following: a way to pick up sound via a microphone, a way to store information, and a playing device to access the stored data. As early as 1876 American inventor Alexander Graham Bell (1847-1922) invented the telephone, which incorporated many of the principles used in sound recording. The next year, American inventor Thomas Alva Edison (1847-1931) patented the phonograph, and German-American Emil Berliner (1851-1929) invented the flat-disc recording in 1887. The missing piece was a device to play back the recorded sounds.

The history of the tape recorder officially begins in 1878, when American mechanic Oberlin Smith visited Thomas Edison's laboratory. Smith was curious about the feasibility of recording telephone signals with a steel wire. He published his work in Electrical World outlining the process: "acoustic cycles are transferred to electric cycles and the moving sonic medium is magnetized with them. During the playing the medium generates electric cycles which have the identical frequency as during the recording." Smith's outline provided the theoretical framework used by others in the quest for a device that would both record and play the sound back.

In 1898 the Danish inventor Valdemar Poulsen (1869-1942) patented the first device able to play back sounds recorded on steel wire. He reworked Smith's design and for several years actually manufactured the first "sonic recorders." This invention, patented in Denmark and the United States, was called the telegraphon because it was intended to serve as an early kind of telephone answering machine. The recording head consisted of a steel chisel and an electromagnet, and the medium was steel wire coiled around a cylinder reminiscent of Thomas Edison's phonograph. Poulsen's telegraphon was shown at the 1900 International Exhibition in Paris and was praised by the scientific and technical press as a revolutionary innovation.

In the early 1920s the German inventors Kurt Stille and Karl Bauer redesigned the telegraphon so that the sound could be amplified electronically. They called their invention the Dailygraph, and it had the distinction of being able to accommodate a cassette. In the late 1920s the British Ludwig Blattner Picture Corporation bought Stille and Bauer's patent and attempted to produce films using synchronized sound. The British Marconi Wireless Telegraph Company also bought the Stille and Bauer design and for a number of years made tape machines for the British Broadcasting Corporation. The Marconi-Stille recording machines were used until the 1940s by the BBC and by radio services in Canada, Australia, France, Egypt, Sweden, and Poland.

In 1927 and 1928 Russian Boris Rtcheouloff and German chemist Fritz Pfleumer both patented an "improved" means of recording sound using magnetized tape. These ideas incorporated a way to record sound or pictures by causing a strip, disc, or cylinder of iron or other magnetic material to be magnetized. Pfleumer's patent had an interesting ingredient list. The "recipe" included using a powder of soft iron mixed with an organic binding medium such as dissolved sugar or molasses. This substance was dried and carbonized, and then the carbon was chemically combined into the iron by heating. The resulting steel powder, while still hot, was cooled by being placed in water, dried, and powdered a second time. This allowed sound to be recorded onto various kinds of "tape" made from soft paper or from films of cellulose derivatives.

In 1932 a large German electrical manufacturer purchased Pfleumer's patent rights. German engineers refined the magnetic tape and designed a device to play it back. By 1935 the German company AEG was marketing a machine known as the Magnetophon, and it showcased the machine with a recording of the London Philharmonic Orchestra.

Impact

At the beginning of World War II the tape recorder was still in a state of flux. Experiments with different types of recording tape and tape materials continued, as did research into devices to play back the recorded sounds. Sound recording on coated plastic tape manufactured by AEG improved to the point that listeners could not tell whether Adolf Hitler's radio addresses were live or recorded. Engineers and inventors in the United States and Britain were unable to match this sound quality until several Magnetophons left Germany as war reparations in 1945. The German design combined magnetic tape with a device to play back the recording. Another feature, previously unknown, was that the replay head could be rotated against the direction of the tape transport, which allowed a recording to be played back slowly without lowering the pitch of the voice. These features were not found on the steel-wire machines then used in the United States.

The most common U.S. machine used a special steel tape that was made only in Sweden, and supplies were threatened at the onset of World War II. However, once patent rights on the German invention were seized by the U.S. Office of Alien Property Custodian, U.S. companies no longer had licensing problems to contend with, and the German innovations began to be incorporated into American designs.

In 1945 Alexander Poniatoff, an American manufacturer of electric motors, attended a Magnetophon demonstration given by John T. Mullin to the Institute of Radio Engineers. Poniatoff owned a company called Ampex that manufactured audio amplifiers and loudspeakers; he recognized the commercial potential of the German design and wanted to manufacture and distribute the Magnetophon. The following year, an unusual set of circumstances gave him the opportunity to produce and promote the machine in a commercially viable way.

The popular singer Bing Crosby had experienced a significant drop in his radio popularity, which he attributed to the inferior quality of the sound recording used to tape his programs. Crosby, familiar with the Magnetophon, requested that it be used to record a sample program, and he went on to record 26 radio shows for delayed broadcast using the German design. In 1947 Bing Crosby Enterprises, enthusiastic about the improved sound quality and listener satisfaction, contracted with Ampex to design and develop a recorder based on the Magnetophon. Ampex agreed to build 20 professional recording units priced at $40,000 each, and Bing Crosby Enterprises then sold the units to the American Broadcasting Company.

In the film world, Walt Disney Studios had earlier released the animated film Fantasia (1940). The film used a sound process called Fantasound, which incorporated technological advances in sound recording and playback. Commercial uses such as these, along with the new magnetic tape recorders, drove innovation and expansion in the motion-picture and television-broadcasting fields.

By 1950, two-channel tape recorders capable of stereo recording had appeared in the United States, along with the first catalog of recorded music. These continuing advances in tape recorder technology allowed people to play their favorite music even if it had been recorded many years earlier. Radio networks used sound recording for news broadcasts, special music programming, and archival purposes. The fledgling television industry and the motion-picture industry began experimenting with combining images with music, speech, and sound effects. Research was also under way into three- and four-track tape recorders and one-inch tape, as well as portable tape cassette players and the video recorder. These innovations were possible and viable because of the groundwork laid by many individuals and companies in the first half of the twentieth century.

#186 2018-07-30 22:34:42

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

171) Geyser

Geyser, hot spring that intermittently spouts jets of steam and hot water. The term is derived from the Icelandic word geysir, meaning “to gush.”

Geysers result from the heating of groundwater by shallow bodies of magma. They are generally associated with areas that have seen past volcanic activity. The spouting action is caused by the sudden release of pressure that has been confining near-boiling water in deep, narrow conduits beneath a geyser. As steam or gas bubbles begin to form in the conduit, hot water spills from the vent of the geyser, and the pressure is lowered on the water column below. Water at depth then exceeds its boiling point and flashes into steam, forcing more water from the conduit and lowering the pressure further. This chain reaction continues until the geyser exhausts its supply of boiling water.

The boiling temperature of water increases with pressure; for example, at a depth of 30 metres (about 100 feet) below the surface, the boiling point is approximately 140 °C (285 °F). Geothermal power from steam wells depends on the same volcanic heat sources and boiling temperature changes with depth that drive geyser displays.
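
Since this is a maths forum, here is a rough way to check the depth figure above. It is only a sketch under stated assumptions: the pressure at depth is taken as atmospheric pressure plus the weight of a cold-water column (density 1000 kg/m³), and the boiling point is estimated with the Clausius-Clapeyron relation using a constant latent heat of vaporization (about 40.66 kJ/mol). The function names are hypothetical, chosen just for this illustration.

```python
import math

# Textbook approximations; these constants are assumptions for this sketch.
P_ATM = 101_325.0      # surface pressure, Pa
RHO_WATER = 1000.0     # water density, kg/m^3 (hot water is a few percent lighter)
G = 9.81               # gravitational acceleration, m/s^2
L_VAP = 40_660.0       # molar enthalpy of vaporization of water, J/mol (held constant)
R = 8.314              # gas constant, J/(mol*K)
T_BOIL_ATM = 373.15    # boiling point of water at 1 atm, K


def pressure_at_depth(depth_m: float) -> float:
    """Hydrostatic pressure (Pa) at the bottom of a water column depth_m metres tall."""
    return P_ATM + RHO_WATER * G * depth_m


def boiling_point_c(depth_m: float) -> float:
    """Approximate boiling point (deg C) at a given depth via Clausius-Clapeyron.

    1/T = 1/T0 - (R/L) * ln(P/P0), with T0 the boiling point at pressure P0.
    Treating L as constant is a simplification, so treat results as estimates.
    """
    p = pressure_at_depth(depth_m)
    inv_t = 1.0 / T_BOIL_ATM - (R / L_VAP) * math.log(p / P_ATM)
    return 1.0 / inv_t - 273.15


if __name__ == "__main__":
    for depth in (0, 10, 30, 100):
        print(f"{depth:>4} m: {boiling_point_c(depth):6.1f} °C")
```

With these assumptions, 30 metres of water gives roughly 143 °C, in line with the approximate 140 °C quoted in the text; using a lower density for hot water or proper steam tables would shift the estimate by a degree or two.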

As water is ejected from geysers and is cooled, dissolved silica is precipitated in mounds on the surface. This material is known as sinter. Often geysers have been given fanciful names (such as Castle Geyser in Yellowstone National Park) inspired by the shapes of the colourful and contorted mounds of siliceous sinter at the vents.

Geysers are rare. Yellowstone, in the western United States, has more than 300 of them, approximately half the world’s total; there are also about 200 on the Kamchatka Peninsula in the Russian Far East, about 40 in New Zealand, 16 in Iceland, and 50 scattered throughout other volcanic areas of the world. Perhaps the most famous geyser is Old Faithful in Yellowstone, which spouts a column of boiling water and steam about 30 to 55 metres (100 to 180 feet) high roughly every 90 minutes.

#187 2018-07-31 01:08:32

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

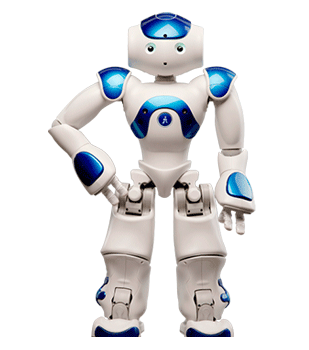

172) Robotics

History of Robotics

Although the science of robotics only came about in the 20th century, human-invented automation has a much longer history. The ancient Greek engineer Hero of Alexandria, for example, produced two texts, 'Pneumatica' and 'Automata', that testify to the existence of hundreds of different kinds of “wonder” machines capable of automated movement. Robotics in the 20th and 21st centuries has, of course, advanced radically to include machines capable of assembling other machines and even robots that can be mistaken for human beings.

The word robotics was inadvertently coined by science fiction author Isaac Asimov in his 1941 story “Liar!” Science fiction authors throughout history have been interested in man’s capability of producing self-motivating machines and lifeforms, from the ancient Greek myth of Pygmalion to Mary Shelley’s Dr. Frankenstein and Arthur C. Clarke’s HAL 9000. Essentially, a robot is a re-programmable machine that is capable of movement in the completion of a task. Robots use special coding that differentiates them from other machines and machine tools, such as CNC (computer numerically controlled) machines. Robots have found uses in a wide variety of industries because of their durability and precision.

Historical Robotics

Many sources attest to the popularity of automatons in ancient and medieval times. The ancient Greeks and Romans developed simple automatons for use as tools, toys, and parts of religious ceremonies. In myth, long before modern industrial robots, the Greek god Hephaestus was said to have built automatons to help him in his workshop. Unfortunately, none of the early automatons survive.

In the Middle Ages, in both Europe and the Middle East, automatons were popular as parts of clocks and religious worship. The Arab polymath Al-Jazari (1136-1206) left texts describing and illustrating his various mechanical devices, including a large elephant clock that moved and sounded at the hour, a musical robot band, and a waitress automaton that served drinks. In Europe, an automaton monk that kisses the cross in its hands still survives. Many other automata depicting moving animals and humanoid figures were built on simple cam systems, but by the 18th century automata were understood well enough, and technology had advanced far enough, for much more complex pieces to be made. French engineer Jacques de Vaucanson is credited with creating the first successful biomechanical automaton, a human figure that plays a flute. Automata were so popular that they traveled around Europe entertaining heads of state such as Frederick the Great and Napoleon Bonaparte.

Victorian Robots

The Industrial Revolution and the increased focus on mathematics, engineering, and science in Victorian England added to the momentum towards actual robotics. Charles Babbage (1791-1871) worked to develop the foundations of computer science in the early-to-mid nineteenth century; his best-known projects were the difference engine and the analytical engine. Although neither was completed, owing to lack of funds, these two machines laid out the basics of mechanical calculation. Others, such as Ada Lovelace, recognized that computers might one day create images or play music.

Automata continued to provide entertainment during the 19th century, but at the same time steam-powered machines and engines were being developed that made manufacturing much faster and more efficient. Factories began to employ machines to increase either output or precision in the production of many products.

The Twentieth Century to Today

In 1920, Karel Čapek published his play R.U.R. (Rossum’s Universal Robots), which introduced the word “robot.” It was taken from an old Slavic word meaning something akin to “monotonous or forced labor.” However, it was decades before the first industrial robot went to work. In the 1950s, George Devol designed the Unimate, a robotic arm that began work in 1961 transporting die castings at a General Motors plant in New Jersey. Unimation, the company Devol founded with robotics entrepreneur Joseph Engelberger, was the first robot manufacturing company. The robot was originally seen as a curiosity, to the extent that it even appeared on The Tonight Show in 1966. Soon, however, robotics began to develop into another tool in the industrial manufacturing arsenal.

Robotics became a burgeoning science, and more money was invested in it. Over the last half century robots have spread to Japan, South Korea, and many parts of Europe, to the extent that projections put the 2011 population of industrial robots at around 1.2 million. Robots have also found a place in other spheres, as toys and entertainment, military weapons, search and rescue assistants, and in many other jobs. As programming and technology improve, robots find their way into many jobs that in the past have been too dangerous, dull, or impossible for humans to do. Indeed, robots are being launched into space to carry out the next stages of extraterrestrial and extrasolar research.

#188 2018-07-31 15:12:24

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

173) Phenol

Phenol, any of a family of organic compounds characterized by a hydroxyl (−OH) group attached to a carbon atom that is part of an aromatic ring. Besides serving as the generic name for the entire family, the term phenol is also the specific name for its simplest member, monohydroxybenzene (C6H5OH), also known as benzenol or carbolic acid.

Phenols are similar to alcohols but form stronger hydrogen bonds. Thus, they are more soluble in water than are alcohols and have higher boiling points. Phenols occur either as colourless liquids or white solids at room temperature and may be highly toxic and caustic.