Math Is Fun Forum


#1326 2022-03-24 14:20:14

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,620

Re: Miscellany

1300) World War I

Summary

World War I, often abbreviated as WWI or WW1, also known as the First World War and contemporaneously known as the Great War and by other names, was an international conflict that began on 28 July 1914 and ended on 11 November 1918. It involved much of Europe, as well as Russia, the United States and Turkey, and was also fought in the Middle East, Africa and parts of Asia. It was one of the deadliest conflicts in history: an estimated 9 million were killed in combat, while over 5 million civilians died from occupation, bombardment, hunger or disease. The genocides perpetrated by the Ottomans and the 1918 Spanish flu pandemic, spread by the movement of combatants during the war, caused many millions of additional deaths worldwide.

In 1914, the Great Powers were divided into two opposing alliances: the Triple Entente, consisting of France, Russia, and Britain, and the Triple Alliance, made up of Germany, Austria-Hungary, and Italy. Tensions in the Balkans came to a head on 28 June 1914 following the assassination of Archduke Franz Ferdinand, the Austro-Hungarian heir, by Gavrilo Princip, a Bosnian Serb. Austria-Hungary blamed Serbia and the interlocking alliances involved the Powers in a series of diplomatic exchanges known as the July Crisis. On 28 July, Austria-Hungary declared war on Serbia; Russia came to Serbia's defence and by 4 August, the conflict had expanded to include Germany, France and Britain, along with their respective colonial empires. In November, the Ottoman Empire, Germany and Austria formed the Central Powers, while in April 1915, Italy joined Britain, France, Russia and Serbia as the Allied Powers.

Facing a war on two fronts, German strategy in 1914 was to defeat France, then shift its forces to the East and knock out Russia, commonly known as the Schlieffen Plan. This failed when their advance into France was halted at the Marne; by the end of 1914, the two sides faced each other along the Western Front, a continuous series of trench lines stretching from the Channel to Switzerland that changed little until 1917. By contrast, the Eastern Front was far more fluid, with Austria-Hungary and Russia gaining, then losing large swathes of territory. Other significant theatres included the Middle East, the Alpine Front and the Balkans, bringing Bulgaria, Romania and Greece into the war.

Shortages caused by the Allied naval blockade led Germany to initiate unrestricted submarine warfare in early 1917, bringing the previously neutral United States into the war on 6 April 1917. In Russia, the Bolsheviks seized power in the 1917 October Revolution and made peace in the March 1918 Treaty of Brest-Litovsk, freeing up large numbers of German troops. By transferring these to the Western Front, the German General Staff hoped to win a decisive victory before American reinforcements could impact the war, and launched the March 1918 German spring offensive. Despite initial success, it was soon halted by heavy casualties and ferocious defence; in August, the Allies launched the Hundred Days Offensive and although the German army continued to fight hard, it could no longer halt their advance.

Towards the end of 1918, the Central Powers began to collapse; Bulgaria signed an Armistice on 29 September, followed by the Ottomans on 31 October, then Austria-Hungary on 3 November. Isolated, facing revolution at home and an army on the verge of mutiny, Kaiser Wilhelm abdicated on 9 November and the new German government signed the Armistice of 11 November 1918, bringing the fighting to a close. The 1919 Paris Peace Conference imposed various settlements on the defeated powers, the best known being the Treaty of Versailles. The dissolution of the Russian, German, Ottoman and Austro-Hungarian empires led to numerous uprisings and the creation of independent states, including Poland, Czechoslovakia and Yugoslavia. For reasons that are still debated, failure to manage the instability that resulted from this upheaval during the interwar period ended with the outbreak of World War II in 1939.

Details

It was known as “The Great War”: a land, air and sea conflict so terrible that it left over 8 million military personnel and 6.6 million civilians dead. Nearly 60 percent of those who fought became casualties, whether killed, wounded, missing, or captured. In just four years between 1914 and 1918, World War I changed the face of modern warfare, becoming one of the deadliest conflicts in world history.

Causes of the Great War

World War I had a variety of causes, but its roots were in a complex web of alliances between European powers. At its core was mistrust between—and militarization in—the informal “Triple Entente” (Great Britain, France, and Russia) and the secret “Triple Alliance” (Germany, the Austro-Hungarian Empire, and Italy).

The most powerful players, Great Britain, Russia, and Germany, presided over worldwide colonial empires they wanted to expand and protect. Over the course of the 19th century, they consolidated their power and protected themselves by forging alliances with other European powers.

In June 1914, tensions between the Triple Entente (also known as the Allies) and the Triple Alliance (also known as the Central Powers) ignited with the assassination of Archduke Franz Ferdinand, heir to the throne of Austria-Hungary, by a Bosnian Serb nationalist during a visit to Sarajevo. Austria-Hungary blamed Serbia for the attack. Russia backed its ally, Serbia. When Austria-Hungary declared war on Serbia a month later, their allies jumped in and the continent was at war.

The spread of war

Soon, the conflict had expanded to the world, affecting colonies and allied countries in Africa, Asia, the Middle East, and Australia. In 1917, the United States entered the war after a long period of non-intervention. By then, the main theater of the war, the Western Front in Luxembourg, Belgium, and France, was the site of a deadly stalemate.

Despite advances like the use of poison gas and armored tanks, both sides were trapped in trench warfare that claimed enormous numbers of casualties. Battles like the Battle of Verdun and the First Battle of the Somme are among the deadliest in the history of human conflict.

Aided by the United States, the Allies finally broke through with the Hundred Days Offensive, leading to the military defeat of Germany. The fighting ended at 11 a.m. on November 11, 1918, when the armistice took effect.

By then, the world was in the grip of an influenza pandemic that would infect a third of the global population. Revolution had broken out in Germany, Russia, and other countries. Much of Europe was in ruins. “Shell shock” and the aftereffects of gas poisoning would claim thousands more lives.

Never again?

Though the world vowed never to allow another war like it to happen, the roots of the next conflict were sown in the Treaty of Versailles, which was viewed by Germans as humiliating and punitive and which helped set the stage for the rise of fascism and World War II. The technology that the war had generated would be used in the next world war just two decades later.

Though it was described at the time as “the war to end all wars,” the scar that World War I left on the world was anything but temporary.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1327 2022-03-25 14:00:26

- Jai Ganesh


1301) World War II

Summary

World War II, also called Second World War, was a conflict that involved virtually every part of the world during the years 1939–45. The principal belligerents were the Axis powers—Germany, Italy, and Japan—and the Allies—France, Great Britain, the United States, the Soviet Union, and, to a lesser extent, China. The war was in many respects a continuation, after an uneasy 20-year hiatus, of the disputes left unsettled by World War I. The 40,000,000–50,000,000 deaths incurred in World War II make it the bloodiest conflict, as well as the largest war, in history.

Along with World War I, World War II was one of the great watersheds of 20th-century geopolitical history. It resulted in the extension of the Soviet Union’s power to nations of eastern Europe, enabled a communist movement to eventually achieve power in China, and marked the decisive shift of power in the world away from the states of western Europe and toward the United States and the Soviet Union.

Details

World War II or the Second World War, often abbreviated as WWII or WW2, was a global war that lasted from 1939 to 1945. It involved the vast majority of the world's countries—including all of the great powers—forming two opposing military alliances: the Allies and the Axis powers. In a total war directly involving more than 100 million personnel from more than 30 countries, the major participants threw their entire economic, industrial, and scientific capabilities behind the war effort, blurring the distinction between civilian and military resources. Aircraft played a major role in the conflict, enabling the strategic bombing of population centres and the only two uses of nuclear weapons in war. World War II was by far the deadliest conflict in human history; it resulted in 70 to 85 million fatalities, a majority being civilians. Tens of millions of people died due to genocides (including the Holocaust), starvation, massacres, and disease. In the wake of the Axis defeat, Germany and Japan were occupied, and war crimes tribunals were conducted against German and Japanese leaders.

The exact causes of World War II are debated, but contributing factors included the Second Italo-Ethiopian War, the Spanish Civil War, the Second Sino-Japanese War, the Soviet–Japanese border conflicts and rising European tensions since World War I. World War II is generally considered to have begun on 1 September 1939, when Nazi Germany, under Adolf Hitler, invaded Poland. The United Kingdom and France subsequently declared war on Germany on 3 September. Under the Molotov–Ribbentrop Pact of August 1939, Germany and the Soviet Union had partitioned Poland and marked out their "spheres of influence" across Finland, Estonia, Latvia, Lithuania and Romania. From late 1939 to early 1941, in a series of campaigns and treaties, Germany conquered or controlled much of continental Europe, and formed the Axis alliance with Italy and Japan (along with other countries later on). Following the onset of campaigns in North Africa and East Africa, and the fall of France in mid-1940, the war continued primarily between the European Axis powers and the British Empire, with war in the Balkans, the aerial Battle of Britain, the Blitz of the UK, and the Battle of the Atlantic. On 22 June 1941, Germany led the European Axis powers in an invasion of the Soviet Union, opening the Eastern Front, the largest land theatre of war in history.

Japan, which aimed to dominate Asia and the Pacific, was at war with the Republic of China by 1937. In December 1941, Japan attacked American and British territories with near-simultaneous offensives against Southeast Asia and the Central Pacific, including an attack on the US fleet at Pearl Harbor, which resulted in the United States declaring war against Japan. The European Axis powers then declared war on the United States in solidarity. Japan soon captured much of the western Pacific, but its advances were halted in 1942 after it lost the critical Battle of Midway; later, Germany and Italy were defeated in North Africa and at Stalingrad in the Soviet Union. Key setbacks in 1943 (including a series of German defeats on the Eastern Front, the Allied invasions of Sicily and the Italian mainland, and Allied offensives in the Pacific) cost the Axis powers their initiative and forced them into strategic retreat on all fronts. In 1944, the Western Allies invaded German-occupied France, while the Soviet Union regained its territorial losses and turned towards Germany and its allies. During 1944 and 1945, Japan suffered reversals in mainland Asia, while the Allies crippled the Japanese Navy and captured key western Pacific islands.

The war in Europe concluded with the liberation of German-occupied territories, and the invasion of Germany by the Western Allies and the Soviet Union, culminating in the fall of Berlin to Soviet troops, Hitler's suicide and the German unconditional surrender on 8 May 1945. Following the Potsdam Declaration by the Allies on 26 July 1945 and the refusal of Japan to surrender on its terms, the United States dropped the first atomic bombs on the Japanese cities of Hiroshima, on 6 August, and Nagasaki, on 9 August. Faced with an imminent invasion of the Japanese archipelago, the possibility of additional atomic bombings, and the Soviet Union's declared entry into the war against Japan on the eve of invading Manchuria, Japan announced on 15 August its intention to surrender, then signed the surrender document on 2 September 1945, cementing total victory in Asia for the Allies.

World War II changed the political alignment and social structure of the globe. The United Nations (UN) was established to foster international co-operation and prevent future conflicts, with the victorious great powers—China, France, the Soviet Union, the United Kingdom, and the United States—becoming the permanent members of its Security Council. The Soviet Union and the United States emerged as rival superpowers, setting the stage for the nearly half-century-long Cold War. In the wake of European devastation, the influence of its great powers waned, triggering the decolonisation of Africa and Asia. Most countries whose industries had been damaged moved towards economic recovery and expansion. Political and economic integration, especially in Europe, began as an effort to forestall future hostilities, end pre-war enmities and forge a sense of common identity.

World War II summary: The carnage of World War II was unprecedented and brought the world closer than ever before to “total warfare.” On average, 27,000 people were killed each day between September 1, 1939, and the formal surrender of Japan on September 2, 1945. Western technological advances had turned upon themselves, bringing about the most destructive war in human history. The primary combatants were the Axis nations of Nazi Germany, Fascist Italy, and Imperial Japan, and the Allied nations of Great Britain (and its Commonwealth nations), the Soviet Union, and the United States. Seven days after the suicide of Adolf Hitler, Germany unconditionally surrendered on May 7, 1945. The Japanese would go on to fight for nearly four more months until their surrender on September 2, which was brought on by the U.S. dropping atomic bombs on the Japanese cities of Hiroshima and Nagasaki. Despite winning the war, Britain lost much of its empire, an outcome anticipated in the principles of the Atlantic Charter. The war precipitated the revival of the U.S. economy, and by the war’s end, the nation had a gross national product that nearly exceeded that of all the other Allied and Axis powers combined. The USA and USSR emerged from World War II as global superpowers. The fundamentally disparate, one-time allies became engaged in what was to be called the Cold War, which dominated world politics for the latter half of the 20th century.

Casualties in World War II

World War II was the most destructive war in all of history. Its exact cost in human lives is unknown, but casualties may have totaled over 60 million service personnel and civilians killed. Nations suffering the highest losses, military and civilian, in descending order, are:

USSR: 42,000,000

Germany: 9,000,000

China: 4,000,000

Japan: 3,000,000

When did World War II begin?

Some say it was simply a continuation of the First World War that had theoretically ended in 1918. Others point to 1931, when Japan seized Manchuria from China. Italy’s invasion and defeat of Abyssinia (Ethiopia) in 1935, Adolf Hitler’s re-militarization of Germany’s Rhineland in 1936, the Spanish Civil War (1936–1939), and Germany’s occupation of Czechoslovakia in 1938 are also sometimes cited. The two dates most often mentioned as “the beginning of World War II” are July 7, 1937, when the “Marco Polo Bridge Incident” led to a prolonged war between Japan and China, and September 1, 1939, when Germany invaded Poland, which led Britain and France to declare war on Hitler’s Nazi state in retaliation. From the invasion of Poland until the war ended with Japan’s surrender in September 1945, most nations around the world were engaged in armed combat.

Origins of World War II

No one historic event can be said to have been the origin of World War II. Japan’s unexpected victory over czarist Russia in the Russo-Japanese War (1904-05) left open the door for Japanese expansion in Asia and the Pacific. The U.S. Navy first developed plans in preparation for a naval war with Japan in 1890. War Plan Orange, as it was called, would be updated continually as technology advanced and greatly aided the U.S. during World War II.

The years between the first and second world wars were a time of instability. The Great Depression that began on Black Tuesday, 1929, plunged the world into recession. Coming to power in 1933, Hitler capitalized on this economic decline and the deep German resentment of the emasculating Treaty of Versailles, signed following the armistice of 1918. Declaring that Germany needed Lebensraum or “living space,” Hitler began to test the Western powers and their willingness to enforce the treaty’s provisions. By 1935 Hitler had established the Luftwaffe, a direct violation of the 1919 treaty. Remilitarizing the Rhineland in 1936 violated Versailles once again, as well as the Locarno Treaties (which defined the borders of Europe). The Anschluss of Austria and the annexation of the rump of Czechoslovakia were a further extension of Hitler’s desire for Lebensraum. Italy’s desire to create the Third Rome pushed the nation to closer ties with Nazi Germany. Likewise, Japan, angered by its exclusion in Paris in 1919, sought to create a Pan-Asian sphere led by Japan in order to become a self-sufficient state.

Competing ideologies further fanned the flames of international tension. The Bolshevik Revolution in czarist Russia during the First World War, followed by the Russian Civil War, had established the Union of Soviet Socialist Republics (USSR), a sprawling communist state. Western republics and capitalists feared the spread of Bolshevism. In some nations, such as Italy, Germany and Romania, ultra-conservative groups rose to power, in part in reaction to communism.

Germany, Italy and Japan signed agreements of mutual support but, unlike the Allied nations they would face, they never developed a comprehensive or coordinated plan of action.


#1328 2022-03-26 01:25:13

- Jai Ganesh


1302) Artificial Intelligence

Summary

Artificial intelligence (AI) is intelligence demonstrated by machines, as opposed to natural intelligence displayed by animals including humans. Leading AI textbooks define the field as the study of "intelligent agents": any system that perceives its environment and takes actions that maximize its chance of achieving its goals.

Some popular accounts use the term "artificial intelligence" to describe machines that mimic "cognitive" functions that humans associate with the human mind, such as "learning" and "problem solving"; however, this definition is rejected by major AI researchers.

AI applications include advanced web search engines (e.g., Google), recommendation systems (used by YouTube, Amazon and Netflix), understanding human speech (such as Siri and Alexa), self-driving cars (e.g., Tesla), automated decision-making and competing at the highest level in strategic game systems (such as chess and Go). As machines become increasingly capable, tasks considered to require "intelligence" are often removed from the definition of AI, a phenomenon known as the AI effect. For instance, optical character recognition is frequently excluded from things considered to be AI, having become a routine technology.

Artificial intelligence was founded as an academic discipline in 1956, and in the years since has experienced several waves of optimism, followed by disappointment and the loss of funding (known as an "AI winter"), followed by new approaches, success and renewed funding. AI research has tried and discarded many different approaches since its founding, including simulating the brain, modeling human problem solving, formal logic, large databases of knowledge and imitating animal behavior. In the first decades of the 21st century, highly mathematical statistical machine learning has dominated the field, and this technique has proved highly successful, helping to solve many challenging problems throughout industry and academia.

The various sub-fields of AI research are centered around particular goals and the use of particular tools. The traditional goals of AI research include reasoning, knowledge representation, planning, learning, natural language processing, perception, and the ability to move and manipulate objects. General intelligence (the ability to solve an arbitrary problem) is among the field's long-term goals. To solve these problems, AI researchers have adapted and integrated a wide range of problem-solving techniques—including search and mathematical optimization, formal logic, artificial neural networks, and methods based on statistics, probability and economics. AI also draws upon computer science, psychology, linguistics, philosophy, and many other fields.

The field was founded on the assumption that human intelligence "can be so precisely described that a machine can be made to simulate it". This raises philosophical arguments about the mind and the ethics of creating artificial beings endowed with human-like intelligence. These issues have been explored by myth, fiction, and philosophy since antiquity. Science fiction and futurology have also suggested that, with its enormous potential and power, AI may become an existential risk to humanity.

Details

Artificial intelligence (AI) is the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings. The term is frequently applied to the project of developing systems endowed with the intellectual processes characteristic of humans, such as the ability to reason, discover meaning, generalize, or learn from past experience. Since the development of the digital computer in the 1940s, it has been demonstrated that computers can be programmed to carry out very complex tasks—as, for example, discovering proofs for mathematical theorems or playing chess—with great proficiency. Still, despite continuing advances in computer processing speed and memory capacity, there are as yet no programs that can match human flexibility over wider domains or in tasks requiring much everyday knowledge. On the other hand, some programs have attained the performance levels of human experts and professionals in performing certain specific tasks, so that artificial intelligence in this limited sense is found in applications as diverse as medical diagnosis, computer search engines, and voice or handwriting recognition.

What is intelligence?

All but the simplest human behaviour is ascribed to intelligence, while even the most complicated insect behaviour is never taken as an indication of intelligence. What is the difference? Consider the behaviour of the digger wasp, Sphex ichneumoneus. When the female wasp returns to her burrow with food, she first deposits it on the threshold, checks for intruders inside her burrow, and only then, if the coast is clear, carries her food inside. The real nature of the wasp’s instinctual behaviour is revealed if the food is moved a few inches away from the entrance to her burrow while she is inside: on emerging, she will repeat the whole procedure as often as the food is displaced. Intelligence—conspicuously absent in the case of Sphex—must include the ability to adapt to new circumstances.

Psychologists generally do not characterize human intelligence by just one trait but by the combination of many diverse abilities. Research in AI has focused chiefly on the following components of intelligence: learning, reasoning, problem solving, perception, and using language.

Learning

There are a number of different forms of learning as applied to artificial intelligence. The simplest is learning by trial and error. For example, a simple computer program for solving mate-in-one chess problems might try moves at random until mate is found. The program might then store the solution with the position so that the next time the computer encountered the same position it would recall the solution. This simple memorizing of individual items and procedures—known as rote learning—is relatively easy to implement on a computer. More challenging is the problem of implementing what is called generalization. Generalization involves applying past experience to analogous new situations. For example, a program that learns the past tense of regular English verbs by rote will not be able to produce the past tense of a word such as jump unless it previously had been presented with jumped, whereas a program that is able to generalize can learn the “add ed” rule and so form the past tense of jump based on experience with similar verbs.
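The contrast between rote learning and generalization can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions: the class names `RoteLearner` and `GeneralizingLearner` are hypothetical, and the "rule" is simply the most common suffix seen so far, which is far cruder than any real learning program.

```python
from collections import Counter


class RoteLearner:
    """Memorizes exact (verb, past tense) pairs and nothing else."""

    def __init__(self):
        self.memory = {}

    def learn(self, verb, past):
        self.memory[verb] = past

    def past_tense(self, verb):
        # Returns None for any verb that was never presented.
        return self.memory.get(verb)


class GeneralizingLearner:
    """Extracts the suffix that turns a verb into its past tense."""

    def __init__(self):
        self.suffix_counts = Counter()

    def learn(self, verb, past):
        # Only examples of the form verb + suffix contribute to the rule.
        if past.startswith(verb):
            self.suffix_counts[past[len(verb):]] += 1

    def past_tense(self, verb):
        if not self.suffix_counts:
            return None
        suffix, _ = self.suffix_counts.most_common(1)[0]
        return verb + suffix


examples = [("walk", "walked"), ("talk", "talked"), ("climb", "climbed")]
rote, general = RoteLearner(), GeneralizingLearner()
for verb, past in examples:
    rote.learn(verb, past)
    general.learn(verb, past)

print(rote.past_tense("jump"))     # never saw "jump", so nothing to recall
print(general.past_tense("jump"))  # applies the learned "add ed" rule
```

The rote learner can only answer for verbs it has stored, while the generalizing learner answers for jump even though jumped was never presented.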

Reasoning

To reason is to draw inferences appropriate to the situation. Inferences are classified as either deductive or inductive. An example of the former is, “Fred must be in either the museum or the café. He is not in the café; therefore he is in the museum,” and of the latter, “Previous accidents of this sort were caused by instrument failure; therefore this accident was caused by instrument failure.” The most significant difference between these forms of reasoning is that in the deductive case the truth of the premises guarantees the truth of the conclusion, whereas in the inductive case the truth of the premise lends support to the conclusion without giving absolute assurance. Inductive reasoning is common in science, where data are collected and tentative models are developed to describe and predict future behaviour—until the appearance of anomalous data forces the model to be revised. Deductive reasoning is common in mathematics and logic, where elaborate structures of irrefutable theorems are built up from a small set of basic axioms and rules.

There has been considerable success in programming computers to draw inferences, especially deductive inferences. However, true reasoning involves more than just drawing inferences; it involves drawing inferences relevant to the solution of the particular task or situation. This is one of the hardest problems confronting AI.
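The two inference examples above can be written as a small Python sketch. The function names `deduce_location` and `induce_cause` are hypothetical, and the accident history is invented purely for illustration. The deductive function returns a conclusion only when the premises force it; the inductive one returns a best guess together with the fraction of past cases that support it.

```python
from collections import Counter


def deduce_location(possible, ruled_out):
    """Deduction: if exactly one option survives, it must be true."""
    remaining = set(possible) - set(ruled_out)
    if len(remaining) == 1:
        return next(iter(remaining))
    return None  # the premises do not settle the question


def induce_cause(past_causes):
    """Induction: guess the most common past cause and report its support."""
    cause, count = Counter(past_causes).most_common(1)[0]
    return cause, count / len(past_causes)


# "Fred must be in either the museum or the café. He is not in the café."
fred = deduce_location({"museum", "café"}, {"café"})

# Invented history: four accidents from instrument failure, one from pilot error.
guess, support = induce_cause(["instrument failure"] * 4 + ["pilot error"])

print(fred)            # guaranteed by the premises
print(guess, support)  # supported by the data, but not guaranteed
```

Neither function addresses the harder problem the text describes, namely choosing which inferences are relevant to the task at hand.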

Problem solving

Problem solving, particularly in artificial intelligence, may be characterized as a systematic search through a range of possible actions in order to reach some predefined goal or solution. Problem-solving methods divide into special purpose and general purpose. A special-purpose method is tailor-made for a particular problem and often exploits very specific features of the situation in which the problem is embedded. In contrast, a general-purpose method is applicable to a wide variety of problems. One general-purpose technique used in AI is means-end analysis—a step-by-step, or incremental, reduction of the difference between the current state and the final goal. The program selects actions from a list of means—in the case of a simple robot this might consist of PICKUP, PUTDOWN, MOVEFORWARD, MOVEBACK, MOVELEFT, and MOVERIGHT—until the goal is reached.
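As a rough sketch of the idea, the Python below lets a simple robot carry an object to a goal square by always choosing the action that most reduces the estimated difference from the goal. Everything beyond the six action names is an assumption made for illustration: the grid world, the `State` type, and the `difference` estimate are hypothetical, and the loop is a greedy simplification that leaves out the subgoal handling of fuller means-end analysis.

```python
from typing import NamedTuple


class State(NamedTuple):
    robot: tuple    # (x, y) position of the robot
    obj: tuple      # (x, y) position of the object
    holding: bool   # True while the robot carries the object


def dist(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def difference(s, goal):
    """Estimated number of actions still needed to leave the object at goal."""
    if s.holding:
        return dist(s.robot, goal) + 1  # carry it there, then PUTDOWN
    if s.obj == goal:
        return 0
    # Walk to the object, PICKUP, carry it to the goal, PUTDOWN.
    return dist(s.robot, s.obj) + dist(s.obj, goal) + 2


def move(dx, dy):
    def apply(s):
        robot = (s.robot[0] + dx, s.robot[1] + dy)
        return State(robot, robot if s.holding else s.obj, s.holding)
    return apply


def pickup(s):
    # Only possible when standing on the object with empty hands.
    return State(s.robot, s.obj, True) if s.robot == s.obj and not s.holding else None


def putdown(s):
    return State(s.robot, s.robot, False) if s.holding else None


MEANS = {
    "PICKUP": pickup, "PUTDOWN": putdown,
    "MOVEFORWARD": move(0, 1), "MOVEBACK": move(0, -1),
    "MOVELEFT": move(-1, 0), "MOVERIGHT": move(1, 0),
}


def means_end(start, goal, max_steps=100):
    state, plan = start, []
    while difference(state, goal) > 0 and len(plan) < max_steps:
        options = [(name, fn(state)) for name, fn in MEANS.items()]
        options = [(name, s) for name, s in options if s is not None]
        name, best = min(options, key=lambda o: difference(o[1], goal))
        if difference(best, goal) >= difference(state, goal):
            break  # no action reduces the difference, so the greedy search is stuck
        state = best
        plan.append(name)
    return plan, state


plan, final = means_end(State((0, 0), (2, 1), False), goal=(0, 3))
print(plan)
```

In this obstacle-free world every step lowers the difference by one, so the greedy choice is enough; with walls or competing goals a real planner would need to back up or set intermediate goals.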

Many diverse problems have been solved by artificial intelligence programs. Some examples are finding the winning move (or sequence of moves) in a board game, devising mathematical proofs, and manipulating “virtual objects” in a computer-generated world.

Perception

In perception the environment is scanned by means of various sensory organs, real or artificial, and the scene is decomposed into separate objects in various spatial relationships. Analysis is complicated by the fact that an object may appear different depending on the angle from which it is viewed, the direction and intensity of illumination in the scene, and how much the object contrasts with the surrounding field.

At present, artificial perception is sufficiently well advanced to enable optical sensors to identify individuals, autonomous vehicles to drive at moderate speeds on the open road, and robots to roam through buildings collecting empty soda cans. One of the earliest systems to integrate perception and action was FREDDY, a stationary robot with a moving television eye and a pincer hand, constructed at the University of Edinburgh, Scotland, during the period 1966–73 under the direction of Donald Michie. FREDDY was able to recognize a variety of objects and could be instructed to assemble simple artifacts, such as a toy car, from a random heap of components.

Language

A language is a system of signs having meaning by convention. In this sense, language need not be confined to the spoken word. Traffic signs, for example, form a minilanguage, it being a matter of convention that ⚠ means “hazard ahead” in some countries. It is distinctive of languages that linguistic units possess meaning by convention, and linguistic meaning is very different from what is called natural meaning, exemplified in statements such as “Those clouds mean rain” and “The fall in pressure means the valve is malfunctioning.”

An important characteristic of full-fledged human languages—in contrast to birdcalls and traffic signs—is their productivity. A productive language can formulate an unlimited variety of sentences.

It is relatively easy to write computer programs that seem able, in severely restricted contexts, to respond fluently in a human language to questions and statements. Although none of these programs actually understands language, they may, in principle, reach the point where their command of a language is indistinguishable from that of a normal human. What, then, is involved in genuine understanding, if even a computer that uses language like a native human speaker is not acknowledged to understand? There is no universally agreed upon answer to this difficult question. According to one theory, whether or not one understands depends not only on one’s behaviour but also on one’s history: in order to be said to understand, one must have learned the language and have been trained to take one’s place in the linguistic community by means of interaction with other language users.

Methods and goals in AI:

Symbolic vs. connectionist approaches

AI research follows two distinct, and to some extent competing, methods: the symbolic (or “top-down”) approach and the connectionist (or “bottom-up”) approach. The top-down approach seeks to replicate intelligence by analyzing cognition independent of the biological structure of the brain, in terms of the processing of symbols, whence the symbolic label. The bottom-up approach, on the other hand, involves creating artificial neural networks in imitation of the brain’s structure, whence the connectionist label.

To illustrate the difference between these approaches, consider the task of building a system, equipped with an optical scanner, that recognizes the letters of the alphabet. A bottom-up approach typically involves training an artificial neural network by presenting letters to it one by one, gradually improving performance by “tuning” the network. (Tuning adjusts the responsiveness of different neural pathways to different stimuli.) In contrast, a top-down approach typically involves writing a computer program that compares each letter with geometric descriptions. Simply put, neural activities are the basis of the bottom-up approach, while symbolic descriptions are the basis of the top-down approach.

In The Fundamentals of Learning (1932), Edward Thorndike, a psychologist at Columbia University, New York City, first suggested that human learning consists of some unknown property of connections between neurons in the brain. In The Organization of Behavior (1949), Donald Hebb, a psychologist at McGill University, Montreal, Canada, suggested that learning specifically involves strengthening certain patterns of neural activity by increasing the probability (weight) of induced neuron firing between the associated connections. The notion of weighted connections is described in a later section, Connectionism.
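Hebb's idea of strengthening connections between neurons that are active together can be sketched as a simple weight update. What follows is only an illustration, not Hebb's own formulation: the function name `hebbian_update`, the learning rate of 0.1, and the activity values are all assumptions chosen for the example.

```python
def hebbian_update(weights, pre, post, rate=0.1):
    """Return new weights after one Hebbian step (illustrative sketch).

    weights[i][j] links input (presynaptic) unit j to output (postsynaptic) unit i.
    Each weight grows in proportion to the product of the two activities,
    so only connections between co-active units are strengthened.
    """
    return [
        [w + rate * post_i * pre_j for w, pre_j in zip(row, pre)]
        for row, post_i in zip(weights, post)
    ]


# Two input units and one output unit; all weights start at zero (assumed values).
weights = [[0.0, 0.0]]

# Input unit 0 fires together with the output unit; input unit 1 stays silent.
for _ in range(3):
    weights = hebbian_update(weights, pre=[1.0, 0.0], post=[1.0])

print(weights)
```

After three presentations the weight from the active input has grown to about 0.3, while the weight from the silent input is still zero. That is the sense in which repeated joint activity "strengthens" a connection.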

In 1957 two vigorous advocates of symbolic AI—Allen Newell, a researcher at the RAND Corporation, Santa Monica, California, and Herbert Simon, a psychologist and computer scientist at Carnegie Mellon University, Pittsburgh, Pennsylvania—summed up the top-down approach in what they called the physical symbol system hypothesis. This hypothesis states that processing structures of symbols is sufficient, in principle, to produce artificial intelligence in a digital computer and that, moreover, human intelligence is the result of the same type of symbolic manipulations.

During the 1950s and ’60s the top-down and bottom-up approaches were pursued simultaneously, and both achieved noteworthy, if limited, results. During the 1970s, however, bottom-up AI was neglected, and it was not until the 1980s that this approach again became prominent. Nowadays both approaches are followed, and both are acknowledged as facing difficulties. Symbolic techniques work in simplified realms but typically break down when confronted with the real world; meanwhile, bottom-up researchers have been unable to replicate the nervous systems of even the simplest living things. Caenorhabditis elegans, a much-studied worm, has approximately 300 neurons whose pattern of interconnections is perfectly known. Yet connectionist models have failed to mimic even this worm. Evidently, the neurons of connectionist theory are gross oversimplifications of the real thing.

Strong AI, applied AI, and cognitive simulation

Employing the methods outlined above, AI research attempts to reach one of three goals: strong AI, applied AI, or cognitive simulation. Strong AI aims to build machines that think. (The term strong AI was introduced for this category of research in 1980 by the philosopher John Searle of the University of California at Berkeley.) The ultimate ambition of strong AI is to produce a machine whose overall intellectual ability is indistinguishable from that of a human being. As is described in the section Early milestones in AI, this goal generated great interest in the 1950s and ’60s, but such optimism has given way to an appreciation of the extreme difficulties involved. To date, progress has been meagre. Some critics doubt whether research will produce even a system with the overall intellectual ability of an ant in the foreseeable future. Indeed, some researchers working in AI’s other two branches view strong AI as not worth pursuing.

Applied AI, also known as advanced information processing, aims to produce commercially viable “smart” systems—for example, “expert” medical diagnosis systems and stock-trading systems. Applied AI has enjoyed considerable success, as described in the section Expert systems.

In cognitive simulation, computers are used to test theories about how the human mind works—for example, theories about how people recognize faces or recall memories. Cognitive simulation is already a powerful tool in both neuroscience and cognitive psychology.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#1329 2022-03-26 23:26:41

- Jai Ganesh

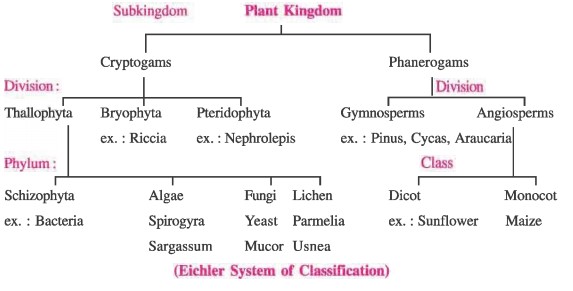

1303) Xylem and Phloem

The vascular system is composed of two main types of tissue: the xylem and the phloem. The xylem distributes water and dissolved minerals upward through the plant, from the roots to the leaves. The phloem carries food downward from the leaves to the roots.

Xylem

Xylem is a plant vascular tissue that conveys water and dissolved minerals from the roots to the rest of the plant and also provides physical support. Xylem tissue consists of a variety of specialized, water-conducting cells known as tracheary elements. Together with phloem (tissue that conducts sugars from the leaves to the rest of the plant), xylem is found in all vascular plants, including the seedless club mosses, ferns, and horsetails, as well as all angiosperms (flowering plants) and gymnosperms (plants with seeds unenclosed in an ovary).

The xylem tracheary elements consist of cells known as tracheids and vessel members, both of which are typically narrow, hollow, and elongated. Tracheids are less specialized than the vessel members and are the only type of water-conducting cells in most gymnosperms and seedless vascular plants. Water moving from tracheid to tracheid must pass through a thin modified primary cell wall known as the pit membrane, which serves to prevent the passage of damaging air bubbles. Vessel members are the principal water-conducting cells in angiosperms (though most species also have tracheids) and are characterized by areas that lack both primary and secondary cell walls, known as perforations. Water flows relatively unimpeded from vessel to vessel through these perforations, though fractures and disruptions from air bubbles are also more likely. In addition to the tracheary elements, xylem tissue also features fibre cells for support and parenchyma (thin-walled, unspecialized cells) for the storage of various substances.

Xylem formation begins when the actively dividing cells of growing root and shoot tips (apical meristems) give rise to primary xylem. In woody plants, secondary xylem constitutes the major part of a mature stem or root and is formed as the plant expands in girth and builds a ring of new xylem around the original primary xylem tissues. When this happens, the primary xylem cells die and lose their conducting function, forming a hard skeleton that serves only to support the plant. Thus, in the trunk and older branches of a large tree, only the outer secondary xylem (sapwood) serves in water conduction, while the inner part (heartwood) is composed of dead but structurally strong primary xylem. In temperate or cold climates, the age of a tree may be determined by counting the number of annual xylem rings formed at the base of the trunk (cut in cross section).

Phloem

Phloem is plant vascular tissue that conducts foods made in the leaves during photosynthesis to all other parts of the plant. Phloem is composed of various specialized cells called sieve elements, phloem fibres, and phloem parenchyma cells. Together with xylem (tissue that conducts water and minerals from the roots to the rest of the plant), phloem is found in all vascular plants, including the seedless club mosses, ferns, and horsetails, as well as all angiosperms (flowering plants) and gymnosperms (plants with seeds unenclosed in an ovary).

Sieve tubes, which are columns of sieve tube cells having perforated sievelike areas in their lateral or end walls, provide the main channels in which food substances travel throughout a vascular plant. Phloem parenchyma cells, called transfer cells and border parenchyma cells, are located near the finest branches and terminations of sieve tubes in leaf veinlets, where they also function in the transport of foods. Companion cells, or albuminous cells in non-flowering vascular plants, are another specialized type of parenchyma and carry out the cellular functions of adjacent sieve elements; they typically have a larger number of mitochondria and ribosomes than other parenchyma cells. Phloem, or bast, fibres are flexible long sclerenchyma cells that make up the soft fibres (e.g., flax and hemp) of commerce. These provide flexible tensile strength to the phloem tissues. Sclereids, also formed from sclerenchyma, are hard irregularly shaped cells that add compression strength to the tissues.

Primary phloem is formed by the apical meristems (zones of new cell production) of root and shoot tips; it may be either protophloem, the cells of which are matured before elongation (during growth) of the area in which it lies, or metaphloem, the cells of which mature after elongation. Sieve tubes of protophloem are unable to stretch with the elongating tissues and are torn and destroyed as the plant ages. The other cell types in the phloem may be converted to fibres. The later maturing metaphloem is not destroyed and may function during the rest of the plant’s life in plants such as palms but is replaced by secondary phloem in plants that have a cambium.

Xylem versus Phloem

Xylem and phloem are both transport vessels that combine to form a vascular bundle in higher order plants.

* The vascular bundle functions to connect tissues in the roots, stem and leaves as well as providing structural support

Xylem

* Moves materials via the process of transpiration

* Transports water and minerals from the roots to aerial parts of the plant (unidirectional transport)

* Xylem occupies the inner portion or centre of the vascular bundle and is composed of vessel elements and tracheids

* Vessel wall consists of fused cells that create a continuous tube for the unimpeded flow of materials

* Vessels are composed of dead tissue at maturity, such that vessels are hollow with no cell contents

Phloem

* Moves materials via the process of active translocation

* Transports food and nutrients to storage organs and growing parts of the plant (bidirectional transport)

* Phloem occupies the outer portion of the vascular bundle and is composed of sieve tube elements and companion cells

* Vessel wall consists of cells that are connected at their transverse ends to form porous sieve plates (function as cross walls)

* Vessels are composed of living tissue; however, sieve tube elements lack nuclei and have few organelles

#1330 2022-03-28 01:05:22

- Jai Ganesh

1304) Nitroglycerin

Summary

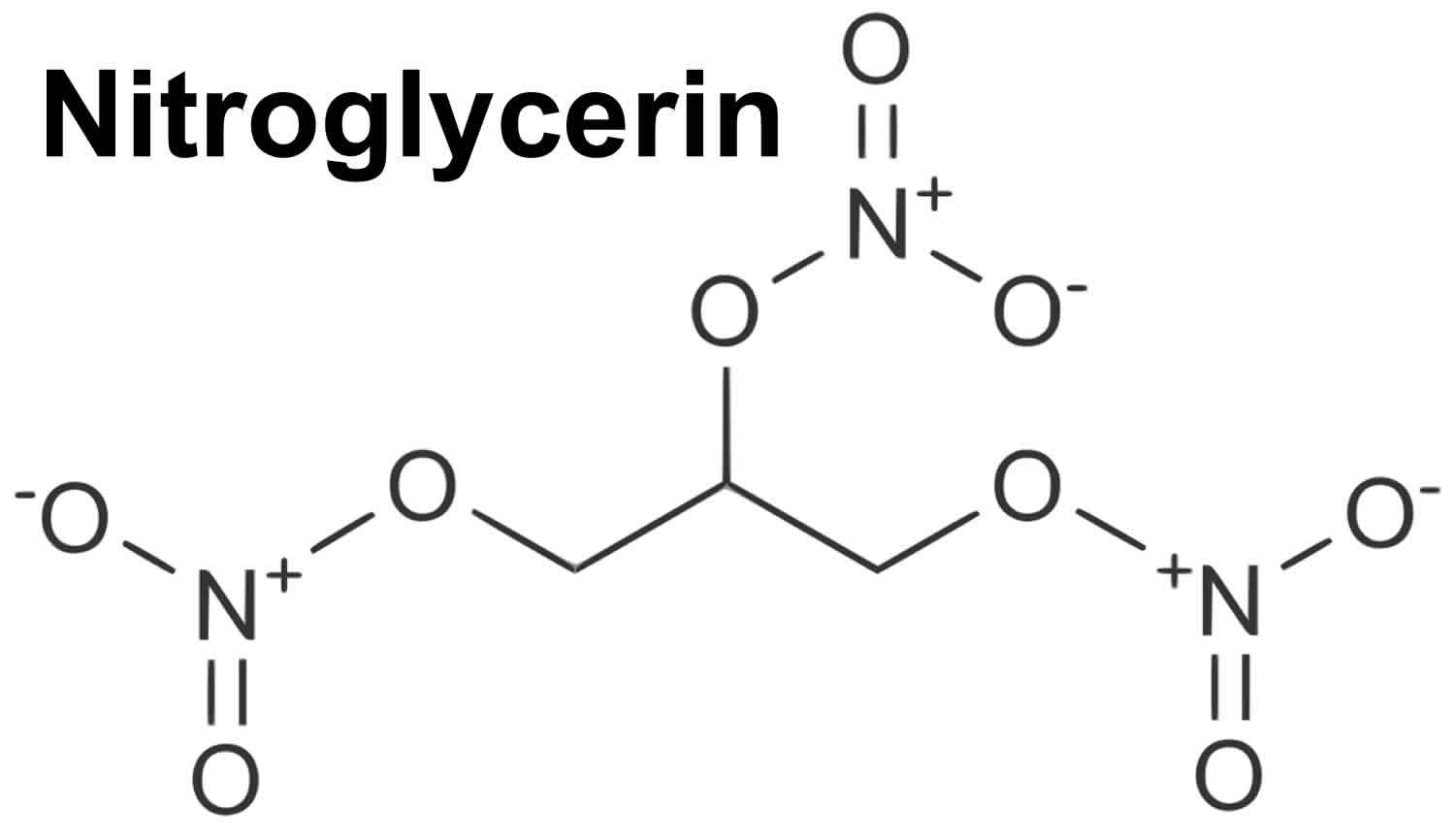

Nitroglycerin (NG; alternative spelling nitroglycerine), also known as trinitroglycerin (TNG), nitro, glyceryl trinitrate (GTN), or 1,2,3-trinitroxypropane, is a dense, colorless, oily, explosive liquid most commonly produced by nitrating glycerol with white fuming nitric acid under conditions appropriate to the formation of the nitric acid ester. Chemically, the substance is an organic nitrate compound rather than a nitro compound, but the traditional name is retained. Invented in 1847 by Ascanio Sobrero, nitroglycerin has been used ever since as an active ingredient in the manufacture of explosives, namely dynamite, and as such it is employed in the construction, demolition, and mining industries. Since the 1880s, it has been used by the military as an active ingredient and gelatinizer for nitrocellulose in some solid propellants such as cordite and ballistite. It is a major component in double-based smokeless propellants used by reloaders. Combined with nitrocellulose, hundreds of powder combinations are used by rifle, pistol, and shotgun reloaders.

Nitroglycerin has been used for over 130 years in medicine as a potent vasodilator (dilation of the vascular system) to treat heart conditions, such as angina pectoris and chronic heart failure. Though it was previously known that these beneficial effects are due to nitroglycerin being converted to nitric oxide, a potent venodilator, the enzyme for this conversion was only discovered to be mitochondrial aldehyde dehydrogenase (ALDH2) in 2002. Nitroglycerin is available in sublingual tablets, sprays, ointments, and patches.

Details

Nitroglycerin, also called glyceryl trinitrate, is a powerful explosive and an important ingredient of most forms of dynamite. It is also used with nitrocellulose in some propellants, especially for rockets and missiles, and it is employed as a vasodilator in the easing of cardiac pain.

Pure nitroglycerin is a colourless, oily, somewhat toxic liquid having a sweet, burning taste. It was first prepared in 1846 by the Italian chemist Ascanio Sobrero by adding glycerol to a mixture of concentrated nitric and sulfuric acids. The hazards involved in preparing large quantities of nitroglycerin have been greatly reduced by widespread adoption of continuous nitration processes.

Nitroglycerin, with the molecular formula C3H5(ONO2)3, has a high nitrogen content (18.5 percent) and contains sufficient oxygen atoms to oxidize the carbon and hydrogen atoms while nitrogen is being liberated, so that it is one of the most powerful explosives known. Detonation of nitroglycerin generates gases that would occupy more than 1,200 times the original volume at ordinary room temperature and pressure; moreover, the heat liberated raises the temperature to about 5,000 °C (9,000 °F). The overall effect is the instantaneous development of a pressure of 20,000 atmospheres; the resulting detonation wave moves at approximately 7,700 metres per second (more than 17,000 miles per hour). Nitroglycerin is extremely sensitive to shock and to rapid heating; it begins to decompose at 50–60 °C (122–140 °F) and explodes at 218 °C (424 °F).
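The nitrogen content and the unit conversions quoted above can be checked with a few lines of arithmetic. This is a minimal sketch: the atomic masses are standard rounded values supplied here rather than taken from the text, and the helper names (`molar_mass`, `mass_percent`, and so on) are made up for the illustration.

```python
# Standard atomic masses in g/mol, rounded (assumed values, not from the text).
ATOMIC_MASS = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999}

# C3H5(ONO2)3 expands to 3 C, 5 H, 3 N and 9 O atoms.
NITROGLYCERIN = {"C": 3, "H": 5, "N": 3, "O": 9}


def molar_mass(formula):
    """Sum of atomic masses weighted by the number of each atom."""
    return sum(ATOMIC_MASS[element] * count for element, count in formula.items())


def mass_percent(formula, element):
    """Mass percentage of one element in the compound."""
    return 100 * ATOMIC_MASS[element] * formula[element] / molar_mass(formula)


def celsius_to_fahrenheit(celsius):
    return celsius * 9 / 5 + 32


def metres_per_second_to_mph(speed):
    return speed * 3600 / 1609.344  # one mile is 1609.344 metres


print(round(mass_percent(NITROGLYCERIN, "N"), 1))   # nitrogen content in percent
print(round(celsius_to_fahrenheit(218)))            # explosion temperature in °F
print(round(metres_per_second_to_mph(7700)))        # detonation wave speed in mph
```

The results agree with the figures in the paragraph above: about 18.5 percent nitrogen, 424 °F, and a little over 17,000 miles per hour.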

The safe use of nitroglycerin as a blasting explosive became possible after the Swedish chemist Alfred B. Nobel developed dynamite in the 1860s by combining liquid nitroglycerin with an inert porous material such as charcoal or diatomaceous earth. Nitroglycerin plasticizes collodion (a form of nitrocellulose) to form blasting gelatin, a very powerful explosive. Nobel’s discovery of this action led to the development of ballistite, the first double-base propellant and a precursor of cordite.

A serious problem in the use of nitroglycerin results from its high freezing point (13 °C [55 °F]) and the fact that the solid is even more shock-sensitive than the liquid. This disadvantage is overcome by using mixtures of nitroglycerin with other polynitrates; for example, a mixture of nitroglycerin and ethylene glycol dinitrate freezes at −29 °C (−20 °F).

Uses

Nitroglycerin extended-release capsules are used to prevent chest pain (angina) in people with a certain heart condition (coronary artery disease). This medication belongs to a class of drugs known as nitrates. Angina occurs when the heart muscle is not getting enough blood. This drug works by relaxing and widening blood vessels so blood can flow more easily to the heart. This medication will not relieve chest pain once it occurs. It is also not intended to be taken just before physical activities (such as exercise, sexual activity) to prevent chest pain. Other medications may be needed in these situations. Consult your doctor for more details.

How to use Nitroglycerin

Take this medication by mouth, usually 3 to 4 times daily or as directed by your doctor. It is important to take the drug at the same times each day. Do not change the dosing times unless directed by your doctor. The dosage is based on your medical condition and response to treatment.

Swallow this medication whole. Do not crush or chew the capsules. Doing so can release all of the drug at once and may increase your risk of side effects.

Use this medication regularly to get the most benefit from it. Do not suddenly stop taking this medication without consulting your doctor. Some conditions may become worse when the drug is suddenly stopped. Your dose may need to be gradually decreased.

Although unlikely, when this medication is used for a long time, it may not work as well and may require different dosing. Tell your doctor if this medication stops working well (for example, you have worsening chest pain or it occurs more often).

Side Effects

Headache, dizziness, lightheadedness, nausea, and flushing may occur as your body adjusts to this medication. If any of these effects persist or worsen, tell your doctor or pharmacist promptly.

Headache is often a sign that this medication is working. Your doctor may recommend treating headaches with an over-the-counter pain reliever (such as acetaminophen, aspirin). If the headaches continue or become severe, tell your doctor promptly.

To reduce the risk of dizziness and lightheadedness, get up slowly when rising from a sitting or lying position.

Remember that this medication has been prescribed because your doctor has judged that the benefit to you is greater than the risk of side effects. Many people using this medication do not have serious side effects.

Tell your doctor right away if any of these unlikely but serious side effects occur: fainting, fast/irregular/pounding heartbeat.

A very serious allergic reaction to this drug is rare. However, seek immediate medical attention if you notice any of the following symptoms of a serious allergic reaction: rash, itching/swelling (especially of the face/tongue/throat), severe dizziness, trouble breathing.

Precautions

Before using this medication, tell your doctor or pharmacist if you are allergic to it; or to similar drugs (such as isosorbide mononitrate); or to nitrites; or if you have any other allergies. This product may contain inactive ingredients, which can cause allergic reactions or other problems. Talk to your pharmacist for more details.

Before using this medication, tell your doctor or pharmacist your medical history, especially of: recent head injury, frequent stomach cramping/watery stools/severe diarrhea (GI hypermotility), lack of proper absorption of nutrients (malabsorption), anemia, low blood pressure, dehydration, other heart problems (such as recent heart attack).

This drug may make you dizzy. Alcohol can make you more dizzy. Do not drive, use machinery, or do anything that needs alertness until you can do it safely. Limit alcoholic beverages.

Before having surgery, tell your doctor or dentist about all the products you use (including prescription drugs, nonprescription drugs, and herbal products).

Older adults may be more sensitive to the side effects of this medication, especially dizziness and lightheadedness, which could increase the risk of falls.

During pregnancy, this medication should be used only when clearly needed. Discuss the risks and benefits with your doctor.

It is not known whether this drug passes into breast milk or if it may harm a nursing infant. Consult your doctor before breast-feeding.

Interactions

Drug interactions may change how your medications work or increase your risk for serious side effects. This document does not contain all possible drug interactions. Keep a list of all the products you use (including prescription/nonprescription drugs and herbal products) and share it with your doctor and pharmacist. Do not start, stop, or change the dosage of any medicines without your doctor's approval.

Some products that may interact with this drug include: drugs to treat pulmonary hypertension (such as sildenafil, tadalafil), certain drugs to treat migraine headaches.

This medication may interfere with certain laboratory tests (including blood cholesterol levels), possibly causing false test results. Make sure laboratory personnel and all your doctors know you use this drug.

Overdose

Symptoms of overdose may include: slow heartbeat, vision changes, severe nausea/vomiting, sweating, cold/clammy skin, bluish fingers/toes/lips.

Storage

Store at room temperature between 59-86 degrees F (15-30 degrees C) away from light and moisture. Do not store in the bathroom. Keep all medicines away from children and pets.

Do not flush medications down the toilet or pour them into a drain unless instructed to do so. Properly discard this product when it is expired or no longer needed. Consult your pharmacist or local waste disposal company for more details about how to safely discard your product.

#1331 2022-03-29 00:18:06

- Jai Ganesh

1305) Alkali

Summary

Alkali is any of the soluble hydroxides of the alkali metals—i.e., lithium, sodium, potassium, rubidium, and cesium. Alkalies are strong bases that turn litmus paper from red to blue; they react with acids to yield neutral salts; and they are caustic and in concentrated form are corrosive to organic tissues. The term alkali is also applied to the soluble hydroxides of such alkaline-earth metals as calcium, strontium, and barium and also to ammonium hydroxide. The term was originally applied to the ashes of burned sodium- or potassium-bearing plants, from which the oxides of sodium and potassium could be leached.

The manufacture of industrial alkali usually refers to the production of soda ash (Na2CO3; sodium carbonate) and caustic soda (NaOH; sodium hydroxide). Other industrial alkalies include potassium hydroxide, potash, and lye. The production of a vast range of consumer goods depends on the use of alkali at some stage. Soda ash and caustic soda are essential to the production of glass, soap, miscellaneous chemicals, rayon and cellophane, paper and pulp, cleansers and detergents, textiles, water softeners, certain metals (especially aluminum), bicarbonate of soda, and gasoline and other petroleum derivatives.

People have been using alkali for centuries, obtaining it first from the leachings (water solutions) of certain desert earths. In the late 18th century the leaching of wood or seaweed ashes became the chief source of alkali. In 1775 the French Académie des Sciences offered monetary prizes for new methods for manufacturing alkali. The prize for soda ash was awarded to the Frenchman Nicolas Leblanc, who in 1791 patented a process for converting common salt (sodium chloride) into sodium carbonate. The Leblanc process dominated world production until late in the 19th century, but following World War I it was completely supplanted by another salt-conversion process that had been perfected in the 1860s by Ernest Solvay of Belgium. Late in the 19th century, electrolytic methods for the production of caustic soda appeared and grew rapidly in importance.

In the Solvay, or ammonia-soda, process of soda ash manufacture, common salt in the form of a strong brine is chemically treated to eliminate calcium and magnesium impurities and is then saturated with recycling ammonia gas in towers. The ammoniated brine is then carbonated using carbon dioxide gas under moderate pressure in a different type of tower. These two processes yield ammonium bicarbonate and sodium chloride, the double decomposition of which gives the desired sodium bicarbonate as well as ammonium chloride. The sodium bicarbonate is then heated to decompose it to the desired sodium carbonate. The ammonia involved in the process is almost completely recovered by treating the ammonium chloride with lime to yield ammonia and calcium chloride. The recovered ammonia is then reused in the processes already described.
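The steps just described can be written as three net reactions and checked for atom balance. The sketch below makes some assumptions that are not in the text: the ammoniation and carbonation steps are merged into a single net equation, "lime" is taken to be slaked lime, Ca(OH)2, and the names `SPECIES`, `REACTIONS` and `is_balanced` are purely illustrative.

```python
from collections import Counter

# Atom counts for each species, written out by hand.
SPECIES = {
    "NaCl": {"Na": 1, "Cl": 1},
    "NH3": {"N": 1, "H": 3},
    "CO2": {"C": 1, "O": 2},
    "H2O": {"H": 2, "O": 1},
    "NaHCO3": {"Na": 1, "H": 1, "C": 1, "O": 3},
    "NH4Cl": {"N": 1, "H": 4, "Cl": 1},
    "Na2CO3": {"Na": 2, "C": 1, "O": 3},
    "Ca(OH)2": {"Ca": 1, "O": 2, "H": 2},  # slaked lime (assumed form of "lime")
    "CaCl2": {"Ca": 1, "Cl": 2},
}

# Each reaction is (reactants, products), mapping species to coefficients.
REACTIONS = {
    "carbonation of ammoniated brine": (
        {"NaCl": 1, "NH3": 1, "CO2": 1, "H2O": 1},
        {"NaHCO3": 1, "NH4Cl": 1},
    ),
    "heating of sodium bicarbonate": (
        {"NaHCO3": 2},
        {"Na2CO3": 1, "H2O": 1, "CO2": 1},
    ),
    "ammonia recovery with lime": (
        {"NH4Cl": 2, "Ca(OH)2": 1},
        {"NH3": 2, "CaCl2": 1, "H2O": 2},
    ),
}


def atom_totals(side):
    """Total number of each kind of atom on one side of a reaction."""
    totals = Counter()
    for species, coefficient in side.items():
        for atom, count in SPECIES[species].items():
            totals[atom] += coefficient * count
    return totals


def is_balanced(reactants, products):
    return atom_totals(reactants) == atom_totals(products)


for name, (reactants, products) in REACTIONS.items():
    print(name, is_balanced(reactants, products))
```

All three reactions balance. Note that the ammonia consumed in the first reaction reappears as a product of the third, which is why it can be recycled, while the chlorine from the salt ends up in calcium chloride.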

The electrolytic production of caustic soda involves the electrolysis of a strong salt brine in an electrolytic cell. (Electrolysis is the breaking down of a compound in solution into its constituents by means of an electric current in order to bring about a chemical change.) The electrolysis of sodium chloride yields chlorine and either sodium hydroxide or metallic sodium. Sodium hydroxide in some cases competes with sodium carbonate for the same applications, and in any case the two are interconvertible by rather simple processes. Sodium chloride can be made into an alkali by either of the two processes, the difference between them being that the ammonia-soda process gives the chlorine in the form of calcium chloride, a compound of small economic value, while the electrolytic processes produce elemental chlorine, which has innumerable uses in the chemical industry. For this reason the ammonia-soda process, having displaced the Leblanc process, has found itself being displaced, the older ammonia-soda plants continuing to operate very efficiently while newly built plants use electrolytic processes.

In a few places in the world there are substantial deposits of the mineral form of soda ash, known as natural alkali. The mineral usually occurs as sodium sesquicarbonate, or trona (Na2CO3·NaHCO3·2H2O). The United States produces much of the world’s natural alkali from vast trona deposits in underground mines in Wyoming and from dry lake beds in California.

Details

In chemistry, an alkali (from Arabic al-qaly, lit. 'ashes of the saltwort') is a basic, ionic salt of an alkali metal or an alkaline earth metal. An alkali can also be defined as a base that dissolves in water. A solution of a soluble base has a pH greater than 7.0. The adjective alkaline is commonly, and alkalescent less often, used in English as a synonym for basic, especially for bases soluble in water. This broad use of the term is likely to have come about because alkalis were the first bases known to obey the Arrhenius definition of a base, and they are still among the most common bases.

Etymology

The word "alkali" is derived from Arabic al qalīy (or alkali), meaning the calcined ashes, referring to the original source of alkaline substances. A water-extract of burned plant ashes, called potash and composed mostly of potassium carbonate, was mildly basic. After heating this substance with calcium hydroxide (slaked lime), a far more strongly basic substance known as caustic potash (potassium hydroxide) was produced. Caustic potash was traditionally used in conjunction with animal fats to produce soft soaps, one of the caustic processes that rendered soaps from fats in the process of saponification, one known since antiquity. Plant potash lent the name to the element potassium, which was first derived from caustic potash, and also gave potassium its chemical symbol K (from the German name Kalium), which ultimately derived from alkali.

Common properties of alkalis and bases

Alkalis are all Arrhenius bases, ones which form hydroxide ions (OH−) when dissolved in water. Common properties of alkaline aqueous solutions include:

* Moderately concentrated solutions (over 10⁻³ M) have a pH of 10 or greater. This means that they will turn phenolphthalein from colorless to pink.

* Concentrated solutions are caustic (causing chemical burns).

* Alkaline solutions are slippery or soapy to the touch, due to the saponification of the fatty substances on the surface of the skin.

* Alkalis are normally water-soluble, although some like barium carbonate are only soluble when reacting with an acidic aqueous solution.
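The first property in the list above can be illustrated with the usual relation pH = 14 − pOH. This is a rough sketch under stated assumptions, none of which come from the text: an ideal dilute solution at 25 °C (so pKw is taken as 14), complete dissociation of the base, and a hypothetical helper named `strong_base_ph`.

```python
import math


def strong_base_ph(hydroxide_molarity, pkw=14.0):
    """pH of a fully dissociated base, given its hydroxide concentration in mol/L.

    Assumes ideal behaviour at 25 °C and ignores water's own ionization,
    which is reasonable at the concentrations used below.
    """
    if hydroxide_molarity <= 0:
        raise ValueError("concentration must be positive")
    poh = -math.log10(hydroxide_molarity)
    return pkw - poh


for molarity in (1e-3, 1e-2, 1e-1):
    print(molarity, round(strong_base_ph(molarity), 2))
```

Under these assumptions a hydroxide concentration of 10⁻³ M already gives a pH of about 11, comfortably above the threshold of 10 mentioned in the list, and each tenfold increase in concentration raises the pH by one unit.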

Difference between alkali and base

The terms "base" and "alkali" are often used interchangeably, particularly outside the context of chemistry and chemical engineering.

There are various more specific definitions for the concept of an alkali. Alkalis are usually defined as a subset of the bases. One of two subsets is commonly chosen.

* A basic salt of an alkali metal or alkaline earth metal (This includes Mg(OH)2 (magnesium hydroxide) but excludes NH3 (ammonia).)

* Any base that is soluble in water and forms hydroxide ions or the solution of a base in water. (This includes both Mg(OH)2 and NH3, which forms NH4OH.)

The second subset of bases is also called an "Arrhenius base".

Alkali salts

Alkali salts are soluble hydroxides of alkali metals and alkaline earth metals, of which common examples are:

* Sodium hydroxide (NaOH) – often called "caustic soda"

* Potassium hydroxide (KOH) – commonly called "caustic potash"

* Lye – generic term for either of two previous salts or their mixture

* Calcium hydroxide (Ca(OH)2) – saturated solution known as "limewater"

* Magnesium hydroxide (Mg(OH)2) – an atypical alkali since it has low solubility in water (although the dissolved portion is considered a strong base due to complete dissociation of its ions)

Alkaline soil

Soils with pH values that are higher than 7.3 are usually defined as being alkaline. These soils can occur naturally, due to the presence of alkali salts. Although many plants do prefer slightly basic soil (including vegetables like cabbage and fodder like buffalo grass), most plants prefer a mildly acidic soil (with pHs between 6.0 and 6.8), and alkaline soils can cause problems.

Alkali lakes

In alkali lakes (also called soda lakes), evaporation concentrates the naturally occurring carbonate salts, giving rise to an alkalic and often saline lake.

Examples of alkali lakes:

* Alkali Lake, Lake County, Oregon

* Baldwin Lake, San Bernardino County, California

* Bear Lake on the Utah–Idaho border

* Lake Magadi in Kenya

* Lake Turkana in Kenya

* Mono Lake, near Owens Valley in California

* Redberry Lake, Saskatchewan

* Summer Lake, Lake County, Oregon

* Tramping Lake, Saskatchewan

#1332 2022-03-30 00:20:36

- Jai Ganesh

1306) Systems analyst

Summary

A systems analyst, also known as business technology analyst, is an information technology (IT) professional who specializes in analyzing, designing and implementing information systems. Systems analysts assess the suitability of information systems in terms of their intended outcomes and liaise with end users, software vendors and programmers in order to achieve these outcomes. A systems analyst is a person who uses analysis and design techniques to solve business problems using information technology. Systems analysts may serve as change agents who identify the organizational improvements needed, design systems to implement those changes, and train and motivate others to use the systems.

Industry

As of 2015, the sectors employing the greatest numbers of computer systems analysts were state government, insurance, computer system design, professional and commercial equipment, and company and enterprise management. The number of jobs in this field is projected to grow from 487,000 as of 2009 to 650,000 by 2016.

This job ranked third best in a 2010 survey, fifth best in the 2011 survey, ninth best in the 2012 survey, and tenth best in the 2013 survey.

Details

Application analyst

In the USA, an application analyst (also applications systems analyst) is someone whose job is to support a given application or applications. This may entail some computer programming, some system administration skills, and the ability to analyze a given problem, diagnose it and find its root cause, and then either solve it or pass the problem on to the relevant people if it does not lie within the application analyst's area of responsibility. Typically an application analyst will be responsible for supporting bespoke (i.e. custom) applications programmed with a variety of programming languages and using a variety of database systems, middleware systems and the like. It is a form of 3rd level technical support/help desk. The role may or may not involve some customer contact but most often it involves getting some description of the problem from help desk, making a diagnosis and then either creating a fix or passing the problem on to someone who is responsible for the actual problem area.

In some companies, an application analyst is a software architect.

Overview

Depending on the industry, an application analyst will apply subject matter expertise by verifying design documents and executing tests for new functionality and defect fixes. Additional responsibilities can include acting as a liaison between business stakeholders and IT developers and providing clarifications on system requirements and design for integrating-application teams. An application analyst will interface with multiple channels (depending on scope) to provide demos, application walk-throughs and training. An application analyst will align with IT resources to ensure high-quality deliverables, using agile methodology for rapid delivery of technology enablers. Participating in the change request management process to field, document, and communicate responses and feedback is also common within an application analyst's responsibilities.

Application systems analysts consult with management and help develop software to fit clients' needs. Application systems analysts must provide accurate, quality analyses of new program applications, as well as conduct testing, locate potential problems, and solve them in an efficient manner. Clients' needs may vary widely (for example, they may work in the medical field or in the securities industry), and staying up to date with software and technology trends in their field is essential.

Companies that require analysts are mostly in the fields of business, accounting, security, and scientific engineering. Application systems analysts work with other analysts and program designers, as well as managers and clients. These analysts generally work in an office setting, but there are exceptions when clients may need services at their office or home. Application systems analysts usually work full time, although they may need to work nights and weekends to resolve emerging issues or when deadlines approach; some companies may require analysts to be on call.

Analyst positions typically require at least a bachelor's degree in computer science or a related field, and a master's degree may be required. Previous information technology experience - preferably in a similar role - is required as well. Application systems analysts must have excellent communication skills, as they often work directly with clients and may be required to train other analysts. Flexibility, the ability to work well in teams, and the ability to work well with minimal supervision are also preferred traits.

Application analyst tasks include:

* Analyze and route issues into the proper ticketing systems and update and close tickets in a timely manner.

* Devise or modify procedures to solve problems considering computer equipment capacity and limitations.

* Establish new users, manage access levels and reset passwords.

* Conduct application testing and provide database management support.

* Create and maintain documentation as necessary for operational and security audits.

Software analyst

In a software development team, a software analyst is the person who studies the software application domain and prepares software requirements and specification (Software Requirements Specification) documents. The software analyst is the seam between the software users and the software developers, conveying the demands of software users to the developers.

Business analyst

A business analyst (BA) is a person who analyzes and documents the market environment, processes, or systems of businesses. According to Robert Half, the typical roles for business analysts include creating detailed business analysis, budgeting and forecasting, planning and monitoring, variance analysis, pricing, reporting and defining business requirements for stakeholders. Related to business analysts are system analysts, who serve as the intermediary between business and IT, assessing whether the IT solution is suitable for the business.

Areas of business analysis

There are at least four types of business analysis:

* Business developer – to identify the organization's business needs and business opportunities.

* Business model analysis – to define and model the organization's policies and market approaches.

* Process design – to standardize the organization’s workflows.

* Systems analysis – the interpretation of business rules and requirements for technical systems (generally within IT).

The business analyst is someone who is a part of the business operation and works with information technology to improve the quality of the services being delivered, sometimes assisting in the integration and testing of new solutions. Business analysts act as a liaison between management and technical developers.

The BA may also support the development of training material, participate in the implementation, and provide post-implementation support.

Industries

This occupation (business analyst) is almost equally distributed between male and female workers, which means that, although it belongs to the IT industry, entry to this job is equally open to men and women. Research has also found that the annual salary for BAs is highest in Australia, and India is reported to have the lowest compensation.

Business analysis is a professional discipline of identifying business needs and determining solutions to business problems. Solutions often include a software-systems development component, but may also consist of process improvements, organizational change or strategic planning and policy development. The person who carries out this task is called a business analyst or BA.

Business analysts do not work solely on developing software systems, but work across the organisation, solving business problems in consultation with business stakeholders. Whilst most of the work that business analysts do today relates to software development/solutions, this derives from the ongoing massive changes businesses all over the world are experiencing in their attempts to digitise.

Although there are different role definitions, depending upon the organization, there does seem to be an area of common ground where most business analysts work. The responsibilities appear to be:

* To investigate business systems, taking a holistic view of the situation. This may include examining elements of the organisation structures and staff development issues as well as current processes and IT systems.

* To evaluate actions to improve the operation of a business system. Again, this may require an examination of organisational structure and staff development needs, to ensure that they are in line with any proposed process redesign and IT system development.

* To document the business requirements for the IT system support using appropriate documentation standards.

In line with this, the core business analyst role could be defined as an internal consultancy role that has the responsibility for investigating business situations, identifying and evaluating options for improving business systems, defining requirements and ensuring the effective use of information systems in meeting the needs of the business.

Additional Information

How to become a computer systems analyst.

Getting an IT degree, whether a traditional or online degree, presents such a wide variety of career options, it can be difficult to know which one to pursue. While your choice will depend a great deal on the skills you have and the work environment you prefer, your job outlook is extremely positive no matter what you choose. There are a variety of positions you can choose within the IT industry, from computer scientist to software engineer.

One IT job that has been thriving in recent years is that of a computer systems analyst. In fact, recent reports from the Bureau of Labor Statistics say that “employment of computer systems analysts is projected to grow 21 percent from 2014 to 2024, much faster than the average for all occupations.”

What is a computer systems analyst?

You've probably heard of many IT careers, but a computer systems analyst may not be one you’re familiar with. Computer systems analysts are responsible for determining how a business' computer system is serving the needs of the company, and what can be done to make those systems and procedures more effective. They work closely with IT managers to determine what system upgrades are financially feasible and what technologies are available that could increase the company's efficiency.

Computer systems analysts may also design and develop new systems, train users, and configure hardware and software as necessary. The type of system that analysts work with will largely depend upon the needs of their employer.

How do I become a computer systems analyst?

There aren’t specific certification tests you need to pass to become a computer systems analyst, but you will need a bachelor’s or master’s degree in computer science or similar credentials. There are many places you can turn to in order to earn this bachelor’s or master’s degree, including online universities like Western Governors University. Be aware that this career doesn’t just require computer knowledge. Business knowledge is also extremely beneficial for becoming a computer systems analyst. It’s recommended that you take some business courses, or even pursue an Information Technology MBA, to be most successful. Computer systems analysts need to constantly keep themselves up to date on the latest innovations in technology, and can earn credentials from the Institute for the Certification of Computing Professionals to set themselves apart from their peers.

What experience do I need to become a computer systems analyst?