Math Is Fun Forum


#1451 2022-07-25 13:54:57

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,980

Re: Miscellany

1424) Hurdling

Summary

Hurdling is the act of jumping over an obstacle at a high speed or in a sprint. In the early 19th century, hurdlers ran at and jumped over each hurdle (sometimes known as 'burgles'), landing on both feet and checking their forward motion. Today, the dominant step patterns are the 3-step for high hurdles, 7-step for low hurdles, and 15-step for intermediate hurdles. Hurdling is a highly specialized form of obstacle racing, and is part of the sport of athletics. In hurdling events, barriers known as hurdles are set at precisely measured heights and distances. Each athlete must pass over the hurdles; passing under or intentionally knocking over hurdles will result in disqualification.

Accidental knocking over of hurdles is not cause for disqualification, but the hurdles are weighted to make doing so disadvantageous. In 1902 the Spalding equipment company sold the Foster Patent Safety Hurdle, a wood hurdle. In 1923 some of the wood hurdles weighed 16 lb (7.3 kg) each. Hurdle design improved in 1935 with the development of the L-shaped hurdle. With this shape, a hurdle that is hit tips down, clearing the athlete's path. The most prominent hurdles events are 110 meters hurdles for men, 100 meters hurdles for women, and 400 meters hurdles (both sexes) – these three distances are all contested at the Summer Olympics and the World Athletics Championships. The two shorter distances take place on the straight of a running track, while the 400 m version covers one whole lap of a standard oval track. Events over shorter distances are also commonly held at indoor track and field events, ranging from 50 meters hurdles upwards. Women historically competed in the 80 meters hurdles at the Olympics in the mid-20th century. Hurdles races are also part of combined events contests, including the decathlon and heptathlon.

In track races, hurdles are normally 68–107 cm (27–42 in) in height, depending on the age and sex of the hurdler. Events from 50 to 110 meters are technically known as high hurdles races, while longer competitions are low hurdles races. The track hurdles events are forms of sprinting competitions, although the 400 m version is less anaerobic in nature and demands athletic qualities similar to the 800 meters flat race.

A hurdling technique can also be found in the steeplechase, although in this event athletes are also permitted to step on the barrier to clear it. Similarly, in cross country running athletes may hurdle over various natural obstacles, such as logs, mounds of earth, and small streams – this represents the sporting origin of the modern events. Horse racing has its own variant of hurdle racing, with similar principles.

Details

Hurdling is a sport in athletics (track and field) in which a runner races over a series of obstacles called hurdles, which are set a fixed distance apart. Runners must remain in assigned lanes throughout a race, and, although they may knock hurdles down while running over them, a runner who trails a foot or leg alongside a hurdle or knocks it down with a hand is disqualified. The first hurdler to complete the course is the winner.

Hurdling probably originated in England in the early 19th century, where such races were held at Eton College about 1837. In those days hurdlers merely ran at and jumped over each hurdle in turn, landing on both feet and checking their forward motion. Experimentation with numbers of steps between hurdles led to a conventional step pattern for hurdlers—3 steps between each high hurdle, 7 between each low hurdle, and usually 15 between each intermediate hurdle. Further refinements were made by A.C.M. Croome of Oxford University about 1885, when he went over the hurdle with one leg extended straight ahead at the same time giving a forward lunge of the trunk, the basis of modern hurdling technique.

A major improvement in hurdle design was the invention in 1935 of the L-shaped hurdle, replacing the heavier, inverted-T design. In the L-shaped design and its refinement, the curved-L, or rocker hurdle, the base-leg of the L points toward the approaching hurdler. When upset, the hurdle tips down, out of the athlete’s path, instead of tipping up and over as did the inverted-T design.

Modern hurdlers use a sprinting style between hurdles and a double-arm forward thrust and exaggerated forward lean while clearing the hurdle. They then bring the trailing leg through at nearly a right angle to the body, which enables them to continue forward without breaking stride after clearing the hurdle.

Under rules of the International Association of Athletics Federations (IAAF), the world governing body of track-and-field athletics, the standard hurdling distances for men are 110, 200, and 400 metres (120, 220, and 440 yards, respectively). Men’s Olympic distances are 110 metres and 400 metres; the 200-metre race was held only at the 1900 and 1904 Games. The 110-metre race includes 10 high hurdles (1.067 metres [42 inches] high), spaced 9.14 metres (10 yards) apart. The 400-metre race is over 10 intermediate hurdles (91.4 cm [36 inches] high) spaced 35 metres (38.3 yards) apart. The 200-metre race, run occasionally, has 10 low hurdles (76.2 cm [30 inches] high) spaced 18.29 metres (20 yards) apart. Distances and specifications vary somewhat for indoor and scholastic events.
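The spacing figures above also fix how much of each race lies outside the gaps between hurdles. The following is a minimal sketch of that arithmetic, using only the distances, hurdle counts and spacings quoted in the paragraph; the names RACES and remaining_distance are made up for illustration.

```python
# Specifications quoted in the text:
# (race distance in metres, number of hurdles, spacing between hurdles in metres)
RACES = {
    "110 m high hurdles": (110.0, 10, 9.14),
    "400 m intermediate hurdles": (400.0, 10, 35.0),
    "200 m low hurdles": (200.0, 10, 18.29),
}


def remaining_distance(total_m, hurdles, spacing_m):
    """Distance not covered by the gaps between hurdles.

    With n hurdles there are n - 1 gaps, so what is left over is the
    approach to the first hurdle plus the run-in after the last one.
    """
    return total_m - (hurdles - 1) * spacing_m


for name, spec in RACES.items():
    print(f"{name}: {remaining_distance(*spec):.2f} m outside the hurdle gaps")
```

How that remainder is divided between the approach and the run-in is not stated in the text, so the sketch reports only the combined figure.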

The women’s international distance formerly was 80 metres over 8 hurdles 76.2 cm high. In 1966 the IAAF approved two new hurdle races for women: 100 metres over 10 hurdles 84 cm (33.1 inches) high, to replace the 80-metre event in the 1972 Olympics; and 200 metres (supplanted in 1976 by 400 metres) over 10 hurdles 76.2 cm high.


It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1452 2022-07-26 13:59:16

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,980

Re: Miscellany

1425) Hammer Throw

Summary

The hammer throw is one of the four throwing events in regular track and field competitions, along with the discus throw, shot put and javelin.

The "hammer" used in this sport is not like any of the tools also called by that name. It consists of a metal ball attached by a steel wire to a grip. The size of the ball varies between men's and women's competitions.

Competition

The men's hammer weighs 16 pounds (7.26 kg) and measures 3 feet 11+3⁄4 inches (121.3 cm) in length, and the women's hammer weighs 4 kg (8.82 lb) and measures 3 ft 11 in (119.4 cm) in length. Like the other throwing events, the competition is decided by who can throw the implement the farthest.

Although the hammer throw is commonly thought of as a strength event, technical advancements in the last 30 years have shifted the focus of competition toward speed in order to gain maximum distance.

The throwing motion starts with the thrower swinging the hammer back-and-forth about two times to generate momentum. The thrower then makes three, four or (rarely) five full rotations using a complex heel-toe foot movement, spinning the hammer in a circular path and increasing its angular velocity with each rotation. The hammer is not spun horizontally; instead it travels in a plane that angles up towards the direction in which it will be launched. The thrower releases the hammer as its velocity is upward and toward the target.

Throws are made from a throwing circle. The thrower is not allowed to step outside the throwing circle before the hammer has landed and may only enter and exit from the rear of the throwing circle. The hammer must land within a 34.92° throwing sector that is centered on the throwing circle. The sector angle was chosen because it provides a sector whose bounds are easy to measure and lay out on a field (10 metres out from the center of the ring, 6 metres across). A violation of the rules results in a foul and the throw not being counted.
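The "10 metres out, 6 metres across" layout can be checked with a little trigonometry. The sketch below assumes the 6 metres is the straight-line distance between two points that each lie 10 metres from the centre along the two sector lines (an interpretation, not something the text spells out); the function name sector_angle_deg is made up for illustration.

```python
import math


def sector_angle_deg(radius_m, chord_m):
    """Angle at the centre subtended by a chord of length chord_m whose
    two end points each lie radius_m from the centre."""
    # Half the chord and the radius form a right triangle with half the angle.
    return math.degrees(2 * math.asin((chord_m / 2) / radius_m))


angle = sector_angle_deg(10.0, 6.0)
print(f"{angle:.2f} degrees")  # prints "34.92 degrees"
```

Under that assumption the layout reproduces the 34.92° figure, which is why the sector can be marked out with nothing more than a tape measure.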

As of 2015 the men's hammer world record is held by Yuriy Sedykh, who threw 86.74 m (284 ft 6+3⁄4 in) at the 1986 European Athletics Championships in Stuttgart, West Germany on 30 August. The world record for the women's hammer is held by Anita Włodarczyk, who threw 82.98 m (272 ft 2+3⁄4 in) during the Kamila Skolimowska Memorial on 28 August 2016.

Details

Hammer throw is a sport in athletics (track and field) in which a hammer is hurled for distance, using two hands within a throwing circle.

The sport developed centuries ago in the British Isles. Legends trace it to the Tailteann Games held in Ireland about 2000 BCE, when the Celtic hero Cú Chulainn gripped a chariot wheel by its axle, whirled it around his head, and threw it farther than did any other individual. Wheel hurling was later replaced by throwing a boulder attached to the end of a wooden handle. Forms of hammer throwing were practiced among the ancient Teutonic tribes at religious festivals honouring the god Thor, and sledgehammer throwing was practiced in 15th- and 16th-century Scotland and England.

Since 1866 the hammer throw has been a regular part of track-and-field competitions in Ireland, Scotland, and England. The English standardized the event in 1875 by establishing the weight of the hammer at 7.26 kg (16 pounds) and its length at 1,067.5 mm (later changed to a maximum 1,175 mm [46.3 inches]) and by requiring that it be thrown from a circle 2.135 metres (7 feet) in diameter.

The men’s event has been included in the Olympic Games since 1900; the women’s hammer throw made its Olympic debut in 2000. Early hammers had forged-iron heads and wooden handles, but the International Association of Athletics Federations (IAAF) now requires use of a wire-handled spherical weight. The ball is of solid iron or other metal not softer than brass or is a shell of such metal filled with lead or other material. The handle is spring steel wire, with one end attached to the ball by a plain or ball-bearing swivel and the other to a rigid two-hand grip by a loop. The throwing circle is protected by a C-shaped cage for the safety of officials and onlookers.

In the modern hammer throw technique, a thrower makes three full, quick turns of the body before flinging the weight. Strength, balance, and proper timing are essential. The throw is a failure if the athlete steps on or outside the circle or if the hammer lands outside the sector marked on the field from the centre of the circle (40° under older rules; 34.92° under current rules).

How it works

In this throwing event, athletes throw a metal ball (16lb/7.26kg for men, 4kg/8.8lb for women) that is attached to a grip by a steel wire no longer than 1.22m, while remaining inside a seven-foot (2.135m) diameter circle.

In order for the throw to be measured, the ball must land inside a marked 35-degree sector and the athlete must not leave the circle before it has landed, and then only from the rear half of the circle.

The thrower usually makes three or four spins before releasing the ball. Athletes will commonly throw six times per competition. In the event of a tie, the winner will be the athlete with the next-best effort.

Quality hammer throwers require speed, strength, explosive power and co-ordination. At major championships the format is typically a qualification session followed by a final.

History

Legend traces the concept of the hammer throw to approximately 2000 BC and the Tailteann Games in Tara, Ireland, where the Celtic warrior Cú Chulainn gripped a chariot wheel by its axle, whirled it around his head and threw it a huge distance.

The wheel was later replaced by a boulder attached to a wooden handle and the use of a sledgehammer is considered to have originated in England and Scotland during the Middle Ages. A 16th century drawing shows the English king Henry VIII throwing a blacksmith’s hammer.

The hammer was first contested by men at the 1900 Olympic Games in Paris. Women first contested the hammer at the Olympics some 100 years later at the Sydney Games. Hungary has a strong tradition in the men’s hammer and has won gold medals at the 1948, 1952, 1968, 1996 and 2012 Games. Poland has been the dominant force in women’s hammer, winning Olympic titles in 2000, 2012 and 2016.


#1453 2022-07-27 14:12:40

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,980

Re: Miscellany

1426) Shot put

Summary

The shot put is a track and field event involving "putting" (pushing rather than throwing) a heavy spherical ball—the shot—as far as possible. The shot put competition for men has been a part of the modern Olympics since their revival in 1896, and women's competition began in 1948.

Details

Shot put is a sport in athletics (track and field) in which a spherical weight is thrown, or put, from the shoulder for distance. It derives from the ancient sport of putting the stone.

The first to use a shot (cannon ball) instead of a stone competitively were British military sports groups. Although the weight varied in early events from 3.63 to 10.9 kg (8 to 24 pounds), a standard, regulation-weight 7.26-kg (16-pound) shot was adopted for men in the first modern Olympic Games (1896) and in international competition. The event was added to the women’s Olympic program in 1948. The weight of the shot used for women’s competition is 4 kg (8.8 pounds); lighter weights are also used in school, collegiate, and veteran competitions.

The shot generally is made of solid iron or brass, although any metal not softer than brass may be used. It is put from a circle 2.135 metres (7 feet) in diameter into a sector measured from the centre of the circle (40° under older rules; 34.92° under current rules). The circle has a stop board 10 cm (4 inches) high at its front; if the competitor steps on or out of the circle, the throw is invalidated. The shot is put with one hand and must be held near the chin to start. It may not drop below or behind shoulder level at any time.

Constant improvements in technique have more than doubled record distances. The International Association of Athletics Federations (IAAF) recognizes the first official world record as 9.44 metres (31 feet) by J.M. Mann of the United States in 1876. It had long been conventional to start from a position facing at a right angle to the direction of the put. In the 1950s, however, American Parry O’Brien developed a style of beginning from a position facing backward. Thus he brought the shot around 180°, rather than the usual 90°, and found that the longer he pushed the shot, the farther it would travel. By 1956 O’Brien had doubled Mann’s record with a put of 19.06 metres (62.5 feet), and with this success, his style was almost universally imitated. By 1965 American Randy Matson had pushed the record beyond 21 metres (68 feet); later athletes extended the world mark to more than 23 metres (75 feet), many using a technique in which the putter spins with the shot for more than 360°.

Additional Information

How it works

The shot put is one of the four traditional throwing events in track and field. The shot, a metal ball (7.26kg/16lb for men, 4kg/8.8lb for women), is put – not thrown – with one hand. The aim is to put it as far as possible from a seven-foot diameter (2.135m) circle that has a curved 10-centimetre high toe-board at the front.

In order for the put to be measured, the shot must not drop below the line of the athlete’s shoulders at any stage of the put and must land inside a designated 35-degree sector. The athlete, meanwhile, must not touch the top of the toe-board during their put or leave the circle before the ball has landed, and then only from the rear half of the circle. The results order is determined by distance.

Athletes will commonly throw six times per competition. In the event of a tie, the winner will be the athlete with the next-best effort. A shot putter requires strength, speed, balance and explosive power. At major championships the format is typically a qualification session followed by a final.

History

The Ancient Greeks threw stones as a sport and soldiers are recorded as throwing cannon balls in the Middle Ages, but a version of the modern form of the discipline can be traced to the Highland Games in Scotland during the 19th century where competitors threw a rounded cube, stone or metal weight from behind a line.

The men’s shot put has been part of every modern Olympics since 1896, but women putters had to wait until 1948 before they could compete at the Games.

The US are the most successful shot nation in Olympic history and grabbed gold at every men’s shot competition from 1896 through to 1968 - except two. Poland’s Tomasz Majewski became the third man in Olympic shot history to win back-to-back titles, achieving the feat in 2008 and 2012. Valerie Adams is one of the leading women’s performers in shot history. The New Zealander claimed successive Olympic titles in 2008 and 2012 and snared a silver medal at the 2016 Rio Games.


#1454 2022-07-28 14:21:41

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,980

Re: Miscellany

1427) Pole vault

Summary

Pole vaulting, also known as pole jumping, is a track and field event in which an athlete uses a long and flexible pole, usually made from fiberglass or carbon fiber, as an aid to jump over a bar. Pole jumping competitions were known to the Mycenaean Greeks, Minoan Greeks and Celts. It has been a full medal event at the Olympic Games since 1896 for men and since 2000 for women.

It is typically classified as one of the four major jumping events in athletics, alongside the high jump, long jump and triple jump. It is unusual among track and field sports in that it requires a significant amount of specialised equipment in order to participate, even at a basic level. A number of elite pole vaulters have had backgrounds in gymnastics, including world record breakers Yelena Isinbayeva and Brian Sternberg, reflecting the similar physical attributes required for the sports. Running speed, however, may be the most dominant factor. Physical attributes such as speed, agility and strength are essential to pole vaulting effectively, but technical skill is an equally if not more important element. The object of pole vaulting is to clear a bar or crossbar supported upon two uprights (standards) without knocking it down.

Details

Pole vault is a sport in athletics (track and field) in which an athlete jumps over an obstacle with the aid of a pole. Originally a practical means of clearing objects, such as ditches, brooks, and fences, pole-vaulting for height became a competitive sport in the mid-19th century. It has been an Olympic event for men since the first modern Games in 1896; a pole-vault event for women was added for the 2000 Olympics in Sydney, Australia.

In competition, each vaulter is given three chances to clear a specified height. A bar rests on two uprights so that it will fall easily if touched. It is raised progressively until a winner emerges by process of elimination. Ties are broken by a “count back” based on fewest failures at the final height, fewest failures in the whole contest, or fewest attempts throughout the contest. The pole may be of any material: bamboo poles, introduced in 1904, quickly became more popular than heavier wooden poles; glass fibre became the most effective and popular by the early 1960s. The poles may be of any length or diameter.

A slideway, or box, is sunk into the ground with its back placed directly below the crossbar. The vaulter thrusts the pole into this box upon leaving the ground. A pit at least 5 metres (16.4 feet) square and filled with soft, cushioning material is provided behind the crossbar for the landing.

Requirements of the athlete include a high degree of coordination, timing, speed, and gymnastic ability. The modern vaulter makes a run of 40 metres (131.2 feet) while carrying the pole and approaches the takeoff with great speed. As the stride before the spring is completed, the vaulter performs the shift, which consists in advancing the pole toward the slideway and at the same time allowing the lower hand to slip up the pole until it reaches the upper hand, then raising both hands as high above the head as possible before leaving the ground. The vaulter is thus able to exert the full pulling power of both arms to raise the body and help swing up the legs.

The vaulter plants the pole firmly in the box, and, running off the ground (rather than jumping), the vaulter’s body is left hanging by the hands as long as possible; the quick, catapulting action of the glass-fibre pole makes timing especially important. The legs swing upward and to the side of the pole, and then shoot high above the crossbar. The body twists to face downward. The vaulter’s body travels across the crossbar by “carry”—the forward speed acquired from the run.

Additional Information

How it works

A field event and one of the two vertical jumps (the other being high jump), the pole vault is the sport's high-adrenaline discipline.

Competitors vault over a 4.5-metre long horizontal bar by sprinting along a runway and jamming a pole (usually made out of carbon fibre or fibreglass) against a ‘stop board’ at the back of a recessed metal ‘box’ sited centrally at the base of the uprights. They seek to clear the greatest height without knocking the bar to the ground.

All competitors have three attempts per height, although they can elect to ‘pass’, i.e. advance to a greater height despite not having cleared the current one. Three consecutive failures at the same height, or combination of heights, cause a competitor’s elimination.

If competitors are tied on the same height, the winner will have had the fewest failures at that height. If competitors are still tied, the winner will have had the fewest failures across the entire competition. Thereafter, a jump-off will decide the winner. Each jumper has one attempt and the bar is lowered and raised until one jumper succeeds at one height.
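The count-back described above amounts to comparing competitors on three values in order. The following is a rough sketch of that comparison, not an official scoring routine; the Vaulter record, its field names and the sample figures in the usage note are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Vaulter:
    name: str
    best_height: float       # highest height cleared, in metres
    failures_at_best: int    # failed attempts at that height
    total_failures: int      # failed attempts across the whole competition


def ranking_key(v):
    # Higher clearance first, then fewer failures at that height,
    # then fewer failures across the competition.
    return (-v.best_height, v.failures_at_best, v.total_failures)


def rank(vaulters):
    """Return the vaulters ordered from best to worst by count-back."""
    return sorted(vaulters, key=ranking_key)


def needs_jump_off(a, b):
    """True when the count-back cannot separate the two competitors."""
    return ranking_key(a) == ranking_key(b)
```

For example, rank([Vaulter("A", 5.80, 0, 2), Vaulter("B", 5.80, 1, 1)]) places A first, because A had fewer failures at 5.80 m even though B had fewer failures overall. The jump-off itself is not modelled here.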

The event demands speed, power, strength, agility and gymnastic skills. At major championships the format is usually a qualification competition followed by a final.

History

Pole vaulting, originally for distance, dates back to at least the 16th century and there is also evidence it was even practised in Ancient Greece. The origins of modern vaulting can be traced back to Germany in the 1850s, when the sport was adopted by a gymnastic association, and in the Lake District region of England, where contests were held with ash or hickory poles with iron spikes in the end.

The first recorded use of bamboo poles was in 1857. The top vaulters started using steel poles in the 1940s. Flexible fibreglass and later carbon fibre poles started to be widely used in the late 1950s.

Men’s pole vault has featured at every modern Olympic Games with the US winning every Olympic title from 1896 to 1968 (if we discount the 1906 intercalated Games). Bob Richards (1952 and 1956) is the only man in history to win two Olympic pole vault titles. Women only made their Olympic pole vault debut in 2000 when American Stacy Dragila struck gold.


#1455 2022-07-29 14:08:29

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,980

Re: Miscellany

1428) High jump

Summary

The high jump is a track and field event in which competitors must jump unaided over a horizontal bar placed at measured heights without dislodging it. In its modern, most-practiced format, a bar is placed between two standards with a crash mat for landing. Since ancient times, competitors have introduced increasingly effective techniques to arrive at the current form, and the current universally preferred method is the Fosbury Flop, in which athletes run towards the bar and leap head first with their back to the bar.

The discipline is, alongside the pole vault, one of two vertical clearance events in the Olympic athletics program. It is contested at the World Championships in Athletics and the World Athletics Indoor Championships, and is a common occurrence at track and field meets. The high jump was among the first events deemed acceptable for women, having been held at the 1928 Olympic Games.

Javier Sotomayor (Cuba) is the current men's record holder with a jump of 2.45 m (8 ft 1⁄4 in) set in 1993 – the longest-standing record in the history of the men's high jump. Stefka Kostadinova (Bulgaria) has held the women's world record at 2.09 m (6 ft 10+1⁄4 in) since 1987, also the longest-held record in the event.

Details

High jump is a sport in athletics (track and field) in which the athlete takes a running jump to attain height. The sport’s venue includes a level, semicircular runway allowing an approach run of at least 15 metres (49.21 feet) from any angle within its 180° arc. Two rigid vertical uprights support a light horizontal crossbar in such a manner that it will fall if touched by a contestant trying to jump over it. The jumper lands in a pit beyond the bar that is at least 5 by 3 metres (16.4 feet by 9.8 feet) in size and filled with cushioning material. The standing high jump was last an event in the 1912 Olympics. The running high jump, an Olympic event for men since 1896, was included in the first women’s Olympic athletics program in 1928.

The only formal requirement of the high jumper is that the takeoff of the jump be from one foot. Many styles have evolved, including the now little-used scissors, or Eastern, method, in which the jumper clears the bar in a nearly upright position; the Western roll and straddle, with the jumper’s body face-down and parallel to the bar at the height of the jump; and a more recent backward-twisting, diving style often termed the Fosbury flop, after its first prominent exponent, the 1968 American Olympic champion Dickinson Fosbury.

In competition the bar is raised progressively as contestants succeed in clearing it. Entrants may begin jumping at any height above a required minimum. Knocking the bar off its supports constitutes a failed attempt, and three failures at a given height disqualify the contestant from the competition. Each jumper’s best leap is credited in the final standings. In the case of ties, the winner is the one with the fewest misses at the final height, or in the whole competition, or with the fewest total jumps in the competition.

Additional Information

How it works

The high jump is one of two field events also referred to as vertical jumps. Competitors take off (unaided) from one foot and jump over a four-metre long horizontal bar. They seek to clear the greatest height without knocking the bar to the ground. Athletes land on a crash mat.

All competitors have three attempts per height, although they can elect to ‘pass’, i.e. advance to a greater height despite not having cleared the current one. Three consecutive failures at the same height, or combination of heights, lead to elimination.
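The elimination rule can be sketched as a running count of failures that resets on a clearance and carries over when the jumper passes to a greater height. This is an illustrative sketch only: it assumes a jumper's attempts are written as one string using the marks "O" (clearance), "X" (failure) and "-" (pass), and the function name is_eliminated is made up.

```python
def is_eliminated(marks):
    """Return True once three consecutive failures have occurred.

    marks is the jumper's attempts in order, across all heights:
    "O" = clearance, "X" = failure, "-" = pass to a greater height.
    """
    consecutive_failures = 0
    for mark in marks:
        if mark == "O":
            consecutive_failures = 0      # a clearance wipes the slate clean
        elif mark == "X":
            consecutive_failures += 1
            if consecutive_failures == 3:
                return True
        elif mark != "-":                 # a pass leaves the count unchanged
            raise ValueError(f"unknown mark: {mark!r}")
    return False
```

So a jumper who fails twice, passes, and then fails once at the next height ("XX-X") is out, while one who clears on the third attempt and then fails twice ("XXOXX") is still in the competition.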

If competitors are tied on the same height, the winner is the one with fewest failures at that height. If competitors are still tied, the winner will have had the fewest failures across the entire competition. Thereafter, a jump-off will decide the winner. The jump-off will start at the next greater height. Each jumper has one attempt and the bar is lowered and raised until one jumper succeeds at one height.

The event demands speed, explosive power and agility among other qualities. At major championships the format is usually a qualification competition followed by a final.

History

High jump contests were popular in Scotland in the early 19th century, and the event was incorporated into the first modern Olympic Games in 1896 for men. Women made their Olympic high jump debut in 1928.

Of the field events, the high jump has perhaps undergone the most radical changes of technique. The Eastern Cut-off, Western Roll and Straddle are methods that have been previously used by the world’s elite. However, the Fosbury Flop, which involves going over with the jumper's back to the bar, popularised by the 1968 Olympic champion Dickinson Fosbury, is now almost exclusively the technique adopted by all the top high jumpers.

Javier Sotomayor won Olympic gold at the 1992 Barcelona Olympics. The Cuban great set the current high jump world record of 2.45m in 1993 and is the first man in history to jump over 8ft. One of the greatest women’s high jumpers in history is Iolanda Balas. The Romanian great won back-to-back Olympic titles in 1960 and 1964 and went 11 years unbeaten in her event.


#1456 2022-07-30 14:34:23

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,980

Re: Miscellany

1429) Long Jump

Summary

Long jump, also called broad jump, is a sport in athletics (track-and-field) consisting of a horizontal jump for distance. It was formerly performed from both standing and running starts, as separate events, but the standing long jump is no longer included in major competitions. It was discontinued from the Olympic Games after 1912. The running long jump was an event in the Olympic Games of 708 BCE and in the modern Games from 1896.

The standard venue for the long jump includes a runway at least 40 metres (131 feet) in length with no outer limit, a takeoff board planted level with the surface at least 1 metre (3.3 feet) from the end of the runway, and a sand-filled landing area at least 2.75 metres (9 feet) and no more than 3 metres (9.8 feet) wide.

The jumper usually begins his approach run about 30 metres (100 feet) from the takeoff board and accelerates to reach maximum speed at takeoff while gauging his stride to arrive with one foot on and as near as possible to the edge of the board. If a contestant steps beyond the edge (scratch line), his jump is disallowed; if he leaps from too far behind the line, he loses valuable distance.

The most commonly used techniques in flight are the tuck, in which the knees are brought up toward the chest, and the hitch kick, which is in effect a continuation of the run in the air. The legs are brought together for landing, and, since the length of the jump is measured from the edge of the takeoff board to the nearest mark in the landing area surface made by any part of the body, the jumper attempts to fall forward.

In international competition the eight contestants who make the longest jumps in three preliminary attempts qualify to make three final attempts. The winner is the one who makes the single longest jump over the course of the preliminary and final rounds. In 1935 Jesse Owens of the United States set a record of 8.13 metres (26.6 feet) that was not broken until 1960. Similarly, American Bob Beamon held the long jump record of 8.90 metres (29.2 feet) from 1968 until 1991, when it was broken by American Mike Powell, who leapt 8.95 metres (29.4 feet). Beginning in 1948, the women’s long jump has been an Olympic event.

Details

The long jump is a track and field event in which athletes combine speed, strength and agility in an attempt to leap as far as possible from a takeoff point. Together with the triple jump, it forms the group of distance-jumping events referred to as the "horizontal jumps". This event has a history in the ancient Olympic Games and has been a modern Olympic event for men since the first Olympics in 1896 and for women since 1948.

Rules

At the elite level, competitors run down a runway (usually coated with the same rubberized surface as running tracks, crumb rubber or vulcanized rubber, known generally as an all-weather track) and jump as far as they can from a wooden or synthetic board 20 cm (8 in) wide, built flush with the runway, into a pit filled with soft damp sand. If the competitor starts the leap with any part of the foot past the foul line, the jump is declared a foul and no distance is recorded. A layer of plasticine is placed immediately after the board to detect this occurrence. An official (similar to a referee) will also watch the jump and make the determination. The competitor can initiate the jump from any point behind the foul line; however, the distance is always measured perpendicular to the foul line, to the nearest break in the sand caused by any part of the body or uniform. Therefore, it is in the best interest of the competitor to get as close to the foul line as possible. Competitors are allowed to place two marks along the side of the runway to help them jump accurately. At lesser meets and facilities, the plasticine will likely not be present, the runway might have a different surface, or jumpers may initiate their jump from a painted or taped mark on the runway. At a smaller meet, the number of attempts might also be limited to four or three.
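The measuring rule described above can be sketched in a few lines of Python. This is only an illustration, not an official procedure: the function name `measured_distance` and the coordinate convention (each mark in the sand given as a pair of perpendicular distance from the foul line and sideways offset, both in metres) are assumptions made for the example.

```python
from typing import Iterable, Tuple


def measured_distance(marks: Iterable[Tuple[float, float]]) -> float:
    """Return the recorded distance for one jump.

    Each mark is (perpendicular_m, lateral_m): how far the break in the
    sand lies from the foul line, measured at right angles to it, and how
    far it sits to one side. Only the perpendicular part counts, and the
    break nearest the foul line decides the result.
    """
    perpendicular = [perp for perp, _lateral in marks]
    if not perpendicular:
        raise ValueError("no marks in the sand to measure")
    return min(perpendicular)


# Hypothetical jump: feet land at 7.92 m and 7.85 m, but a hand
# touches the sand behind the athlete at 7.40 m.
jump_marks = [(7.92, 0.10), (7.85, -0.20), (7.40, 0.05)]
recorded = measured_distance(jump_marks)
```

Because the nearest break decides the result, the hand touching down behind the feet costs this hypothetical jumper about half a metre, which is why jumpers try to fall forward on landing.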

Each competitor has a set number of attempts. This is normally three trials, with three additional jumps awarded to the best 8 or 9 competitors (the number depends on how many lanes the track at that facility has, so that the event is comparable to track events). All valid attempts will be recorded but only the best mark counts towards the results. The competitor with the longest valid jump (from either the trial or final rounds) is declared the winner at the end of competition. In the event of an exact tie, the next best jumps of the tied competitors are compared to determine the placing. In a large, multi-day elite competition (like the Olympics or World Championships), a qualification round is held in order to select at least 12 finalists. Ties and automatic qualifying distances are potential factors. In the final, a set of trial round jumps will be held, with the best 8 performers advancing to the final rounds.
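The placing rule above (best valid mark wins, with an exact tie settled by the next best jumps) can be sketched as a sort. This is a minimal illustration, assuming fouls are recorded as `None` and distances are in metres; the names `ranking_key` and `rank_competitors` and the sample athletes are hypothetical.

```python
from typing import Dict, List, Optional


def ranking_key(attempts: List[Optional[float]]) -> List[float]:
    """Valid marks sorted from longest to shortest.

    Python compares lists element by element, so two competitors with the
    same best mark are separated by their second best, then third, and so on.
    """
    return sorted((a for a in attempts if a is not None), reverse=True)


def rank_competitors(results: Dict[str, List[Optional[float]]]) -> List[str]:
    """Return competitor names ordered from first place downwards."""
    return sorted(results, key=lambda name: ranking_key(results[name]), reverse=True)


# Hypothetical results; None marks a foul.
results = {
    "A": [8.10, None, 8.31],
    "B": [8.31, 8.05, None],
    "C": [7.95, 8.20, 8.00],
}
placing = rank_competitors(results)
```

Here A and B share a best mark of 8.31 m, so A's second-best 8.10 m beats B's 8.05 m and A places first. A competitor with no valid jump gets an empty key and sorts last.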

For record purposes, the maximum accepted wind assistance is two metres per second (m/s) (4.5 mph).

History

The long jump is the only known jumping event in the pentathlon of ancient Greece's original Olympics. All events that occurred at the Olympic Games were initially supposed to act as a form of training for warfare. The long jump probably emerged because it mirrored the crossing of obstacles such as streams and ravines. Surviving depictions of the ancient event suggest that, unlike in the modern event, athletes were only allowed a short running start. The athletes carried a weight of between 1 and 4.5 kg in each hand; these weights were called halteres. They were swung forward as the athlete jumped in order to increase momentum. It was commonly believed that the jumper would throw the weights behind him in midair to increase his forward momentum; however, halteres were held throughout the duration of the jump. Swinging them down and back at the end of the jump would change the athlete's center of gravity and allow the athlete to stretch his legs outward, increasing his distance. The jump itself was made from the bater ("that which is trod upon"). It was most likely a simple board placed on the stadium track which was removed after the event. The jumpers would land in what was called a skamma ("dug-up" area). The idea that this was a pit full of sand is wrong; sand in the jumping pit is a modern invention. The skamma was simply a temporary area dug up for that occasion and not something that remained over time.

The long jump was considered one of the most difficult of the events held at the Games since a great deal of skill was required. Music was often played during the jump and Philostratus says that pipes at times would accompany the jump so as to provide a rhythm for the complex movements of the halteres by the athlete. Philostratus is quoted as saying, "The rules regard jumping as the most difficult of the competitions, and they allow the jumper to be given advantages in rhythm by the use of the flute, and in weight by the use of the halter." Most notable in the ancient sport was a man called Chionis, who in the 656 BC Olympics staged a jump of 7.05 m (23 ft 1½ in).

There has been some argument by modern scholars over the long jump. Some have attempted to recreate it as a triple jump. The images provide the only evidence for the action, so the more widely accepted view is that it was much like today's long jump. The main reason some want to call it a triple jump is the presence of a source that claims a man named Phayllos once made a jump of fifty-five ancient feet.

The long jump has been part of modern Olympic competition since the inception of the Games in 1896. In 1914, Dr. Harry Eaton Stewart recommended the "running broad jump" as a standardized track and field event for women. However, it was not until 1948 that the women's long jump was added to the Olympic athletics programme.

Additional Information

How it works

The long jump is one of track and field’s two horizontal jumps. Competitors sprint along a runway and jump as far as possible into a sandpit from a wooden take-off board. The distance travelled, from the edge of the board to the nearest indentation in the sand, is then measured.

A foul is committed – and the jump is not measured – if an athlete steps beyond the board.

History

The origins of the long jump can be traced to the Olympics in Ancient Greece, when athletes carried weights in each hand. These were swung forward on take-off and released in the middle of the jump in a bid to increase momentum.

The long jump, as we know it today, has been part of the Olympics since the first Games in 1896. The men’s event has seen some long-standing world records by US jumpers. Jesse Owens jumped 8.13m in 1935, a distance that was not exceeded until 1960. Bob Beamon flew out to a world record 8.90m in the rarefied air of Mexico City at the 1968 Olympic Games, a mark that remained until Mike Powell surpassed it with a leap of 8.95m at the 1991 World Championships.

As the winner of four successive Olympic titles, from 1984 to 1996, Carl Lewis is regarded as the greatest male long jumper in history. The inaugural women’s Olympic long jump took place in 1948 and athletes from five different regions have struck gold in the event: Europe, North America, South America, Africa and Oceania.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1457 2022-07-31 13:39:41

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,980

Re: Miscellany

1430) Triple Jump

Summary

The triple jump, sometimes referred to as the hop, step and jump or the hop, skip and jump, is a track and field event, similar to the long jump. As a group, the two events are referred to as the "horizontal jumps". The competitor runs down the track and performs a hop, a bound and then a jump into the sand pit. The triple jump was inspired by the ancient Olympic Games and has been a modern Olympics event since the Games' inception in 1896.

According to World Athletics rules, "the hop shall be made so that an athlete lands first on the same foot as that from which he has taken off; in the step he shall land on the other foot, from which, subsequently, the jump is performed."
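The foot sequence in the quoted rule can be expressed as a small check. This is only a sketch of the rule as stated in the quotation; the function name `is_valid_foot_sequence` and the "left"/"right" labels are assumptions made for the example.

```python
def is_valid_foot_sequence(takeoff_foot: str, hop_landing: str, step_landing: str) -> bool:
    """Check the hop and step landings against the quoted rule.

    The hop must land on the same foot the athlete took off from, and the
    step must land on the other foot. The final jump may land in any manner,
    so it is not checked here.
    """
    feet = {"left", "right"}
    if not {takeoff_foot, hop_landing, step_landing} <= feet:
        raise ValueError("each foot must be 'left' or 'right'")
    return hop_landing == takeoff_foot and step_landing != hop_landing


# Hypothetical attempts
legal = is_valid_foot_sequence("left", "left", "right")    # hop on same foot, step on other
illegal = is_valid_foot_sequence("left", "right", "left")  # hop landed on the wrong foot
```

An athlete taking off from the left foot therefore follows the pattern left, left, right before the final jump into the pit.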

The current male world record holder is Jonathan Edwards of the United Kingdom, with a jump of 18.29 m (60 ft 0 in). The current female world record holder is Yulimar Rojas of Venezuela, with a jump of 15.74 m (51 ft 7½ in).

Details

Triple jump, also called hop, step, and jump, is an event in athletics (track and field) in which an athlete makes a horizontal jump for distance incorporating three distinct, continuous movements—a hop, in which the athlete takes off and lands on the same foot; a step, landing on the other foot; and a jump, landing in any manner, usually with both feet together. If a jumper touches ground with a wrong leg, the jump is disallowed. Other rules are similar to those of the long jump.

The origins of the triple jump are obscure, but it may be related to the ancient children’s game hopscotch. It has been a modern Olympic event since the first Games in 1896; at those Games two hops were used, but one hop was used at the Olympics thereafter. (The standing triple jump was contested only in the 1900 and 1904 Olympics.)

Equipment needed for the triple jump includes a runway and a takeoff board identical to those used in the long jump, except that the board is at least 13 metres (42.7 feet) from the landing area for men and 11 metres (36 feet) for women.

Additional Information

How it works

The triple jump is one of the two horizontal jump events on the track and field programme. Competitors sprint along a runway before taking off from a wooden board. The take-off foot absorbs the first landing, the hop. The next phase, the step, is finished on the opposite foot and then followed by a jump into a sandpit. The distance travelled, from the edge of the board to the nearest indentation in the sand, is then measured.

A foul is committed – and the jump is not measured – if an athlete steps beyond the board. The order of the field is determined by distance jumped.

Most championship competitions involve six jumps per competitor, although the competitors with the shorter marks are often eliminated after three jumps. If competitors are tied, the athlete with the next best distance is declared the winner.

The event requires speed, explosive power, strength and flexibility. At major championships the format is usually a qualification session followed by a final.

History

At the inaugural modern Olympic Games in 1896, the event consisted of two hops and a jump but the format of a hop, a skip, a jump – hence its alternative name which was still in common usage until recently – was standardised in 1908.

Viktor Saneyev of the Soviet Union won a hat-trick of Olympic men’s triple jump titles from 1968 to 1976. Christian Taylor of the US won back-to-back Olympic titles in 2012 and 2016. When Great Britain’s Jonathan Edwards set the world record of 18.29m to win gold at the 1995 IAAF World Championships, he jumped a distance in excess of the width of a football penalty box.

The first Olympic women’s triple jump competition took place in 1996. In 2004 Francoise Mbango struck gold to become the first female athlete from Cameroon to win an Olympic medal. Four years later at the Beijing Games she successfully retained her title.


#1458 2022-08-01 14:27:28

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,980

Re: Miscellany

1431) Kalambo Falls

Summary

The Kalambo Falls on the Kalambo River is a 772-foot (235 m) single-drop waterfall on the border of Zambia and Rukwa Region, Tanzania, at the southeast end of Lake Tanganyika. The falls are some of the tallest uninterrupted falls in Africa (after South Africa's Tugela Falls, Ethiopia's Jin Bahir Falls and others). Downstream of the falls is the Kalambo Gorge, which has a width of about 1 km and a depth of up to 300 m, running for about 5 km before opening out into the Lake Tanganyika rift valley. The Kalambo waterfall is the tallest waterfall in both Tanzania and Zambia. The expedition that mapped the falls and the surrounding area took place in 1928 and was led by Enid Gordon-Gallien.[1] Initially it was assumed that the height of the falls exceeded 300 m, but measurements in the 1920s gave a more modest result, above 200 m. Later measurements, in 1956, gave a result of 221 m. Since then, several more measurements have been made, each with slightly different results. The width of the falls is 3.6–18 m.

Kalambo Falls is also considered one of the most important archaeological sites in Africa, with occupation spanning over 250,000 years.

Details

Kalambo Falls is said to be Africa's second tallest free-leaping or single-drop waterfall (second to one of the tiers of Tugela Falls in South Africa) at 221 m.

The Kalambo River defines the Tanzania-Zambia border all the way into the vast Lake Tanganyika, which is itself shared by four countries (the Democratic Republic of the Congo, Burundi, Zambia, and Tanzania).

The waterfall is in high flow in the May/June timeframe. But this depends on how much rainfall the region gets during its rainy season from January through April. The flow diminishes as the year progresses. Some of the locals we’ve spoken to said that around October or November, the falls probably won’t look impressive.

Though few visitors realise it, the Kalambo Falls are also one of the most important archaeological sites in southern Africa. Just above the falls, by the side of the river, is a site that appears to have been occupied throughout much of the Stone Age and early Iron Age. The earliest tools and other remains discovered there may be over 300,000 years old, including evidence for the use of fire.

For years Kalambo provided the earliest evidence of fire in sub-Saharan Africa – charred logs, ash and charcoal have been discovered amongst the lowest levels of remains. This was a tremendously important step for Stone-Age man as it enabled him to keep warm and cook food, as well as use fire to scare off aggressive animals. Burning areas of grass may even have helped him to hunt. However, more recent excavations of older sites in Africa have discovered evidence of the use of fire before the time when we believe that this site at Kalambo was occupied.


#1459 2022-08-02 14:57:38

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,980

Re: Miscellany

1432) Coma

Summary

A coma is a deep state of prolonged unconsciousness in which a person cannot be awakened, fails to respond normally to painful stimuli, light, or sound, lacks a normal wake-sleep cycle and does not initiate voluntary actions. Coma patients exhibit a complete absence of wakefulness and are unable to consciously feel, speak or move. Comas can result from natural causes, or can be medically induced.

Clinically, a coma can be defined as the inability to consistently follow a one-step command. It can also be defined as a score of ≤ 8 on the Glasgow Coma Scale (GCS) lasting ≥ 6 hours. For a patient to maintain consciousness, the components of wakefulness and awareness must be maintained. Wakefulness describes the quantitative degree of consciousness, whereas awareness relates to the qualitative aspects of the functions mediated by the cortex, including cognitive abilities such as attention, sensory perception, explicit memory, language, the execution of tasks, temporal and spatial orientation and reality judgment. From a neurological perspective, consciousness is maintained by the activation of the cerebral cortex (the gray matter that forms the outer layer of the brain) and by the reticular activating system (RAS), a structure located within the brainstem.

Details

Coma is a state of unconsciousness, characterized by loss of reaction to external stimuli and absence of spontaneous nervous activity, usually associated with injury to the cerebrum. Coma may accompany a number of metabolic disorders or physical injuries to the brain from disease or trauma.

Different patterns of coma depend on the origin of the injury. Concussions may cause losses of consciousness of short duration; in contrast, lack of oxygen (anoxia) may result in a coma that lasts for several weeks and is often fatal. Stroke, a rupture or blockage of vessels supplying blood to the brain, can cause sudden loss of consciousness in some patients, while comas caused by metabolic abnormalities or cerebral tumours are characterized by a more gradual onset, with stages of lethargy and stupor before true coma. Metabolic comas are also more likely to have associated brain seizures and usually leave pupillary light reflexes intact, whereas comas with physical causes usually eradicate this reflex.

Common causes of metabolic coma include diabetes, excessive consumption of alcohol, and barbiturate poisoning. In diabetes, low insulin levels allow the buildup of ketones, breakdown products of fat tissue that destroy the osmotic balance in the brain, damaging brain cells. Ingestion of large quantities of alcohol over a short period can cause a coma that may be treated by gastric lavage (stomach pump) in its early stages; alcohol combined with barbiturates is a common cause of coma in suicide attempts. Large doses of barbiturates alone will also produce coma by suppressing cerebral blood flow, thus causing anoxia. Gastric lavage soon after the drug is ingested may remove a sufficient amount of the barbiturate to allow recovery.

For most metabolic comas, the first step in treatment is to protect the brain cells and attempt to eliminate the cause of coma. Assisted ventilation is often necessary. In some psychiatric conditions, such as catatonic schizophrenia, a comalike state may also occur. Electroencephalography (EEG) can be used to detect signs of consciousness in patients who are unresponsive; research suggests that EEG recordings potentially can be used to predict whether a patient will emerge from coma.


#1460 2022-08-03 14:00:52

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,980

Re: Miscellany

1433) Arachnophobia

Summary

Arachnophobia is a specific phobia brought about by the irrational fear of spiders and other arachnids such as scorpions.

Signs and symptoms

People with arachnophobia tend to feel uneasy in any area they believe could harbour spiders or that has visible signs of their presence, such as webs. If an arachnophobe sees a spider, they may not enter the general vicinity until they have overcome the panic attack that is often associated with their phobia. Some people scream, cry, have emotional outbursts, experience trouble breathing, sweat and experience increased heart rates when they come in contact with an area near spiders or their webs. In some extreme cases, even a picture, a toy, or a realistic drawing of a spider can trigger intense fear.

Details

Arachnophobia, or the fear of spiders, is the oldest and most common phobia in Western culture. The word Arachnophobia is derived from the Greek word ‘arachne’, meaning spider. The response to spiders shown by an arachnophobic individual may seem irrational to others and often to the sufferer himself.

Causes of Arachnophobia

Scientists who have studied this fear of spiders explain it to be a result of evolutionary selection. This means that Arachnophobia is an evolutionary response, since spiders, especially the venomous ones, have always been associated with infections and disease. Hence, the fear of spiders triggers a “disgust response” in many individuals.

A study conducted in the UK on 261 adults showed that nearly 32% of the women and 18% of the men in the group felt anxious, nervous or extremely frightened when confronted with a spider (real or in images).

The exact causes of Arachnophobia are different for different people:

* For some people, it is a learned response: they learn to fear spiders after having seen others being fearful.

* An imbalance in brain chemicals may be linked with Arachnophobia.

* The fear of spiders can be a family or cultural trait: many people in Africa are known to fear large spiders whereas in South Africa, where they eat spiders, most are unafraid.

* Traumatic experience in the past involving spiders is another reason for Arachnophobia.

Symptoms of Arachnophobia

Initial symptoms of arachnophobia or the fear of spiders may appear in one’s childhood or adolescence. However, following a traumatic episode, some or all of the following symptoms may be present at all ages when the sufferer is confronted with the object of the phobia, in this case, a spider.

1) Rapid heart rate

2) Dizziness

3) Hot or cold flashes

4) Feeling of going crazy and losing control

5) Chest pain

6) Feeling of choking

7) Inability to distinguish between reality and unreality

8) Trembling and sweating

9) Thoughts of death

10) Nausea or other gastrointestinal distress

In some arachnophobic individuals, these symptoms may be triggered merely by anticipating contact with a spider. Even the sight or mention of cobwebs can trigger such a response.

Treatment for fear of spiders

True sufferers of the fear of spiders have so extreme an aversion to these creatures that their daily life may be adversely impacted. These individuals show an active need to avoid areas where spiders may be present. A combination of therapy, counseling, and medications must be used to treat this fear.

It is important that one aggressively seek out the chosen treatment for it to be effective. Medicines like benzodiazepines are helpful in reducing the intensity of the reactions in the presence of spiders, but they must be used sparingly and under medical supervision. Relaxation techniques like meditation and positive reaffirmations also form an essential part of the therapy.

One of the more modern methods of treating Arachnophobia is systematic desensitization. This is a method that has been used for treating many different phobias. The goal of gradual desensitization is to slowly eliminate one’s Arachnophobia and help the individual cope with fear. An application called Phobia Free is known to utilize this gradual exposure technique to help people overcome their fear of spiders. It is available for tablet computers and smartphones. This app, which has been reviewed and approved by the NHS in the UK, uses game-play and relaxation methods to help one confront spiders (or other objects of fear).

If you or someone you know is severely impacted by the fear of spiders then it is essential to seek help in order to lead a more relaxed life. It is possible to eliminate Arachnophobia but the first step is to ask for help in order to learn to cope and eliminate the fear completely.


#1461 2022-08-04 14:17:24

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,980

Re: Miscellany

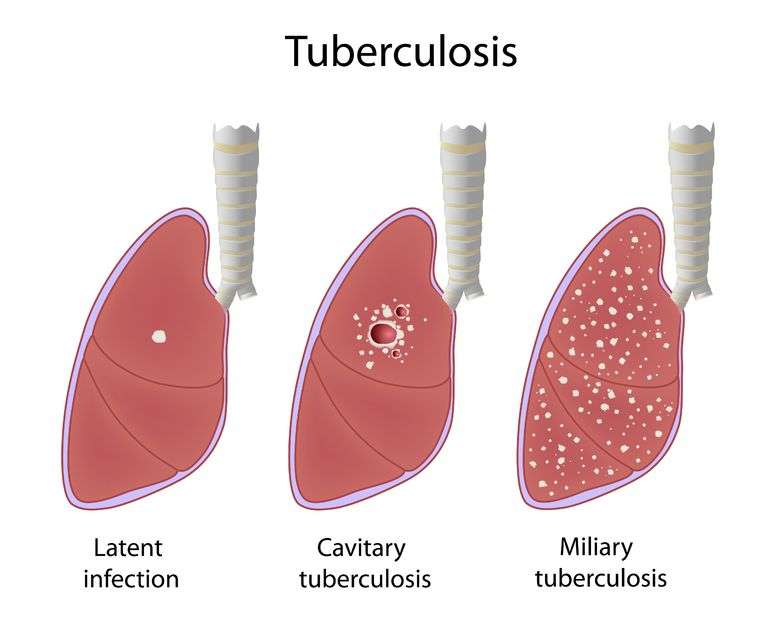

1434) Tuberculosis

Summary

Tuberculosis (TB) is an infectious disease usually caused by Mycobacterium tuberculosis (MTB) bacteria. Tuberculosis generally affects the lungs, but it can also affect other parts of the body. Most infections show no symptoms, in which case it is known as latent tuberculosis. Around 10% of latent infections progress to active disease which, if left untreated, kills about half of those affected. Typical symptoms of active TB are chronic cough with blood-containing mucus, fever, night sweats, and weight loss. It was historically referred to as consumption due to the weight loss associated with the disease. Infection of other organs can cause a wide range of symptoms.

Tuberculosis is spread from one person to the next through the air when people who have active TB in their lungs cough, spit, speak, or sneeze. People with latent TB do not spread the disease. Active infection occurs more often in people with HIV/AIDS and in those who smoke. Diagnosis of active TB is based on chest X-rays, as well as microscopic examination and culture of body fluids. Diagnosis of latent TB relies on the tuberculin skin test (TST) or blood tests.

Prevention of TB involves screening those at high risk, early detection and treatment of cases, and vaccination with the bacillus Calmette-Guérin (BCG) vaccine. Those at high risk include household, workplace, and social contacts of people with active TB. Treatment requires the use of multiple antibiotics over a long period of time. Antibiotic resistance is a growing problem with increasing rates of multiple drug-resistant tuberculosis (MDR-TB).

Details

Tuberculosis (TB) is an infectious disease caused by the tubercle bacillus, Mycobacterium tuberculosis. In most forms of the disease, the bacillus spreads slowly and widely in the lungs, causing the formation of hard nodules (tubercles) or large cheeselike masses that break down the respiratory tissues and form cavities in the lungs. Blood vessels also can be eroded by the advancing disease, causing the infected person to cough up bright red blood.

During the 18th and 19th centuries, tuberculosis reached near-epidemic proportions in the rapidly urbanizing and industrializing societies of Europe and North America. Indeed, “consumption,” as it was then known, was the leading cause of death for all age groups in the Western world from that period until the early 20th century, at which time improved health and hygiene brought about a steady decline in its mortality rates. Since the 1940s, antibiotic drugs have reduced the span of treatment to months instead of years, and drug therapy has done away with the old TB sanatoriums where patients at one time were nursed for years while the defensive properties of their bodies dealt with the disease.

Today, in less-developed countries where population is dense and hygienic standards poor, tuberculosis remains a major fatal disease. The prevalence of the disease has increased in association with the HIV/AIDS epidemic; an estimated one out of every four deaths from tuberculosis involves an individual coinfected with HIV. In addition, the successful elimination of tuberculosis as a major threat to public health in the world has been complicated by the rise of new strains of the tubercle bacillus that are resistant to conventional antibiotics. Infections with these strains are often difficult to treat and require the use of combination drug therapies, sometimes involving the use of five different agents.

The course of tuberculosis

The tubercle bacillus is a small, rod-shaped bacterium that is extremely hardy; it can survive for months in a state of dryness and can also resist the action of mild disinfectants. Infection spreads primarily by the respiratory route directly from an infected person who discharges live bacilli into the air. Minute droplets ejected by sneezing, coughing, and even talking can contain hundreds of tubercle bacilli that may be inhaled by a healthy person. There the bacilli become trapped in the tissues of the body, are surrounded by immune cells, and finally are sealed up in hard, nodular tubercles. A tubercle usually consists of a centre of dead cells and tissues, cheeselike (caseous) in appearance, in which can be found many bacilli. This centre is surrounded by radially arranged phagocytic (scavenger) cells and a periphery containing connective tissue cells. The tubercle thus forms as a result of the body’s defensive reaction to the bacilli. Individual tubercles are microscopic in size, but most of the visible manifestations of tuberculosis, from barely visible nodules to large tuberculous masses, are conglomerations of tubercles.

In otherwise healthy children and adults, the primary infection often heals without causing symptoms. The bacilli are quickly sequestered in the tissues, and the infected person acquires a lifelong immunity to the disease. A skin test taken at any later time may reveal the earlier infection and the immunity, and a small scar in the lung may be visible by X-ray. In this condition, sometimes called latent tuberculosis, the affected person is not contagious. In some cases, however, sometimes after periods of time that can reach 40 years or more, the original tubercles break down, releasing viable bacilli into the bloodstream. From the blood the bacilli create new tissue infections elsewhere in the body, most commonly in the upper portion of one or both lungs. This causes a condition known as pulmonary tuberculosis, a highly infectious stage of the disease. In some cases the infection may break into the pleural space between the lung and the chest wall, causing a pleural effusion, or collection of fluid outside the lung. Particularly among infants, the elderly, and immunocompromised adults (organ transplant recipients or AIDS patients, for example), the primary infection may spread through the body, causing miliary tuberculosis, a highly fatal form if not adequately treated. In fact, once the bacilli enter the bloodstream, they can travel to almost any organ of the body, including the lymph nodes, bones and joints, skin, intestines, genital organs, kidneys, and bladder. An infection of the meninges that cover the brain causes tuberculous meningitis; before the advent of specific drugs, this disease was always fatal, though most affected people now recover.

The onset of pulmonary tuberculosis is usually insidious, with lack of energy, weight loss, and persistent cough. These symptoms do not subside, and the general health of the patient deteriorates. Eventually, the cough increases, the patient may have chest pain from pleurisy, and there may be blood in the sputum, an alarming symptom. Fever develops, usually with drenching night sweats. In the lung, the lesion consists of a collection of dead cells in which tubercle bacilli may be seen. This lesion may erode a neighbouring bronchus or blood vessel, causing the patient to cough up blood (hemoptysis). Tubercular lesions may spread extensively in the lung, causing large areas of destruction, cavities, and scarring. The amount of lung tissue available for the exchange of gases in respiration decreases, and if untreated the patient will die from failure of ventilation and general toxemia and exhaustion.

Diagnosis and treatment

The diagnosis of pulmonary tuberculosis depends on finding tubercle bacilli in the sputum, in the urine, in gastric washings, or in the cerebrospinal fluid. The primary method used to confirm the presence of bacilli is a sputum smear, in which a sputum specimen is smeared onto a slide, stained with a compound that penetrates the organism’s cell wall, and examined under a microscope. If bacilli are present, the sputum specimen is cultured on a special medium to determine whether the bacilli are M. tuberculosis. An X-ray of the lungs may show typical shadows caused by tubercular nodules or lesions. The prevention of tuberculosis depends on good hygienic and nutritional conditions and on the identification of infected patients and their early treatment. A vaccine, known as BCG vaccine, is composed of specially weakened tubercle bacilli. Injected into the skin, it causes a local reaction, which confers some immunity to infection by M. tuberculosis for several years. It has been widely used in some countries with success; its use in young children in particular has helped to control infection in the developing world. The main hope of ultimate control, however, lies in preventing exposure to infection, and this means treating infectious patients quickly, possibly in isolation until they are noninfectious. In many developed countries, individuals at risk for tuberculosis, such as health care workers, are regularly given a skin test to show whether they have had a primary infection with the bacillus.

Today, the treatment of tuberculosis consists of drug therapy and methods to prevent the spread of infectious bacilli. Historically, treatment of tuberculosis consisted of long periods, often years, of bed rest and surgical removal of useless lung tissue. In the 1940s and ’50s several antimicrobial drugs were discovered that revolutionized the treatment of patients with tuberculosis. As a result, with early drug treatment, surgery is rarely needed. The most commonly used antituberculosis drugs are isoniazid and rifampicin (rifampin). These drugs are often used in various combinations with other agents, such as ethambutol, pyrazinamide, or rifapentine, in order to avoid the development of drug-resistant bacilli. Patients with strongly suspected or confirmed tuberculosis undergo an initial treatment period that lasts two months and consists of combination therapy with isoniazid, rifampicin, ethambutol, and pyrazinamide. These drugs may be given daily or two times per week. The patient is usually made noninfectious quite quickly, but complete cure requires continuous treatment for another four to nine months. The length of the continuous treatment period depends on the results of chest X-rays and sputum smears taken at the end of the two-month period of initial therapy. Continuous treatment may consist of once daily or twice weekly doses of isoniazid and rifampicin or isoniazid and rifapentine.

If a patient does not continue treatment for the required time or is treated with only one drug, bacilli will become resistant and multiply, making the patient sick again. If subsequent treatment is also incomplete, the surviving bacilli will become resistant to several drugs. Multidrug-resistant tuberculosis (MDR TB) is a form of the disease in which bacilli have become resistant to isoniazid and rifampicin. MDR TB is treatable but is extremely difficult to cure, typically requiring two years of treatment with agents known to have more severe side effects than isoniazid or rifampicin. Extensively drug-resistant tuberculosis (XDR TB) is a rare form of MDR TB. XDR TB is characterized by resistance to not only isoniazid and rifampicin but also a group of bactericidal drugs known as fluoroquinolones and at least one injectable second-line drug, such as kanamycin, amikacin, or capreomycin. Aggressive treatment using five different drugs, which are selected based on the drug sensitivity of the specific strain of bacilli in a patient, has been shown to be effective in reducing mortality in roughly 50 percent of XDR TB patients. In addition, aggressive treatment can help prevent the spread of strains of XDR TB bacilli.

In 1995, in part to prevent the development and spread of MDR TB, the World Health Organization began encouraging countries to implement a compliance program called directly observed therapy (DOT). Instead of taking daily medication on their own, patients are directly observed by a clinician or responsible family member while taking larger doses twice a week. Although some patients consider DOT invasive, it has proved successful in controlling tuberculosis.

Despite stringent control efforts, however, drug-resistant tuberculosis remained a serious threat in the early 21st century. In 2009, for example, researchers reported the emergence of extremely drug-resistant tuberculosis (XXDR-TB), also known as totally drug-resistant tuberculosis (TDR-TB), in a small subset of Iranian patients. This form of the disease, which has also been detected in Italy (in 2003) and India (in 2011), is resistant to all first- and second-line antituberculosis drugs.

At the same time, development of a vaccine to prevent active disease from emerging in persons already infected with the tuberculosis bacterium was underway. In 2019 the results of a preliminary trial indicated that the vaccine could prevent pulmonary disease in more than half of infected individuals.

Other mycobacterial infections

The above discussion of tuberculosis relates to the disease caused by M. tuberculosis. Another species, M. bovis, is the cause of bovine tuberculosis. M. bovis is transmitted among cattle and some wild animals through the respiratory route, and it is also excreted in milk. If the milk is ingested raw, M. bovis readily infects humans. The bovine bacillus may be caught in the tonsils and may spread from there to the lymph nodes of the neck, where it causes caseation of the node tissue (a condition formerly known as scrofula). The node swells under the skin of the neck, finally eroding through the skin as a chronic discharging ulcer. From the gastrointestinal tract, M. bovis may spread into the bloodstream and reach any part of the body. It shows, however, a great preference for bones and joints, where it causes destruction of tissue and eventually gross deformity. Tuberculosis of the spine, or Pott disease, is characterized by softening and collapse of the vertebrae, often resulting in a hunchback deformity. Pasteurization of milk kills tubercle bacilli, and this, along with the systematic identification and destruction of infected cattle, has led to the disappearance of bovine tuberculosis in humans in many countries.

The AIDS epidemic has given prominence to a group of infectious agents known variously as nontuberculous mycobacteria, atypical mycobacteria, and mycobacteria other than tuberculosis (MOTT). This group includes such Mycobacterium species as M. avium (or M. avium-intracellulare), M. kansasii, M. marinum, and M. ulcerans. These bacilli have long been known to infect animals and humans, but they cause dangerous illnesses of the lungs, lymph nodes, and other organs only in people whose immune systems have been weakened. Among AIDS patients, atypical mycobacterial illnesses are common complications of HIV infection. Treatment is attempted with various drugs, but the prognosis is usually poor owing to the AIDS patient’s overall condition.

Tuberculosis through history

Evidence that M. tuberculosis and humans have long coexisted comes primarily from studies of bone samples collected from a Neolithic human settlement in the eastern Mediterranean. Genetic evidence gathered from these studies indicates that roughly 9,000 years ago there existed a strain of M. tuberculosis similar to strains present in the 21st century. Evidence of mycobacterial infection has also been found in the mummified remains of ancient Egyptians, and references to phthisis, or “wasting,” occur in the writings of the Greek physician Hippocrates. In the medical writings of Europe through the Middle Ages and well into the industrial age, tuberculosis was referred to as phthisis, the “white plague,” or consumption—all in reference to the progressive wasting of the victim’s health and vitality as the disease took its inexorable course. The cause was assumed to be mainly constitutional, either the result of an inherited disposition or of unhealthy or dissolute living. In the first edition of Encyclopædia Britannica (1768), it was reported that a tendency to develop “consumption of the lungs” could tragically be expected in people who were fine, delicate, and precocious:

This is known from a view of the tender and fine vessels, and of the slender make of the whole body, a long neck, a flat and narrow thorax, depressed scapulæ; the blood of a bright red, thin, sharp, and hot; the skin transparent, very white and fair, with a blooming red in the cheeks; the wit quick, subtle, and early ripe with regard to the age, and a merry chearful disposition.

Based on the stage of the disease, treatments included regular bloodletting, administration of expectorants and purgatives, healthful diet, exercise such as vigorous horseback riding, and, in the grim final stages, opiates. The view that tuberculosis might be a contagious disease also had its adherents, but it was not until 1865 that Jean Antoine Villemin, an army doctor in Paris, showed that it could be transmitted from tuberculous animals to healthy animals by inoculation. The actual infectious agent, the tubercle bacillus, was discovered and identified in 1882 by the German physician Robert Koch. By that time the cultural status of the disease was assured. As summarized by Dr. J.O. Affleck of the University of Edinburgh, Scotland, in the ninth edition of Encyclopædia Britannica (1885), “Few diseases possess such sad interest for humanity as consumption, both on account of its widespread prevalence and its destructive effects, particularly among the young.” Causing as much as one-quarter of all deaths in Europe, arising with particular frequency among young adults between the ages of 18 and 35, and bringing on a lingering, melancholy decline characterized by loss of body weight, skin pallor, and sunken yet luminous eyes, tuberculosis was enshrined in literature as the “captain of death,” the slow killer of youth, promise, and genius. Prominent artists who died of consumption in the 19th century included the English poet John Keats, the Polish composer Frédéric Chopin, and all of the Brontë sisters (Charlotte, Emily, and Anne); in the early 20th century they were followed by the Russian playwright Anton Chekhov, the Italian painter Amedeo Modigliani, and the German-language writer Franz Kafka. Without a clear understanding of the bacterium that caused the disease, little could be done for its victims except to isolate them in sanitariums, where cleanliness and fresh air were thought to help the body’s natural defenses to stop or at least slow the progress of the disease.

Preventive inoculation against tuberculosis, in which live but attenuated tubercle bacilli are used as a vaccine, was introduced in France in 1921 by bacteriologists Albert Calmette and Camille Guérin. The strain designated BCG (Bacillus Calmette-Guérin), of bovine origin, became attenuated while growing on culture media containing bile. After its introduction by Calmette, large numbers of children were vaccinated in France, elsewhere in Europe, and in South America; after 1930 the vaccine was used on an extensive scale. In 1943–44 the Ukrainian-born microbiologist Selman A. Waksman and his associates, working at Rutgers University, New Jersey, U.S., discovered the potent antimicrobial agent streptomycin in the growth medium of the soil microorganism Streptomyces griseus. In 1944–45 veterinarian W.H. Feldman and physician H.C. Hinshaw, working at the Mayo Clinic in Minnesota, demonstrated its specific effect in inhibiting tuberculosis in both animals and people. Wide clinical use of streptomycin promptly followed, eventually in combination with other drugs to attack resistant bacilli.

In 1952 a great advance was made with the successful testing of isoniazid in the United States and Germany. Isoniazid is the most important drug in the history of chemotherapy for tuberculosis; other drugs were brought out in following years, pyrazinamide in 1954, ethambutol in 1962, and rifampicin in 1963. By this time the industrialized countries were already seeing the health benefits of economic improvement, better sanitation, more widespread education, and particularly the establishment of public health practice, including specific measures for tuberculosis control. The rate of deaths from tuberculosis in England and Wales dropped from 190 per 100,000 population in 1900 to 7 per 100,000 in the early 1960s. In the United States during the same time period, it dropped from 194 per 100,000 to approximately 6 per 100,000. In the popular mind, tuberculosis was then a disease of the past, of the indigent, and of the Third World.

However, in the mid-1980s the number of deaths caused by tuberculosis began to rise again in developed countries. The disease’s resurgence was attributed in part to complacent health care systems, increased immigration of people from regions where tuberculosis was prevalent, and the spread of HIV. In addition, throughout the 1990s the number of cases of tuberculosis increased in Africa. Global programs such as the Stop TB Partnership, which was established in 2000, have worked to increase awareness of tuberculosis and to make new and existing treatments available to people living in developing countries most affected by the disease. In the early 2000s, as a result of the rapid implementation of global efforts to combat the disease, the epidemic in Africa slowed and incidence rates stabilized. Despite a leveling off of per capita incidence of tuberculosis, the global number of new cases continued to rise, due to population growth, especially in regions of Africa, Southeast Asia, and the eastern Mediterranean. The annual death toll from tuberculosis remains between 1.6 million and 2 million.


#1462 2022-08-05 14:23:43

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,980

Re: Miscellany

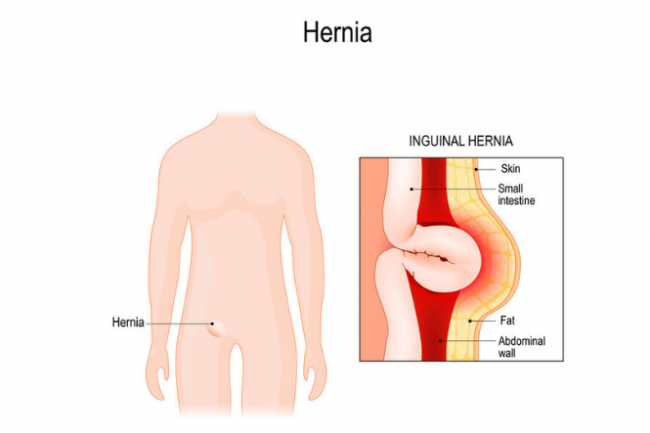

1435) Hernia

Summary