Math Is Fun Forum


#1726 2023-04-09 13:12:51

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: Miscellany

1629) Robot

Android (robot)

Summary

An android is a humanoid robot or other artificial being often made from a flesh-like material. Historically, androids were completely within the domain of science fiction and frequently seen in film and television, but advances in robot technology now allow the design of functional and realistic humanoid robots.

Terminology

The Oxford English Dictionary traces the earliest use (as "Androides") to Ephraim Chambers' 1728 Cyclopaedia, in reference to an automaton that St. Albertus Magnus allegedly created. By the late 1700s, "androides", elaborate mechanical devices resembling humans performing human activities, were displayed in exhibit halls. The term "android" appears in US patents as early as 1863 in reference to miniature human-like toy automatons. The term android was used in a more modern sense by the French author Auguste Villiers de l'Isle-Adam in his work Tomorrow's Eve (1886). This story features an artificial humanlike robot named Hadaly. As said by the officer in the story, "In this age of Realien advancement, who knows what goes on in the mind of those responsible for these mechanical dolls." The term made its way into English pulp science fiction starting with Jack Williamson's The Cometeers (1936), and the distinction between mechanical robots and fleshy androids was popularized by Edmond Hamilton's Captain Future stories (1940–1944).

Although Karel Čapek's robots in R.U.R. (Rossum's Universal Robots) (1921)—the play that introduced the word robot to the world—were organic artificial humans, the word "robot" has come to primarily refer to mechanical humans, animals, and other beings. The term "android" can mean either one of these, while a cyborg ("cybernetic organism" or "bionic man") would be a creature that is a combination of organic and mechanical parts.

The term "droid", popularized by George Lucas in the original Star Wars film and now used widely within science fiction, originated as an abridgment of "android", but has been used by Lucas and others to mean any robot, including machines of distinctly non-human form such as R2-D2. The word "android" was used in the Star Trek: The Original Series episode "What Are Little Girls Made Of?" The abbreviation "andy", coined as a pejorative by writer Philip K. Dick in his novel Do Androids Dream of Electric Sheep?, has seen some further usage, such as within the TV series Total Recall 2070.

While the term "android" is used in reference to human-looking robots in general (not necessarily male-looking humanoid robots), a robot with a female appearance can also be referred to as a gynoid. Besides, one can refer to robots without alluding to their sexual appearance by calling them anthrobots (a portmanteau of anthrōpos and robot; see anthrobotics) or anthropoids (short for anthropoid robots; the term humanoids is not appropriate because it is already commonly used to refer to human-like organic species in the context of science fiction, futurism and speculative astrobiology).

Authors have used the term android in more diverse ways than robot or cyborg. In some fictional works, the difference between a robot and android is only superficial, with androids being made to look like humans on the outside but with robot-like internal mechanics. In other stories, authors have used the word "android" to mean a wholly organic, yet artificial, creation. Other fictional depictions of androids fall somewhere in between.

Eric G. Wilson, who defines an android as a "synthetic human being", distinguishes between three types of android, based on their body's composition:

* the mummy type – made of "dead things" or "stiff, inanimate, natural material", such as mummies, puppets, dolls and statues

* the golem type – made from flexible, possibly organic material, including golems and homunculi

* the automaton type – made from a mix of dead and living parts, including automatons and robots

Although human morphology is not necessarily the ideal form for working robots, the fascination in developing robots that can mimic it can be found historically in the assimilation of two concepts: simulacra (devices that exhibit likeness) and automata (devices that have independence).

Details

A robot is any automatically operated machine that replaces human effort, though it may not resemble human beings in appearance or perform functions in a humanlike manner. By extension, robotics is the engineering discipline dealing with the design, construction, and operation of robots.

The concept of artificial humans predates recorded history, but the modern term robot derives from the Czech word robota (“forced labour” or “serf”), used in Karel Čapek’s play R.U.R. (1920). The play’s robots were manufactured humans, heartlessly exploited by factory owners until they revolted and ultimately destroyed humanity. Whether they were biological, like the monster in Mary Shelley’s Frankenstein (1818), or mechanical was not specified, but the mechanical alternative inspired generations of inventors to build electrical humanoids.

The word robotics first appeared in Isaac Asimov’s science-fiction story Runaround (1942). Along with Asimov’s later robot stories, it set a new standard of plausibility about the likely difficulty of developing intelligent robots and the technical and social problems that might result. Runaround also contained Asimov’s famous Three Laws of Robotics:

1. A robot may not injure a human being, or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

This article traces the development of robots and robotics.

Industrial robots

Though not humanoid in form, machines with flexible behaviour and a few humanlike physical attributes have been developed for industry. The first stationary industrial robot was the programmable Unimate, an electronically controlled hydraulic heavy-lifting arm that could repeat arbitrary sequences of motions. It was invented in 1954 by the American engineer George Devol and was developed by Unimation Inc., a company founded in 1956 by American engineer Joseph Engelberger. In 1959 a prototype of the Unimate was introduced in a General Motors Corporation die-casting factory in Trenton, New Jersey. In 1961 Condec Corp. (after purchasing Unimation the preceding year) delivered the world’s first production-line robot to the GM factory; it had the unsavoury task (for humans) of removing and stacking hot metal parts from a die-casting machine. Unimate arms continue to be developed and sold by licensees around the world, with the automobile industry remaining the largest buyer.

More advanced computer-controlled electric arms guided by sensors were developed in the late 1960s and 1970s at the Massachusetts Institute of Technology (MIT) and at Stanford University, where they were used with cameras in robotic hand-eye research. Stanford’s Victor Scheinman, working with Unimation for GM, designed the first such arm used in industry. Called PUMA (Programmable Universal Machine for Assembly), these arms have been used since 1978 to assemble automobile subcomponents such as dash panels and lights. PUMA was widely imitated, and its descendants, large and small, are still used for light assembly in electronics and other industries. Since the 1990s small electric arms have become important in molecular biology laboratories, precisely handling test-tube arrays and pipetting intricate sequences of reagents.

Mobile industrial robots also first appeared in 1954. In that year a driverless electric cart, made by Barrett Electronics Corporation, began pulling loads around a South Carolina grocery warehouse. Such machines, dubbed AGVs (Automatic Guided Vehicles), commonly navigate by following signal-emitting wires embedded in concrete floors. In the 1980s AGVs acquired microprocessor controllers that allowed more complex behaviours than those afforded by simple electronic controls. In the 1990s a new navigation method became popular for use in warehouses: AGVs equipped with a scanning laser triangulate their position by measuring reflections from fixed retro-reflectors (at least three of which must be visible from any location).
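The retro-reflector method can be illustrated with a small position fix. The sketch below is hedged in two ways: it assumes the scanner reports a distance (range) to each of three reflectors whose floor positions are known, and it uses range-based trilateration, a simpler stand-in for the angle-based triangulation a real AGV may use. The function name `trilaterate` and the reflector layout are hypothetical.

```python
import math


def trilaterate(reflectors, ranges):
    """Estimate an AGV's (x, y) from measured distances to three fixed reflectors.

    reflectors: [(x1, y1), (x2, y2), (x3, y3)] known positions (hypothetical layout)
    ranges:     [r1, r2, r3] measured distances to those reflectors
    """
    (x1, y1), (x2, y2), (x3, y3) = reflectors
    r1, r2, r3 = ranges
    # Each reading says the AGV lies on a circle around one reflector.
    # Subtracting circle 1 from circles 2 and 3 cancels x^2 and y^2,
    # leaving two linear equations of the form a*x + b*y = c.
    a1, b1 = 2 * (x2 - x1), 2 * (y2 - y1)
    c1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    a2, b2 = 2 * (x3 - x1), 2 * (y3 - y1)
    c2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a1 * b2 - b1 * a2
    if math.isclose(det, 0.0, abs_tol=1e-12):
        raise ValueError("reflectors are collinear, so the fix is ambiguous")
    # Cramer's rule for the 2x2 linear system.
    x = (c1 * b2 - b1 * c2) / det
    y = (a1 * c2 - c1 * a2) / det
    return x, y


if __name__ == "__main__":
    reflectors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
    true_position = (3.0, 4.0)
    ranges = [math.dist(true_position, r) for r in reflectors]
    print(trilaterate(reflectors, ranges))  # approximately (3.0, 4.0)
```

The collinearity check mirrors why reflector placement matters: if all visible reflectors lie on one line, the two circles' intersections cannot be told apart.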

Although industrial robots first appeared in the United States, the business did not thrive there. Unimation was acquired by Westinghouse Electric Corporation in 1983 and shut down a few years later. Cincinnati Milacron, Inc., the other major American hydraulic-arm manufacturer, sold its robotics division in 1990 to the Swedish firm of Asea Brown Boveri Ltd. Adept Technology, Inc., spun off from Stanford and Unimation to make electric arms, is the only remaining American firm. Foreign licensees of Unimation, notably in Japan and Sweden, continue to operate, and in the 1980s other companies in Japan and Europe began to vigorously enter the field. The prospect of an aging population and consequent worker shortage induced Japanese manufacturers to experiment with advanced automation even before it gave a clear return, opening a market for robot makers. By the late 1980s Japan—led by the robotics divisions of Fanuc Ltd., Matsushita Electric Industrial Company, Ltd., Mitsubishi Group, and Honda Motor Company, Ltd.—was the world leader in the manufacture and use of industrial robots. High labour costs in Europe similarly encouraged the adoption of robot substitutes, with industrial robot installations in the European Union exceeding Japanese installations for the first time in 2001.

Robot toys

Lack of reliable functionality has limited the market for industrial and service robots (built to work in office and home environments). Toy robots, on the other hand, can entertain without performing tasks very reliably, and mechanical varieties have existed for thousands of years. (See automaton.) In the 1980s microprocessor-controlled toys appeared that could speak or move in response to sounds or light. More advanced ones in the 1990s recognized voices and words. In 1999 the Sony Corporation introduced a doglike robot named AIBO, with two dozen motors to activate its legs, head, and tail, two microphones, and a colour camera all coordinated by a powerful microprocessor. More lifelike than anything before, AIBOs chased coloured balls and learned to recognize their owners and to explore and adapt. Although the first AIBOs cost $2,500, the initial run of 5,000 sold out immediately over the Internet.

Robotics research

Dexterous industrial manipulators and industrial vision have roots in advanced robotics work conducted in artificial intelligence (AI) laboratories since the late 1960s. Yet, even more than with AI itself, these accomplishments fall far short of the motivating vision of machines with broad human abilities. Techniques for recognizing and manipulating objects, reliably navigating spaces, and planning actions have worked in some narrow, constrained contexts, but they have failed in more general circumstances.

The first robotics vision programs, pursued into the early 1970s, used statistical formulas to detect linear boundaries in robot camera images and clever geometric reasoning to link these lines into boundaries of probable objects, providing an internal model of their world. Further geometric formulas related object positions to the joint angles needed to allow a robot arm to grasp them, or to the steering and drive motions needed to get a mobile robot around (or to) the object. This approach was tedious to program and frequently failed when unplanned image complexities misled the first steps. An attempt in the late 1970s to overcome these limitations by adding an expert system component for visual analysis mainly made the programs more unwieldy—substituting complex new confusions for simpler failures.
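As an illustration of the kind of geometric formula that relates an object's position to joint angles, here is a sketch of inverse kinematics for a hypothetical planar arm with two links of lengths `l1` and `l2`, using the law of cosines. It is a textbook example, not the formula used by any particular early system; real arms have more joints and must also choose among several valid solutions.

```python
import math


def two_link_ik(x, y, l1, l2):
    """Return (shoulder, elbow) angles in radians that put the tip of a
    planar two-link arm at (x, y). Only one of the two mirror-image
    solutions is returned."""
    # Law of cosines: the shoulder-to-target distance fixes the elbow angle.
    cos_elbow = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is out of the arm's reach")
    elbow = math.acos(cos_elbow)
    # Shoulder angle: direction to the target minus the offset the bent elbow adds.
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def two_link_fk(shoulder, elbow, l1, l2):
    """Forward kinematics: where the tip ends up for the given joint angles."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


if __name__ == "__main__":
    angles = two_link_ik(1.0, 1.0, 1.0, 1.0)
    print(angles)                          # about (0.0, 1.5708)
    print(two_link_fk(*angles, 1.0, 1.0))  # about (1.0, 1.0)
```

Running the forward formula on the computed angles is a cheap way to check that the inverse solution really reaches the target.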

In the mid-1980s Rodney Brooks of the MIT AI lab used this impasse to launch a highly visible new movement that rejected the effort to have machines create internal models of their surroundings. Instead, Brooks and his followers wrote computer programs with simple subprograms that connected sensor inputs to motor outputs, each subprogram encoding a behaviour such as avoiding a sensed obstacle or heading toward a detected goal. There is evidence that many insects function largely this way, as do parts of larger nervous systems. The approach resulted in some very engaging insectlike robots, but—as with real insects—their behaviour was erratic, as their sensors were momentarily misled, and the approach proved unsuitable for larger robots. Also, this approach provided no direct mechanism for specifying long, complex sequences of actions—the raison d’être of industrial robot manipulators and surely of future home robots (note, however, that in 2004 iRobot Corporation sold more than one million robot vacuum cleaners capable of simple insectlike behaviours, a first for a service robot).
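The behaviour-based idea can be sketched as a set of small rules, each mapping the current sensor readings straight to a motor command, with no internal world model. The version below uses simple fixed-priority arbitration (a higher-priority behaviour overrides lower ones), which is a simplification of Brooks's layered designs rather than a reproduction of them; the sensor fields, thresholds, and speeds are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# (forward speed, turn rate); the units are illustrative.
Command = Tuple[float, float]


@dataclass
class Sensors:
    obstacle_distance: float       # metres to the nearest sensed obstacle (hypothetical sensor)
    goal_bearing: Optional[float]  # radians to a detected goal, or None if none is seen


def avoid_obstacle(s: Sensors) -> Optional[Command]:
    # Fires only when something is close: stop and turn in place.
    if s.obstacle_distance < 0.5:
        return (0.0, 1.0)
    return None


def head_to_goal(s: Sensors) -> Optional[Command]:
    # Steer toward the goal in proportion to its bearing.
    if s.goal_bearing is not None:
        return (0.5, 0.8 * s.goal_bearing)
    return None


def wander(s: Sensors) -> Optional[Command]:
    # Default behaviour: drive straight ahead.
    return (0.3, 0.0)


# Highest priority first; the first behaviour that fires wins.
BEHAVIOURS: List[Callable[[Sensors], Optional[Command]]] = [avoid_obstacle, head_to_goal, wander]


def control_step(s: Sensors) -> Command:
    for behaviour in BEHAVIOURS:
        command = behaviour(s)
        if command is not None:
            return command
    return (0.0, 0.0)  # nothing fired: stay still


if __name__ == "__main__":
    print(control_step(Sensors(obstacle_distance=0.3, goal_bearing=0.4)))  # (0.0, 1.0)
    print(control_step(Sensors(obstacle_distance=2.0, goal_bearing=None)))  # (0.3, 0.0)
```

Because each rule reacts only to the current reading, a single misleading sensor value changes the command immediately, which is one source of the erratic behaviour described above.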

Meanwhile, other researchers continue to pursue various techniques to enable robots to perceive their surroundings and track their own movements. One prominent example involves semiautonomous mobile robots for exploration of the Martian surface. Because of the long transmission times for signals, these “rovers” must be able to negotiate short distances between interventions from Earth.

A particularly interesting testing ground for fully autonomous mobile robot research is football (soccer). In 1993 an international community of researchers organized a long-term program to develop robots capable of playing this sport, with progress tested in annual machine tournaments. The first RoboCup games were held in 1997 in Nagoya, Japan, with teams entered in three competition categories: computer simulation, small robots, and midsize robots. Merely finding and pushing the ball was a major accomplishment, but the event encouraged participants to share research, and play improved dramatically in subsequent years. In 1998 Sony began providing researchers with programmable AIBOs for a new competition category; this gave teams a standard reliable prebuilt hardware platform for software experimentation.

While robot football has helped to coordinate and focus research in some specialized skills, research involving broader abilities is fragmented. Sensors—sonar and laser rangefinders, cameras, and special light sources—are used with algorithms that model images or spaces by using various geometric shapes and that attempt to deduce what a robot’s position is, where and what other things are nearby, and how different tasks can be accomplished. Faster microprocessors developed in the 1990s have enabled new, broadly effective techniques. For example, by statistically weighing large quantities of sensor measurements, computers can mitigate individually confusing readings caused by reflections, blockages, bad illumination, or other complications. Another technique employs “automatic” learning to classify sensor inputs—for instance, into objects or situations—or to translate sensor states directly into desired behaviour. Connectionist neural networks containing thousands of adjustable-strength connections are the most famous learners, but smaller, more-specialized frameworks usually learn faster and better. In some, a program that does the right thing as nearly as can be prearranged also has “adjustment knobs” to fine-tune the behaviour. Another kind of learning remembers a large number of input instances and their correct responses and interpolates between them to deal with new inputs. Such techniques are already in broad use for computer software that converts speech into text.
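Two of the techniques in this paragraph can be sketched briefly. The first part below is a one-dimensional evidence (occupancy) grid: each range reading adds evidence that the cells in front of the echo are empty and the echo cell is occupied, so one spurious reflection is outvoted by many consistent readings. The second part is a memory-based learner that stores input/response pairs and blends the nearest ones for a new input (inverse-distance weighting over the k nearest neighbours). The sensor-model probabilities (0.7/0.3), the grid size, and all function names are hypothetical choices for illustration, not details from the text.

```python
import math

# Assumed sensor model: an echo makes its cell 70% likely occupied;
# cells the beam passed through become 70% likely free.
L_OCCUPIED = math.log(0.7 / 0.3)
L_FREE = -L_OCCUPIED


def add_reading(grid, hit_cell):
    """Fold one range reading into a 1-D grid of log-odds values."""
    for i in range(min(hit_cell, len(grid))):
        grid[i] += L_FREE
    if hit_cell < len(grid):
        grid[hit_cell] += L_OCCUPIED


def occupancy(log_odds):
    """Convert accumulated log-odds back to a probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


def interpolate(memory, query, k=3):
    """Predict a response from remembered (input, response) pairs by
    inverse-distance weighting of the k nearest stored inputs."""
    nearest = sorted(memory, key=lambda pair: math.dist(pair[0], query))[:k]
    weighted = []
    for inputs, response in nearest:
        d = math.dist(inputs, query)
        if d == 0.0:
            return response  # exact match: reuse the remembered answer
        weighted.append((1.0 / d, response))
    total = sum(w for w, _ in weighted)
    return sum(w * r for w, r in weighted) / total


if __name__ == "__main__":
    grid = [0.0] * 10
    for hit in [6, 6, 6, 2, 6, 6]:  # one confusing reflection at cell 2
        add_reading(grid, hit)
    # Cell 6 ends up near 1 (occupied); cell 2 stays near 0 despite the stray echo.
    print([round(occupancy(v), 2) for v in grid])

    memory = [((0.0,), 0.0), ((1.0,), 10.0), ((2.0,), 20.0), ((10.0,), 100.0)]
    print(interpolate(memory, (0.5,), k=2))  # 5.0
```

Working in log-odds turns the statistical weighing into simple addition, which is part of why such methods became practical once microprocessors could process many readings quickly.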

The future

Numerous companies are working on consumer robots that can navigate their surroundings, recognize common objects, and perform simple chores without expert custom installation. Perhaps about the year 2020 the process will have produced the first broadly competent “universal robots” with lizardlike minds that can be programmed for almost any routine chore. With anticipated increases in computing power, by 2030 second-generation robots with trainable mouselike minds may become possible. Besides application programs, these robots may host a suite of software “conditioning modules” that generate positive- and negative-reinforcement signals in predefined circumstances.

By 2040 computing power should make third-generation robots with monkeylike minds possible. Such robots would learn from mental rehearsals in simulations that would model physical, cultural, and psychological factors. Physical properties would include shape, weight, strength, texture, and appearance of things and knowledge of how to handle them. Cultural aspects would include a thing’s name, value, proper location, and purpose. Psychological factors, applied to humans and other robots, would include goals, beliefs, feelings, and preferences. The simulation would track external events and would tune its models to keep them faithful to reality. This should let a robot learn by imitation and afford it a kind of consciousness. By the middle of the 21st century, fourth-generation robots may exist with humanlike mental power able to abstract and generalize. Researchers hope that such machines will result from melding powerful reasoning programs to third-generation machines. Properly educated, fourth-generation robots are likely to become intellectually formidable.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1727 2023-04-09 18:50:11

- Jai Ganesh



1630) Arecibo Telescope

Summary

Completed in 1963 and stewarded by the U.S. National Science Foundation since the 1970s, Arecibo Observatory has contributed to many important scientific discoveries, including the first discovery of a binary pulsar, the first discovery of an extrasolar planet, the composition of the ionosphere, and the characterization of the properties and orbits of a number of potentially hazardous asteroids.

Location: Arecibo Observatory’s principal observing facilities are located 19 kilometers south of the city of Arecibo, Puerto Rico.

Operation and management: Arecibo Observatory is operated and managed for NSF by the Arecibo Observatory Management Team, which is led by the University of Central Florida in partnership with the Universidad Ana G. Méndez and Yang Enterprises Inc.

NSF has invested over $200 million in Arecibo operations, management and maintenance over the past two decades. The observatory has undergone two major upgrades in its lifetime (during the 1970s and 1990s), which NSF funded (along with partial NASA support), totaling $25 million. Since Fiscal Year 2018, NSF has contributed around $7.5 million per year to Arecibo operations and management.

Technical specifications and observational capabilities: Arecibo Observatory’s principal astronomical research instrument is a 1,000 foot (305 meter) fixed spherical radio/radar telescope. Its frequency capabilities range from 50 megahertz to 11 gigahertz. Transmitters include an S-band (2,380 megahertz) radar system for planetary studies, a 430 megahertz radar system for atmospheric science studies, and a heating facility for ionospheric research.

Details

The Arecibo Telescope was a 305 m (1,000 ft) spherical reflector radio telescope built into a natural sinkhole at the Arecibo Observatory located near Arecibo, Puerto Rico. A cable-mount steerable receiver and several radar transmitters for emitting signals were mounted 150 m (492 ft) above the dish. Completed in November 1963, the Arecibo Telescope was the world's largest single-aperture telescope for 53 years, until it was surpassed in July 2016 by the Five-hundred-meter Aperture Spherical Telescope (FAST) in Guizhou, China.

The Arecibo Telescope was primarily used for research in radio astronomy, atmospheric science, and radar astronomy, as well as for programs that search for extraterrestrial intelligence (SETI). Scientists wanting to use the observatory submitted proposals that were evaluated by independent scientific referees. NASA also used the telescope for near-Earth object detection programs. The observatory, funded primarily by the National Science Foundation (NSF) with partial support from NASA, was managed by Cornell University from its completion in 1963 until 2011, after which it was transferred to a partnership led by SRI International. In 2018, a consortium led by the University of Central Florida assumed operation of the facility.

The telescope's unique and futuristic design led to several appearances in film, gaming and television productions, such as for the climactic fight scene in the James Bond film GoldenEye (1995). It is one of the 116 pictures included in the Voyager Golden Record. It has been listed on the US National Register of Historic Places since 2008. The center was named an IEEE Milestone in 2001.

Since 2006, the NSF has reduced its funding commitment to the observatory, leading academics to push for additional funding support to continue its programs. The telescope was damaged by Hurricane Maria in 2017 and was affected by earthquakes in 2019 and 2020. Two cable breaks, one in August 2020 and a second in November 2020, threatened the structural integrity of the support structure for the suspended platform and damaged the dish. Due to uncertainty over the remaining strength of the other cables supporting the suspended structure, and the risk that further failures would make repairs dangerous, the NSF announced on November 19, 2020, that the telescope would be decommissioned and dismantled, with the observatory's smaller radio telescope and LIDAR facility remaining operational. Before it could be decommissioned, several of the remaining support cables suffered a critical failure, and the support structure, antenna, and dome assembly all fell into the dish at 7:55 a.m. local time on December 1, 2020, destroying the telescope. In October 2022, the NSF determined that it would not rebuild the telescope or a similar observatory at the site.

General information

Comparison of the Arecibo (top), FAST (middle) and RATAN-600 (bottom) radio telescopes at the same scale

The telescope's main collecting dish had the shape of a spherical cap 1,000 feet (305 m) in diameter with an 869-foot (265 m) radius of curvature, and was constructed inside a karst sinkhole. The dish surface was made of 38,778 perforated aluminum panels, each about 3 by 7 feet (1 by 2 m), supported by a mesh of steel cables.[9] The ground beneath supported shade-tolerant vegetation.

The telescope had three radar transmitters, with effective isotropic radiated powers (EIRPs) of 22 TW (continuous) at 2380 MHz, 3.2 TW (pulse peak) at 430 MHz, and 200 MW at 47 MHz, as well as an ionospheric modification facility operating at 5.1 and 8.175 MHz.

The dish remained stationary, while receivers and transmitters were moved to the proper focal point of the telescope to aim at the desired target. As a spherical mirror, the reflector's focus is along a line rather than at one point. As a result, complex line feeds were implemented to carry out observations, with each line feed covering a narrow frequency band measuring 10–45 MHz. A limited number of line feeds could be used at any one time, limiting the telescope's flexibility.

The receiver was on an 820-tonne (900-short-ton) platform suspended 150 m (492 ft) above the dish by 18 main cables running from three reinforced concrete towers (six cables per tower), one 111 m (365 ft) high and the other two 81 m (265 ft) high, placing their tops at the same elevation. Each main cable was an 8 cm (3.1 in) diameter bundle containing 160 wires, with the bundle painted over and dry air continuously blown through to prevent corrosion due to the humid tropical climate. The platform had a rotating, bow-shaped track 93 m (305 ft) long, called the azimuth arm, carrying the receiving antennas and secondary and tertiary reflectors. This allowed the telescope to observe any region of the sky in a forty-degree cone of visibility about the local zenith (between −1 and 38 degrees of declination). Puerto Rico's location near the Northern Tropic allowed the Arecibo telescope to view the planets in the Solar System over the northern half of their orbit. The round-trip light time to objects beyond Saturn is longer than the 2.6 hours for which the telescope could track a celestial position, preventing radar observations of more distant objects.
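The Saturn cut-off follows from simple arithmetic. The sketch below assumes circular, coplanar orbits, so a planet's closest distance from Earth is roughly its orbital radius minus 1 astronomical unit (AU), and it uses approximate semi-major axes and about 499 seconds of light travel time per AU; these figures are approximations supplied for illustration, not values from the text.

```python
# Approximate values (assumptions, not from the text).
LIGHT_SECONDS_PER_AU = 499.0  # light takes about 499 s to cross 1 AU
TRACKING_LIMIT_HOURS = 2.6    # Arecibo's tracking time, from the text

# Approximate orbital semi-major axes in AU.
SEMI_MAJOR_AXIS_AU = {
    "Mars": 1.52,
    "Jupiter": 5.20,
    "Saturn": 9.54,
    "Uranus": 19.19,
    "Neptune": 30.07,
}


def min_round_trip_hours(semi_major_axis_au: float) -> float:
    """Radar echo delay at closest approach, assuming circular, coplanar orbits."""
    closest_distance_au = semi_major_axis_au - 1.0  # planet's orbit minus Earth's
    return 2 * closest_distance_au * LIGHT_SECONDS_PER_AU / 3600.0


if __name__ == "__main__":
    for planet, a in SEMI_MAJOR_AXIS_AU.items():
        hours = min_round_trip_hours(a)
        verdict = "within" if hours <= TRACKING_LIMIT_HOURS else "beyond"
        print(f"{planet}: {hours:.2f} h ({verdict} the tracking window)")
```

Under these assumptions Saturn's best-case echo returns in about 2.4 hours, just inside the window, while Uranus needs about 5 hours, roughly twice the tracking time.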

Additional Information:

Arecibo Observatory

Arecibo Observatory was an astronomical observatory located 16 km (10 miles) south of the town of Arecibo in Puerto Rico. It was the site of the world’s largest single-unit radio telescope until FAST in China began observations in 2016. This instrument, built in the early 1960s, employed a 305-metre (1,000-foot) spherical reflector consisting of perforated aluminum panels that focused incoming radio waves on movable antenna structures positioned about 168 metres (550 feet) above the reflector surface. The antenna structures could be moved in any direction, making it possible to track a celestial object in different regions of the sky. The observatory also had an auxiliary 12-metre (39-foot) radio telescope and a high-power laser transmitting facility used to study Earth’s atmosphere.

In August 2020 a cable holding up the central platform of the 305-metre telescope snapped and made a hole in the dish. After a second cable broke in November 2020, the National Science Foundation (NSF) announced that the telescope was in danger of collapse and the cables could not be safely repaired. The NSF thus planned to decommission the observatory. On December 1, 2020, days after the NSF’s announcement, the cables broke, and the central platform collapsed into the dish. In October 2022 the NSF announced that it would not rebuild the telescope but would instead build an educational centre at the site.

Scientists using the Arecibo Observatory discovered the first extrasolar planets around the pulsar B1257+12 in 1992. The observatory also produced detailed radar maps of the surface of Venus and Mercury and discovered that Mercury rotated every 59 days instead of 88 days and so did not always show the same face to the Sun. American astronomers Russell Hulse and Joseph H. Taylor, Jr., used Arecibo to discover the first binary pulsar. They showed that it was losing energy through gravitational radiation at the rate predicted by physicist Albert Einstein’s theory of general relativity, and they won the Nobel Prize for Physics in 1993 for their discovery.


#1728 2023-04-10 13:17:38

- Jai Ganesh



1631) Retirement home

Details

A retirement home – sometimes called an old people's home or old age home, although old people's home can also refer to a nursing home – is a multi-residence housing facility intended for the elderly. Typically, each person or couple in the home has an apartment-style room or suite of rooms. Additional facilities are provided within the building. This can include facilities for meals, gatherings, recreation activities, and some form of health or hospital care. A place in a retirement home can be paid for on a rental basis, like an apartment, or can be bought in perpetuity on the same basis as a condominium.

A retirement home differs from a nursing home primarily in the level of medical care given. Retirement communities, unlike retirement homes, offer separate and autonomous homes for residents.

Retirement homes offer meal preparation and some personal care services. Assisted living facilities, memory care facilities and nursing homes can all be referred to as retirement homes. The cost of living in a retirement home varies from $25,000 to $100,000 per year, although it can exceed this range, according to Senior Living Near Me's senior housing guide.

In the United Kingdom, there were about 750,000 places across 25,000 retirement housing schemes in 2021 with a forecast that numbers would grow by nearly 10% over the next five years.

United States

Proper design is integral to the experience within retirement homes, especially for those experiencing dementia. Wayfinding and spatial orientation become difficult for residents with dementia, causing confusion, agitation, and a general decline in physical and mental wellbeing.

Signage

Those living with dementia often have difficulty distinguishing the relevance of information within signage. This phenomenon can be attributed to a combination of fixative behaviors and a tendency towards non-discriminatory reading. Therefore, in creating appropriate signage for retirement homes, we must first consider the who, what, when, where, and why of the design and placement of signage.

Considering the “who” of the user requires an understanding of those who interact with North American care homes. This group includes staff and visitors; however, understandable wayfinding is most important for residents experiencing dementia. This then leads to “what” kind of information should be presented. Important information for staff, visitors, and patients covers a great variety, and altogether the amount of signage required directly conflicts with the ideal of reducing distraction, overstimulation, and non-discriminatory reading for those within retirement homes. This is where the “when”, “where”, and “why” of signage must be addressed. In deciding “when” information should be presented, Tetsuya argues that it is “important that essential visual information be provided at a relatively early stage in walking routes.” Therefore, we can assume that immediately relevant information, such as the direction of available facilities, should be placed near the entrances of patient rooms or at the ends of hallways housing patient rooms. This observation also leads into “where” information should be placed and “why” it is being presented. With regard to wayfinding signage, navigation can be made as understandable as possible by avoiding distraction along the route. Addressing this, Romedi Passini suggests that “graphic wayfinding information notices along circulation routes should be clear and limited in number and other information should be placed somewhere else.” Signage not related to wayfinding can be distracting if placed nearby and can detract from the effectiveness of wayfinding signage. Instead, Passini suggests “to create little alcoves specifically designed for posting public announcements, invitations, and publicity.” These alcoves would best be placed in areas of low stimulation, as they would be better understood in a context that is not overwhelming.

In a study done by Kristen Day, it was observed that areas of high stimulation were “found to occur in elevators, corridors, nursing stations, bathing rooms, and other residents’ rooms, whereas low stimulation has been observed in activity and dining rooms”. As such, we can assume that activity and dining rooms would be the best places for these alcoves.

Architectural cues

Another relevant method of wayfinding is the presence of architectural cues within North American senior retirement homes. This method is most often considered during the design of new senior care centers; however, there are still multiple items that can easily be implemented within existing care homes as well. Architectural cues can impact residents by communicating purpose through the implied use of a setting or object, assisting in navigation without the need for cognitive mapping, and making areas more accessible and less distressing for those with decreased mobility.

We will investigate how architectural cues communicate purpose and influence the behavior of residents. In a case study by Passini, “a patient, seeing a doorbell (for night use) at the hospital, immediately decided to ring”. This led to the conclusion that “architectural elements … determine to a certain extent the behavior of less independent patients.” This is an important observation when considering the influence of architectural cues on residents, as it suggests that positive behavior can be encouraged through careful planning of rooms. The claim is further supported in a case study by Day, in which “frequency of toilet use increased dramatically when toilets were visibly accessible to residents.” Placing toilets within residents' sight lines encourages them to visit the washroom more frequently, lessening the burden on nursing staff and improving residents' health. This communication of purpose through learned behavior can also translate into more legible interior design. Distinctive furniture and flooring, such as a bookshelf in a communal living room, make the function of each space clearer and the spaces easier to tell apart, so residents can navigate them more easily. Improving environmental legibility can also be useful in assisting with navigation within a care home.

Reducing the need for complicated cognitive mapping can assist navigation, and this can be achieved in multiple ways within care centers. Visual landmarks in both architectural and interior design help differentiate spaces. Burton notes “residents reported that...landmarks (features such as clocks and plants at key sections of corridors)[were useful in wayfinding]”. Distinct landmarks can also help identify individual resident rooms. Tetsuya suggests that “doors of residents' rooms should have differentiated characteristics” so that residents can recognize their own rooms. This can be done by placing personal objects on or beside doorways, or by providing distinctive doors for each room.

Finally, accessibility is integral to designing architecture within care homes. Many members of the senior community use equipment and mobility aids, so the requirements of these items must be considered when designing a space specifically for seniors. Open and clear routes of travel benefit the user by clearly directing residents along the path and reducing the difficulty of moving with mobility aids. Similarly, creating shorter routes of travel by moving fundamental facilities such as the dining room closer to patient rooms has also been shown to reduce anxiety and distress. Moving between spaces becomes simpler: residents avoid high-stimulation areas such as elevators, and the smaller, simpler layout assists wayfinding. Each of these methods can be achieved through the use of open core spaces. These spaces integrate multiple rooms into a single open-concept space, "giving visual access and allowing a certain understanding of space without having to integrate into an ensemble that is perceived in parts, which is the most difficult aspect of cognitive mapping". Integrating more open core spaces into North American senior facilities makes these facilities more accessible and easier to navigate.

Additional Information

Old age, also called senescence, in human beings, is the final stage of the normal life span. Definitions of old age are not consistent from the standpoints of biology, demography (conditions of mortality and morbidity), employment and retirement, and sociology. For statistical and public administrative purposes, however, old age is frequently defined as 60 or 65 years of age or older.

Old age has a dual definition. It is the last stage in the life processes of an individual, and it is an age group or generation comprising a segment of the oldest members of a population. The social aspects of old age are influenced by the relationship of the physiological effects of aging and the collective experiences and shared values of that generation to the particular organization of the society in which it exists.

There is no universally accepted age that is considered old among or within societies. Often discrepancies exist as to what age a society may consider old and what members in that society of that age and older may consider old. Moreover, biologists are not in agreement about the existence of an inherent biological cause for aging. However, in most contemporary Western countries, 60 or 65 is the age of eligibility for retirement and old-age social programs, although many countries and societies regard old age as occurring anywhere from the mid-40s to the 70s.

Social programs

State institutions to aid the elderly have existed in varying degrees since the time of the ancient Roman Empire. England in 1601 enacted the Poor Law, which recognized the state’s responsibility to the aged, although programs were carried out by local church parishes. An amendment to this law in 1834 instituted workhouses for the poor and aged, and in 1925 England introduced social insurance for the aged regulated by statistical evaluations. In 1940 programs for the aged came under England’s welfare state system.

In the 1880s Otto von Bismarck in Germany introduced old-age pensions whose model was followed by most other western European countries. Today more than 100 nations have some form of social security program for the aged. The United States was one of the last countries to institute such programs. Not until the Social Security Act of 1935 was formulated to relieve hardships caused by the Great Depression were the elderly granted old-age pensions. For the most part, these state programs, while alleviating some burdens of aging, still do not bring older people to a level of income comparable to that of younger people.

Physiological effects

The physiological effects of aging differ widely among individuals. However, chronic ailments, especially aches and pains, are more prevalent than acute ailments, requiring older people to spend more time and money on medical problems than younger people. The rising cost of medical care has caused growing concern among older people and in society in general, resulting in constant reevaluation and reform of institutions and programs designed to aid the elderly with these expenses.

In ancient Rome and medieval Europe the average life span is estimated to have been between 20 and 30 years. Life expectancy today has expanded in historically unprecedented proportions, greatly increasing the numbers of people who survive over the age of 65. Therefore, the instances of medical problems associated with aging, such as certain kinds of cancer and heart disease, have increased, giving rise to greater consideration, both in research and in social programs, for accommodating this increase.

Certain aspects of sensory and perceptual skills, muscular strength, and certain kinds of memory tend to diminish with age, rendering older people unsuitable for some activities. There is, however, no conclusive evidence that intelligence deteriorates with age, but rather that it is more closely associated with education and standard of living. Sexual activity tends to decrease with age, but if an individual is healthy there is no age limit for its continuance.

Many of the myths surrounding the process of aging are being invalidated by increased studies in gerontology, but there still is not sufficient information to provide adequate conclusions.

Demographic and socioeconomic influences

In general the social status of an age group is related to its effective influence in its society, which is associated with that group’s function in productivity. In agrarian societies the elderly have a status of respectability. Their life experiences and knowledge are regarded as valuable, especially in preliterate societies where knowledge is orally transmitted. The range of activities in these societies allows the elderly to continue to be productive members of their communities.

In industrialized nations the status of the elderly has altered as the socioeconomic conditions have changed, tending to reduce the status of the elderly as a society becomes more technologically oriented. Since physical disability is less a factor in productive capability in industrialized countries, this reduction in social status is thought to have been generated by several interrelated factors: the numbers of still able-bodied older workers outstripping the number of available employment opportunities, the decline in self-employment which allows a worker to gradually decrease activity with age, and the continual introduction of new technology requiring special training and education.

Although in certain fields old age is still considered an asset, particularly in the political arena, older people are increasingly being forced into retirement before their productive years are over, causing problems in their psychological adaptations to old age. Retirement is not regarded unfavourably in all instances, but its economic limitations tend to further remove older people from the realm of influence and raise problems in the extended use of leisure time and housing. As a consequence, financial preparation for retirement has become an increased concern for individuals and society.

Familial relationships tend to be the focus of the elderly’s attention. However, as the family structure in industrialized countries has changed in the past 100 years from a unit encompassing several generations living in close proximity to self-contained nuclear families of only parents and young children, older people have become isolated from younger people and each other. Studies have shown that as a person ages he or she prefers to remain in the same locale. However, the tendency for young people in industrialized countries to be highly mobile has forced older people to decide whether to move to keep up with their families or to remain in neighbourhoods which also change, altering their familiar patterns of activity. Although most older people do live within an hour of their closest child, industrialized societies are faced with formulating programs to accommodate increasing numbers of older people who function independently of their families.

A significant factor in the social aspects of old age concerns the values and education of the generation itself. In industrialized countries especially, where changes occur more rapidly than in agrarian societies, a generation born 65 years ago may find that the dominant mores, expectations, definitions of the quality of life, and roles of older people have changed considerably by the time it reaches old age. Formal education, which usually takes place in the early years and forms collective opinions and mores, tends to enhance the difficulties in adapting to old age. However, resistance to change, which is often associated with the elderly, is being shown to be less an inability to change than a trend in older people to regard life with a tolerant attitude. Apparent passivity may actually be a choice based on experience, which has taught older people to perceive certain aspects of life as unchangeable. Adult education programs are beginning to close the generation gap; however, as each successive generation reaches old age, bringing with it its particular biases and preferences, new problems arise requiring new social accommodations.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1729 2023-04-11 14:01:39

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: Miscellany

1632) Random Number Generator/Generation

Summary:

Random Number Generator

A random number generator is a hardware device or software algorithm that generates a number that is taken from a limited or unlimited distribution and outputs it. The two main types of random number generators are pseudo random number generators and true random number generators.

Pseudo Random Number Generators

Most random number generators are software-based pseudo random number generators. Their outputs are not truly random; instead, they rely on algorithms that mimic the selection of a value to approximate true randomness. The user sets the distribution, or range from which the random number is selected (e.g. lowest to highest), and the number is presented instantly.

The values output by a pseudo random number generator are adequate for most applications, but they should not be relied on for secure cryptographic implementations. For such uses, a cryptographically secure pseudo random number generator is called for.

True Random Number Generators

A true random number generator (TRNG), also called a hardware random number generator (HRNG), draws on physical attributes such as atmospheric or thermal noise and is generally considered suitable for cryptographic use. Such tools may also compensate for measurement biases, and some rely on physical processes such as coin flipping and dice rolling. A TRNG or HRNG is useful for creating seed values.

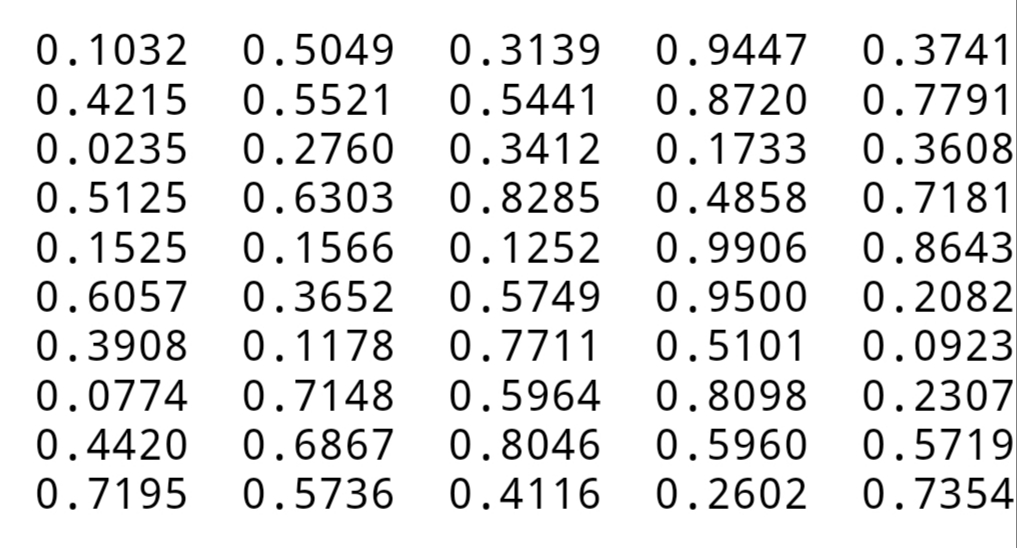

Example:

“To assure a high degree of arbitrariness in games or even non-mission-critical security, you can use a random number generator to come up with different values since these software tools greatly increase the choice while cutting out most human biases.”

Details

Random number generation is a process by which, often by means of a random number generator (RNG), a sequence of numbers or symbols is generated that cannot be predicted better than by random chance. This means that the particular outcome sequence may contain some patterns that are detectable in hindsight but cannot be foreseen. True random number generators can be hardware random-number generators (HRNGs), wherein each generated value is a function of the current value of a physical environment's attribute that changes constantly in a manner that is practically impossible to model. This is in contrast to so-called "random number generation" done by pseudorandom number generators (PRNGs), which generate numbers that only look random but are in fact predetermined; these sequences can be reproduced simply by knowing the state of the PRNG.
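The point that a PRNG's output is fixed by its state can be checked directly. This is a minimal sketch using Python's standard `random` module (whose `Random` class is a Mersenne Twister); the seed 2023 and the variable names are arbitrary choices for illustration.

```python
import random

# A Mersenne Twister PRNG from Python's standard library, seeded arbitrarily.
rng = random.Random(2023)
rng.random()  # advance the generator a little

# Capture the complete internal state of the generator.
snapshot = rng.getstate()
original = [rng.random() for _ in range(5)]

# Anyone holding the same state can reproduce the "random" sequence exactly.
clone = random.Random()
clone.setstate(snapshot)
replayed = [clone.random() for _ in range(5)]

print(original == replayed)  # True
```

This is the same property that makes a leaked or guessable state dangerous in cryptographic settings.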

Various applications of randomness have led to the development of different methods for generating random data. Some of these have existed since ancient times, including well-known examples like the rolling of dice, coin flipping, the shuffling of playing cards, the use of yarrow stalks (for divination) in the I Ching, as well as countless other techniques. Because of the mechanical nature of these techniques, generating large quantities of sufficiently random numbers (important in statistics) required much work and time. Thus, results would sometimes be collected and distributed as random number tables.

Several computational methods for pseudorandom number generation exist. All fall short of the goal of true randomness, although they may meet, with varying success, some of the statistical tests for randomness intended to measure how unpredictable their results are (that is, to what degree their patterns are discernible). This generally makes them unusable for applications such as cryptography. However, carefully designed cryptographically secure pseudorandom number generators (CSPRNGs) also exist, with special features specifically designed for use in cryptography.

Practical applications and uses

Random number generators have applications in gambling, statistical sampling, computer simulation, cryptography, completely randomized design, and other areas where producing an unpredictable result is desirable. In applications where unpredictability is the paramount feature, such as security applications, hardware generators are generally preferred over pseudorandom algorithms where feasible.

Pseudorandom number generators are very useful in developing Monte Carlo-method simulations, as debugging is facilitated by the ability to run the same sequence of random numbers again by starting from the same random seed. They are also used in cryptography – so long as the seed is secret. Sender and receiver can generate the same set of numbers automatically to use as keys.
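To illustrate the debugging benefit of a fixed seed, here is a minimal Monte Carlo sketch that estimates π from random points in the unit square. The function name `estimate_pi`, the sample count, and the seed are illustrative choices, not from the text.

```python
import random

def estimate_pi(samples: int, seed: int) -> float:
    """Monte Carlo estimate of pi from points in the unit square."""
    rng = random.Random(seed)  # fixed seed makes the run repeatable
    inside = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    # The quarter circle covers pi/4 of the unit square.
    return 4.0 * inside / samples

first = estimate_pi(100_000, seed=42)
second = estimate_pi(100_000, seed=42)
print(first == second)  # True: same seed, same sequence, same estimate
```

Changing the seed gives a different (but equally valid) estimate, so a suspicious result can be rerun exactly while investigating it.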

The generation of pseudorandom numbers is an important and common task in computer programming. While cryptography and certain numerical algorithms require a very high degree of apparent randomness, many other operations only need a modest amount of unpredictability. Some simple examples might be presenting a user with a "random quote of the day", or determining which way a computer-controlled adversary might move in a computer game. Weaker forms of randomness are used in hash algorithms and in creating amortized searching and sorting algorithms.

Some applications which appear at first sight to be suitable for randomization are in fact not quite so simple. For instance, a system that "randomly" selects music tracks for a background music system must only appear random, and may even have ways to control the selection of music: a true random system would have no restriction on the same item appearing two or three times in succession.
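A background-music selector of this kind might look like the following sketch, which picks tracks at random but never repeats the track that just played. The function `background_playlist` and its "no immediate repeat" rule are hypothetical, one possible way to make a selection merely *appear* random.

```python
import random

def background_playlist(tracks, length, rng):
    """Pick tracks "randomly" but never play the same track twice in a row.

    Assumes at least two distinct tracks.
    """
    playlist = []
    previous = None
    for _ in range(length):
        # Exclude the track that just played, then choose uniformly from the rest.
        candidates = [t for t in tracks if t != previous]
        previous = rng.choice(candidates)
        playlist.append(previous)
    return playlist

playlist = background_playlist(["A", "B", "C"], 20, random.Random(7))
print(playlist)
```

A truly random draw would occasionally produce "A, A, A"; the restriction trades some randomness for a result listeners perceive as more varied.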

"True" vs. pseudo-random numbers

There are two principal methods used to generate random numbers. The first method measures some physical phenomenon that is expected to be random and then compensates for possible biases in the measurement process. Example sources include measuring atmospheric noise, thermal noise, and other external electromagnetic and quantum phenomena. For example, cosmic background radiation or radioactive decay as measured over short timescales represent sources of natural entropy (as a measure of unpredictability or surprise of the number generation process).

The speed at which entropy can be obtained from natural sources is dependent on the underlying physical phenomena being measured. Thus, sources of naturally occurring "true" entropy are said to be blocking – they are rate-limited until enough entropy is harvested to meet the demand. On some Unix-like systems, including most Linux distributions, the pseudo device file /dev/random will block until sufficient entropy is harvested from the environment. Due to this blocking behavior, large bulk reads from /dev/random, such as filling a hard disk drive with random bits, can often be slow on systems that use this type of entropy source.

The second method uses computational algorithms that can produce long sequences of apparently random results, which are in fact completely determined by a shorter initial value, known as a seed value or key. As a result, the entire seemingly random sequence can be reproduced if the seed value is known. This type of random number generator is often called a pseudorandom number generator. It typically does not rely on sources of naturally occurring entropy, though it may be periodically seeded by natural sources. Generators of this type are non-blocking, so they are not rate-limited by an external event, making large bulk reads possible.

Some systems take a hybrid approach, providing randomness harvested from natural sources when available, and falling back to periodically re-seeded software-based cryptographically secure pseudorandom number generators (CSPRNGs). The fallback occurs when the desired read rate of randomness exceeds the ability of the natural harvesting approach to keep up with the demand. This approach avoids the rate-limited blocking behavior of random number generators based on slower and purely environmental methods.

While a pseudorandom number generator based solely on deterministic logic can never be regarded as a "true" random number source in the purest sense of the word, in practice such generators are generally sufficient even for demanding security-critical applications. Carefully designed and implemented pseudorandom number generators can be certified for security-critical cryptographic purposes, as is the case with the Yarrow algorithm and Fortuna. The former is the basis of the /dev/random source of entropy on FreeBSD, AIX, OS X, NetBSD, and others. OpenBSD uses a pseudorandom number algorithm known as arc4random.

Generation methods

Physical methods

The earliest methods for generating random numbers, such as dice, coin flipping and roulette wheels, are still used today, mainly in games and gambling as they tend to be too slow for most applications in statistics and cryptography.

A physical random number generator can be based on an essentially random atomic or subatomic physical phenomenon whose unpredictability can be traced to the laws of quantum mechanics. Sources of entropy include radioactive decay, thermal noise, shot noise, avalanche noise in Zener diodes, clock drift, the timing of actual movements of a hard disk read-write head, and radio noise. However, physical phenomena and tools used to measure them generally feature asymmetries and systematic biases that make their outcomes not uniformly random. A randomness extractor, such as a cryptographic hash function, can be used to approach a uniform distribution of bits from a non-uniformly random source, though at a lower bit rate.
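As a small illustration of extracting unbiased bits from a biased source, the sketch below uses the von Neumann extractor, a simpler technique than the cryptographic hash mentioned above, swapped in here because its effect is easy to see. It assumes the raw bits are independent with a fixed bias; the 0.8 bias and seed are arbitrary.

```python
import random

def von_neumann_extract(bits):
    """Von Neumann extractor: read bits in non-overlapping pairs.

    Equal pairs (00, 11) are discarded; for an unequal pair the first bit is
    output. If the input bits are independent with a fixed bias, 10 and 01
    are equally likely, so the output is unbiased.
    """
    out = []
    for first, second in zip(bits[0::2], bits[1::2]):
        if first != second:
            out.append(first)
    return out

# Simulated biased source: each bit is 1 with probability 0.8.
rng = random.Random(1)
raw = [1 if rng.random() < 0.8 else 0 for _ in range(100_000)]
clean = von_neumann_extract(raw)

print(sum(raw) / len(raw))      # close to 0.8 (the source bias)
print(sum(clean) / len(clean))  # close to 0.5
print(len(clean) / len(raw))    # close to 0.16: far fewer output bits
```

The shrinking output length shows the "lower bit rate" cost that any such extractor pays.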

The appearance of wideband photonic entropy sources, such as optical chaos and amplified spontaneous emission noise, has greatly aided the development of physical random number generators. Among them, optical chaos has high potential for physically producing high-speed random numbers due to its high bandwidth and large amplitude. A prototype of a high-speed, real-time physical random bit generator based on a chaotic laser was built in 2013.

Various imaginative ways of collecting this entropic information have been devised. One technique is to run a hash function against a frame of a video stream from an unpredictable source. Lavarand used this technique with images of a number of lava lamps. HotBits measures radioactive decay with Geiger–Müller tubes, while Random.org uses variations in the amplitude of atmospheric noise recorded with a normal radio.

Another common entropy source is the behavior of human users of the system. While people are not considered good randomness generators upon request, they generate random behavior quite well in the context of playing mixed strategy games. Some security-related computer software requires the user to make a lengthy series of mouse movements or keyboard inputs to create sufficient entropy needed to generate random keys or to initialize pseudorandom number generators.

Computational methods

Most computer-generated random numbers come from PRNGs, algorithms that can automatically create long runs of numbers with good random properties, although the sequence eventually repeats (or the memory usage grows without bound). These random numbers are fine in many situations but are not as random as numbers generated from electromagnetic atmospheric noise used as a source of entropy. The series of values generated by such algorithms is generally determined by a fixed number called a seed. One of the most common PRNGs is the linear congruential generator, which uses the recurrence

$$X_{n+1} = (a X_n + b) \bmod m$$

to generate numbers, where a, b and m are large integers, X_{n+1} is the next number in the series, and the seed is the starting value X_0.

The maximum number of distinct values the formula can produce, and hence its longest possible period, is the modulus, m. The recurrence relation can be extended to matrices to obtain much longer periods and better statistical properties. To avoid certain non-random properties of a single linear congruential generator, several such random number generators with slightly different values of the multiplier coefficient, a, can be used in parallel, with a "master" random number generator that selects from among the several different generators.
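A minimal sketch of the linear congruential recurrence $X_{n+1} = (a X_n + b) \bmod m$, using deliberately tiny parameters (a = 5, b = 3, m = 16, chosen for illustration only) so that the period bound of m is visible. Real generators use much larger a, b and m.

```python
from itertools import islice

def lcg(seed: int, a: int, b: int, m: int):
    """Yield X_1, X_2, ... from X_{n+1} = (a * X_n + b) mod m, with X_0 = seed."""
    x = seed
    while True:
        x = (a * x + b) % m
        yield x

# Toy parameters chosen (illustratively) to reach the full period m = 16.
toy = list(islice(lcg(seed=0, a=5, b=3, m=16), 20))
print(toy[:5])               # [3, 2, 13, 4, 7]
print(len(set(toy[:16])))    # 16: every residue appears once per period
print(toy[16:] == toy[:4])   # True: the sequence then repeats
```

These particular parameters reach the maximum period because b is odd and a - 1 is divisible by 4; a poorer choice of a or b would cycle through fewer than m values.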

A simple pen-and-paper method for generating random numbers is the so-called middle-square method suggested by John von Neumann. While simple to implement, its output is of poor quality: it has a very short period and severe weaknesses, such as the output sequence almost always converging to zero. A recent innovation is to combine the middle square with a Weyl sequence; this method produces high-quality output over a long period.
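The following sketch shows both ideas. `middle_square` follows von Neumann's description (square, keep the middle digits). `msws` is our reading of the middle-square Weyl sequence as published by Bernard Widynski, emulating 64-bit unsigned arithmetic in Python. The odd constant `s` is an assumption taken from his example, so treat the exact value as illustrative.

```python
MASK64 = (1 << 64) - 1

def middle_square(seed: int, digits: int = 4):
    """Von Neumann's middle-square method for `digits`-digit numbers (digits even)."""
    x = seed
    while True:
        # Square, left-pad to 2*digits, keep the middle `digits` digits.
        squared = str(x * x).zfill(2 * digits)
        start = digits // 2
        x = int(squared[start:start + digits])
        yield x

def msws(s: int = 0xB5AD4ECEDA1CE2A9):
    """Middle-square Weyl sequence sketch (64-bit state, 32-bit outputs).

    `s` must be odd; the default value is an assumed example constant.
    """
    x = w = 0
    while True:
        w = (w + s) & MASK64                   # Weyl sequence: add an odd constant
        x = (x * x + w) & MASK64               # square and add the Weyl term
        x = ((x >> 32) | (x << 32)) & MASK64   # swap halves to bring the middle down
        yield x & 0xFFFFFFFF                   # output the low 32 bits

print(next(middle_square(1234)))  # 5227 (1234**2 = 01522756)
print(next(middle_square(2500)))  # 2500: a fixed point, so the sequence is stuck
print(next(msws()) == 0xB5AD4ECE) # True on the first step, since x starts at 0
```

The fixed point at 2500 is one concrete instance of the short periods described above; the Weyl term keeps MSWS from falling into such traps.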

Most computer programming languages include functions or library routines that provide random number generators. They are often designed to provide a random byte or word, or a floating point number uniformly distributed between 0 and 1.

The quality (i.e. randomness) of such library functions varies widely, from completely predictable output to cryptographically secure. The default random number generator in many languages, including Python, Ruby, R, IDL and PHP, is based on the Mersenne Twister algorithm and is not sufficient for cryptographic purposes, as is explicitly stated in the language documentation. Such library functions often have poor statistical properties, and some will repeat patterns after only tens of thousands of trials. They are often initialized using a computer's real-time clock as the seed, since such a clock typically has 64 bits and measures in nanoseconds, far beyond a person's timing precision. These functions may provide enough randomness for certain tasks (for example video games) but are unsuitable where high-quality randomness is required, such as in cryptography applications, statistics or numerical analysis.

Much higher quality random number sources are available on most operating systems; for example /dev/random on various BSD flavors, Linux, Mac OS X, IRIX, and Solaris, or CryptGenRandom for Microsoft Windows. Most programming languages, including those mentioned above, provide a means to access these higher quality sources.
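In Python, for example, the contrast looks like this: the default `random` functions use the Mersenne Twister, while `os.urandom`, the `secrets` module and `random.SystemRandom` draw on the operating system's randomness source. The variable names and sizes below are illustrative.

```python
import os
import random
import secrets

# Default generator: Mersenne Twister. Fine for games and simulations,
# but not for passwords, tokens or keys.
dice_roll = random.randint(1, 6)

# OS-backed sources (e.g. /dev/urandom on Linux and other Unix-like systems).
raw_bytes = os.urandom(16)                     # 16 bytes straight from the OS
session_token = secrets.token_hex(16)          # 16 random bytes as 32 hex characters
key_bytes = secrets.token_bytes(32)            # 256 bits, e.g. for a symmetric key
secure_pick = secrets.choice(["rock", "paper", "scissors"])

# Same interface as the random module, but backed by os.urandom.
sys_rng = random.SystemRandom()
secure_float = sys_rng.random()
```

Note that `SystemRandom` cannot be seeded, so it gives up the reproducibility that makes the default generator convenient for simulations.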

By humans

Random number generation may also be performed by humans, in the form of collecting various inputs from end users and using them as a randomization source. However, most studies find that human subjects have some degree of non-randomness when attempting to produce a random sequence of e.g. digits or letters. They may alternate too much between choices when compared to a good random generator; thus, this approach is not widely used.
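One simple way to see the "alternating too much" tendency is to measure how often consecutive choices differ; for independent fair coin flips this rate should be near 0.5. The function below and the "HTHTTHTHHTHT" string are made up for illustration, not data from any study.

```python
import random

def alternation_rate(seq):
    """Fraction of adjacent pairs whose values differ."""
    changes = sum(1 for a, b in zip(seq, seq[1:]) if a != b)
    return changes / (len(seq) - 1)

# A made-up, human-looking "random" sequence of coin flips.
print(round(alternation_rate("HTHTTHTHHTHT"), 3))  # 0.818

# Flips from a PRNG, for comparison: the rate should be close to 0.5.
rng = random.Random(5)
flips = [rng.choice("HT") for _ in range(100_000)]
print(alternation_rate(flips))
```

A rate well above 0.5 over a long sequence is one sign of the over-alternation the studies describe.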

Post-processing and statistical checks

Even given a source of plausible random numbers (perhaps from a quantum mechanically based hardware generator), obtaining numbers which are completely unbiased takes care. In addition, behavior of these generators often changes with temperature, power supply voltage, the age of the device, or other outside interference. And a software bug in a pseudorandom number routine, or a hardware bug in the hardware it runs on, may be similarly difficult to detect.

Generated random numbers are sometimes subjected to statistical tests before use to ensure that the underlying source is still working, and then post-processed to improve their statistical properties. An example would be the TRNG9803 hardware random number generator, which uses an entropy measurement as a hardware test, and then post-processes the random sequence with a shift register stream cipher. It is generally hard to use statistical tests to validate the generated random numbers. Wang and Nicol proposed a distance-based statistical testing technique that is used to identify the weaknesses of several random generators. Li and Wang proposed a method of testing random numbers based on laser chaotic entropy sources using Brownian motion properties.
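A very simple health check of this kind is a frequency (monobit) test, modeled on the first test in NIST SP 800-22: it asks whether the numbers of 0s and 1s are plausibly balanced. This sketch is far weaker than a full test suite, and the 0.01 threshold is a common convention rather than a requirement.

```python
import math

def monobit_p_value(bits):
    """Frequency (monobit) test in the style of NIST SP 800-22.

    Maps bits to +1/-1, sums them, and returns a p-value; small values
    (commonly below 0.01) suggest the 0/1 balance is off.
    """
    n = len(bits)
    s = sum(1 if b else -1 for b in bits)
    s_obs = abs(s) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))

stuck = [1] * 1000        # simulated failure: source stuck at 1
balanced = [0, 1] * 500   # perfectly balanced (though obviously not random)
print(monobit_p_value(stuck) < 0.01)  # True: flagged
print(monobit_p_value(balanced))      # 1.0: passes this particular test
```

The balanced-but-periodic input passing shows why a single statistical test cannot validate a generator, echoing the difficulty noted above.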

Other considerations:

Uniform distributions

Most random number generators natively work with integers or individual bits, so an extra step is required to arrive at the "canonical" uniform distribution between 0 and 1. The implementation is not as trivial as dividing the integer by its maximum possible value. Specifically:

* The integer used in the transformation must provide enough bits for the intended precision.

* The nature of floating-point math itself means there exists more precision the closer the number is to zero. This extra precision is usually not used due to the sheer number of bits required.

* Rounding error in division may bias the result. At worst, a supposedly excluded bound may be drawn contrary to expectations based on real-number math.

The mainstream algorithm, used by OpenJDK, Rust, and NumPy, is described in a proposal for C++'s STL. It does not use the extra precision and suffers from bias only in the last bit due to round-to-even. Other numeric concerns are warranted when shifting this "canonical" uniform distribution to a different range. A proposed method for the Swift programming language claims to use the full precision everywhere.
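The sketch below shows the common 53-bit approach of this kind: keep the top 53 bits of a 64-bit integer (the precision of a double's significand) and scale by 2^-53. It also shows how naive division can return the supposedly excluded bound 1.0. Both function names are hypothetical.

```python
def to_unit_interval(x: int) -> float:
    """Map a 64-bit unsigned integer to a double in [0, 1).

    Keeps the top 53 bits and scales by 2**-53, so every result is
    exactly representable and 1.0 is never produced.
    """
    return (x >> 11) * 2.0 ** -53

def naive_unit(x: int) -> float:
    """Naive division: rounding can push the result up to the excluded bound 1.0."""
    return x / 2 ** 64

largest = 2 ** 64 - 1
print(to_unit_interval(largest))  # 0.9999999999999999
print(naive_unit(largest))        # 1.0
```

The naive version fails because 1 - 2^-64 is not representable as a double and rounds to the nearest double, which is 1.0.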

Uniformly distributed integers are commonly used in algorithms such as the Fisher–Yates shuffle. Again, a naive implementation may induce a modulo bias into the result, so more involved algorithms must be used. A method that nearly never performs division was described in 2018 by Daniel Lemire, with the current state-of-the-art being the arithmetic encoding-inspired 2021 "optimal algorithm" by Stephen Canon of Apple Inc.[22]
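The sketch below first counts the modulo bias for a tiny 3-bit source. It then implements our reading of Lemire's 2018 "nearly divisionless" method for a uniform integer in [0, s) from 32-bit draws, and uses it in a Fisher–Yates shuffle. The 32-bit source is a seeded Python PRNG standing in for any generator; `bounded`, `fisher_yates` and `next_u32` are hypothetical names.

```python
import random

MASK32 = 0xFFFFFFFF

# Modulo bias in miniature: a 3-bit source (0..7) reduced mod 3.
counts = [sum(1 for x in range(8) if x % 3 == r) for r in range(3)]
print(counts)  # [3, 3, 2]: 0 and 1 are more likely than 2

def bounded(next_u32, s: int) -> int:
    """Uniform integer in [0, s) from 32-bit draws (Lemire's method, 1 <= s <= 2**32)."""
    m = next_u32() * s
    low = m & MASK32
    if low < s:
        threshold = (2 ** 32 - s) % s   # 2**32 mod s: size of the rejected zone
        while low < threshold:
            m = next_u32() * s
            low = m & MASK32
    return m >> 32                      # the high 32 bits of the product

def fisher_yates(items, next_u32):
    """In-place Fisher–Yates shuffle using unbiased bounded integers."""
    for i in range(len(items) - 1, 0, -1):
        j = bounded(next_u32, i + 1)    # 0 <= j <= i
        items[i], items[j] = items[j], items[i]
    return items

rng = random.Random(2018)

def next_u32() -> int:
    """32-bit draws from a seeded PRNG (stand-in for any 32-bit source)."""
    return rng.getrandbits(32)

deck = fisher_yates(list(range(10)), next_u32)
print(deck)
```

The division (the `%` operation) runs only when the low half of the product falls below s, which is rare when s is small relative to 2^32.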

Most 0 to 1 RNGs include 0 but exclude 1, while others include or exclude both.

Other distributions

Given a source of uniform random numbers, there are a couple of methods to create a new random source that corresponds to a probability density function. One method, called the inversion method, involves integrating up to an area greater than or equal to the random number (which should be generated between 0 and 1 for proper distributions). A second method, called the acceptance-rejection method, involves choosing an x and y value and testing whether the function of x is greater than the y value. If it is, the x value is accepted. Otherwise, the x value is rejected and the algorithm tries again.
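Both methods can be sketched briefly. For inversion, the exponential distribution is used because its cumulative distribution F(x) = 1 - exp(-λx) can be inverted in closed form. For acceptance-rejection, the density f(x) = 2x on [0, 1] (bounded above by 2) is used. Both target distributions are illustrative choices.

```python
import math
import random

rng = random.Random(12345)

def exponential_by_inversion(rate: float) -> float:
    """Inversion: solve F(x) = u for the exponential CDF F(x) = 1 - exp(-rate * x)."""
    u = rng.random()                      # u in [0, 1)
    return -math.log(1.0 - u) / rate      # 1 - u is in (0, 1], so log is defined

def triangular_by_rejection() -> float:
    """Acceptance-rejection for the density f(x) = 2x on [0, 1], bounded above by 2."""
    while True:
        x = rng.random()                  # candidate x
        y = rng.uniform(0.0, 2.0)         # random height under the bound
        if 2.0 * x > y:                   # accept if f(x) exceeds y
            return x

exp_samples = [exponential_by_inversion(2.0) for _ in range(100_000)]
tri_samples = [triangular_by_rejection() for _ in range(100_000)]
print(sum(exp_samples) / len(exp_samples))  # near 1/rate = 0.5
print(sum(tri_samples) / len(tri_samples))  # near 2/3
```

Rejection wastes draws (here about half of the candidates are rejected), but it works even when the cumulative distribution has no convenient inverse.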

As a further example, to generate a pair of statistically independent standard normally distributed random numbers (x, y), one may first generate the polar coordinates (r, θ), where $r^2 \sim \chi^2_2$ and $\theta \sim \mathrm{UNIFORM}(0, 2\pi)$, and then set x = r cos θ and y = r sin θ (see Box–Muller transform).
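A minimal Box–Muller sketch: a χ² variable with 2 degrees of freedom is exponential with mean 2, so it can be drawn by inversion as r² = -2 ln u₁, and the angle is θ = 2πu₂. The seed and sample count are arbitrary.

```python
import math
import random

def box_muller(rng: random.Random):
    """Return two independent standard normal samples from two uniforms."""
    u1 = 1.0 - rng.random()              # in (0, 1], avoids log(0)
    u2 = rng.random()
    r = math.sqrt(-2.0 * math.log(u1))   # r**2 is chi-squared with 2 degrees of freedom
    theta = 2.0 * math.pi * u2           # angle uniform on [0, 2*pi)
    return r * math.cos(theta), r * math.sin(theta)

rng = random.Random(2024)
samples = [z for _ in range(50_000) for z in box_muller(rng)]
mean = sum(samples) / len(samples)
variance = sum((z - mean) ** 2 for z in samples) / len(samples)
print(mean, variance)  # close to 0 and 1
```

Every pair of uniforms yields a pair of normals, so no draws are rejected, at the cost of a logarithm, a square root and two trigonometric calls.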

Whitening

The outputs of multiple independent RNGs can be combined (for example, using a bit-wise XOR operation) to provide a combined RNG at least as good as the best RNG used. This is referred to as software whitening.
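A minimal sketch of combining two byte streams with XOR. The "at least as good as the best" property assumes the sources are independent; XORing a stream with a correlated or identical one can destroy randomness entirely. `xor_combine` is a hypothetical helper.

```python
import os
import random

def xor_combine(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length outputs from independent generators."""
    if len(a) != len(b):
        raise ValueError("inputs must have equal length")
    return bytes(x ^ y for x, y in zip(a, b))

prng = random.Random(99)
prng_bytes = bytes(prng.getrandbits(8) for _ in range(32))  # deterministic PRNG output
os_bytes = os.urandom(32)                                   # operating-system source
combined = xor_combine(prng_bytes, os_bytes)
print(combined.hex())
```

Even if the seeded PRNG were fully known to an attacker, the combined bytes stay as unpredictable as the operating-system bytes, as long as the two sources are independent.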

Computational and hardware random number generators are sometimes combined to reflect the benefits of both kinds. Computational random number generators can typically generate pseudorandom numbers much faster than physical generators, while physical generators can generate "true randomness."

Low-discrepancy sequences as an alternative

Some computations making use of a random number generator can be summarized as the computation of a total or average value, such as the computation of integrals by the Monte Carlo method. For such problems, it may be possible to find a more accurate solution by the use of so-called low-discrepancy sequences, also called quasirandom numbers. Such sequences have a definite pattern that fills in gaps evenly, qualitatively speaking; a truly random sequence may, and usually does, leave larger gaps.
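As an illustration, the sketch below uses the van der Corput sequence (a basic one-dimensional low-discrepancy sequence, chosen here for simplicity) to estimate the integral of x² over [0, 1], whose exact value is 1/3, and compares it with plain Monte Carlo using the same number of points.

```python
import random

def van_der_corput(i: int, base: int = 2) -> float:
    """i-th van der Corput point: mirror the base-`base` digits of i about the radix point."""
    result, scale = 0.0, 1.0 / base
    while i > 0:
        i, digit = divmod(i, base)
        result += digit * scale
        scale /= base
    return result

def f(x: float) -> float:
    return x * x  # integral over [0, 1] is exactly 1/3

n = 4096
quasi = sum(f(van_der_corput(i)) for i in range(1, n + 1)) / n
rng = random.Random(3)
pseudo = sum(f(rng.random()) for _ in range(n)) / n

print([van_der_corput(i) for i in range(1, 5)])  # [0.5, 0.25, 0.75, 0.125]
print(abs(quasi - 1 / 3), abs(pseudo - 1 / 3))   # quasirandom error is typically far smaller
```

The first points 0.5, 0.25, 0.75, 0.125 show the "fill in the gaps" pattern: each new point lands in the largest remaining hole rather than wherever chance puts it.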

Activities and demonstrations

The following sites make available random number samples:

* The SOCR resource pages contain a number of hands-on interactive activities and demonstrations of random number generation using Java applets.

* The Quantum Optics Group at the ANU generates random numbers sourced from the quantum vacuum. Samples of random numbers are available at their quantum random number generator research page.

* Random.org makes available random numbers that are sourced from the randomness of atmospheric noise.

* The Quantum Random Bit Generator Service at the Ruđer Bošković Institute harvests randomness from the quantum process of photonic emission in semiconductors. They supply a variety of ways of fetching the data, including libraries for several programming languages.

* The group at the Taiyuan University of Technology generates random numbers sourced from a chaotic laser. Samples of random numbers are available at their Physical Random Number Generator Service.

Backdoors

Since much cryptography depends on a cryptographically secure random number generator for key and cryptographic nonce generation, a random number generator that can be made predictable can be used as a backdoor by an attacker to break the encryption.

The NSA is reported to have inserted a backdoor into the NIST-certified cryptographically secure pseudorandom number generator Dual_EC_DRBG. If, for example, an SSL connection is created using this random number generator, then according to Matthew Green it would allow the NSA to determine the state of the random number generator, and thereby eventually be able to read all data sent over the SSL connection. Even though it was apparent that Dual_EC_DRBG was a very poor and possibly backdoored pseudorandom number generator long before the NSA backdoor was confirmed in 2013, it had seen significant usage in practice until 2013, for example by the prominent security company RSA Security. There have subsequently been accusations that RSA Security knowingly inserted an NSA backdoor into its products, possibly as part of the Bullrun program. RSA has denied knowingly inserting a backdoor into its products.

It has also been theorized that hardware RNGs could be secretly modified to have less entropy than stated, which would make encryption using the hardware RNG susceptible to attack. One published method works by modifying the dopant mask of the chip, a change that would be undetectable by optical reverse-engineering. For example, for random number generation in Linux, it is seen as unacceptable to use Intel's RDRAND hardware RNG without mixing its output with other sources of entropy to counteract any backdoors in the hardware RNG, especially after the revelation of the NSA Bullrun program.
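The idea of mixing can be sketched by hashing the hardware output together with independent sources, so that a predictable hardware RNG alone does not make the result predictable. This is only an illustration of the principle, not the Linux kernel's actual mixing algorithm: `read_hardware_rng` is a hypothetical stand-in for RDRAND (here fully "backdoored", returning zeros), and SHA-256 from `hashlib` is our choice of mixing function.

```python
import hashlib
import os
import time


def read_hardware_rng(n: int) -> bytes:
    """Hypothetical stand-in for a hardware RNG such as RDRAND.
    In this sketch it is completely predictable: it always returns zeros."""
    return bytes(n)


def mixed_random_bytes(n: int = 32) -> bytes:
    """Hash the hardware output together with other entropy sources (at most 32 bytes)."""
    if n > 32:
        raise ValueError("this sketch returns at most 32 bytes")
    h = hashlib.sha256()
    h.update(read_hardware_rng(32))
    h.update(os.urandom(32))                                 # operating-system random source
    h.update(time.perf_counter_ns().to_bytes(8, "little"))  # timing jitter, weak on its own
    return h.digest()[:n]


if __name__ == "__main__":
    print(mixed_random_bytes().hex())  # differs on every run despite the all-zero "hardware" input
```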

In 2010, a U.S. lottery draw was rigged by the information security director of the Multi-State Lottery Association (MUSL), who surreptitiously installed backdoor malware on the MUSL's secure RNG computer during routine maintenance. Through these hacks he won a total of $16,500,000 by correctly predicting the numbers a few times a year.

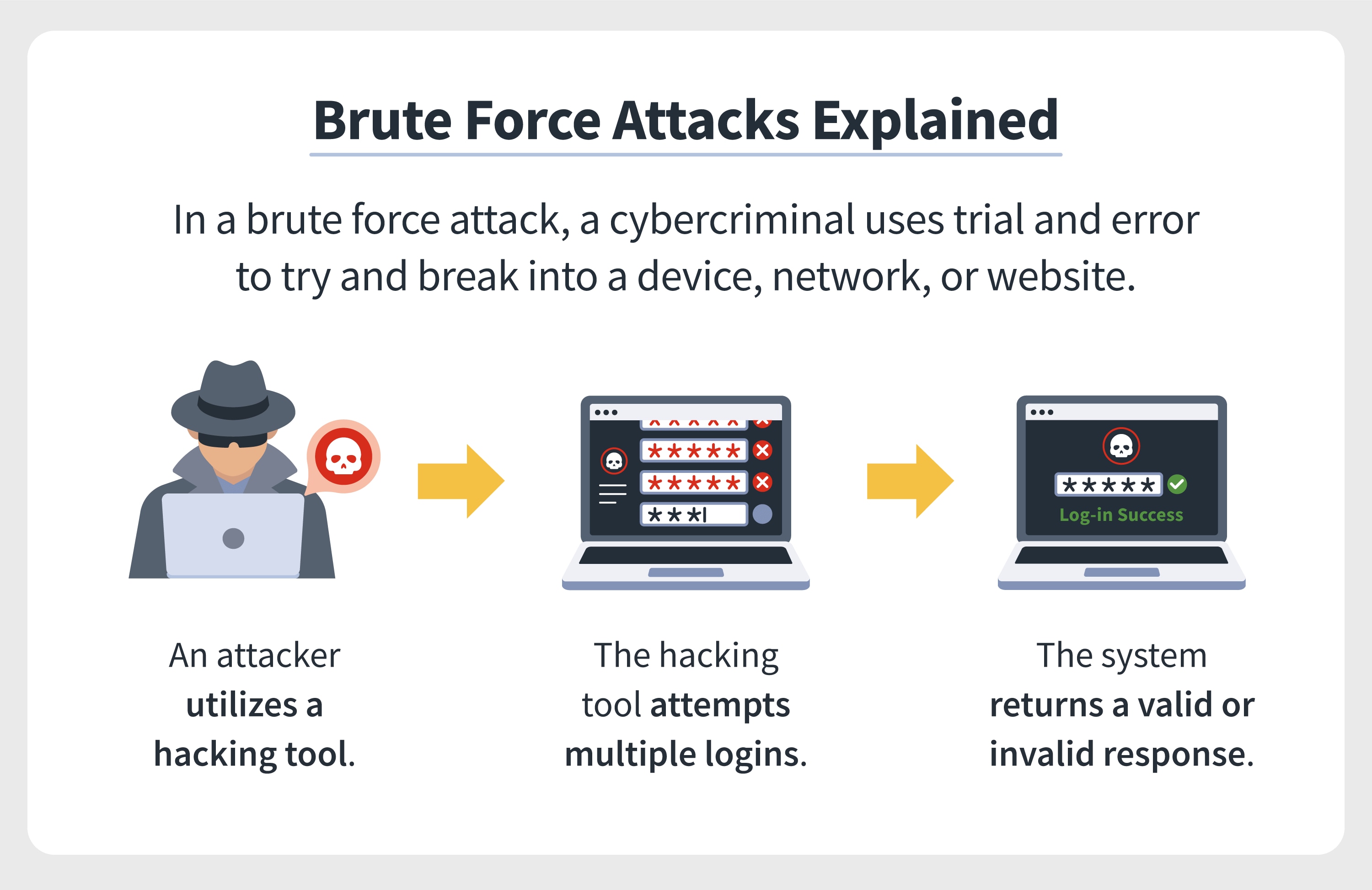

Address space layout randomization (ASLR), a mitigation against rowhammer and related attacks on the physical hardware of memory chips, was found by VUSec in early 2017 to be inadequate. The random number algorithm, if based on a shift register implemented in hardware, is predictable at sufficiently large values of p and can be reverse-engineered with enough processing power (a brute-force attack). This also indirectly means that malware using this method can run on both GPUs and CPUs if coded to do so, even using the GPU to break ASLR on the CPU itself.
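Why a shift-register generator is predictable can be seen with a textbook 16-bit Fibonacci linear-feedback shift register (LFSR). This sketch does not model any real ASLR hardware: the register width, the tap positions (16, 14, 13, 11) and the assumption that the attacker knows the taps are ours. With this construction the first 16 output bits are exactly the initial state, so observing them lets an attacker clone the generator and predict every later bit. (If the taps were unknown, the Berlekamp–Massey algorithm can recover them from a short run of output.)

```python
def lfsr16_step(state: int) -> tuple[int, int]:
    """One step of a 16-bit Fibonacci LFSR with taps 16, 14, 13, 11.
    Returns (output_bit, next_state)."""
    out = state & 1
    feedback = (state ^ (state >> 2) ^ (state >> 3) ^ (state >> 5)) & 1
    return out, (state >> 1) | (feedback << 15)


def lfsr16_bits(state: int, count: int) -> list[int]:
    """Generate count output bits starting from the given state."""
    bits = []
    for _ in range(count):
        bit, state = lfsr16_step(state)
        bits.append(bit)
    return bits


def recover_state(observed: list[int]) -> int:
    """The first 16 output bits are the initial state bits, least significant first."""
    return sum(bit << i for i, bit in enumerate(observed[:16]))


if __name__ == "__main__":
    secret_seed = 0xACE1
    stream = lfsr16_bits(secret_seed, 64)
    guess = recover_state(stream)
    print(hex(guess), lfsr16_bits(guess, 64) == stream)  # prints: 0xace1 True
```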



#1730 2023-04-12 13:50:55

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: Miscellany

1633) Sedative

Gist

A drug or medicine that makes you feel calm or want to sleep.

Summary

Sedatives, or central nervous system depressants, are a group of drugs that slow brain activity. People use these drugs to help them calm down, feel more relaxed, and get better sleep.

There has been a recent increase in sedative prescriptions. Doctors prescribe sedatives to treat conditions such as:

* anxiety disorders

* sleep disorders

* seizures

* tension

* panic disorders

* alcohol withdrawal syndrome

Sedatives are commonly misused. Misuse or prolonged use of sedatives may lead to dependence and, eventually, withdrawal symptoms.

This article examines the different types of sedatives available and their possible uses. It also looks at the potential risks associated with using them and some alternative options.

Sedatives have numerous clinical uses. For example, they can induce sedation before surgical procedures, and this can range from mild sedation to general anesthesia.

Doctors also give sedatives and analgesics to individuals to reduce anxiety and provide pain relief before and after procedures.

Obstetric anesthesiologists may also give sedatives to people experiencing distress or restlessness during labor.

Because of their ability to relieve physical stress and anxiety and promote relaxation, doctors may also prescribe sedatives to people with insomnia, anxiety disorders, and muscle spasms.

People with bipolar disorder, post-traumatic stress disorder, and seizures may also benefit from prescription sedatives.

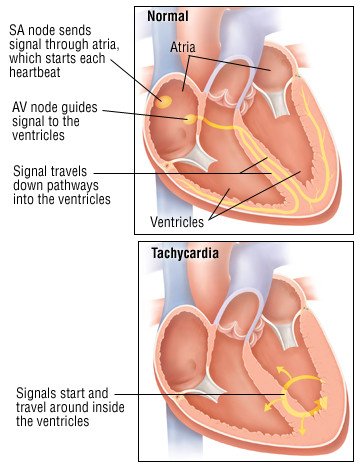

How sedatives affect the body

Sedatives act by increasing the activity of the brain chemical gamma-aminobutyric acid (GABA). This can slow down brain activity in general.

The inhibition of brain activity causes a person to become more relaxed, drowsy, and calm. Sedatives also allow GABA to have a stronger inhibitory effect on the brain.

Details

A sedative or tranquilliser is a substance that induces sedation by reducing irritability or excitement. Sedatives are CNS (central nervous system) depressants that slow down brain activity. Various kinds of sedatives can be distinguished, but the majority of them affect the neurotransmitter gamma-aminobutyric acid (GABA). Although each sedative acts in its own way, most produce relaxing effects by increasing GABA activity.

This group is related to hypnotics. The term sedative describes drugs that serve to calm or relieve anxiety, whereas the term hypnotic describes drugs whose main purpose is to initiate, sustain, or lengthen sleep. Because these two functions frequently overlap, and because drugs in this class generally produce dose-dependent effects (ranging from anxiolysis to loss of consciousness), they are often referred to collectively as sedative-hypnotic drugs.

Sedatives can be used to produce an overly calming effect (alcohol being the most common sedating drug). In the event of an overdose, or if combined with another sedative, many of these drugs can cause deep unconsciousness and even death.

Terminology

There is some overlap between the terms "sedative" and "hypnotic".

Advances in pharmacology have permitted more specific targeting of receptors, and greater selectivity of agents, which necessitates greater precision when describing these agents and their effects:

Anxiolytic refers specifically to the effect upon anxiety. (However, some benzodiazepines can be all three: sedatives, hypnotics, and anxiolytics.)

Tranquilizer can refer to anxiolytics or antipsychotics.

Soporific and sleeping pill are near-synonyms for hypnotics.

The term "chemical cosh"

The term "chemical cosh" (a cosh is a club) is sometimes used popularly for a strong sedative, particularly for:

* widespread dispensation of antipsychotic drugs in residential care to make people with dementia easier to manage.

* use of methylphenidate to calm children with attention deficit hyperactivity disorder, though paradoxically this drug is known to be a stimulant.

Therapeutic use

Doctors and veterinarians often administer sedatives to patients in order to dull the patient's anxiety related to painful or anxiety-provoking procedures. Although sedatives do not relieve pain in themselves, they can be a useful adjunct to analgesics in preparing patients for surgery, and are commonly given to patients before they are anaesthetized, or before other highly uncomfortable and invasive procedures like cardiac catheterization, colonoscopy or MRI.

Risks

Sedative dependence

Some sedatives can cause psychological and physical dependence when taken regularly over a period of time, even at therapeutic doses. Dependent users may experience withdrawal symptoms ranging from restlessness and insomnia to convulsions and death. Users who become psychologically dependent feel as if they need the drug to function, although physical dependence does not necessarily occur, particularly with a short course of use. In both types of dependence, finding and using the sedative becomes the focus of life. Both physical and psychological dependence can be treated with therapy.

Misuse

Many sedatives can be misused, but barbiturates and benzodiazepines are responsible for most of the problems with sedative use due to their widespread recreational or non-medical use. People who have difficulty dealing with stress, anxiety or sleeplessness may overuse or become dependent on sedatives. Some heroin users may take them either to supplement their drug or to substitute for it. Stimulant users may take sedatives to calm excessive jitteriness. Others take sedatives recreationally to relax and forget their worries. Barbiturate overdose is a factor in nearly one-third of all reported drug-related deaths. These include suicides and accidental drug poisonings. Accidental deaths sometimes occur when a drowsy, confused user repeats doses, or when sedatives are taken with alcohol.

A study from the United States found that in 2011, sedatives and hypnotics were a leading source of adverse drug events (ADEs) seen in the hospital setting: Approximately 2.8% of all ADEs present on admission and 4.4% of ADEs that originated during a hospital stay were caused by a sedative or hypnotic drug. A second study noted that a total of 70,982 sedative exposures were reported to U.S. poison control centers in 1998, of which 2310 (3.2%) resulted in major toxicity and 89 (0.1%) resulted in death. About half of all the people admitted to emergency rooms in the U.S. as a result of nonmedical use of sedatives have a legitimate prescription for the drug, but have taken an excessive dose or combined it with alcohol or other drugs.

There are also serious paradoxical reactions that may occur in conjunction with the use of sedatives that lead to unexpected results in some individuals. Malcolm Lader at the Institute of Psychiatry in London estimates the incidence of these adverse reactions at about 5%, even in short-term use of the drugs. The paradoxical reactions may consist of depression, with or without suicidal tendencies, phobias, aggressiveness, violent behavior and symptoms sometimes misdiagnosed as psychosis.

Dangers of combining sedatives and alcohol

Sedatives and alcohol are sometimes combined recreationally or carelessly. Since alcohol is a strong depressant that slows brain function and depresses respiration, the two substances compound each other's actions and this combination can prove fatal.

Worsening of psychiatric symptoms

Long-term use of benzodiazepines may affect the brain in a similar way to alcohol, and benzodiazepines are also implicated in depression, anxiety, posttraumatic stress disorder (PTSD), mania, psychosis, sleep disorders, sexual dysfunction, delirium, and neurocognitive disorders (including benzodiazepine-induced persisting dementia, which persists even after the medications are stopped). As with alcohol, the effects of benzodiazepines on neurochemistry, such as decreased levels of serotonin and norepinephrine, are believed to be responsible for their effects on mood and anxiety. Additionally, benzodiazepines can indirectly cause or worsen other psychiatric symptoms (e.g., mood, anxiety, psychosis, irritability) by worsening sleep (i.e., benzodiazepine-induced sleep disorder). Like alcohol, benzodiazepines are commonly used to treat insomnia in the short term (both prescribed and self-medicated), but they worsen sleep in the long term. While benzodiazepines can put people to sleep, they disrupt sleep architecture: decreasing sleep time, delaying time to REM (rapid eye movement) sleep, and decreasing deep slow-wave sleep (the most restorative part of sleep for both energy and mood).

Dementia

Sedatives and hypnotics should be avoided in people with dementia, according to the medication appropriateness tool for co‐morbid health conditions in dementia criteria. The use of these medications can further impede cognitive function for people with dementia, who are also more sensitive to side effects of medications.

Amnesia

Sedatives can sometimes leave the patient with long-term or short-term amnesia. Lorazepam is one such pharmacological agent that can cause anterograde amnesia. Intensive care unit patients who receive higher doses over longer periods, typically via IV drip, are more likely to experience such side effects. Additionally, prolonged use of tranquilizers increases the risk of obsessive-compulsive disorder: the person becomes unsure whether they have performed a scheduled activity, and may repeatedly perform the same task in an attempt to resolve persistent doubts. Difficulty remembering previously known names can also make the memory loss apparent.

Disinhibition and crime

Sedatives have been reported to be used as date rape drugs (also called a Mickey), administered to unsuspecting patrons in bars or guests at parties to reduce the intended victims' defenses. The most common is alcohol, but GHB, flunitrazepam (Rohypnol) and, to a lesser extent, temazepam (Restoril) and midazolam (Versed) are also used. These drugs are also used for robbing people.