Math Is Fun Forum

You are not logged in.

- Topics: Active | Unanswered

#2426 2025-01-18 17:10:03

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,387

Re: Miscellany

2326) Formula/Formulae

Gist

Other form: formulas.

A formula is generally a fixed pattern that is used to achieve consistent results. It might be made up of words, numbers, or ideas that work together to define a procedure to be followed for the desired outcome.

Formulas, the patterns we follow in life, are used everywhere. In math or science, a formula might express a numeric or chemical equation; in cooking, a recipe is a formula. Baby formula is made up of the nutrients necessary for maintaining healthy growth, and the right formula for a fuel mixture is critical for a racing car's best performance. Everyone has their favorite formula for success. J. Paul Getty once gave his as "rise early, work hard, strike oil."

Summary

A chemical formula is a way of presenting information about the chemical proportions of atoms that constitute a particular chemical compound or molecule, using chemical element symbols, numbers, and sometimes also other symbols, such as parentheses, dashes, brackets, commas and plus (+) and minus (−) signs. These are limited to a single typographic line of symbols, which may include subscripts and superscripts. A chemical formula is not a chemical name since it does not contain any words. Although a chemical formula may imply certain simple chemical structures, it is not the same as a full chemical structural formula. Chemical formulae can fully specify the structure of only the simplest of molecules and chemical substances, and are generally more limited in power than chemical names and structural formulae.

The simplest types of chemical formulae are called empirical formulae, which use letters and numbers indicating the numerical proportions of atoms of each type. Molecular formulae indicate the simple numbers of each type of atom in a molecule, with no information on structure. For example, the empirical formula for glucose is CH2O (twice as many hydrogen atoms as carbon and oxygen), while its molecular formula is C6H12O6 (12 hydrogen atoms, six carbon and oxygen atoms).

Sometimes a chemical formula is complicated by being written as a condensed formula (or condensed molecular formula, occasionally called a "semi-structural formula"), which conveys additional information about the particular ways in which the atoms are chemically bonded together, either in covalent bonds, ionic bonds, or various combinations of these types. This is possible if the relevant bonding is easy to show in one dimension. An example is the condensed molecular/chemical formula for ethanol, which is CH3−CH2−OH or CH3CH2OH. However, even a condensed chemical formula is necessarily limited in its ability to show complex bonding relationships between atoms, especially atoms that have bonds to four or more different substituents.

Since a chemical formula must be expressed as a single line of chemical element symbols, it often cannot be as informative as a true structural formula, which is a graphical representation of the spatial relationship between atoms in chemical compounds (see for example the figure for butane structural and chemical formulae, at right). For reasons of structural complexity, a single condensed chemical formula (or semi-structural formula) may correspond to different molecules, known as isomers. For example, glucose shares its molecular formula C6H12O6 with a number of other sugars, including fructose, galactose and mannose. Linear equivalent chemical names exist that can and do specify uniquely any complex structural formula (see chemical nomenclature), but such names must use many terms (words), rather than the simple element symbols, numbers, and simple typographical symbols that define a chemical formula.

Chemical formulae may be used in chemical equations to describe chemical reactions and other chemical transformations, such as the dissolving of ionic compounds into solution. While, as noted, chemical formulae do not have the full power of structural formulae to show chemical relationships between atoms, they are sufficient to keep track of numbers of atoms and numbers of electrical charges in chemical reactions, thus balancing chemical equations so that these equations can be used in chemical problems involving conservation of atoms, and conservation of electric charge.

Details

In science, a formula is a concise way of expressing information symbolically, as in a mathematical formula or a chemical formula. The informal use of the term formula in science refers to the general construct of a relationship between given quantities.

The plural of formula can be either formulas (from the most common English plural noun form) or, under the influence of scientific Latin, formulae (from the original Latin).

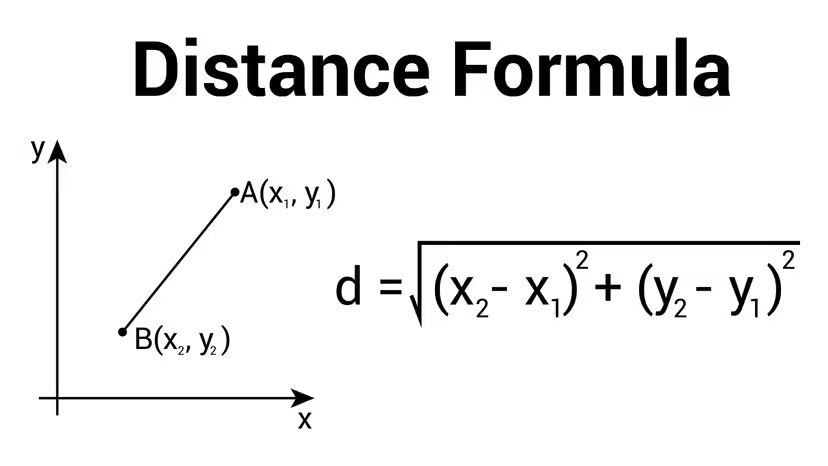

In mathematics

In mathematics, a formula generally refers to an equation or inequality relating one mathematical expression to another, with the most important ones being mathematical theorems. For example, determining the volume of a sphere requires a significant amount of integral calculus or its geometrical analogue, the method of exhaustion. However, having done this once in terms of some parameter (the radius for example), mathematicians have produced a formula to describe the volume of a sphere in terms of its radius:

.Having obtained this result, the volume of any sphere can be computed as long as its radius is known. Here, notice that the volume V and the radius r are expressed as single letters instead of words or phrases. This convention, while less important in a relatively simple formula, means that mathematicians can more quickly manipulate formulas which are larger and more complex. Mathematical formulas are often algebraic, analytical or in closed form.

In a general context, formulas often represent mathematical models of real world phenomena, and as such can be used to provide solutions (or approximate solutions) to real world problems, with some being more general than others. For example, the formula

is an expression of Newton's second law, and is applicable to a wide range of physical situations. Other formulas, such as the use of the equation of a sine curve to model the movement of the tides in a bay, may be created to solve a particular problem. In all cases, however, formulas form the basis for calculations.

Expressions are distinct from formulas in the sense that they don't usually contain relations like equality (=) or inequality (<). Expressions denote a mathematical object, where as formulas denote a statement about mathematical objects. This is analogous to natural language, where a noun phrase refers to an object, and a whole sentence refers to a fact. For example,

is a formula.

However, in some areas mathematics, and in particular in computer algebra, formulas are viewed as expressions that can be evaluated to true or false, depending on the values that are given to the variables occurring in the expressions. For example

In mathematical logic

In mathematical logic, a formula (often referred to as a well-formed formula) is an entity constructed using the symbols and formation rules of a given logical language.[8] For example, in first-order logic,

is a formula, provided that f is a unary function symbol, P a unary predicate symbol, and Q a ternary predicate symbol.

Chemical formulas

In modern chemistry, a chemical formula is a way of expressing information about the proportions of atoms that constitute a particular chemical compound, using a single line of chemical element symbols, numbers, and sometimes other symbols, such as parentheses, brackets, and plus (+) and minus (−) signs. For example, H2O is the chemical formula for water, specifying that each molecule consists of two hydrogen (H) atoms and one oxygen (O) atom. Similarly, O−3 denotes an ozone molecule consisting of three oxygen atoms and a net negative charge.

The structural formula for butane. There are three common non-pictorial types of chemical formulas for this molecule:

* the empirical formula C2H5

* the molecular formula C4H10 and

* the condensed formula (or semi-structural formula) CH3CH2CH2CH3.

A chemical formula identifies each constituent element by its chemical symbol, and indicates the proportionate number of atoms of each element.

In empirical formulas, these proportions begin with a key element and then assign numbers of atoms of the other elements in the compound—as ratios to the key element. For molecular compounds, these ratio numbers can always be expressed as whole numbers. For example, the empirical formula of ethanol may be written C2H6O, because the molecules of ethanol all contain two carbon atoms, six hydrogen atoms, and one oxygen atom. Some types of ionic compounds, however, cannot be written as empirical formulas which contains only the whole numbers. An example is boron carbide, whose formula of CBn is a variable non-whole number ratio, with n ranging from over 4 to more than 6.5.

When the chemical compound of the formula consists of simple molecules, chemical formulas often employ ways to suggest the structure of the molecule. There are several types of these formulas, including molecular formulas and condensed formulas. A molecular formula enumerates the number of atoms to reflect those in the molecule, so that the molecular formula for glucose is C6H12O6 rather than the glucose empirical formula, which is CH2O. Except for the very simple substances, molecular chemical formulas generally lack needed structural information, and might even be ambiguous in occasions.

A structural formula is a drawing that shows the location of each atom, and which atoms it binds to.

In computing

In computing, a formula typically describes a calculation, such as addition, to be performed on one or more variables. A formula is often implicitly provided in the form of a computer instruction such as.

Degrees Celsius = (5/9)*(Degrees Fahrenheit - 32)

In computer spreadsheet software, a formula indicating how to compute the value of a cell, say A3, could be written as

=A1+A2

where A1 and A2 refer to other cells (column A, row 1 or 2) within the spreadsheet. This is a shortcut for the "paper" form A3 = A1+A2, where A3 is, by convention, omitted because the result is always stored in the cell itself, making the stating of the name redundant.

Units

Formulas used in science almost always require a choice of units. Formulas are used to express relationships between various quantities, such as temperature, mass, or charge in physics; supply, profit, or demand in economics; or a wide range of other quantities in other disciplines.

An example of a formula used in science is Boltzmann's entropy formula. In statistical thermodynamics, it is a probability equation relating the entropy S of an ideal gas to the quantity W, which is the number of microstates corresponding to a given macrostate:

where k is the Boltzmann constant, equal to , and W is the number of microstates consistent with the given macrostate.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Online

#2427 2025-01-19 00:05:18

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,387

Re: Miscellany

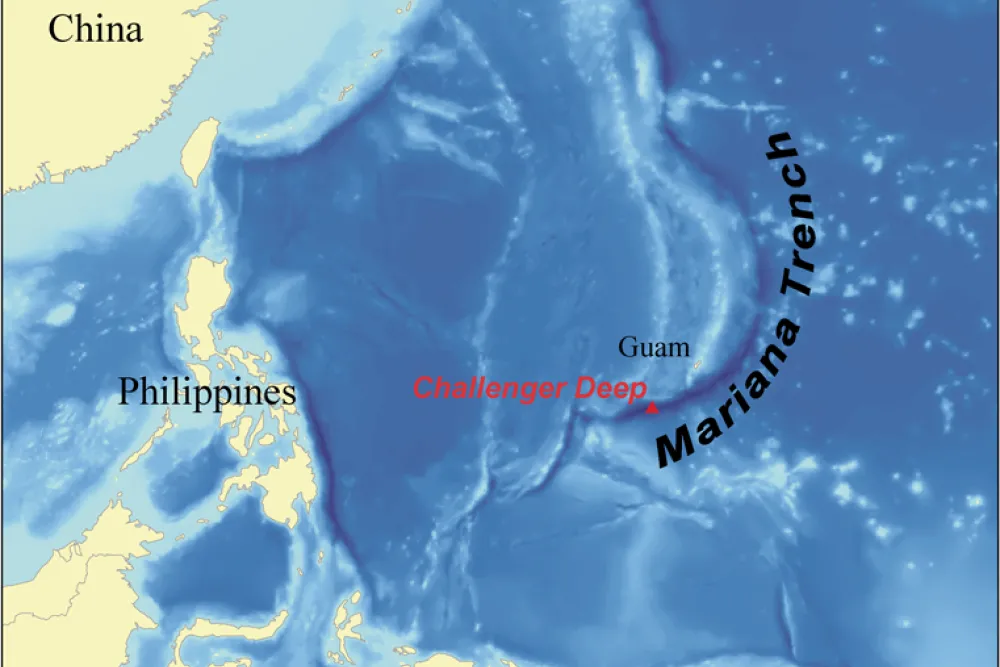

2327) Continent

Gist

A continent is one of Earth's seven main divisions of land. The continents are, from largest to smallest: Asia, Africa, North America, South America, Antarctica, Europe, and Australia. When geographers identify a continent, they usually include all the islands associated with it.

A continent is a large continuous mass of land conventionally regarded as a collective region. There are seven continents: Asia, Africa, North America, South America, Antarctica, Europe, and Australia (listed from largest to smallest in size). Sometimes Europe and Asia are considered one continent called Eurasia.

Summary

A Continent is one of the larger continuous masses of land, namely, Asia, Africa, North America, South America, Antarctica, Europe, and Australia, listed in order of size. (Europe and Asia are sometimes considered a single continent, Eurasia.)

There is great variation in the sizes of continents; Asia is more than five times as large as Australia. The largest island in the world, Greenland, is only about one-fourth the size of Australia. The continents differ sharply in their degree of compactness. Africa has the most regular coastline and, consequently, the lowest ratio of coastline to total area. Europe is the most irregular and indented and has by far the highest ratio of coastline to total area.

The continents are not distributed evenly over the surface of the globe. If a hemisphere map centred in northwestern Europe is drawn, most of the world’s land area can be seen to lie within that hemisphere. More than two-thirds of the Earth’s land surface lies north of the Equator, and all the continents except Antarctica are wedge shaped, wider in the north than they are in the south.

The distribution of the continental platforms and ocean basins on the surface of the globe and the distribution of the major landform features have long been among the most intriguing problems for scientific investigation and theorizing. Among the many hypotheses that have been offered as explanation are: (1) the tetrahedral (four-faced) theory, in which a cooling earth assumes the shape of a tetrahedron by spherical collapse; (2) the accretion theory, in which younger rocks attached to older shield areas became buckled to form the landforms; (3) the continental-drift theory, in which an ancient floating continent drifted apart; and (4) the convection-current theory, in which convection currents in the Earth’s interior dragged the crust to cause folding and mountain making.

Geological and seismological evidence accumulated in the 20th century indicates that the continental platforms do “float” on a crust of heavier material that forms a layer completely enveloping the Earth. Each continent has one of the so-called shield areas that formed 2 billion to 4 billion years ago and is the core of the continent to which the remainder (most of the continent) has been added. Even the rocks of the extremely old shield areas are older in the centre and younger toward the margins, indicating that this process of accumulation started early. In North America the whole northeast quarter of the continent, called the Canadian, or Laurentian, Shield, is characterized by the ancient rocks of what might be called the original continent. In Europe the shield area underlies the eastern Scandinavian peninsula and Finland. The Guiana Highlands of South America are the core of that continent. Much of eastern Siberia is underlain by the ancient rocks, as are western Australia and southern Africa.

Details

A continent is any of several large geographical regions. Continents are generally identified by convention rather than any strict criteria. A continent could be a single landmass or a part of a very large landmass, as in the case of Asia or Europe. Due to this, the number of continents varies; up to seven or as few as four geographical regions are commonly regarded as continents. Most English-speaking countries recognize seven regions as continents. In order from largest to smallest in area, these seven regions are Asia, Africa, North America, South America, Antarctica, Europe, and Australia. Different variations with fewer continents merge some of these regions; examples of this are merging Asia and Europe into Eurasia, North America and South America into America, and Africa, Asia, and Europe into Afro-Eurasia.

Oceanic islands are occasionally grouped with a nearby continent to divide all the world's land into geographical regions. Under this scheme, most of the island countries and territories in the Pacific Ocean are grouped together with the continent of Australia to form the geographical region of Oceania.

In geology, a continent is defined as "one of Earth's major landmasses, including both dry land and continental shelves". The geological continents correspond to seven large areas of continental crust that are found on the tectonic plates, but exclude small continental fragments such as Madagascar that are generally referred to as microcontinents. Continental crust is only known to exist on Earth.

The idea of continental drift gained recognition in the 20th century. It postulates that the current continents formed from the breaking up of a supercontinent (Pangaea) that formed hundreds of millions of years ago.

Etymology

From the 16th century the English noun continent was derived from the term continent land, meaning continuous or connected land[5] and translated from the Latin terra continens. The noun was used to mean "a connected or continuous tract of land" or mainland. It was not applied only to very large areas of land—in the 17th century, references were made to the continents (or mainlands) of the Isle of Man, Ireland and Wales and in 1745 to Sumatra. The word continent was used in translating Greek and Latin writings about the three "parts" of the world, although in the original languages no word of exactly the same meaning as continent was used.

While continent was used on the one hand for relatively small areas of continuous land, on the other hand geographers again raised Herodotus's query about why a single large landmass should be divided into separate continents. In the mid-17th century, Peter Heylin wrote in his Cosmographie that "A Continent is a great quantity of Land, not separated by any Sea from the rest of the World, as the whole Continent of Europe, Asia, Africa." In 1727, Ephraim Chambers wrote in his Cyclopædia, "The world is ordinarily divided into two grand continents: the Old and the New." And in his 1752 atlas, Emanuel Bowen defined a continent as "a large space of dry land comprehending many countries all joined together, without any separation by water. Thus Europe, Asia, and Africa is one great continent, as America is another."[8] However, the old idea of Europe, Asia and Africa as "parts" of the world ultimately persisted with these being regarded as separate continents.

Definitions and application

By convention, continents "are understood to be large, continuous, discrete masses of land, ideally separated by expanses of water". By this definition, all continents have to be an island of some metric. In modern schemes with five or more recognized continents, at least one pair of continents is joined by land in some fashion. The criterion "large" leads to arbitrary classification: Greenland, with a surface area of 2,166,086 square kilometres (836,330 sq mi), is only considered the world's largest island, while Australia, at 7,617,930 square kilometres (2,941,300 sq mi), is deemed the smallest continent.

Earth's major landmasses all have coasts on a single, continuous World Ocean, which is divided into several principal oceanic components by the continents and various geographic criteria.

The geological definition of a continent has four criteria: high elevation relative to the ocean floor; a wide range of igneous, metamorphic and sedimentary rocks rich in silica; a crust thicker than the surrounding oceanic crust; and well-defined limits around a large enough area.

Extent

The most restricted meaning of continent is that of a continuous area of land or mainland, with the coastline and any land boundaries forming the edge of the continent. In this sense, the term continental Europe (sometimes referred to in Britain as "the Continent") is used to refer to mainland Europe, excluding islands such as Great Britain, Iceland, Ireland, and Malta, while the term continent of Australia may refer to the mainland of Australia, excluding New Guinea, Tasmania, and other nearby islands. Similarly, the continental United States refers to "the 49 States (including Alaska but excluding Hawaii) located on the continent of North America, and the District of Columbia."

From the perspective of geology or physical geography, continent may be extended beyond the confines of continuous dry land to include the shallow, submerged adjacent area (the continental shelf) and the islands on the shelf (continental islands), as they are structurally part of the continent.

From this perspective, the edge of the continental shelf is the true edge of the continent, as shorelines vary with changes in sea level. In this sense the islands of Great Britain and Ireland are part of Europe, while Australia and the island of New Guinea together form a continent. Taken to its limit, this view could support the view that there are only three continents: Antarctica, Australia-New Guinea, and a single mega-continent which joins Afro-Eurasia and America via the contiguous continental shelf in and around the Bering Sea. The vast size of the latter compared to the first two might even lead some to say it is the only continent, the others being more comparable to Greenland or New Zealand.

As a cultural construct, the concept of a continent may go beyond the continental shelf to include oceanic islands and continental fragments. In this way, Iceland is considered a part of Europe, and Madagascar a part of Africa. Extrapolating the concept to its extreme, some geographers group the Australian continental landmass with other islands in the Pacific Ocean into Oceania, which is usually considered a region rather than a continent. This divides the entire land surface of Earth into continents, regions, or quasi-continents.

Separation

The criterion that each continent is a discrete landmass is commonly relaxed due to historical conventions and practical use. Of the seven most globally recognized continents, only Antarctica and Australia are completely separated from other continents by the ocean. Several continents are defined not as absolutely distinct bodies but as "more or less discrete masses of land". Africa and Asia are joined by the Isthmus of Suez, and North America and South America by the Isthmus of Panama. In both cases, there is no complete separation of these landmasses by water (disregarding the Suez Canal and the Panama Canal, which are both narrow and shallow, as well as human-made). Both of these isthmuses are very narrow compared to the bulk of the landmasses they unite.

North America and South America are treated as separate continents in the seven-continent model. However, they may also be viewed as a single continent known as America. This viewpoint was common in the United States until World War II, and remains prevalent in some Asian six-continent models. The single American continent model remains a common view in European countries like France, Greece, Hungary, Italy, Malta, Portugal, Spain, Latin American countries and some Asian countries.

The criterion of a discrete landmass is completely disregarded if the continuous landmass of Eurasia is classified as two separate continents (Asia and Europe). Physiographically, Europe and the Indian subcontinent are large peninsulas of the Eurasian landmass. However, Europe is considered a continent with its comparatively large land area of 10,180,000 square kilometres (3,930,000 sq mi), while the Indian subcontinent, with less than half that area, is considered a subcontinent. The alternative view—in geology and geography—that Eurasia is a single continent results in a six-continent view of the world. Some view the separation of Eurasia into Asia and Europe as a residue of Eurocentrism: "In physical, cultural and historical diversity, China and India are comparable to the entire European landmass, not to a single European country. [...]." However, for historical and cultural reasons, the view of Europe as a separate continent continues in almost all categorizations.

If continents are defined strictly as discrete landmasses, embracing all the contiguous land of a body, then Africa, Asia, and Europe form a single continent which may be referred to as Afro-Eurasia. Combined with the consolidation of the Americas, this would produce a four-continent model consisting of Afro-Eurasia, America, Antarctica, and Australia.

When sea levels were lower during the Pleistocene ice ages, greater areas of the continental shelf were exposed as dry land, forming land bridges between Tasmania and the Australian mainland. At those times, Australia and New Guinea were a single, continuous continent known as Sahul. Likewise, Afro-Eurasia and the Americas were joined by the Bering Land Bridge. Other islands, such as Great Britain, were joined to the mainlands of their continents. At that time, there were just three discrete landmasses in the world: Africa-Eurasia-America, Antarctica, and Australia-New Guinea (Sahul).

Number

There are several ways of distinguishing the continents:

* The seven-continent model is taught in most English-speaking countries, including Australia, Canada, the United Kingdom, and the United States, and also in Bangladesh, China, India, Indonesia, Pakistan, the Philippines, Sri Lanka, Suriname, parts of Europe and Africa.

* The six-continent combined-Eurasia model is mostly used in Russia and some parts of Eastern Europe.

* The six-continent combined-America model is taught in Greece and many Romance-speaking countries—including Latin America.

* The Olympic flag's five rings represent the five inhabited continents of the combined-America model but excludes the uninhabited Antarctica.

In the English-speaking countries, geographers often use the term Oceania to denote a geographical region which includes most of the island countries and territories in the Pacific Ocean, as well as the continent of Australia.

Eighth continent

Zealandia (a submerged continent) has been called the eighth continent.

Area and population

The following table provides areas given by the Encyclopædia Britannica for each continent in accordance with the seven-continent model, including Australasia along with Melanesia, Micronesia, and Polynesia as parts of Oceania. It also provides populations of continents according to 2021 estimates by the United Nations Statistics Division based on the United Nations geoscheme, which includes all of Egypt (including the Isthmus of Suez and the Sinai Peninsula) as a part of Africa, all of Armenia, Azerbaijan, Cyprus, Georgia, Indonesia, Kazakhstan, and Turkey (including East Thrace) as parts of Asia, all of Russia (including Siberia) as a part of Europe, all of Panama and the United States (including Hawaii) as parts of North America, and all of Chile (including Easter Island) as a part of South America.

Geological continents

Geologists use four key attributes to define a continent:

* Elevation – The landmass, whether dry or submerged beneath the ocean, should be elevated above the surrounding ocean crust.

* Geology – The landmass should contain different types of rock: igneous, metamorphic, and sedimentary.

* Crustal structure – The landmass should consist of the continental crust, which is thicker and has a lower seismic velocity than the oceanic crust.

* Limits and area – The landmass should have clearly defined boundaries and an area of more than one million square kilometres.

With the addition of Zealandia in 2017, Earth currently has seven recognized geological continents:

* Africa

* Antarctica

* Australia

* Eurasia

* North America

* South America

* Zealandia

Due to a seeming lack of Precambrian cratonic rocks, Zealandia's status as a geological continent has been disputed by some geologists. However, a study conducted in 2021 found that part of the submerged continent is indeed Precambrian, twice as old as geologists had previously thought, which is further evidence that supports the idea of Zealandia being a geological continent.

All seven geological continents are spatially isolated by geologic features.

Additional Information

A continent is one of Earth’s seven main divisions of land. The continents are, from largest to smallest: Asia, Africa, North America, South America, Antarctica, Europe, and Australia.

When geographers identify a continent, they usually include all the islands associated with it. Japan, for instance, is part of the continent of Asia. Greenland and all the islands in the Caribbean Sea are usually considered part of North America.

Together, the continents add up to about 148 million square kilometers (57 million square miles) of land. Continents make up most—but not all—of Earth’s land surface. A very small portion of the total land area is made up of islands that are not considered physical parts of continents. The ocean covers almost three-fourths of Earth. The area of the ocean is more than double the area of all the continents combined. All continents border at least one ocean. Asia, the largest continent, has the longest series of coastlines.

Coastlines, however, do not indicate the actual boundaries of the continents. Continents are defined by their continental shelves. A continental shelf is a gently sloping area that extends outward from the beach far into the ocean. A continental shelf is part of the ocean, but also part of the continent.

To geographers, continents are also culturally distinct. The continents of Europe and Asia, for example, are actually part of a single, enormous piece of land called Eurasia. But linguistically and ethnically, the areas of Asia and Europe are distinct. Because of this, most geographers divide Eurasia into Europe and Asia. An imaginary line, running from the northern Ural Mountains in Russia south to the Caspian and Black Seas, separates Europe, to the west, from Asia, to the east.

Building the Continents

Earth formed 4.6 billion years ago from a great, swirling cloud of dust and gas. The continuous smashing of space debris and the pull of gravity made Earth's core heat up. As the heat increased, some of Earth’s rocky materials melted and rose to the surface, where they cooled and formed a crust. Heavier material sank toward Earth’s center. Eventually, Earth came to have three main layers: the core, the mantle, and the crust.

The crust and the top portion of the mantle form a rigid shell around Earth that is broken up into huge sections called tectonic plates. The heat from inside Earth causes the plates to slide around on the molten mantle. Today, tectonic plates continue to slowly slide around the surface, just as they have been doing for hundreds of millions of years. Geologists believe the interaction of the plates, a process called plate tectonics, contributed to the creation of continents.

Studies of rocks found in ancient areas of North America have revealed the oldest known pieces of the continents began to form nearly four billion years ago, soon after Earth itself formed. At that time, a primitive ocean covered Earth. Only a small fraction of the crust was made up of continental material. Scientists theorize that this material built up along the boundaries of tectonic plates during a process called subduction. During subduction, plates collide, and the edge of one plate slides beneath the edge of another.

When heavy oceanic crust subducted toward the mantle, it melted in the mantle’s intense heat. Once melted, the rock became lighter. Called magma, it rose through the overlying plate and burst out as lava. When the lava cooled, it hardened into igneous rock.

Gradually, the igneous rock built up into small volcanic islands above the surface of the ocean. Over time, these islands grew bigger, partly as the result of more lava flows and partly from the buildup of material scraped off descending plates. When plates carrying islands subducted, the islands themselves did not descend into the mantle. Their material fused with that of islands on the neighboring plate. This made even larger landmasses—the first continents.

The building of volcanic islands and continental material through plate tectonics is a process that continues today. Continental crust is much lighter than oceanic crust. In subduction zones, where tectonic plates interact with each other, oceanic crust always subducts beneath continental crust. Oceanic crust is constantly being recycled in the mantle. For this reason, continental crust is much, much older than oceanic crust.

Wandering Continents

If you could visit Earth as it was millions of years ago, it would look very different. The continents have not always been where they are today. About 480 million years ago, most continents were scattered chunks of land lying along or south of the Equator. Millions of years of continuous tectonic activity changed their positions, and by 240 million years ago, almost all of the world’s land was joined in a single, huge continent. Geologists call this supercontinent Pangaea, which means “all lands” in Greek.

By about 200 million years ago, the forces that helped form Pangaea caused the supercontinent to begin to break apart. The pieces of Pangaea that began to move apart were the beginnings of the continents that we know today.

A giant landmass that would become Europe, Asia, and North America separated from another mass that would split up into other continents and regions. In time, Antarctica and Oceania, still joined together, broke away and drifted south. The small piece of land that would become the peninsula of India broke away and for millions of years moved north as a large island. It eventually collided with Asia. Gradually, the different landmasses moved to their present locations.

The positions of the continents are always changing. North America and Europe are moving away from each other at the rate of about 2.5 centimeters (one inch) per year. If you could visit the planet in the future, you might find that part of the United States' state of California had separated from North America and become an island. Africa might have split in two along the Great Rift Valley. It is even possible that another supercontinent may form someday.

Continental Features

The surface of the continents has changed many times because of mountain building, weathering, erosion, and build-up of sediment. Continuous, slow movement of tectonic plates also changes surface features.

The rocks that form the continents have been shaped and reshaped many times. Great mountain ranges have risen and then have been worn away. Ocean waters have flooded huge areas and then gradually dried up. Massive ice sheets have come and gone, sculpting the landscape in the process.

Today, all continents have great mountain ranges, vast plains, extensive plateaus, and complex river systems. The landmasses’s average elevation above sea level is about 838 meters (2,750 feet).

Although each is unique, all the continents share two basic features: old, geologically stable regions, and younger, somewhat more active regions. In the younger regions, the process of mountain building has happened recently and often continues to happen.

The power for mountain building, or orogeny, comes from plate tectonics. One way mountains form is through the collision of two tectonic plates. The impact creates wrinkles in the crust, just as a rug wrinkles when you push against one end of it. Such a collision created Asia’s Himalaya several million years ago. The plate carrying India slowly and forcefully shoved the landmass of India into Asia, which was riding on another plate. The collision continues today, causing the Himalaya to grow taller every year.

Recently formed mountains, called coastal ranges, rise near the western coasts of North America and South America. Older, more stable mountain ranges are found in the interior of continents. The Appalachians of North America and the Urals, on the border between Europe and Asia, are older mountain ranges that are not geologically active.

Even older than these ancient, eroded mountain ranges are flatter, more stable areas of the continents called cratons. A craton is an area of ancient crust that formed during Earth’s early history. Every continent has a craton. Microcontinents, like New Zealand, lack cratons.

Cratons have two forms: shields and platforms. Shields are bare rocks that may be the roots or cores of ancient mountain ranges that have completely eroded away. Platforms are cratons with sediment and sedimentary rock lying on top.

The Canadian Shield makes up about a quarter of North America. For hundreds of thousands of years, sheets of ice up to 3.2 kilometers (two miles) thick coated the Canadian Shield. The moving ice wore away material on top of ancient rock layers, exposing some of the oldest formations on Earth. When you stand on the oldest part of the Canadian Shield, you stand directly on rocks that formed more than 3.5 billion years ago.

North America

North America, the third-largest continent, extends from the tiny Aleutian Islands in the northwest to the Isthmus of Panama in the south. The continent includes the enormous island of Greenland in the northeast. In the far north, the continent stretches halfway around the world, from Greenland to the Aleutians. But at Panama’s narrowest part, the continent is just 50 kilometers (31 miles) across.

Young mountains—including the Rockies, North America’s largest chain—rise in the West. Some of Earth’s youngest mountains are found in the Cascade Range of the U.S. states of Washington, Oregon, and California. Some peaks there began to form only about one million years ago—a wink of an eye in Earth’s long history. North America’s older mountain ranges rise near the East Coast of the United States and Canada.

In between the mountain systems lie wide plains that contain deep, rich soil. Much of the soil was formed from material deposited during the most recent glacial period. This Ice Age reached its peak about 18,000 years ago. As glaciers retreated, streams of melted ice dropped sediment on the land, building layers of fertile soil in the plains region. Grain grown in this region, called the “breadbasket of North America,” feeds a large part of the world.

North America contains a variety of natural wonders. Landforms and all types of vegetation can be found within its boundaries. North America has deep canyons, such as Copper Canyon in the Mexican state of Chihuahua. Yellowstone National Park, in the U.S. state of Wyoming, has some of the world’s most active geysers. Canada’s Bay of Fundy has the greatest variation of tide levels in the world. The Great Lakes form the planet’s largest area of freshwater. In California, giant sequoias, the world’s most massive trees, grow more than 76 meters (250 feet) tall and nearly 31 meters (100 feet) around.

Greenland, off the east coast of Canada, is the world’s largest island. Despite its name, Greenland is mostly covered with ice. Its ice is a remnant of the great ice sheets that once blanketed much of the North American continent. Greenland is the only place besides Antarctica that still has an ice sheet.

From the freezing Arctic to the tropical jungles of Central America, North America enjoys more climate variation than any other continent. Almost every type of ecosystem is represented somewhere on the continent, from coral reefs in the Caribbean to Greenland’s ice sheet to the Great Plains in the U.S. and Canada.

Today, North America is home to the citizens of Canada, the United States, Greenland (an autonomous terrirory of Denmark), Mexico, Belize, Costa Rica, El Salvador, Guatemala, Honduras, Nicaragua, Panama, and the island countries and territories that dot the Caribbean Sea and the western North Atlantic.

Most of North America sits on the North American Plate. Parts of the Canadian province of British Columbia and the U.S. states of Washington, Oregon, and California sit on the tiny Juan de Fuca Plate. Parts of California and the Mexican state of Baja California sit on the enormous Pacific Plate. Parts of Baja California and the Mexican states of Baja California Sur, Sonora, Sinaloa, and Jalisco sit on the Cocos Plate. The Caribbean Plate carries most of the small islands of the Caribbean Sea (south of the island of Cuba) as well as Central America from Honduras to Panama. The Hawaiian Islands, in the middle of the Pacific Ocean on the Pacific Plate, are usually considered part of North America.

South America

South America is connected to North America by the narrow Isthmus of Panama. These two continents weren’t always connected; they came together only three million years ago. South America is the fourth-largest continent and extends from the sunny beaches of the Caribbean Sea to the frigid waters near the Antarctic Circle.

South America’s southernmost islands, called Tierra del Fuego, are less than 1,120 kilometers (700 miles) from Antarctica. These islands even host some Antarctic birds, such as penguins, albatrosses, and terns. Early Spanish explorers visiting the islands for the first time saw small fires dotting the land. These fires, made by Indigenous people, seemed to float on the water, which is probably how the islands got their name—Tierra del Fuego means "Land of Fire."

The Andes, Earth’s longest terrestrial mountain range, stretch the entire length of South America. Many active volcanoes dot the range. These volcanic areas are fueled by heat generated as a large oceanic plate, called the Nazca Plate, grinds beneath the plate carrying South America.

The central-southern area of South America has pampas, or plains. These rich areas are ideal for agriculture. The growing of wheat is a major industry in the pampas. Grazing animals, such as cattle and sheep, are also raised in the pampas region.

In northern South America, the Amazon River and its tributaries flow through the world’s largest tropical rainforest. In volume, the Amazon is the largest river in the world. More water flows from it than from the next six largest rivers combined.

South America is also home to the world’s highest waterfall, Angel Falls, in the country of Venezuela. Water flows more than 979 meters (3,212 feet)—almost one mile. The falls are so high that most of the water evaporates into mist or is blown away by wind before it reaches the ground.

South American rainforests contain an enormous wealth of animal and plant life. More than 15,000 species of plants and animals are found only in the Amazon Basin. Many Amazonian plant species are sources of food and medicine for the rest of the world. Scientists are trying to find ways to preserve this precious and fragile environment as people move into the Amazon Basin and clear land for settlements and agriculture.

Twelve independent countries make up South America: Brazil, Colombia, Argentina, Peru, Venezuela, Chile, Ecuador, Bolivia, Paraguay, Uruguay, Guyana, and Suriname. The territories of French Guiana, which is claimed by France, and the Falkland Islands, which are adminstered by the United Kingdom but claimed by Argentina, are also part of South America.

Almost all of South America sits on top of the South American Plate.

Europe

Europe, the sixth-largest continent, contains just seven percent of the world’s land. In total area, the continent of Europe is only slightly larger than the country of Canada. However, the population of Europe is more than twice that of South America. Europe has 46 countries and many of the world’s major cities, including London, the United Kingdom; Paris, France; Berlin, Germany; Rome, Italy; Madrid, Spain; and Moscow, Russia.

Most European countries have access to the ocean. The continent is bordered by the Arctic Ocean in the north, the Atlantic Ocean in the west, the Caspian Sea in the southeast, and the Mediterranean and Black Seas in the south. The nearness of these bodies of water and the navigation of many of Europe’s rivers played a major role in the continent’s history. Early Europeans learned the river systems of the Volga, Danube, Don, Rhine, and Po, and could successfully travel the length and width of the small continent for trade, communication, or conquest.

Navigation and exploration outside of Europe was an important part of the development of the continent’s economic, social, linguistic, and political legacy. European explorers were responsible for colonizing land on every continent except Antarctica. This colonization process had a drastic impact on the economic and political development of those continents, as well as Europe. Europe's colonial period ended in the violent transfer of wealth and land from Indigenous peoples in the Americas, and later Africa, Oceania, and Asia.

In the east, the Ural Mountains separate Europe from Asia. The nations of Russia and Kazakhstan straddle both continents. Another range, the Kjølen Mountains, extends along the northern part of the border between Sweden and Norway. To the south, the Alps form an arc stretching from Albania to Austria, then across Switzerland and northern Italy into France. As the youngest and steepest of Europe’s mountains, the Alps geologically resemble the Rockies of North America, another young range.

A large area of gently rolling plains extends from northern France eastward to the Urals. A climate of warm summers, cold winters, and plentiful rain helps make much of this European farmland very productive.

The climate of Western Europe, especially around the Mediterranean Sea, makes it one of the world’s leading tourism destinations.

Almost all of Europe sits on the massive Eurasian Plate.

Africa

Africa, the second-largest continent, covers an area more than three times that of the United States. From north to south, Africa stretches about 8,000 kilometers (5,000 miles). It is connected to Asia by the Isthmus of Suez in Egypt.

The Sahara, which covers much of North Africa, is the world’s largest hot desert. The world’s longest river, the Nile, flows more than 6,560 kilometers (4,100 miles) from its most remote headwaters in Lake Victoria to the Mediterranean Sea in the north. A series of falls and rapids along the southern part of the river makes navigation difficult. The Nile has played an important role in the history of Africa. In ancient Egyptian civilization, it was a source of life for food, water, and transportation.

The top half of Africa is mostly dry, hot desert. The middle area has savannas, or flat, grassy plains. This region is home to wild animals such as lions, giraffes, elephants, hyenas, cheetahs, and wildebeests. The central and southern areas of Africa are dominated by rainforests. Many of these forests thrive around Africa’s other great rivers, the Zambezi, the Congo, and the Niger. These rivers also served as the homes to Great Zimbabwe, the Kingdom of Kongo, and the Ghana Empire, respectively. However, trees are being cut down in Africa’s rainforests for many of the same reasons deforestation is taking place in the rainforests of South America and Asia: development for businesses, homes, and agriculture.

Much of Africa is a high plateau surrounded by narrow strips of coastal lowlands. Hilly uplands and mountains rise in some areas of the interior. Glaciers on Mount Kilimanjaro in Tanzania sit just kilometers from the tropical jungles below. Even though Kilimanjaro is not far from the Equator, snow covers its summit all year long.

In eastern Africa, a giant depression called the Great Rift Valley runs from the Red Sea to the country of Mozambique. (The rift valley actually starts in southwestern Asia.) The Great Rift Valley is a site of major tectonic activity, where the continent of Africa is splitting into two. Geologists have already named the two parts of the African Plate. The Nubian Plate will carry most of the continent, to the west of the rift; the Somali Plate will carry the far eastern part of the continent, including the so-called “Horn of Africa.” The Horn of Africa is a peninsula that resembles the upturned horn of a rhinoceros. The countries of Eritrea, Ethiopia, Djibouti, and Somalia sit on the Horn of Africa and the Somali Plate.

Africa is home to 54 countries but only 16 percent of the world’s total population. The area of central-eastern Africa is important to scientists who study evolution and the earliest origins of humanity. This area is thought to be the place where hominids began to evolve.

The entire continent of Africa sits on the African Plate.

Asia

Asia, the largest continent, stretches from the eastern Mediterranean Sea to the western Pacific Ocean. There are more than 40 countries in Asia. Some are among the most-populated countries in the world, including China, India, and Indonesia. Sixty percent of Earth’s population lives in Asia. More than a third of the world’s people live in China and India alone.

The continent of Asia includes many islands, some of them are countries unto themselves. The Philippines, Indonesia, Japan, and Taiwan are major island nations in Asia.

Most of Asia’s people live in cities or fertile farming areas near river valleys, plains, and coasts. The plateaus in Central Asia are largely unsuitable for farming and are thinly populated.

Asia accounts for almost a third of the world’s land. The continent has a wide range of climate regions, from polar in the Siberian Arctic to tropical in equatorial Indonesia. Parts of Central Asia, including the Gobi Desert in China and Mongolia, are dry year-round. Southeast Asia, on the other hand, depends on the annual monsoons, which bring rain and make agriculture possible.

Monsoon rains and snowmelt feed Asian rivers such as the Ganges, the Yellow, the Mekong, the Indus, and the Yangtze. The rich valley between the Tigris and Euphrates Rivers in western Asia is called the “Fertile Crescent” for its place in the development of agriculture and human civilization.

Asia is the most mountainous of all the continents. More than 50 of the highest peaks in the world are in Asia. Mount Everest, which reaches more than 8,700 meters (29,000 feet) high in the Himalaya range, is the highest point on Earth. These mountains have become major destination spots for adventurous travelers.

Plate tectonics continuously push the mountains higher. As the landmass of India pushes northward into the landmass of Eurasia, parts of the Himalaya rise at a rate of about 2.5 centimeters (one inch) every five years.

Asia contains, not only, Earth’s highest elevation, but also its lowest place on land: the shores of the Dead Sea in the countries of Israel and Jordan. The land there lies more than 390 meters (1,300 feet) below sea level.

Although the Eurasian Plate carries most of Asia, it is not the only one supporting major parts of the large continent. The Arabian Peninsula, in the continent’s southwest, is carried by the Arabian Plate. The Indian Plate supports the Indian peninsula, sometimes called the Indian subcontinent. The Australian Plate carries some islands in Indonesia. The North American Plate carries eastern Siberia and the northern islands of Japan.

Australia

In addition to being the smallest continent, Australia is the flattest and the second-driest, after Antarctica. The region including the continent of Australia is sometimes called Oceania, to include the thousands of tiny islands of the Central Pacific and South Pacific, most notably Melanesia, Micronesia, and Polynesia (including the U.S. state of Hawai‘i). However, the continent of Australia itself includes only the nation of Australia, the eastern portion of the island of New Guinea (the nation of Papua New Guinea) and the island nation of New Zealand.

Australia covers just less than 8.5 million square kilometers (about 3.5 million square miles). Its population is about 31 million. It is the most sparsely populated continent, after Antarctica.

A plateau in the middle of mainland Australia makes up most of the continent’s total area. Rainfall is light on the plateau, and not many people have settled there. The Great Dividing Range, a long mountain range, rises near the east coast and extends from the northern part of the territory of Queensland through the territories of New South Wales and Victoria. Mainland Australia is known for the Outback, a desert area in the interior. This area is so dry, hot, and barren that few people live there.

In addition to the hot plateaus and deserts in mainland Australia, the continent also features lush equatorial rainforests on the island of New Guinea, tropical beaches, and high mountain peaks and glaciers in New Zealand.

Most of Australia’s people live in cities along the southern and eastern coasts of the mainland. Major cities include Perth, Sydney, Brisbane, Melbourne, and Adelaide.

Biologists who study animals consider Australia a living laboratory. When the continent began to break away from Antarctica more than 60 million years ago, it carried a cargo of animals with it. Isolated from life on other continents, the animals developed into creatures unique to Australia, such as the koala (Phascolarctos cinereus), the platypus (Ornithorhynchus anatinus), and the Tasmanian devil (Sarcophilus harrisii).

The Great Barrier Reef, off mainland Australia’s northeast coast, is another living laboratory. The world’s largest coral reef ecosystem, it is home to thousands of species of fish, sponges, marine mammals, corals, and crustaceans. The reef itself is 1,920 kilometers (1,200 miles) of living coral communities. By some estimates, it is the world’s largest living organism.

Most of Australia sits on the Australian Plate. The southern part of the South Island of New Zealand sits on the Pacific Plate.

Antarctica

Antarctica is the windiest, driest, and iciest place on Earth—it is the world's largest desert. Antarctica is larger than Europe or Australia, but unlike those continents, it has no permanent human population. People who work there are scientific researchers and support staff, such as pilots and cooks.

The climate of Antarctica makes it impossible to support agriculture or a permanent civilization. Temperatures in Antarctica, much lower than Arctic temperatures, plunge lower than -73 degrees Celsius (-100 degrees Fahrenheit).

Scientific bases and laboratories have been established in Antarctica for studies in fields that include geology, oceanography, and meteorology. The freezing temperatures of Antarctica make it an excellent place to study the history of Earth’s atmosphere and climate. Ice cores from the massive Antarctic ice sheet have recorded changes in Earth’s temperature and atmospheric gases for thousands of years. Antarctica is also an ideal place for discovering meteorites, or stony objects that have impacted Earth from space. The dark meteorites, often made of metals like iron, stand out from the white landscape of most of the continent.

Antarctica is almost completely covered with ice, sometimes as thick as 3.2 kilometers (two miles). In winter, Antarctica’s surface area may double as pack ice builds up in the ocean around the continent.

Like all other continents, Antarctica has volcanic activity. The most active volcano is Mount Erebus, which is less than 1,392 kilometers (870 miles) from the South Pole. Its frequent eruptions are evidenced by hot, molten rock beneath the continent’s icy surface.

Antarctica does not have any countries. However, scientific groups from different countries inhabit the research stations. A multinational treaty negotiated in 1959 and reviewed in 1991 states that research in Antarctica can only be used for peaceful purposes. McMurdo Station, the largest community in Antarctica, is operated by the United States. Vostok Station, where the coldest temperature on Earth was recorded, is operated by Russia.

All of Antarctica sits on the Antarctic Plate.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Online

#2428 2025-01-19 18:48:06

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,387

Re: Miscellany

2328) Computer (Printing)

Gist

A printer is a device that accepts text and graphic output from a computer and transfers the information to paper, usually to standard-size, 8.5" by 11" sheets of paper. Printers vary in size, speed, sophistication and cost.

Summary

A printer is electronic device that accepts text files or images from a computer and transfers them to a medium such as paper or film. It can be connected directly to the computer or indirectly via a network. Printers are classified as impact printers (in which the print medium is physically struck) and non-impact printers. Most impact printers are dot-matrix printers, which have a number of pins on the print head that emerge to form a character. Non-impact printers fall into three main categories: laser printers use a laser beam to attract toner to an area of the paper; ink-jet printers spray a jet of liquid ink; and thermal printers transfer wax-based ink or use heated pins to directly imprint an image on specially treated paper. Important printer characteristics include resolution (in dots per inch), speed (in sheets of paper printed per minute), colour (full-colour or black-and-white), and cache memory (which affects the speed at which a file can be printed).

Details

What is a printer?

A printer is a device that accepts text and graphic output from a computer and transfers the information to paper, usually to standard-size, 8.5" by 11" sheets of paper. Printers vary in size, speed, sophistication and cost. In general, more expensive printers are used for more frequent printing or high-resolution color printing.

Personal computer printers can be distinguished as impact or non-impact printers. Early impact printers worked something like an automatic typewriter, with a key striking an inked impression on paper for each printed character. The dot matrix printer, an impact printer that strikes the paper a line at a time, was a popular low-cost option.

The best-known non-impact printers are the inkjet printer and the laser printer. The inkjet sprays ink from an ink cartridge at very close range to the paper as it rolls by, while the laser printer uses a laser beam reflected from a mirror to attract ink (called toner) to selected paper areas as a sheet rolls over a drum.

Different types of printers

There are many different printer manufacturers available today, including Canon, Epson, Hewlett-Packard, Xerox and Lexmark, among many others. There are also several types of printers to choose from, which we'll explore below.

* Inkjet printers recreate a digital image by spraying ink onto paper. These are the most common type of personal printer.

* Laser printers are used to create high-quality prints by passing a laser beam at a high speed over a negatively charged drum to define an image. Color laser printers are more often found in professional settings.

* 3D printers are a relatively new printer technology. 3D printing creates a physical object from a digital file. It works by adding layer upon layer of material until the print job is complete and the object is whole.

* Thermal printers produce an image on paper by passing paper with a thermochromic coating over a print head comprised of electrically heated elements and produces an image in the area where the heated coating turns black. A dye-sublimation printer is a form of thermal printing technology that uses heat to transfer dye onto materials.

* All-in-one printers are multifunction devices that combine printing with other technologies such as a copier, scanner and/or fax machine.

* LED printers are similar to laser printers but use a light-emitting diode array in the print head instead of a laser.

* Photo printers are similar to inkjet printers but are designed specifically to print high-quality photos, which require a lot of ink and special paper to ensure the ink doesn't smear.

Older printer types

There are a few first-generation printer types that are outdated and rarely used today:

* Dot matrix printer: Dot matrix printing is an older impact printer technology for text documents that strikes the paper one line at a time. Dot matrix printers offer very basic print quality.

* Line printer: A line printer prints a single line of text at a time. While an older form of printing, line printers are still in use today.

Features to look for in a printer

The four printer qualities of most interest to users are:

* Color: Most modern printers offer color printing. However, they can also be set to print in black and white. Color printers are more expensive to operate since they use two ink cartridges -- one color and one black ink -- or toners that need to be replaced after a certain number of pages are printed. Printing ink cartridges or toner cartridges are comprised of black, cyan, magenta and yellow ink. The ink can be mixed together, or it may come in separate monochrome solid ink printer cartridges, depending on the type of printer.

* Resolution: Printer resolution -- the sharpness of text and images on paper -- is usually measured in dots per inch (dpi). Most inexpensive printers provide sufficient resolution for most purposes at 600 dpi.

* Speed: If a user does a lot of printing, printing speed is an important feature. Inexpensive printers print only about 3 to 6 sheets per minute. However, faster printing speeds are an option with a more sophisticated, expensive printer.

* Memory: Most printers come with a small amount of memory -- typically 2-16 megabytes- that can be expanded by the user. Having more than the minimum amount of memory is helpful and faster when printing out pages with large images.

Printer I/O interfaces

The most common I/O interface for printers had been the parallel Centronics interface with a 36-pin plug.

Nowadays, however, printers and computers are likely to use a serial interface, especially a USB or FireWire with smaller and less cumbersome plugs.

Printer languages

Printer languages are commands from the computer to the printer to tell the printer how to format the document being printed. These commands manage font size, graphics, compression of data sent to the printer, color, etc. The two most popular printer languages are PostScript and Printer Control Language.

Postscript

Postscript is a printer language that uses English phrases and programmatic constructions to describe the appearance of a printed page to the printer. Adobe developed the printer language in 1985, and introduced new features such as outline fonts and vector graphics which can be printed with a plotter.

Printers now come from the factory with (or can be loaded with) Postscript support. Postscript is not restricted to printers. It can be used with any device that creates an image using dots such as screen displays, slide recorders and image-setters.

Printer Control Language (PCL)

PCL (Printer Control Language) is an escape code language used to send commands to the printer for printing documents. Escape code language has its name because the escape key begins the command sequence followed by a series of code numbers. HP originally devised PCL for dot matrix and inkjet printers.

Since its introduction, PCL has become an industry standard. Other manufacturers who sell HP clones have copied it. Some of these clones are very good, but there are small differences in the way they print a page compared to real HP printers.

In 1984, the original HP LaserJet printer was introduced using PCL, which helped change the appearance of low-cost printer documents from poor to exceptional quality.

Fonts

A font is a set of characters of a specific style and size within an overall typeface design. Printers use resident fonts and soft fonts to print documents.

Resident fonts

Resident fonts are built into the hardware of a printer. They are also called internal fonts or built-in fonts.

All printers come with one or more resident fonts. Additional fonts can be added by inserting a font cartridge into the printer or installing soft fonts on the hard drive. Resident fonts cannot be erased, unlike soft fonts.

Soft fonts

Soft fonts are installed onto the hard drive or flash drive and then sent to the computer's memory when a document is printed that uses the particular soft font. Soft fonts can be downloaded from the internet or purchased in stores.

Additional Information

In computing, a printer is a peripheral machine which makes a durable representation of graphics or text, usually on paper. While most output is human-readable, bar code printers are an example of an expanded use for printers. Different types of printers include 3D printers, inkjet printers, laser printers, and thermal printers.

History

The first computer printer designed was a mechanically driven apparatus by Charles Babbage for his difference engine in the 19th century; however, his mechanical printer design was not built until 2000.

The first patented printing mechanism for applying a marking medium to a recording medium or more particularly an electrostatic inking apparatus and a method for electrostatically depositing ink on controlled areas of a receiving medium, was in 1962 by C. R. Winston, Teletype Corporation, using continuous inkjet printing. The ink was a red stamp-pad ink manufactured by Phillips Process Company of Rochester, NY under the name Clear Print. This patent (US3060429) led to the Teletype Inktronic Printer product delivered to customers in late 1966.

The first compact, lightweight digital printer was the EP-101, invented by Japanese company Epson and released in 1968, according to Epson.

The first commercial printers generally used mechanisms from electric typewriters and Teletype machines. The demand for higher speed led to the development of new systems specifically for computer use. In the 1980s there were daisy wheel systems similar to typewriters, line printers that produced similar output but at much higher speed, and dot-matrix systems that could mix text and graphics but produced relatively low-quality output. The plotter was used for those requiring high-quality line art like blueprints.

The introduction of the low-cost laser printer in 1984, with the first HP LaserJet, and the addition of PostScript in next year's Apple LaserWriter set off a revolution in printing known as desktop publishing. Laser printers using PostScript mixed text and graphics, like dot-matrix printers, but at quality levels formerly available only from commercial typesetting systems. By 1990, most simple printing tasks like fliers and brochures were now created on personal computers and then laser printed; expensive offset printing systems were being dumped as scrap. The HP Deskjet of 1988 offered the same advantages as a laser printer in terms of flexibility, but produced somewhat lower-quality output (depending on the paper) from much less-expensive mechanisms. Inkjet systems rapidly displaced dot-matrix and daisy-wheel printers from the market. By the 2000s, high-quality printers of this sort had fallen under the $100 price point and became commonplace.

The rapid improvement of internet email through the 1990s and into the 2000s has largely displaced the need for printing as a means of moving documents, and a wide variety of reliable storage systems means that a "physical backup" is of little benefit today.

Starting around 2010, 3D printing became an area of intense interest, allowing the creation of physical objects with the same sort of effort as an early laser printer required to produce a brochure. As of the 2020s, 3D printing has become a widespread hobby due to the abundance of cheap 3D printer kits, with the most common process being Fused deposition modeling.

Types:

Personal printer

Personal printers are mainly designed to support individual users, and may be connected to only a single computer. These printers are designed for low-volume, short-turnaround print jobs, requiring minimal setup time to produce a hard copy of a given document. They are generally slow devices ranging from 6 to around 25 pages per minute (ppm), and the cost per page is relatively high. However, this is offset by the on-demand convenience. Some printers can print documents stored on memory cards or from digital cameras and scanners.

Networked printer

Networked or shared printers are "designed for high-volume, high-speed printing". They are usually shared by many users on a network and can print at speeds of 45 to around 100 ppm. The Xerox 9700 could achieve 120 ppm. An ID Card printer is used for printing plastic ID cards. These can now be customised with important features such as holographic overlays, HoloKotes and watermarks. This is either a direct to card printer (the more feasible option) or a retransfer printer.

Virtual printer

A virtual printer is a piece of computer software whose user interface and API resembles that of a printer driver, but which is not connected with a physical computer printer. A virtual printer can be used to create a file which is an image of the data which would be printed, for archival purposes or as input to another program, for example to create a PDF or to transmit to another system or user.

Barcode printer

A barcode printer is a computer peripheral for printing barcode labels or tags that can be attached to, or printed directly on, physical objects. Barcode printers are commonly used to label cartons before shipment, or to label retail items with UPCs or EANs.

3D printer

A 3D printer is a device for making a three-dimensional object from a 3D model or other electronic data source through additive processes in which successive layers of material (including plastics, metals, food, cement, wood, and other materials) are laid down under computer control. It is called a printer by analogy with an inkjet printer which produces a two-dimensional document by a similar process of depositing a layer of ink on paper.

ID card printer

A card printer is an electronic desktop printer with single card feeders which print and personalize plastic cards. In this respect they differ from, for example, label printers which have a continuous supply feed. Card dimensions are usually 85.60 × 53.98 mm, standardized under ISO/IEC 7810 as ID-1. This format is also used in EC-cards, telephone cards, credit cards, driver's licenses and health insurance cards. This is commonly known as the bank card format. Card printers are controlled by corresponding printer drivers or by means of a specific programming language. Generally card printers are designed with laminating, striping, and punching functions, and use desktop or web-based software. The hardware features of a card printer differentiate a card printer from the more traditional printers, as ID cards are usually made of PVC plastic and require laminating and punching. Different card printers can accept different card thickness and dimensions.

The principle is the same for practically all card printers: the plastic card is passed through a thermal print head at the same time as a color ribbon. The color from the ribbon is transferred onto the card through the heat given out from the print head. The standard performance for card printing is 300 dpi (300 dots per inch, equivalent to 11.8 dots per mm). There are different printing processes, which vary in their detail:

Thermal transfer

Mainly used to personalize pre-printed plastic cards in monochrome. The color is "transferred" from the (monochrome) color ribbon ;Dye sublimation:This process uses four panels of color according to the CMYK color ribbon. The card to be printed passes under the print head several times each time with the corresponding ribbon panel. Each color in turn is diffused (sublimated) directly onto the card. Thus it is possible to produce a high depth of color (up to 16 million shades) on the card. Afterwards a transparent overlay (O) also known as a topcoat (T) is placed over the card to protect it from mechanical wear and tear and to render the printed image UV resistant.

Reverse image technology

The standard for high-security card applications that use contact and contactless smart chip cards. The technology prints images onto the underside of a special film that fuses to the surface of a card through heat and pressure. Since this process transfers dyes and resins directly onto a smooth, flexible film, the print-head never comes in contact with the card surface itself. As such, card surface interruptions such as smart chips, ridges caused by internal RFID antennae and debris do not affect print quality. Even printing over the edge is possible.

Thermal rewrite print process

In contrast to the majority of other card printers, in the thermal rewrite process the card is not personalized through the use of a color ribbon, but by activating a thermal sensitive foil within the card itself. These cards can be repeatedly personalized, erased and rewritten. The most frequent use of these are in chip-based student identity cards, whose validity changes every semester.

Common printing problems

Many printing problems are caused by physical defects in the card material itself, such as deformation or warping of the card that is fed into the machine in the first place. Printing irregularities can also result from chip or antenna embedding that alters the thickness of the plastic and interferes with the printer's effectiveness. Other issues are often caused by operator errors, such as users attempting to feed non-compatible cards into the card printer, while other printing defects may result from environmental abnormalities such as dirt or contaminants on the card or in the printer. Reverse transfer printers are less vulnerable to common printing problems than direct-to-card printers, since with these printers the card does not come into direct contact with the printhead.

Variations

Broadly speaking there are three main types of card printers, differing mainly by the method used to print onto the card. They are:

Near to Edge

This term designates the cheapest type of printing by card printers. These printers print up to 5 mm from the edge of the card stock.

Direct to Card

Also known as "Edge to Edge Printing". The print-head comes in direct contact with the card. This printing type is the most popular nowadays, mostly due to cost factor. The majority of identification card printers today are of this type.

Reverse Transfer

Also known as "High Definition Printing" or "Over the Edge Printing". The print-head prints to a transfer film backwards (hence the reverse) and then the printed film is rolled onto the card with intense heat (hence the transfer). The term "over the edge" is due to the fact that when the printer prints onto the film it has a "bleed", and when rolled onto the card the bleed extends to completely over the edge of the card, leaving no border.

Different ID Card Printers use different encoding techniques to facilitate disparate business environments and to support security initiatives. Known encoding techniques are:

* Contact Smart Card

The Contact Smart Cards use RFID technology and require direct contact to a conductive plate to register admission or transfer of information. The transmission of commands, data, and card status held between the two physical contact points.

* Contactless Smart Card

Contactless Smart Cards exhibit integrated circuit that can store and process data while communicating with the terminal via Radio Frequency. Unlike Contact Smart Card, contact less cards feature intelligent re-writable microchip that can be transcribed through radio waves.

* HiD Proximity

HID's proximity technology allows fast, accurate reading while offering card or key tag read ranges from 4" to 24" inches (10 cm to 60.96 cm), dependent on the type of proximity reader being used. Since these cards and key tags do not require physical contact with the reader, they are virtually maintenance and wear-free.

ISO Magnetic Stripe

A magnetic stripe card is a type of card capable of storing data by modifying the magnetism of tiny iron-based magnetic particles on a band of magnetic material on the card. The magnetic stripe, sometimes called swipe card or magstripe, is read by physical contact and swiping past a magnetic reading head.

Software

There are basically two categories of card printer software: desktop-based, and web-based (online). The biggest difference between the two is whether or not a customer has a printer on their network that is capable of printing identification cards. If a business already owns an ID card printer, then a desktop-based badge maker is probably suitable for their needs. Typically, large organizations who have high employee turnover will have their own printer. A desktop-based badge maker is also required if a company needs their IDs make instantly. An example of this is the private construction site that has restricted access. However, if a company does not already have a local (or network) printer that has the features they need, then the web-based option is a perhaps a more affordable solution. The web-based solution is good for small businesses that do not anticipate a lot of rapid growth, or organizations who either can not afford a card printer, or do not have the resources to learn how to set up and use one. Generally speaking, desktop-based solutions involve software, a database (or spreadsheet) and can be installed on a single computer or network.

Other options

Alongside the basic function of printing cards, card printers can also read and encode magnetic stripes as well as contact and contact free RFID chip cards (smart cards). Thus card printers enable the encoding of plastic cards both visually and logically. Plastic cards can also be laminated after printing. Plastic cards are laminated after printing to achieve a considerable increase in durability and a greater degree of counterfeit prevention. Some card printers come with an option to print both sides at the same time, which cuts down the time taken to print and less margin of error. In such printers one side of id card is printed and then the card is flipped in the flip station and other side is printed.

Applications