Math Is Fun Forum


#101 2016-03-05 00:01:18

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

79. Edward Jenner, (born May 17, 1749, Berkeley, Gloucestershire, England—died January 26, 1823, Berkeley), English surgeon and discoverer of vaccination for smallpox.

Jenner was born at a time when the patterns of British medical practice and education were undergoing gradual change. Slowly the division between the Oxford- or Cambridge-trained physicians and the apothecaries or surgeons—who were much less educated and who acquired their medical knowledge through apprenticeship rather than through academic work—was becoming less sharp, and hospital work was becoming much more important.

Jenner was a country youth, the son of a clergyman. Because Edward was only five when his father died, he was brought up by an older brother, who was also a clergyman. Edward acquired a love of nature that remained with him all his life. He attended grammar school and at the age of 13 was apprenticed to a nearby surgeon. In the following eight years Jenner acquired a sound knowledge of medical and surgical practice. On completing his apprenticeship at the age of 21, he went to London and became the house pupil of John Hunter, who was on the staff of St. George’s Hospital and was one of the most prominent surgeons in London. Even more important, however, he was an anatomist, biologist, and experimentalist of the first rank; not only did he collect biological specimens, but he also concerned himself with problems of physiology and function.

The firm friendship that grew between the two men lasted until Hunter’s death in 1793. From no one else could Jenner have received the stimuli that so confirmed his natural bent—a catholic interest in biological phenomena, disciplined powers of observation, sharpening of critical faculties, and a reliance on experimental investigation. From Hunter, Jenner received the characteristic advice, “Why think [i.e., speculate]—why not try the experiment?”

In addition to his training and experience in biology, Jenner made progress in clinical surgery. After studying in London from 1770 to 1773, he returned to country practice in Berkeley and enjoyed substantial success. He was capable, skillful, and popular. In addition to practicing medicine, he joined two medical groups for the promotion of medical knowledge and wrote occasional medical papers. He played the violin in a musical club, wrote light verse, and, as a naturalist, made many observations, particularly on the nesting habits of the cuckoo and on bird migration. He also collected specimens for Hunter; many of Hunter’s letters to Jenner have been preserved, but Jenner’s letters to Hunter have unfortunately been lost. After one disappointment in love in 1778, Jenner married in 1788.

Smallpox was widespread in the 18th century, and occasional outbreaks of special intensity resulted in a very high death rate. The disease, a leading cause of death at the time, respected no social class, and disfigurement was not uncommon in patients who recovered. The only means of combating smallpox was a primitive form of inoculation called variolation: intentionally infecting a healthy person with the “matter” taken from a patient sick with a mild attack of the disease. The practice, which originated in China and India, was based on two distinct concepts: first, that one attack of smallpox effectively protected against any subsequent attack and, second, that a person deliberately infected with a mild case of the disease would safely acquire such protection. It was, in present-day terminology, an “elective” infection, i.e., one given to a person in good health. Unfortunately, the transmitted disease did not always remain mild, and deaths sometimes occurred. Furthermore, the inoculated person could spread the disease to others and thus act as a focus of infection.

Jenner had been impressed by the fact that a person who had suffered an attack of cowpox (a relatively harmless disease that could be contracted from cattle) could not take the smallpox, i.e., could not become infected by either accidental or intentional exposure to smallpox. Pondering this phenomenon, Jenner concluded that cowpox not only protected against smallpox but could be transmitted from one person to another as a deliberate mechanism of protection.

The story of the great breakthrough is well known. In May 1796 Jenner found a young dairymaid, Sarah Nelmes, who had fresh cowpox lesions on her hand. On May 14, using matter from Sarah’s lesions, he inoculated an eight-year-old boy, James Phipps, who had never had smallpox. Phipps became slightly ill over the course of the next 9 days but was well on the 10th. On July 1 Jenner inoculated the boy again, this time with smallpox matter. No disease developed; protection was complete. In 1798 Jenner, having added further cases, published privately a slender book entitled 'An Inquiry into the Causes and Effects of the Variolae Vaccinae'.

The reaction to the publication was not immediately favourable. Jenner went to London seeking volunteers for vaccination but, in a stay of three months, was not successful. In London vaccination became popularized through the activities of others, particularly the surgeon Henry Cline, to whom Jenner had given some of the inoculant, and the doctors George Pearson and William Woodville. Difficulties arose, some of them quite unpleasant; Pearson tried to take credit away from Jenner, and Woodville, a physician in a smallpox hospital, contaminated the cowpox matter with smallpox virus. Vaccination rapidly proved its value, however, and Jenner became intensely active promoting it. The procedure spread rapidly to America and the rest of Europe and soon was carried around the world.

Complications were many. Vaccination seemed simple, but the vast number of persons who practiced it did not necessarily follow the procedure that Jenner had recommended, and deliberate or unconscious innovations often impaired the effectiveness. Pure cowpox vaccine was not always easy to obtain, nor was it easy to preserve or transmit. Furthermore, the biological factors that produce immunity were not yet understood; much information had to be gathered and a great many mistakes made before a fully effective procedure could be developed, even on an empirical basis.

Despite errors and occasional chicanery, the death rate from smallpox plunged. Jenner received worldwide recognition and many honours, but he made no attempt to enrich himself through his discovery and actually devoted so much time to the cause of vaccination that his private practice and personal affairs suffered severely. Parliament voted him a sum of £10,000 in 1802 and a further sum of £20,000 in 1806. Jenner not only received honours but also aroused opposition and found himself subjected to attacks and calumnies, despite which he continued his activities on behalf of vaccination. His wife, ill with tuberculosis, died in 1815, and Jenner retired from public life.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#102 2016-03-05 02:07:07

- mathaholic

- Member

- From: Earth

- Registered: 2012-11-29

- Posts: 3,251

Re: crème de la crème

I see.

Mathaholic | 10th most active poster | Maker of the 350,000th post | Person | rrr's classmate


#103 2016-03-05 17:26:53

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

Hi, mathaholic!

80. Hans Christian Ørsted (often rendered Oersted in English; 14 August 1777 – 9 March 1851) was a Danish physicist and chemist who discovered that electric currents create magnetic fields, an important aspect of electromagnetism. He is still known today for Oersted's law. His ideas shaped post-Kantian philosophy and influenced advances in science throughout the late 19th century.

In 1824, Ørsted founded Selskabet for Naturlærens Udbredelse (SNU), a society to disseminate knowledge of the natural sciences. He was also the founder of predecessor organizations which eventually became the Danish Meteorological Institute and the Danish Patent and Trademark Office. Ørsted was the first modern thinker to explicitly describe and name the thought experiment.

A leader of the so-called Danish Golden Age, Ørsted was a close friend of Hans Christian Andersen and the brother of politician and jurist Anders Sandøe Ørsted, who eventually served as Danish prime minister (1853–54).

The oersted (Oe), the cgs unit of magnetic H-field strength, is named after him.


#104 2016-03-05 23:50:20

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

81. Melvil Dewey, (born Dec. 10, 1851, Adams Center, N.Y., U.S.—died Dec. 26, 1931, Lake Placid, Fla.), American librarian who devised the Dewey Decimal Classification for library cataloging and, probably more than any other individual, was responsible for the development of library science in the United States.

Dewey graduated in 1874 from Amherst College and became acting librarian at that institution. In 1876 he published A Classification and Subject Index for Cataloguing and Arranging the Books and Pamphlets of a Library, in which he outlined what became known as the Dewey Decimal Classification. This system was gradually adopted by libraries throughout the English-speaking world. In 1877 Dewey moved to Boston, where, with R.R. Bowker and Frederick Leypoldt, he founded and edited the Library Journal. He was also one of the founders of the American Library Association. In 1883 he became librarian of Columbia College, New York City, and there set up the School of Library Economy, the first institution for training librarians in the United States. The school was moved to Albany, N.Y., as the State Library School under his direction.

From 1889 to 1906 he was director of the New York State Library. He also served as secretary of the State University of New York (1889–1900) and as state director of libraries (1904–06). He completely reorganized the New York state library, making it one of the most efficient in the United States, and established the system of traveling libraries and picture collections.


#105 2016-03-06 18:34:34

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

82. Sir Humphry Davy, Baronet, (born Dec. 17, 1778, Penzance, Cornwall, Eng.—died May 29, 1829, Geneva), English chemist who discovered several chemical elements (including sodium and potassium) and compounds, invented the miner’s safety lamp, and became one of the greatest exponents of the scientific method.

Early life.

Davy was the elder son of middle-class parents, who owned an estate in Ludgvan. He was educated at the grammar school in nearby Penzance and, in 1793, at Truro. In 1795, a year after the death of his father, Robert, he was apprenticed to a surgeon and apothecary, and he hoped eventually to qualify in medicine. An exuberant, affectionate, and popular lad, of quick wit and lively imagination, he was fond of composing verses, sketching, making fireworks, fishing, shooting, and collecting minerals. He loved to wander, one pocket filled with fishing tackle and the other with rock specimens; he never lost his intense love of nature and, particularly, of mountain and water scenery.

While still a youth, ingenuous and somewhat impetuous, Davy had plans for a volume of poems, but he began the serious study of science in 1797, and these visions “fled before the voice of truth.” He was befriended by Davies Giddy (later Gilbert; president of the Royal Society, 1827–30), who offered him the use of his library in Tredrea and took him to a chemistry laboratory that was well equipped for that day. There he formed strongly independent views on topics of the moment, such as the nature of heat, light, and electricity and the chemical and physical doctrines of A.-L. Lavoisier. In his small private laboratory, he prepared and inhaled nitrous oxide (laughing gas) to test a claim that it was the “principle of contagion,” that is, that it caused diseases. On Gilbert’s recommendation, he was appointed (1798) chemical superintendent of the Pneumatic Institution, founded at Clifton to inquire into the possible therapeutic uses of various gases. Davy attacked the problem with characteristic enthusiasm, evincing an outstanding talent for experimental inquiry. He investigated the composition of the oxides and acids of nitrogen, as well as ammonia, and persuaded his scientific and literary friends, including Samuel Taylor Coleridge, Robert Southey, and P.M. Roget, to report the effects of inhaling nitrous oxide. He nearly lost his own life inhaling water gas, a mixture of hydrogen and carbon monoxide sometimes used as fuel. The account of his work, published as Researches, Chemical and Philosophical (1800), immediately established his reputation, and he was invited to lecture at the newly founded Royal Institution of Great Britain in London, where he moved in 1801, with the promise of help from the British-American scientist Sir Benjamin Thompson (Count von Rumford), the British naturalist Sir Joseph Banks, and the English chemist and physicist Henry Cavendish in furthering his researches, for example on voltaic cells, early forms of electric batteries.

His carefully prepared and rehearsed lectures rapidly became important social functions and added greatly to the prestige of science and the institution. In 1802 he became professor of chemistry. His duties included a special study of tanning: he found catechu, the extract of a tropical plant, as effective as and cheaper than the usual oak extracts, and his published account was long used as a tanner’s guide. In 1803 he was admitted a fellow of the Royal Society and an honorary member of the Dublin Society and delivered the first of an annual series of lectures before the board of agriculture. This led to his Elements of Agricultural Chemistry (1813), the only systematic work available for many years. For his researches on voltaic cells, tanning, and mineral analysis, he received the Copley Medal in 1805. He was elected secretary of the Royal Society in 1807.

Major discoveries.

Davy concluded early on that the production of electricity in simple electrolytic cells resulted from chemical action and that chemical combination occurred between substances of opposite charge. He therefore reasoned that electrolysis, the interaction of electric currents with chemical compounds, offered the most likely means of decomposing all substances to their elements. These views were explained in 1806 in his lecture “On Some Chemical Agencies of Electricity,” for which, despite the fact that England and France were at war, he received the Napoleon Prize from the Institut de France (1807). This work led directly to the isolation of sodium and potassium from their compounds (1807) and of the alkaline-earth metals from theirs (1808). He also discovered boron (by heating borax with potassium), hydrogen telluride, and hydrogen phosphide (phosphine). He showed the correct relation of chlorine to hydrochloric acid and the untenability of the earlier name (oxymuriatic acid) for chlorine; this negated Lavoisier’s theory that all acids contained oxygen. He explained the bleaching action of chlorine (through its liberation of oxygen from water) and discovered two of its oxides (1811 and 1815). Because experiments designed to reveal oxygen in chlorine failed, he concluded that chlorine is a chemical element, although his views on its nature were disputed.

In 1810 and 1811 he lectured to large audiences at Dublin (on agricultural chemistry, the elements of chemical philosophy, and geology) and received £1,275 in fees, as well as the honorary degree of LL.D., from Trinity College. In 1812 he was knighted by the Prince Regent (April 8), delivered a farewell lecture to members of the Royal Institution (April 9), and married Jane Apreece, a wealthy widow well known in social and literary circles in England and Scotland (April 11). He also published the first part of the Elements of Chemical Philosophy, which contained much of his own work; his plan was too ambitious, however, and nothing further appeared. Its completion, according to the Swedish chemist J.J. Berzelius, would have “advanced the science of chemistry a full century.”

His last important act at the Royal Institution, of which he remained honorary professor, was to interview the young Michael Faraday, later to become one of England’s great scientists, who became laboratory assistant there in 1813 and accompanied the Davys on a European tour (1813–15). By permission of Napoleon, Davy travelled through France, meeting many prominent scientists, and was presented to the empress Marie Louise. With the aid of a small portable laboratory and of various institutions in France and Italy, he investigated the substance “X” (later called iodine), whose properties and similarity to chlorine he quickly discovered; further work on various compounds of iodine and chlorine was done before he reached Rome. He also analyzed many specimens of classical pigments and proved that diamond is a form of carbon.

Later years.

Shortly after his return, he studied, for the Society for Preventing Accidents in Coal Mines, the conditions under which mixtures of firedamp and air explode. This led to the invention of the miner’s safety lamp and to subsequent researches on flame, for which he received the Rumford medals (gold and silver) from the Royal Society and, from the northern mine owners, a service of plate (eventually sold to found the Davy Medal). After being created a baronet in 1818, he again went to Italy, inquiring into volcanic action and trying unsuccessfully to find a way of unrolling the papyri found at Herculaneum. In 1820 he became president of the Royal Society, a position he held until 1827. In 1823–25 he was associated with the politician and writer John Wilson Croker in founding the Athenaeum Club, of which he was an original trustee, and with the colonial governor Sir Thomas Stamford Raffles in founding the Zoological Society and in furthering the scheme for zoological gardens in Regent’s Park, London (opened in 1828). During this period, he examined magnetic phenomena caused by electricity and electrochemical methods for preventing saltwater corrosion of copper sheathing on ships by means of iron and zinc plates. Though the protective principles were made clear, considerable fouling occurred, and the method’s failure greatly vexed him. But he was, as he said, “burned out.” His Bakerian lecture for 1826, “On the Relation of Electrical and Chemical Changes,” contained his last known thoughts on electrochemistry and earned him the Royal Society’s Royal Medal.

Davy’s health was by then failing rapidly; in 1827 he departed for Europe and, in the summer, was forced to resign the presidency of the Royal Society, being succeeded by Davies Gilbert. Having to forgo business and field sports, Davy wrote Salmonia: or Days of Fly Fishing (1828), a book on fishing (after the manner of Izaak Walton) that contained engravings from his own drawings. After a last, short visit to England, he returned to Italy, settling at Rome in February 1829: “a ruin amongst ruins.” Though partly paralyzed by a stroke, he spent his last months writing a series of dialogues, published posthumously as Consolations in Travel, or the Last Days of a Philosopher (1830).


#106 2016-03-07 16:57:19

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

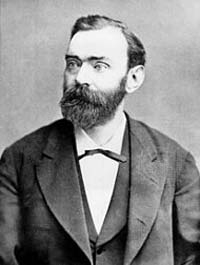

83. Alfred Bernhard Nobel, (born October 21, 1833, Stockholm, Sweden—died December 10, 1896, San Remo, Italy), Swedish chemist, engineer, and industrialist, who invented dynamite and other, more powerful explosives and who also founded the Nobel Prizes.

Alfred Bernhard Nobel was the fourth son of Immanuel and Caroline Nobel. Immanuel was an inventor and engineer who had married Caroline Andrietta Ahlsell in 1827. The couple had eight children, of whom only Alfred and three brothers reached adulthood. Alfred was prone to illness as a child, but he enjoyed a close relationship with his mother and displayed a lively intellectual curiosity from an early age. He was interested in explosives, and he learned the fundamentals of engineering from his father. Immanuel, meanwhile, had failed at various business ventures until moving in 1837 to St. Petersburg in Russia, where he prospered as a manufacturer of explosive mines and machine tools. The Nobel family left Stockholm in 1842 to join the father in St. Petersburg. Alfred’s newly prosperous parents were now able to send him to private tutors, and he proved to be an eager pupil. He was a competent chemist by age 16 and was fluent in English, French, German, and Russian, as well as Swedish.

Alfred Nobel left Russia in 1850 to spend a year in Paris studying chemistry and then spent four years in the United States working under the direction of John Ericsson, the builder of the ironclad warship Monitor. Upon his return to St. Petersburg, Nobel worked in his father’s factory, which made military equipment during the Crimean War. After the war ended in 1856, the company had difficulty switching to the peacetime production of steamboat machinery, and it went bankrupt in 1859.

Alfred and his parents returned to Sweden, while his brothers Robert and Ludvig stayed behind in Russia to salvage what was left of the family business. Alfred soon began experimenting with explosives in a small laboratory on his father’s estate. At the time, the only dependable explosive for use in mines was black powder, a form of gunpowder. A recently discovered liquid compound, nitroglycerin, was a much more powerful explosive, but it was so unstable that it could not be handled with any degree of safety. Nevertheless, Nobel in 1862 built a small factory to manufacture nitroglycerin, and at the same time he undertook research in the hope of finding a safe way to control the explosive’s detonation. In 1863 he invented a practical detonator consisting of a wooden plug inserted into a larger charge of nitroglycerin held in a metal container; the explosion of the plug’s small charge of black powder served to detonate the much more powerful charge of liquid nitroglycerin. This detonator marked the beginning of Nobel’s reputation as an inventor as well as the fortune he was to acquire as a maker of explosives. In 1865 Nobel invented an improved detonator called a blasting cap; it consisted of a small metal cap containing a charge of mercury fulminate that could be exploded by either shock or moderate heat. The invention of the blasting cap inaugurated the modern use of high explosives.

Nitroglycerin itself, however, remained difficult to transport and extremely dangerous to handle. It was so dangerous, in fact, that Nobel’s nitroglycerin factory blew up in 1864, killing his younger brother Emil and several other people. Undaunted by this tragic accident, Nobel built several factories to manufacture nitroglycerin for use in concert with his blasting caps. These factories were as safe as the knowledge of the time allowed, but accidental explosions still occasionally occurred. Nobel’s second important invention was dynamite, in 1867. By chance, he discovered that nitroglycerin was absorbed to dryness by kieselguhr, a porous siliceous earth, and the resulting mixture was much safer to use and easier to handle than nitroglycerin alone. Nobel named the new product dynamite (from Greek dynamis, “power”) and was granted patents for it in Great Britain (1867) and the United States (1868). Dynamite established Nobel’s fame worldwide and was soon put to use in blasting tunnels, cutting canals, and building railways and roads.

In the 1870s and ’80s Nobel built a network of factories throughout Europe to manufacture dynamite, and he formed a web of corporations to produce and market his explosives. He also continued to experiment in search of better ones, and in 1875 he invented a more powerful form of dynamite, blasting gelatin, which he patented the following year. Again by chance, he had discovered that mixing a solution of nitroglycerin with a fluffy substance known as nitrocellulose results in a tough, plastic material that has a high water resistance and greater blasting power than ordinary dynamites. In 1887 Nobel introduced ballistite, one of the first nitroglycerin smokeless powders and a precursor of cordite. Although Nobel held the patents to dynamite and his other explosives, he was in constant conflict with competitors who stole his processes, a fact that forced him into protracted patent litigation on several occasions.

Nobel’s brothers Ludvig and Robert, in the meantime, had developed newly discovered oilfields near Baku (now in Azerbaijan) along the Caspian Sea and had themselves become immensely wealthy. Alfred’s worldwide interests in explosives, along with his own holdings in his brothers’ companies in Russia, brought him a large fortune. In 1893 he became interested in Sweden’s arms industry, and the following year he bought an ironworks at Bofors, in Värmland, that became the nucleus of the well-known Bofors arms factory. Besides explosives, Nobel made many other inventions, such as artificial silk and leather, and altogether he registered more than 350 patents in various countries.

Nobel’s complex personality puzzled his contemporaries. Although his business interests required him to travel almost constantly, he remained a lonely recluse who was prone to fits of depression. He led a retired and simple life and was a man of ascetic habits, yet he could be a courteous dinner host, a good listener, and a man of incisive wit. He never married and apparently preferred the joys of inventing to those of romantic attachment. He had an abiding interest in literature and wrote plays, novels, and poems, almost all of which remained unpublished. He had amazing energy and found it difficult to relax after intense bouts of work. Among his contemporaries, he had the reputation of a liberal or even a socialist, but he actually distrusted democracy, opposed suffrage for women, and maintained an attitude of benign paternalism toward his many employees. Though Nobel was essentially a pacifist and hoped that the destructive powers of his inventions would help bring an end to war, his view of mankind and nations was pessimistic.

By 1895 Nobel had developed angina pectoris, and he died of a cerebral hemorrhage at his villa in San Remo, Italy, in 1896. At his death his worldwide business empire consisted of more than 90 factories manufacturing explosives and ammunition. The opening of his will, which he had drawn up in Paris on November 27, 1895, and had deposited in a bank in Stockholm, contained a great surprise for his family, friends, and the general public. He had always been generous in humanitarian and scientific philanthropies, and he left the bulk of his fortune in trust to establish what came to be the most highly regarded of international awards, the Nobel Prizes.

We can only speculate about the reasons for Nobel’s establishment of the prizes that bear his name. He was reticent about himself, and he confided in no one about his decision in the months preceding his death. The most plausible explanation is that a bizarre incident in 1888 triggered the train of reflection that culminated in his bequest for the Nobel Prizes. That year Alfred’s brother Ludvig died while staying in Cannes, France. The French newspapers reported Ludvig’s death but confused him with Alfred, and one paper sported the headline “Le marchand de la mort est mort” (“The merchant of death is dead”). Perhaps Alfred Nobel established the prizes to avoid precisely the sort of posthumous reputation suggested by this premature obituary. It is certain that the actual awards he instituted reflect his lifelong interest in the fields of physics, chemistry, physiology, and literature. There is also abundant evidence that his friendship with the prominent Austrian pacifist Bertha von Suttner inspired him to establish the prize for peace.

Nobel himself, however, remains a figure of paradoxes and contradictions: a brilliant, lonely man, part pessimist and part idealist, who invented the powerful explosives used in modern warfare but also established the world’s most prestigious prizes for intellectual services rendered to humanity.


#107 2016-03-08 17:06:27

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

84. Valentina Vladimirovna Tereshkova (born 6 March 1937) is a Russian former cosmonaut. She is the first woman to have flown in space, having been selected from more than four hundred applicants and five finalists to pilot Vostok 6 on 16 June 1963. In order to join the Cosmonaut Corps, Tereshkova was honorarily inducted into the Soviet Air Force and thus she also became the first civilian to fly in space.

Before her recruitment as a cosmonaut, Tereshkova was a textile-factory assembly worker and an amateur skydiver. After the dissolution of the first group of female cosmonauts in 1969, she became a prominent member of the Communist Party of the Soviet Union, holding various political offices. She remained politically active following the collapse of the Soviet Union and is still regarded as a hero in post-Soviet Russia.

In 2013, she offered to go on a one-way trip to Mars if the opportunity arose. At the opening ceremony of the 2014 Winter Olympics, she was a carrier of the Olympic flag.


#108 2016-03-09 16:18:47

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

85. Chester F. Carlson, (born Feb. 8, 1906, Seattle, Wash., U.S.—died Sept. 19, 1968, New York, N.Y.), American physicist who was the inventor of xerography, an electrostatic dry-copying process that found applications ranging from office copying to reproducing out-of-print books.

By age 14 Carlson was supporting his invalid parents, yet he managed to earn a college degree from the California Institute of Technology, Pasadena, in 1930. After a short time spent with the Bell Telephone Company, he obtained a position with the patent department of P.R. Mallory Company, a New York electronics firm.

Plagued by the difficulty of getting copies of patent drawings and specifications, Carlson began in 1934 to look for a quick, convenient way to copy line drawings and text. Since numerous large corporations were already working on photographic or chemical copying processes, he turned to electrostatics for a solution to the problem. Four years later he succeeded in making the first xerographic copy.

Carlson obtained the first of many patents for the xerographic process and tried unsuccessfully to interest someone in developing and marketing his invention. More than 20 companies turned him down. Finally, in 1944, he persuaded Battelle Memorial Institute, Columbus, Ohio, a nonprofit industrial research organization, to undertake developmental work. In 1947 a small firm in Rochester, N.Y., the Haloid Company (later the Xerox Corporation), obtained the commercial rights to xerography, and 11 years later Xerox introduced its first office copier. Carlson’s royalty rights and stock in Xerox Corporation made him a multimillionaire.

#109 2016-03-10 17:05:19

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

86. André-Marie Ampère, (born Jan. 20, 1775, Lyon, France – died June 10, 1836, Marseille), French physicist who founded and named the science of electrodynamics, now known as electromagnetism. His name endures in everyday life in the ampere, the unit for measuring electric current.

Early life

Ampère, who was born into a prosperous bourgeois family during the height of the French Enlightenment, personified the scientific culture of his day. His father, Jean-Jacques Ampère, was a successful merchant, and also an admirer of the philosophy of Jean-Jacques Rousseau, whose theories of education, as outlined in his treatise Émile, were the basis of Ampère’s education. Rousseau argued that young boys should avoid formal schooling and pursue instead an “education direct from nature.” Ampère’s father actualized this ideal by allowing his son to educate himself within the walls of his well-stocked library. French Enlightenment masterpieces such as Georges-Louis Leclerc, comte de Buffon’s Histoire naturelle, générale et particulière (begun in 1749) and Denis Diderot and Jean Le Rond d’Alembert’s Encyclopédie (volumes added between 1751 and 1772) thus became Ampère’s schoolmasters. In addition, he used his access to the latest mathematical books to begin teaching himself advanced mathematics at age 12. His mother was a devout woman, so Ampère was also initiated into the Catholic faith along with Enlightenment science. The French Revolution (1787–99) that erupted during his youth was also formative. Ampère’s father was called into public service by the new revolutionary government, becoming a justice of the peace in a small town near Lyon. Yet when the Jacobin faction seized control of the Revolutionary government in 1792, Jean-Jacques Ampère resisted the new political tides, and he was guillotined on Nov. 24, 1793, as part of the Jacobin purges of the period.

While the French Revolution brought these personal traumas, it also created new institutions of science that ultimately became central to André-Marie Ampère’s professional success. He took his first regular job in 1799 as a modestly paid mathematics teacher, which gave him the financial security to marry and father his first child, Jean-Jacques, the next year. (Jean-Jacques Ampère eventually achieved his own fame as a scholar of languages.) Ampère’s maturation corresponded with the transition to the Napoleonic regime in France, and the young father and teacher found new opportunities for success within the technocratic structures favoured by the new French emperor.

In 1802 Ampère was appointed a professor of physics and chemistry at the École Centrale in Bourg-en-Bresse. He used his time in Bourg to research mathematics, producing Considérations sur la théorie mathématique du jeu (1802; “Considerations on the Mathematical Theory of Games”), a treatise on mathematical probability that he sent to the Paris Academy of Sciences in 1803. After the death of his wife in July 1803, Ampère moved to Paris, where he assumed a tutoring post at the new École Polytechnique in 1804. Despite his lack of formal qualifications, Ampère was appointed a professor of mathematics at the school in 1809. In addition to holding positions at this school until 1828, in 1819 and 1820 Ampère offered courses in philosophy and astronomy, respectively, at the University of Paris, and in 1824 he was elected to the prestigious chair in experimental physics at the Collège de France. In 1814 Ampère was invited to join the class of mathematicians in the new Institut Impérial, the umbrella under which the reformed state Academy of Sciences would sit.

Ampère engaged in a diverse array of scientific inquiries during these years leading up to his election to the academy—writing papers and engaging in topics ranging from mathematics and philosophy to chemistry and astronomy. Such breadth was customary among the leading scientific intellectuals of the day.

Founding of electromagnetism

Had Ampère died before 1820, his name and work would likely have been forgotten. In that year, however, Ampère’s friend and eventual eulogist François Arago demonstrated before the members of the French Academy of Sciences the surprising discovery of Danish physicist Hans Christian Ørsted that a magnetic needle is deflected by an adjacent electric current. Ampère was well prepared to throw himself fully into this new line of research.

Ampère immediately set to work developing a mathematical and physical theory to understand the relationship between electricity and magnetism. Extending Ørsted’s experimental work, Ampère showed that two parallel wires carrying electric currents attract or repel each other, depending on whether the currents flow in the same or opposite directions, respectively. He also applied mathematics in generalizing physical laws from these experimental results. Most important was the principle that came to be called Ampère’s law, which states that the mutual action of two lengths of current-carrying wire is proportional to their lengths and to the intensities of their currents. Ampère also applied this same principle to magnetism, showing the harmony between his law and French physicist Charles Augustin de Coulomb’s law of magnetic action. Ampère’s devotion to, and skill with, experimental techniques anchored his science within the emerging fields of experimental physics.
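To make the proportionality concrete, here is a minimal Python sketch using the modern textbook result for two long, straight parallel wires, F/L = μ0·I1·I2/(2π·d). It is not Ampère's own 1820s force law between current elements; the function names, the signed-current convention, and the classical value μ0 = 4π × 10⁻⁷ N/A² are assumptions made for illustration. Multiplying by a length L gives the total force, so the force grows with wire length and with each current.

```python
import math

# Classical value of the vacuum permeability in N/A^2 (assumption: the exact
# pre-2019 SI value; the measured modern value differs only far down the digits).
MU_0 = 4 * math.pi * 1e-7


def force_per_length(i1: float, i2: float, d: float) -> float:
    """Force per metre (N/m) between two long parallel wires d metres apart.

    Modern textbook result F/L = mu0 * I1 * I2 / (2 * pi * d).
    Currents are signed: a positive result means attraction (same direction),
    a negative result means repulsion (opposite directions).
    """
    if d <= 0:
        raise ValueError("separation d must be positive")
    return MU_0 * i1 * i2 / (2 * math.pi * d)


def total_force(i1: float, i2: float, d: float, length: float) -> float:
    """Total force (N) on a stretch of wire of the given length in metres."""
    return force_per_length(i1, i2, d) * length


if __name__ == "__main__":
    print(force_per_length(1.0, 1.0, 1.0))   # same direction: about 2e-07, attraction
    print(force_per_length(1.0, -1.0, 1.0))  # opposite direction: about -2e-07, repulsion
    print(total_force(2.0, 1.0, 1.0, 3.0))   # double I1, triple length: about 1.2e-06
```

With 1 A in each wire and the wires 1 m apart, the sketch gives about 2 × 10⁻⁷ N per metre, the figure used in the pre-2019 SI definition of the ampere; reversing one current flips the sign, turning attraction into repulsion.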

Ampère also offered a physical understanding of the electromagnetic relationship, theorizing the existence of an “electrodynamic molecule” (the forerunner of the idea of the electron) that served as the constituent element of electricity and magnetism. On this basis, Ampère developed a physical account of electromagnetic phenomena that was both empirically demonstrable and mathematically predictive. In 1827 Ampère published his magnum opus, Mémoire sur la théorie mathématique des phénomènes électrodynamiques uniquement déduite de l’expérience (Memoir on the Mathematical Theory of Electrodynamic Phenomena, Uniquely Deduced from Experience), the work that coined the name of his new science, electrodynamics, and became known ever after as its founding treatise. In recognition of his contribution to the making of modern electrical science, an international convention signed in 1881 established the ampere as a standard unit of electrical measurement, along with the coulomb, volt, ohm, and watt, which are named, respectively, after Ampère’s contemporaries Coulomb, Alessandro Volta of Italy, Georg Ohm of Germany, and James Watt of Scotland.

The 1827 publication of Ampère’s synoptic Mémoire brought to a close his feverish work over the previous seven years on the new science of electrodynamics. The text also marked the end of his original scientific work. His health began to fail, and he died while performing a university inspection, decades before his new science was canonized as the foundation stone for the modern science of electromagnetism.

#110 2016-03-11 16:11:18

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

87. Wilhelm Conrad Röntgen, Röntgen also spelled Roentgen (born March 27, 1845, Lennep, Prussia [now Remscheid, Germany]—died February 10, 1923, Munich, Germany), physicist who was a recipient of the first Nobel Prize for Physics, in 1901, for his discovery of X-rays, which heralded the age of modern physics and revolutionized diagnostic medicine.

Röntgen studied at the Polytechnic in Zürich and then was professor of physics at the universities of Strasbourg (1876–79), Giessen (1879–88), Würzburg (1888–1900), and Munich (1900–20). His research also included work on elasticity, capillary action of fluids, specific heats of gases, conduction of heat in crystals, absorption of heat by gases, and piezoelectricity.

In 1895, while experimenting with electric current flow in a partially evacuated glass tube (cathode-ray tube), Röntgen observed that a nearby piece of barium platinocyanide gave off light when the tube was in operation. He theorized that when the cathode rays (electrons) struck the glass wall of the tube, some unknown radiation was formed that traveled across the room, struck the chemical, and caused the fluorescence. Further investigation revealed that paper, wood, and aluminum, among other materials, are transparent to this new form of radiation. He found that it affected photographic plates, and, since it did not noticeably exhibit any properties of light, such as reflection or refraction, he mistakenly thought the rays were unrelated to light. In view of its uncertain nature, he called the phenomenon X-radiation, though it also became known as Röntgen radiation. He took the first X-ray photographs, of the interiors of metal objects and of the bones in his wife’s hand.

#111 2016-03-12 17:01:27

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

88. Sir Joseph Lister, Baronet (born April 5, 1827, Upton, Essex, Eng.—died Feb. 10, 1912, Walmer, Kent), British surgeon and medical scientist who was the founder of antiseptic medicine and a pioneer in preventive medicine. While his method, based on the use of antiseptics, is no longer employed, his principle—that bacteria must never gain entry to an operation wound—remains the basis of surgery to this day. He was made a baronet in 1883 and raised to the peerage in 1897.

Education

Lister was the second son of Joseph Jackson Lister and his wife, Isabella Harris, members of the Society of Friends, or Quakers. J.J. Lister, a wine merchant and amateur physicist and microscopist, was elected a fellow of the Royal Society for his discovery that led to the modern achromatic (non-colour-distorting) microscope.

While both parents took an active part in Lister’s education, his father instructing him in natural history and the use of the microscope, Lister received his formal schooling in two Quaker institutions, which laid far more emphasis upon natural history and science than did other schools. He became interested in comparative anatomy, and, before his 16th birthday, he had decided upon a surgical career.

After taking an arts course at University College, London, he enrolled in the faculty of medical science in October 1848. A brilliant student, he was graduated a bachelor of medicine with honours in 1852; in the same year he became a fellow of the Royal College of Surgeons and house surgeon at University College Hospital. A visit to Edinburgh in the fall of 1853 led to Lister’s appointment as assistant to James Syme, the greatest surgical teacher of his day, and in October 1856 he was appointed surgeon to the Edinburgh Royal Infirmary. In April he had married Syme’s eldest daughter. Lister, a deeply religious man, joined the Scottish Episcopal Church. The marriage, although childless, was a happy one, his wife entering fully into Lister’s professional life.

When three years later the Regius Professorship of Surgery at Glasgow University fell vacant, Lister was elected from seven applicants. In August 1861 he was appointed surgeon to the Glasgow Royal Infirmary, where he was in charge of wards in the new surgical block. The managers hoped that hospital disease (now known as operative sepsis—infection of the blood by disease-producing microorganisms) would be greatly decreased in their new building. The hope proved vain, however. Lister reported that, in his Male Accident Ward, between 45 and 50 percent of his amputation cases died from sepsis between 1861 and 1865.

Work in antisepsis

In this ward Lister began his experiments with antisepsis. Much of his earlier published work had dealt with the mechanism of coagulation of the blood and role of the blood vessels in the first stages of inflammation. Both researches depended upon the microscope and were directly connected with the healing of wounds. Lister had already tried out methods to encourage clean healing and had formed theories to account for the prevalence of sepsis. Discarding the popular concept of miasma—direct infection by bad air—he postulated that sepsis might be caused by a pollen-like dust. There is no evidence that he believed this dust to be living matter, but he had come close to the truth. It is therefore all the more surprising that he became acquainted with the work of the bacteriologist Louis Pasteur only in 1865.

Pasteur had arrived at his theory that microorganisms cause fermentation and disease by experiments on fermentation and putrefaction. Lister’s education and his familiarity with the microscope, the process of fermentation, and the natural phenomena of inflammation and coagulation of the blood impelled him to accept Pasteur’s theory as the full revelation of a half-suspected truth. At the start he believed the germs were carried solely by the air. This incorrect opinion proved useful, for it obliged him to adopt the only feasible method of surgically clean treatment. In his attempt to interpose an antiseptic barrier between the wound and the air, he protected the site of operation from infection by the surgeon’s hands and instruments. He found an effective antiseptic in carbolic acid, which had already been used as a means of cleansing foul-smelling sewers and had been empirically advised as a wound dressing in 1863. Lister first successfully used his new method on Aug. 12, 1865; in March 1867 he published a series of cases. The results were dramatic. Between 1865 and 1869, surgical mortality fell from 45 to 15 percent in his Male Accident Ward.

In 1869, Lister succeeded Syme in the chair of Clinical Surgery at Edinburgh. There followed the seven happiest years of his life when, largely as the result of German experiments with antisepsis during the Franco-German War, his clinics were crowded with visitors and eager students. In 1875 Lister made a triumphal tour of the leading surgical centres in Germany. The next year he visited America but was received with little enthusiasm except in Boston and New York City.

Lister’s work had been largely misunderstood in England and the United States. Opposition was directed against his germ theory rather than against his “carbolic treatment.” The majority of practicing surgeons were unconvinced; while not antagonistic, they awaited clear proof that antisepsis constituted a major advance. Lister was not a spectacular operative surgeon and refused to publish statistics. Edinburgh, despite the ancient fame of its medical school, was regarded as a provincial centre. Lister understood that he must convince London before the usefulness of his work would be generally accepted.

His chance came in 1877, when he was offered the chair of Clinical Surgery at King’s College. On Oct. 26, 1877, at King’s College Hospital, Lister performed for the first time the then-revolutionary operation of wiring a fractured patella, or kneecap. It entailed the deliberate conversion of a simple fracture, carrying no risk to life, into a compound fracture, which often resulted in generalized infection and death. Lister’s proposal was widely publicized and aroused much opposition. Its complete success under antiseptic conditions forced surgical opinion throughout the world to accept that his method had added greatly to the safety of operative surgery.

More fortunate than many pioneers, Lister saw the almost universal acceptance of his principle during his working life. He retired from surgical practice in 1893, after the death of his wife in the previous year. Many honours came to him. Created a baronet in 1883, he was made Baron Lister of Lyme Regis in 1897 and appointed one of the 12 original members of the Order of Merit in 1902. He was a gentle, shy, unassuming man, firm in his purpose because he humbly believed himself to be directed by God. He was uninterested in social success or financial reward. In person he was handsome, with a fine athletic figure, fresh complexion, hazel eyes, and silver hair. For some years before his death, however, he was almost completely blind and deaf. Lister wrote no books but contributed many papers to professional journals. These are contained in The Collected Papers of Joseph, Baron Lister, 2 vol. (1909).

#112 2016-03-13 14:52:59

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

89. William Sturgeon, (born May 22, 1783, Whittington, Lancashire, Eng.—died Dec. 4, 1850, Prestwich, Lancashire), English electrical engineer who devised the first electromagnet capable of supporting more than its own weight. This device led to the invention of the telegraph, the electric motor, and numerous other devices basic to modern technology.

Sturgeon, self-educated in electrical phenomena and natural science, spent much time lecturing and conducting electrical experiments. In 1824 he became lecturer in science at the Royal Military College, Addiscombe, Surrey, and the following year he exhibited his first electromagnet. The 7-ounce (200-gram) magnet was able to support 9 pounds (4 kilograms) of iron using the current from a single cell.

Sturgeon built an electric motor in 1832 and invented the commutator, an integral part of most modern electric motors. In 1836, the year he founded the monthly journal Annals of Electricity, he invented the first suspended coil galvanometer, a device for measuring current. He also improved the voltaic battery and worked on the theory of thermoelectricity. From more than 500 kite observations he established that in serene weather the atmosphere is invariably charged positively with respect to the Earth, becoming more positive with increasing altitude.

#113 2016-03-15 16:14:26

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

90. Daniel Gabriel Fahrenheit

Fahrenheit was the scion of a wealthy merchant family that had come to Danzig from Königsberg in the middle of the seventeenth century. His father, Daniel, married Concordia Schumann, the daughter of a Danzig wholesaler. From this union there were five children, three girls and two boys, of whom Daniel was the eldest.

In 1701 Fahrenheit’s parents died suddenly, and his guardian sent him to Amsterdam to learn business. It was there, apparently, that Fahrenheit first became acquainted with, and then fascinated by, the rather specialized and small but rapidly growing business of making scientific instruments. About 1707 he began his years of wandering, during which he acquired the techniques of his trade by observing the practices of other scientists and instrument makers. He traveled throughout Germany, visiting his native city of Danzig as well as Berlin, Halle, Leipzig, and Dresden. He met Olaus Roemer in Copenhagen in 1708, and in 1715 he entered into correspondence with Leibniz about a clock for determining longitude at sea. In 1714 Christian von Wolff published a description of one of Fahrenheit’s early thermometers in the Acta eruditorum. Fahrenheit returned to Amsterdam in 1717 and established himself as a maker of scientific instruments. There he became acquainted with three of the greatest Dutch scientists of his era: W. J. ’s Gravesande, Hermann Boerhaave, and Pieter van Musschenbroek. In 1724 he was admitted to the Royal Society, and in the same year he published in the Philosophical Transactions his only scientific writings, five brief articles in Latin. Just before his death in 1736, Fahrenheit took out a patent on a pumping device that he hoped would be useful in draining Dutch polders.

Fahrenheit’s most significant achievement was his development of the standard thermometric scale that bears his name. Nearly a century had passed since the construction of the first primitive thermometers, and although many of the basic problems of thermometry had been solved, no standard thermometric scale had been developed that would allow scientists in different locations to compare temperatures. About 1701 Olaus Roemer had constructed a spirit thermometer based upon two universal fiducial points. The upper fixed point, determined by the temperature of boiling water, was labeled 60°; the lower fixed point, determined by the temperature of melting ice, was set at 7½°. This latter, seemingly arbitrary, number was chosen so that exactly 1/8 of the entire scale would lie below the freezing point. Since 0° on the Roemer scale approximated the temperature of an ice and salt mixture (widely considered to be the coldest possible temperature), all readings on Roemer’s thermometer were assumed to be positive.

Roemer did not publish anything about his thermometer, and its existence was unknown to most of his contemporaries except Fahrenheit, who thought mistakenly that his own thermometric scale was patterned after Roemer’s. In 1708, while visiting Roemer, Fahrenheit watched the Danish astronomer as he graduated several thermometers. These particular instruments were being graduated to a scale of 22½°, or 3/8 of Roemer’s standard scale of 60°. Since most of the scale would then be in the temperate range, it is probable that Roemer was designing them for meteorological purposes. In a letter addressed to Boerhaave, Fahrenheit gave the following description of Roemer’s procedure.

The problem with Fahrenheit’s account is that he took Roemer’s “blood-warm” (22½°) to be a primary fiducial point, fixed quite literally at the temperature of the human blood. In fact, 22½° on the Roemer scale is considerably below body temperature (by about 15° on the modern Fahrenheit thermometer). Furthermore, Roemer used boiling water (set at 60°), not blood temperature, as his upper fixed point. The simplest explanation for Fahrenheit’s misunderstanding of the Roemer scale seems to lie in the ambiguity of the term “blood-warm.” It can mean either a tepid heat or the exact temperature of the human blood. Roemer probably intended to convey the former meaning, and Fahrenheit obviously understood the latter one.
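The roughly 15° gap can be checked with a short Python sketch. It assumes that Roemer's scale and the modern Fahrenheit scale are both linear between the same two fixed points (melting ice and boiling water), takes Roemer's values of 7½° and 60° from the description of his thermometer, and uses Celsius only as a convenient intermediate; the helper names are hypothetical.

```python
# Assumed fixed points: Roemer puts melting ice at 7.5 and boiling water at 60;
# Celsius uses 0 and 100 for the same two physical points.
ROEMER_ICE = 7.5
ROEMER_BOIL = 60.0


def roemer_to_celsius(r: float) -> float:
    """Linear map from Roemer degrees to Celsius via the shared fixed points."""
    return (r - ROEMER_ICE) * 100.0 / (ROEMER_BOIL - ROEMER_ICE)


def celsius_to_fahrenheit(c: float) -> float:
    """Modern Fahrenheit: 32 at the ice point, 212 at the boiling point."""
    return c * 9.0 / 5.0 + 32.0


blood_warm_c = roemer_to_celsius(22.5)             # Roemer's "blood-warm" mark
blood_warm_f = celsius_to_fahrenheit(blood_warm_c)
gap_f = 98.6 - blood_warm_f                         # distance below normal body temperature

print(f"22.5 Roemer = {blood_warm_c:.1f} C = {blood_warm_f:.1f} F")
print(f"about {gap_f:.0f} F below 98.6 F")
```

Under these assumptions Roemer's "blood-warm" mark comes out near 28.6 °C, or about 83.4 °F, some 15 °F below the conventional 98.6 °F.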

When Fahrenheit began producing thermometers of his own, he graduated them after what he believed were Roemer’s methods. The upper fixed point (labeled 22½°) was determined by placing the bulb of the thermometer in the mouth or armpit of a healthy male. The lower fixed point (labeled 7½°) was determined by an ice and water mixture. In addition, Fahrenheit divided each degree into four parts, so that the upper point became 90° and the lower one 30°. Later (in 1717) he moved the upper point to 96° and the lower one to 32° in order to eliminate “inconvenient and awkward fractions.”

In an article on the boiling points of various liquids, Fahrenheit reported that the boiling temperature of water was 212° on his thermometric scale. This figure was actually several degrees higher than it should have been. After Fahrenheit’s death it became standard practice to graduate Fahrenheit thermometers with the boiling point of water (set at 212°) as the upper fixed point. As a result, normal body temperature became 98.6° instead of Fahrenheit’s 96°. This variant of the Fahrenheit scale became standard throughout Holland and Britain. Today it remains in everyday use chiefly for weather reporting in the United States.
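A second back-of-the-envelope sketch, again assuming linear scales, shows how the choice of upper fixed point moves the numbers. It assumes a normal body temperature of 37 °C and water boiling at 100 °C at standard pressure; the helper `linear_scale` is hypothetical, and Fahrenheit's actual instruments and reference bodies will have differed from these idealized values.

```python
from typing import Callable

BODY_C = 37.0   # assumed normal body temperature, Celsius
BOIL_C = 100.0  # boiling point of water at standard pressure, Celsius


def linear_scale(c_low: float, mark_low: float,
                 c_high: float, mark_high: float) -> Callable[[float], float]:
    """Build a Celsius -> scale-reading map from two fixed points."""
    slope = (mark_high - mark_low) / (c_high - c_low)
    return lambda c: mark_low + (c - c_low) * slope


# Fahrenheit's 1717 calibration: melting ice = 32, body temperature = 96.
ice_body = linear_scale(0.0, 32.0, BODY_C, 96.0)
# Later convention: melting ice = 32, boiling water = 212.
ice_boil = linear_scale(0.0, 32.0, BOIL_C, 212.0)

print(f"water boils at {ice_body(BOIL_C):.1f} on the ice/body scale")
print(f"body temperature is {ice_boil(BODY_C):.1f} on the ice/boil scale")
```

On these assumptions the 32°/96° calibration puts boiling water near 205°, a few degrees below the 212° Fahrenheit reported, while recalibrating on 32° and 212° moves normal body temperature to 98.6°.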

Fahrenheit knew that the boiling temperature of water varied with the atmospheric pressure, and on this principle he constructed a hypsometric thermometer that enabled one to determine the atmospheric pressure directly from a reading of the boiling point of water. He also invented a hydrometer that became a model for subsequent developments.

In the early eighteenth century, it was not at all unusual for a person without formal scientific training to be admitted to the Royal Society. Makers of scientific instruments could be particularly valuable members because they often operated on the farthest frontiers of scientific knowledge, defining universal constants on which to scale their instruments and isolating the variables that affected their operation. In order to make reliable instruments that would be useful to the scientific community as a whole, Fahrenheit was obliged to concern himself with a wide variety of scientific problems: measuring the expansion of glass, assessing the thermometric behavior of mercury and alcohol, describing the effects of atmospheric pressure on the boiling points of liquids, and establishing the densities of various substances. His direct contributions, it is true, were small, but in raising appreciably the level of precision that was obtainable in many scientific observations, Fahrenheit affected profoundly the course of experimental physics in the eighteenth century.

#114 2016-03-16 23:56:25

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

91. Christopher Columbus (between 31 October 1450 and 30 October 1451 – 20 May 1506) was an Italian explorer, navigator, colonizer and citizen of the Republic of Genoa. Under the auspices of the Catholic Monarchs of Spain, he completed four voyages across the Atlantic Ocean. Those voyages, and his efforts to establish permanent settlements on the island of Hispaniola, initiated the Spanish colonization of the New World.

In the context of emerging Western imperialism and economic competition between European kingdoms through the establishment of trade routes and colonies, Columbus's proposal to reach the East Indies by sailing westward eventually received the support of the Spanish Crown, which saw in it a chance to enter the spice trade with Asia through a new westward route. During his first voyage in 1492, instead of arriving at Japan as he had intended, Columbus reached the New World, landing on an island in the Bahamas archipelago that he named "San Salvador". Over the course of three more voyages, Columbus visited the Greater and Lesser Antilles, as well as the Caribbean coast of Venezuela and Central America, claiming all of it for the Crown of Castile.

Though Columbus was not the first European explorer to reach the Americas (having been preceded by the Viking expedition led by Leif Ericson in the 11th century), his voyages led to the first lasting European contact with the Americas, inaugurating a period of European exploration, conquest, and colonization that lasted for several centuries. These voyages therefore had an enormous impact on the historical development of the modern Western world. Columbus spearheaded the transatlantic slave trade and has been accused by several historians of initiating the genocide of the Hispaniola natives. Columbus himself saw his accomplishments primarily in the light of spreading the Christian religion.

Never admitting that he had reached a continent previously unknown to Europeans rather than the East Indies he had set out for, Columbus called the inhabitants of the lands he visited indios (Spanish for "Indians"). Columbus's strained relationship with the Spanish crown and its appointed colonial administrators in America led to his arrest and dismissal as governor of the settlements on the island of Hispaniola in 1500 and later to protracted litigation over the benefits which Columbus and his heirs claimed were owed to them by the crown.

#115 2016-03-17 02:38:11

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

92. Fermat's last theorem earns Andrew Wiles the Abel Prize

Mathematician receives coveted award for solving three-century-old problem in number theory.

Andrew Wiles (in 1998) poses next to Fermat's last theorem — the proof of which has won him the Abel prize.

British number theorist Andrew Wiles has received the 2016 Abel Prize for his solution to Fermat’s last theorem — a problem that stumped some of the world’s greatest minds for three and a half centuries. The Norwegian Academy of Science and Letters announced the award — considered by some to be the 'Nobel of mathematics' — on 15 March.

Wiles, who is 62 and now at the University of Oxford, UK, will receive 6 million kroner (US$700,000) for his 1994 proof of the theorem, which states that there cannot be any positive whole numbers x, y and z such that x^n + y^n = z^n, if n is greater than 2.
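The statement is easy to probe by computer. The Python sketch below (the function name is hypothetical) searches exhaustively for solutions with 1 ≤ x ≤ y below a bound: for n = 2 it turns up Pythagorean triples such as 3, 4, 5, while for n = 3 and n = 4 it finds nothing. A finite search like this proves nothing about larger numbers; the content of the theorem is that no bound, however large, would ever produce a hit when n is greater than 2.

```python
def fermat_solutions(n: int, limit: int) -> list[tuple[int, int, int]]:
    """All (x, y, z) with 1 <= x <= y < limit and x**n + y**n == z**n."""
    # Any solution has z <= x + y < 2 * limit, so index n-th powers up to there.
    powers = {z ** n: z for z in range(1, 2 * limit)}
    hits = []
    for x in range(1, limit):
        for y in range(x, limit):
            z = powers.get(x ** n + y ** n)  # exact integer lookup, no floats
            if z is not None:
                hits.append((x, y, z))
    return hits


print(fermat_solutions(2, 20))   # Pythagorean triples exist for n = 2
print(fermat_solutions(3, 100))  # []
print(fermat_solutions(4, 100))  # []
```

Using Python's exact integers and a dictionary of precomputed powers avoids the rounding errors a floating-point n-th root test could introduce.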

Soon after receiving the news on the morning of 15 March, Wiles told Nature that the award came to him as a “total surprise”.

That he solved a problem considered too hard by so many — and yet a problem relatively simple to state — has made Wiles arguably “the most celebrated mathematician of the twentieth century”, says Martin Bridson, director of Oxford's Mathematical Institute — which is housed in a building named after Wiles. Although his achievement is now two decades old, he continues to inspire young minds, something that is apparent when school children show up at his public lectures. “They treat him like a rock star,” Bridson says. “They line up to have their photos taken with him.”

Lifelong quest

Wiles's story has become a classic tale of tenacity and resilience. While a faculty member at Princeton University in New Jersey in the 1980s, he embarked on a solitary, seven-year quest to solve the problem, working in his attic without telling anyone except his wife. He went on to make a historic announcement at a conference in his hometown of Cambridge, UK, in June 1993, only to hear from a colleague two months later that his proof contained a serious mistake. But after another frantic year of work, and with the help of one of his former students, Richard Taylor (now at the Institute for Advanced Study in Princeton), he was able to patch up the proof. When the resulting two papers were published in 1995, they made up an entire issue of the Annals of Mathematics [1, 2].

But after Wiles's original claim had already made front-page news around the world, the pressure on the shy mathematician to save his work almost crippled him. “Doing mathematics in that kind of overexposed way is certainly not my style, and I have no wish to repeat it,” he said in a BBC documentary in 1996, still visibly shaken by the experience. “It’s almost unbelievable that he was able to get something done” at that point, says John Rognes, a mathematician at the University of Oslo and chair of the Abel Committee.

“It was very, very intense,” says Wiles. “Unfortunately as human beings we succeed by trial and error. It’s the people who overcome the setbacks who succeed.”

Wiles first learnt about French mathematician Pierre de Fermat as a child growing up in Cambridge. As he was told, Fermat formulated his eponymous theorem in a handwritten note in the margins of a book in 1637: “I have a truly marvellous demonstration of this proposition which this margin is too narrow to contain,” he wrote (in Latin).

“I think it has a very romantic story,” Wiles says of Fermat's idea. “The kind of story that catches people’s imagination when they’re young and thinking of entering mathematics.”

But although he may have thought he had a proof at the time, the only proof of his that survives covers a single special case, the exponent n = 4. A century later, Leonhard Euler proved it for n = 3, and Sophie Germain's work led to a proof for infinitely many exponents, but still not for all. Experts now generally agree that the most general form of the statement would have been impossible to crack without mathematical tools that became available only in the twentieth century.

In 1983, German mathematician Gerd Faltings, now at the Max Planck Institute for Mathematics in Bonn, took a huge leap forward by proving that Fermat's statement had, at most, a finite number of solutions, although he could not show that the number should be zero. (In fact, he proved a result viewed by specialists as deeper and more interesting than Fermat's last theorem itself; it demonstrated that a broader class of equations has, at most, a finite number of solutions.)

To narrow it to zero, Wiles took a different approach: he proved the Shimura–Taniyama conjecture, a 1950s proposal that describes how two very different branches of mathematics, called elliptic curves and modular forms, are conceptually equivalent. Others had shown that proof of this equivalence would imply proof of Fermat, and, like Faltings' result, most mathematicians regard this as much more profound than Fermat’s last theorem itself. (The full citation for the Abel Prize states that it was awarded to Wiles “for his stunning proof of Fermat’s Last Theorem by way of the modularity conjecture for semistable elliptic curves, opening a new era in number theory.”)

The link between the Shimura–Taniyama conjecture and Fermat's theorem was first proposed in 1984 by number theorist Gerhard Frey, now at the University of Duisburg-Essen in Germany. He claimed that any counterexample to Fermat's last theorem would also lead to a counterexample to the Shimura–Taniyama conjecture.

Kenneth Ribet, a mathematician at the University of California, Berkeley, soon proved that Frey was right, and therefore that anyone who proved the more recent conjecture would also bag Fermat's. Still, that did not seem to make the task any easier. “Andrew Wiles is probably one of the few people on Earth who had the audacity to dream that he can actually go and prove this conjecture,” Ribet told the BBC in the 1996 documentary.

Fermat's last theorem is also connected to another deep question in number theory called the abc conjecture, Rognes points out. Mathematician Shinichi Mochizuki of Kyoto University's Research Institute for Mathematical Sciences in Japan claimed to have proved that conjecture in 2012, although his roughly 500-page proof is still being vetted by his peers. Some mathematicians say that Mochizuki's work could provide, as an extra perk, an alternative way of proving Fermat, although Wiles says he views those hopes with scepticism.

Wiles helped to arrange an Oxford workshop on Mochizuki's work last December, although his research interests are somewhat different. Lately, he has focused his efforts on another major, unsolved conjecture in number theory, which has been listed as one of seven Millennium Prize problems posed by the Clay Mathematics Institute in Oxford, UK. He still works very hard and thinks about mathematics for most of his waking hours, including as he walks to the office in the morning. “He doesn’t want to cycle,” Bridson says. “He thinks it would be a bit dangerous for him to do it while thinking about mathematics.”

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#116 2016-03-17 06:12:32

- Primenumbers

- Member

- Registered: 2013-01-22

- Posts: 149

Re: crème de la crème

93. Me!

"Time not important. Only life important." - The Fifth Element 1997


#117 2016-03-18 16:59:10

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

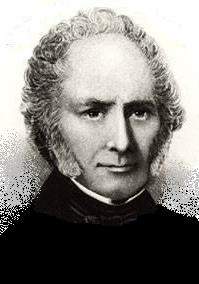

93. Niels Henrik Abel

Niels Henrik Abel, (born August 5, 1802, island of Finnøy, near Stavanger, Norway—died April 6, 1829, Froland), Norwegian mathematician, a pioneer in the development of several branches of modern mathematics.

Abel’s father was a poor Lutheran minister who moved his family to the parish of Gjerstad, near the town of Risør in southeast Norway, soon after Niels Henrik was born. In 1815 Niels entered the cathedral school in Oslo, where his mathematical talent was recognized in 1817 with the arrival of a new mathematics teacher, Bernt Michael Holmboe, who introduced him to the classics in mathematical literature and proposed original problems for him to solve. Abel studied the mathematical works of the 17th-century Englishman Sir Isaac Newton, the 18th-century Swiss Leonhard Euler, and his contemporaries the Frenchman Joseph-Louis Lagrange and the German Carl Friedrich Gauss in preparation for his own research.

Abel’s father died in 1820, leaving the family in straitened circumstances, but Holmboe contributed and raised funds that enabled Abel to enter the University of Christiania (Oslo) in 1821. Abel obtained a preliminary degree from the university in 1822 and continued his studies independently with further subsidies obtained by Holmboe.

Abel’s first papers, published in 1823, were on functional equations and integrals; he was the first person to formulate and solve an integral equation. His friends urged the Norwegian government to grant him a fellowship for study in Germany and France. In 1824, while waiting for a royal decree to be issued, he published at his own expense his proof of the impossibility of solving algebraically the general equation of the fifth degree, which he hoped would bring him recognition. He sent the pamphlet to Gauss, who dismissed it, failing to recognize that the famous problem had indeed been settled.

Abel spent the winter of 1825–26 with Norwegian friends in Berlin, where he met August Leopold Crelle, civil engineer and self-taught enthusiast of mathematics, who became his close friend and mentor. With Abel’s warm encouragement, Crelle founded the Journal für die reine und angewandte Mathematik (“Journal for Pure and Applied Mathematics”), commonly known as Crelle’s Journal. The first volume (1826) contains papers by Abel, including a more elaborate version of his work on the quintic equation. Other papers dealt with equation theory, calculus, and theoretical mechanics. Later volumes presented Abel’s theory of elliptic functions, which are complex functions that generalize the usual trigonometric functions.

In 1826 Abel went to Paris, then the world centre for mathematics, where he called on the foremost mathematicians and completed a major paper on the theory of integrals of algebraic functions. His central result, known as Abel’s theorem, is the basis for the later theory of Abelian integrals and Abelian functions, a generalization of elliptic function theory to functions of several variables. However, Abel’s visit to Paris was unsuccessful in securing him an appointment, and the memoir he submitted to the French Academy of Sciences was lost.

Abel returned to Norway heavily in debt and suffering from tuberculosis. He subsisted by tutoring, supplemented by a small grant from the University of Christiania and, beginning in 1828, by a temporary teaching position. His poverty and ill health did not decrease his production; he wrote a great number of papers during this period, principally on equation theory and elliptic functions. Among them was a theory of polynomial equations with Abelian (commutative) groups. He rapidly developed the theory of elliptic functions in competition with the German Carl Gustav Jacobi. By this time Abel’s fame had spread to all mathematical centres, and strong efforts were made to secure a suitable position for him by a group from the French Academy, who addressed King Bernadotte of Norway-Sweden; Crelle also worked to secure a professorship for him in Berlin.

In the fall of 1828 Abel became seriously ill, and his condition deteriorated on a sled trip at Christmastime to visit his fiancée at Froland, where he died. The French Academy published his memoir in 1841.


#118 2016-03-19 16:38:20

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,380

Re: crème de la crème

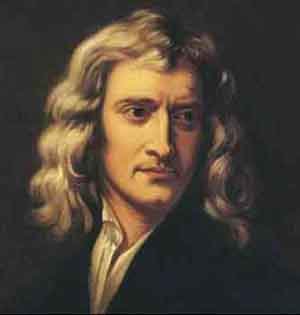

94. Leonhard Euler, (born April 15, 1707, Basel, Switzerland—died September 18, 1783, St. Petersburg, Russia), Swiss mathematician and physicist, one of the founders of pure mathematics. He not only made decisive and formative contributions to the subjects of geometry, calculus, mechanics, and number theory but also developed methods for solving problems in observational astronomy and demonstrated useful applications of mathematics in technology and public affairs.

Euler’s mathematical ability earned him the esteem of Johann Bernoulli, one of the foremost mathematicians in Europe at that time, and of his sons Daniel and Nicolas. In 1727 he moved to St. Petersburg, where he became an associate of the St. Petersburg Academy of Sciences and in 1733 succeeded Daniel Bernoulli to the chair of mathematics. Through the numerous books and memoirs he submitted to the academy, Euler carried integral calculus to a higher degree of perfection, developed the theory of trigonometric and logarithmic functions, reduced analytical operations to a greater simplicity, and threw new light on nearly all parts of pure mathematics. Overtaxing himself, Euler in 1735 lost the sight of one eye. Then, invited by Frederick the Great in 1741, he became a member of the Berlin Academy, where for 25 years he produced a steady stream of publications, many of which he contributed to the St. Petersburg Academy, which granted him a pension.

In 1748, in his Introductio in analysin infinitorum, he developed the concept of function in mathematical analysis, through which variables are related to each other and in which he advanced the use of infinitesimals and infinite quantities. He did for modern analytic geometry and trigonometry what the Elements of Euclid had done for ancient geometry, and the resulting tendency to render mathematics and physics in arithmetical terms has continued ever since. He is known for familiar results in elementary geometry, for example the Euler line through the orthocentre (the intersection of the altitudes in a triangle), the circumcentre (the centre of the circumscribed circle of a triangle), and the barycentre (the “centre of gravity,” or centroid) of a triangle. He was responsible for treating trigonometric functions (i.e., the relationship of an angle to two sides of a triangle) as numerical ratios rather than as lengths of geometric lines, and for relating them to complex numbers (e.g., 3 + 2√(−1)) through the so-called Euler identity $e^{i\theta} = \cos\theta + i\sin\theta$. He discovered the imaginary logarithms of negative numbers and showed that each complex number has an infinite number of logarithms.

Euler’s calculus textbooks, Institutiones calculi differentialis (1755) and Institutiones calculi integralis (1768–70), have served as prototypes to the present because they contain formulas of differentiation and numerous methods of indefinite integration, many of which he invented himself, for determining the work done by a force and for solving geometric problems. He also made advances in the theory of linear differential equations, which are useful in solving problems in physics. Thus, he enriched mathematics with substantial new concepts and techniques. He introduced many current notations, such as Σ for the sum; the symbol e for the base of natural logarithms; a, b, and c for the sides of a triangle and A, B, and C for the opposite angles; the letter f and parentheses for a function; and i for √(−1). He also popularized the use of the symbol π (devised by British mathematician William Jones) for the ratio of circumference to diameter in a circle.
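Euler's identity e^(iθ) = cos θ + i sin θ, together with his observation that complex logarithms are many-valued, can be checked numerically. This is a hedged illustration, not Euler's own method: it uses Python's standard `cmath` module, and the helper names `euler_formula_error` and `logarithms` are hypothetical, introduced only for this example. Setting θ = π gives e^(iπ) = −1, so iπ is one logarithm of −1; adding any integer multiple of 2πi gives another, since e^(2πik) = 1 for every integer k.

```python
import cmath
import math


def euler_formula_error(theta: float) -> float:
    """Absolute difference between e^(i*theta) and cos(theta) + i*sin(theta).

    Hypothetical helper: a value near zero confirms Euler's identity numerically.
    """
    lhs = cmath.exp(1j * theta)
    rhs = complex(math.cos(theta), math.sin(theta))
    return abs(lhs - rhs)


def logarithms(z: complex, branches: range) -> list[complex]:
    """A few of the infinitely many logarithms of a nonzero complex number z.

    Hypothetical helper: each value is ln|z| + i*(arg z + 2*pi*k) for an
    integer k; k = 0 is the principal value returned by cmath.log.
    """
    principal = cmath.log(z)
    return [principal + 2j * math.pi * k for k in branches]


if __name__ == "__main__":
    # Euler's identity holds, up to floating-point rounding, for any real angle.
    for theta in (0.0, 1.0, math.pi / 3, math.pi):
        print(theta, euler_formula_error(theta))

    # A negative number has imaginary logarithms: i*pi, i*pi - 2*pi*i, i*pi + 2*pi*i, ...
    for w in logarithms(-1, range(-1, 2)):
        # Each branch exponentiates back to -1 (up to rounding).
        print(w, cmath.exp(w))
```

Because of floating-point rounding the printed errors are tiny rather than exactly zero. `cmath.log` returns only the principal value (imaginary part between −π and π), so the other logarithms have to be generated by adding multiples of 2πi, as the sketch does.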