Math Is Fun Forum

#726 2020-07-09 00:53:08

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 51,596

Re: Miscellany

604) Carpentry

Carpentry, the art and trade of cutting, working, and joining timber. The term includes both structural timberwork in framing and items such as doors, windows, and staircases.

In the past, when buildings were often wholly constructed of timber framing, the carpenter played a considerable part in building construction; along with the mason he was the principal building worker. The scope of the carpenter’s work has altered, however, with the passage of time. Increasing use of concrete and steel construction, especially for floors and roofs, means that the carpenter plays a smaller part in making the framework of buildings, except for houses and small structures. On the other hand, in the construction of temporary formwork and shuttering for concrete building, the carpenter’s work has greatly increased.

Because wood is widely distributed throughout the world, it has been used as a building material for centuries; many of the tools and techniques of carpentry, perfected after the Middle Ages, have changed little since that time. On the other hand, world supplies of wood are shrinking, and the increasing cost of obtaining, finishing, and distributing timber has brought continuing revision in traditional practices. Further, because much traditional construction wastes wood, engineering calculation has supplanted empirical and rule-of-thumb methods. The development of laminated timbers such as plywood, and the practice of prefabrication have simplified and lowered the cost of carpentry.

The framing of houses generally proceeds in one of two ways: in platform (or Western) framing floors are framed separately, story by story; in balloon framing the vertical members (studs) extend the full height of the building from foundation plate to rafter plate. The timber used in the framing is put to various uses. The studs usually measure 1.5 × 3.5 inches (4 × 9 cm; known as a “2 × 4”) and are spaced at regular intervals of 16 inches (41 cm). They are anchored to a horizontal foundation plate at the bottom and a plate at the top, both 2 × 4 timber. Frequently stiffening braces are built between studs at midpoint and are known as noggings. Window and door openings are boxed in with horizontal 2 × 4 lumber called headers at the top and sills at the bottom.
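The regular 16-inch spacing makes a rough stud count for a straight wall easy to estimate. The sketch below is illustrative only: the helper name `studs_for_wall` and the rule of one stud per spacing interval plus a closing end stud are assumptions, and it ignores the extra studs needed at corners and around door and window openings.

```python
import math

STUD_SPACING_IN = 16  # spacing quoted in the text, in inches


def studs_for_wall(wall_length_in: float, spacing_in: float = STUD_SPACING_IN) -> int:
    """Estimate the studs in a straight wall (hypothetical helper).

    Counts one stud per spacing interval plus one stud to close the far end.
    Corners, headers, sills and openings are not accounted for.
    """
    if wall_length_in <= 0 or spacing_in <= 0:
        raise ValueError("lengths must be positive")
    return math.ceil(wall_length_in / spacing_in) + 1


if __name__ == "__main__":
    # A 12-foot wall is 144 inches long.
    print(studs_for_wall(144))
```

A real framing plan would add studs for each opening and corner, so this figure is a lower bound rather than a materials list.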

Floors are framed by anchoring 1.5 × 11-inch (4 × 28-centimetre) lumber called joists on the foundation for the first floor and on the plates of upper floors. They are set on edge and placed in parallel rows across the width of the house. Crisscross bracings that help them stay parallel are called herringbone struts. In later stages, a subfloor of planks or plywood is laid across the joists, and on top of this is placed the finished floor—narrower hardwood planks that fit together with tongue-and-groove edges or any variety of covering.

The traditional pitched roof is made from inclined studs or rafters that meet at the peak. For wide roof spans extra support is provided by adding a horizontal cross brace, making the rafters look like the letter A, with a V-shaped diagonal support on the cross bar. Such supports are called trusses. The principal timbers used for framing and most carpentry in general are in the conifer, or softwood, group and include various species of pine, fir, spruce, and cedar. The most commonly used timber species in the United States are Canadian spruces and Douglas fir, British Columbian pine, and western red cedar. Cedar is useful for roofing and siding shingles as well as framing, since it has a natural resistance to weathering and needs no special preservation treatment.

A carpenter’s work may also extend to interior jobs, requiring some of the skills of a joiner. These jobs include making door frames, cabinets, countertops, and assorted molding and trim. Much of the skill involves joining wood inconspicuously for the sake of appearance, as opposed to the joining of unseen structural pieces.

The standard hand tools used by a carpenter are hammers, pliers, screwdrivers, and awls for driving and extracting nails, setting screws, and punching guide holes, respectively. Planes are hand-held blades used to reduce and smooth wood surfaces, and chisels are blades that can be hit with a mallet to cut out forms in wood. The crosscut saw cuts across wood grain, and the rip saw cuts with the grain. Tenon and dovetail saws are used to make precise cuts for the indicated joints, and a keyhole saw cuts out holes. The level shows whether a surface is perfectly horizontal or vertical, and the try square tests the right angle between adjacent surfaces. These instruments are complemented by the use of power tools.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#727 2020-07-10 01:02:08

- Jai Ganesh

605) Industry

Industry, a group of productive enterprises or organizations that produce or supply goods, services, or sources of income. In economics, industries are customarily classified as primary, secondary, and tertiary; secondary industries are further classified as heavy and light.

Primary Industry

This sector of a nation’s economy includes agriculture, forestry, fishing, mining, quarrying, and the extraction of minerals. It may be divided into two categories: genetic industry, including the production of raw materials that may be increased by human intervention in the production process; and extractive industry, including the production of exhaustible raw materials that cannot be augmented through cultivation.

The genetic industries include agriculture, forestry, and livestock management and fishing—all of which are subject to scientific and technological improvement of renewable resources. The extractive industries include the mining of mineral ores, the quarrying of stone, and the extraction of mineral fuels.

Secondary Industry

This sector, also called manufacturing industry, (1) takes the raw materials supplied by primary industries and processes them into consumer goods, or (2) further processes goods that other secondary industries have transformed into products, or (3) builds capital goods used to manufacture consumer and nonconsumer goods. Secondary industry also includes energy-producing industries (e.g., hydroelectric industries) as well as the construction industry.

Secondary industry may be divided into heavy, or large-scale, and light, or small-scale, industry. Large-scale industry generally requires heavy capital investment in plants and machinery, serves a large and diverse market including other manufacturing industries, has a complex industrial organization and frequently a skilled specialized labour force, and generates a large volume of output. Examples would include petroleum refining, steel and iron manufacturing, motor vehicle and heavy machinery manufacture, cement production, nonferrous metal refining, meat-packing, and hydroelectric power generation.

Light, or small-scale, industry may be characterized by the nondurability of manufactured products and a smaller capital investment in plants and equipment, and it may involve nonstandard products, such as customized or craft work. The labour force may be either low skilled, as in textile work and clothing manufacture, food processing, and plastics manufacture, or highly skilled, as in electronics and computer hardware manufacture, precision instrument manufacture, gemstone cutting, and craft work.

Tertiary Industry

This sector, also called service industry, includes industries that, while producing no tangible goods, provide services or intangible gains or generate wealth. In free market and mixed economies this sector generally has a mix of private and government enterprise.

The industries of this sector include banking, finance, insurance, investment, and real estate services; wholesale, retail, and resale trade; transportation, information, and communications services; professional, consulting, legal, and personal services; tourism, hotels, restaurants, and entertainment; repair and maintenance services; education and teaching; and health, social welfare, administrative, police, security, and defense services.

#728 2020-07-11 01:02:55

- Jai Ganesh

606) Emu

Emu, flightless bird of Australia and second largest living bird: the emu is more than 1.5 metres (5 feet) tall and may weigh more than 45 kg (100 pounds). The emu is the sole living member of the family Dromaiidae (or Dromiceiidae) of the order Casuariiformes, which also includes the cassowaries.

The common emu, Dromaius (or Dromiceius) novaehollandiae, the only survivor of several forms exterminated by settlers, is stout-bodied and long-legged, like its relative the cassowary. Both males and females are brownish, with dark gray head and neck. Emus can dash away at nearly 50 km (30 miles) per hour; if cornered they kick with their big three-toed feet. Emus mate for life; the male incubates from 7 to 10 dark green eggs, 13 cm (5 inches) long, in a ground nest for about 60 days. The striped young soon run with the adults. In small flocks emus forage for fruits and insects but may also damage crops. The peculiar structure of the trachea of the emu is correlated with the loud booming note of the bird during the breeding season. Three subspecies are recognized, inhabiting northern, southeastern, and southwestern Australia; a fourth, now extinct, lived on Tasmania.

#729 2020-07-12 01:15:03

- Jai Ganesh

607) Nuclear Fusion

Fusion reactors have been getting a lot of press recently because they offer some major advantages over other power sources. They will use abundant sources of fuel, they will not leak radiation above normal background levels and they will produce less radioactive waste than current fission reactors.

Nobody has put the technology into practice yet, but working reactors aren't actually that far off. Fusion reactors are now in experimental stages at several laboratories in the United States and around the world.

A consortium from the United States, Russia, Europe and Japan has proposed to build a fusion reactor called the International Thermonuclear Experimental Reactor (ITER) in Cadarache, France, to demonstrate the feasibility of using sustained fusion reactions for making electricity. In this article, we'll learn about nuclear fusion and see how the ITER reactor will work.

Current nuclear reactors use nuclear fission to generate power. In nuclear fission, you get energy from splitting one atom into two atoms. In a conventional nuclear reactor, high-energy neutrons split heavy atoms of uranium, yielding large amounts of energy, radiation and radioactive wastes that last for long periods of time.

In nuclear fusion, you get energy when two atoms join together to form one. In a fusion reactor, hydrogen atoms come together to form helium atoms, neutrons and vast amounts of energy. It's the same type of reaction that powers hydrogen bombs and the sun. This would be a cleaner, safer, more efficient and more abundant source of power than nuclear fission.

There are several types of fusion reactions. Most involve the isotopes of hydrogen called deuterium and tritium:

• Proton-proton chain - This sequence is the predominant fusion reaction scheme used by stars such as the sun. Two pairs of protons fuse to form two deuterium atoms. Each deuterium atom combines with a proton to form a helium-3 atom. Two helium-3 atoms combine to form beryllium-6, which is unstable. Beryllium-6 decays into one helium-4 atom and two protons. These reactions produce high-energy particles (protons, electrons, neutrinos, positrons) and radiation (light, gamma rays).

• Deuterium-deuterium reactions - Two deuterium atoms combine to form a helium-3 atom and a neutron.

• Deuterium-tritium reactions - One atom of deuterium and one atom of tritium combine to form a helium-4 atom and a neutron. Most of the energy released is in the form of the high-energy neutron.

Conceptually, harnessing nuclear fusion in a reactor is a no-brainer. But it has been extremely difficult for scientists to come up with a controllable, non-destructive way of doing it. To understand why, we need to look at the necessary conditions for nuclear fusion.

ISOTOPES

Isotopes are atoms of the same element that have the same number of protons and electrons but a different number of neutrons. Some common isotopes in fusion are:

• Protium is a hydrogen isotope with one proton and no neutrons. It is the most common form of hydrogen and the most common element in the universe.

• Deuterium is a hydrogen isotope with one proton and one neutron. It is not radioactive and can be extracted from seawater.

• Tritium is a hydrogen isotope with one proton and two neutrons. It is radioactive, with a half-life of about 12 years. Tritium occurs naturally only in trace amounts but can be made by bombarding lithium with neutrons.

• Helium-3 is a helium isotope with two protons and one neutron.

• Helium-4 is the most common, naturally occurring form of helium, with two protons and two neutrons.
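Because tritium is radioactive, a stored supply shrinks over time. The following is a minimal sketch of that decay, assuming simple exponential decay and a half-life of 12.3 years (a commonly quoted value, treated here as an assumption); the function name `fraction_remaining` is illustrative.

```python
TRITIUM_HALF_LIFE_YEARS = 12.3  # assumed value for this sketch


def fraction_remaining(years: float, half_life_years: float = TRITIUM_HALF_LIFE_YEARS) -> float:
    """Fraction of an initial sample left after `years` of exponential decay."""
    if years < 0 or half_life_years <= 0:
        raise ValueError("years must be >= 0 and half-life must be > 0")
    return 0.5 ** (years / half_life_years)


if __name__ == "__main__":
    for elapsed in (1, 5, 12.3, 25):
        print(f"after {elapsed} years: {fraction_remaining(elapsed):.3f} of the sample remains")
```

After one half-life half of the sample is left, and after two half-lives a quarter, which is why tritium has to be bred close to where it is used rather than stockpiled.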

When hydrogen atoms fuse, the nuclei must come together. However, the protons in each nucleus will tend to repel each other because they have the same charge (positive). If you've ever tried to place two magnets together and felt them push apart from each other, you've experienced this principle first-hand.

To achieve fusion, you need to create special conditions to overcome this tendency. Here are the conditions that make fusion possible:

High temperature - The high temperature gives the hydrogen atoms enough energy to overcome the electrical repulsion between the protons.

• Fusion requires temperatures of about 100 million kelvins (approximately six times hotter than the sun's core).

• At these temperatures, hydrogen is a plasma, not a gas. Plasma is a high-energy state of matter in which all the electrons are stripped from atoms and move freely about.

• The sun achieves these temperatures by its large mass and the force of gravity compressing this mass in the core. We must use energy from microwaves, lasers and ion particles to achieve these temperatures.

High pressure - Pressure squeezes the hydrogen atoms together. They must be within 1 × 10^-15 meters of each other to fuse.

• The sun uses its mass and the force of gravity to squeeze hydrogen atoms together in its core.

• We must squeeze hydrogen atoms together by using intense magnetic fields, powerful lasers or ion beams.
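The two figures above, 100 million kelvins and a separation of 10^-15 meters, can be compared on a common energy scale. The sketch below is a rough estimate under stated assumptions: it treats the nuclei as two point charges of one elementary charge each and uses k_B·T as the characteristic thermal energy. The function names are illustrative.

```python
# Physical constants in SI units
COULOMB_CONSTANT = 8.9875517923e9     # N m^2 / C^2
ELEMENTARY_CHARGE = 1.602176634e-19   # C
BOLTZMANN = 1.380649e-23              # J / K


def coulomb_barrier_ev(separation_m: float) -> float:
    """Electrostatic potential energy, in eV, of two singly charged nuclei."""
    energy_joules = COULOMB_CONSTANT * ELEMENTARY_CHARGE ** 2 / separation_m
    return energy_joules / ELEMENTARY_CHARGE


def thermal_energy_ev(temperature_k: float) -> float:
    """Characteristic thermal energy k_B * T, in eV."""
    return BOLTZMANN * temperature_k / ELEMENTARY_CHARGE


if __name__ == "__main__":
    barrier = coulomb_barrier_ev(1e-15)
    thermal = thermal_energy_ev(1e8)
    print(f"repulsion at 1e-15 m: {barrier / 1e6:.2f} MeV")
    print(f"thermal energy at 1e8 K: {thermal / 1e3:.1f} keV")
    print(f"barrier / thermal: {barrier / thermal:.0f}")
```

Under these assumptions the repulsion energy is roughly 1.4 MeV while the typical thermal energy is only about 8.6 keV, so the average nucleus falls well short of the barrier. Fusion in a reactor relies on the fastest nuclei in the plasma and on quantum tunnelling through the barrier, which is also why confinement has to hold the plasma long enough for enough collisions to occur.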

With current technology, we can only achieve the temperatures and pressures necessary to make deuterium-tritium fusion possible. Deuterium-deuterium fusion requires higher temperatures that may be possible in the future. Ultimately, deuterium-deuterium fusion will be better because it is easier to extract deuterium from seawater than to make tritium from lithium. Also, deuterium is not radioactive, and deuterium-deuterium reactions will yield more energy.

There are two ways to achieve the temperatures and pressures necessary for hydrogen fusion to take place:

• Magnetic confinement uses magnetic and electric fields to heat and squeeze the hydrogen plasma. The ITER project in France is using this method.

• Inertial confinement uses laser beams or ion beams to squeeze and heat the hydrogen plasma. Scientists are studying this experimental approach at the National Ignition Facility of Lawrence Livermore National Laboratory in the United States.

Let's look at magnetic confinement first. Here's how it would work:

Microwaves, electricity and neutral particle beams from accelerators heat a stream of hydrogen gas. This heating turns the gas into plasma. This plasma gets squeezed by super-conducting magnets, thereby allowing fusion to occur. The most efficient shape for the magnetically confined plasma is a donut shape (toroid).

A reactor of this shape is called a tokamak. The ITER tokamak will be a self-contained reactor whose parts are in various cassettes. These cassettes can be easily inserted and removed without having to tear down the entire reactor for maintenance. The tokamak will have a plasma toroid with a 2-meter inner radius and a 6.2-meter outer radius.

Let's take a closer look at the ITER fusion reactor to see how magnetic confinement works.

"Tokamak" is a Russian acronym for "toroidal chamber with axial magnetic field."

The main parts of the ITER tokamak reactor are:

• Vacuum vessel - holds the plasma and keeps the reaction chamber in a vacuum

• Neutral beam injector (ion cyclotron system) - injects particle beams from the accelerator into the plasma to help heat the plasma to critical temperature

• Magnetic field coils (poloidal, toroidal) - super-conducting magnets that confine, shape and contain the plasma using magnetic fields

• Transformers/Central solenoid - supply electricity to the magnetic field coils

• Cooling equipment (cryostat, cryopump) - cool the magnets

• Blanket modules - made of lithium; absorb heat and high-energy neutrons from the fusion reaction

• Divertors - exhaust the helium products of the fusion reaction

Here's how the process will work:

1. The fusion reactor will heat a stream of deuterium and tritium fuel to form high-temperature plasma. It will squeeze the plasma so that fusion can take place. The power needed to start the fusion reaction will be about 70 megawatts, but the power yield from the reaction will be about 500 megawatts. The fusion reaction will last from 300 to 500 seconds. (Eventually, there will be a sustained fusion reaction.)

2. The lithium blankets outside the plasma reaction chamber will absorb high-energy neutrons from the fusion reaction to make more tritium fuel. The blankets will also get heated by the neutrons.

3. The heat will be transferred by a water-cooling loop to a heat exchanger to make steam.

4. The steam will drive electrical turbines to produce electricity.

5. The steam will be condensed back into water to absorb more heat from the reactor in the heat exchanger.
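The power figures quoted in step 1 give a quick feel for what the reactor is expected to return. The sketch below uses only the numbers stated above (about 70 megawatts in, about 500 megawatts out, pulses of 300 to 500 seconds); the function names are illustrative, and the calculation ignores conversion losses in the steam cycle.

```python
def power_gain(output_mw: float, input_mw: float) -> float:
    """Ratio of fusion power produced to heating power supplied."""
    if input_mw <= 0:
        raise ValueError("input power must be positive")
    return output_mw / input_mw


def pulse_energy_gj(power_mw: float, duration_s: float) -> float:
    """Energy released over one pulse, in gigajoules (1 MW for 1 s = 1 MJ)."""
    return power_mw * duration_s / 1000.0


if __name__ == "__main__":
    print(f"gain: {power_gain(500, 70):.1f}")
    for seconds in (300, 500):
        print(f"{seconds} s pulse: {pulse_energy_gj(500, seconds):.0f} GJ")
```

With these inputs the reaction returns about seven times the power used to start it, and each pulse releases between 150 and 250 gigajoules of thermal energy.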

Initially, the ITER tokamak will test the feasibility of a sustained fusion reactor and eventually will become a test fusion power plant.

The main application for fusion is in making electricity. Nuclear fusion can provide a safe, clean energy source for future generations with several advantages over current fission reactors:

• Abundant fuel supply - Deuterium can be readily extracted from seawater, and excess tritium can be made in the fusion reactor itself from lithium, which is readily available in the Earth's crust. Uranium for fission is rare, and it must be mined and then enriched for use in reactors.

• Safe - The amounts of fuel used for fusion are small compared to fission reactors. This is so that uncontrolled releases of energy do not occur. Most fusion reactors make less radiation than the natural background radiation we live with in our daily lives.

• Clean - No combustion occurs in nuclear power (fission or fusion), so there is no air pollution.

• Less nuclear waste - Fusion reactors will not produce high-level nuclear wastes like their fission counterparts, so disposal will be less of a problem. In addition, the wastes will not be of weapons-grade nuclear materials as is the case in fission reactors.

NASA is currently looking into developing small-scale fusion reactors for powering deep-space rockets. Fusion propulsion would boast an unlimited fuel supply (hydrogen), would be more efficient and would ultimately lead to faster rockets.

Nuclear Fusion

With its high energy yields, low nuclear waste production, and lack of air pollution, fusion, the same source that powers stars, could provide an alternative to conventional energy sources. But what drives this process?

What is fusion?

Fusion occurs when two light atoms bond together, or fuse, to make a heavier one. The total mass of the new atom is less than that of the two that formed it; the "missing" mass is given off as energy, as described by Albert Einstein's famous "E=mc^2" equation.

In order for the nuclei of two atoms to overcome the repulsion caused by their having the same charge, high temperatures and pressures are required. Temperatures must reach approximately six times those found in the core of the sun. At this heat, the hydrogen is no longer a gas but a plasma, an extremely high-energy state of matter where electrons are stripped from their atoms.

Fusion is the dominant source of energy for stars in the universe. It is also a potential energy source on Earth. When set off in an intentionally uncontrolled chain reaction, it drives the hydrogen bomb. Fusion is also being considered as a possibility to power crafts through space.

Fusion differs from fission, which splits atoms and results in substantial radioactive waste, which is hazardous.

Cooking up energy

There are several "recipes" for cooking up fusion, which rely on different atomic combinations.

Deuterium-Tritium fusion: The most promising combination for power on Earth today is the fusion of a deuterium atom with a tritium one. The process, which requires temperatures of approximately 72 million degrees F (39 million degrees Celsius), produces 17.6 million electron volts of energy.
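The 17.6 million electron volts figure follows from the "missing" mass and E=mc^2. The sketch below checks it using standard atomic masses in unified atomic mass units and the conversion 1 u ≈ 931.494 MeV; these values are not given in the text and are supplied here as inputs, and the variable names are illustrative.

```python
# Atomic masses in unified atomic mass units (u)
MASS_U = {
    "deuterium": 2.014102,
    "tritium": 3.016049,
    "helium4": 4.002603,
    "neutron": 1.008665,
}
MEV_PER_U = 931.494  # energy equivalent of 1 u, from E = m * c^2


def dt_fusion_energy_mev() -> float:
    """Energy released by D + T -> He-4 + n, from the mass difference."""
    mass_before = MASS_U["deuterium"] + MASS_U["tritium"]
    mass_after = MASS_U["helium4"] + MASS_U["neutron"]
    return (mass_before - mass_after) * MEV_PER_U


if __name__ == "__main__":
    print(f"energy released: {dt_fusion_energy_mev():.2f} MeV")
```

The products are lighter than the reactants by roughly 0.019 u, which corresponds to about 17.6 MeV per reaction.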

Deuterium is a promising ingredient because it is an isotope of hydrogen, with a nucleus containing a single proton and a single neutron. In turn, hydrogen is a key part of water, which covers the Earth. A gallon of seawater (3.8 liters) could produce as much energy as 300 gallons (1,136 liters) of gasoline. Another hydrogen isotope, tritium, contains one proton and two neutrons. It is more challenging to locate in large quantities, due to its roughly 12-year half-life (half of the quantity decays about every 12 years). Rather than attempting to find it naturally, the most reliable method is to bombard lithium, an element found in Earth's crust, with neutrons to create the isotope.

Deuterium-deuterium fusion: Theoretically more promising than deuterium-tritium because of the ease of obtaining the two deuterium atoms, this method is also more challenging because it requires temperatures too high to be feasible at present. However, the process yields more energy than deuterium-tritium fusion.

With their high heat and masses, stars utilize different combinations to power them.

Proton-proton fusion: The dominant driver for stars like the sun with core temperatures under 27 million degrees F (15 million degrees C), proton-proton fusion begins with two protons and ultimately yields high energy particles such as positrons, neutrinos, and gamma rays.

Carbon cycle: Stars with higher temperatures fuse hydrogen into helium through a cycle in which carbon, together with nitrogen and oxygen, acts as a catalyst.

Triple alpha process: Stars such as red giants at the end of their phase, with temperatures exceeding 180 million degrees F (100 million degrees C) fuse helium atoms together rather than hydrogen and carbon.

#730 2020-07-13 00:49:31

- Jai Ganesh

608) Taste bud

Taste bud, small organ located on the tongue in terrestrial vertebrates that functions in the perception of taste. In fish, taste buds occur on the lips, the flanks, and the caudal (tail) fins of some species and on the barbels of catfish.

Taste receptor cells, with which incoming chemicals from food and other sources interact, occur on the tongue in groups of 50–150. Each of these groups forms a taste bud, which is grouped together with other taste buds into taste papillae. The taste buds are embedded in the epithelium of the tongue and make contact with the outside environment through a taste pore. Slender processes (microvilli) extend from the outer ends of the receptor cells through the taste pore, where the processes are covered by the mucus that lines the oral cavity. At their inner ends the taste receptor cells synapse, or connect, with afferent sensory neurons, nerve cells that conduct information to the brain. Each receptor cell synapses with several afferent sensory neurons, and each afferent neuron branches to several taste papillae, where each branch makes contact with many receptor cells. The afferent sensory neurons occur in three different nerves running to the brain—the facial nerve, the glossopharyngeal nerve, and the vagus nerve. Taste receptor cells of vertebrates are continually renewed throughout the life of the organism.

On average, the human tongue has 2,000–8,000 taste buds, implying that there are hundreds of thousands of receptor cells. However, the number of taste buds varies widely. For example, per square centimetre on the tip of the tongue, some people may have only a few individual taste buds, whereas others may have more than one thousand; this variability contributes to differences in the taste sensations experienced by different people. Taste sensations produced within an individual taste bud also vary, since each taste bud typically contains receptor cells that respond to distinct chemical stimuli—as opposed to the same chemical stimulus. As a result, the sensation of different tastes (i.e., salty, sweet, sour, bitter, or umami) is diverse not only within a single taste bud but also throughout the surface of the tongue.
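The "hundreds of thousands" estimate can be reproduced from the two ranges given above: 2,000 to 8,000 taste buds, each holding 50 to 150 receptor cells. This is a simple bounds calculation, assuming the two ranges vary independently; the function name is illustrative.

```python
def receptor_cell_range(buds=(2_000, 8_000), cells_per_bud=(50, 150)):
    """Return the (lowest, highest) plausible count of taste receptor cells."""
    lowest = buds[0] * cells_per_bud[0]
    highest = buds[1] * cells_per_bud[1]
    return lowest, highest


if __name__ == "__main__":
    low, high = receptor_cell_range()
    print(f"between {low:,} and {high:,} receptor cells")
```

The bounds run from 100,000 to 1,200,000 cells, which is consistent with the order of magnitude stated in the text.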

The taste receptor cells of other animals can often be characterized in ways similar to those of humans, because all animals have the same basic needs in selecting food.

#731 2020-07-14 00:36:33

- Jai Ganesh

609) Alzheimer's disease

Overview

Alzheimer's disease is a progressive disorder that causes brain cells to waste away (degenerate) and die. Alzheimer's disease is the most common cause of dementia — a continuous decline in thinking, behavioral and social skills that disrupts a person's ability to function independently.

The early signs of the disease may be forgetting recent events or conversations. As the disease progresses, a person with Alzheimer's disease will develop severe memory impairment and lose the ability to carry out everyday tasks.

Current Alzheimer's disease medications may temporarily improve symptoms or slow the rate of decline. These treatments can sometimes help people with Alzheimer's disease maximize function and maintain independence for a time. Different programs and services can help support people with Alzheimer's disease and their caregivers.

There is no treatment that cures Alzheimer's disease or alters the disease process in the brain. In advanced stages of the disease, complications from severe loss of brain function — such as dehydration, malnutrition or infection — result in death.

Symptoms

Memory loss is the key symptom of Alzheimer's disease. An early sign of the disease is usually difficulty remembering recent events or conversations. As the disease progresses, memory impairments worsen and other symptoms develop.

At first, a person with Alzheimer's disease may be aware of having difficulty with remembering things and organizing thoughts. A family member or friend may be more likely to notice how the symptoms worsen.

Brain changes associated with Alzheimer's disease lead to growing trouble with:

Memory

Everyone has occasional memory lapses. It's normal to lose track of where you put your keys or forget the name of an acquaintance. But the memory loss associated with Alzheimer's disease persists and worsens, affecting the ability to function at work or at home.

People with Alzheimer's may:

• Repeat statements and questions over and over

• Forget conversations, appointments or events, and not remember them later

• Routinely misplace possessions, often putting them in illogical locations

• Get lost in familiar places

• Eventually forget the names of family members and everyday objects

• Have trouble finding the right words to identify objects, express thoughts or take part in conversations

Thinking and reasoning

Alzheimer's disease causes difficulty concentrating and thinking, especially about abstract concepts such as numbers.

Multitasking is especially difficult, and it may be challenging to manage finances, balance checkbooks and pay bills on time. These difficulties may progress to an inability to recognize and deal with numbers.

Making judgments and decisions

The ability to make reasonable decisions and judgments in everyday situations will decline. For example, a person may make poor or uncharacteristic choices in social interactions or wear clothes that are inappropriate for the weather. It may be more difficult to respond effectively to everyday problems, such as food burning on the stove or unexpected driving situations.

Planning and performing familiar tasks

Once-routine activities that require sequential steps, such as planning and cooking a meal or playing a favorite game, become a struggle as the disease progresses. Eventually, people with advanced Alzheimer's may forget how to perform basic tasks such as dressing and bathing.

Changes in personality and behavior

Brain changes that occur in Alzheimer's disease can affect moods and behaviors. Problems may include the following:

• Depression

• Apathy

• Social withdrawal

• Mood swings

• Distrust in others

• Irritability and aggressiveness

• Changes in sleeping habits

• Wandering

• Loss of inhibitions

• Delusions, such as believing something has been stolen

Preserved skills

Many important skills are preserved for longer periods even while symptoms worsen. Preserved skills may include reading or listening to books, telling stories and reminiscing, singing, listening to music, dancing, drawing, or doing crafts.

These skills may be preserved longer because they are controlled by parts of the brain affected later in the course of the disease.

When to see a doctor

A number of conditions, including treatable conditions, can result in memory loss or other dementia symptoms. If you are concerned about your memory or other thinking skills, talk to your doctor for a thorough assessment and diagnosis.

If you are concerned about thinking skills you observe in a family member or friend, talk about your concerns and ask about going together to a doctor's appointment.

Causes

Scientists believe that for most people, Alzheimer's disease is caused by a combination of genetic, lifestyle and environmental factors that affect the brain over time.

Less than 1 percent of the time, Alzheimer's is caused by specific genetic changes that virtually guarantee a person will develop the disease. These rare occurrences usually result in disease onset in middle age.

The exact causes of Alzheimer's disease aren't fully understood, but at its core are problems with brain proteins that fail to function normally, disrupt the work of brain cells (neurons) and unleash a series of toxic events. Neurons are damaged, lose connections to each other and eventually die.

The damage most often starts in the region of the brain that controls memory, but the process begins years before the first symptoms. The loss of neurons spreads in a somewhat predictable pattern to other regions of the brain. By the late stage of the disease, the brain has shrunk significantly.

Researchers are focused on the role of two proteins:

• Plaques. Beta-amyloid is a leftover fragment of a larger protein. When these fragments cluster together, they appear to have a toxic effect on neurons and to disrupt cell-to-cell communication. These clusters form larger deposits called amyloid plaques, which also include other cellular debris.

• Tangles. Tau proteins play a part in a neuron's internal support and transport system to carry nutrients and other essential materials. In Alzheimer's disease, tau proteins change shape and organize themselves into structures called neurofibrillary tangles. The tangles disrupt the transport system and are toxic to cells.

Risk factors

Age

Increasing age is the greatest known risk factor for Alzheimer's disease. Alzheimer's is not a part of normal aging, but as you grow older the likelihood of developing Alzheimer's disease increases.

One study, for example, found that annually there were two new diagnoses per 1,000 people ages 65 to 74, 11 new diagnoses per 1,000 people ages 75 to 84, and 37 new diagnoses per 1,000 people age 85 and older.

Family history and genetics

Your risk of developing Alzheimer's is somewhat higher if a first-degree relative — your parent or sibling — has the disease. Most genetic mechanisms of Alzheimer's among families remain largely unexplained, and the genetic factors are likely complex.

One better understood genetic factor is a form of the apolipoprotein E gene (APOE). A variation of the gene, APOE e4, increases the risk of Alzheimer's disease, but not everyone with this variation of the gene develops the disease.

Scientists have identified rare changes (mutations) in three genes that virtually guarantee a person who inherits one of them will develop Alzheimer's. But these mutations account for less than 1 percent of people with Alzheimer's disease.

Down syndrome

Many people with Down syndrome develop Alzheimer's disease. This is likely related to having three copies of chromosome 21 — and subsequently three copies of the gene for the protein that leads to the creation of beta-amyloid. Signs and symptoms of Alzheimer's tend to appear 10 to 20 years earlier in people with Down syndrome than they do for the general population.

Gender

There appears to be little difference in risk between men and women, but, overall, there are more women with the disease because they generally live longer than men.

Mild cognitive impairment

Mild cognitive impairment (MCI) is a decline in memory or other thinking skills that is greater than what would be expected for a person's age, but the decline doesn't prevent a person from functioning in social or work environments.

People who have MCI have a significant risk of developing dementia. When the primary MCI deficit is memory, the condition is more likely to progress to dementia due to Alzheimer's disease. A diagnosis of MCI enables the person to focus on healthy lifestyle changes, develop strategies to compensate for memory loss and schedule regular doctor appointments to monitor symptoms.

Past head trauma

People who've had a severe head trauma have a greater risk of Alzheimer's disease.

Poor sleep patterns

Research has shown that poor sleep patterns, such as difficulty falling asleep or staying asleep, are associated with an increased risk of Alzheimer's disease.

Lifestyle and heart health

Research has shown that the same risk factors associated with heart disease may also increase the risk of Alzheimer's disease. These include:

• Lack of exercise

• Obesity

• Smoking or exposure to secondhand smoke

• High blood pressure

• High cholesterol

• Poorly controlled type 2 diabetes

These factors can all be modified. Therefore, changing lifestyle habits can to some degree alter your risk. For example, regular exercise and a healthy low-fat diet rich in fruits and vegetables are associated with a decreased risk of developing Alzheimer's disease.

Lifelong learning and social engagement

Studies have found an association between lifelong involvement in mentally and socially stimulating activities and a reduced risk of Alzheimer's disease. Low education levels — less than a high school education — appear to be a risk factor for Alzheimer's disease.

Complications

Memory and language loss, impaired judgment, and other cognitive changes caused by Alzheimer's can complicate treatment for other health conditions. A person with Alzheimer's disease may not be able to:

• Communicate that he or she is experiencing pain — for example, from a dental problem

• Report symptoms of another illness

• Follow a prescribed treatment plan

• Notice or describe medication side effects

As Alzheimer's disease progresses to its last stages, brain changes begin to affect physical functions, such as swallowing, balance, and bowel and bladder control. These effects can increase vulnerability to additional health problems such as:

• Inhaling food or liquid into the lungs (aspiration)

• Pneumonia and other infections

• Falls

• Fractures

• Bedsores

• Malnutrition or dehydration

Prevention

Alzheimer's disease is not a preventable condition. However, a number of lifestyle risk factors for Alzheimer's can be modified. Evidence suggests that changes in diet, exercise and habits — steps to reduce the risk of cardiovascular disease — may also lower your risk of developing Alzheimer's disease and other disorders that cause dementia. Heart-healthy lifestyle choices that may reduce the risk of Alzheimer's include the following:

• Exercise regularly

• Eat a diet of fresh produce, healthy oils and foods low in saturated fat

• Follow treatment guidelines to manage high blood pressure, diabetes and high cholesterol

• If you smoke, ask your doctor for help to quit smoking

Studies have shown that preserved thinking skills later in life and a reduced risk of Alzheimer's disease are associated with participating in social events, reading, dancing, playing board games, creating art, playing an instrument, and other activities that require mental and social engagement.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#732 2020-07-15 01:01:58

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 51,596

Re: Miscellany

610) Screen Printing

The History of Screen Printing

Screen printing evolved from the ancient art of stencilling. It took shape in the late 1800s and, with modifications over time, the method grew into an industry.

In the 19th century it remained a simple process using fabrics like organdy stretched over wooden frames as a means to hold stencils in place during printing. Only in the twentieth century did the process become mechanised, usually for printing flat posters or packaging and fabrics.

Initially, although it was not a well-known process, screen printing bridged the gap between hand-fed production and automated printing, which was far more expensive. It quickly transitioned from handcraft to mass production, particularly in the US, and in doing so opened up a completely new area of print capabilities and transformed the advertising industry.

Today it has become a very sophisticated process, using advanced fabrics and inks combined with computer technology. Often screen printing is used as a substitute for other processes such as offset litho. As a printing technique it can print an image onto almost any surface such as paper, card, wood, glass, plastic, leather or any fabric. The iPhone, the solar cell, and the hydrogen fuel cell are all screen printed products – and they would not exist without this printing process.

Production

Screen printing is a technique that involves using a woven mesh screen to support an ink-blocking stencil to receive a desired image.

The screen stencil forms open areas of mesh through which ink or other printable materials can be pressed, transferring a sharp-edged image onto a substrate (the item that will receive the image).

A squeegee is moved across the screen stencil, forcing ink through the mesh openings to wet the substrate during the squeegee stroke. As the screen rebounds away from the substrate, the ink remains. Basically it is the process of using a mesh-based stencil to apply ink onto a substrate, whether it is t-shirts, posters, stickers, vinyl, wood, or other materials.

Screen printing is also sometimes known as silkscreen printing. One colour is printed at a time, so several screens can be used to produce a multicoloured image or design.

When & Where is Screen Printing Used?

Screen printing is most commonly associated with T-shirts, lanyards, balloons, bags and merchandise; however, this process is also used when applying latex to promotional printed scratch cards or for a decorative print process called spot UV.

Spot UV uses the same process of squeegees and screens; the clear coating is then exposed to UV lamps to dry it.

Primarily used to enhance logos or depict certain text, spot UV is a great way to take your print to the next level.


#733 2020-07-16 00:55:46

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 51,596

Re: Miscellany

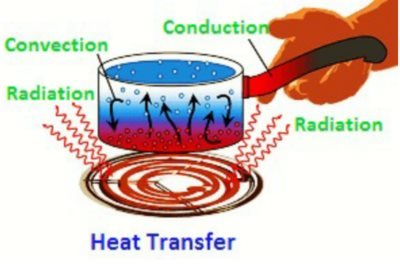

611) Heat transfer

Heat transfer, any or all of several kinds of phenomena, considered as mechanisms, that convey energy and entropy from one location to another. The specific mechanisms are usually referred to as convection, thermal radiation, and conduction. Conduction involves transfer of energy and entropy between adjacent molecules, usually a slow process. Convection involves movement of a heated fluid, such as air, usually a fairly rapid process. Radiation refers to the transmission of energy as electromagnetic radiation from its emission at a heated surface to its absorption on another surface, a process requiring no medium to convey the energy.

Transfer of heat, whether in heating a building or a kettle of water or in a natural condition such as a thunderstorm, usually involves all these processes.

Thermal conduction

Thermal conduction, transfer of energy (heat) arising from temperature differences between adjacent parts of a body.

Thermal conductivity is attributed to the exchange of energy between adjacent molecules and electrons in the conducting medium. The rate of heat flow in a rod of material is proportional to the cross-sectional area of the rod and to the temperature difference between the ends and inversely proportional to the length; that is, the rate H equals the ratio of the cross section A of the rod to its length l, multiplied by the temperature difference $T_2 - T_1$ and by the thermal conductivity of the material, designated by the constant k. This empirical relation is expressed as:

$$H = -k \frac{A}{l} (T_2 - T_1)$$

The minus sign arises because heat always flows from higher to lower temperature.

A substance of large thermal conductivity k is a good heat conductor, whereas one with small thermal conductivity is a poor heat conductor or good thermal insulator. Typical values are 0.093 kilocalories/second-metre-°C for copper (a good thermal conductor) and 0.00003 kilocalories/second-metre-°C for wood (poor thermal conductor).
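As a rough illustration of the conduction relation H = -k (A / l) (T2 - T1), the short Python sketch below compares a copper rod with a wooden one using the two conductivity values quoted above. The rod dimensions, the end temperatures, and the function name `conduction_rate` are assumptions chosen only for this example.

```python
def conduction_rate(k, area, length, t1, t2):
    """Heat flow rate H = -k * (A / l) * (T2 - T1).

    k      : thermal conductivity, kcal/(s*m*degC)
    area   : cross-sectional area A of the rod, m^2
    length : length l of the rod, m
    t1, t2 : temperatures at end 1 and end 2, degC
    A positive result means heat flows from end 1 toward end 2.
    """
    return -k * (area / length) * (t2 - t1)


K_COPPER = 0.093    # kcal/(s*m*degC), value quoted in the text
K_WOOD = 0.00003    # kcal/(s*m*degC), value quoted in the text

# Hypothetical rod: 1 cm^2 cross section, 1 m long,
# held at 100 degC at end 1 and 0 degC at end 2.
h_copper = conduction_rate(K_COPPER, 1e-4, 1.0, 100.0, 0.0)
h_wood = conduction_rate(K_WOOD, 1e-4, 1.0, 100.0, 0.0)

print(f"copper: {h_copper:.2e} kcal/s")
print(f"wood:   {h_wood:.2e} kcal/s")
print(f"copper conducts about {h_copper / h_wood:.0f} times faster")
```

With identical geometry and temperatures, the ratio of the two rates is simply the ratio of the conductivities, about 3,100 for these values.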

Convection

Convection, process by which heat is transferred by movement of a heated fluid such as air or water.

Natural convection results from the tendency of most fluids to expand when heated—i.e., to become less dense and to rise as a result of the increased buoyancy. Circulation caused by this effect accounts for the uniform heating of water in a kettle or air in a heated room: the heated molecules expand the space they move in through increased speed against one another, rise, and then cool and come closer together again, with increase in density and a resultant sinking.

Forced convection involves the transport of fluid by methods other than that resulting from variation of density with temperature. Movement of air by a fan or of water by a pump are examples of forced convection.

Atmospheric convection currents can be set up by local heating effects such as solar radiation (heating and rising) or contact with cold surface masses (cooling and sinking). Such convection currents primarily move vertically and account for many atmospheric phenomena, such as clouds and thunderstorms.

Thermal radiation

Thermal radiation, process by which energy, in the form of electromagnetic radiation, is emitted by a heated surface in all directions and travels directly to its point of absorption at the speed of light; thermal radiation does not require an intervening medium to carry it.

Thermal radiation ranges in wavelength from the longest infrared rays through the visible-light spectrum to the shortest ultraviolet rays. The intensity and distribution of radiant energy within this range is governed by the temperature of the emitting surface. The total radiant heat energy emitted by a surface is proportional to the fourth power of its absolute temperature (the Stefan–Boltzmann law).
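The fourth-power dependence in the Stefan–Boltzmann law can be seen in a small Python sketch. The function name `radiated_power` and the `area` and `emissivity` parameters are assumptions made for illustration; an emissivity of 1.0 corresponds to an ideal blackbody, and real surfaces emit less.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 * K^4)


def radiated_power(temp_kelvin, area=1.0, emissivity=1.0):
    """Total power (watts) radiated by a surface at absolute temperature T.

    P = emissivity * SIGMA * area * T**4
    """
    if temp_kelvin < 0:
        raise ValueError("absolute temperature cannot be negative")
    return emissivity * SIGMA * area * temp_kelvin ** 4


p_300 = radiated_power(300.0)   # roughly room temperature
p_600 = radiated_power(600.0)   # twice the absolute temperature

print(f"300 K: {p_300:.1f} W per square metre")
print(f"600 K: {p_600:.1f} W per square metre")
print(f"ratio: {p_600 / p_300:.1f}")
```

Doubling the absolute temperature multiplies the emitted power by 2 to the fourth power, that is, by 16.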

The rate at which a body radiates (or absorbs) thermal radiation depends upon the nature of the surface as well. Objects that are good emitters are also good absorbers (Kirchhoff’s radiation law). A blackened surface is an excellent emitter as well as an excellent absorber. If the same surface is silvered, it becomes a poor emitter and a poor absorber. A blackbody is one that absorbs all the radiant energy that falls on it. Such a perfect absorber would also be a perfect emitter.

The heating of the Earth by the Sun is an example of transfer of energy by radiation. The heating of a room by an open-hearth fireplace is another example. The flames, coals, and hot bricks radiate heat directly to the objects in the room with little of this heat being absorbed by the intervening air. Most of the air that is drawn from the room and heated in the fireplace does not reenter the room in a current of convection but is carried up the chimney together with the products of combustion.


#734 2020-07-17 00:52:02

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 51,596

Re: Miscellany

612) Baltic states

Baltic states, northeastern region of Europe containing the countries of Estonia, Latvia, and Lithuania, on the eastern shores of the Baltic Sea.

The Baltic states are bounded on the west and north by the Baltic Sea, which gives the region its name, on the east by Russia, on the southeast by Belarus, and on the southwest by Poland and an exclave of Russia. The underlying geology is sandstone, shale, and limestone, evidenced by hilly uplands that alternate with low-lying plains and bear mute testimony to the impact of the glacial era. In fact, glacial deposits in the form of eskers, moraines, and drumlins occur in profusion and tend to disrupt the drainage pattern, which results in frequent flooding. The Baltic region is dotted with more than 7,000 lakes and countless peat bogs, swamps, and marshes. A multitude of rivers, notably the Neman (Lithuanian: Nemunas) and Western Dvina (Latvian: Daugava), empty northwestward into the Baltic Sea.

The climate is cool and damp, with greater rainfall in the interior uplands than along the coast. Temperatures are moderate in comparison with other areas of the East European Plain, such as in neighbouring Russia. Despite its extensive agriculture, the Baltic region remains more than one-third forested. Trees that adapt to the often poorly drained soil are common, such as birches and conifers. Among the animals that inhabit the region are elk, boar, roe deer, wolves, hares, and badgers.

The Latvian and Lithuanian peoples speak languages belonging to the Baltic branch of the Indo-European linguistic family and are commonly known as Balts. The Estonian (and Livonian) peoples, who are considered Finnic peoples, speak languages of the Finno-Ugric family and constitute the core of the southern branch of the Baltic Finns. Culturally, the Estonians were strongly influenced by the Germans, and traces of the original Finnish culture have been preserved only in folklore. The Latvians also were considerably Germanized, and the majority of both the Estonians and the Latvians belong to the Lutheran church. However, most Lithuanians, associated historically with Poland, are Roman Catholic.

The vast majority of ethnic Estonians, Latvians, and Lithuanians live within the borders of their respective states. In all three countries virtually everyone among the titular nationalities speaks the native tongue as their first language, which is remarkable in light of the massive Russian immigration to the Baltic states during the second half of the 20th century. Initially, attempts to Russify the Baltic peoples were overt, but later they were moderated as Russian immigration soared and the sheer weight of the immigrant numbers simply served to promote this objective in less-blatant ways. Independence from the Soviet Union in 1991 allowed the Baltic states to place controls on immigration, and, in the decade following, the Russian presence in Baltic life diminished. At the beginning of the 21st century, the titular nationalities of Lithuania and Estonia accounted for about four-fifths and two-thirds of the countries’ populations, respectively, while ethnic Latvians made up just less than three-fifths of their nation’s population. Around this time, Poles eclipsed Russians as the largest minority in Lithuania. Urban dwellers constitute more than two-thirds of the region’s population, with the largest cities being Vilnius and Kaunas in southeastern Lithuania, the Latvian capital of Riga, and Tallinn on the northwestern coast of Estonia. Life expectancy in the Baltic states is comparatively low by European standards, as are the rates of natural increase, which were negative in all three countries at the beginning of the 21st century, owing in part to an aging population. Overall population fell in each of the Baltic states in the years following independence, primarily because of the return emigration of Russians to Russia, as well as other out-migration to western Europe and North America. In some cases, Russians took on the nationalities of their adopted Baltic countries and were thus counted among the ethnic majorities.

After the breakup of the Soviet Union, the Baltic states struggled to make a transition to a market economy from the system of Soviet national planning that had been in place since the end of World War II. A highly productive region for the former U.S.S.R., the Baltic states catered to economies of scale in output and regional specialization in industry - for example, manufacturing electric motors, machine tools, and radio receivers. Latvia, for example, was a leading producer of Soviet radio receivers. Throughout the 1990s privatization accelerated, national currencies were reintroduced, and non-Russian foreign investment increased.

Agriculture remains important to the Baltic economy, with potatoes, cereal grains, and fodder crops produced and dairy cattle and pigs raised. Timbering and fisheries enjoy modest success. The Baltic region is not rich in natural resources. Though Estonia is an important producer of oil shale, a large share of mineral and energy resources is imported. Low energy supplies, inflationary prices, and an economic collapse in Russia contributed to an energy crisis in the Baltics in the 1990s. Industry in the Baltic states is prominent, especially the production of food and beverages, textiles, wood products, and electronics and the traditional stalwarts of machine building and metal fabricating. The three states have the highest productivity of the former constituent republics of the Soviet Union.

Shortly after attaining independence, Estonia, Latvia, and Lithuania abandoned the Russian ruble in favour of new domestic currencies (the kroon, lats, and litas, respectively), which, as they strengthened, greatly improved foreign trade. The main trading partners outside the region are Russia, Germany, Finland, and Sweden. The financial stability of the Baltic nations was an important prerequisite to their entering the European Union and the North Atlantic Treaty Organization in 2004. Each of the Baltic states was preparing to adopt the euro as its common currency by the end of the decade.

Population (2020): 6,030,542. Area: 175,228 square kilometers (67,656 sq mi).


#735 2020-07-18 01:01:44

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 51,596

Re: Miscellany

613) Mont Blanc Tunnel

Mont Blanc Tunnel, major Alpine automotive tunnel connecting France and Italy. It is 7.3 miles (11.7 km) long and is driven under the highest mountain in Europe. The tunnel is notable for its solution of a difficult ventilation problem and for being the first large rock tunnel to be excavated full-face—i.e., with the entire diameter of the tunnel bore drilled and blasted. Otherwise it was conventionally driven from two headings, the Italian and French crews beginning work in 1958 and 1959, respectively, and meeting in August 1962. Many difficulties, including an avalanche that swept the Italian camp, were overcome, and, when the tunnel opened in 1965, it was the longest vehicular tunnel in the world. It fulfilled a 150-year-old dream and is of great economic importance, providing a significantly shortened year-round automotive route between the two countries. In March 1999, however, a two-day fire killed 39 people and caused extensive damage to the tunnel, forcing it to close. It reopened to car traffic in March 2002 and to trucks and buses in the following months. Protestors, citing environmental and safety concerns, opposed the tunnel’s reopening, especially its use by heavy trucks.

The Mont Blanc Tunnel is a highway tunnel in Europe, under the Mont Blanc mountain in the Alps. It links Chamonix, Haute-Savoie, France with Courmayeur, Aosta Valley, Italy, via European route E25, in particular the motorway from Geneva (A40 of France) to Turin (A5 of Italy). The passageway is one of the major trans-Alpine transport routes, particularly for Italy, which relies on this tunnel for transporting as much as one-third of its freight to northern Europe. It reduces the route from France to Turin by 50 kilometres (30 miles) and to Milan by 100 km (60 mi). Northeast of Mont Blanc's summit, the tunnel is about 15 km (10 mi) southwest of the tripoint with Switzerland, near Mont Dolent.

The agreement between France and Italy on building a tunnel was signed in 1949. Two operating companies were founded, each responsible for one half of the tunnel: the French ‘Autoroutes et tunnel du Mont-Blanc’ (ATMB), founded on 30 April 1958, and the Italian ‘Società italiana per azioni per il Traforo del Monte Bianco’ (SITMB), founded on 1 September 1957. Drilling began in 1959 and was completed in 1962; the tunnel was opened to traffic on 19 July 1965.

The tunnel is 11.611 km (7.215 mi) in length, 8.6 m (28 ft) in width, and 4.35 m (14.3 ft) in height. The passageway is not horizontal, but in a slightly inverted "V", which assists ventilation. The tunnel consists of a single gallery with a two-lane dual direction road. At the time of its construction, it was three times longer than any existing highway tunnel.

The tunnel passes almost exactly under the summit of the Aiguille du Midi. At this spot, it lies 2,480 metres (8,140 ft) beneath the surface, making it the world's second deepest operational tunnel after the Gotthard Base Tunnel.

The Mont Blanc Tunnel was originally managed by the two building companies. Following a fire in 1999 in which 39 people died, which showed how lack of coordination could hamper the safety of the tunnel, all the operations are managed by a single entity: MBT-EEIG, controlled by both ATMB and SITMB together, through a 50–50 shares distribution.

An alternative route for road traffic between France and Italy is the Fréjus Road Tunnel. Road traffic grew steadily until 1994, even with the opening of the Fréjus tunnel. Since then, the combined traffic volume of the two tunnels has remained roughly constant.


#736 2020-07-19 00:58:26

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 51,596

Re: Miscellany

614) Wembley Stadium

Wembley Stadium, stadium in the borough of Brent in northwestern London, England, built as a replacement for an older structure of the same name on the same site. The new Wembley was the largest stadium in Great Britain at the time of its opening in 2007, with a seating capacity of 90,000. It is owned by a subsidiary of the Football Association and is used for football (soccer), rugby, and other sports and also for musical events.

The original Wembley Stadium, built to house the British Empire Exhibition of 1924–25, was completed in advance of the exhibition in 1923. It served as the principal venue of the London 1948 Olympic Games and remained in use until 2000. Construction of the new stadium began in 2002. The English firm Foster + Partners and the American stadium specialists HOK Sports Venue Event (now known as Populous) were the architects. Excavations to lower the elevation of the pitch (playing field) uncovered the foundations of Watkin’s Tower, a building project of the 1890s that would have been the world’s tallest structure had it been completed. The new stadium officially opened in March 2007.

Wembley Stadium is almost round in shape, with a circumference of 3,280 feet (1 km). The most striking architectural feature is a giant arch that is the principal support of the roof. The arch is 436 feet (133 metres) in height and is tilted 22° from the perpendicular. The movable stadium roof does not close completely but can shelter all the seats.

Wembley Stadium has hosted the Football Association Cup Final every year since the year of its completion. It is also the home of England’s national football team. During the London 2012 Olympic Games, the stadium was a venue for football, including the final (gold medal) match. American (gridiron) football is played at the stadium in the National Football League International Series.


#737 2020-07-20 00:46:25

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 51,596

Re: Miscellany

615) Computer memory

Computer memory, device that is used to store data or programs (sequences of instructions) on a temporary or permanent basis for use in an electronic digital computer. Computers represent information in binary code, written as sequences of 0s and 1s. Each binary digit (or “bit”) may be stored by any physical system that can be in either of two stable states, to represent 0 and 1. Such a system is called bistable. This could be an on-off switch, an electrical capacitor that can store or lose a charge, a magnet with its polarity up or down, or a surface that can have a pit or not. Today capacitors and transistors, functioning as tiny electrical switches, are used for temporary storage, and either disks or tape with a magnetic coating, or plastic discs with patterns of pits are used for long-term storage.
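To make the idea of binary code concrete, here is a minimal Python sketch that writes a short piece of text as sequences of 0s and 1s and reads it back. The helper names `to_bits` and `from_bits` are hypothetical, and the choice of UTF-8 as the character encoding is an assumption of this example, not something the text specifies.

```python
def to_bits(text):
    """Return the bytes of `text` (UTF-8) as space-separated 8-bit groups."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))


def from_bits(bits):
    """Rebuild the text from space-separated 8-bit groups."""
    data = bytes(int(group, 2) for group in bits.split())
    return data.decode("utf-8")


encoded = to_bits("Hi")
print(encoded)
print(from_bits(encoded))
```

Each group of eight bits is one byte; any bistable physical system that can hold those eight 0/1 states can store that byte.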

Computer memory is divided into main (or primary) memory and auxiliary (or secondary) memory. Main memory holds instructions and data when a program is executing, while auxiliary memory holds data and programs not currently in use and provides long-term storage.

Main Memory

The earliest memory devices were electro-mechanical switches, or relays, and electron tubes. In the late 1940s the first stored-program computers used ultrasonic waves in tubes of mercury or charges in special electron tubes as main memory. The latter were the first random-access memory (RAM). RAM contains storage cells that can be accessed directly for read and write operations, as opposed to serial access memory, such as magnetic tape, in which each cell in sequence must be accessed till the required cell is located.

Magnetic drum memory

Magnetic drums, which had fixed read/write heads for each of many tracks on the outside surface of a rotating cylinder coated with a ferromagnetic material, were used for both main and auxiliary memory in the 1950s, although their data access was serial.

Magnetic core memory

About 1952 the first relatively cheap RAM was developed: magnetic core memory, an arrangement of tiny ferrite cores on a wire grid through which current could be directed to change individual core alignments. Because of the inherent advantage of RAM, core memory was the principal form of main memory until superseded by semiconductor memory in the late 1960s.

Semiconductor memory

There are two basic kinds of semiconductor memory. Static RAM (SRAM) consists of flip-flops, bistable circuits each composed of four to six transistors. Once a flip-flop stores a bit, it keeps that value until the opposite value is stored in it. SRAM gives fast access to data, but it is physically relatively large. It is used primarily for small amounts of memory called registers in a computer’s central processing unit (CPU) and for fast “cache” memory. Dynamic RAM (DRAM) stores each bit in an electrical capacitor rather than in a flip-flop, using a transistor as a switch to charge or discharge the capacitor. Because it has fewer electrical components, a DRAM storage cell is smaller than an SRAM cell. However, access to its value is slower and, because capacitors gradually leak charge, stored values must be recharged approximately 50 times per second. Nonetheless, DRAM is generally used for main memory because the same size chip can hold several times as much DRAM as SRAM.

Storage cells in RAM have addresses. It is common to organize RAM into “words” of 8 to 64 bits, or 1 to 8 bytes (8 bits = 1 byte). The size of a word is generally the number of bits that can be transferred at a time between main memory and the CPU. Every word, and usually every byte, has an address. A memory chip must have additional decoding circuits that select the set of storage cells that are at a particular address and either store a value at that address or fetch what is stored there. The main memory of a modern computer consists of a number of memory chips, each of which might hold many megabytes (millions of bytes), and still further addressing circuitry selects the appropriate chip for each address. In addition, DRAM requires circuits to detect its stored values and refresh them periodically.
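The addressing scheme described above, in which one part of the circuitry selects a chip and another selects the cells within it, can be sketched in Python. This is only a toy model: the class name `Memory`, the chip count, and the tiny chip size are all hypothetical values chosen so the arithmetic is easy to follow.

```python
CHIP_SIZE = 1024   # bytes per chip (hypothetical; real chips are far larger)
NUM_CHIPS = 4      # number of chips in this toy main memory


class Memory:
    """Toy byte-addressable memory built from several fixed-size chips."""

    def __init__(self):
        self.chips = [bytearray(CHIP_SIZE) for _ in range(NUM_CHIPS)]

    def decode(self, address):
        """Split an address into (chip index, offset within that chip)."""
        if not 0 <= address < CHIP_SIZE * NUM_CHIPS:
            raise IndexError(f"address {address} out of range")
        return divmod(address, CHIP_SIZE)

    def store(self, address, value):
        """Store one byte (0-255) at the given address."""
        chip, offset = self.decode(address)
        self.chips[chip][offset] = value

    def fetch(self, address):
        """Fetch the byte stored at the given address."""
        chip, offset = self.decode(address)
        return self.chips[chip][offset]


mem = Memory()
mem.store(2050, 171)
print(mem.decode(2050))   # which chip, and where inside it
print(mem.fetch(2050))
```

Here the integer division plays the role of the chip-select circuitry and the remainder plays the role of the on-chip decoder.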

Main memories take longer to access data than CPUs take to operate on them. For instance, DRAM memory access typically takes 20 to 80 nanoseconds (billionths of a second), but CPU arithmetic operations may take only a nanosecond or less. There are several ways in which this disparity is handled. CPUs have a small number of registers, very fast SRAM that holds current instructions and the data on which they operate. Cache memory is a larger amount (up to several megabytes) of fast SRAM on the CPU chip. Data and instructions from main memory are transferred to the cache, and since programs frequently exhibit “locality of reference” (that is, they execute the same instruction sequence for a while in a repetitive loop and operate on sets of related data), memory references can be made to the fast cache once values are copied into it from main memory.
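The effect of locality of reference on a cache can be illustrated with a small simulation. The sketch below assumes a direct-mapped cache, which is one simple design among several and not necessarily what any particular CPU uses; the class name `DirectMappedCache`, the line count, and the block size are hypothetical.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that only tracks hits and misses."""

    def __init__(self, num_lines=16, block_size=8):
        self.num_lines = num_lines
        self.block_size = block_size
        self.tags = [None] * num_lines   # which block each line currently holds
        self.hits = 0
        self.misses = 0

    def access(self, address):
        block = address // self.block_size   # block containing this address
        line = block % self.num_lines        # the one line that block may occupy
        if self.tags[line] == block:
            self.hits += 1
        else:
            self.misses += 1                 # fetch from main memory
            self.tags[line] = block

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def run(addresses):
    cache = DirectMappedCache()
    for address in addresses:
        cache.access(address)
    return cache


# A loop that reuses the same 64 addresses ten times (good locality).
looping = run([a for _ in range(10) for a in range(64)])

# Widely spaced addresses that all compete for the same cache line.
scattered = run([a * 128 for _ in range(10) for a in range(64)])

print(f"looping:   hit rate {looping.hit_rate():.4f}")
print(f"scattered: hit rate {scattered.hit_rate():.4f}")
```

In the looping pattern only the first touch of each block goes to main memory, so nearly every reference is served from the cache; in the scattered pattern every reference evicts the previous one and the cache provides no benefit.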

Much of the DRAM access time goes into decoding the address to select the appropriate storage cells. The locality of reference property means that a sequence of memory addresses will frequently be used, and fast DRAM is designed to speed access to subsequent addresses after the first one. Synchronous DRAM (SDRAM) and EDO (extended data output) are two such types of fast memory.

Nonvolatile semiconductor memories, unlike SRAM and DRAM, do not lose their contents when power is turned off. Some nonvolatile memories, such as read-only memory (ROM), are not rewritable once manufactured or written. Each memory cell of a ROM chip has either a transistor for a 1 bit or none for a 0 bit. ROMs are used for programs that are essential parts of a computer’s operation, such as the bootstrap program that starts a computer and loads its operating system or the BIOS (basic input/output system) that addresses external devices in a personal computer (PC).

EPROM (erasable programmable ROM), EAROM (electrically alterable ROM), and flash memory are types of nonvolatile memories that are rewritable, though the rewriting is far more time-consuming than reading. They are thus used as special-purpose memories where writing is seldom necessary—if used for the BIOS, for example, they may be changed to correct errors or update features.

Auxiliary Memory

Auxiliary memory units are a type of computer peripheral equipment. They trade slower access rates for greater storage capacity and data stability. Auxiliary memory holds programs and data for future use, and, because it is nonvolatile (like ROM), it is used to store inactive programs and to archive data. Early forms of auxiliary storage included punched paper tape, punched cards, and magnetic drums. Since the 1980s, the most common forms of auxiliary storage have been magnetic disks, magnetic tapes, and optical discs.

Magnetic disk drives

Magnetic disks are coated with a magnetic material such as iron oxide. There are two types: hard disks made of rigid aluminum or glass, and removable diskettes made of flexible plastic. In 1956 the first magnetic hard drive (HD) was invented at IBM; consisting of 50 21-inch (53-cm) disks, it had a storage capacity of 5 megabytes. By the 1990s the standard HD diameter for PCs had shrunk to 3.5 inches (about 8.9 cm), with storage capacities in excess of 100 gigabytes (billions of bytes); the standard size HD for portable PCs (“laptops”) was 2.5 inches (about 6.4 cm). Since the invention of the floppy disk drive (FDD) at IBM by Alan Shugart in 1967, diskettes have shrunk from 8 inches (about 20 cm) to the current standard of 3.5 inches (about 8.9 cm). FDDs have low capacity—generally less than two megabytes—and have become obsolete since the introduction of optical disc drives in the 1990s.

Hard drives generally have several disks, or platters, with an electromagnetic read/write head for each surface; the entire assembly is called a comb. A microprocessor in the drive controls the motion of the heads and also contains RAM to store data for transfer to and from the disks. The heads move across the disk surface as it spins at up to 15,000 revolutions per minute; the drives are hermetically sealed, permitting the heads to float on a thin film of air very close to the disk’s surface. A small current is applied to the head to magnetize tiny spots on the disk surface for storage; similarly, magnetized spots on the disk generate currents in the head as it moves by, enabling data to be read. FDDs function similarly, but the removable diskettes spin at only a few hundred revolutions per minute.
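
The spin rates quoted above translate directly into waiting time, because on average the desired spot is half a revolution away from the head. The arithmetic can be sketched as follows; the function names are illustrative, and the 300 rpm figure for a diskette is an assumed example of "a few hundred."

```python
def rotation_time_ms(rpm):
    """Time for one full revolution, in milliseconds."""
    return 60_000 / rpm


def average_rotational_latency_ms(rpm):
    """On average the desired spot is half a revolution away from the head."""
    return rotation_time_ms(rpm) / 2


hard_disk = average_rotational_latency_ms(15_000)   # 2.0 ms
diskette = average_rotational_latency_ms(300)       # 100.0 ms (assumed 300 rpm)
```

This counts only rotation; the time needed to move the heads to the right track comes on top of it.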

Data are stored in close concentric tracks that require very precise control of the read/write heads. Refinements in controlling the heads have enabled smaller and closer packing of tracks—up to 20,000 tracks per inch (8,000 tracks per cm) by the start of the 21st century—which has resulted in the storage capacity of these devices growing nearly 30 percent per year since the 1980s. RAID (redundant array of inexpensive disks) combines multiple disk drives to store data redundantly for greater reliability and faster access. RAID arrays are used in high-performance computer network servers.
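
The text does not say how RAID achieves its redundancy. One widely used approach is to keep an XOR parity block alongside the data blocks, and the sketch below assumes that scheme; the function names are hypothetical, and real arrays also stripe and rotate blocks across drives.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def parity(data_blocks):
    """Parity block that would be stored on an extra drive."""
    return xor_blocks(data_blocks)


def rebuild(surviving_blocks, parity_block):
    """Recover the one missing data block from the survivors and the parity."""
    return xor_blocks(list(surviving_blocks) + [parity_block])


drives = [b"disk", b"data", b"demo"]
p = parity(drives)
recovered = rebuild([drives[0], drives[2]], p)   # the second drive has failed
```

Because XOR is its own inverse, combining the surviving blocks with the parity block reproduces the lost block exactly.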

Magnetic tape

Magnetic tape, similar to the tape used in tape recorders, has also been used for auxiliary storage, primarily for archiving data. Tape is cheap, but access time is far slower than that of a magnetic disk because it is sequential-access memory—i.e., data must be sequentially read and written as a tape is unwound, rather than retrieved directly from the desired point on the tape. Servers may also use large collections of tapes or optical discs, with robotic devices to select and load them, rather like old-fashioned jukeboxes.

Optical discs

Another form of largely read-only memory is the optical compact disc, developed from videodisc technology during the early 1980s. Data are recorded as tiny pits in a single spiral track on plastic discs that range from 3 to 12 inches (7.6 to 30 cm) in diameter, though a diameter of 4.8 inches (12 cm) is most common. The pits are produced by a laser or by a stamping machine and are read by a low-power laser and a photocell that generates an electrical signal from the varying light reflected from the pattern of pits. Optical discs are removable and have a far greater memory capacity than diskettes; the largest ones can store many gigabytes of information.

A common optical disc is the CD-ROM (compact disc read-only memory). It holds about 700 megabytes of data, recorded with an error-correcting code that can correct bursts of errors caused by dust or imperfections. CD-ROMs are used to distribute software, encyclopaedias, and multimedia text with audio and images. CD-R (CD-recordable), or WORM (write-once read-many), is a variation of CD-ROM on which a user may record information but not subsequently change it. CD-RW (CD-rewritable) discs can be re-recorded. DVDs (digital video, or versatile, discs), developed for recording movies, store data more densely than does CD-ROM, with more powerful error correction. Though the same size as CDs, DVDs typically hold 5 to 17 gigabytes—several hours of video or several million text pages.

Magneto-optical discs

Magneto-optical discs are a hybrid storage medium. In reading, spots with different directions of magnetization give different polarization in the reflected light of a low-power laser beam. In writing, every spot on the disc is first heated by a strong laser beam and then cooled under a magnetic field, magnetizing every spot in one direction, to store all 0s. The writing process then reverses the direction of the magnetic field to store 1s where desired.

Memory Hierarchy

Although the main/auxiliary memory distinction is broadly useful, memory organization in a computer forms a hierarchy of levels, arranged from very small, fast, and expensive registers in the CPU to small, fast cache memory; larger DRAM; very large hard disks; and slow and inexpensive nonvolatile backup storage. Memory usage by modern computer operating systems spans these levels with virtual memory, a system that provides programs with large address spaces (addressable memory), which may exceed the actual RAM in the computer. Virtual memory gives each program a portion of main memory and stores the rest of its code and data on a hard disk, automatically copying blocks of addresses to and from main memory as needed. The speed of modern hard disks together with the same locality of reference property that lets caches work well makes virtual memory feasible.
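
The copying of blocks between disk and main memory can be modelled with a toy pager. The sketch below assumes 4,096-byte pages and a least-recently-used eviction rule; both are illustrative assumptions, since the text does not name a page size or replacement policy, and the class name VirtualMemory is hypothetical.

```python
from collections import OrderedDict


class VirtualMemory:
    """Toy pager: keeps at most num_frames pages resident in main memory."""

    def __init__(self, num_frames, page_size=4096):
        self.num_frames = num_frames
        self.page_size = page_size
        self.resident = OrderedDict()   # resident pages, least recently used first
        self.faults = 0                 # times a page had to come from disk

    def access(self, address):
        page = address // self.page_size
        if page in self.resident:
            self.resident.move_to_end(page)        # mark as recently used
        else:
            self.faults += 1                       # page fault: load from disk
            if len(self.resident) >= self.num_frames:
                self.resident.popitem(last=False)  # evict least recently used
            self.resident[page] = True
        return page


vm = VirtualMemory(num_frames=2)
for address in (0, 4096, 0, 8192, 4096):
    vm.access(address)
# vm.faults is now 4: only the second access to page 0 found it resident.
```

A program that keeps returning to the same few pages faults only when it first touches each one, which is the locality property the paragraph above relies on.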

#738 2020-07-21 01:13:15

- Jai Ganesh

Re: Miscellany

616) Computer graphics

Computer graphics, production of images on computers for use in any medium. Images used in the graphic design of printed material are frequently produced on computers, as are the still and moving images seen in comic strips and animations. The realistic images viewed and manipulated in electronic games and computer simulations could not be created or supported without the enhanced capabilities of modern computer graphics. Computer graphics also are essential to scientific visualization, a discipline that uses images and colours to model complex phenomena such as air currents and electric fields, and to computer-aided engineering and design, in which objects are drawn and analyzed in computer programs. Even the windows-based graphical user interface, now a common means of interacting with innumerable computer programs, is a product of computer graphics.

Image Display

Images have high information content, both in terms of information theory (i.e., the number of bits required to represent images) and in terms of semantics (i.e., the meaning that images can convey to the viewer). Because of the importance of images in any domain in which complex information is displayed or manipulated, and also because of the high expectations that consumers have of image quality, computer graphics have always placed heavy demands on computer hardware and software.

In the 1960s early computer graphics systems used vector graphics to construct images out of straight line segments, which were combined for display on specialized computer video monitors. Vector graphics is economical in its use of memory, as an entire line segment is specified simply by the coordinates of its endpoints. However, it is inappropriate for highly realistic images, since most images have at least some curved edges, and using all straight lines to draw curved objects results in a noticeable “stair-step” effect.

In the late 1970s and ’80s raster graphics, derived from television technology, became more common, though still limited to expensive graphics workstation computers. Raster graphics represents images by bitmaps stored in computer memory and displayed on a screen composed of tiny pixels. Each pixel is represented by one or more memory bits. One bit per pixel suffices for black-and-white images, while four bits per pixel specify a 16-step gray-scale image. Eight bits per pixel specify an image with 256 colour levels; so-called “true colour” requires 24 bits per pixel (specifying more than 16 million colours). At that resolution, or bit depth, a full-screen image requires several megabytes (millions of bytes; 8 bits = 1 byte) of memory. Since the 1990s, raster graphics has become ubiquitous. Personal computers are now commonly equipped with dedicated video memory for holding high-resolution bitmaps.
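
The figures in this paragraph follow from simple powers of two, as the short sketch below shows. The 1,024 × 768 screen size is an assumed example, since the text does not name a resolution, and the function names are illustrative.

```python
def colour_levels(bits_per_pixel):
    """Number of distinct values a pixel can take at a given bit depth."""
    return 2 ** bits_per_pixel


def bitmap_bytes(width, height, bits_per_pixel):
    """Memory needed for an uncompressed bitmap, rounded up to whole bytes."""
    return (width * height * bits_per_pixel + 7) // 8


levels = [colour_levels(b) for b in (1, 4, 8, 24)]   # [2, 16, 256, 16777216]
full_screen = bitmap_bytes(1024, 768, 24)            # 2359296 bytes
```

At 24 bits per pixel the assumed screen needs about 2.4 million bytes, in line with the "several megabytes" quoted above.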

3-D Rendering

Although used for display, bitmaps are not appropriate for most computational tasks, which need a three-dimensional representation of the objects composing the image. One standard benchmark for the rendering of computer models into graphical images is the Utah Teapot, created at the University of Utah in 1975. Represented skeletally as a wire-frame image, the Utah Teapot is composed of many small polygons. However, even with hundreds of polygons, the image is not smooth. Smoother representations can be provided by Bezier curves, which have the further advantage of requiring less computer memory. Bezier curves are described by cubic equations; a cubic curve is determined by four points or, equivalently, by two points and the curve’s slopes at those points. Two cubic curves can be smoothly joined by giving them the same slope at the junction. Bezier curves, and related curves known as B-splines, were introduced in computer-aided design programs for the modeling of automobile bodies.
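
A cubic Bezier curve can be evaluated directly from its four points. The sketch below assumes the usual control-point (Bernstein) form, in which the curve starts at the first point, ends at the last, and is pulled toward the two in between; the function name and sample points are illustrative.

```python
def bezier_point(p0, p1, p2, p3, t):
    """Point on a cubic Bezier curve at parameter t, with 0 <= t <= 1."""
    u = 1 - t
    # Cubic Bernstein weights; they always sum to 1.
    b0, b1, b2, b3 = u ** 3, 3 * u * u * t, 3 * u * t * t, t ** 3
    return tuple(
        b0 * a + b1 * b + b2 * c + b3 * d
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


# Four control points describing an arch from (0, 0) to (1, 0).
arch = [(0, 0), (0, 1), (1, 1), (1, 0)]
midpoint = bezier_point(*arch, 0.5)   # (0.5, 0.75)
```

The curve leaves the first point heading toward the second and arrives at the last point coming from the third, which is why two curves join smoothly when those directions match at the junction.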

Rendering offers a number of other computational challenges in the pursuit of realism. Objects must be transformed as they rotate or move relative to the observer’s viewpoint. As the viewpoint changes, solid objects must obscure those behind them, and their front surfaces must obscure their rear ones. This technique of “hidden surface elimination” may be done by extending the pixel attributes to include the “depth” of each pixel in a scene, as determined by the object of which it is a part. Algorithms can then compute which surfaces in a scene are visible and which ones are hidden by others. In computers equipped with specialized graphics cards for electronic games, computer simulations, and other interactive computer applications, these algorithms are executed so quickly that there is no perceptible lag; that is, rendering is achieved in “real time.”
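
Storing a depth with every pixel leads to what is commonly called a depth buffer or z-buffer. The sketch below is a minimal version under simplifying assumptions: objects arrive already broken into per-pixel fragments of the form (x, y, depth, colour), smaller depth means nearer the viewer, and the function name render is hypothetical.

```python
def render(fragments, width, height, background="."):
    """Keep, for each pixel, the colour of the nearest fragment seen so far."""
    infinity = float("inf")
    depth = [[infinity] * width for _ in range(height)]
    colour = [[background] * width for _ in range(height)]
    for x, y, z, c in fragments:
        if z < depth[y][x]:      # nearer than anything drawn here before
            depth[y][x] = z
            colour[y][x] = c
    return ["".join(row) for row in colour]


fragments = [
    (0, 0, 5.0, "A"),   # object A covers pixel (0, 0) at depth 5
    (0, 0, 2.0, "B"),   # object B is nearer there, so it hides A
    (1, 0, 3.0, "A"),   # A is the only object at pixel (1, 0)
    (0, 0, 9.0, "C"),   # object C lies behind both and stays hidden
]
image = render(fragments, width=2, height=1)   # ["BA"]
```

The result does not depend on the order in which the fragments are drawn, so objects need not be sorted from back to front first.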

Shading And Texturing