Math Is Fun Forum

#1101 2021-08-04 00:30:28

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

1079) Ethanol

Ethanol, also called ethyl alcohol, grain alcohol, or alcohol, a member of a class of organic compounds that are given the general name alcohols; its molecular formula is C2H5OH. Ethanol is an important industrial chemical; it is used as a solvent, in the synthesis of other organic chemicals, and as an additive to automotive gasoline (forming a mixture known as gasohol). Ethanol is also the intoxicating ingredient of many alcoholic beverages such as beer, wine, and distilled spirits.

There are two main processes for the manufacture of ethanol: the fermentation of carbohydrates (the method used for alcoholic beverages) and the hydration of ethylene. Fermentation involves the transformation of carbohydrates to ethanol by growing yeast cells. The chief raw materials fermented for the production of industrial alcohol are sugar crops such as beets and sugarcane and grain crops such as corn (maize). Hydration of ethylene is achieved by passing a mixture of ethylene and a large excess of steam at high temperature and pressure over an acidic catalyst.
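
The two routes described above can be summarized by their overall reactions. The equations below are standard textbook stoichiometry; glucose stands in for the fermentable carbohydrate, which is an assumption, since the post does not name a specific sugar.

```latex
\begin{align*}
\text{Fermentation:}\quad & \mathrm{C_6H_{12}O_6} \longrightarrow 2\,\mathrm{C_2H_5OH} + 2\,\mathrm{CO_2} \\
\text{Hydration of ethylene:}\quad & \mathrm{C_2H_4} + \mathrm{H_2O} \longrightarrow \mathrm{C_2H_5OH}
\end{align*}
```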

Ethanol produced either by fermentation or by synthesis is obtained as a dilute aqueous solution and must be concentrated by fractional distillation. Direct distillation can yield at best the constant-boiling-point mixture containing 95.6 percent by weight of ethanol. Dehydration of the constant-boiling-point mixture yields anhydrous, or absolute, alcohol. Ethanol intended for industrial use is usually denatured (rendered unfit to drink), typically with methanol, benzene, or kerosene.

Pure ethanol is a colourless flammable liquid (boiling point 78.5 °C [173.3 °F]) with an agreeable ethereal odour and a burning taste. Ethanol is toxic, affecting the central nervous system. Moderate amounts relax the muscles and produce an apparent stimulating effect by depressing the inhibitory activities of the brain, but larger amounts impair coordination and judgment, finally producing coma and death. It is an addictive drug for some persons, leading to the disease alcoholism.

Ethanol is converted in the body first to acetaldehyde and then to carbon dioxide and water, at the rate of about half a fluid ounce, or 15 ml, per hour; this quantity corresponds to a dietary intake of about 100 calories.
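
As a rough check on the figure above, the energy in 15 ml of ethanol can be estimated from its density and energy content. This is only a sketch: the density (about 0.789 g/ml) and the energy value (about 7 kcal per gram) are commonly quoted values assumed here, not taken from the post, and "calories" is read as dietary Calories (kcal). The function name is made up for illustration.

```python
# Rough estimate of the dietary energy in a volume of pure ethanol.
# Assumed values (not from the post): density ~0.789 g/ml, ~7 kcal per gram.
ETHANOL_DENSITY_G_PER_ML = 0.789
ETHANOL_KCAL_PER_G = 7.0


def ethanol_energy_kcal(volume_ml: float) -> float:
    """Return the approximate energy (kcal) in volume_ml of pure ethanol."""
    mass_g = volume_ml * ETHANOL_DENSITY_G_PER_ML
    return mass_g * ETHANOL_KCAL_PER_G


if __name__ == "__main__":
    # 15 ml is the hourly amount quoted in the post.
    print(f"{ethanol_energy_kcal(15):.0f} kcal")
```

With these assumed inputs the estimate comes out somewhat below 100 kcal, which is the same order of magnitude as the rounded figure quoted in the post.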

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#1102 2021-08-05 00:37:42

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

1080) Ethyl ether

Ethyl ether, also called diethyl ether or simply ether, well-known anesthetic and organic compound belonging to a large group of compounds called ethers; its molecular structure consists of two ethyl groups linked through an oxygen atom, as in C2H5OC2H5.

Ethyl ether is a colourless, volatile, highly flammable liquid (boiling point 34.5 °C [94.1 °F]) with a powerful, characteristic odour and a hot, sweetish taste. It is a widely used solvent for bromine, iodine, most fatty and resinous substances, volatile oils, pure rubber, and certain vegetable alkaloids.

Ethyl ether is manufactured by the distillation of ethyl alcohol with sulfuric acid. Pure ether (absolute ether), required for medical purposes and in the preparation of Grignard reagents, is prepared by washing the crude ether with a saturated aqueous solution of calcium chloride, then treating with sodium.

Diethyl ether, or simply ether, is an organic compound in the ether class with the formula (C2H5)2O, sometimes abbreviated as Et2O. It is a colourless, highly volatile, sweet-smelling ("ethereal odour"), extremely flammable liquid. It is commonly used as a solvent in laboratories and as a starting fluid for some engines. It was formerly used as a general anesthetic, until non-flammable drugs such as halothane were developed. It has also been used as a recreational drug to cause intoxication. It is a structural isomer of butanol.

#1103 2021-08-06 00:47:49

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

1081) Pauli exclusion principle

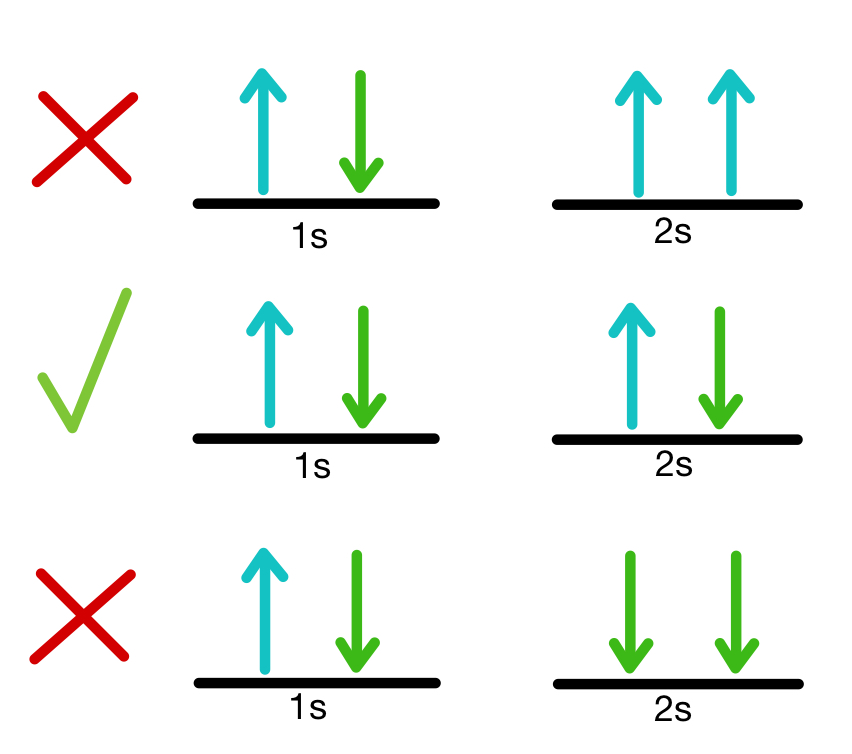

Pauli exclusion principle, assertion that no two electrons in an atom can be at the same time in the same state or configuration, proposed (1925) by the Austrian physicist Wolfgang Pauli to account for the observed patterns of light emission from atoms. The exclusion principle subsequently has been generalized to include a whole class of particles of which the electron is only one member.

Subatomic particles fall into two classes, based on their statistical behaviour. Those particles to which the Pauli exclusion principle applies are called fermions; those that do not obey this principle are called bosons. When in a closed system, such as an atom for electrons or a nucleus for protons and neutrons, fermions are distributed so that a given state is occupied by only one at a time.

Particles obeying the exclusion principle have a characteristic value of spin, or intrinsic angular momentum; their spin is always some odd whole-number multiple of one-half. In the modern view of atoms, the space surrounding the dense nucleus may be thought of as consisting of orbitals, or regions, each of which comprises only two distinct states. The Pauli exclusion principle indicates that, if one of these states is occupied by an electron of spin one-half, the other may be occupied only by an electron of opposite spin, or spin negative one-half. An orbital occupied by a pair of electrons of opposite spin is filled: no more electrons may enter it until one of the pair vacates the orbital. An alternative version of the exclusion principle as applied to atomic electrons states that no two electrons can have the same values of all four quantum numbers.
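
The four-quantum-number version of the principle can be illustrated by listing the allowed combinations for a given shell. The sketch below uses the standard ranges for the quantum numbers (l from 0 to n-1, m_l from -l to +l, m_s of +1/2 or -1/2); the function name is made up for illustration. Because every combination is distinct and each can hold at most one electron, the number of combinations is the shell's capacity.

```python
from fractions import Fraction


def shell_states(n: int) -> list:
    """List every allowed (n, l, m_l, m_s) combination for principal quantum number n."""
    half = Fraction(1, 2)
    return [
        (n, l, m_l, m_s)
        for l in range(n)               # l = 0 .. n-1
        for m_l in range(-l, l + 1)     # m_l = -l .. +l (one orbital each)
        for m_s in (half, -half)        # two opposite spin states per orbital
    ]


if __name__ == "__main__":
    for n in (1, 2, 3):
        states = shell_states(n)
        # No combination repeats, so each state holds at most one electron.
        assert len(set(states)) == len(states)
        print(n, len(states))
```

The counts follow the familiar 2n² pattern (2, 8, 18 for the first three shells), with each orbital contributing exactly two states of opposite spin.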

#1104 2021-08-07 01:13:31

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

1082) Kirchhoff's rules

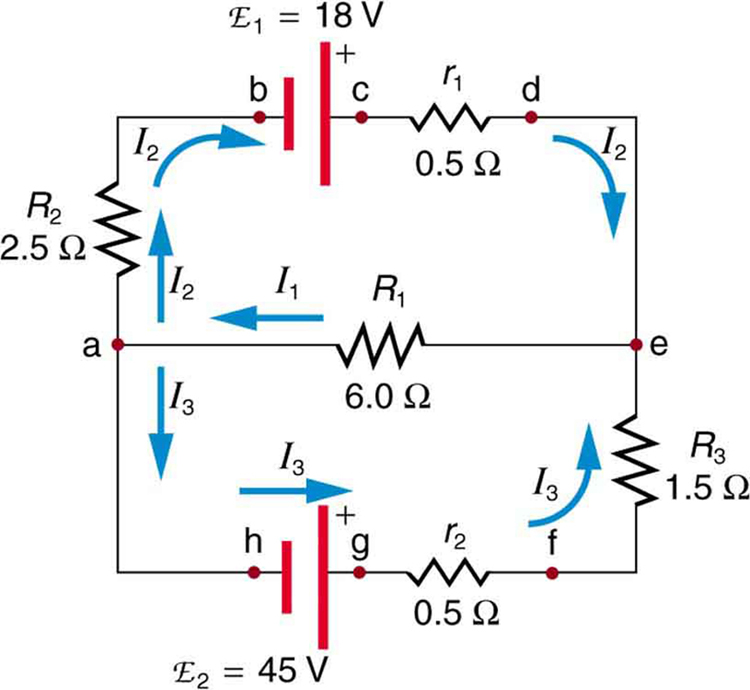

Kirchhoff’s rules, two statements about multi-loop electric circuits that embody the laws of conservation of electric charge and energy and that are used to determine the value of the electric current in each branch of the circuit.

The first rule, the junction theorem, states that the sum of the currents into a specific junction in the circuit equals the sum of the currents out of the same junction. Electric charge is conserved: it does not suddenly appear or disappear; it does not pile up at one point and thin out at another.

The second rule, the loop equation, states that around each loop in an electric circuit the sum of the emfs (electromotive forces, or voltages, of energy sources such as batteries and generators) is equal to the sum of the potential drops, or voltages across each of the resistances, in the same loop. All the energy imparted by the energy sources to the charged particles that carry the current is exactly equal to that lost by the charge carriers in useful work and heat dissipation around each loop of the circuit.

On the basis of Kirchhoff’s two rules, a sufficient number of equations can be written involving each of the currents so that their values may be determined by an algebraic solution.
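
As a minimal sketch of that algebraic solution, consider a hypothetical two-loop circuit (not taken from the post): battery E1 in series with R1 in the left branch, battery E2 in series with R2 in the right branch, and a shared resistor R3 in the middle branch. With I1 and I2 assumed to flow into the top junction and I3 flowing out through R3, the junction rule gives I1 + I2 = I3 and the loop rule gives E1 = I1·R1 + I3·R3 and E2 = I2·R2 + I3·R3. The component values and function names below are illustrative assumptions.

```python
from fractions import Fraction


def solve_linear(a, b):
    """Solve a*x = b by Gauss-Jordan elimination using exact fractions.

    Raises StopIteration if the system is singular (no usable pivot).
    """
    n = len(b)
    # Build the augmented matrix [a | b].
    m = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        m[col] = [v / m[col][col] for v in m[col]]  # scale pivot row to 1
        for r in range(n):
            if r != col:
                factor = m[r][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [row[-1] for row in m]


def branch_currents(e1, e2, r1, r2, r3):
    """Return (I1, I2, I3) for the hypothetical two-loop circuit described above."""
    a = [
        [1, 1, -1],    # junction rule: I1 + I2 - I3 = 0
        [r1, 0, r3],   # left loop:     I1*R1 + I3*R3 = E1
        [0, r2, r3],   # right loop:    I2*R2 + I3*R3 = E2
    ]
    return tuple(solve_linear(a, [0, e1, e2]))


if __name__ == "__main__":
    i1, i2, i3 = branch_currents(e1=10, e2=5, r1=2, r2=4, r3=6)
    print(i1, i2, i3)
```

A negative result for a current simply means that it flows opposite to the direction assumed when the equations were written.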

Kirchhoff’s rules are also applicable to complex alternating-current circuits and with modifications to complex magnetic circuits.

#1105 2021-08-08 00:18:27

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

1083) Adenosine triphosphate

Adenosine triphosphate (ATP), energy-carrying molecule found in the cells of all living things. ATP captures chemical energy obtained from the breakdown of food molecules and releases it to fuel other cellular processes.

Cells require chemical energy for three general types of tasks: to drive metabolic reactions that would not occur automatically; to transport needed substances across membranes; and to do mechanical work, such as moving muscles. ATP is not a storage molecule for chemical energy; that is the job of carbohydrates, such as glycogen, and fats. When energy is needed by the cell, it is converted from storage molecules into ATP. ATP then serves as a shuttle, delivering energy to places within the cell where energy-consuming activities are taking place.

ATP is a nucleotide that consists of three main structures: the nitrogenous base, adenine; the sugar, ribose; and a chain of three phosphate groups bound to ribose. The phosphate tail of ATP is the actual power source which the cell taps. Available energy is contained in the bonds between the phosphates and is released when they are broken, which occurs through the addition of a water molecule (a process called hydrolysis). Usually only the outer phosphate is removed from ATP to yield energy; when this occurs ATP is converted to adenosine diphosphate (ADP), the form of the nucleotide having only two phosphates.
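
The hydrolysis step described above can be written as an overall reaction. The free-energy figure is the commonly quoted standard value from biochemistry textbooks, not from the post; the energy actually released inside a cell depends on local concentrations and is usually given as somewhat larger in magnitude.

```latex
\[
\mathrm{ATP} + \mathrm{H_2O} \longrightarrow \mathrm{ADP} + \mathrm{P_i},
\qquad \Delta G^{\circ\prime} \approx -30.5\ \mathrm{kJ/mol}
\]
```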

ATP is able to power cellular processes by transferring a phosphate group to another molecule (a process called phosphorylation). This transfer is carried out by special enzymes that couple the release of energy from ATP to cellular activities that require energy.

Although cells continuously break down ATP to obtain energy, ATP also is constantly being synthesized from ADP and phosphate through the processes of cellular respiration. Most of the ATP in cells is produced by the enzyme ATP synthase, which converts ADP and phosphate to ATP. ATP synthase is located in the membrane of cellular structures called mitochondria; in plant cells, the enzyme also is found in chloroplasts. The central role of ATP in energy metabolism was discovered by Fritz Albert Lipmann and Herman Kalckar in 1941.

#1106 2021-08-09 00:56:04

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

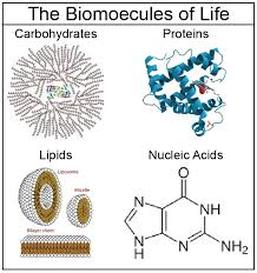

1084) Biomolecule

Biomolecule, also called biological molecule, any of numerous substances that are produced by cells and living organisms. Biomolecules have a wide range of sizes and structures and perform a vast array of functions. The four major types of biomolecules are carbohydrates, lipids, nucleic acids, and proteins.

Among biomolecules, nucleic acids, namely DNA and RNA, have the unique function of storing an organism’s genetic code—the sequence of nucleotides that determines the amino acid sequence of proteins, which are of critical importance to life on Earth. There are 20 different amino acids that can occur within a protein; the order in which they occur plays a fundamental role in determining protein structure and function. Proteins themselves are major structural elements of cells. They also serve as transporters, moving nutrients and other molecules in and out of cells, and as enzymes and catalysts for the vast majority of chemical reactions that take place in living organisms. Proteins also form antibodies and hormones, and they influence gene activity.

Likewise, carbohydrates, which are made up primarily of molecules containing atoms of carbon, hydrogen, and oxygen, are essential energy sources and structural components of all life, and they are among the most abundant biomolecules on Earth. They are built from four types of sugar units—monosaccharides, disaccharides, oligosaccharides, and polysaccharides. Lipids, another key biomolecule of living organisms, fulfill a variety of roles, including serving as a source of stored energy and acting as chemical messengers. They also form membranes, which separate cells from their environments and compartmentalize the cell interior, giving rise to organelles, such as the nucleus and the mitochondrion, in higher (more complex) organisms.

All biomolecules share a fundamental relationship between structure and function, which is influenced by factors such as the environment in which a given biomolecule occurs. Lipids, for example, are hydrophobic (“water-fearing”); in water, many spontaneously arrange themselves in such a way that the hydrophobic ends of the molecules are protected from the water, while the hydrophilic ends are exposed to the water. This arrangement gives rise to lipid bilayers, or two layers of phospholipid molecules, which form the membranes of cells and organelles. In another example, DNA is a very long molecule: in humans, the combined length of all the DNA molecules in a single cell stretched end to end would be about 1.8 metres (6 feet), whereas the cell nucleus is about 6 μm (6 × 10⁻⁶ metre) in diameter. DNA has a highly flexible helical structure that allows the molecule to become tightly coiled and looped. This structural feature plays a key role in enabling DNA to fit in the cell nucleus, where it carries out its function in coding genetic traits.
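
To put the two lengths quoted above on the same scale, the ratio of total DNA length to nuclear diameter works out as follows (a simple division of the post's own figures):

```latex
\[
\frac{1.8\ \mathrm{m}}{6 \times 10^{-6}\ \mathrm{m}} = 3 \times 10^{5}
\]
```

In other words, the DNA is roughly 300,000 times longer than the nucleus is wide, which is why tight coiling and looping are needed.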

#1107 2021-08-10 00:52:11

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

1085) Parachute

Parachute, device that slows the vertical descent of a body falling through the atmosphere or the velocity of a body moving horizontally. The parachute increases the body’s surface area, and the resulting increase in air resistance slows the body in motion. Parachutes have found wide employment in war and peace for safely dropping supplies and equipment as well as personnel, and they are deployed for slowing a returning space capsule after reentry into Earth’s atmosphere. They are also used in the sport of skydiving.
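
The link between surface area and descent speed can be sketched with the standard quadratic drag model, in which a falling body stops accelerating once drag (½·ρ·C_d·A·v²) balances its weight (m·g). The model and every number below (mass, drag coefficients, areas, sea-level air density) are illustrative assumptions, not figures from the post.

```python
import math


def terminal_velocity(mass_kg, drag_coefficient, area_m2,
                      air_density=1.225, g=9.81):
    """Speed (m/s) at which drag 0.5*rho*Cd*A*v^2 equals weight m*g."""
    return math.sqrt(2 * mass_kg * g / (air_density * drag_coefficient * area_m2))


if __name__ == "__main__":
    # Assumed, illustrative values for a 90 kg jumper.
    free_fall = terminal_velocity(90, drag_coefficient=1.0, area_m2=0.7)
    under_canopy = terminal_velocity(90, drag_coefficient=1.5, area_m2=50)
    print(f"free fall: {free_fall:.1f} m/s, under canopy: {under_canopy:.1f} m/s")
```

Because the area sits under a square root, making the effective area about 100 times larger cuts the terminal speed by roughly a factor of 10, all else being equal.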

Development and Military Applications

The Shiji (Records of the Great Historian of China), by 2nd-century-BCE Chinese scholar Sima Qian, includes the tale of a Chinese emperor who survived a jump from an upper story of a burning building by grasping conical straw hats in order to slow his descent. Though likely apocryphal, the story nonetheless demonstrates an understanding of the principle behind parachuting. A 13th-century Chinese manuscript contains a similar report of a thief who absconded with part of a statue by leaping from the tower where it was housed while holding two umbrellas. A report that actual parachutes were used at a Chinese emperor’s coronation ceremony in 1306 has not been substantiated by historical record. The first record of a parachute in the West occurred some two centuries later. A diagram of a pyramidal parachute, along with a brief description of the concept, is found in the Codex Atlanticus, a compilation of some 1,000 pages from Leonardo da Vinci’s notebooks (c. 1478–1518). However, there is no evidence suggesting that da Vinci ever actually constructed such a device.

The modern parachute developed at virtually the same time as the balloon, though the two events were independent of each other. The first person to demonstrate the use of a parachute in action was Louis-Sébastien Lenormand of France in 1783. Lenormand jumped from a tree with two parasols. A few years later, other French aeronauts jumped from balloons. André-Jacques Garnerin was the first to use a parachute regularly, making a number of exhibition jumps, including one of about 8,000 feet (2,400 metres) in England in 1802.

Early parachutes—made of canvas or silk—had frames that held them open (like an umbrella). Later in the 1800s, soft, foldable parachutes of silk were used; these were deployed by a device (attached to the airborne platform from which the jumper was diving) that extracted the parachute from a bag. Only later still, in the early 1900s, did the rip cord that allowed the parachutist to deploy the chute appear.

The first successful descent from an airplane was by Capt. Albert Berry of the United States Army in 1912. But in World War I, although parachutes were used with great frequency by men who needed to escape from tethered observation balloons, they were considered impractical for airplanes, and only in the last stage of the war were they finally introduced. In World War II, however, parachutes were employed extensively, especially by the Germans, for a variety of purposes that included landing special troops for combat, supplying isolated or inaccessible troops, and infiltrating agents into enemy territory. Specialized parachutes were invented during World War II for these tasks. One such German-made parachute—the ring, or ribbon, parachute—was composed of a number of concentric rings of radiating ribbons of fabric with openings between them that allowed some airflow; this chute had high aerodynamic stability and performed heavy-duty functions well, such as dropping heavy cargo loads or braking aircraft in short landing runs. In the 1990s, building upon the knowledge gained from manufacturing square sport parachutes, ram-air parachutes were extensively enlarged, and a platform containing a computer that controls the parachute and guides the platform to its designated target was added for military applications; these parachutes are capable of carrying thousands of pounds of payload to precision landing spots.

Parachutes designed to open at supersonic speeds have radically different contours from conventional canopy chutes; they are made in the form of a cone, with air allowed to escape either through pores of the material or through a large circular opening running around the cone. To permit escape from an aircraft flying at supersonic speeds, the parachute is designed as part of an assembly that includes the ejection seat. A small rocket charge ejects pilot, seat, and parachute; when the pilot is clear of the seat, the parachute opens automatically.

Sport Parachuting

The sport parachute has evolved over the years from the traditional round parachute to the square (actually rectangular) ram-air airfoils commonly seen today. Round parachutes were made of nylon and assembled in a pack attached to a harness worn by the user, which contained the parachute canopy, a small pilot chute that assisted in opening the canopy, and suspension lines. The canopy’s strength was the result of sewing together between 20 and 32 separate panels, or gores, each made of smaller sections, in such a way as to try to confine a tear to the section in which it originated. The pack was fitted to the parachutist’s back or chest and opened by a rip cord, by an automatic timing device, or by a static line—i.e., a line fastened to the aircraft. The harness was so constructed that deceleration (as the parachute opened), gravity, and wind forces were transmitted to the wearer’s body with maximum safety and minimum discomfort.

Early in their design evolution, round parachutes had holes placed into them to allow air to escape out the side, which thus provided some degree of maneuverability to the parachutist, who could selectively close off vents to change direction. These round parachutes had a typical forward speed of 5–7 miles per hour (8–11 km per hour). High-performance round parachutes (known as the Para Commander class) were constructed with the apex (top) of the canopy pulled down to create a higher pressure airflow, which was directed through several vent holes in the rear quadrant of the parachute. These parachutes had a typical forward speed of 10–14 miles per hour (16–23 km per hour) and were much more maneuverable than the traditional round parachute. For a brief period of time, single-surface double-keel parachutes known as Rogallo Wings or Para Dactyls made an appearance, but they were soon superseded by high-performance square parachutes, which fly by using the aerodynamic principles of an airfoil (wing) and are extremely maneuverable.

Square parachutes are made of low- (or zero-) porosity nylon composed into cells rather than gores. In flight they resemble a tapered air mattress, with openings at the parachute’s front that allow the air to “ram” into the cell structure and inflate the parachute into its airfoil shape. Forward speeds of between 20 and 30 miles per hour (32 and 48 km per hour) are easily obtained, yet the parachute is also capable of delivering the skydiver to the ground with a soft landing because the diver can “flare” the canopy (pull the tail down, which causes the canopy to change its pitch) when nearing the ground. The effect is the same as with an aircraft: changing the pitch of the ram-air “wing” converts much of the forward speed to lift and thus minimizes forward and downward velocities at the time of ground contact. The controls for this type of parachute are toggles that are similar to the types seen on the round parachute, and harnesses are fairly similar to older designs as well. Modern parachutes, however, are nearly always worn on the skydiver’s back and are rarely worn on the chest.

#1108 2021-08-11 00:19:27

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

1086) Pollution

Pollution, also called environmental pollution, the addition of any substance (solid, liquid, or gas) or any form of energy (such as heat, sound, or radioactivity) to the environment at a rate faster than it can be dispersed, diluted, decomposed, recycled, or stored in some harmless form. The major kinds of pollution, usually classified by environment, are air pollution, water pollution, and land pollution. Modern society is also concerned about specific types of pollution, such as noise pollution, light pollution, and plastic pollution. Pollution of all kinds can have negative effects on the environment and wildlife and often impacts human health and well-being.

History of Pollution

Although environmental pollution can be caused by natural events such as forest fires and active volcanoes, use of the word pollution generally implies that the contaminants have an anthropogenic source—that is, a source created by human activities. Pollution has accompanied humankind ever since groups of people first congregated and remained for a long time in any one place. Indeed, ancient human settlements are frequently recognized by their wastes—shell mounds and rubble heaps, for instance. Pollution was not a serious problem as long as there was enough space available for each individual or group. However, with the establishment of permanent settlements by great numbers of people, pollution became a problem, and it has remained one ever since.

Cities of ancient times were often noxious places, fouled by human wastes and debris. Beginning about 1000 CE, the use of coal for fuel caused considerable air pollution, and the conversion of coal to coke for iron smelting beginning in the 17th century exacerbated the problem. In Europe, from the Middle Ages well into the early modern era, unsanitary urban conditions favoured the outbreak of population-decimating epidemics of disease, from plague to cholera and typhoid fever. Through the 19th century, water and air pollution and the accumulation of solid wastes were largely problems of congested urban areas. But, with the rapid spread of industrialization and the growth of the human population to unprecedented levels, pollution became a universal problem.

By the middle of the 20th century, an awareness of the need to protect air, water, and land environments from pollution had developed among the general public. In particular, the publication in 1962 of Rachel Carson’s book Silent Spring focused attention on environmental damage caused by improper use of pesticides such as DDT and other persistent chemicals that accumulate in the food chain and disrupt the natural balance of ecosystems on a wide scale. In response, major pieces of environmental legislation, such as the Clean Air Act (1970) and the Clean Water Act (1972) in the United States, were passed in many countries to control and mitigate environmental pollution.

Pollution Control

The presence of environmental pollution raises the issue of pollution control. Great efforts are made to limit the release of harmful substances into the environment through air pollution control, wastewater treatment, solid-waste management, hazardous-waste management, and recycling. Unfortunately, attempts at pollution control are often surpassed by the scale of the problem, especially in less-developed countries. Noxious levels of air pollution are common in many large cities, where particulates and gases from transportation, heating, and manufacturing accumulate and linger. The problem of plastic pollution on land and in the oceans has only grown as the use of single-use plastics has burgeoned worldwide. In addition, greenhouse gas emissions, such as methane and carbon dioxide, continue to drive global warming and pose a great threat to biodiversity and public health.

#1109 2021-08-12 01:18:43

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

1087) Air pollution

Air pollution, release into the atmosphere of various gases, finely divided solids, or finely dispersed liquid aerosols at rates that exceed the natural capacity of the environment to dissipate and dilute or absorb them. These substances may reach concentrations in the air that cause undesirable health, economic, or aesthetic effects.

Major Air Pollutants

Criteria pollutants

Clean, dry air consists primarily of nitrogen and oxygen—78 percent and 21 percent respectively, by volume. The remaining 1 percent is a mixture of other gases, mostly argon (0.9 percent), along with trace (very small) amounts of carbon dioxide, methane, hydrogen, helium, and more. Water vapour is also a normal, though quite variable, component of the atmosphere, normally ranging from 0.01 to 4 percent by volume; under very humid conditions the moisture content of air may be as high as 5 percent.

The gaseous air pollutants of primary concern in urban settings include sulfur dioxide, nitrogen dioxide, and carbon monoxide; these are emitted directly into the air from fossil fuels such as fuel oil, gasoline, and natural gas that are burned in power plants, automobiles, and other combustion sources. Ozone (a key component of smog) is also a gaseous pollutant; it forms in the atmosphere via complex chemical reactions occurring between nitrogen dioxide and various volatile organic compounds (e.g., gasoline vapours).

Airborne suspensions of extremely small solid or liquid particles called “particulates” (e.g., soot, dust, smokes, fumes, mists), especially those less than 10 micrometres (μm; millionths of a metre) in size, are significant air pollutants because of their very harmful effects on human health. They are emitted by various industrial processes, coal- or oil-burning power plants, residential heating systems, and automobiles. Lead fumes (airborne particulates less than 0.5 μm in size) are particularly toxic and are an important pollutant of many diesel fuels.

The six major air pollutants listed above have been designated by the U.S. Environmental Protection Agency (EPA) as “criteria” pollutants—criteria meaning that the concentrations of these pollutants in the atmosphere are useful as indicators of overall air quality.

Except for lead, criteria pollutants are emitted in industrialized countries at very high rates, typically measured in millions of tons per year. All except ozone are discharged directly into the atmosphere from a wide variety of sources. They are regulated primarily by establishing ambient air quality standards, which are maximum acceptable concentrations of each criteria pollutant in the atmosphere, regardless of its origin.

Fine particulates

Very small fragments of solid materials or liquid droplets suspended in air are called particulates. Except for airborne lead, which is treated as a separate category, they are characterized on the basis of size and phase (i.e., solid or liquid) rather than by chemical composition. For example, solid particulates between roughly 1 and 100 μm in diameter are called dust particles, whereas airborne solids less than 1 μm in diameter are called fumes.

The particulates of most concern with regard to their effects on human health are solids less than 10 μm in diameter, because they can be inhaled deep into the lungs and become trapped in the lower respiratory system. Certain particulates, such as asbestos fibres, are known carcinogens (cancer-causing agents), and many carbonaceous particulates—e.g., soot—are suspected of being carcinogenic. Major sources of particulate emissions include fossil-fuel power plants, manufacturing processes, fossil-fuel residential heating systems, and gasoline-powered vehicles.
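
The size rules given in this section can be collected into a small classifier. This is only a sketch of the thresholds stated in the post (solids under 1 μm are fumes, solids from roughly 1 to 100 μm are dust, and solids under 10 μm are the main health concern); the function name and the returned field names are made up for illustration, and liquid particulates are not covered because the post gives no size ranges for them.

```python
def classify_solid_particulate(diameter_um: float) -> dict:
    """Classify an airborne solid particle by diameter in micrometres.

    Thresholds follow the post: fumes < 1 um, dust roughly 1-100 um,
    and particles < 10 um can be inhaled deep into the lungs.
    """
    if diameter_um <= 0:
        raise ValueError("diameter must be positive")
    if diameter_um < 1:
        name = "fume"
    elif diameter_um <= 100:
        name = "dust"
    else:
        name = "unclassified"  # outside the size ranges given in the post
    return {"name": name, "inhalation_concern": diameter_um < 10}


if __name__ == "__main__":
    for d in (0.5, 5, 50):
        print(d, classify_solid_particulate(d))
```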

Carbon monoxide

Carbon monoxide is an odourless, invisible gas formed as a result of incomplete combustion. It is the most abundant of the criteria pollutants. Gasoline-powered highway vehicles are the primary source, although residential heating systems and certain industrial processes also emit significant amounts of this gas. Power plants emit relatively little carbon monoxide because they are carefully designed and operated to maximize combustion efficiency. Exposure to carbon monoxide can be acutely harmful since it readily displaces oxygen in the bloodstream, leading to asphyxiation at high enough concentrations and exposure times.

Sulfur dioxide

A colourless gas with a sharp, choking odour, sulfur dioxide is formed during the combustion of coal or oil that contains sulfur as an impurity. Most sulfur dioxide emissions come from power-generating plants; very little comes from mobile sources. This pungent gas can cause eye and throat irritation and harm lung tissue when inhaled.

Sulfur dioxide also reacts with oxygen and water vapour in the air, forming a mist of sulfuric acid that reaches the ground as a component of acid rain. Acid rain is believed to have harmed or destroyed fish and plant life in many thousands of lakes and streams in parts of Europe, the northeastern United States, southeastern Canada, and parts of China. It also causes corrosion of metals and deterioration of the exposed surfaces of buildings and public monuments.
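
In simplified overall form, the conversion of sulfur dioxide to sulfuric acid described above can be written as two steps. These are textbook net equations; the actual atmospheric pathway runs through intermediate reactions that are not shown here.

```latex
\begin{align*}
2\,\mathrm{SO_2} + \mathrm{O_2} &\longrightarrow 2\,\mathrm{SO_3} \\
\mathrm{SO_3} + \mathrm{H_2O} &\longrightarrow \mathrm{H_2SO_4}
\end{align*}
```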

Nitrogen dioxide

Of the several forms of nitrogen oxides, nitrogen dioxide—a pungent, irritating gas—is of most concern. It is known to cause pulmonary edema, an accumulation of excessive fluid in the lungs. Nitrogen dioxide also reacts in the atmosphere to form nitric acid, contributing to the problem of acid rain. In addition, nitrogen dioxide plays a role in the formation of photochemical smog, a reddish brown haze that often is seen in many urban areas and that is created by sunlight-promoted reactions in the lower atmosphere.

Nitrogen oxides are formed when combustion temperatures are high enough to cause molecular nitrogen in the air to react with oxygen. Stationary sources such as coal-burning power plants are major contributors of this pollutant, although gasoline engines and other mobile sources are also significant.

Ozone

A key component of photochemical smog, ozone is formed by a complex reaction between nitrogen dioxide and hydrocarbons in the presence of sunlight. It is considered to be a criteria pollutant in the troposphere—the lowermost layer of the atmosphere—but not in the upper atmosphere, where it occurs naturally and serves to block harmful ultraviolet rays from the Sun. Because nitrogen dioxide and hydrocarbons are emitted in significant quantities by motor vehicles, photochemical smog is common in cities such as Los Angeles, where sunshine is ample and highway traffic is heavy. Certain geographic features, such as mountains that impede air movement, and weather conditions, such as temperature inversions in the troposphere, contribute to the trapping of air pollutants and the formation of photochemical smog.

Lead

Inhaled lead particulates in the form of fumes and dusts are particularly harmful to children, in whom even slightly elevated levels of lead in the blood can cause learning disabilities, seizures, or even death (see lead poisoning). Sources of airborne lead particulates include oil refining, smelting, and other industrial activities. In the past, combustion of gasoline containing a lead-based antiknock additive called tetraethyl lead was a major source of lead particulates. In many countries there is now a complete ban on the use of lead in gasoline. In the United States, lead concentrations in outdoor air decreased more than 90 percent after the use of leaded gasoline was restricted in the mid-1970s and then completely banned in 1996.

Air toxics

Hundreds of specific substances are considered hazardous when present in trace amounts in the air. These pollutants are called air toxics. Many of them cause genetic mutations or cancer; some cause other types of health problems, such as adverse effects on brain tissue or fetal development. Although the total emissions and the number of sources of air toxics are small compared with those for criteria pollutants, these pollutants can pose an immediate health risk to exposed individuals and can cause other environmental problems.

Most air toxics are organic chemicals, comprising molecules that contain carbon, hydrogen, and other atoms. Many are volatile organic compounds (VOCs), organic compounds that readily evaporate. VOCs include pure hydrocarbons, partially oxidized hydrocarbons, and organic compounds containing chlorine, sulfur, or nitrogen. They are widely used as fuels (e.g., propane and gasoline), as paint thinners and solvents, and in the production of plastics. In addition to contributing to air toxicity and urban smog, some VOC emissions act as greenhouse gases and, in so doing, may be a cause of global warming. Some other air toxics are metals or compounds of metals, for example mercury, arsenic, and cadmium.

In many countries, standards have been set to control industrial emissions of several air toxics. The first hazardous air pollutants regulated in the United States (outside the workplace environment) were arsenic, asbestos, benzene, beryllium, coke oven emissions, mercury, radionuclides (radioactive isotopes), and vinyl chloride. In 1990 this short list was expanded to include 189 substances. By the end of the 1990s, specific emission control standards were required in the United States for “major sources,” that is, those that release more than 10 tons per year of any of these materials or more than 25 tons per year of any combination of them.
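The "major source" thresholds above can be expressed as a small check. This is only an illustrative sketch: the function name, the dictionary layout, and the sample plants are made up for the example, and only the 10-ton and 25-ton figures come from the text.

```python
# Thresholds as stated in the text (tons per year).
SINGLE_LIMIT_TONS = 10.0    # any one listed air toxic
COMBINED_LIMIT_TONS = 25.0  # any combination of listed air toxics


def is_major_source(annual_emissions_tons):
    """Return True if a facility meets the 'major source' description.

    annual_emissions_tons: dict mapping pollutant name -> tons per year
    (hypothetical input format chosen for this sketch).
    """
    amounts = list(annual_emissions_tons.values())
    exceeds_single = any(a > SINGLE_LIMIT_TONS for a in amounts)
    # Amounts are non-negative, so the largest "combination" is the total.
    exceeds_combined = sum(amounts) > COMBINED_LIMIT_TONS
    return exceeds_single or exceeds_combined


# Hypothetical facilities, for illustration only.
plant_a = {"benzene": 12.0, "mercury": 0.5}                        # one pollutant over 10
plant_b = {"benzene": 9.0, "vinyl chloride": 9.0, "asbestos": 8.0}  # total over 25
plant_c = {"benzene": 4.0, "mercury": 1.0}                         # under both limits

for name, plant in [("A", plant_a), ("B", plant_b), ("C", plant_c)]:
    print(name, is_major_source(plant))
```

Plant B shows why both tests are needed: no single pollutant exceeds 10 tons, but the combined total does exceed 25.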

Air toxics may be released in sudden and catastrophic accidents rather than steadily and gradually from many sources. For example, in the Bhopal disaster of 1984, an accidental release of methyl isocyanate at a pesticide factory in Bhopal, Madhya Pradesh state, India, immediately killed at least 3,000 people, eventually caused the deaths of an estimated 15,000 to 25,000 people over the following quarter-century, and injured hundreds of thousands more. The risk of accidental release of very hazardous substances into the air is generally higher for people living in industrialized urban areas. Hundreds of such incidents occur each year, though none has been as severe as the Bhopal event.

Other than in cases of occupational exposure or accidental release, health threats from air toxics are greatest for people who live near large industrial facilities or in congested and polluted urban areas. Most major sources of air toxics are so-called point sources; that is, they have a specific location. Point sources include chemical plants, steel mills, oil refineries, and municipal waste incinerators. Hazardous air pollutants may be released when equipment leaks or when material is transferred, or they may be emitted from smokestacks. Municipal waste incinerators, for example, can emit hazardous levels of dioxins, formaldehyde, and other organic substances, as well as metals such as arsenic, beryllium, lead, and mercury. Nevertheless, proper combustion along with appropriate air pollution control devices can reduce emissions of these substances to acceptable levels.

Hazardous air pollutants also come from “area” sources, which are many smaller sources that release pollutants into the outdoor air in a defined area. Such sources include commercial dry-cleaning facilities, gasoline stations, small metal-plating operations, and woodstoves. Emissions of air toxics from area sources are also regulated under some circumstances.

Small area sources account for about 25 percent of all emissions of air toxics. Major point sources account for another 20 percent. The rest—more than half of hazardous air-pollutant emissions—come from motor vehicles. For example, benzene, a component of gasoline, is released as unburned fuel or as fuel vapours, and formaldehyde is one of the by-products of incomplete combustion. Newer cars, however, have emission control devices that significantly reduce the release of air toxics.

Greenhouse gases

Global warming is recognized by almost all atmospheric scientists as a significant environmental problem caused by an increase in levels of certain trace gases in the atmosphere since the beginning of the Industrial Revolution in the mid-18th century. These gases, collectively called greenhouse gases, include carbon dioxide, organic chemicals called chlorofluorocarbons (CFCs), methane, nitrous oxide, ozone, and many others. Carbon dioxide, although not the most potent of the greenhouse gases, is the most important because of the huge volumes emitted into the air by combustion of fossil fuels (e.g., gasoline, oil, coal).

Carbon dioxide is considered a normal component of the atmosphere, and before the Industrial Revolution the average levels of this gas were about 280 parts per million (ppm). By the early 21st century, the levels of carbon dioxide reached 405 ppm, and they continue to increase at a rate of almost 3 ppm per year. Many scientists think that carbon dioxide should be regulated as a pollutant—a position taken by the EPA in 2009 in a ruling that such regulations could be promulgated. International cooperation and agreements, such as the Paris Agreement of 2015, would be necessary to reduce carbon dioxide emissions worldwide.

Air Pollution And Air Movement

Local air quality typically varies over time because of the effect of weather patterns. For example, air pollutants are diluted and dispersed in a horizontal direction by prevailing winds, and they are dispersed in a vertical direction by atmospheric instability. Unstable atmospheric conditions occur when air masses move naturally in a vertical direction, thereby mixing and dispersing pollutants. When there is little or no vertical movement of air (stable conditions), pollutants can accumulate near the ground and cause temporary but acute episodes of air pollution. With regard to air quality, unstable atmospheric conditions are preferable to stable conditions.

The degree of atmospheric instability depends on the temperature gradient (i.e., the rate at which air temperature changes with altitude). In the troposphere (the lowest layer of the atmosphere, where most weather occurs), air temperatures normally decrease as altitude increases; the faster the rate of decrease, the more unstable the atmosphere. Under certain conditions, however, a temporary “temperature inversion” may occur, during which time the air temperature increases with increasing altitude, and the atmosphere is very stable. Temperature inversions prevent the upward mixing and dispersion of pollutants and are the major cause of air pollution episodes. Certain geographic conditions exacerbate the effect of inversions. For example, Los Angeles, situated on a plain on the Pacific coast of California and surrounded by mountains that block horizontal air motion, is particularly susceptible to the stagnation effects of inversions—hence the infamous Los Angeles smog. On the opposite coast of North America another metropolis, New York City, produces greater quantities of pollutants than does Los Angeles but has been spared major air pollution disasters—only because of favourable climatic and geographic circumstances. During the mid-20th century governmental efforts to reduce air pollution increased substantially after several major inversions, such as the Great Smog of London, a weeklong air pollution episode in London in 1952 that was directly blamed for more than 4,000 deaths.
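The relationship between the temperature gradient and stability can be sketched with a few lines of code. The function names and the sample soundings below are invented for illustration; the only rule taken from the text is that temperature normally falls with altitude and that an inversion is a layer where it rises instead.

```python
def temperature_gradients(altitudes_m, temps_c):
    """Temperature change per metre between successive measurement levels."""
    gradients = []
    for i in range(len(altitudes_m) - 1):
        dz = altitudes_m[i + 1] - altitudes_m[i]
        dt = temps_c[i + 1] - temps_c[i]
        gradients.append(dt / dz)
    return gradients


def has_inversion(altitudes_m, temps_c):
    """True if temperature increases with altitude in at least one layer."""
    return any(g > 0 for g in temperature_gradients(altitudes_m, temps_c))


# Hypothetical soundings (altitude in metres, temperature in degrees C).
altitudes = [0, 500, 1000]
normal_profile = [20.0, 16.5, 13.0]     # cools steadily with height
inversion_profile = [12.0, 15.0, 13.0]  # warmer air sits above the surface layer

print(has_inversion(altitudes, normal_profile))
print(has_inversion(altitudes, inversion_profile))
```

A more negative gradient corresponds to the "faster rate of decrease" that the text associates with a more unstable, better-mixing atmosphere.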

The Global Reach Of Air Pollution

Because some air pollutants persist in the atmosphere and are carried long distances by winds, air pollution transcends local, regional, and continental boundaries, and it also may have an effect on global climate and weather. For example, acid rain has gained worldwide attention since the 1970s as a regional and even continental problem. Acid rain occurs when sulfur dioxide and nitrogen oxides from the burning of fossil fuels combine with water vapour in the atmosphere, forming sulfuric acid and nitric acid mists. The resulting acidic precipitation is damaging to water, forest, and soil resources. It has caused the disappearance of fish from many lakes in the Adirondack Mountains of North America, the widespread death of forests in mountains of Europe, and damage to tree growth in the United States and Canada. Acid rain can also corrode building materials and be hazardous to human health. These problems are not contained by political boundaries. Emissions from the burning of fossil fuels in the middle sections of the United States and Canada are precipitated as acid rain in the eastern regions of those countries, and acid rain in Norway comes largely from industrial areas in Great Britain and continental Europe. The international scope of the problem has led to the signing of international agreements on the limitation of sulfur and nitrogen oxide emissions.

Another global problem caused by air pollution is ozone depletion in the stratosphere. At ground level (i.e., in the troposphere), ozone is a pollutant, but at altitudes above 12 km (7 miles) it plays a crucial role in absorbing and thereby blocking ultraviolet radiation (UV) from the Sun before it reaches the ground. Exposure to UV radiation has been linked to skin cancer and other health problems. In 1985 it was discovered that a large “ozone hole,” an ozone-depleted region, is present every year between August and November over the continent of Antarctica. The size of this hole is increased by the presence in the atmosphere of chlorofluorocarbons (CFCs); these emanate from aerosol spray cans, refrigerators, industrial solvents, and other sources and are transported to Antarctica by atmospheric circulation. It had already been demonstrated in the mid-1970s that CFCs posed a threat to the global ozonosphere, and in 1978 the use of CFCs as propellants in aerosol cans was banned in the United States. Their use was subsequently restricted in several other countries. In 1987 representatives from more than 45 countries signed the Montreal Protocol, agreeing to place severe limitations on the production of CFCs.

One of the most significant effects of air pollution is on climate change, particularly global warming. As a result of the growing worldwide consumption of fossil fuels, carbon dioxide levels in the atmosphere have increased steadily since 1900, and the rate of increase is accelerating. It has been estimated that if carbon dioxide levels are not reduced, average global air temperatures may rise another 4 °C (7.2 °F) by the end of the 21st century. Such a warming trend might cause melting of the polar ice caps, rising of the sea level, and flooding of the coastal areas of the world. Changes in precipitation patterns caused by global warming might have adverse effects on agriculture and forest ecosystems, and higher temperatures and humidity might increase the incidence of disease in humans and animals in some parts of the world. Implementation of international agreements on reducing greenhouse gases is required to protect global air quality and to mitigate the effects of global warming.

Indoor Air Pollution

Health risks related to indoor air pollution have become an issue of concern because people generally spend most of their time indoors at home and at work. The problem has been exacerbated by well-meaning efforts to lower air-exchange rates in buildings in order to conserve energy; these efforts unfortunately allow contaminants to accumulate indoors. Indoor air pollutants include various combustion products from stoves, kerosene space heaters, and fireplaces, as well as volatile organic compounds (VOCs) from household products (e.g., paints, cleaning agents, and pesticides). Formaldehyde off-gassing from building products (especially particleboard and plywood) and from dry-cleaned textiles can accumulate in indoor air. Bacteria, viruses, molds, animal dander, dust mites, and pollen are biological contaminants that can cause disease and other health problems, especially if they build up in and are spread by central heating or cooling systems. Environmental tobacco smoke, also called secondhand smoke, is an indoor air pollutant in many homes, despite widespread knowledge about the harmful effects of smoking. Secondhand smoke contains many carcinogenic compounds as well as strong irritants. In some geographic regions, naturally occurring radon, a radioactive gas, can seep from the ground into buildings and accumulate to harmful levels. Exposure to all indoor air pollutants can be reduced by appropriate building construction and maintenance methods, limitations on pollutant sources, and provision of adequate ventilation.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#1110 2021-08-13 00:33:24

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

1088) Noise pollution

Noise pollution, unwanted or excessive sound that can have deleterious effects on human health and environmental quality. Noise pollution is commonly generated inside many industrial facilities and some other workplaces, but it also comes from highway, railway, and airplane traffic and from outdoor construction activities.

Measuring And Perceiving Loudness

Sound waves are vibrations of air molecules carried from a noise source to the ear. Sound is typically described in terms of the loudness (amplitude) and the pitch (frequency) of the wave. Loudness (also called sound pressure level, or SPL) is measured in logarithmic units called decibels (dB). The normal human ear can detect sounds that range between 0 dB (hearing threshold) and about 140 dB, with sounds between 120 dB and 140 dB causing pain (pain threshold). The ambient SPL in a library is about 35 dB, while that inside a moving bus or subway train is roughly 85 dB; building construction activities can generate SPLs as high as 105 dB at the source. SPLs decrease with distance from the source.

The rate at which sound energy is transmitted, called sound intensity, is proportional to the square of the sound pressure. Because of the logarithmic nature of the decibel scale, an increase of 10 dB represents a 10-fold increase in sound intensity, an increase of 20 dB represents a 100-fold increase in intensity, a 30-dB increase represents a 1,000-fold increase in intensity, and so on. When sound intensity is doubled, on the other hand, the SPL increases by only 3 dB. For example, if a construction drill causes a noise level of about 90 dB, then two identical drills operating side by side will cause a noise level of 93 dB. On the other hand, when two sounds that differ by more than 15 dB in SPL are combined, the weaker sound is masked (or drowned out) by the louder sound. For example, if an 80-dB drill is operating next to a 95-dB dozer at a construction site, the combined SPL of those two sources will be measured as about 95 dB; the less intense sound from the drill will not be noticeable.
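The decibel arithmetic in this paragraph can be checked numerically. The sketch below assumes the usual rule for independent (incoherent) sources, namely that their intensities add and the sum is converted back to decibels; the function names are chosen just for this example.

```python
import math


def intensity_ratio(delta_db):
    """Factor by which sound intensity grows for a given increase in dB."""
    return 10 ** (delta_db / 10)


def combine_spl(levels_db):
    """Combined level of several independent sources, in dB.

    Each level is converted to a relative intensity, the intensities are
    summed, and the total is converted back to decibels.
    """
    total_intensity = sum(10 ** (level / 10) for level in levels_db)
    return 10 * math.log10(total_intensity)


print(intensity_ratio(10), intensity_ratio(20), intensity_ratio(30))
print(round(combine_spl([90, 90]), 1))  # two identical 90-dB drills: about 93.0
print(round(combine_spl([80, 95]), 1))  # 80-dB drill next to 95-dB dozer: about 95.1
```

The second result shows the masking effect: adding the 80-dB source raises the total by only about 0.1 dB over the 95-dB source alone.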

Frequency of a sound wave is expressed in cycles per second (cps), but hertz (Hz) is more commonly used (1 cps = 1 Hz). The human eardrum is a very sensitive organ with a large dynamic range, being able to detect sounds at frequencies as low as 20 Hz (a very low pitch) up to about 20,000 Hz (a very high pitch). The pitch of a human voice in normal conversation occurs at frequencies between 250 Hz and 2,000 Hz.

Precise measurement and scientific description of sound levels differ from most subjective human perceptions and opinions about sound. Subjective human responses to noise depend on both pitch and loudness. People with normal hearing generally perceive high-frequency sounds to be louder than low-frequency sounds of the same amplitude. For this reason, electronic sound-level meters used to measure noise levels take into account the variations of perceived loudness with pitch. Frequency filters in the meters serve to match meter readings with the sensitivity of the human ear and the relative loudness of various sounds. The so-called A-weighted filter, for example, is commonly used for measuring ambient community noise. SPL measurements made with this filter are expressed as A-weighted decibels, or dBA. Most people perceive and describe a 6- to 10-dBA increase in an SPL reading to be a doubling of “loudness.” Another system, the C-weighted (dBC) scale, is sometimes used for impact noise levels, such as gunfire, and tends to be more accurate than dBA for the perceived loudness of sounds with low frequency components.

Noise levels generally vary with time, so noise measurement data are reported as time-averaged values to express overall noise levels. There are several ways to do this. For example, the results of a set of repeated sound-level measurements may be reported as L90 = 75 dBA, meaning that the levels were equal to or higher than 75 dBA for 90 percent of the time. Another unit, called equivalent sound levels (Leq), can be used to express an average SPL over any period of interest, such as an eight-hour workday. (Leq is a logarithmic average rather than an arithmetic average, so loud events prevail in the overall result.) A unit called day-night sound level (DNL or Ldn) accounts for the fact that people are more sensitive to noise during the night, so a 10-dBA penalty is added to SPL values that are measured between 10 PM and 7 AM. DNL measurements are very useful for describing overall community exposure to aircraft noise, for example.
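To make the idea of a logarithmic average concrete, here is a rough sketch of Leq and the day-night level. It assumes equal-length measurement intervals and 24 hourly values indexed by hour of day; the function names and sample readings are invented for the example, and the night window (10 PM to 7 AM) and 10-dBA penalty are the ones given in the text.

```python
import math

# Hours counted as night: 10 PM through 6:59 AM.
NIGHT_HOURS = {22, 23, 0, 1, 2, 3, 4, 5, 6}
NIGHT_PENALTY_DBA = 10


def leq(levels_dba):
    """Equivalent sound level for equal-duration samples (energy average)."""
    mean_energy = sum(10 ** (level / 10) for level in levels_dba) / len(levels_dba)
    return 10 * math.log10(mean_energy)


def day_night_level(hourly_levels_dba):
    """Day-night level from 24 hourly values, index 0 = midnight to 1 AM."""
    if len(hourly_levels_dba) != 24:
        raise ValueError("expected 24 hourly values")
    penalized = [
        level + NIGHT_PENALTY_DBA if hour in NIGHT_HOURS else level
        for hour, level in enumerate(hourly_levels_dba)
    ]
    return leq(penalized)


# Hypothetical readings: three quiet intervals and one loud one.
samples = [60, 60, 60, 90]
print(sum(samples) / len(samples))  # arithmetic mean: 67.5
print(round(leq(samples), 1))       # logarithmic average: about 84.0

# A constant 60 dBA around the clock still yields a DNL above 60
# because of the night-time penalty.
print(round(day_night_level([60] * 24), 1))
```

The gap between 67.5 and roughly 84 illustrates the remark that loud events prevail in the overall result.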

Dealing With The Effects Of Noise

Noise is more than a mere nuisance. At certain levels and durations of exposure, it can cause physical damage to the eardrum and the sensitive hair cells of the inner ear and result in temporary or permanent hearing loss. Hearing loss does not usually occur at SPLs below 80 dBA (eight-hour exposure levels are best kept below 85 dBA), but most people repeatedly exposed to more than 105 dBA will have permanent hearing loss to some extent. In addition to causing hearing loss, excessive noise exposure can also raise blood pressure and pulse rates, cause irritability, anxiety, and mental fatigue, and interfere with sleep, recreation, and personal communication. Noise pollution control is therefore of importance in the workplace and in the community. Noise-control ordinances and laws enacted at the local, regional, and national levels can be effective in mitigating the adverse effects of noise pollution.

Environmental and industrial noise is regulated in the United States under the Occupational Safety and Health Act of 1970 and the Noise Control Act of 1972. Under these acts, the Occupational Safety and Health Administration set up industrial noise criteria in order to provide limits on the intensity of sound exposure and on the time duration for which that intensity may be allowed.

Criteria for indoor noise are summarized in three sets of specifications that have been derived by collecting subjective judgments from a large sampling of people in a variety of specific situations. These have developed into the noise criteria (NC) and preferred noise criteria (PNC) curves, which provide limits on the level of noise introduced into the environment. The NC curves, developed in 1957, aim to provide a comfortable working or living environment by specifying the maximum allowable level of noise in octave bands over the entire audio spectrum. The complete set of 11 curves specifies noise criteria for a broad range of situations. The PNC curves, developed in 1971, add limits on low-frequency rumble and high-frequency hiss; hence, they are preferred over the older NC standard. Summarized in the curves, these criteria provide design goals for noise levels for a variety of different purposes. Part of the specification of a work or living environment is the appropriate PNC curve; in the event that the sound level exceeds PNC limits, sound-absorptive materials can be introduced into the environment as necessary to meet the appropriate standards.

Low levels of noise may be overcome using additional absorbing material, such as heavy drapery or sound-absorbent tiles in enclosed rooms. Where low levels of identifiable noise may be distracting or where privacy of conversations in adjacent offices and reception areas may be important, the undesirable sounds may be masked. A small white-noise source such as static or rushing air, placed in the room, can mask the sounds of conversation from adjacent rooms without being offensive or dangerous to the ears of people working nearby. This type of device is often used in offices of doctors and other professionals. Another technique for reducing personal noise levels is through the use of hearing protectors, which are held over the ears in the same manner as an earmuff. By using commercially available earmuff-type hearing protectors, a decrease in sound level can be attained ranging typically from about 10 dB at 100 Hz to more than 30 dB for frequencies above 1,000 Hz.

Outdoor noise limits are also important for human comfort. Standard house construction will provide some shielding from external sounds if the house meets minimum standards of construction and if the outside noise level falls within acceptable limits. These limits are generally specified for particular periods of the day—for example, during daylight hours, during evening hours, and at night during sleeping hours. Because of refraction in the atmosphere owing to the nighttime temperature inversion, relatively loud sounds can be introduced into an area from a rather distant highway, airport, or railroad. One interesting technique for control of highway noise is the erection of noise barriers alongside the highway, separating the highway from adjacent residential areas. The effectiveness of such barriers is limited by the diffraction of sound, which is greater at the lower frequencies that often predominate in road noise, especially from large vehicles. In order to be effective, they must be as close as possible to either the source or the observer of the noise (preferably to the source), thus maximizing the diffraction that would be necessary for the sound to reach the observer. Another requirement for this type of barrier is that it must also limit the amount of transmitted sound in order to bring about significant noise reduction.

#1111 2021-08-14 00:15:36

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

1089) Water pollution

Water pollution, the release of substances into subsurface groundwater or into lakes, streams, rivers, estuaries, and oceans to the point where the substances interfere with beneficial use of the water or with the natural functioning of ecosystems. In addition to the release of substances, such as chemicals or microorganisms, water pollution may also include the release of energy, in the form of radioactivity or heat, into bodies of water.

Sewage And Other Water Pollutants

Water bodies can be polluted by a wide variety of substances, including pathogenic microorganisms, putrescible organic waste, plant nutrients, toxic chemicals, sediments, heat, petroleum (oil), and radioactive substances.

Domestic sewage

Domestic sewage is the primary source of pathogens (disease-causing microorganisms) and putrescible organic substances. Because pathogens are excreted in feces, all sewage from cities and towns is likely to contain pathogens of some type, potentially presenting a direct threat to public health. Putrescible organic matter presents a different sort of threat to water quality. As organics are decomposed naturally in the sewage by bacteria and other microorganisms, the dissolved oxygen content of the water is depleted. This endangers the quality of lakes and streams, where high levels of oxygen are required for fish and other aquatic organisms to survive. Sewage-treatment processes reduce the levels of pathogens and organics in wastewater, but they do not eliminate them completely.

Domestic sewage is also a major source of plant nutrients, mainly nitrates and phosphates. Excess nitrates and phosphates in water promote the growth of algae, sometimes causing unusually dense and rapid growths known as algal blooms. When the algae die, oxygen dissolved in the water declines because microorganisms use oxygen to digest algae during the process of decomposition.

Anaerobic organisms (organisms that do not require oxygen to live) then metabolize the organic wastes, releasing gases such as methane and hydrogen sulfide, which are harmful to the aerobic (oxygen-requiring) forms of life. The process by which a lake changes from a clean, clear condition—with a relatively low concentration of dissolved nutrients and a balanced aquatic community—to a nutrient-rich, algae-filled state and thence to an oxygen-deficient, waste-filled condition is called eutrophication. Eutrophication is a naturally occurring, slow, and inevitable process. However, when it is accelerated by human activity and water pollution (a phenomenon called cultural eutrophication), it can lead to the premature aging and death of a body of water.

Toxic waste

Waste is considered toxic if it is poisonous, radioactive, explosive, carcinogenic (causing cancer), mutagenic (causing damage to chromosomes), teratogenic (causing birth defects), or bioaccumulative (that is, increasing in concentration at the higher ends of food chains). Sources of toxic chemicals include improperly disposed wastewater from industrial plants and chemical process facilities (lead, mercury, chromium) as well as surface runoff containing pesticides used on agricultural areas and suburban lawns (chlordane, dieldrin, heptachlor).

Sediment

Sediment (e.g., silt) resulting from soil erosion can be carried into water bodies by surface runoff. Suspended sediment interferes with the penetration of sunlight and upsets the ecological balance of a body of water. Also, it can disrupt the reproductive cycles of fish and other forms of life, and when it settles out of suspension it can smother bottom-dwelling organisms.

Thermal pollution

Heat is considered to be a water pollutant because it decreases the capacity of water to hold dissolved oxygen in solution, and it increases the rate of metabolism of fish. Valuable species of game fish (e.g., trout) cannot survive in water with very low levels of dissolved oxygen. A major source of heat is the practice of discharging cooling water from power plants into rivers; the discharged water may be as much as 15 °C (27 °F) warmer than the naturally occurring water.

Petroleum (oil) pollution

Petroleum (oil) pollution occurs when oil from roads and parking lots is carried in surface runoff into water bodies. Accidental oil spills are also a source of oil pollution—as in the devastating spills from the tanker Exxon Valdez (which released more than 260,000 barrels in Alaska’s Prince William Sound in 1989) and from the Deepwater Horizon oil rig (which released more than 4 million barrels of oil into the Gulf of Mexico in 2010). Oil slicks eventually move toward shore, harming aquatic life and damaging recreation areas.

Groundwater And Oceans

Groundwater—water contained in underground geologic formations called aquifers—is a source of drinking water for many people. For example, about half the people in the United States depend on groundwater for their domestic water supply. Although groundwater may appear crystal clear (due to the natural filtration that occurs as it flows slowly through layers of soil), it may still be polluted by dissolved chemicals and by bacteria and viruses. Sources of chemical contaminants include poorly designed or poorly maintained subsurface sewage-disposal systems (e.g., septic tanks), industrial wastes disposed of in improperly lined or unlined landfills or lagoons, leachates from unlined municipal refuse landfills, mining and petroleum production, and leaking underground storage tanks below gasoline service stations. In coastal areas, increasing withdrawal of groundwater (due to urbanization and industrialization) can cause saltwater intrusion: as the water table drops, seawater is drawn into wells.

Although estuaries and oceans contain vast volumes of water, their natural capacity to absorb pollutants is limited. Contamination from sewage outfall pipes, from dumping of sludge or other wastes, and from oil spills can harm marine life, especially microscopic phytoplankton that serve as food for larger aquatic organisms. Sometimes, unsightly and dangerous waste materials can be washed back to shore, littering beaches with hazardous debris. By 2010, an estimated 4.8 million to 12.7 million tonnes (between 5.3 million and 14 million tons) of plastic debris had been dumped into the oceans annually, and floating plastic waste had accumulated in Earth’s five subtropical gyres that cover 40 percent of the world’s oceans.

Another ocean pollution problem is the seasonal formation of “dead zones” (i.e., hypoxic areas, where dissolved oxygen levels drop so low that most higher forms of aquatic life vanish) in certain coastal areas. The cause is nutrient enrichment from dispersed agricultural runoff and concomitant algal blooms. Dead zones occur worldwide; one of the largest of these (sometimes as large as 22,730 square km [8,776 square miles]) forms annually in the Gulf of Mexico, beginning at the Mississippi River delta.

Sources Of Pollution

Water pollutants come from either point sources or dispersed sources. A point source is a pipe or channel, such as those used for discharge from an industrial facility or a city sewerage system. A dispersed (or nonpoint) source is a very broad, unconfined area from which a variety of pollutants enter the water body, such as the runoff from an agricultural area. Point sources of water pollution are easier to control than dispersed sources because the contaminated water has been collected and conveyed to one single point where it can be treated. Pollution from dispersed sources is difficult to control, and, despite much progress in the building of modern sewage-treatment plants, dispersed sources continue to cause a large fraction of water pollution problems.

Water Quality Standards

Although pure water is rarely found in nature (because of the strong tendency of water to dissolve other substances), the characterization of water quality (i.e., clean or polluted) is a function of the intended use of the water. For example, water that is clean enough for swimming and fishing may not be clean enough for drinking and cooking. Water quality standards (limits on the amount of impurities allowed in water intended for a particular use) provide a legal framework for the prevention of water pollution of all types.

There are several types of water quality standards. Stream standards are those that classify streams, rivers, and lakes on the basis of their maximum beneficial use; they set allowable levels of specific substances or qualities (e.g., dissolved oxygen, turbidity, pH) in those bodies of water, based on their given classification. Effluent (water outflow) standards set specific limits on the levels of contaminants (e.g., biochemical oxygen demand, suspended solids, nitrogen) allowed in the final discharges from wastewater-treatment plants. Drinking-water standards include limits on the levels of specific contaminants allowed in potable water delivered to homes for domestic use. In the United States, the Clean Water Act and its amendments regulate water quality and set minimum standards for waste discharges for each industry as well as regulations for specific problems such as toxic chemicals and oil spills. In the European Union, water quality is governed by the Water Framework Directive, the Drinking Water Directive, and other laws.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1112 2021-08-15 01:11:15

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

1090) Land pollution

Land pollution, the deposition of solid or liquid waste materials on land or underground in a manner that can contaminate the soil and groundwater, threaten public health, and cause unsightly conditions and nuisances.

The waste materials that cause land pollution are broadly classified as municipal solid waste (MSW, also called municipal refuse), construction and demolition (C&D) waste or debris, and hazardous waste. MSW includes nonhazardous garbage, rubbish, and trash from homes, institutions (e.g., schools), commercial establishments, and industrial facilities. Garbage contains moist and decomposable (biodegradable) food wastes (e.g., meat and vegetable scraps); rubbish comprises mostly dry materials such as paper, glass, textiles, and plastic objects; and trash includes bulky waste materials and objects that are not collected routinely for disposal (e.g., discarded mattresses, appliances, pieces of furniture). C&D waste (or debris) includes wood and metal objects, wallboard, concrete rubble, asphalt, and other inert materials produced when structures are built, renovated, or demolished. Hazardous wastes include harmful and dangerous substances generated primarily as liquids but also as solids, sludges, or gases by various chemical manufacturing companies, petroleum refineries, paper mills, smelters, machine shops, dry cleaners, automobile repair shops, and many other industries or commercial facilities. In addition to improper disposal of MSW, C&D waste, and hazardous waste, contaminated effluent from subsurface sewage disposal (e.g., from septic tanks) can also be a cause of land pollution.

The permeability of soil formations underlying a waste-disposal site is of great importance with regard to land pollution. The greater the permeability, the greater the risks from land pollution. Soil consists of a mixture of unconsolidated mineral and rock fragments (gravel, sand, silt, and clay) formed from natural weathering processes. Gravel and sand formations are porous and permeable, allowing the free flow of water through the pores or spaces between the particles. Silt is much less permeable than sand or gravel, because of its small particle and pore sizes, while clay is virtually impermeable to the flow of water, because of the platelike shape of its particles and the molecular forces between them.

Until the mid-20th century, solid wastes were generally collected and placed on top of the ground in uncontrolled “open dumps,” which often became breeding grounds for rats, mosquitoes, flies, and other disease carriers and were sources of unpleasant odours, windblown debris, and other nuisances. Dumps can contaminate groundwater as well as pollute nearby streams and lakes. A highly contaminated liquid called leachate is generated from decomposition of garbage and precipitation that infiltrates and percolates downward through the volume of waste material. When leachate reaches and mixes with groundwater or seeps into nearby bodies of surface water, public health and environmental quality are jeopardized. Methane, a poisonous and explosive gas that easily flows through soil, is an eventual by-product of the anaerobic (in the absence of oxygen) decomposition of putrescible solid waste material. Open dumping of solid waste is no longer allowed in many countries. Nevertheless, leachate and methane from old dumps continue to cause land pollution problems in some areas.

A modern technique for land disposal of solid waste involves construction and daily operation and control of so-called sanitary landfills. Sanitary landfills are not dumps; they are carefully planned and engineered facilities designed to control leachate and methane and minimize the risk of land pollution from solid-waste disposal. Sanitary landfill sites are carefully selected and prepared with impermeable bottom liners to collect leachate and prevent contamination of groundwater. Bottom liners typically consist of flexible plastic membranes and a layer of compacted clay. The waste material—MSW and C&D debris—is spread out, compacted with heavy machinery, and covered each day with a layer of compacted soil. Leachate is collected in a network of perforated pipes at the bottom of the landfill and pumped to an on-site treatment plant or nearby public sewerage system. Methane is also collected in the landfill and safely vented to the atmosphere or recovered for use as a fuel known as biogas, or landfill gas. Groundwater-monitoring wells must be placed around the landfill and sampled periodically to ensure proper landfill operation. Completed landfills are capped with a layer of clay or an impermeable membrane to prevent water from entering. A layer of topsoil and various forms of vegetation are placed as a final cover. Completed landfills are often used as public parks or playgrounds.

Hazardous waste differs from MSW and C&D debris in both form and behaviour. Its disposal requires special attention because it can cause serious illnesses or injuries and can pose immediate and significant threats to environmental quality. The main characteristics of hazardous waste include toxicity, reactivity, ignitability, and corrosivity. In addition, waste products that may be infectious or are radioactive are also classified as hazardous waste. Although land disposal of hazardous waste is not always the best option, solid or containerized hazardous wastes can be disposed of by burial in “secure landfills,” while liquid hazardous waste can be disposed of underground in deep-well injection systems if the geologic conditions are suitable. Some hazardous wastes such as dioxins, PCBs, cyanides, halogenated organics, and strong acids are banned from land disposal in the United States, unless they are first treated or stabilized or meet certain concentration limits. Secure landfills must have at least 3 metres (10 feet) of soil between the bottom of the landfill and underlying bedrock or groundwater table (twice that required for municipal solid-waste landfills), a final impermeable cover when completed, and a double impervious bottom liner for increased safety. Underground injection wells (into which liquid waste is pumped under high pressure) must deposit the liquid in a permeable layer of rock that is sandwiched between impervious layers of rock or clay. The wells must also be encased and sealed in three concentric pipes and be at least 400 metres (0.25 mile) from any drinking-water supplies for added safety.
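The numeric siting requirements in the paragraph above can be collected into a small checklist. The sketch below is illustrative only: the class and function names are hypothetical, and the thresholds are simply the figures quoted in the text, not a statement of any actual regulation.

```python
from dataclasses import dataclass

# Figures quoted in the text above.
MIN_SOIL_SEPARATION_M = 3.0    # soil between landfill bottom and bedrock or groundwater table
MIN_BOTTOM_LINERS = 2          # "double impervious bottom liner"
MIN_WELL_DISTANCE_M = 400.0    # injection well to nearest drinking-water supply


@dataclass
class SecureLandfill:
    """Hypothetical description of a secure landfill design."""
    soil_separation_m: float
    bottom_liners: int
    has_final_cover: bool


def landfill_issues(site: SecureLandfill) -> list[str]:
    """Return the requirements from the text that the design fails to meet."""
    issues = []
    if site.soil_separation_m < MIN_SOIL_SEPARATION_M:
        issues.append("less than 3 m of soil above bedrock or groundwater")
    if site.bottom_liners < MIN_BOTTOM_LINERS:
        issues.append("bottom liner is not double")
    if not site.has_final_cover:
        issues.append("no final impermeable cover")
    return issues


def well_distance_ok(distance_to_supply_m: float) -> bool:
    """Check the quoted minimum distance from an injection well to a drinking-water supply."""
    return distance_to_supply_m >= MIN_WELL_DISTANCE_M


print(landfill_issues(SecureLandfill(3.5, 2, True)))   # []
print(landfill_issues(SecureLandfill(1.5, 1, True)))   # two issues reported
print(well_distance_ok(250.0))                         # False
```

A design with only 1.5 metres of soil, which by the text's "twice that required" remark matches the municipal landfill figure, fails the separation check for a secure landfill.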

Before modern techniques for disposing of hazardous wastes were legislated and put into practice, the wastes were generally disposed of or stored in surface piles, lagoons, ponds, or unlined landfills. Thousands of those waste sites still exist, now old and abandoned. Also, the illegal but frequent practice of “midnight dumping” of hazardous wastes, as well as accidental spills, has contaminated thousands of industrial land parcels and continues to pose serious threats to public health and environmental quality. Efforts to remediate or clean up such sites will continue for years to come. In 1980 the United States Congress created the Superfund program and authorized billions of dollars toward site remediation; today there are still about 1,300 sites on the Superfund list requiring remediation. The first listed Superfund site—Love Canal, located in Niagara Falls, N.Y.—was not removed from the list until 2004.


#1113 2021-08-16 02:38:31

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,141

Re: Miscellany

1091) Germination

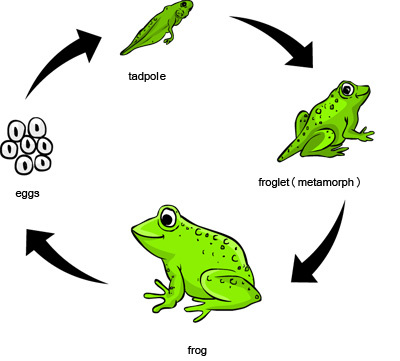

Germination, the sprouting of a seed, spore, or other reproductive body, usually after a period of dormancy. The absorption of water, the passage of time, chilling, warming, oxygen availability, and light exposure may all operate in initiating the process.

In the process of seed germination, water is absorbed by the embryo, which results in the rehydration and expansion of the cells. Shortly after the beginning of water uptake, or imbibition, the rate of respiration increases, and various metabolic processes, suspended or much reduced during dormancy, resume. These events are associated with structural changes in the organelles (membranous bodies concerned with metabolism) in the cells of the embryo.

Germination sometimes occurs early in the development process; the mangrove (Rhizophora) embryo develops within the ovule, pushing out a swollen rudimentary root through the still-attached flower. In peas and corn (maize) the cotyledons (seed leaves) remain underground (i.e., hypogeal germination), while in other species (beans, sunflowers, etc.) the hypocotyl (embryonic stem) grows several inches above the ground, carrying the cotyledons into the light, in which they become green and often leaflike (i.e., epigeal germination).

Seed Dormancy

Dormancy is brief for some seeds—for example, those of certain short-lived annual plants. After dispersal and under appropriate environmental conditions, such as suitable temperature and access to water and oxygen, the seed germinates, and the embryo resumes growth.

The seeds of many species do not germinate immediately after exposure to conditions generally favourable for plant growth but require a “breaking” of dormancy, which may be associated with change in the seed coats or with the state of the embryo itself. Commonly, the embryo has no innate dormancy and will develop after the seed coat is removed or sufficiently damaged to allow water to enter. Germination in such cases depends upon rotting or abrasion of the seed coat in the gut of an animal or in the soil. Inhibitors of germination must be either leached away by water or the tissues containing them destroyed before germination can occur. Mechanical restriction of the growth of the embryo is common only in species that have thick, tough seed coats. Germination then depends upon weakening of the coat by abrasion or decomposition.

In many seeds the embryo cannot germinate even under suitable conditions until a certain period of time has lapsed. The time may be required for continued embryonic development in the seed or for some necessary finishing process—known as afterripening—the nature of which remains obscure.

The seeds of many plants that endure cold winters will not germinate unless they experience a period of low temperature, usually somewhat above freezing. Otherwise, germination fails or is much delayed, with the early growth of the seedling often abnormal. (This response of seeds to chilling has a parallel in the temperature control of dormancy in buds.) In some species, germination is promoted by exposure to light of appropriate wavelengths. In others, light inhibits germination. For the seeds of certain plants, germination is promoted by red light and inhibited by light of longer wavelength, in the “far red” range of the spectrum. The precise significance of this response is as yet unknown, but it may be a means of adjusting germination time to the season of the year or of detecting the depth of the seed in the soil. Light sensitivity and temperature requirements often interact, the light requirement being entirely lost at certain temperatures.

Seedling Emergence