Math Is Fun Forum

#1126 2021-08-29 00:41:24

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,966

Re: Miscellany

1103) Fossil fuel

Fossil fuel, any of a class of hydrocarbon-containing materials of biological origin occurring within Earth’s crust that can be used as a source of energy.

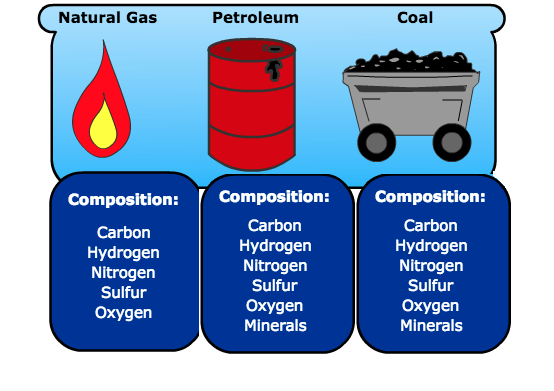

Fossil fuels include coal, petroleum, natural gas, oil shales, bitumens, tar sands, and heavy oils. All contain carbon and were formed as a result of geologic processes acting on the remains of organic matter produced by photosynthesis, a process that began in the Archean Eon (4.0 billion to 2.5 billion years ago). Most carbonaceous material occurring before the Devonian Period (419.2 million to 358.9 million years ago) was derived from algae and bacteria, whereas most carbonaceous material occurring during and after that interval was derived from plants.

All fossil fuels can be burned in air or with oxygen derived from air to provide heat. This heat may be employed directly, as in the case of home furnaces, or used to produce steam to drive generators that can supply electricity. In still other cases—for example, gas turbines used in jet aircraft—the heat yielded by burning a fossil fuel serves to increase both the pressure and the temperature of the combustion products to furnish motive power.

Since the beginning of the Industrial Revolution in Great Britain in the second half of the 18th century, fossil fuels have been consumed at an ever-increasing rate. Today they supply more than 80 percent of all the energy consumed by the industrially developed countries of the world. Although new deposits continue to be discovered, the reserves of the principal fossil fuels remaining on Earth are limited. The amounts of fossil fuels that can be recovered economically are difficult to estimate, largely because of changing rates of consumption and future value as well as technological developments. Advances in technology—such as hydraulic fracturing (fracking), rotary drilling, and directional drilling—have made it possible to extract smaller and difficult-to-obtain deposits of fossil fuels at a reasonable cost, thereby increasing the amount of recoverable material. In addition, as recoverable supplies of conventional (light-to-medium) oil became depleted, some petroleum-producing companies shifted to extracting heavy oil, as well as liquid petroleum pulled from tar sands and oil shales.

One of the main by-products of fossil fuel combustion is carbon dioxide (CO2). The ever-increasing use of fossil fuels in industry, transportation, and construction has added large amounts of CO2 to Earth’s atmosphere. Atmospheric CO2 concentrations fluctuated between 275 and 290 parts per million by volume (ppmv) of dry air between 1000 CE and the late 18th century but increased to 316 ppmv by 1959 and rose to 412 ppmv in 2018. CO2 behaves as a greenhouse gas—that is, it absorbs infrared radiation (net heat energy) emitted from Earth’s surface and reradiates it back to the surface. Thus, the substantial CO2 increase in the atmosphere is a major contributing factor to human-induced global warming. Methane (CH4), another potent greenhouse gas, is the chief constituent of natural gas, and CH4 concentrations in Earth’s atmosphere rose from 722 parts per billion (ppb) before 1750 to 1,859 ppb by 2018. To counter worries over rising greenhouse gas concentrations and to diversify their energy mix, many countries have sought to reduce their dependence on fossil fuels by developing sources of renewable energy (such as wind, solar, hydroelectric, tidal, geothermal, and biofuels) while at the same time increasing the mechanical efficiency of engines and other technologies that rely on fossil fuels.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#1127 2021-08-30 00:46:21

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,966

Re: Miscellany

1104) Mind

Mind, in the Western tradition, the complex of faculties involved in perceiving, remembering, considering, evaluating, and deciding. Mind is in some sense reflected in such occurrences as sensations, perceptions, emotions, memory, desires, various types of reasoning, motives, choices, traits of personality, and the unconscious.

To the extent that mind is manifested in observable phenomena, it has frequently been regarded as a peculiarly human possession. Some theories, however, posit the existence of mind in other animals besides human beings. One theory regards mind as a universal property of matter. According to another view, there may be superhuman minds or intelligences, or a single absolute mind, a transcendent intelligence.

Common Assumptions Among Theories Of Mind

Several assumptions are indispensable to any discussion of the concept of mind. First is the assumption of thought or thinking. If there were no evidence of thought in the world, mind would have little or no meaning. The recognition of this fact throughout history accounts for the development of diverse theories of mind. It may be supposed that such words as “thought” or “thinking” cannot, because of their own ambiguity, help to define the sphere of mind. But whatever the relation of thinking to sensing, thinking seems to involve more, for almost all observers, than a mere reception of impressions from without. This seems to be the opinion of those who make thinking a consequence of sensing, as well as of those who regard thought as independent of sense. For both, thinking goes beyond sensing, either as an elaboration of the materials of sense or as an apprehension of objects that are totally beyond the reach of the senses.

The second assumption that seems to be a root common to all conceptions of mind is that of knowledge or knowing. This may be questioned on the ground that, if there were sensation without any form of thought, judgment, or reasoning, there would be at least a rudimentary form of knowledge—some degree of consciousness or awareness by one thing or another. If one grants the point of this objection, it nevertheless seems true that the distinction between truth and falsity and the difference between knowledge, error, and ignorance or between knowledge, belief, and opinion do not apply to sensations in the total absence of thought. Any understanding of knowledge that involves these distinctions seems to imply mind for the same reason that it implies thought. There is a further implication of mind in the fact of self-knowledge. Sensing may be awareness of an object, and to this extent it may be a kind of knowing, but it has never been observed that the senses can sense or be aware of themselves.

Thought seems to be not only reflective but reflexive, that is, able to consider itself, to define the nature of thinking, and to develop theories of mind. This fact about thought—its reflexivity—also seems to be a common element in all the meanings of “mind.” It is sometimes referred to as “the reflexivity of the intellect,” as “the reflexive power of the understanding,” as “the ability of the understanding to reflect upon its own acts,” or as “self-consciousness.” Whatever the phrasing, a world without self-consciousness or self-knowledge would be a world in which the traditional conception of mind would probably not have arisen.

The third assumption is that of purpose or intention, of planning a course of action with foreknowledge of its goal or of working in any other way toward a desired and foreseen objective. As in the case of sensitivity, the phenomena of desire do not, without further qualification, indicate the realm of mind. According to the theory of natural desire, for example, the natural tendencies of even inanimate and insensitive things are expressions of desire. But it is not in that sense of desire that the assumption of purpose or intention is here taken as evidence of mind.

It is rather on the level of the behaviour of living things that purpose seems to require a factor over and above the senses, limited as they are to present appearances. It cannot be found in the passions, which have the same limitation as the senses, for unless they are checked they tend toward immediate emotional discharge. That factor, called for by the direction of conduct to future ends, is either an element common to all meanings of “mind” or is at least an element associated with mind. It is sometimes called the faculty of will—rational desire or the intellectual appetite. Sometimes it is treated as the act of willing, which, along with thinking, is one of the two major activities of mind or understanding; and sometimes purposiveness is regarded as the very essence of mentality.

Disputed Questions

These assumptions—thought, knowledge or self-knowledge, and purpose—seem to be common to all theories of mind. More than that, they seem to be assumptions that require the development of the conception. The conflict of theories concerning what the human mind is, what structure it has, what parts belong to it, and what whole it belongs to does not comprise the entire range of controversy on the subject. Yet enough is common to all theories of mind to permit certain other questions to be formulated: How does the mind operate? How does it do whatever is its work, and with what intrinsic excellences or defects? What is the relation of mind to matter, to bodily organs, to material conditions, or of one mind to another? Is mind a common possession of men and animals, or is whatever might be called mind in animals distinctly different from the human mind? Are there minds or a mind in existence apart from man and the whole world of corporeal life? What are the limits of so-called artificial intelligence, the capacity of machines to perform functions generally associated with mind?

The intelligibility of the positions taken in the disputes of these issues depends to some degree on the divergent conceptions of the human mind from which they stem. The conclusions achieved in such fields as theory of knowledge, metaphysics, logic, ethics, and the philosophy of religion are all relevant to the philosophy of mind; and its conclusions, in turn, have important implications for those fields. Moreover, this reciprocity applies as well to its relations to such empirical disciplines as neurology, psychology, sociology, and history.

#1128 2021-08-31 00:28:47

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,966

Re: Miscellany

1105) Bose-Einstein condensate

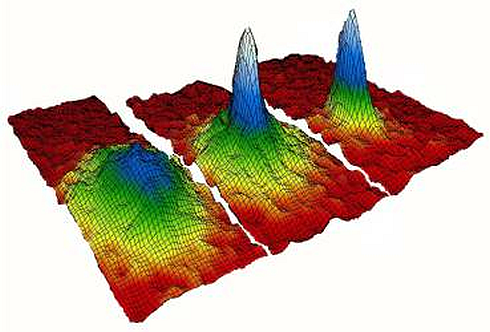

Bose-Einstein condensate (BEC), a state of matter in which separate atoms or subatomic particles, cooled to near absolute zero (0 K, −273.15 °C, or −459.67 °F; K = kelvin), coalesce into a single quantum mechanical entity (that is, one that can be described by a wave function) on a near-macroscopic scale. This form of matter was predicted in 1924 by Albert Einstein on the basis of the quantum formulations of the Indian physicist Satyendra Nath Bose.

Although it had been predicted for decades, the first atomic BEC was made only in 1995, when Eric Cornell and Carl Wieman of JILA, a research institution jointly operated by the National Institute of Standards and Technology (NIST) and the University of Colorado at Boulder, cooled a gas of rubidium atoms to 1.7 × 10⁻⁷ K above absolute zero. Along with Wolfgang Ketterle of the Massachusetts Institute of Technology (MIT), who created a BEC with sodium atoms, these researchers received the 2001 Nobel Prize for Physics. Research on BECs has expanded the understanding of quantum physics and has led to the discovery of new physical effects.

BEC theory traces back to 1924, when Bose considered how groups of photons behave. Photons belong to one of the two great classes of elementary or submicroscopic particles defined by whether their quantum spin is a nonnegative integer (0, 1, 2, …) or an odd half integer (1/2, 3/2, …). The former type, called bosons, includes photons, whose spin is 1. The latter type, called fermions, includes electrons, whose spin is 1/2.

As Bose noted, the two classes behave differently: bosons obey what are now called Bose-Einstein statistics, and fermions obey Fermi-Dirac statistics. According to the Pauli exclusion principle, no two fermions can occupy the same quantum state, so each electron in a group occupies a separate quantum state (indicated by different quantum numbers, such as the electron’s energy). In contrast, an unlimited number of bosons can have the same energy state and share a single quantum state.

Einstein soon extended Bose’s work to show that at extremely low temperatures “bosonic atoms” with integer spins would coalesce into a shared quantum state at the lowest available energy. The requisite methods to produce temperatures low enough to test Einstein’s prediction did not become attainable, however, until the 1990s. One of the breakthroughs depended on the novel technique of laser cooling and trapping, in which the radiation pressure of a laser beam cools and localizes atoms by slowing them down. (For this work, French physicist Claude Cohen-Tannoudji and American physicists Steven Chu and William D. Phillips shared the 1997 Nobel Prize for Physics.) The second breakthrough depended on improvements in magnetic confinement in order to hold the atoms in place without a material container. Using these techniques, Cornell and Wieman succeeded in merging about 2,000 individual atoms into a “superatom,” a condensate large enough to observe with a microscope, that displayed distinct quantum properties. As Wieman described the achievement, “We brought it to an almost human scale. We can poke it and prod it and look at this stuff in a way no one has been able to before.”

BECs are related to two remarkable low-temperature phenomena: superfluidity, in which each of the helium isotopes ³He and ⁴He forms a liquid that flows with zero friction; and superconductivity, in which electrons move through a material with zero electrical resistance. ⁴He atoms are bosons, and although ³He atoms and electrons are fermions, they can also undergo Bose condensation if they pair up with opposite spins to form bosonlike states with zero net spin. In 2003 Deborah Jin and her colleagues at JILA used paired fermions to create the first atomic fermionic condensate.

BEC research has yielded new atomic and optical physics, such as the atom laser Ketterle demonstrated in 1996. A conventional light laser emits a beam of coherent photons; they are all exactly in phase and can be focused to an extremely small, bright spot. Similarly, an atom laser produces a coherent beam of atoms that can be focused at high intensity. Potential applications include more-accurate atomic clocks and enhanced techniques to make electronic chips, or integrated circuits.

One of the most intriguing properties of BECs is that they can slow down light. In 1998 Lene Hau of Harvard University and her colleagues slowed light traveling through a BEC from its speed in vacuum of 3 × 10⁸ metres per second to a mere 17 metres per second, or about 38 miles per hour. Since then, Hau and others have completely halted and stored a light pulse within a BEC, later releasing the light unchanged or sending it to a second BEC. These manipulations hold promise for new types of light-based telecommunications, optical storage of data, and quantum computing, though the low-temperature requirements of BECs offer practical difficulties.
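The speeds quoted above can be checked with a few lines of arithmetic. This is a minimal Python sketch; it uses the rounded 3 × 10⁸ m/s figure from the text and the definition of the international mile (1,609.344 m), and the function name is purely illustrative.

```python
# Speeds as quoted in the text; the vacuum speed of light is rounded to 3 x 10^8 m/s.
SPEED_OF_LIGHT_M_PER_S = 3e8
SLOW_LIGHT_M_PER_S = 17.0

METRES_PER_MILE = 1609.344  # exact, by definition of the international mile
SECONDS_PER_HOUR = 3600


def metres_per_second_to_mph(speed_m_per_s: float) -> float:
    """Convert a speed from metres per second to miles per hour."""
    return speed_m_per_s * SECONDS_PER_HOUR / METRES_PER_MILE


slow_mph = metres_per_second_to_mph(SLOW_LIGHT_M_PER_S)
slowdown_factor = SPEED_OF_LIGHT_M_PER_S / SLOW_LIGHT_M_PER_S

print(f"17 m/s is about {slow_mph:.0f} mph")           # 38 mph
print(f"slowdown factor: about {slowdown_factor:.1e}")  # 1.8e+07
```

In other words, the light in Hau's BEC travelled roughly 18 million times more slowly than in vacuum.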

#1129 2021-09-01 00:29:42

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,966

Re: Miscellany

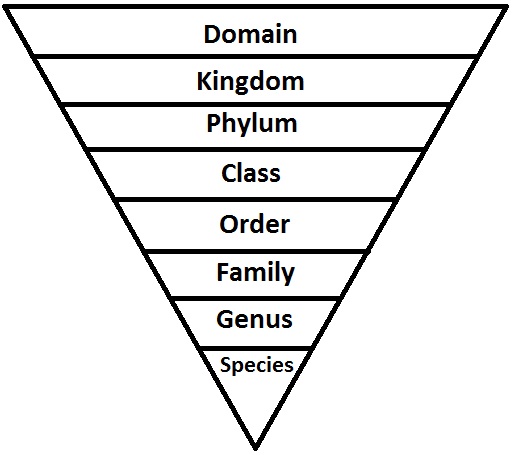

1106) Fermi-Dirac statistics

Fermi-Dirac statistics, in quantum mechanics, one of two possible ways in which a system of indistinguishable particles can be distributed among a set of energy states: each of the available discrete states can be occupied by only one particle. This exclusiveness accounts for the electron structure of atoms, in which electrons remain in separate states rather than collapsing into a common state, and for some aspects of electrical conductivity. The theory of this statistical behaviour was developed (1926–27) by the physicists Enrico Fermi and P.A.M. Dirac, who recognized that a collection of identical and indistinguishable particles can be distributed in this way among a series of discrete (quantized) states.

In contrast to the Bose-Einstein statistics, the Fermi-Dirac statistics apply only to those types of particles that obey the restriction known as the Pauli exclusion principle. Such particles have half-integer values of spin and are named fermions, after the statistics that correctly describe their behaviour. Fermi-Dirac statistics apply, for example, to electrons, protons, and neutrons.
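The difference between the two kinds of statistics can be seen by brute-force counting. The Python sketch below is a toy illustration, not a physics library: it assumes two indistinguishable particles and three single-particle states, and the function names are hypothetical. Fermions may not share a state; bosons may.

```python
from itertools import combinations, combinations_with_replacement


def fermion_arrangements(n_particles: int, n_states: int) -> list[tuple[int, ...]]:
    """Identical fermions: each state holds at most one particle (exclusion principle)."""
    return list(combinations(range(n_states), n_particles))


def boson_arrangements(n_particles: int, n_states: int) -> list[tuple[int, ...]]:
    """Identical bosons: any number of particles may share a state."""
    return list(combinations_with_replacement(range(n_states), n_particles))


# Two indistinguishable particles, three states labelled 0, 1, 2.
fermions = fermion_arrangements(2, 3)
bosons = boson_arrangements(2, 3)
print(len(fermions), fermions)  # 3 [(0, 1), (0, 2), (1, 2)]
print(len(bosons), bosons)      # 6 [(0, 0), (0, 1), (0, 2), (1, 1), (1, 2), (2, 2)]
```

Each tuple lists the labels of the occupied states. Because the particles are indistinguishable, (0, 1) and (1, 0) are the same arrangement, which is why combinations rather than permutations are counted. The three extra boson arrangements, (0, 0), (1, 1) and (2, 2), put both particles in one state, which is exactly what the exclusion principle forbids for fermions.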

#1130 2021-09-02 00:45:17

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,966

Re: Miscellany

1107) Thermodynamics

What Is Thermodynamics?

Thermodynamics is the branch of physics that deals with the relationships between heat and other forms of energy. In particular, it describes how thermal energy is converted to and from other forms of energy and how it affects matter.

Thermal energy is the energy a substance or system has due to its temperature, i.e., the energy of moving or vibrating molecules, according to the Energy Education website of the Texas Education Agency. Thermodynamics involves measuring this energy, which can be "exceedingly complicated," according to David McKee, a professor of physics at Missouri Southern State University. "The systems that we study in thermodynamics … consist of very large numbers of atoms or molecules interacting in complicated ways. But, if these systems meet the right criteria, which we call equilibrium, they can be described with a very small number of measurements or numbers. Often this is idealized as the mass of the system, the pressure of the system, and the volume of the system, or some other equivalent set of numbers. Three numbers describe 10²⁶ or 10³⁰ nominal independent variables."

Heat

Thermodynamics, then, is concerned with several properties of matter; foremost among these is heat. Heat is energy transferred between substances or systems due to a temperature difference between them, according to Energy Education. Because heat is a form of energy, it is subject to the conservation of energy: energy cannot be created or destroyed, but it can be transferred from one place to another and converted from one form to another. For example, a steam turbine can convert heat to kinetic energy to run a generator that converts kinetic energy to electrical energy. A light bulb can convert this electrical energy to electromagnetic radiation (light), which, when absorbed by a surface, is converted back into heat.

Temperature

The amount of heat transferred by a substance depends on the speed and number of atoms or molecules in motion, according to Energy Education. The faster the atoms or molecules move, the higher the temperature, and the more atoms or molecules that are in motion, the greater the quantity of heat they transfer.

Temperature is "a measure of the average kinetic energy of the particles in a sample of matter, expressed in terms of units or degrees designated on a standard scale," according to the American Heritage Dictionary. The most commonly used temperature scale is Celsius, which is based on the freezing and boiling points of water, assigning them values of 0 degrees C and 100 degrees C, respectively. The Fahrenheit scale is also based on the freezing and boiling points of water, which are assigned values of 32 F and 212 F, respectively.

Scientists worldwide, however, use the Kelvin scale (K, with no degree sign), named after William Thomson, 1st Baron Kelvin, because it is an absolute scale, which makes it convenient in calculations. This scale uses the same increment as the Celsius scale, i.e., a temperature change of 1 degree C is equal to a change of 1 K. However, the Kelvin scale starts at absolute zero, the temperature at which there is a total absence of heat energy and all molecular motion stops. A temperature of 0 K is equal to minus 459.67 F or minus 273.15 C.
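The relationships between the three scales can be written as simple conversion functions. This is a minimal Python sketch using the fixed points quoted in the text (0 K = −273.15 C; water freezing at 0 C or 32 F and boiling at 100 C or 212 F); the function names are illustrative.

```python
ABSOLUTE_ZERO_C = -273.15  # 0 K expressed in degrees Celsius


def celsius_to_kelvin(c: float) -> float:
    # Same size of degree as Celsius; only the zero point is shifted.
    return c - ABSOLUTE_ZERO_C


def kelvin_to_celsius(k: float) -> float:
    return k + ABSOLUTE_ZERO_C


def celsius_to_fahrenheit(c: float) -> float:
    # 100 Celsius degrees (0 to 100) span 180 Fahrenheit degrees (32 to 212).
    return c * 9 / 5 + 32


def kelvin_to_fahrenheit(k: float) -> float:
    return celsius_to_fahrenheit(kelvin_to_celsius(k))


print(round(kelvin_to_celsius(0), 2))     # -273.15
print(round(kelvin_to_fahrenheit(0), 2))  # -459.67
print(celsius_to_fahrenheit(100))         # 212.0
```

Rounding is applied only for display, since values such as 273.15 are not exactly representable in binary floating point.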

Specific heat

The amount of heat required to raise the temperature of a unit mass of a substance by one degree is called specific heat, or specific heat capacity, according to Wolfram Research. The conventional unit for this is calories per gram per kelvin. The calorie is defined as the amount of heat energy required to raise the temperature of 1 gram of water at 4 C by 1 degree.
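Because specific heat is heat per unit mass per degree, the heat needed for a given temperature change is Q = m·c·ΔT. This is a minimal Python sketch, assuming the specific heat stays constant over the whole range (for water it is close to, but not exactly, 1 cal per gram per kelvin away from the reference temperature in the calorie definition); the 250 g and 20 C to 80 C figures are hypothetical.

```python
# Specific heat of water in cal/(g*K). Exactly 1 only at the reference temperature
# used to define the calorie; treated here as constant, which is an approximation.
WATER_SPECIFIC_HEAT_CAL_PER_G_K = 1.0


def heat_required_cal(mass_g: float, specific_heat_cal_per_g_k: float, delta_t_k: float) -> float:
    """Heat (calories) to change mass_g grams by delta_t_k kelvins: Q = m * c * dT."""
    return mass_g * specific_heat_cal_per_g_k * delta_t_k


# Hypothetical example: warm 250 g of water from 20 C to 80 C.
# A 60-degree Celsius change is also a 60 K change, because the degrees are the same size.
q = heat_required_cal(250, WATER_SPECIFIC_HEAT_CAL_PER_G_K, 80 - 20)
print(q)  # 15000.0
```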

The specific heat of a metal depends almost entirely on the number of atoms in the sample, not its mass. For instance, a kilogram of aluminum can absorb about seven times more heat than a kilogram of lead. However, lead atoms can absorb only about 8 percent more heat than an equal number of aluminum atoms. A given mass of water, however, can absorb nearly five times as much heat as an equal mass of aluminum. The specific heat of a gas is more complex and depends on whether it is measured at constant pressure or constant volume.

Thermal conductivity

Thermal conductivity (k) is “the rate at which heat passes through a specified material, expressed as the amount of heat that flows per unit time through a unit area with a temperature gradient of one degree per unit distance,” according to the Oxford Dictionary. The unit for k is watts (W) per meter (m) per kelvin (K). Values of k for metals such as copper and silver are relatively high at 401 and 428 W/m·K, respectively. This property makes these materials useful for automobile radiators and cooling fins for computer chips because they can carry away heat quickly and exchange it with the environment. Diamond has the highest value of k of any natural substance, at about 2,200 W/m·K.
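Reading the definition of k backwards gives the steady rate of heat flow through a flat slab: power = k × area × temperature difference / thickness. The Python sketch below assumes one-dimensional, steady-state conduction through a uniform slab; the slab dimensions are hypothetical, and the k values are the ones quoted in the text.

```python
def conduction_rate_w(k_w_per_m_k: float, area_m2: float, delta_t_k: float, thickness_m: float) -> float:
    """Steady heat flow (W) through a uniform flat slab: k * A * dT / thickness."""
    return k_w_per_m_k * area_m2 * delta_t_k / thickness_m


# Hypothetical slab: 1 cm^2 face (1e-4 m^2), 2 mm thick, 10 K difference between its faces.
AREA_M2, THICKNESS_M, DELTA_T_K = 1e-4, 2e-3, 10.0

# k values as quoted in the text, in W/(m*K).
for name, k in [("copper", 401), ("silver", 428), ("diamond", 2200)]:
    print(f"{name:8s} {conduction_rate_w(k, AREA_M2, DELTA_T_K, THICKNESS_M):7.1f} W")
```

With these numbers copper passes about 200 W and diamond about 1,100 W, which is why high-k materials are chosen for heat sinks and cooling fins.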

Other materials are useful because they are extremely poor conductors of heat; this property is referred to as thermal resistance, or R-value, which describes how strongly a material resists the flow of heat through it: the higher the R-value, the more slowly heat passes through. These materials, such as rock wool, goose down and Styrofoam, are used for insulation in exterior building walls, winter coats and thermal coffee mugs. R-value is given in units of square feet times degrees Fahrenheit times hours per British thermal unit (ft²·°F·h/Btu) for a 1-inch-thick slab.
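In the US units quoted above, the steady heat flow through an insulating layer is area × temperature difference / R, and for a uniform material R grows in proportion to thickness. This is a minimal Python sketch; the material rated R-3.5 per inch and the wall dimensions are hypothetical, chosen only for illustration.

```python
def r_value(r_per_inch: float, thickness_in: float) -> float:
    # For a uniform material, R grows in proportion to thickness.
    return r_per_inch * thickness_in


def heat_flow_btu_per_h(area_ft2: float, delta_t_f: float, r_total: float) -> float:
    # Steady heat flow in Btu per hour: area * temperature difference / R.
    # A larger R means less heat gets through.
    return area_ft2 * delta_t_f / r_total


# Hypothetical insulation rated R-3.5 per inch, in a 100 ft^2 wall with a
# 40 F difference between inside and outside.
for inches in (3.5, 7.0):
    r = r_value(3.5, inches)
    print(f"{inches} in: R-{r:.2f}, {heat_flow_btu_per_h(100, 40, r):.0f} Btu/h")
```

Doubling the thickness doubles R and therefore halves the heat flow.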

Newton's Law of Cooling

In 1701, Sir Isaac Newton first stated his Law of Cooling in a short article titled "Scala graduum Caloris" ("A Scale of the Degrees of Heat") in the Philosophical Transactions of the Royal Society. Newton's statement of the law translates from the original Latin as, "the excess of the degrees of the heat ... were in geometrical progression when the times are in an arithmetical progression." Worcester Polytechnic Institute gives a more modern version of the law as "the rate of change of temperature is proportional to the difference between the temperature of the object and that of the surrounding environment."

This results in an exponential decay in the temperature difference. For example, if a warm object is placed in a cold bath, within a certain length of time, the difference in their temperatures will decrease by half. Then in that same length of time, the remaining difference will again decrease by half. This repeated halving of the temperature difference will continue at equal time intervals until it becomes too small to measure.
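Newton's law of cooling can be written as dT/dt = −k(T − T_env), whose solution is T(t) = T_env + (T₀ − T_env)·e^(−kt). The temperature difference therefore halves every ln 2 / k, which is the repeated halving described above. This is a minimal Python sketch; the starting temperature, bath temperature and rate constant k are hypothetical.

```python
import math


def newton_cooling(t0: float, t_env: float, k: float, t: float) -> float:
    """Temperature at time t under dT/dt = -k * (T - t_env), with k > 0."""
    return t_env + (t0 - t_env) * math.exp(-k * t)


def halving_time(k: float) -> float:
    """Time for the temperature difference to fall by half: ln(2) / k."""
    return math.log(2) / k


# Hypothetical values: a 90 C object in a 10 C bath, k = 0.1 per minute.
K = 0.1
T_HALF = halving_time(K)  # about 6.93 minutes

for n in range(4):
    t = n * T_HALF
    print(f"t = {t:5.2f} min   T = {newton_cooling(90.0, 10.0, K, t):.1f} C")
# The difference from the bath goes 80, 40, 20, 10, so T goes 90.0, 50.0, 30.0, 20.0.
```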

Heat transfer

Heat can be transferred from one body to another or between a body and the environment by three different means: conduction, convection and radiation. Conduction is the transfer of energy through a solid material. Conduction between bodies occurs when they are in direct contact, and molecules transfer their energy across the interface.

Convection is the transfer of heat to or from a fluid medium. Molecules in a gas or liquid in contact with a solid body transmit or absorb heat to or from that body and then move away, allowing other molecules to move into place and repeat the process. Efficiency can be improved by increasing the surface area to be heated or cooled, as with a radiator, and by forcing the fluid to move over the surface, as with a fan.

Radiation is the emission of electromagnetic (EM) energy, particularly infrared photons that carry heat energy. All matter emits and absorbs some EM radiation, the net amount of which determines whether this causes a loss or gain in heat.

The Carnot cycle

In 1824, Nicolas Léonard Sadi Carnot proposed a model for a heat engine based on what has come to be known as the Carnot cycle. The cycle exploits the relationships among pressure, volume and temperature of gases and how an input of energy can change form and do work outside the system.

Compressing a gas increases its temperature so it becomes hotter than its environment. Heat can then be removed from the hot gas using a heat exchanger. Then, allowing it to expand causes it to cool. This is the basic principle behind heat pumps used for heating, air conditioning and refrigeration.

Conversely, heating a gas increases its pressure, causing it to expand. The expansive pressure can then be used to drive a piston, thus converting heat energy into kinetic energy. This is the basic principle behind heat engines.

Entropy

All real thermodynamic systems generate waste heat. This waste results in an increase in entropy, which for a closed system is "a quantitative measure of the amount of thermal energy not available to do work," according to the American Heritage Dictionary. The entropy of an isolated system never decreases, and in any real process it increases. Additionally, moving parts produce waste heat due to friction, and radiative heat inevitably leaks from the system.

This makes so-called perpetual motion machines impossible. Siabal Mitra, a professor of physics at Missouri State University, explains, "You cannot build an engine that is 100 percent efficient, which means you cannot build a perpetual motion machine. However, there are a lot of folks out there who still don't believe it, and there are people who are still trying to build perpetual motion machines."
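The text does not give a formula, but the standard result for Carnot's ideal engine running between a hot reservoir at T_hot and a cold one at T_cold (both in kelvins) is a maximum efficiency of 1 − T_cold/T_hot, which stays below 100 percent whenever T_cold is above absolute zero. This is a minimal Python sketch; the 500 K and 300 K reservoir temperatures are hypothetical.

```python
def carnot_efficiency(t_hot_k: float, t_cold_k: float) -> float:
    """Maximum fraction of input heat that an ideal (Carnot) engine can turn into work."""
    if not 0 < t_cold_k < t_hot_k:
        raise ValueError("temperatures must be in kelvins with 0 < t_cold_k < t_hot_k")
    return 1 - t_cold_k / t_hot_k


# Hypothetical reservoirs: 500 K (hot) and 300 K (cold).
print(carnot_efficiency(500.0, 300.0))  # 0.4, i.e. at most 40 percent
```

Any real engine, with friction and heat leaks, does worse than this ideal bound.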

Entropy is also defined as "a measure of the disorder or randomness in a closed system," which also inexorably increases. You can mix hot and cold water, but because a large cup of warm water is more disordered than two smaller cups containing hot and cold water, you can never separate it back into hot and cold without adding energy to the system. Put another way, you can’t unscramble an egg or remove cream from your coffee. While some processes appear to be completely reversible, in practice, none actually are. Entropy, therefore, provides us with an arrow of time: forward is the direction of increasing entropy.
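The hot-and-cold-water example can be made quantitative. Using dS = dQ/T with dQ = m·c·dT gives ΔS = m·c·ln(T_final/T_initial) for each portion of water. The Python sketch below assumes equal masses, a constant specific heat of 4.184 J/(g·K), and no heat lost to the surroundings; the 100 g, 80 C and 20 C figures are hypothetical.

```python
import math

WATER_C_J_PER_G_K = 4.184  # assumed constant over this temperature range


def entropy_change(mass_g: float, t_initial_k: float, t_final_k: float, c: float = WATER_C_J_PER_G_K) -> float:
    """Entropy change (J/K) at constant specific heat: m * c * ln(Tf / Ti)."""
    return mass_g * c * math.log(t_final_k / t_initial_k)


def mix_equal_masses(mass_g: float, t_hot_k: float, t_cold_k: float) -> tuple[float, float, float]:
    """Mix equal masses of water with no heat lost; return (final T, dS hot part, dS cold part)."""
    t_final = (t_hot_k + t_cold_k) / 2
    ds_hot = entropy_change(mass_g, t_hot_k, t_final)    # negative: the hot water cools
    ds_cold = entropy_change(mass_g, t_cold_k, t_final)  # positive: the cold water warms
    return t_final, ds_hot, ds_cold


# Hypothetical example: 100 g at 80 C mixed with 100 g at 20 C (temperatures in kelvins).
t_final, ds_hot, ds_cold = mix_equal_masses(100.0, 80 + 273.15, 20 + 273.15)
print(f"final T = {t_final - 273.15:.1f} C")
print(f"dS hot = {ds_hot:.1f} J/K, dS cold = {ds_cold:+.1f} J/K, total = {ds_hot + ds_cold:+.2f} J/K")
```

The cold water gains more entropy than the hot water loses, because the same amount of heat is absorbed at lower temperatures than those at which it was given up. The net change, about +3.6 J/K here, is positive, which is why the warm mixture never separates back into hot and cold on its own.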

The four laws of thermodynamics

The fundamental principles of thermodynamics were originally expressed in three laws. Later, it was determined that a more fundamental law had been neglected, apparently because it had seemed so obvious that it did not need to be stated explicitly. To form a complete set of rules, scientists decided this most fundamental law needed to be included. The problem, though, was that the first three laws had already been established and were well known by their assigned numbers. When faced with the prospect of renumbering the existing laws, which would cause considerable confusion, or placing the pre-eminent law at the end of the list, which would make no logical sense, a British physicist, Ralph H. Fowler, came up with an alternative that solved the dilemma: he called the new law the “Zeroth Law.” In brief, these laws are:

The Zeroth Law states that if two bodies are in thermal equilibrium with some third body, then they are also in equilibrium with each other. This establishes temperature as a fundamental and measurable property of matter.

The First Law states that the increase in the internal energy of a system is equal to the heat added to the system plus the work done on the system. This means that heat is a form of energy and is therefore subject to the principle of conservation.

The Second Law states that heat energy cannot be transferred from a body at a lower temperature to a body at a higher temperature without the addition of energy. This is why it costs money to run an air conditioner.

The Third Law states that the entropy of a perfect crystal at absolute zero is zero. As explained above, entropy is sometimes called "waste energy," i.e., energy that is unable to do work, and since there is no heat energy whatsoever at absolute zero, there can be no waste energy. Entropy is also a measure of the disorder in a system, and while a perfect crystal is by definition perfectly ordered, any positive value of temperature means there is motion within the crystal, which causes disorder. For these reasons, there can be no physical system with lower entropy, so entropy is never negative.

The science of thermodynamics has been developed over centuries, and its principles apply to nearly every device ever invented. Its importance in modern technology cannot be overstated.

#1131 2021-09-03 00:11:06

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,966

Re: Miscellany

1108) Helicopter

Background

Helicopters are classified as rotary-wing aircraft, and their rotary wing is commonly referred to as the main rotor or simply the rotor. Unlike the more common fixed-wing aircraft such as a sport biplane or an airliner, the helicopter is capable of direct vertical take-off and landing; it can also hover in a fixed position. These features render it ideal for use where space is limited or where the ability to hover over a precise area is necessary. Currently, helicopters are used to dust crops, apply pesticide, access remote areas for environmental work, deliver supplies to workers on remote maritime oil rigs, take photographs, film movies, rescue people trapped in inaccessible spots, transport accident victims, and put out fires. Moreover, they have numerous intelligence and military applications.

Numerous individuals have contributed to the conception and development of the helicopter. The idea appears to have been bionic in origin, meaning that it derived from an attempt to adapt a natural phenomenon (in this case, the whirling, bifurcated fruit of the maple tree) to a mechanical design. Early efforts to imitate maple pods produced the whirligig, a children's toy popular in China as well as in medieval Europe. During the fifteenth century, Leonardo da Vinci, the renowned Italian painter, sculptor, architect, and engineer, sketched a flying machine that may have been based on the whirligig. The next surviving sketch of a helicopter dates from the early nineteenth century, when British scientist Sir George Cayley drew a twin-rotor aircraft in his notebook. During the early twentieth century, Frenchman Paul Cornu managed to lift himself off the ground for a few seconds in an early helicopter. However, Cornu was constrained by the same problems that would continue to plague all early designers for several decades: no one had yet devised an engine that could generate enough vertical thrust to lift both the helicopter and any significant load (including passengers) off the ground.

Igor Sikorsky, a Russian engineer, built his first helicopter in 1909. When neither this prototype nor its 1910 successor succeeded, Sikorsky decided that he could not build a helicopter without more sophisticated materials and money, so he turned his attention to fixed-wing aircraft. During World War I, Hungarian engineer Theodore von Karman constructed a helicopter that, when tethered, was able to hover for extended periods. Several years later, Spaniard Juan de la Cierva developed a machine he called an autogiro in response to the tendency of conventional airplanes to lose engine power and crash while landing. If he could design an aircraft in which lift and thrust (forward propulsion) were separate functions, Cierva speculated, he could circumvent this problem. The autogiro he subsequently invented incorporated features of both the helicopter and the airplane, although it resembled the latter more. The autogiro had a rotor that functioned something like a windmill. Once set in motion by taxiing on the ground, the rotor could generate supplemental lift; however, the autogiro was powered primarily by a conventional airplane engine. To avoid landing problems, the engine could be disconnected and the autogiro brought gently to rest by the rotor, which would gradually cease spinning as the machine reached the ground. Popular during the 1920s and 1930s, autogiros ceased to be produced after the refinement of the conventional helicopter.

The helicopter was eventually perfected by Igor Sikorsky. Advances in aerodynamic theory and building materials had been made since Sikorsky's initial endeavor, and, in 1939, he lifted off the ground in his first operational helicopter. Two years later, an improved design enabled him to remain aloft for an hour and a half, setting a world record for sustained helicopter flight.

The helicopter was put to military use almost immediately after its introduction. While it was not utilized extensively during World War II, the jungle terrain of both Korea and Vietnam prompted the helicopter's widespread use during both of those wars, and technological refinements made it a valuable tool during the Persian Gulf War as well. In recent years, however, private industry has probably accounted for the greatest increase in helicopter use, as many companies have begun to transport their executives via helicopter. In addition, helicopter shuttle services have proliferated, particularly along the urban corridor of the American Northeast. Still, among civilians the helicopter remains best known for its medical, rescue, and relief uses.

Design

A helicopter's power comes from either a piston engine or a gas turbine (recently, the latter has predominated), which drives the rotor shaft, causing the rotor to turn. While an airplane's fixed wing generates lift by deflecting air downward as the plane moves forward, the helicopter's rotor achieves lift by pushing the air beneath it downward as it spins. Lift is equal to the rate at which the rotor changes the air's momentum (its mass times its velocity): the more air the rotor drives downward each second, and the faster it drives it, the greater the lift.
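The momentum idea can be made concrete with a rough calculation. This is a minimal sketch, assuming steady hover and that the rotor takes still air and turns a known mass of it per second into a uniform downward jet; the function names and the example craft (1,000 kg, moving 1,000 kg of air per second at 10 m/s) are hypothetical illustrations, not figures from the text.

```python
def rotor_thrust(mass_flow_kg_s: float, jet_velocity_m_s: float) -> float:
    """Thrust in newtons = momentum given to the air per second.

    Assumes the air starts at rest and leaves as a uniform downward jet,
    so the momentum added each second is mass_flow * velocity.
    """
    return mass_flow_kg_s * jet_velocity_m_s


def hover_check(mass_kg: float, mass_flow_kg_s: float, jet_velocity_m_s: float,
                g: float = 9.81) -> bool:
    """True if the momentum flux is enough to support the craft's weight."""
    return rotor_thrust(mass_flow_kg_s, jet_velocity_m_s) >= mass_kg * g


if __name__ == "__main__":
    # Hypothetical light helicopter: 1,000 kg, pushing 1,000 kg of air per second down at 10 m/s.
    thrust = rotor_thrust(1000.0, 10.0)
    print(f"thrust = {thrust:.0f} N")
    print("can hover:", hover_check(1000.0, 1000.0, 10.0))
```

Halving the mass flow at the same jet speed halves the thrust, which in this example would no longer support the craft's weight.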

Helicopter rotor systems consist of between two and six blades attached to a central hub. Usually long and narrow, the blades turn relatively slowly, because this minimizes the amount of power necessary to achieve and maintain lift, and also because it makes controlling the vehicle easier. While lightweight, general-purpose helicopters often have a two-bladed main rotor, heavier craft may use a four-blade design or two separate main rotors to accommodate heavy loads.
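One standard way to see why a large, slowly turning rotor saves power is ideal (actuator-disk) momentum theory, which the text does not name: for a given thrust T, the ideal induced power in hover is P = T^1.5 / sqrt(2 ρ A), where ρ is air density and A is the disk area swept by the blades. The sketch below assumes uniform, loss-free flow (real rotors need noticeably more power), and the 1,000 kg weight and rotor diameters are hypothetical.

```python
import math

RHO_SEA_LEVEL = 1.225  # air density at sea level, kg/m^3 (standard atmosphere)


def ideal_hover_power(thrust_n: float, rotor_diameter_m: float,
                      rho: float = RHO_SEA_LEVEL) -> float:
    """Ideal induced power in watts from actuator-disk momentum theory.

    P = T**1.5 / sqrt(2 * rho * A), with A the area swept by the blades.
    Ignores blade profile drag, tip losses and non-uniform inflow.
    """
    area = math.pi * (rotor_diameter_m / 2) ** 2
    return thrust_n ** 1.5 / math.sqrt(2 * rho * area)


if __name__ == "__main__":
    weight = 1000 * 9.81  # hypothetical 1,000 kg helicopter, thrust equals weight in hover
    for d in (6.0, 8.0, 10.0):
        kw = ideal_hover_power(weight, d) / 1000
        print(f"rotor diameter {d:4.1f} m -> ideal hover power {kw:6.1f} kW")
```

Because the ideal power scales as one over the rotor diameter for a fixed thrust, doubling the diameter halves it; moving a lot of air gently is cheaper than moving a little air fast.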

To steer a helicopter, the pilot must adjust the pitch of the blades, which can be set three ways. In the collective system, the pitch of all the blades attached to the rotor is identical; in the cyclic system, the pitch of each blade is designed to fluctuate as the rotor revolves, and the third system uses a combination of the first two. To move the helicopter in any direction, the pilot moves the lever that adjusts collective pitch and/or the stick that adjusts cyclic pitch; it may also be necessary to increase or reduce speed.
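A common textbook way to describe what the collective lever and cyclic stick do together is to write each blade's pitch as a function of its position (azimuth ψ) around the rotor: θ(ψ) = θ0 + A cos ψ + B sin ψ. The collective sets θ0, the same for every blade; the cyclic sets A and B, so a blade's pitch rises and falls once per revolution. This sketch assumes that first-harmonic form with arbitrary example angles; sign and azimuth conventions differ between references.

```python
import math


def blade_pitch_deg(azimuth_deg: float, collective_deg: float,
                    cyclic_cos_deg: float = 0.0, cyclic_sin_deg: float = 0.0) -> float:
    """Blade pitch in degrees at a given rotor azimuth.

    collective_deg: pitch shared by all blades (set by the collective lever).
    cyclic_cos_deg / cyclic_sin_deg: once-per-revolution variation (set by the cyclic stick).
    """
    psi = math.radians(azimuth_deg)
    return (collective_deg
            + cyclic_cos_deg * math.cos(psi)
            + cyclic_sin_deg * math.sin(psi))


if __name__ == "__main__":
    azimuths = (0, 90, 180, 270)
    # Collective only: every blade has the same pitch all the way round.
    print([round(blade_pitch_deg(a, 8.0), 2) for a in azimuths])
    # Collective plus cyclic: pitch is lower on one side of the disk and higher on the other.
    print([round(blade_pitch_deg(a, 8.0, cyclic_sin_deg=-3.0), 2) for a in azimuths])
```

Tilting the lift this way around the disk is what lets the pilot move the helicopter in a chosen direction, while the collective changes total lift.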

Unlike airplanes, which are designed to minimize bulk and protuberances that would weigh the craft down and impede airflow around it, helicopters have unavoidably high drag. Thus, designers have not utilized the sort of retractable landing gear familiar to people who have watched planes taking off or landing—the aerodynamic gains of such a system would be proportionally insignificant for a helicopter. In general, helicopter landing gear is much simpler than that of airplanes. Whereas the latter require long runways on which to reduce forward velocity, helicopters have to reduce only vertical lift, which they can do by hovering prior to landing. Thus, they don't even require shock absorbers: their landing gear usually comprises only wheels or skids, or both.

One problem associated with helicopter rotor blades arises because the airflow varies widely along the length of each blade. As a result, lift and drag fluctuate for each blade throughout the rotational cycle, exerting an unsteadying influence upon the helicopter. A related problem occurs because, as the helicopter moves forward, the lift beneath the blades that enter the airstream first is high, but that beneath the blades on the opposite side of the rotor is low. The net effect of these problems is to destabilize the helicopter. Typically, these variations in lift and drag are compensated for by making the blades flexible and connecting them to the rotor by hinges. This design allows each blade to shift up or down, adjusting to changes in lift and drag.
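The imbalance between the two sides of the rotor follows from simple addition of speeds. Under a common simplification, a blade section's airspeed is its rotational speed Ωr plus the helicopter's forward speed V resolved along the blade's motion, roughly Ωr + V sin ψ, with azimuth ψ measured from the tail (90° on the advancing side, 270° on the retreating side). The rotor speed, blade radius, and flight speed below are hypothetical.

```python
import math


def section_airspeed(omega_rad_s: float, radius_m: float,
                     forward_speed_m_s: float, azimuth_deg: float) -> float:
    """Airspeed (m/s) seen by a blade section, perpendicular to the blade.

    Azimuth 0 deg points toward the tail; 90 deg is the advancing side.
    Ignores inflow and flapping; a negative value would mean reversed flow.
    """
    return omega_rad_s * radius_m + forward_speed_m_s * math.sin(math.radians(azimuth_deg))


if __name__ == "__main__":
    omega = 40.0   # hypothetical rotor speed, rad/s (about 380 rpm)
    tip_r = 5.0    # hypothetical blade radius, m, giving a 200 m/s tip speed
    v = 60.0       # hypothetical forward speed, m/s
    adv = section_airspeed(omega, tip_r, v, 90)
    ret = section_airspeed(omega, tip_r, v, 270)
    print(f"advancing tip {adv:.0f} m/s, retreating tip {ret:.0f} m/s")
    # Lift grows roughly with airspeed squared, so at equal pitch the sides differ strongly.
    print(f"lift ratio advancing/retreating ~ {(adv / ret) ** 2:.2f}")
```

Flapping hinges let the advancing blade rise and the retreating blade drop, which changes their effective angle of attack and evens out the lift.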

Torque, another problem associated with the physics of a rotating wing, causes the helicopter fuselage (cabin) to rotate in the opposite direction from the rotor, especially when the helicopter is moving at low speeds or hovering. To offset this reaction, many helicopters use a tail rotor, an exposed blade or ducted fan mounted on the end of the tail boom typically seen on these craft. Another means of counteracting torque entails installing two rotors, attached to the same engine but rotating in opposite directions, while a third, more space-efficient design uses twin intermeshing rotors, somewhat like the beaters of an egg beater. Additional alternatives have been researched, and at least one NOTAR (no tail rotor) design has been introduced.
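The work the tail rotor has to do follows from a torque balance. The torque turning the main rotor is the shaft power divided by the rotor's angular speed, and the tail rotor must push sideways hard enough that its thrust times its distance from the main shaft cancels that torque. A minimal sketch, assuming the tail rotor alone balances the torque; the power, rotor speed, and boom length are hypothetical.

```python
def main_rotor_torque(shaft_power_w: float, rotor_speed_rad_s: float) -> float:
    """Torque (N*m) needed to turn the main rotor: Q = P / omega."""
    return shaft_power_w / rotor_speed_rad_s


def tail_rotor_thrust(torque_nm: float, tail_arm_m: float) -> float:
    """Sideways thrust (N) the tail rotor must supply so that thrust * arm = torque."""
    return torque_nm / tail_arm_m


if __name__ == "__main__":
    q = main_rotor_torque(200_000.0, 40.0)  # hypothetical 200 kW at 40 rad/s
    t = tail_rotor_thrust(q, 6.0)           # hypothetical 6 m from main shaft to tail rotor
    print(f"torque {q:.0f} N*m, tail-rotor thrust {t:.0f} N")
```

A longer tail boom reduces the thrust needed, which is one reason the boom extends well behind the cabin.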

Raw Materials

The airframe, or fundamental structure, of a helicopter can be made of either metal or organic composite materials, or some combination of the two. Higher performance requirements will incline the designer to favor composites with a higher strength-to-weight ratio, often epoxy (a resin) reinforced with glass, aramid (a strong, flexible nylon fiber), or carbon fiber. Typically, a composite component consists of many layers of fiber-impregnated resins, bonded to form a smooth panel. Tubular and sheet metal substructures are usually made of aluminum, though stainless steel or titanium is sometimes used in areas subject to higher stress or heat. To facilitate bending during the manufacturing process, the structural tubing is often filled with molten sodium silicate. A helicopter's rotary wing blades are usually made of fiber-reinforced resin, which may be adhesively bonded with an external sheet metal layer to protect edges. The helicopter's windscreen and windows are formed of polycarbonate sheeting.

The Manufacturing Process

In 1939, a Russian emigre to the United States tested what was to become a prominent prototype for later helicopters. Already a prosperous aircraft manufacturer in his native land, Igor Sikorsky fled the 1917 revolution, drawn to the United States by stories of Thomas Edison and Henry Ford.

Sikorsky soon became a successful aircraft manufacturer in his adopted homeland. But his dream was vertical take-off, rotary-wing flight. He experimented for more than twenty years and finally, in 1939, made his first flight in a craft dubbed the VS-300. Tethered to the ground with long ropes, his craft flew no higher than 50 feet off the ground on its first several flights. Even then, there were problems: the craft flew up, down, and sideways, but not forward. However, helicopter technology developed so rapidly that some were actually put into use by U.S. troops during World War II.

The helicopter contributed directly to at least one revolutionary production technology. As helicopters grew larger and more powerful, the precision calculations needed to engineer their blades, which had exacting requirements, grew enormously. In 1947, John T. Parsons of Traverse City, Michigan, began looking for ways to speed the engineering of blades produced by his company. Parsons contacted the International Business Machines Corporation (IBM) and asked to try one of its new mainframe office computers. By 1951, Parsons was experimenting with having the computer's calculations actually guide the machine tool. His ideas were ultimately developed into the computer-numerical-control (CNC) machine tool industry that has revolutionized modern production methods.
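The core of Parsons' idea was to let a computer produce the long tables of coordinates that describe a blade's contour, instead of working them out by hand. As an illustration only, the sketch below tabulates points on a symmetric NACA four-digit airfoil at evenly spaced stations along the chord, the kind of point list a numerically controlled tool could step through. The NACA 0012 profile and the 300 mm chord are assumptions; the text does not say which profiles Parsons' company used.

```python
import math


def naca00xx_half_thickness(x: float, thickness: float) -> float:
    """Half-thickness of a symmetric NACA 4-digit airfoil at chord fraction x (0..1).

    thickness is the maximum thickness as a fraction of chord (0.12 for NACA 0012).
    """
    return 5 * thickness * (0.2969 * math.sqrt(x) - 0.1260 * x - 0.3516 * x ** 2
                            + 0.2843 * x ** 3 - 0.1015 * x ** 4)


def contour_table(chord_mm: float, thickness: float, stations: int):
    """Return (x_mm, upper_y_mm, lower_y_mm) rows at evenly spaced chord stations."""
    table = []
    for i in range(stations + 1):
        x = i / stations
        y = naca00xx_half_thickness(x, thickness) * chord_mm
        table.append((x * chord_mm, y, -y))
    return table


if __name__ == "__main__":
    # Hypothetical blade section: NACA 0012 profile, 300 mm chord, 10 stations.
    for x, yu, yl in contour_table(chord_mm=300.0, thickness=0.12, stations=10):
        print(f"{x:7.1f} {yu:7.2f} {yl:7.2f}")
```

A real toolpath would use many more, unevenly spaced stations near the leading edge, where the curvature is greatest.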

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#1132 2021-09-04 00:56:50

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,966

Re: Miscellany

1109) Hovercraft

Hovercraft, any of a series of British-built and British-operated air-cushion vehicles (ACVs) that for 40 years (1959–2000) ferried passengers and automobiles across the English Channel between southern England and northern France. The cross-Channel Hovercraft were built by Saunders-Roe Limited of the Isle of Wight and its successor companies. The first in the series, known as SR.N1 (for Saunders-Roe Nautical 1), was a four-ton vehicle that could carry only its crew of three. Invented by English engineer Christopher Cockerell, it crossed the Channel for the first time on July 25, 1959. Ten years later Cockerell was knighted for his accomplishment. By that time the last and largest of the series, the SR.N4, also called the Mountbatten class, had begun to ply the ferry routes between Ramsgate and Dover on the English side and Calais and Boulogne on the French side. In their largest variants, these enormous vehicles, weighing 265 tons and powered by four Rolls-Royce gas-turbine engines, could carry more than 50 cars and more than 400 passengers at 65 knots (1 knot = 1.15 miles or 1.85 km per hour). At such speeds the cross-Channel trip was reduced to a mere half hour. In their heyday of the late 1960s and early ’70s, the various Hovercraft ferry services (with names such as Hoverlloyd, Seaspeed, and Hoverspeed) were ferrying as many as one-third of all cross-Channel passengers. Such was the allure of this quintessentially British technical marvel that one of the Mountbatten vehicles appeared in the James Bond film Diamonds Are Forever (1971). However, the craft were always expensive to maintain and operate (especially in an era of rising fuel costs), and they never turned consistent profits for their owners. The last two SR.N4 vehicles were retired in October 2000, to be transferred to the Hovercraft Museum at Lee-on-the-Solent, Hampshire, England. Cockerell’s original SR.N1 is in the collection of the Science Museum’s facility at Wroughton, near Swindon, Wiltshire.
The generic term hovercraft continues to be applied to numerous other ACVs built and operated around the world, including small sport hovercraft, medium-sized ferries that work coastal and riverine routes, and powerful amphibious assault craft employed by major military powers.

Perhaps the first man to research the ACV concept was Sir John Thornycroft, a British engineer who in the 1870s began to build test models to check his theory that drag on a ship’s hull could be reduced if the vessel were given a concave bottom in which air could be contained between hull and water. His patent of 1877 emphasized that, “provided the air cushion could be carried along under the vehicle,” the only power that the cushion would require would be that necessary to replace lost air. Neither Thornycroft nor other inventors in following decades succeeded in solving the cushion-containment problem. In the meantime aviation developed, and pilots discovered early on that their aircraft generated greater lift when they were flying very close to land or a water surface. It was soon determined that the greater lift was available because wing and ground together created a “funnel” effect, increasing the air pressure. The amount of additional pressure proved dependent on the design of the wing and its height above ground. The effect was strongest when the height was between one-half and one-third of the average front-to-rear breadth of the wing (chord).

Practical use was made of the ground effect in 1929 by the German Dornier Do X flying boat, which achieved a considerable gain in performance during an Atlantic crossing when it flew close to the sea surface. World War II maritime reconnaissance aircraft also made use of the phenomenon to extend their endurance.

In the 1960s American aerodynamicists developed an experimental craft making use of a wing in connection with ground effect. Several other proposals of this type were put forward, and a further variation combined the airfoil characteristics of a ground-effect machine with an air-cushion lift system that allowed the craft to develop its own hovering power while stationary and then build up forward speed, gradually transferring the lift component to its airfoil. Although none of these craft got beyond the experimental stage, they were important portents of the future because they suggested means of using the hovering advantage of the ACV and overcoming its theoretical speed limitation of about 200 miles (320 km) per hour, above which it was difficult to hold the air cushion in place. Such vehicles are known as ram-wing craft.

In the early 1950s engineers in the United Kingdom, the United States, and Switzerland were seeking solutions to Sir John Thornycroft’s 80-year-old problem. Christopher Cockerell of the United Kingdom is now acknowledged as the father of the Hovercraft, as the ACV is popularly known. During World War II he had been closely connected with the development of radar and other radio aids and had retired into peacetime life as a boatbuilder. Soon he began to concern himself with Thornycroft’s problem of reducing the hydrodynamic drag on the hull of a boat with some kind of air lubrication.

Cockerell bypassed Thornycroft’s plenum chamber principle (a plenum chamber being, in effect, an empty box with an open bottom), in which air is pumped directly into a cavity beneath the vessel, because of the difficulty in containing the cushion. He theorized that, if air were instead pumped under the vessel through a narrow slot running entirely around the circumference, the air would flow toward the centre of the vessel, forming an external curtain that would effectively contain the cushion. This system is known as a peripheral jet. Once the air below the craft has built up to a pressure that, acting over the area of the cushion, supports the craft’s weight, incoming air has nowhere to go but outward and experiences a sharp change of velocity on striking the surface. The momentum of the peripheral jet air keeps the cushion pressure and the ground clearance higher than they would be if air were pumped directly into a plenum chamber. To test his theory, Cockerell set up an apparatus consisting of a blower that fed air into an inverted coffee tin through a hole in the base. The tin was suspended over the weighing pan of a pair of kitchen scales, and air blown into the tin forced the pan down against the mass of a number of weights. In this way the forces involved were roughly measured. By securing a second tin within the first and directing air down through the space between them, Cockerell was able to demonstrate that more than three times as many weights could be raised by this means as by the plenum chamber effect of the single tin.
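The force balance under the craft is a one-line formula: the cushion must reach a gauge pressure that, multiplied by the area it acts on, equals the craft's weight (p = m g / A). A minimal sketch; the 4,000 kg mass and the 9 m by 7 m cushion footprint are hypothetical values chosen for illustration, not SR.N1 dimensions.

```python
def cushion_pressure_pa(mass_kg: float, cushion_area_m2: float, g: float = 9.81) -> float:
    """Gauge pressure (Pa) under the craft needed to carry its weight: p = m * g / A."""
    return mass_kg * g / cushion_area_m2


if __name__ == "__main__":
    # Hypothetical craft: about 4,000 kg on a 9 m x 7 m cushion.
    p = cushion_pressure_pa(4000.0, 9.0 * 7.0)
    share = p / 101_325 * 100  # compared with standard sea-level air pressure
    print(f"cushion pressure ~ {p:.0f} Pa ({share:.2f}% of sea-level air pressure)")
```

For numbers of this kind the required overpressure is a small fraction of atmospheric pressure, so the practical challenge lies in keeping the air from escaping around the edges rather than in compressing it.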

Christopher's first patent was filed on December 12, 1955, and in the following year he formed a company known as Hovercraft Limited. His early memoranda and reports show a prescient grasp of the problems involved in translating the theory into practice—problems that would still concern designers of Hovercraft years later. He forecast, for example, that some kind of secondary suspension would be required in addition to the air cushion itself. Realizing that his discovery would not only make boats go faster but also would allow the development of amphibious craft, Christopher approached the Ministry of Supply, the British government’s defense-equipment procurement authority. The air-cushion vehicle was classified “secret” in November 1956, and a development contract was placed with the Saunders-Roe aircraft and seaplane manufacturer. In 1959 the world’s first practical ACV was launched. It was called the SR.N1.

Originally the SR.N1 had a total weight of four tons and could carry three men at a maximum speed of 25 knots over very calm water. Instead of having a completely solid structure to contain the cushion and peripheral jet, it incorporated a 6-inch- (15-cm-) deep skirt of rubberized fabric. This development provided a means whereby the air cushion could easily be contained despite unevenness of the ground or water. It was soon found that the skirt made it possible to revert once again to the plenum chamber as a cushion producer. Use of the skirt brought the problem of making skirts durable enough to withstand the friction wear produced at high speeds through water. It was necessary to develop the design and manufacturing skills that would allow skirts to be made in the optimum shape for aerodynamic efficiency. Skirts of rubber and plastic mixtures, 4 feet (1.2 metres) deep, had been developed by early 1963, and the performance of the SR.N1 had been increased by using them (and incorporating gas-turbine power) to a payload of seven tons and a maximum speed of 50 knots.

The first crossing of the English Channel by the SR.N1 was on July 25, 1959, symbolically on the 50th anniversary of French aviator Louis Blériot’s first flight across the same water. Manufacturers and operators in many parts of the world became interested. Manufacture of various types of ACV began in the United States, Japan, Sweden, and France, and in Britain several other companies were building craft in the early 1960s. By the early 1970s, however, only the British were producing what could truly be called a range of craft and employing the largest types in regular ferry service, and this against considerable odds.

The stagnation can be explained by a number of problems, all of which led to the failure of commercial ACVs to live up to what many people thought was their original promise. As already mentioned, the design of and materials used in flexible skirts had to be developed from the first, and not until 1965 was an efficient and economic flexible-skirt arrangement evolved, and even then the materials were still being developed. Another major problem arose when aircraft gas-turbine engines were used in a marine environment. Although such engines, suitably modified, had been installed in ships with some success, their transition into Hovercraft brought out their extreme vulnerability to saltwater corrosion. An ACV by its very nature generates a great deal of spray when it is hovering over water, and the spray is drawn into the intakes of gas turbines in amounts not envisaged by the engine designer. Even after considerable filtering, the moisture and salt content is high enough to corrode large modern gas-turbine engines to such an extent that they need a daily wash with pure water, and even then they have a considerably reduced life span between overhauls. Another problem, perhaps ultimately fatal to the cross-Channel Hovercraft, was the rising price of petroleum-based fuel following the oil crisis of 1973–74. Burdened by high fuel costs, Hovercraft ferry services rarely turned a profit and in fact frequently lost millions of pounds a year. Finally, the opening of the Channel Tunnel in 1994 and the development of more efficient conventional boat ferries (some of them with catamaran-type hulls) presented such stiff competition that the building of successors to the big Mountbatten-class Hovercraft could not be justified.

#1133 2021-09-05 01:03:28

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,966

Re: Miscellany

1110) Modem

Modem (from “modulator/demodulator”), any of a class of electronic devices that convert digital data signals into modulated analog signals suitable for transmission over analog telecommunications circuits. A modem also receives modulated signals and demodulates them, recovering the digital signal for use by the data equipment. Modems thus make it possible for established telecommunications media to support a wide variety of data communication, such as e-mail between personal computers, facsimile transmission between fax machines, or the downloading of audio-video files from a World Wide Web server to a home computer.

Most modems are “voiceband”; i.e., they enable digital terminal equipment to communicate over telephone channels, which are designed around the narrow bandwidth requirements of the human voice. Cable modems, on the other hand, support the transmission of data over hybrid fibre-coaxial channels, which were originally designed to provide high-bandwidth television service. Both voiceband and cable modems are marketed as freestanding, book-sized modules that plug into a telephone or cable outlet and a port on a personal computer. In addition, voiceband modems are installed as circuit boards directly into computers and fax machines. They are also available as small card-sized units that plug into laptop computers.

Operating Parameters

Modems operate in part by communicating with each other, and to do this they must follow matching protocols, or operating standards. Worldwide standards for voiceband modems are established by the V-series of recommendations published by the Telecommunication Standardization sector of the International Telecommunication Union (ITU). Among other functions, these standards establish the signaling by which modems initiate and terminate communication, establish compatible modulation and encoding schemes, and arrive at identical transmission speeds. Modems have the ability to “fall back” to lower speeds in order to accommodate slower modems. “Full-duplex” standards allow simultaneous transmission and reception, which is necessary for interactive communication. “Half-duplex” standards also allow two-way communication, but not simultaneously; such modems are sufficient for facsimile transmission.
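Fall-back can be pictured as both modems settling on the fastest rate that each supports and that the line can carry. The sketch below is a deliberately simplified, hypothetical model: the function name and rate lists are illustrative, and real V-series handshakes also exchange capability messages and probe line quality in ways not modeled here.

```python
def negotiate_rate(local_bps, remote_bps, line_limit_bps=None):
    """Pick the highest bit rate both modems support, or None if they share none.

    line_limit_bps optionally caps the choice to what the line can carry.
    Hypothetical simplification of fall-back; no real handshake is modeled.
    """
    common = set(local_bps) & set(remote_bps)
    if line_limit_bps is not None:
        common = {r for r in common if r <= line_limit_bps}
    return max(common) if common else None


if __name__ == "__main__":
    fast = [300, 1200, 2400, 9600, 14400, 28800]
    slow = [300, 1200, 2400]
    print(negotiate_rate(fast, slow))                          # falls back to the slower modem
    print(negotiate_rate(fast, fast, line_limit_bps=20000))    # falls back because of the line
```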

Data signals consist of multiple alternations between two values, represented by the binary digits, or bits, 0 and 1. Analog signals, on the other hand, consist of time-varying, wavelike fluctuations in value, much like the tones of the human voice. In order to represent binary data, the fluctuating values of the analog wave (i.e., its frequency, amplitude, and phase) must be modified, or modulated, in such a manner as to represent the sequences of bits that make up the data signal.

Each modified element of the modulated carrier wave (for instance, a shift from one frequency to another or a shift between two phases) is known as a symbol, and the number of symbols transmitted per second is measured in baud. In early voiceband modems beginning in the early 1960s, one symbol represented one bit, so that a modem operating, for instance, at 300 baud transmitted data at 300 bits per second. In modern modems a symbol can represent many bits, so that the more accurate measure of transmission rate is bits or kilobits (thousand bits) per second. During the course of their development, modems have risen in throughput from 300 bits per second (bps) to 56 kilobits per second (Kbps) and beyond. Cable modems achieve a throughput of several megabits per second (Mbps; million bits per second). At the highest bit rates, channel-encoding schemes must be employed in order to reduce transmission errors. In addition, various source-encoding schemes can be used to “compress” the data into fewer bits, increasing the rate of information transmission without raising the bit rate.
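The link between symbol rate and bit rate is a one-line formula: if each symbol can take one of M distinguishable states, it carries log2 M bits, so bit rate = baud × log2 M. A small sketch; the 2,400-baud, 16-state case is an illustrative example, not a specific standard from the text.

```python
import math


def bit_rate(baud: float, states_per_symbol: int) -> float:
    """Bits per second when each symbol takes one of `states_per_symbol` values."""
    return baud * math.log2(states_per_symbol)


if __name__ == "__main__":
    print(bit_rate(300, 2))    # one bit per symbol, as in early modems
    print(bit_rate(2400, 16))  # four bits per symbol
```

Packing more states into each symbol raises the bit rate without raising the baud, but the states sit closer together and become easier for noise to confuse.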

Development Of Voiceband Modems

The first generation

Although not strictly related to digital data communication, early work on telephotography machines (predecessors of modern fax machines) by the Bell System during the 1930s did lead to methods for overcoming certain signal impairments inherent in telephone circuits. Among these developments were equalization methods for overcoming the smearing of fax signals as well as methods for translating fax signals to a 1,800-hertz carrier signal that could be transmitted over the telephone line.

The first development efforts on digital modems appear to have stemmed from the need to transmit data for North American air defense during the 1950s. By the end of that decade, data was being transmitted at 750 bits per second over conventional telephone circuits. The first modem to be made commercially available in the United States was the Bell 103 modem, introduced in 1962 by the American Telephone & Telegraph Company (AT&T). The Bell 103 permitted full-duplex data transmission over conventional telephone circuits at data rates up to 300 bits per second. In order to send and receive binary data over the telephone circuit, two pairs of frequencies (one pair for each direction) were employed. A binary 1 was signaled by a shift to one frequency of a pair, while a binary 0 was signaled by a shift to the other frequency of the pair. This type of digital modulation is known as frequency-shift keying, or FSK. Another modem, known as the Bell 212, was introduced shortly after the Bell 103. Transmitting data at a rate of 1,200 bits, or 1.2 kilobits, per second over full-duplex telephone circuits, the Bell 212 made use of phase-shift keying, or PSK, to modulate a 1,800-hertz carrier signal. In PSK, data is represented as phase shifts of a single carrier signal. Thus, a binary 1 might be sent as a zero-degree phase shift, while a binary 0 might be sent as a 180-degree phase shift.
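Both keying methods can be sketched as sample generators. The FSK part uses the commonly cited Bell 103 originate-side tones (1,270 Hz for a binary 1, 1,070 Hz for a binary 0) at 300 baud. The PSK part follows the text's illustration, one bit per symbol on a 1,800-hertz carrier with 0° for a 1 and 180° for a 0; it is a simplified binary PSK, not a claim about the exact Bell 212 signal format. The 9,600-samples-per-second rate is an arbitrary assumption.

```python
import math

# Commonly cited Bell 103 originate-side tones (Hz): mark = binary 1, space = binary 0.
MARK_HZ, SPACE_HZ = 1270.0, 1070.0


def fsk_samples(bits, baud=300, sample_rate=9600):
    """Continuous-phase FSK: each bit selects one of two tone frequencies."""
    samples_per_bit = sample_rate // baud
    phase, out = 0.0, []
    for bit in bits:
        freq = MARK_HZ if bit else SPACE_HZ
        for _ in range(samples_per_bit):
            out.append(math.sin(phase))
            phase += 2 * math.pi * freq / sample_rate  # phase carries over between bits
    return out


def bpsk_samples(bits, carrier_hz=1800.0, baud=1200, sample_rate=9600):
    """Binary PSK following the text's example: 1 -> 0-degree shift, 0 -> 180-degree shift."""
    samples_per_bit = sample_rate // baud
    out = []
    for i, bit in enumerate(bits):
        shift = 0.0 if bit else math.pi
        for k in range(samples_per_bit):
            t = (i * samples_per_bit + k) / sample_rate
            out.append(math.sin(2 * math.pi * carrier_hz * t + shift))
    return out


if __name__ == "__main__":
    print(len(fsk_samples([1, 0, 1])), "FSK samples for 3 bits")
    print(len(bpsk_samples([1, 0, 1])), "PSK samples for 3 bits")
```

In the FSK signal the information is in which tone is present; in the PSK signal the tone never changes and the information is in where each burst of carrier starts in its cycle.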

Between 1965 and 1980, significant efforts were put into developing modems capable of even higher transmission rates. These efforts focused on overcoming the various telephone line impairments that directly limited data transmission. In 1965 Robert Lucky at Bell Laboratories developed an automatic adaptive equalizer to compensate for the smearing of data symbols into one another because of imperfect transmission over the telephone circuit. Although the concept of equalization was well known and had been applied to telephone lines and cables for many years, older equalizers were fixed and often manually adjusted. The advent of the automatic equalizer permitted the transmission of data at high rates over the public switched telephone network (PSTN) without any human intervention. Moreover, while adaptive equalization methods compensated for imperfections within the nominal three-kilohertz bandwidth of the voice circuit, advanced modulation methods permitted transmission at still higher data rates over this bandwidth. One important modulation method was quadrature amplitude modulation, or QAM. In QAM, binary digits are conveyed as discrete amplitudes in two phases of the electromagnetic wave, each phase being shifted by 90 degrees with respect to the other. The frequency of the carrier signal was in the range of 1,800 to 2,400 hertz. QAM and adaptive equalization permitted data transmission of 9.6 kilobits per second over four-wire circuits. Further improvements in modem technology followed, so that by 1980 there existed commercially available first-generation modems that could transmit at 14.4 kilobits per second over four-wire leased lines.
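An automatic adaptive equalizer can be sketched as a short digital filter whose tap weights are nudged after every symbol to shrink the error between its output and a known training symbol. This sketch uses the least-mean-squares (LMS) update as a representative adaptive rule; the text does not say which algorithm Lucky's equalizer used. The two-path channel (30 percent of each symbol smearing into the next), the step size, and the tap count are hypothetical.

```python
import random


def smear(symbols, echo=0.3):
    """Hypothetical channel: each symbol leaks a fraction `echo` into the next one."""
    previous = [0.0] + symbols[:-1]
    return [s + echo * p for s, p in zip(symbols, previous)]


def lms_equalize(received, training, num_taps=5, step=0.01):
    """Adapt FIR equalizer taps with the LMS rule so the output tracks the training symbols.

    Returns the final taps and the squared error observed at each symbol.
    """
    taps = [1.0] + [0.0] * (num_taps - 1)   # start as a pass-through filter
    window = [0.0] * num_taps               # latest received samples, newest first
    squared_errors = []
    for sample, wanted in zip(received, training):
        window = [sample] + window[:-1]
        output = sum(w * x for w, x in zip(taps, window))
        error = wanted - output
        # LMS update: move each tap a small step against the gradient of the squared error.
        taps = [w + step * error * x for w, x in zip(taps, window)]
        squared_errors.append(error * error)
    return taps, squared_errors


if __name__ == "__main__":
    rng = random.Random(1)
    sent = [rng.choice((-1.0, 1.0)) for _ in range(3000)]
    taps, errs = lms_equalize(smear(sent), sent)
    print("taps:", [round(w, 3) for w in taps])
    print("mean squared error, first 100 symbols:", sum(errs[:100]) / 100)
    print("mean squared error, last 100 symbols:", sum(errs[-100:]) / 100)
```

With this channel the taps should drift toward its approximate inverse (about 1, -0.3, 0.09, ...), and the error falls as training proceeds, all without manual adjustment.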

The second generation

Beginning in 1980, a concerted effort was made by the International Telegraph and Telephone Consultative Committee (CCITT; a predecessor of the ITU) to define a new standard for modems that would permit full-duplex data transmission at 9.6 kilobits per second over a single-pair circuit operating over the PSTN. Two breakthroughs were required in this effort. First, in order to fit high-speed full-duplex data transmission over a single telephone circuit, echo cancellation technology was required so that the sending modem’s transmitted signal would not be picked up by its own receiver. Second, in order to permit operation of the new standard over unconditioned PSTN circuits, a new form of coded modulation was developed. In coded modulation, error-correcting codes form an integral part of the modulation process, making the signal less susceptible to noise. The first modem standard to incorporate both of these technology breakthroughs was the V.32 standard, issued in 1984. This standard employed a form of coded modulation known as trellis-coded modulation, or TCM. Seven years later an upgraded V.32 standard was issued, permitting 14.4-kilobit-per-second full-duplex data transmission over a single PSTN circuit.

In mid-1990 the CCITT began to consider the possibility of full-duplex transmission over the PSTN at even higher rates than those allowed by the upgraded V.32 standard. This work resulted in the issuance in 1994 of the V.34 modem standard, allowing transmission at 28.8 kilobits per second.

The third generation

The engineering of modems from the Bell 103 to the V.34 standard was based on the assumption that transmission of data over the PSTN meant analog transmission—i.e., that the PSTN was a circuit-switched network employing analog elements. The theoretical maximum capacity of such a network was estimated to be approximately 30 Kbps, so the V.34 standard was about the best that could be achieved by voiceband modems.
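Estimates like the 30-Kbps ceiling are usually derived from the Shannon–Hartley capacity formula, C = B log2(1 + S/N). The text does not state the assumptions behind its figure; the sketch below assumes a usable bandwidth of about 3 kHz and a signal-to-noise ratio of 30 to 35 dB, typical illustrative values for an analog voice channel.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon-Hartley capacity C = B * log2(1 + S/N), with S/N given in decibels."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)


if __name__ == "__main__":
    for snr in (30, 35):
        c = shannon_capacity_bps(3000, snr)
        print(f"3 kHz channel, {snr} dB SNR -> about {c / 1000:.1f} Kbps")
```

No modulation scheme can beat this bound on such a channel, which is why the analog-network assumption capped voiceband modems near V.34 speeds.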

In fact, the PSTN evolved from a purely analog network using analog switches and analog transmission methods to a hybrid network consisting of digital switches, a digital “backbone” (long-distance trunks usually consisting of optical fibres), and an analog “local loop” (the connection from the central office to the customer’s premises). Furthermore, many Internet service providers (ISPs) and other data services access the PSTN over a purely digital connection, usually via a T1 or T3 wire or an optical-fibre cable. With analog transmission occurring in only one local loop, transmission of modem signals at rates higher than 28.8 Kbps is possible. In the mid-1990s several researchers noted that data rates up to 56 Kbps downstream and 33.6 Kbps upstream could be supported over the PSTN without any data compression. This rate for upstream (subscriber to central office) transmissions only required conventional QAM using the V.34 standard. The higher rate in the downstream direction (that is, from central office to subscriber), however, required that the signals undergo “spectral shaping” (altering the frequency domain representation to match the frequency impairments of the channel) in order to minimize attenuation and distortion at low frequencies.
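The 56-Kbps figure is commonly explained by the digital network's own voice format: each telephone channel is carried as pulse-code modulation (PCM) at 8,000 samples per second with 8 bits per sample, or 64 Kbps. A downstream signal that can rely on only about 7 of those bits per sample works out to 56 Kbps. A small arithmetic sketch of that reasoning:

```python
PCM_SAMPLES_PER_SECOND = 8000  # standard telephone-network sampling rate


def pcm_rate_bps(bits_per_sample: int, samples_per_second: int = PCM_SAMPLES_PER_SECOND) -> int:
    """Bit rate of a PCM stream: samples per second times bits per sample."""
    return samples_per_second * bits_per_sample


if __name__ == "__main__":
    print(pcm_rate_bps(8))  # a full digital voice channel
    print(pcm_rate_bps(7))  # about 7 usable bits per sample
```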

In 1998 the ITU adopted the V.90 standard for 56-Kbps modems. Because various regulations and channel impairments can limit actual bit rates, all V.90 modems are “rate adaptive.” Finally, in 2000 the V.92 modem standard was adopted by the ITU, offering improvements in the upstream data rate over the V.90 standard. The V.92 standard made use of the fact that, for dial-up connections to ISPs, the loop is essentially digital. Through the use of a concept known as precoding, which essentially equalizes the channel at the transmitter end rather than at the receiver end, the upstream data rate was increased to above 40 Kbps. The downstream data path in the V.92 standard remained the same 56 Kbps of the V.90 standard.

Cable Modems

A cable modem connects to a cable television system at the subscriber’s premises and enables two-way transmission of data over the cable system, generally to an Internet service provider (ISP). The cable modem is usually connected to a personal computer or router using an Ethernet connection that operates at line speeds of 10 or 100 Mbps. At the “head end,” or central distribution point of the cable system, a cable modem termination system (CMTS) connects the cable television network to the Internet. Because cable modem systems operate simultaneously with cable television systems, the upstream (subscriber to CMTS) and downstream (CMTS to subscriber) frequencies must be selected to prevent interference with the television signals.

Two-way capability was fairly rare in cable services until the mid-1990s, when the popularity of the Internet increased substantially and there was significant consolidation of operators in the cable television industry. Cable modems were introduced into the marketplace in 1995. At first the various products were incompatible with one another, but with the consolidation of cable operators the need for a standard arose. In North and South America a consortium of operators developed the Data Over Cable Service Interface Specification (DOCSIS) in 1997. The DOCSIS 1.0 standard provided basic two-way data service at 27–56 Mbps downstream and up to 3 Mbps upstream for a single user. The first DOCSIS 1.0 modems became available in 1999. The DOCSIS 1.1 standard, released that same year, added voice over Internet protocol (VoIP) capability, thereby permitting telephone communication over cable television systems. DOCSIS 2.0, released in 2002 and standardized by the ITU as J.122, offers improved upstream data rates on the order of 30 Mbps.

All DOCSIS 1.0 cable modems use QAM in a six-megahertz television channel for the downstream. Data is sent continuously and is received by all cable modems on the hybrid coaxial-fibre branch. Upstream data is transmitted in bursts, using either QAM or quadrature phase-shift keying (QPSK) modulation in a two-megahertz channel. In phase-shift keying (PSK), digital signals are transmitted by changing the phase of the carrier signal in accordance with the transmitted information. In binary phase-shift keying, the carrier takes on the phases +90° and −90° to transmit one bit of information; in QPSK, the carrier takes on the phases +45°, +135°, −45°, and −135° to transmit two bits of information. Because a cable branch is a shared channel, all users must share the total available bandwidth. As a result, the actual throughput rate of a cable modem is a function of total traffic on the branch; that is, as more subscribers use the system, total throughput per user is reduced. Cable operators can accommodate greater amounts of data traffic on their networks by reducing the total span of a single fibre-coaxial branch.
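The phase values given above for binary PSK and QPSK can be turned into a small modulator/demodulator. This is a minimal sketch, not a DOCSIS implementation: the assignment of bit patterns to phases is an assumption made for illustration (the QPSK table is Gray-coded so that neighbouring phases differ in one bit), and the helper names `psk_modulate` and `psk_demodulate` are hypothetical. Each symbol is represented as a unit-amplitude complex phasor.

```python
import cmath
import math

# Phases (in degrees) as listed in the text. Which bit pattern gets which
# phase is an assumption for illustration; QPSK_TABLE is Gray-coded.
BPSK_TABLE = {(0,): 90.0, (1,): -90.0}
QPSK_TABLE = {(0, 0): 45.0, (0, 1): 135.0, (1, 1): -135.0, (1, 0): -45.0}


def psk_modulate(bits, table):
    """Map a flat list of bits to unit-amplitude complex carrier phasors."""
    k = len(next(iter(table)))  # bits per symbol: 1 for BPSK, 2 for QPSK
    if len(bits) % k:
        raise ValueError(f"number of bits must be a multiple of {k}")
    return [cmath.exp(1j * math.radians(table[tuple(bits[i:i + k])]))
            for i in range(0, len(bits), k)]


def psk_demodulate(symbols, table):
    """Recover bits by choosing the table phase closest to each received phasor."""
    points = {pattern: cmath.exp(1j * math.radians(deg)) for pattern, deg in table.items()}
    bits = []
    for s in symbols:
        best = min(points, key=lambda pattern: abs(s - points[pattern]))
        bits.extend(best)
    return bits


# Usage: 8 bits become 4 QPSK symbols; a small fixed offset stands in for noise.
data = [0, 0, 1, 1, 1, 0, 0, 1]
tx = psk_modulate(data, QPSK_TABLE)
rx = [s + (0.1 - 0.06j) for s in tx]
assert psk_demodulate(rx, QPSK_TABLE) == data
```

QPSK carries twice as many bits per symbol as binary PSK at the same symbol rate, which is why it is attractive in the narrow upstream channel; the cost is that its constellation points are closer together, so less noise is needed to cause an error.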

DSL Modems

In the section Development of voiceband modems, it is noted that the maximum data rate that can be transmitted over the local telephone loop is about 56 Kbps. This assumes that the local loop is to be used only for direct access to the long-distance PSTN. However, if digital information is intended to be switched not through the telephone network but rather over other networks, then much higher data rates may be transmitted over the local loop using purely digital methods. These purely digital methods are known collectively as digital subscriber line (DSL) systems. DSL systems carry digital signals over the twisted-pair local loop using methods analogous to those used in the T1 digital carrier system to transmit 1.544 Mbps in one direction through the telephone network.

The first DSL was the Integrated Services Digital Network (ISDN), developed during the 1980s. In ISDN systems a 160-Kbps signal is transmitted over the local loop using a four-level signal format known as 2B1Q, for “two bits per quaternary signal.” The 160-Kbps signal is broken into two “B” channels of 64 Kbps each, one “D” channel of 16 Kbps, and one signaling channel of 16 Kbps to permit both ends of the ISDN local loop to be initialized and synchronized. ISDN systems are deployed in many parts of the world. In many cases they are used to provide digital telephone services, although these systems may also provide 64-Kbps or 128-Kbps access to the Internet with the use of an adapter card. However, because such data rates are not significantly higher than those offered by 56-Kbps V.90 voiceband modems, ISDN is not widely used for Internet access.
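The 2B1Q line code and the ISDN channel budget described above can be sketched in a few lines. The level table used here (first bit gives the sign, second bit the magnitude: 10 → +3, 11 → +1, 01 → −1, 00 → −3) is the mapping as commonly tabulated for the ISDN U-interface; treat it as an assumption, and the function names as hypothetical. Because each quaternary symbol carries two bits, the 160-Kbps line rate corresponds to 80,000 symbols per second.

```python
# Assumed 2B1Q level table: first bit = sign (1 is positive),
# second bit = magnitude (0 -> 3, 1 -> 1).
LEVELS = {(1, 0): +3, (1, 1): +1, (0, 1): -1, (0, 0): -3}
BITS_FOR_LEVEL = {level: pair for pair, level in LEVELS.items()}


def encode_2b1q(bits):
    """Turn pairs of bits into quaternary line levels (one symbol per 2 bits)."""
    if len(bits) % 2:
        raise ValueError("2B1Q needs an even number of bits")
    return [LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def decode_2b1q(samples):
    """Snap each received sample to the nearest legal level and emit its bit pair."""
    bits = []
    for v in samples:
        nearest = min(BITS_FOR_LEVEL, key=lambda level: abs(v - level))
        bits.extend(BITS_FOR_LEVEL[nearest])
    return bits


# Channel budget from the text, in Kbps.
B_CHANNEL_KBPS = 64
D_CHANNEL_KBPS = 16
SIGNALING_KBPS = 16
LINE_RATE_KBPS = 2 * B_CHANNEL_KBPS + D_CHANNEL_KBPS + SIGNALING_KBPS  # 160
SYMBOL_RATE_KBAUD = LINE_RATE_KBPS // 2  # two bits per symbol -> 80

print(LINE_RATE_KBPS, SYMBOL_RATE_KBAUD)  # 160 80
print(encode_2b1q([1, 0, 1, 1, 0, 1, 0, 0]))  # [3, 1, -1, -3]
```

Halving the symbol rate relative to the bit rate is the point of a four-level code: the signal occupies less bandwidth on the copper pair than a two-level code carrying the same data would.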

High-bit-rate DSL, or HDSL, was developed in about 1990, employing some of the same technology as ISDN. HDSL uses 2B1Q modulation to transmit up to 1.544 Mbps over two twisted-pair lines. In practice, HDSL systems are used to provide users with low-cost T1-type access to the telephone central office. Both ISDN and HDSL systems are symmetric; i.e., the upstream and downstream data rates are identical.

Asymmetric DSL, or ADSL, was developed in the early 1990s, originally for video-on-demand services over the telephone local loop. Unlike HDSL or ISDN, ADSL is designed to provide higher data rates downstream than upstream, hence the designation “asymmetric.” In general, downstream rates range from 1.5 to 9 Mbps and upstream rates from 16 to 640 Kbps over a single twisted pair. ADSL systems are currently most often used for high-speed access to an Internet service provider (ISP), with regular telephone service provided simultaneously over the same line. At the local telephone office, a DSL access multiplexer, or DSLAM, statistically multiplexes the data packets transmitted over the ADSL system in order to provide a more efficient link to the Internet. At the customer’s premises, an ADSL modem usually provides one or more Ethernet jacks capable of line rates of either 10 Mbps or 100 Mbps.

In 1999 the ITU standardized two ADSL systems. The first system, designated G.992.1 or G.DMT, specifies data delivery at rates up to 8 Mbps on the downstream and 864 Kbps on the upstream. The modulation method is known as discrete multitone (DMT), a method in which data is sent over a large number of small individual carriers, each of which is modulated using QAM (described above in Development of voiceband modems). By varying the number of carriers actually used, DMT modulation can be made rate-adaptive to suit the channel conditions. G.992.1 systems require a “splitter” at the customer’s premises to filter and separate the analog voice channel from the high-speed data channel. Because the splitter usually has to be installed by a technician, a second ADSL standard, variously known as G.992.2, G.lite, or splitterless ADSL, was developed to avoid this expense. This second standard also uses DMT modulation, but at lower maximum rates (about 1.5 Mbps downstream and 512 Kbps upstream). In place of the splitter, user-installable filters are required for each telephone set in the home.
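The rate-adaptive behaviour of DMT comes from deciding, carrier by carrier, how many QAM bits each one can carry. The sketch below is illustrative only: it assumes the per-carrier signal-to-noise ratio (SNR) is already known, uses the common SNR-gap rule of thumb b = floor(log2(1 + SNR/Γ)) rather than any procedure quoted from the ADSL standards, and the constants (symbol rate, gap, per-carrier cap, minimum bits) are assumed, ADSL-like values. Function names are hypothetical.

```python
import math

# Assumed, ADSL-like parameters (illustrative, not quoted from a standard).
SYMBOL_RATE = 4000   # DMT symbols per second
GAP_DB = 9.8         # SNR gap for uncoded QAM at a low error rate
MAX_BITS = 15        # cap on bits per carrier
MIN_BITS = 2         # carriers that cannot carry this many bits are switched off


def bits_for_tone(snr_db, gap_db=GAP_DB, max_bits=MAX_BITS):
    """Bits one QAM carrier can carry, via b = floor(log2(1 + SNR / gap))."""
    snr_over_gap = 10 ** ((snr_db - gap_db) / 10)
    bits = math.floor(math.log2(1 + snr_over_gap))
    return min(bits, max_bits)


def dmt_rate(tone_snrs_db, symbol_rate=SYMBOL_RATE, min_bits=MIN_BITS):
    """Return the per-carrier bit loading and the resulting line rate in bit/s."""
    loading = []
    for snr_db in tone_snrs_db:
        b = bits_for_tone(snr_db)
        loading.append(b if b >= min_bits else 0)  # 0 means the carrier is unused
    return loading, sum(loading) * symbol_rate


# A cleaner line versus a noisier one: the same code picks a lower rate
# when every carrier's SNR drops, which is the rate-adaptive behaviour.
good_line = [45 - 0.1 * k for k in range(200)]
bad_line = [snr - 12 for snr in good_line]
print(dmt_rate(good_line)[1], dmt_rate(bad_line)[1])
```

Carriers in noisy parts of the spectrum simply receive fewer bits or none, so a long or noisy loop still works, only at a lower total rate.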

Unlike cable modems, ADSL modems use a dedicated telephone line between the customer and the central office, so the bandwidth is not shared with other subscribers. However, ADSL systems may be installed only on local loops less than 5,400 metres (18,000 feet) long and therefore are not available to homes located farther from a central office. Other versions of DSL have been announced to provide even higher data rates over shorter local loops. For instance, very high data rate DSL, or VDSL, can provide up to 15 Mbps over a single twisted pair up to 1,500 metres (5,000 feet) long.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1134 2021-09-06 02:43:30

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,966

Re: Miscellany

1111) Celestial mechanics

Celestial mechanics is the branch of astronomy that deals with the motions of objects in outer space. Historically, celestial mechanics applies principles of physics (classical mechanics) to astronomical objects, such as stars and planets, to produce ephemeris data.

History

Modern analytic celestial mechanics started with Isaac Newton's Principia of 1687. The name "celestial mechanics" is more recent than that. Newton wrote that the field should be called "rational mechanics." The term "dynamics" came in a little later with Gottfried Leibniz, and over a century after Newton, Pierre-Simon Laplace introduced the term "celestial mechanics." Prior to Kepler there was little connection between exact, quantitative prediction of planetary positions, using geometrical or arithmetical techniques, and contemporary discussions of the physical causes of the planets' motion.

Johannes Kepler

Johannes Kepler (1571–1630) was the first to closely integrate the predictive geometrical astronomy, which had been dominant from Ptolemy in the 2nd century to Copernicus, with physical concepts to produce a ‘New Astronomy, Based upon Causes, or Celestial Physics’ in 1609. His work led to the modern laws of planetary orbits, which he developed using his physical principles and the planetary observations made by Tycho Brahe. Kepler's model greatly improved the accuracy of predictions of planetary motion, years before Isaac Newton developed his law of gravitation in 1686.

Isaac Newton

Isaac Newton (25 December 1642–31 March 1727) is credited with introducing the idea that the motion of objects in the heavens, such as planets, the Sun, and the Moon, and the motion of objects on the ground, like cannonballs and falling apples, could be described by the same set of physical laws. In this sense he unified celestial and terrestrial dynamics. With Newton's law of universal gravitation, Kepler's laws are simple to prove for a circular orbit. Elliptical orbits involve more complex calculations, which Newton included in his Principia.
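To show why the circular case is simple, here is a short derivation of Kepler's third law, assuming a small body of mass m moving in a circle of radius r with period T around a much larger mass M that is treated as fixed. Gravity supplies the centripetal force, and the orbital speed is the circumference divided by the period:

```latex
\frac{G M m}{r^{2}} = \frac{m v^{2}}{r}, \qquad v = \frac{2\pi r}{T}
\;\Longrightarrow\; \frac{G M}{r^{2}} = \frac{4\pi^{2} r}{T^{2}}
\;\Longrightarrow\; T^{2} = \frac{4\pi^{2}}{G M}\, r^{3}
```

The square of the period is proportional to the cube of the orbital radius, with the same constant for every body orbiting the same M.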

Joseph-Louis Lagrange

After Newton, Lagrange (25 January 1736–10 April 1813) attempted to solve the three-body problem, analyzed the stability of planetary orbits, and discovered the existence of the Lagrangian points. Lagrange also reformulated the principles of classical mechanics, emphasizing energy more than force, and developed a method that uses a single polar-coordinate equation to describe any orbit, even parabolic and hyperbolic ones. This is useful for calculating the behaviour of planets and comets; more recently, it has also become useful for calculating spacecraft trajectories.
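A single polar equation covering every orbit type is usually written today as r = p / (1 + e cos θ), where r is the distance from the central body at one focus, θ the angle from closest approach, p the semi-latus rectum, and e the eccentricity. The sketch below uses that modern form; it is not a claim about Lagrange's own notation, and the function names are hypothetical. The same formula gives a circle (e = 0), an ellipse (0 < e < 1), a parabola (e = 1), or a hyperbola (e > 1).

```python
import math


def orbit_type(e):
    """Classify a conic orbit by its eccentricity e."""
    if e < 0:
        raise ValueError("eccentricity cannot be negative")
    if e == 0:
        return "circle"
    if e < 1:
        return "ellipse"
    if e == 1:
        return "parabola"
    return "hyperbola"


def orbit_radius(p, e, theta):
    """Distance from the focus at angle theta (radians): r = p / (1 + e*cos(theta))."""
    denom = 1 + e * math.cos(theta)
    if denom <= 0:
        # Only open orbits (e >= 1) have directions they never reach.
        raise ValueError("the orbit never reaches this direction")
    return p / denom


# Same equation, four kinds of orbit (p = 1 in arbitrary length units);
# at theta = 0 every orbit is at its closest approach, r = p / (1 + e).
for e in (0.0, 0.5, 1.0, 2.0):
    print(orbit_type(e), round(orbit_radius(1.0, e, 0.0), 3))
```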

Simon Newcomb

Simon Newcomb (12 March 1835–11 July 1909) was a Canadian-American astronomer who revised Peter Andreas Hansen's table of lunar positions. In 1877, assisted by George William Hill, he recalculated all the major astronomical constants. After 1884, he conceived with A. M. W. Downing a plan to resolve much of the international confusion on the subject. By the time he attended a standardisation conference in Paris, France, in May 1896, the international consensus was that all ephemerides should be based on Newcomb's calculations. A further conference as late as 1950 confirmed Newcomb's constants as the international standard.

Albert Einstein

Albert Einstein (14 March 1879–18 April 1955) explained the anomalous precession of Mercury's perihelion in his 1916 paper ‘The Foundation of the General Theory of Relativity’. This led astronomers to recognize that Newtonian mechanics did not provide the highest accuracy. Binary pulsars have since been observed, the first in 1974. Their orbits require General Relativity for their explanation, and the evolution of those orbits demonstrates the existence of gravitational radiation, a discovery that led to the 1993 Nobel Prize in Physics.
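The size of the anomaly Einstein explained can be checked with the standard first-order general-relativistic formula for the perihelion advance per orbit, Δφ = 6πGM / (c² a (1 − e²)), where a and e are the orbit's semi-major axis and eccentricity. This is a minimal sketch; the values of the Sun's GM and of Mercury's orbital elements are rounded reference figures assumed here, not taken from the text.

```python
import math

# Assumed, rounded reference values (SI units unless noted).
GM_SUN = 1.32712440018e20   # Sun's gravitational parameter, m^3 s^-2
C = 299_792_458.0           # speed of light, m/s
A_MERCURY = 5.7909e10       # Mercury's semi-major axis, m
E_MERCURY = 0.2056          # Mercury's orbital eccentricity
PERIOD_DAYS = 87.969        # Mercury's orbital period, days


def perihelion_shift_per_orbit(gm, a, e, c=C):
    """First-order GR perihelion advance per orbit, in radians."""
    return 6 * math.pi * gm / (c ** 2 * a * (1 - e ** 2))


def arcsec_per_century(gm, a, e, period_days):
    """Accumulate the per-orbit shift over one Julian century (36,525 days)."""
    orbits = 36525.0 / period_days
    total_rad = perihelion_shift_per_orbit(gm, a, e) * orbits
    return math.degrees(total_rad) * 3600


print(round(arcsec_per_century(GM_SUN, A_MERCURY, E_MERCURY, PERIOD_DAYS), 1))
```

The result lands close to the roughly 43 arcseconds per century of anomalous precession that Newtonian perturbations from the other planets could not account for.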

Examples of problems

Celestial motion, in the absence of additional forces such as the thrust of a rocket, is governed by the gravitational acceleration of masses due to other masses. A common simplification is the n-body problem, which assumes some number n of spherically symmetric masses. In that case, the integration of the accelerations can be well approximated by relatively simple summations.

Examples:

• 4-body problem: spaceflight to Mars (for parts of the flight the influence of one or two bodies is very small, so that there we have a 2- or 3-body problem; see also the patched conic approximation)

• 3-body problem:

o Quasi-satellite

o Spaceflight to, and stay at, a Lagrangian point
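The summation behind the n-body simplification can be sketched directly: each body's acceleration is the sum, over all other bodies, of G mⱼ (rⱼ − rᵢ) / |rⱼ − rᵢ|³. The sketch below computes that sum by brute force (about n² pair evaluations) and advances the system with one kick-drift-kick leapfrog step. The leapfrog integrator is one common choice assumed here for illustration, not something the text prescribes, and the function names are hypothetical.

```python
import math

G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2 (rounded)


def accelerations(masses, positions):
    """Acceleration of every body from all others, by direct pairwise summation."""
    n = len(masses)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            d = [positions[j][k] - positions[i][k] for k in range(3)]
            r = math.sqrt(sum(c * c for c in d))
            f = G * masses[j] / r ** 3
            for k in range(3):
                acc[i][k] += f * d[k]
    return acc


def leapfrog_step(masses, pos, vel, dt):
    """Advance positions and velocities by dt with a kick-drift-kick leapfrog step."""
    a0 = accelerations(masses, pos)
    vel_half = [[v[k] + 0.5 * dt * a[k] for k in range(3)] for v, a in zip(vel, a0)]
    new_pos = [[p[k] + dt * v[k] for k in range(3)] for p, v in zip(pos, vel_half)]
    a1 = accelerations(masses, new_pos)
    new_vel = [[v[k] + 0.5 * dt * a[k] for k in range(3)] for v, a in zip(vel_half, a1)]
    return new_pos, new_vel


# Usage: two equal masses released from rest 2 m apart start falling together.
pos, vel = leapfrog_step([1e10, 1e10],
                         [[-1.0, 0.0, 0.0], [1.0, 0.0, 0.0]],
                         [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]], dt=1.0)
print(pos[0][0], pos[1][0])
```

Leapfrog is popular for orbits because it is time-reversible and keeps energy errors bounded over long runs, though any numerical integrator only approximates the true motion.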

In the case n = 2 (the two-body problem), the situation is much simpler than for larger n. Various explicit formulas apply, whereas in the more general case typically only numerical solutions are possible. It is a useful simplification that is often approximately valid.

Examples:

• A binary star, e.g., Alpha Centauri (approx. the same mass)

• A binary asteroid, e.g., 90 Antiope (approx. the same mass)
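As an example of the explicit formulas available when n = 2, both bodies orbit their common centre of mass (barycentre), each at a distance inversely proportional to its mass, and the period follows from Kepler's third law using the combined mass. This is a minimal sketch with rounded constants; the pair of equal one-solar-mass stars separated by 1 AU is hypothetical, not data for any system listed above.

```python
import math

G = 6.674e-11           # gravitational constant, m^3 kg^-1 s^-2 (rounded)
M_SUN = 1.989e30        # solar mass, kg (rounded)
AU = 1.495978707e11     # astronomical unit, m
DAY = 86400.0           # seconds per day


def two_body(m1, m2, a):
    """Explicit two-body results for a relative orbit of semi-major axis a (m).

    Returns each body's semi-major axis about the barycentre and the orbital
    period from Kepler's third law with mu = G * (m1 + m2).
    """
    total = m1 + m2
    a1 = a * m2 / total   # the heavier body stays closer to the barycentre
    a2 = a * m1 / total
    period = 2 * math.pi * math.sqrt(a ** 3 / (G * total))
    return a1, a2, period


# Hypothetical pair of equal one-solar-mass stars separated by 1 AU:
a1, a2, period = two_body(M_SUN, M_SUN, AU)
print(a1 / AU, a2 / AU, period / DAY)
```

With equal masses each star swings around a point midway between them, and doubling the total mass shortens the period by a factor of √2 compared with a single solar mass.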

A further simplification is based on the "standard assumptions in astrodynamics", which include that one body, the orbiting body, is much smaller than the other, the central body. This is also often approximately valid.

Examples:

• Solar system orbiting the center of the Milky Way

• A planet orbiting the Sun

• A moon orbiting a planet

• A spacecraft orbiting Earth, a moon, or a planet (in the latter cases the approximation only applies after arrival at that orbit)
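Under the standard assumptions the orbiting body's own mass drops out, so a circular orbit is fixed entirely by the central body's gravitational parameter μ = GM and the orbital radius: speed v = √(μ/r) and period T = 2π√(r³/μ). This sketch applies that to a spacecraft around Earth, assuming the standard values μ = 398,600.4418 km³/s² and an equatorial radius of 6,378.137 km; the function name and the 400 km altitude are illustrative choices.

```python
import math

MU_EARTH = 398_600.4418   # Earth's GM, km^3/s^2
R_EARTH = 6378.137        # Earth's equatorial radius, km


def circular_orbit(altitude_km, mu=MU_EARTH, r_body=R_EARTH):
    """Speed (km/s) and period (s) of a circular orbit at the given altitude.

    The spacecraft's mass does not appear: it is assumed negligible
    compared with the central body's.
    """
    r = r_body + altitude_km
    speed = math.sqrt(mu / r)
    period = 2 * math.pi * math.sqrt(r ** 3 / mu)
    return speed, period


speed, period = circular_orbit(400.0)
print(f"{speed:.2f} km/s, {period / 60:.1f} min")
```

Higher orbits are slower and take longer, which is why the same formula also describes a moon orbiting a planet once μ is swapped for the planet's value.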

Perturbation theory

Perturbation theory comprises mathematical methods that are used to find an approximate solution to a problem which cannot be solved exactly. (It is closely related to methods used in numerical analysis, which are ancient.) The earliest use of modern perturbation theory was to deal with the otherwise unsolvable mathematical problems of celestial mechanics: Newton's solution for the orbit of the Moon, which moves noticeably differently from a simple Keplerian ellipse because of the competing gravitation of the Earth and the Sun.