Math Is Fun Forum


#1176 2021-11-01 00:35:51

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,952

Re: Miscellany

1153) Scarecrow

Scarecrow, device posted on cultivated ground to deter birds or other animals from eating or otherwise disturbing seeds, shoots, and fruit; its name derives from its use against the crow. The scarecrow of popular tradition is a mannequin stuffed with straw; free-hanging, often reflective parts movable by the wind are commonly attached to increase effectiveness. A scarecrow outfitted in clothes previously worn by a hunter who has fired on the flock is regarded by some as especially efficacious. A common variant is the effigy of a predator (e.g., an owl or a snake).

The function of the scarecrow is sometimes filled by various audio devices, including recordings of the calls or sounds of predators or noisome insects. Recorded sounds of deerflies in flight, for example, are used to deter deer from young tree plantations. Automatically fired carbide cannons and other simulated gunfire are used to keep migrating geese out of cornfields.

A scarecrow is a decoy or mannequin, often in the shape of a human. Humanoid scarecrows are usually dressed in old clothes and placed in open fields to discourage birds from disturbing and feeding on recently cast seed and growing crops. Scarecrows are used across the world by farmers, and are a notable symbol of farms and the countryside in popular culture.

Design

The common form of a scarecrow is a humanoid figure dressed in old clothes and placed in open fields to discourage birds such as crows or sparrows from disturbing and feeding on recently cast seed and growing crops. Machinery such as windmills has been employed as scarecrows, but its effectiveness lessens as animals become familiar with the structures.

Since the invention of the humanoid scarecrow, more effective methods have been developed. On California farmland, highly reflective aluminized PET (polyethylene terephthalate) film ribbons are tied to the plants to produce shimmers from the sun. Another approach is using automatic noise guns powered by propane gas. One winery in New York has even used inflatable tube men or airdancers to scare away birds.

Festivals

In England, the Urchfont Scarecrow Festival was established in the 1990s and has become a major local event, attracting up to 10,000 people annually for the May Day Bank Holiday. Originally based on an idea imported from Derbyshire, it was the first Scarecrow Festival to be established in the whole of southern England.

Belbroughton, north Worcestershire, has held an annual Scarecrow Weekend on the last weekend of September since 1996, raising money for local charities. The village of Meerbrook in Staffordshire holds an annual Scarecrow Festival in May. Tetford and Salmonby, Lincolnshire, jointly host one.

The festival at Wray, Lancashire, was established in the early 1990s and continues to the present day. In the village of Orton, Eden, Cumbria, scarecrows are displayed each year, often on topical themes, such as a Dalek exterminating a wind turbine to represent local opposition to a wind farm.

The village of Blackrod, near Bolton in Greater Manchester, holds a popular annual Scarecrow Festival over a weekend usually in early July.

Norland, West Yorkshire, has a Scarecrow festival. Kettlewell in North Yorkshire has held an annual festival since 1994. In Teesdale, County Durham, the villages of Cotherstone, Staindrop and Middleton-in-Teesdale have annual scarecrow festivals.

Scotland's first scarecrow festival was held in West Kilbride, North Ayrshire, in 2004, and there is also one held in Montrose. On the Isle of Skye, the Tattie bogal event is held each year, featuring a scarecrow trail and other events. Tonbridge in Kent also hosts an annual Scarecrow Trail, organised by the local Rotary Club to raise money for local charities. Gisburn, Lancashire, held its first Scarecrow Festival in June 2014.

Mullion, in Cornwall, has held an annual scarecrow festival since 2007.

In the US, St. Charles, Illinois, hosts an annual Scarecrow Festival. Peddler's Village in Bucks County, Pennsylvania, hosts an annual scarecrow festival and presents a scarecrow display in September–October that draws tens of thousands of visitors.

The 'pumpkin people' come in the autumn months in the valley region of Nova Scotia, Canada. They are scarecrows with pumpkin heads doing various things such as playing the fiddle or riding a wooden horse. Hickling, in the south of Nottinghamshire, is another village that celebrates an annual scarecrow event. It is very popular and has successfully raised a great deal of money for charity. Meaford, Ontario, has celebrated the Scarecrow Invasion since 1996.

In the Philippines, the Province of Isabela has recently started a scarecrow festival with a name taken from the local language: the Bambanti Festival. The province invites all its cities and towns to participate in the festivities, which last a week; it has drawn tourists from around the island of Luzon.

The largest gathering of scarecrows in one location is 3,812 and was achieved by National Forest Adventure Farm in Burton-upon-Trent, Staffordshire, UK, on 7 August 2014.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1177 2021-11-02 00:21:25

- Jai Ganesh

1154) Pathology

Pathology, medical specialty concerned with determining the causes of disease and the structural and functional changes occurring in abnormal conditions. Early efforts to study pathology were often stymied by religious prohibitions against autopsies, but these gradually relaxed during the late Middle Ages, allowing autopsies to determine the cause of death, the basis for pathology. The resultant accumulating anatomical information culminated in the publication of the first systematic textbook of morbid anatomy by the Italian Giovanni Battista Morgagni in 1761, which located diseases within individual organs for the first time. The correlation between clinical symptoms and pathological changes was not made until the first half of the 19th century.

The existing humoral theories of pathology were replaced by a more scientific cellular theory; Rudolf Virchow in 1858 argued that the nature of disease could be understood by means of the microscopic analysis of affected cells. The bacteriologic theory of disease developed late in the 19th century by Louis Pasteur and Robert Koch provided the final clue to understanding many disease processes.

Pathology as a separate specialty was fairly well established by the end of the 19th century. The pathologist does much of his work in the laboratory and reports to and consults with the clinical physician who directly attends to the patient. The types of laboratory specimens examined by the pathologist include surgically removed body parts, blood and other body fluids, urine, feces, exudates, etc. Pathology practice also includes the reconstruction of the last chapter of the physical life of a deceased person through the procedure of autopsy, which provides valuable and otherwise unobtainable information concerning disease processes. The knowledge required for the proper general practice of pathology is too great to be attainable by single individuals, so wherever conditions permit it, subspecialists collaborate. Among the laboratory subspecialties in which pathologists work are neuropathology, pediatric pathology, general surgical pathology, dermatopathology, and forensic pathology.

Microbial cultures for the identification of infectious disease, simpler access to internal organs for biopsy through the use of glass fibre-optic instruments, finer definition of subcellular structures with the electron microscope, and a wide array of chemical stains have greatly expanded the information available to the pathologist in determining the causes of disease. Formal medical education with the attainment of an M.D. degree or its equivalent is required prior to admission to pathology postgraduate programs in many Western countries. The program required for board certification as a pathologist roughly amounts to five years of postgraduate study and training.

Pathology is the study of the causes and effects of disease or injury. The word pathology also refers to the study of disease in general, incorporating a wide range of biology research fields and medical practices. However, when used in the context of modern medical treatment, the term is often used in a more narrow fashion to refer to processes and tests which fall within the contemporary medical field of "general pathology", an area which includes a number of distinct but inter-related medical specialties that diagnose disease, mostly through analysis of tissue, cell, and body fluid samples. Idiomatically, "a pathology" may also refer to the predicted or actual progression of particular diseases (as in the statement "the many different forms of cancer have diverse pathologies", in which case a more proper choice of word would be "pathophysiologies"), and the affix pathy is sometimes used to indicate a state of disease in cases of both physical ailment (as in cardiomyopathy) and psychological conditions (such as psychopathy). A physician practicing pathology is called a pathologist.

As a field of general inquiry and research, pathology addresses components of disease: cause, mechanisms of development (pathogenesis), structural alterations of cells (morphologic changes), and the consequences of changes (clinical manifestations). In common medical practice, general pathology is mostly concerned with analyzing known clinical abnormalities that are markers or precursors for both infectious and non-infectious disease, and is conducted by experts in one of two major specialties, anatomical pathology and clinical pathology. Further divisions in specialty exist on the basis of the involved sample types (comparing, for example, cytopathology, hematopathology, and histopathology), organs (as in renal pathology), and physiological systems (oral pathology), as well as on the basis of the focus of the examination (as with forensic pathology).

Pathology is a significant field in modern medical diagnosis and medical research.

Pathology is the medical specialty concerned with the study of the nature and causes of diseases. It underpins every aspect of medicine, from diagnostic testing and monitoring of chronic diseases to cutting-edge genetic research and blood transfusion technologies. Pathology is integral to the diagnosis of every cancer.

Pathology plays a vital role across all facets of medicine throughout our lives, from pre-conception to post mortem. In fact it has been said that "Medicine IS Pathology".

Due to the popularity of many television programs, the word ‘pathology’ conjures images of dead bodies and people in lab coats investigating the cause of suspicious deaths for the police. That's certainly a side of pathology, but in fact it’s far more likely that pathologists are busy in a hospital clinic or laboratory helping living people.

Pathologists are specialist medical practitioners who study the cause of disease and the ways in which diseases affect our bodies by examining changes in the tissues and in blood and other body fluids. Some of these changes show the potential to develop a disease, while others show its presence, cause or severity or monitor its progress or the effects of treatment.

The doctors you see in surgery or at a clinic all depend on the knowledge, diagnostic skills and advice of pathologists. Whether it’s a GP arranging a blood test or a surgeon wanting to know the nature of the lump removed at operation, the definitive answer is usually provided by a pathologist. Some pathologists also see patients and are involved directly in the day-to-day delivery of patient care.

Currently pathology has nine major areas of activity. These relate to either the methods used or the types of disease which they investigate.

* Anatomical Pathology

* Chemical Pathology

* Clinical Pathology

* Forensic Pathology

* General Pathology

* Genetic Pathology

* Hematology

* Immuno Pathology

* Microbiology


#1178 2021-11-02 22:04:30

- Jai Ganesh

1155) Otolaryngology

Otolaryngology, also called otorhinolaryngology, medical specialty concerned with the diagnosis and treatment of diseases of the ear, nose, and throat. Traditionally, treatment of the ear was associated with that of the eye in medical practice. With the development of laryngology in the late 19th century, the connection between the ear and throat became known, and otologists became associated with laryngologists.

The study of ear diseases did not develop a clearly scientific basis until the first half of the 19th century, when Jean-Marc-Gaspard Itard and Prosper Ménière made ear physiology and disease a matter of systematic investigation. The scientific basis of the specialty was first formulated by William R. Wilde of Dublin, who in 1853 published Practical Observations on Aural Surgery, and the Nature and Treatment of Diseases of the Ear. Further advances were made with the development of the otoscope, an instrument that enabled visual examination of the tympanic membrane (eardrum).

The investigation of the larynx and its diseases, meanwhile, was aided by a device that was invented in 1855 by Manuel García, a Spanish singing teacher. This instrument, the laryngoscope, was adopted by Ludwig Türck and Jan Czermak, who undertook detailed studies of the pathology of the larynx; Czermak also turned the laryngoscope’s mirror upward to investigate the physiology of the nasopharyngeal cavity, thereby establishing an essential link between laryngology and rhinology. One of Czermak’s assistants, Friedrich Voltolini, improved laryngoscopic illumination and also adapted the instrument for use with the otoscope.

In 1921 Carl Nylen pioneered the use of a high-powered binocular microscope to perform ear surgery; the operating microscope opened the way to several new corrective procedures on the delicate structures of the ear. Another important 20th-century achievement was the development in the 1930s of the electric audiometer, an instrument used to measure hearing acuity.

Otorhinolaryngology

Otorhinolaryngology, abbreviated ORL and also known as otolaryngology, otolaryngology – head and neck surgery, or ear, nose, and throat (ENT), is a surgical subspecialty within medicine that deals with the surgical and medical management of conditions of the head and neck. Doctors who specialize in this area are called otorhinolaryngologists, otolaryngologists, head and neck surgeons, or ENT surgeons or physicians. Patients seek treatment from an otorhinolaryngologist for diseases of the ear, nose, throat, base of the skull, head, and neck. These commonly include functional diseases that affect the senses and activities of eating, drinking, speaking, breathing, swallowing, and hearing. In addition, ENT surgery encompasses the surgical management and reconstruction of cancers and benign tumors of the head and neck as well as plastic surgery of the face and neck.

Training

Otorhinolaryngologists are physicians (MD, DO, MBBS, MBChB, etc.) who complete medical school and then 5–7 years of post-graduate surgical training in ORL-H&N. In the United States, trainees complete at least five years of surgical residency training. This comprises three to six months of general surgical training and four and a half years in ORL-H&N specialist surgery. In Canada and the United States, practitioners complete a five-year residency training after medical school.

Following residency training, some otolaryngologist-head & neck surgeons complete an advanced sub-specialty fellowship, where training can be one to two years in duration. Fellowships include head and neck surgical oncology, facial plastic surgery, rhinology and sinus surgery, neuro-otology, pediatric otolaryngology, and laryngology. In the United States and Canada, otorhinolaryngology is one of the most competitive specialties in medicine in which to obtain a residency position following medical school.

In the United Kingdom entrance to otorhinolaryngology higher surgical training is highly competitive and involves a rigorous national selection process. The training programme consists of 6 years of higher surgical training after which trainees frequently undertake fellowships in a sub-speciality prior to becoming a consultant.

The typical total length of education and training after secondary school is 12–14 years. Otolaryngology is among the more highly compensated surgical specialties in the United States (average annual income of $461,000 in 2019).

What Is an Otolaryngologist?

If you have a health problem with your head or neck, your doctor might recommend that you see an otolaryngologist. That's someone who treats issues in your ears, nose, or throat as well as related areas in your head and neck. They're called ENTs for short.

In the 19th century, doctors figured out that the ears, nose, and throat are closely connected by a system of tubes and passages. They made special tools to take a closer look at those areas and came up with ways to treat problems. A new medical specialty was born.

What Conditions Do Otolaryngologists Treat?

ENTs can do surgery and treat many different medical conditions. You would see one if you have a problem involving:

* An ear condition, such as an infection, hearing loss, or trouble with balance

* Nose and nasal issues like allergies, sinusitis, or growths

* Throat problems like tonsillitis, difficulty swallowing, and voice issues

* Sleep trouble like snoring or obstructive sleep apnea, in which your airway is narrow or blocked and it interrupts your breathing while you sleep

* Infections or tumors (cancerous or not) of your head or neck

Some areas of your head are treated by other kinds of doctors. For example, neurologists deal with problems with your brain or nervous system, and ophthalmologists care for your eyes and vision.

How Are ENT Doctors Trained?

Otolaryngologists go to 4 years of medical school. They then have at least 5 years of special training. Finally, they need to pass an exam to be certified by the American Board of Otolaryngology.

Some also get 1 or 2 years of training in a subspecialty:

* Allergy: These doctors treat environmental allergies (like pollen or pet dander) with medicine or a series of shots called immunotherapy. They also can help you find out if you have a food allergy.

* Facial and reconstructive surgery: These doctors do cosmetic surgery like face lifts and nose jobs. They also help people whose looks have been changed by an accident or who were born with issues that need to be fixed.

* Head and neck: If you have a tumor in your nose, sinuses, mouth, throat, voice box, or upper esophagus, this kind of specialist can help you.

* Laryngology: These doctors treat diseases and injuries that affect your voice box (larynx) and vocal cords. They also can help diagnose and treat swallowing problems.

* Otology and neurotology: If you have any kind of issue with your ears, these specialists can help. They treat conditions like infections, hearing loss, dizziness, and ringing or buzzing in your ears (tinnitus).

* Pediatric ENT: Your child might not be able to tell their doctor what's bothering them. Pediatric ENTs are specially trained to treat youngsters, and they have tools and exam rooms designed to put kids at ease.

Common problems include ear infections, tonsillitis, asthma, and allergies. Pediatric ENTs also care for children with birth defects of the head and neck. They also can help figure out if your child has a speech or language problem.

* Rhinology: These doctors focus on your nose and sinuses. They treat sinusitis, nose bleeds, loss of smell, stuffy nose, and unusual growths.

* Sleep medicine: Some ENTs specialize in sleep problems that involve your breathing, for instance snoring or sleep apnea. Your doctor may order a sleep study to see if you have trouble breathing at times during the night.


#1179 2021-11-03 20:35:54

- Jai Ganesh

1156) Auscultation

Auscultation, diagnostic procedure in which the physician listens to sounds within the body to detect certain defects or conditions, such as heart-valve malfunctions or pregnancy. Auscultation originally was performed by placing the ear directly on the chest or abdomen, but it has been practiced mainly with a stethoscope since the invention of that instrument in 1819.

The technique is based on characteristic sounds produced, in the head and elsewhere, by abnormal blood circuits; in the joints by roughened surfaces; in the lower arm by the pulse wave; and in the abdomen by an active fetus or by intestinal disturbances. It is most commonly employed, however, in diagnosing diseases of the heart and lungs.

The heart sounds consist mainly of two separate noises occurring when the two sets of heart valves close. Either partial obstruction of these valves or leakage of blood through them because of imperfect closure results in turbulence in the blood current, causing audible, prolonged noises called murmurs. In certain congenital abnormalities of the heart and the blood vessels in the chest, the murmur may be continuous. Murmurs are often specifically diagnostic for diseases of the individual heart valves; that is, they sometimes reveal which heart valve is causing the ailment. Likewise, modification of the quality of the heart sounds may reveal disease or weakness of the heart muscle. Auscultation is also useful in determining the types of irregular rhythm of the heart and in discovering the sound peculiar to inflammation of the pericardium, the sac surrounding the heart.

Auscultation also reveals the modification of sounds produced in the air tubes and sacs of the lungs during breathing when these structures are diseased.

Auscultation (based on the Latin verb auscultare "to listen") is listening to the internal sounds of the body, usually using a stethoscope. Auscultation is performed for the purposes of examining the circulatory and respiratory systems (heart and breath sounds), as well as the alimentary canal.

The term was introduced by René Laennec. The act of listening to body sounds for diagnostic purposes has its origin further back in history, possibly as early as Ancient Egypt. (Auscultation and palpation go together in physical examination and are alike in that both have ancient roots, both require skill, and both are still important today.) Laennec's contributions were refining the procedure, linking sounds with specific pathological changes in the chest, and inventing a suitable instrument (the stethoscope) to mediate between the patient's body and the clinician's ear.

Auscultation is a skill that requires substantial clinical experience, a fine stethoscope and good listening skills. Health professionals (doctors, nurses, etc.) listen to three main organs and organ systems during auscultation: the heart, the lungs, and the gastrointestinal system. When auscultating the heart, doctors listen for abnormal sounds, including heart murmurs, gallops, and other extra sounds coinciding with heartbeats. Heart rate is also noted. When listening to lungs, breath sounds such as wheezes, crepitations and crackles are identified. The gastrointestinal system is auscultated to note the presence of bowel sounds.

Electronic stethoscopes can be recording devices, and can provide noise reduction and signal enhancement. This is helpful for purposes of telemedicine (remote diagnosis) and teaching. This opened the field to computer-aided auscultation. Ultrasonography (US) inherently provides capability for computer-aided auscultation, and portable US, especially portable echocardiography, replaces some stethoscope auscultation (especially in cardiology), although not nearly all of it (stethoscopes are still essential in basic checkups, listening to bowel sounds, and other primary care contexts).

What is auscultation?

Auscultation is the medical term for using a stethoscope to listen to the sounds inside of your body. This simple test poses no risks or side effects.

Why is auscultation used?

Abnormal sounds may indicate problems in these areas:

* lungs

* abdomen

* heart

* major blood vessels

Potential issues can include:

* irregular heart rate

* Crohn’s disease

* phlegm or fluid buildup in your lungs

Your doctor can also use a machine called a Doppler ultrasound for auscultation. This machine uses sound waves that bounce off your internal organs to create images. This is also used to listen to your baby’s heart rate when you’re pregnant.

How is the test performed?

Your doctor places the stethoscope over your bare skin and listens to each area of your body. There are specific things your doctor will listen for in each area.

Heart

To hear your heart, your doctor listens to the four main regions where heart valve sounds are the loudest. These are areas of your chest above and slightly below your left breast. Some heart sounds are also best heard when you’re turned toward your left side. In your heart, your doctor listens for:

* what your heart sounds like

* how often each sound occurs

* how loud the sound is

Abdomen

Your doctor listens to one or more regions of your abdomen separately to assess your bowel sounds. They may hear swishing, gurgling, or nothing at all. Each sound informs your doctor about what’s happening in your intestines.

Lungs

When listening to your lungs, your doctor compares one side with the other and compares the front of your chest with the back of your chest. Airflow sounds different when airways are blocked, narrowed, or filled with fluid. They’ll also listen for abnormal sounds such as wheezing.

How are results interpreted?

Auscultation can tell your doctor a lot about what’s going on inside of your body.

Heart

Normal heart sounds are rhythmic. Variations can signal to your doctor that some areas may not be getting enough blood or that you have a leaky valve. Your doctor may order additional testing if they hear something unusual.

Abdomen

Your doctor should be able to hear sounds in all areas of your abdomen. Digested material may be stuck or your intestine may be twisted if an area of your abdomen has no sounds. Both possibilities can be very serious.

Lungs

Lung sounds can vary as much as heart sounds. Wheezes can be either high- or low-pitched and can indicate that mucus is preventing your lungs from expanding properly. One type of sound your doctor might listen for is called a rub. Rubs sound like two pieces of sandpaper rubbing together and can indicate irritated surfaces around your lungs.

What are some alternatives to auscultation?

Other methods that your doctor can use to determine what’s happening inside of your body are palpation and percussion.

Palpation

Your doctor can perform a palpation simply by placing their fingers over one of your arteries to measure systolic pressure. Doctors usually look for a point of maximal impulse (PMI) around your heart.

If your doctor feels something abnormal, they can identify possible issues related to your heart. Abnormalities may include a large PMI or thrill. A thrill is a vibration caused by your heart that’s felt on the skin.

Percussion

Percussion involves your doctor tapping their fingers on various parts of your abdomen. Your doctor uses percussion to listen for sounds based on the organs or body parts underneath your skin.

You’ll hear hollow sounds when your doctor taps body parts filled with air and much duller sounds when your doctor taps above bodily fluids or an organ, such as your liver.

Percussion allows your doctor to identify many heart-related issues based on the relative dullness of sounds. Conditions that can be identified using percussion include:

* enlarged heart, which is called cardiomegaly

* excessive fluid around the heart, which is called pericardial effusion

* emphysema

(Emphysema is a disease of the lungs. It occurs most often in people who smoke, but it also occurs in people who regularly breathe in irritants.

Emphysema destroys alveoli, which are air sacs in the lungs. The air sacs weaken and eventually break, which reduces the surface area of the lungs and the amount of oxygen that can reach the bloodstream. This makes it harder to breathe, especially when exercising. Emphysema also causes the lungs to lose their elasticity.)

Why is auscultation important?

Auscultation gives your doctor a basic idea about what’s occurring in your body. Your heart, lungs, and other organs in your abdomen can all be tested using auscultation and other similar methods.

For example, if your doctor doesn’t identify a fist-sized area of dullness left of your sternum, you might be tested for emphysema. Also, if your doctor hears what’s called an “opening snap” when listening to your heart, you might be tested for mitral stenosis. You might need additional tests for a diagnosis depending on the sounds your doctor hears.

Auscultation and related methods are a good way for your doctor to know whether or not you need close medical attention. Auscultation can be an excellent preventive measure against certain conditions. Ask your doctor to perform these procedures whenever you have a physical exam.


#1180 2021-11-04 17:13:31

- Jai Ganesh

1157) Search for Extraterrestrial Intelligence (SETI)

Are we alone in the universe? Are there advanced civilizations that we can detect and what would be the societal impact if we do? How can we better the odds of making contact? These questions are both fundamental and universal. Today’s generation is the first that has the science and technology to prove that there is other intelligence in the cosmos. The SETI Institute’s first project was to conduct a search for narrow-band radio transmissions that would betray the existence of technically competent beings elsewhere in the galaxy. Today, the SETI Institute uses a specially designed instrument for its SETI efforts – the Allen Telescope Array (ATA) located in the Cascade Mountains of California. The ATA is embarking upon a two-year survey of tens of thousands of red dwarf stars, which have many characteristics that make them prime locales in the search for intelligent life. The Institute also uses the ATA to examine newly-discovered exoplanets that are found in their star’s habitable zone. There are likely to be tens of billions of such worlds in our galaxy. Additionally, the Institute is developing a relatively low-cost system for doing optical SETI, which searches for laser flashes that other societies might use to signal their presence. While previous optical SETI programs were limited to examining a single pixel on the sky at any given time, the new system will be able to monitor the entire night sky simultaneously. It will be a revolution in our ability to discover intermittent signals that otherwise would never be found. The search has barely begun – but the age-old question of “Are we alone in the universe?” could be answered in our lifetime.

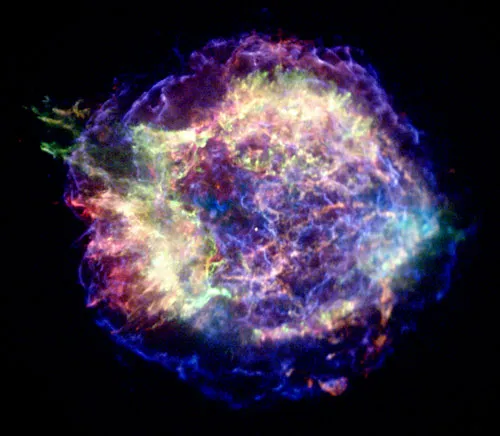

SETI, in full Search for Extraterrestrial Intelligence, ongoing effort to seek intelligent extraterrestrial life. SETI focuses on receiving and analyzing signals from space, particularly in the radio and visible-light regions of the electromagnetic spectrum, looking for nonrandom patterns likely to have been sent either deliberately or inadvertently by technologically advanced beings. The first modern SETI search was Project Ozma (1960), which made use of a radio telescope in Green Bank, West Virginia. SETI approaches include targeted searches, which typically concentrate on groups of nearby Sun-like stars, and systematic surveys covering all directions. The value of SETI efforts has been controversial; programs initiated by NASA in the 1970s were terminated by congressional action in 1993. Subsequently, SETI researchers organized privately funded programs—e.g., the targeted-search Project Phoenix in the U.S. and the survey-type SERENDIP projects in the U.S. and Australia. See also Drake equation.

Extraterrestrial intelligence

Extraterrestrial intelligence, hypothetical extraterrestrial life that is capable of thinking, purposeful activity. Work in the new field of astrobiology has provided some evidence that evolution of other intelligent species in the Milky Way Galaxy is not utterly improbable. In particular, more than 4,000 extrasolar planets have been detected, and underground water is likely present on Mars and on some of the moons of the outer solar system. These efforts suggest that there could be many worlds on which life, and occasionally intelligent life, might arise. Searches for radio signals or optical flashes from other star systems that would indicate the presence of extraterrestrial intelligence have so far proved fruitless, but detection of such signals would have an enormous scientific and cultural impact.

Argument for extraterrestrial intelligence

The argument for the existence of extraterrestrial intelligence is based on the so-called principle of mediocrity. Widely believed by astronomers since the work of Nicolaus Copernicus, this principle states that the properties and evolution of the solar system are not unusual in any important way. Consequently, the processes on Earth that led to life, and eventually to thinking beings, could have occurred throughout the cosmos.

The most important assumptions in this argument are that (1) planets capable of spawning life are common, (2) biota will spring up on such worlds, and (3) the workings of natural selection on planets with life will at least occasionally produce intelligent species. To date, only the first of these assumptions has been proven. However, astronomers have found several small rocky planets that, like Earth, are the right distance from their stars to have atmospheres and oceans able to support life. Unlike the efforts that have detected massive, Jupiter-size planets by measuring the wobble they induce in their parent stars, the search for smaller worlds involves looking for the slight dimming of a star that occurs if an Earth-size planet passes in front of it. The U.S. satellite Kepler, launched in 2009, found thousands of planets, more than 20 of which are Earth-sized planets in the habitable zone where liquid water can survive on the surface, by observing such transits. Another approach is to construct space-based telescopes that can analyze the light reflected from the atmospheres of planets around other stars, in a search for gases such as oxygen or methane that are indicators of biological activity. In addition, space probes are trying to find evidence that the conditions for life might have emerged on Mars or other worlds in the solar system, thus addressing assumption 2. Proof of assumption 3, that thinking beings will evolve on some of the worlds with life, requires finding direct evidence. This evidence might be encounters, discovery of physical artifacts, or the detection of signals. Claims of encounters are problematic. Despite decades of reports involving unidentified flying objects, crashed spacecraft, crop circles, and abductions, most scientists remain unconvinced that any of these are adequate proof of visiting aliens.
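The "slight dimming" used to find small planets can be estimated with simple geometry. The sketch below is only an illustration: it assumes a uniformly bright stellar disk and an opaque planet that crosses fully in front of it, so the blocked fraction of light is the ratio of the two disk areas. The radii are standard reference values, and the function name `transit_depth` is made up for this example.

```python
# Transit depth: fraction of starlight blocked while a planet crosses the star.
# Assumption: uniformly bright stellar disk, opaque planet fully in front of it.

SUN_RADIUS_KM = 695_700.0      # nominal solar radius
EARTH_RADIUS_KM = 6_371.0      # mean Earth radius
JUPITER_RADIUS_KM = 69_911.0   # mean Jupiter radius


def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Return the fractional dimming, (R_planet / R_star) ** 2."""
    return (planet_radius_km / star_radius_km) ** 2


if __name__ == "__main__":
    for name, radius in [("Earth", EARTH_RADIUS_KM), ("Jupiter", JUPITER_RADIUS_KM)]:
        depth = transit_depth(radius, SUN_RADIUS_KM)
        print(f"{name}-size planet, Sun-like star: {depth * 100:.4f}% dimming")
```

An Earth-size planet blocks a little under one hundredth of a percent of a Sun-like star's light, while a Jupiter-size planet blocks about one percent, which is why transits of small worlds need very precise photometry.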

Searching for extraterrestrial intelligence:

Artifacts in the solar system

Extraterrestrial artifacts have not yet been found. At the beginning of the 20th century, American astronomer Percival Lowell claimed to see artificially constructed canals on Mars. These would have been convincing proof of intelligence, but the features seen by Lowell were in fact optical illusions. Since 1890, some limited telescopic searches for alien objects near Earth have been made. These investigated the so-called Lagrangian points, stable locations in the Earth-Moon system. No large objects—at least down to several tens of metres in size—were seen.

SETI

The most promising scheme for finding extraterrestrial intelligence is to search for electromagnetic signals, more particularly radio or light, that may be beamed toward Earth from other worlds, either inadvertently (in the same way that Earth leaks television and radar signals into space) or as a deliberate beacon signal. Physical law implies that interstellar travel requires enormous amounts of energy or long travel times. Sending signals, on the other hand, requires only modest energy expenditure, and the messages travel at the speed of light.

Radio searches

Projects to look for such signals are known as the search for extraterrestrial intelligence (SETI). The first modern SETI experiment was American astronomer Frank Drake’s Project Ozma, which took place in 1960. Drake used a radio telescope (essentially a large antenna) in an attempt to uncover signals from nearby Sun-like stars. In 1961 Drake proposed what is now known as the Drake equation, which estimates the number of signaling worlds in the Milky Way Galaxy. This number is the product of terms that define the frequency of habitable planets, the fraction of habitable planets upon which intelligent life will arise, and the length of time sophisticated societies will transmit signals. Because many of these terms are unknown, the Drake equation is more useful in defining the problems of detecting extraterrestrial intelligence than in predicting when, if ever, this will happen.
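As a rough illustration of how the Drake equation works as a product of terms, here is a small sketch using the conventional seven-factor form, N = R* x fp x ne x fl x fi x fc x L. The parameter names follow the usual textbook symbols rather than anything in the text above, and the numbers in the usage example are placeholders chosen only to exercise the arithmetic, not estimates.

```python
from math import prod


def drake_equation(r_star, f_p, n_e, f_l, f_i, f_c, lifetime_years):
    """Estimate the number of signaling civilizations in the Galaxy.

    r_star         -- average rate of star formation (stars per year)
    f_p            -- fraction of stars with planets
    n_e            -- habitable planets per star that has planets
    f_l            -- fraction of habitable planets on which life arises
    f_i            -- fraction of those on which intelligence evolves
    f_c            -- fraction of those that emit detectable signals
    lifetime_years -- how long such a society keeps transmitting (years)
    """
    return prod((r_star, f_p, n_e, f_l, f_i, f_c, lifetime_years))


if __name__ == "__main__":
    # Placeholder inputs only; most of these terms are unknown in practice.
    n = drake_equation(r_star=1.0, f_p=0.5, n_e=2.0, f_l=0.5,
                       f_i=0.1, f_c=0.1, lifetime_years=10_000)
    print(f"Signaling civilizations (placeholder inputs): {n:.1f}")
```

Because the result is a plain product, an uncertainty of a factor of ten in any single term changes the answer by the same factor, which is why the equation is better at framing the problem than at predicting a number.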

By the mid-1970s the technology used in SETI programs had advanced enough for the National Aeronautics and Space Administration to begin SETI projects, but concerns about wasteful government spending led Congress to end these programs in 1993. However, SETI projects funded by private donors (in the United States) continued. One such search was Project Phoenix, which began in 1995 and ended in 2004. Phoenix scrutinized approximately 1,000 nearby star systems (within 150 light-years of Earth), most of which were similar in size and brightness to the Sun. The search was conducted on several radio telescopes, including the 305-metre (1,000-foot) radio telescope at the Arecibo Observatory in Puerto Rico, and was run by the SETI Institute of Mountain View, California.

Other radio SETI experiments, such as Project SERENDIP V (begun in 2009 by the University of California at Berkeley) and Australia’s Southern SERENDIP (begun in 1998 by the University of Western Sydney at Macarthur), scan large tracts of the sky and make no assumption about the directions from which signals might come. The former uses the Green Bank Telescope and, until its collapse in 2020, the Arecibo telescope, and the latter (which ended in 2005) was carried out with the 64-metre (210-foot) telescope near Parkes, New South Wales. Such sky surveys are generally less sensitive than targeted searches of individual stars, but they are able to “piggyback” onto telescopes that are already engaged in making conventional astronomical observations, thus securing a large amount of search time. In contrast, targeted searches such as Project Phoenix require exclusive telescope access.

In 2007 a new instrument, jointly built by the SETI Institute and the University of California at Berkeley and designed for round-the-clock SETI observations, began operation in northeastern California. The Allen Telescope Array (ATA, named after its principal funder, American technologist Paul Allen) has 42 small (6 metres [20 feet] in diameter) antennas. When complete, the ATA will have 350 antennas and be hundreds of times faster than previous experiments in the search for transmissions from other worlds.

In 2016 the Breakthrough Listen project began a 10-year survey of the one million closest stars, the nearest 100 galaxies, the plane of the Milky Way Galaxy, and the galactic centre using the Parkes telescope and the 100-metre (328-foot) telescope at the National Radio Astronomy Observatory in Green Bank, West Virginia. That same year the largest single-dish radio telescope in the world, the Five-hundred-meter Aperture Spherical Radio Telescope in China, began operation, with the search for extraterrestrial intelligence as one of its objectives.

Since 1999 some of the data collected by Project SERENDIP (and since 2016, Breakthrough Listen) has been distributed on the Web for use by volunteers who have downloaded a free screen saver, SETI@home. The screen saver searches the data for signals and sends its results back to Berkeley. Because the screen saver is used by several million people, enormous computational power is available to look for a variety of signal types. Results from the home processing are compared with subsequent observations to see if detected signals appear more than once, suggesting that they may warrant further confirmation study.

Nearly all radio SETI searches have used receivers tuned to the microwave band near 1,420 megahertz. This is the frequency of natural emission from hydrogen and is a spot on the radio dial that would be known by any technically competent civilization. The experiments hunt for narrowband signals (typically 1 hertz wide or less) that would be distinct from the broadband radio emissions naturally produced by objects such as pulsars and interstellar gas. Receivers used for SETI contain sophisticated digital devices that can simultaneously measure radio energy in many millions of narrowband channels.
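The idea of hunting for a narrowband signal among many channels can be sketched in a few lines. This is a toy model under stated assumptions, not how any real SETI receiver is built: it simulates white Gaussian noise plus one sine tone, splits the data into frequency channels with a naive discrete Fourier transform, and flags channels whose power is far above the median. The function names, the threshold of 20 times the median, and the simulated tone are all hypothetical choices for this example.

```python
import cmath
import math
import random


def power_spectrum(samples):
    """Naive DFT power for bins 0..N/2 (O(N^2), fine for a small demo)."""
    n = len(samples)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = 0j
        for t, x in enumerate(samples):
            acc += x * cmath.exp(-2j * math.pi * k * t / n)
        spectrum.append(abs(acc) ** 2)
    return spectrum


def find_narrowband_candidates(samples, threshold=20.0):
    """Return (bin, power / median power) for bins far above the noise floor."""
    spectrum = power_spectrum(samples)
    noise_floor = sorted(spectrum)[len(spectrum) // 2]  # median bin power
    return [(k, p / noise_floor)
            for k, p in enumerate(spectrum)
            if p > threshold * noise_floor]


def simulate(n=512, tone_bin=40, amplitude=1.0, seed=0):
    """White Gaussian noise plus one sine tone centred on a single bin."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0)
            + amplitude * math.sin(2 * math.pi * tone_bin * t / n)
            for t in range(n)]


if __name__ == "__main__":
    for channel, ratio in find_narrowband_candidates(simulate()):
        print(f"candidate in channel {channel}: {ratio:.0f}x the median power")
```

Broadband noise spreads its energy over every channel, while the tone piles all of its energy into one, so even a tone that is invisible in the raw samples stands out after the transform. Real receivers apply the same principle with fast transforms and many millions of channels.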

Optical SETI

SETI searches for light pulses are also under way at a number of institutions, including the University of California at Berkeley as well as Lick Observatory and Harvard University. The Berkeley and Lick experiments investigate nearby star systems, and the Harvard effort scans all the sky that is visible from Massachusetts. Sensitive photomultiplier tubes are affixed to conventional mirror telescopes and are configured to look for flashes of light lasting a nanosecond (a billionth of a second) or less. Such flashes could be produced by extraterrestrial societies using high-powered pulsed lasers in a deliberate effort to signal other worlds. By concentrating the energy of the laser into a brief pulse, the transmitting civilization could ensure that the signal momentarily outshines the natural light from its own sun.

Results and two-way communication

No confirmed extraterrestrial signals have yet been found by SETI experiments. Early searches, which were unable to quickly determine whether an emission was terrestrial or extraterrestrial in origin, would frequently find candidate signals. The most famous of these was the so-called “Wow” signal, measured by a SETI experiment at Ohio State University in 1977. Subsequent observations failed to find this signal again, and so the Wow signal, as well as other similar detections, is not considered a good candidate for being extraterrestrial.

Most SETI experiments do not transmit signals into space. Because the distance even to nearby extraterrestrial intelligence could be hundreds or thousands of light-years, two-way communication would be tedious. For this reason, SETI experiments focus on finding signals that could have been deliberately transmitted or could be the result of inadvertent emission from extraterrestrial civilizations.

(The search for extraterrestrial intelligence (SETI) is a collective term for scientific searches for intelligent extraterrestrial life, for example, monitoring electromagnetic radiation for signs of transmissions from civilizations on other planets.

Scientific investigation began shortly after the advent of radio in the early 1900s, and focused international efforts have been going on since the 1980s. In 2015, Stephen Hawking and Russian billionaire Yuri Milner announced a well-funded effort called Breakthrough Listen.

Breakthrough Listen is a project to search for intelligent extraterrestrial communications in the Universe. With $100 million in funding and thousands of hours of dedicated telescope time on state-of-the-art facilities, it is the most comprehensive search for alien communications to date. The project began in January 2016, and is expected to continue for 10 years. It is a component of Yuri Milner's Breakthrough Initiatives program. The science program for Breakthrough Listen is based at Berkeley SETI Research Center, located in the Astronomy Department at the University of California, Berkeley.

The project uses radio wave observations from the Green Bank Observatory and the Parkes Observatory, and visible light observations from the Automated Planet Finder. Targets for the project include one million nearby stars and the centers of 100 galaxies. All data generated from the project are available to the public, and SETI@Home (BOINC) is used for some of the data analysis. The first results were published in April 2017, with further updates expected every 6 months.)

#1181 2021-11-05 15:23:51

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,952

Re: Miscellany

1158) Cardiology

The term cardiology is derived from the Greek words “cardia,” which refers to the heart and “logy” meaning “study of.” Cardiology is a branch of medicine that concerns diseases and disorders of the heart, which may range from congenital defects through to acquired heart diseases such as coronary artery disease and congestive heart failure.

Physicians who specialize in cardiology are called cardiologists and they are responsible for the medical management of various heart diseases. Cardiac surgeons are the specialist physicians who perform surgical procedures to correct heart disorders.

Cardiology milestones

Some of the major milestones in the discipline of cardiology are listed below:

1628 : The circulation of blood was described by the English physician William Harvey.

1706 : A French anatomy professor, Raymond de Vieussens, described the structure of the heart's chambers and vessels.

1733 : Blood pressure was first measured by an English clergyman and scientist, Stephen Hales.

1816 : A French physician, René Laënnec, invented the stethoscope.

1903 : A Dutch physiologist, Willem Einthoven, developed the electrocardiograph (ECG), a vital instrument used to measure the electrical activity of the heart and diagnose heart abnormalities.

1912 : An American physician, James Herrick, described atherosclerosis, one of the most common diseases of the heart.

1938 : Robert Gross, an American surgeon, performed the first heart surgery.

1951 : The first artificial heart valve was developed by Charles Hufnagel.

1952 : An American surgeon, Floyd John Lewis, performed the first open heart surgery.

1967 : Christiaan Barnard, a South African surgeon, performed the first whole heart transplant.

1982 : An American surgeon, William DeVries, implanted a permanent artificial heart, designed by Robert Jarvik, into a patient.

Cardiology is a branch of medicine that deals with the disorders of the heart as well as some parts of the circulatory system. The field includes medical diagnosis and treatment of congenital heart defects, coronary artery disease, heart failure, valvular heart disease and electrophysiology. Physicians who specialize in this field of medicine are called cardiologists, a specialty of internal medicine. Pediatric cardiologists are pediatricians who specialize in cardiology. Physicians who specialize in cardiac surgery are called cardiothoracic surgeons or cardiac surgeons, a specialty of general surgery.

Cardiology, medical specialty dealing with the diagnosis and treatment of diseases and abnormalities involving the heart and blood vessels. Cardiology is a medical, not surgical, discipline. Cardiologists provide the continuing care of patients with cardiovascular disease, performing basic studies of heart function and supervising all aspects of therapy, including the administration of drugs to modify heart functions.

The foundation of the field of cardiology was laid in 1628, when English physician William Harvey published his observations on the anatomy and physiology of the heart and circulation. From that period, knowledge grew steadily as physicians relied on scientific observation, rejecting the prejudices and superstitions of previous eras, and conducted fastidious and keen studies of the physiology, anatomy, and pathology of the heart and blood vessels. During the 18th and 19th centuries physicians acquired a deeper understanding of the vagaries of pulse and blood pressure, of heart sounds and heart murmurs (through the practice of auscultation, aided by the invention of the stethoscope by French physician René Laënnec), of respiration and exchange of blood gases in the lungs, of heart muscle structure and function, of congenital heart defects, of electrical activity in the heart muscle, and of irregular heart rhythms (arrhythmias). Dozens of clinical observations conducted in those centuries live on today in the vernacular of cardiology—for example, Adams-Stokes syndrome, a type of heart block named for Irish physicians Robert Adams and William Stokes; Austin Flint murmur, named for the American physician who discovered the disorder; and tetralogy of Fallot, a combination of congenital heart defects named for French physician Étienne-Louis-Arthur Fallot.

Much of the progress in cardiology during the 20th century was made possible by improved diagnostic tools. Electrocardiography, the measurement of electrical activity in the heart, evolved from research by Dutch physiologist Willem Einthoven in 1903, and radiological evaluation of the heart grew out of German physicist Wilhelm Conrad Röntgen’s experiments with X-rays in 1895. Echocardiography, the generation of images of the heart by directing ultrasound waves through the chest wall, was introduced in the early 1950s. Cardiac catheterization, invented in 1929 by German surgeon Werner Forssmann and refined soon after by American physiologists André Cournand and Dickinson Richards, opened the way for measuring pressure inside the heart, studying normal and abnormal electrical activity, and directly visualizing the heart chambers and blood vessels (angiography). Today the discipline of nuclear cardiology provides a means of measuring blood flow and contraction in heart muscle through the use of radioisotopes.

As diagnostic capabilities have grown, so have treatment options. Drugs have been developed by the pharmaceutical industry to treat heart failure, angina pectoris, coronary heart disease, hypertension (high blood pressure), arrhythmia, and infections such as endocarditis. In parallel with advances in cardiac catheterization and angiography, surgeons developed techniques for allowing the blood circulation to bypass the heart through heart-lung machines, thereby permitting surgical correction of all manner of acquired and congenital heart diseases. Other advances in cardiology include electrocardiographic monitors, pacemakers and defibrillators for detecting and treating arrhythmias, radio-frequency ablation of certain abnormal rhythms, and balloon angioplasty and other nonsurgical treatments of blood vessel obstruction. It is expected that discoveries in genetics and molecular biology will further aid cardiologists in their understanding of cardiovascular disease.

#1182 2021-11-06 00:22:29

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,952

Re: Miscellany

1159) Nervous System

Nervous system, organized group of cells specialized for the conduction of electrochemical stimuli from sensory receptors through a network to the site at which a response occurs.

All living organisms are able to detect changes within themselves and in their environments. Changes in the external environment include those of light, temperature, sound, motion, and odour, while changes in the internal environment include those in the position of the head and limbs as well as in the internal organs. Once detected, these internal and external changes must be analyzed and acted upon if the organism is to survive. As life on Earth evolved and the environment became more complex, the survival of organisms depended upon how well they could respond to changes in their surroundings. One factor necessary for survival was a speedy reaction or response. Since communication from one cell to another by chemical means was too slow to be adequate for survival, a system evolved that allowed for faster reaction. That system was the nervous system, which is based upon the almost instantaneous transmission of electrical impulses from one region of the body to another along specialized nerve cells called neurons.

Nervous systems are of two general types, diffuse and centralized. In the diffuse type of system, found in lower invertebrates, there is no brain, and neurons are distributed throughout the organism in a netlike pattern. In the centralized systems of higher invertebrates and vertebrates, a portion of the nervous system has a dominant role in coordinating information and directing responses. This centralization reaches its culmination in vertebrates, which have a well-developed brain and spinal cord. Impulses are carried to and from the brain and spinal cord by nerve fibres that make up the peripheral nervous system.

This article begins with a discussion of the general features of nervous systems—that is, their function of responding to stimuli and the rather uniform electrochemical processes by which they generate a response. Following that is a discussion of the various types of nervous systems, from the simplest to the most complex.

Form and function of nervous systems:

Stimulus-response coordination

The simplest type of response is a direct one-to-one stimulus-response reaction. A change in the environment is the stimulus; the reaction of the organism to it is the response. In single-celled organisms, the response is the result of a property of the cell fluid called irritability. In simple organisms, such as algae, protozoans, and fungi, a response in which the organism moves toward or away from the stimulus is called taxis. In larger and more complicated organisms—those in which response involves the synchronization and integration of events in different parts of the body—a control mechanism, or controller, is located between the stimulus and the response. In multicellular organisms, this controller consists of two basic mechanisms by which integration is achieved—chemical regulation and nervous regulation.

In chemical regulation, substances called hormones are produced by well-defined groups of cells and are either diffused or carried by the blood to other areas of the body where they act on target cells and influence metabolism or induce synthesis of other substances. The changes resulting from hormonal action are expressed in the organism as influences on, or alterations in, form, growth, reproduction, and behaviour.

Plants respond to a variety of external stimuli by utilizing hormones as controllers in a stimulus-response system. Directional responses of movement are known as tropisms and are positive when the movement is toward the stimulus and negative when it is away from the stimulus. When a seed germinates, the growing stem turns upward toward the light, and the roots turn downward away from the light. Thus, the stem shows positive phototropism and negative geotropism, while the roots show negative phototropism and positive geotropism. In this example, light and gravity are the stimuli, and directional growth is the response. The controllers are certain hormones synthesized by cells in the tips of the plant stems. These hormones, known as auxins, diffuse through the tissues beneath the stem tip and concentrate toward the shaded side, causing elongation of these cells and, thus, a bending of the tip toward the light. The end result is the maintenance of the plant in an optimal condition with respect to light.

In animals, in addition to chemical regulation via the endocrine system, there is another integrative system called the nervous system. A nervous system can be defined as an organized group of cells, called neurons, specialized for the conduction of an impulse—an excited state—from a sensory receptor through a nerve network to an effector, the site at which the response occurs.

Organisms that possess a nervous system are capable of much more complex behaviour than are organisms that do not. The nervous system, specialized for the conduction of impulses, allows rapid responses to environmental stimuli. Many responses mediated by the nervous system are directed toward preserving the status quo, or homeostasis, of the animal. Stimuli that tend to displace or disrupt some part of the organism call forth a response that results in reduction of the adverse effects and a return to a more normal condition. Organisms with a nervous system are also capable of a second group of functions that initiate a variety of behaviour patterns. Animals may go through periods of exploratory or appetitive behaviour, nest building, and migration. Although these activities are beneficial to the survival of the species, they are not always performed by the individual in response to an individual need or stimulus. Finally, learned behaviour can be superimposed on both the homeostatic and initiating functions of the nervous system.

Intracellular systems

All living cells have the property of irritability, or responsiveness to environmental stimuli, which can affect the cell in different ways, producing, for example, electrical, chemical, or mechanical changes. These changes are expressed as a response, which may be the release of secretory products by gland cells, the contraction of muscle cells, the bending of a plant-stem cell, or the beating of whiplike “hairs,” or cilia, by ciliated cells.

The responsiveness of a single cell can be illustrated by the behaviour of the relatively simple amoeba. Unlike some other protozoans, an amoeba lacks highly developed structures that function in the reception of stimuli and in the production or conduction of a response. The amoeba behaves as though it had a nervous system, however, because the general responsiveness of its cytoplasm serves the functions of a nervous system. An excitation produced by a stimulus is conducted to other parts of the cell and evokes a response by the animal. An amoeba will move to a region of a certain level of light. It will be attracted by chemicals given off by foods and exhibit a feeding response. It will also withdraw from a region with noxious chemicals and exhibit an avoidance reaction upon contacting other objects.

Organelle systems

In more-complex protozoans, specialized cellular structures, or organelles, serve as receptors of stimulus and as effectors of response. Receptors include stiff sensory bristles in ciliates and the light-sensitive eyespots of flagellates. Effectors include cilia (slender, hairlike projections from the cell surface), flagella (elongated, whiplike cilia), and other organelles associated with drawing in food or with locomotion. Protozoans also have subcellular cytoplasmic filaments that, like muscle tissue, are contractile. The vigorous contraction of the protozoan Vorticella, for example, is the result of contraction of a threadlike structure called a myoneme in the stalk.

Although protozoans clearly have specialized receptors and effectors, it is not certain that there are special conducting systems between the two. In a ciliate such as Paramecium, the beating of the cilia—which propels it along—is not random, but coordinated. Beating of the cilia begins at one end of the organism and moves in regularly spaced waves to the other end, suggesting that coordinating influences are conducted longitudinally. A system of fibrils connecting the bodies in which the cilia are rooted may provide conducting paths for the waves, but coordination of the cilia may also take place without such a system. Each cilium may respond to a stimulus carried over the cell surface from an adjacent cilium—in which case, coordination would be the result of a chain reaction from cilium to cilium.

The best evidence that formed structures are responsible for coordination comes from another ciliate, Euplotes, which has a specialized band of ciliary rows (membranelles) and widely separated tufts of cilia (cirri). By means of the coordinated action of these structures, Euplotes is capable of several complicated movements in addition to swimming (e.g., turning sharply, moving backward, spinning). The five cirri at the rear of the organism are connected to the anterior end in an area known as the motorium. The fibres of the motorium apparently provide coordination between the cirri and the membranelles. The membranelles, cirri, and motorium constitute a neuromotor system.

Nervous systems

The basic pattern of stimulus-response coordination in animals is an organization of receptor, adjustor, and effector units. External stimuli are received by the receptor cells, which, in most cases, are neurons. (In a few instances, a receptor is a non-nervous sensory epithelial cell, such as a hair cell of the inner ear or a taste cell, which stimulates adjacent neurons.) The stimulus is modified, or transduced, into an electrical impulse in the receptor neuron. This incoming excitation, or afferent impulse, then passes along an extension, or axon, of the receptor to an adjustor, called an interneuron. (All neurons are capable of conducting an impulse, which is a brief change in the electrical charge on the cell membrane. Such an impulse can be transmitted, without loss in strength, many times along an axon until the message, or input, reaches another neuron, which in turn is excited.) The interneuron-adjustor selects, interprets, or modifies the input from the receptor and sends an outgoing, or efferent, impulse to an efferent neuron, such as a motor neuron. The efferent neuron, in turn, makes contact with an effector such as a muscle or gland, which produces a response.

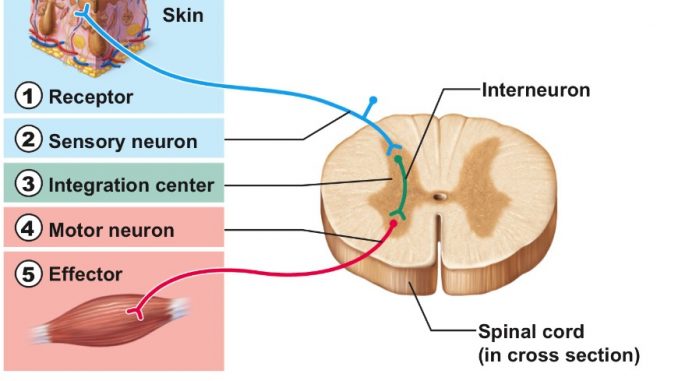

In the simplest arrangement, the receptor-adjustor-effector units form a functional group known as the reflex arc. Sensory cells carry afferent impulses to a central interneuron, which makes contact with a motor neuron. The motor neuron carries efferent impulses to the effector, which produces the response. Three types of neurons are involved in this reflex arc, but a two-neuron arc, in which the receptor makes contact directly with the motor neuron, also occurs. In a two-neuron arc, simple reflexes are prompt, short-lived, and automatic and involve only a part of the body. Examples of simple reflexes are the contraction of a muscle in response to stretch, the blink of the eye when the cornea is touched, and salivation at the sight of food. Reflexes of this type are usually involved in maintaining homeostasis.

The differences between simple and complex nervous systems lie not in the basic units but in their arrangement. In higher nervous systems, there are more interneurons concentrated in the central nervous system (brain and spinal cord) that mediate the impulses between afferent and efferent neurons. Sensory impulses from particular receptors travel through specific neuronal pathways to the central nervous system. Within the central nervous system, though, the impulse can travel through multiple pathways formed by numerous neurons. Theoretically, the impulse can be distributed to any of the efferent motor neurons and produce a response in any of the effectors. It is also possible for many kinds of stimuli to produce the same response.

As a result of the integrative action of the interneuron, the behaviour of the organism is more than the simple sum of its reflexes; it is an integrated whole that exhibits coordination between many individual reflexes. Reflexes can occur in a complicated sequence producing elaborate behaviour patterns. Behaviour in such cases is characterized not by inherited, stereotyped responses but by flexibility and adaptability to circumstances. Many automatic, unconditioned reflexes can be modified by or adapted to new stimuli. The experiments of Russian physiologist Ivan Petrovich Pavlov, for example, showed that if an animal salivates at the sight of food while another stimulus, such as the sound of a bell, occurs simultaneously, the sound alone can induce salivation after several trials. This response, known as a conditioned reflex, is a form of learning. The behaviour of the animal is no longer limited by fixed, inherited reflex arcs but can be modified by experience and exposure to an unlimited number of stimuli. The most evolved nervous systems are capable of even higher associative functions such as thinking and memory. The complex manipulation of the signals necessary for these functions depends to a great extent on the number and intricacy of the arrangement of interneurons.
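The gradual build-up of a conditioned reflex over repeated pairings can be illustrated numerically. The sketch below uses the Rescorla-Wagner update rule, a standard textbook learning model that the text above does not mention; it is swapped in here purely as an assumption, and the function name `condition`, the learning rate, and the maximum strength are hypothetical values chosen for illustration.

```python
from typing import List


def condition(trials: int, learning_rate: float = 0.3,
              max_strength: float = 1.0) -> List[float]:
    """Return the bell-salivation association strength after each paired trial."""
    strength = 0.0
    history = []
    for _ in range(trials):
        # Rescorla-Wagner update: close a fixed fraction of the remaining gap
        # between the current strength and the maximum the food can support.
        strength += learning_rate * (max_strength - strength)
        history.append(strength)
    return history


history = condition(trials=10)
for trial, value in enumerate(history, start=1):
    print(f"trial {trial:2d}: association strength = {value:.3f}")
```

Under these assumptions the association rises quickly in the first few pairings and then levels off, which matches the statement that the sound alone can induce salivation only after several trials.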

The nerve cell

The watershed of all studies of the nervous system was an observation made in 1889 by Spanish scientist Santiago Ramón y Cajal, who reported that the nervous system is composed of individual units that are structurally independent of one another and whose internal contents do not come into direct contact. According to his hypothesis, now known as the neuron theory, each nerve cell communicates with others through contiguity rather than continuity. That is, communication between adjacent but separate cells must take place across the space and barriers separating them. It has since been proved that Cajal’s theory is not universally true, but his central idea—that communication in the nervous system is largely communication between independent nerve cells—has remained an accurate guiding principle for all further study.

There are two basic cell types within the nervous system: neurons and neuroglial cells.

The neuron

In the human brain there are an estimated 85 billion to 200 billion neurons. Each neuron has its own identity, expressed by its interactions with other neurons and by its secretions; each also has its own function, depending on its intrinsic properties and location as well as its inputs from other select groups of neurons, its capacity to integrate those inputs, and its ability to transmit the information to another select group of neurons.

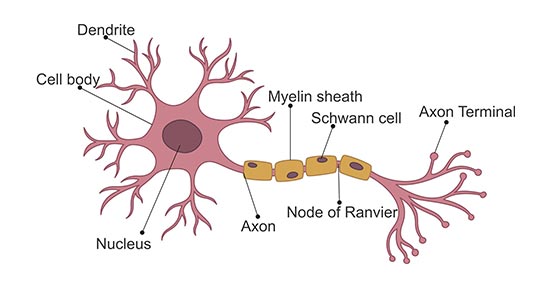

With few exceptions, neurons consist of three distinct regions, as shown in the diagram: (1) the cell body, or soma; (2) the nerve fibre, or axon; and (3) the receiving processes, or dendrites.

Soma

Plasma membrane

The neuron is bounded by a plasma membrane, a structure so thin that its fine detail can be revealed only by high-resolution electron microscopy. About half of the membrane is the lipid bilayer, two sheets of mainly phospholipids with a space between. One end of a phospholipid molecule is hydrophilic, or water attracting, and the other end is hydrophobic, or water repelling. The bilayer structure results when the hydrophilic ends of the phospholipid molecules in each sheet turn toward the watery mediums of both the cell interior and the extracellular environment, while the hydrophobic ends of the molecules turn in toward the space between the sheets. These lipid layers are not rigid structures; the loosely bonded phospholipid molecules can move laterally across the surfaces of the membrane, and the interior is in a highly liquid state.

[Figure caption, micrograph of a neuron: The centre of the field is occupied by the cell body, or soma, of the neuron. Most of the cell body is occupied by the nucleus, which contains a nucleolus. The double membrane of the nucleus is surrounded by cytoplasm, containing elements of the Golgi apparatus lying at the base of the apical dendrite. Mitochondria can be seen dispersed in the cytoplasm, which also contains the rough endoplasmic reticulum. Another dendrite is seen to the side, and the axon hillock is shown at the initial segment of the emerging axon. A synapse impinges onto the neuron close to the axon hillock.]

Embedded within the lipid bilayer are proteins, which also float in the liquid environment of the membrane. These include glycoproteins containing polysaccharide chains, which function, along with other carbohydrates, as adhesion sites and recognition sites for attachment and chemical interaction with other neurons. The proteins provide another basic and crucial function: those which penetrate the membrane can exist in more than one conformational state, or molecular shape, forming channels that allow ions to pass between the extracellular fluid and the cytoplasm, or internal contents of the cell. In other conformational states, they can block the passage of ions. This action is the fundamental mechanism that determines the excitability and pattern of electrical activity of the neuron.

A complex system of proteinaceous intracellular filaments is linked to the membrane proteins. This cytoskeleton includes thin neurofilaments containing actin, thick neurofilaments similar to myosin, and microtubules composed of tubulin. The filaments are probably involved with movement and translocation of the membrane proteins, while microtubules may anchor the proteins to the cytoplasm.

Nucleus

Each neuron contains a nucleus defining the location of the soma. The nucleus is surrounded by a double membrane, called the nuclear envelope, that fuses at intervals to form pores allowing molecular communication with the cytoplasm. Within the nucleus are the chromosomes, the genetic material of the cell, through which the nucleus controls the synthesis of proteins and the growth and differentiation of the cell into its final form. Proteins synthesized in the neuron include enzymes, receptors, hormones, and structural proteins for the cytoskeleton.

Organelles

The endoplasmic reticulum (ER) is a widely spread membrane system within the neuron that is continuous with the nuclear envelope. It consists of a series of tubules, flattened sacs called cisternae, and membrane-bound spheres called vesicles. There are two types of ER. The rough endoplasmic reticulum (RER) has rows of knobs called ribosomes on its surface. Ribosomes synthesize proteins that, for the most part, are transported out of the cell. The RER is found only in the soma. The smooth endoplasmic reticulum (SER) consists of a network of tubules in the soma that connects the RER with the Golgi apparatus. The tubules can also enter the axon at its initial segment and extend to the axon terminals.

The Golgi apparatus is a complex of flattened cisternae arranged in closely packed rows. Located close to and around the nucleus, it receives proteins synthesized in the RER and transferred to it via the SER. At the Golgi apparatus, the proteins are attached to carbohydrates. The glycoproteins so formed are packaged into vesicles that leave the complex to be incorporated into the cell membrane.

Axon

The axon arises from the soma at a region called the axon hillock, or initial segment. This is the region where the plasma membrane generates nerve impulses; the axon conducts these impulses away from the soma or dendrites toward other neurons. Large axons acquire an insulating myelin sheath and are known as myelinated, or medullated, fibres. Myelin is composed of 80 percent lipid and 20 percent protein; cholesterol is one of the major lipids, along with variable amounts of cerebrosides and phospholipids. Concentric layers of these lipids separated by thin layers of protein give rise to a high-resistance, low-capacitance electrical insulator interrupted at intervals by gaps called nodes of Ranvier, where the nerve membrane is exposed to the external environment. In the central nervous system the myelin sheath is formed from glial cells called oligodendrocytes, and in peripheral nerves it is formed from Schwann cells (see The neuroglia, below).
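Why concentric layers give a high-resistance, low-capacitance insulator can be seen from the standard circuit rule for elements in series: resistances add, while capacitances combine reciprocally. The sketch below applies that rule to identical stacked layers. Treating myelin as an ideal series stack is an idealization that the text does not state, and the function name `stacked_layers` and the per-layer values are hypothetical and unitless.

```python
from typing import Tuple


def stacked_layers(n_layers: int, r_layer: float, c_layer: float) -> Tuple[float, float]:
    """Return (total resistance, total capacitance) of identical layers in series."""
    # Series resistances add directly.
    total_r = n_layers * r_layer
    # Series capacitances add as reciprocals, so the total shrinks with each layer.
    total_c = 1.0 / sum(1.0 / c_layer for _ in range(n_layers))
    return total_r, total_c


# Hypothetical comparison: a single layer versus a sheath of 100 layers.
for n in (1, 100):
    r, c = stacked_layers(n, r_layer=1.0, c_layer=1.0)
    print(f"{n:3d} layers: resistance x{r:g}, capacitance x{c:g}")
```

In this idealized picture, wrapping more layers raises resistance and lowers capacitance in the same proportion, which is the combination of properties the paragraph attributes to the sheath.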

While the axon mainly conducts nerve impulses from the soma to the terminal, the terminal itself secretes chemical substances called neurotransmitters. The synthesis of these substances can occur in the terminal itself, but the synthesizing enzymes are formed by ribosomes in the soma and must be transported down the axon to the terminal. This process is known as axoplasmic flow; it occurs in both directions along the axon and may be facilitated by microtubules.

At the terminal of the axon, and sometimes along its length, are specialized structures that form junctions with other neurons and with muscle cells. These junctions are called synapses. Presynaptic terminals, when seen by light microscope, look like small knobs and contain many organelles. The most numerous of these are synaptic vesicles, which, filled with neurotransmitters, are often clumped in areas of the terminal membrane that appear to be thickened. The thickened areas are called presynaptic dense projections, or active zones.

The presynaptic terminal is unmyelinated and is separated from the neuron or muscle cell onto which it impinges by a gap called the synaptic cleft, across which neurotransmitters diffuse when released from the vesicles. In nerve-muscle junctions the synaptic cleft contains a structure called the basal lamina, which holds an enzyme that destroys neurotransmitters and thus regulates the amount that reaches the postsynaptic receptors on the receiving cell. Most knowledge of postsynaptic neurotransmitter receptors comes from studies of the receptor on muscle cells. This receptor, called the end plate, is a glycoprotein composed of five subunits. Other neurotransmitter receptors do not have the same structure, but they are all proteins and probably have subunits with a central channel that is activated by the neurotransmitter.

While the chemically mediated synapse described above forms the majority of synapses in vertebrate nervous systems, there are other types of synapses in vertebrate brains and, in especially great numbers, in invertebrate and fish nervous systems. At these synapses there is no synaptic gap; instead, there are gap junctions, direct channels between neurons that establish a continuity between the cytoplasm of adjacent cells and a structural symmetry between the pre- and postsynaptic sites. Rapid neuronal communication at these junctions is probably electrical in nature. (For further discussion, see Transmission at the synapse, below.)

Dendrites

Besides the axon, neurons have other branches called dendrites that are usually shorter than axons and are unmyelinated. Dendrites are thought to form receiving surfaces for synaptic input from other neurons. In many dendrites these surfaces are provided by specialized structures called dendritic spines, which, by providing discrete regions for the reception of nerve impulses, isolate changes in electrical current from the main dendritic trunk.

The traditional view of dendritic function presumes that only axons conduct nerve impulses and only dendrites receive them, but dendrites can form synapses with dendrites, and axons and even somata can receive impulses. Indeed, some neurons have no axon; in these cases nervous transmission is carried out by the dendrites.

The neuroglia

Neurons form a minority of the cells in the nervous system. Exceeding them in number by at least 10 to 1 are neuroglial cells, which exist in the nervous systems of invertebrates as well as vertebrates. Neuroglia can be distinguished from neurons by their lack of axons and by the presence of only one type of process. In addition, they do not form synapses, and they retain the ability to divide throughout their life span. While neurons and neuroglia lie in close apposition to one another, there are no direct junctional specializations, such as gap junctions, between the two types. Gap junctions do exist between neuroglial cells.

Types of neuroglia

Apart from conventional histological and electron-microscopic techniques, immunologic techniques are used to identify different neuroglial cell types. By staining the cells with antibodies that bind to specific protein constituents of different neuroglia, neurologists have been able to discern two (in some opinions, three) main groups of neuroglia: (1) astrocytes, subdivided into fibrous and protoplasmic types; (2) oligodendrocytes, subdivided into interfascicular and perineuronal types; and sometimes (3) microglia.

Fibrous astrocytes are prevalent among myelinated nerve fibres in the white matter of the central nervous system. Organelles seen in the somata of neurons are also seen in astrocytes, but they appear to be much sparser. These cells are characterized by the presence of numerous fibrils in their cytoplasm. The main processes exit the cell in a radial direction (hence the name astrocyte, meaning “star-shaped cell”), forming expansions and end feet at the surfaces of vascular capillaries.