Math Is Fun Forum

#1201 2021-11-25 14:51:23

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,952

Re: Miscellany

1178) Dice

Dice, singular die, small objects (polyhedrons) used as implements for gambling and the playing of social games. The most common form of die is the cube, with each side marked with from one to six small dots (spots). The spots are arranged in conventional patterns and placed so that spots on opposite sides always add up to seven: one and six, two and five, three and four. There are, however, many dice with differing arrangements of spots or other face designs, such as poker dice and crown and anchor dice, and many other shapes of dice with 4, 5, 7, 8, 10, 12, 16, and 20 or more sides. Dice are generally used to generate a random outcome (most often a number or a combination of numbers) in which the physical design and quantity of the dice thrown determine the mathematical probabilities.
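As a rough illustration of how the number and design of the dice fix the probabilities, the sketch below enumerates every outcome of two standard six-sided dice and counts how often each total occurs. The function name `sum_distribution` is made up for this example, and the code assumes fair dice whose faces are all equally likely.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def sum_distribution(num_dice=2, faces=(1, 2, 3, 4, 5, 6)):
    """Exact probability of each total, assuming every face is equally likely."""
    # Enumerate every ordered combination of faces and tally the totals.
    counts = Counter(sum(roll) for roll in product(faces, repeat=num_dice))
    outcomes = len(faces) ** num_dice
    return {total: Fraction(n, outcomes) for total, n in sorted(counts.items())}


if __name__ == "__main__":
    for total, prob in sum_distribution().items():
        print(total, prob)
```

Changing `num_dice` or `faces` (for example to the four faces of a pyramidal die) gives the distribution for other sets of dice under the same equal-likelihood assumption.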

In most games played with dice, the dice are thrown (rolled, flipped, shot, tossed, or cast), from the hand or from a receptacle called a dice cup, in such a way that they will fall at random. The symbols that face up when the dice come to rest are the relevant ones, and their combination decides, according to the rules of the game being played, whether the thrower (often called the shooter) wins, loses, scores points, continues to throw, or loses possession of the dice to another shooter. Dice have also been used for at least 5,000 years in connection with board games, primarily for the movement of playing pieces.

History

Dice and their forerunners are the oldest gaming implements known to man. Sophocles reported that dice were invented by the legendary Greek Palamedes during the siege of Troy, whereas Herodotus maintained that they were invented by the Lydians in the days of King Atys. Both “inventions” have been discredited by numerous archaeological finds demonstrating that dice were used in many earlier societies.

The precursors of dice were magical devices that primitive people used for the casting of lots to divine the future. The probable immediate forerunners of dice were knucklebones (astragals: the anklebones of sheep, buffalo, or other animals), sometimes with markings on the four faces. Such objects are still used in some parts of the world.

In later Greek and Roman times, most dice were made of bone and ivory; others were of bronze, agate, rock crystal, onyx, jet, alabaster, marble, amber, porcelain, and other materials. Cubical dice with markings practically equivalent to those of modern dice have been found in Chinese excavations from 600 BCE and in Egyptian tombs dating from 2000 BCE. The first written records of dice are found in the ancient Sanskrit epic the Mahabharata, composed in India more than 2,000 years ago. Pyramidal dice (with four sides) are as old as cubical ones; such dice were found with the so-called Royal Game of Ur, one of the oldest complete board games ever discovered, dating back to Sumer in the 3rd millennium BCE. Another variation of dice is teetotums (a type of spinning top).

It was not until the 16th century that dice games were subjected to mathematical analysis—by Italians Girolamo Cardano and Galileo, among others—and the concepts of randomness and probability were conceived. Until then the prevalent attitude had been that dice and similar objects fell the way they did because of the indirect action of gods or supernatural forces.

Manufacture

Almost all modern dice are made of cellulose or another plastic material. There are two kinds: perfect, or casino, dice, which have sharp edges and corners, are commonly made by hand, are true to a tolerance of 0.0001 inch (about 0.00025 cm), and are used mostly in gambling casinos to play craps or other gambling games; and round-cornered, or imperfect, dice, which are machine-made and are generally used to play social and board games.

Cheating with dice

Perfect dice are also known as fair dice, levels, or squares, whereas dice that have been tampered with, or expressly made for cheating, are known as crooked or gaffed dice. Such dice have been found in the tombs of ancient Egypt and the Orient, in prehistoric graves of North and South America, and in Viking graves. There are many forms of crooked dice. Any die that is not a perfect cube will not act according to correct mathematical odds and is called a shape, a brick, or a flat. For example, a cube that has been shaved down on one or more sides so that it is slightly brick-shaped will tend to settle down most often on its larger surfaces, whereas a cube with bevels, on which one or more sides have been trimmed so that they are slightly convex, will tend to roll off of its convex sides. Shapes are the most common of all crooked dice. Loaded dice (called tappers, missouts, passers, floppers, cappers, or spot loaders, depending on how and where extra weight has been applied) may prove to be perfect cubes when measured with calipers, but extra weight just below the surface on some sides will make the opposite sides come up more often than they should. The above forms of dice are classed as percentage dice: they will not always fall with the intended side up but will do so often enough in the long run for the cheaters to win the majority of their bets.

A die with one or more faces each duplicated on its opposite side and certain numbers omitted will produce some numbers in disproportionate frequency and never produce certain others; for example, two dice marked respectively with duplicates of 3-4-5 and 1-5-6 can never produce combinations totaling 2, 3, 7, or 12, which are the only combinations with which one can lose in the game of craps. Such dice, called busters or tops and bottoms, are used as a rule only by accomplished dice cheats, who introduce them into the game by sleight of hand (“switching”). Since it is impossible to see more than three sides of a cube at any one time, tops and bottoms are unlikely to be detected by the inexperienced gambler.
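The claim about the 3-4-5 and 1-5-6 dice can be checked by listing every total the pair can show. This is only a small sketch; the names `DIE_A`, `DIE_B`, and `possible_totals` are invented for the example.

```python
from itertools import product

# Faces as described in the text: each die carries three numbers,
# each duplicated on the opposite side.
DIE_A = (3, 4, 5, 3, 4, 5)
DIE_B = (1, 5, 6, 1, 5, 6)


def possible_totals(die_a, die_b):
    """Return the set of totals the pair of dice can show."""
    return {a + b for a, b in product(die_a, die_b)}


totals = possible_totals(DIE_A, DIE_B)
# Totals in the normal two-dice range (2 to 12) that this pair can never make.
missing = sorted(set(range(2, 13)) - totals)

print(sorted(totals))  # [4, 5, 6, 8, 9, 10, 11]
print(missing)         # [2, 3, 7, 12]
```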

Yet another form of cheating with dice produces controlled shots, in which one or more fair dice are spun, rolled, or thrown so that a certain side or sides will come up, or not come up, depending on the desired effect. Known by such colourful names as the whip shot, the blanket roll, the slide shot, the twist shot, and the Greek shot, this form of cheating requires considerable manual dexterity and practice. Fear of such ability led casinos to install tables with slanted end walls and to insist that dice be thrown so as to rebound from them.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#1202 2021-11-26 14:09:19

- Jai Ganesh

1178) Paraffin wax

Paraffin wax is a white or colorless soft, solid wax. It's made from saturated hydrocarbons. It's often used in skin-softening salon and spa treatments on the hands, cuticles, and feet because it's colorless, tasteless, and odorless. It can also be used to provide pain relief to sore joints and muscles.

Paraffin wax, colourless or white, somewhat translucent, hard wax consisting of a mixture of solid straight-chain hydrocarbons ranging in melting point from about 48° to 66° C (120° to 150° F). Paraffin wax is obtained from petroleum by dewaxing light lubricating oil stocks. It is used in candles, wax paper, polishes, cosmetics, and electrical insulators. It assists in extracting perfumes from flowers, forms a base for medical ointments, and supplies a waterproof coating for wood. In wood and paper matches, it helps to ignite the matchstick by supplying an easily vaporized hydrocarbon fuel.
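The Celsius and Fahrenheit figures quoted for the melting range are rounded ("about"). A minimal sketch of the standard conversion, with a helper name chosen just for this example, shows the unrounded Fahrenheit values:

```python
def celsius_to_fahrenheit(celsius):
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


# Melting range quoted in the text, in degrees Celsius.
low_f = celsius_to_fahrenheit(48)
high_f = celsius_to_fahrenheit(66)
print(f"{low_f:.1f} to {high_f:.1f} degrees F")
```

The results sit close to the rounded 120° to 150° F given in the text.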

Paraffin wax was first produced commercially in 1867, less than 10 years after the first petroleum well was drilled. Paraffin wax precipitates readily from petroleum on chilling. Technical progress has served only to make the separations and filtration more efficient and economical. Purification methods consist of chemical treatment, decolorization by adsorbents, and fractionation of the separated waxes into grades by distillation, recrystallization, or both. Crude oils differ widely in wax content.

Synthetic paraffin wax was introduced commercially after World War II as one of the products obtained in the Fischer–Tropsch reaction, which converts coal gas to hydrocarbons. Snow-white and harder than petroleum paraffin wax, the synthetic product has a unique character and high purity that make it a suitable replacement for certain vegetable waxes and as a modifier for petroleum waxes and for some plastics, such as polyethylene. Synthetic paraffin waxes may be oxidized to yield pale-yellow, hard waxes of high molecular weight that can be saponified with aqueous solutions of organic or inorganic alkalies, such as borax, sodium hydroxide, triethanolamine, and morpholine. These wax dispersions serve as heavy-duty floor wax, as waterproofing for textiles and paper, as tanning agents for leather, as metal-drawing lubricants, as rust preventives, and for masonry and concrete treatment.

#1203 2021-11-27 14:18:03

- Jai Ganesh

1179) Petroleum jelly

Petroleum jelly, petrolatum, white petrolatum, soft paraffin, or multi-hydrocarbon, CAS number 8009-03-8, is a semi-solid mixture of hydrocarbons (with carbon numbers mainly higher than 25), originally promoted as a topical ointment for its healing properties. Vaseline has been a well-known American brand of petroleum jelly since 1870.

After petroleum jelly became a medicine-chest staple, consumers began to use it for cosmetic purposes and for many ailments, including toenail fungus, genital rashes (those not caused by sexually transmitted diseases), nosebleeds, diaper rash, and common colds. Its folkloric medicinal value as a "cure-all" has since been limited by better scientific understanding of appropriate and inappropriate uses. It is recognized by the U.S. Food and Drug Administration (FDA) as an approved over-the-counter (OTC) skin protectant and remains widely used in cosmetic skin care, where it is often loosely referred to as mineral oil.

History

In 1273, Marco Polo described the export of Baku oil by hundreds of camels and ships, both for burning and as an ointment for treatment.

Native Americans discovered the use of petroleum jelly for protecting and healing skin. Sophisticated oil pits had been built as early as 1415–1450 in Western Pennsylvania. In 1859, workers operating the United States of America's first oil rigs noticed a paraffin-like material forming on rigs in the course of investigating malfunctions. Believing the substance hastened healing, the workers used the jelly on cuts and burns.

Robert Chesebrough, a young chemist whose previous work of distilling fuel from the oil of sperm whales had been rendered obsolete by petroleum, went to Titusville, Pennsylvania, US, to see what new materials had commercial potential. Chesebrough took the unrefined black "rod wax", as the drillers called it, back to his laboratory to refine it and explore potential uses. He discovered that by distilling the lighter, thinner oil products from the rod wax, he could create a light-colored gel. Chesebrough patented the process of making petroleum jelly (U.S. Patent 127,568) in 1872. The process involved vacuum distillation of the crude material followed by filtration of the still residue through bone char. To encourage sales, Chesebrough traveled around New York demonstrating the product: he burned his skin with acid or an open flame, spread the ointment on his injuries, and showed past injuries that, he claimed, his miracle product had healed. He opened his first factory in 1870 in Brooklyn using the name Vaseline.

Physical properties

Petroleum jelly is a mixture of hydrocarbons, with a melting point that depends on the exact proportions. The melting point is typically between 40 and 70 °C (105 and 160 °F). It is flammable only when heated to liquid; then the fumes will light, not the liquid itself, so a wick material like leaves, bark, or small twigs is needed to ignite petroleum jelly. It is colorless (or of a pale yellow color when not highly distilled), translucent, and devoid of taste and smell when pure. It does not oxidize on exposure to the air and is not readily acted on by chemical reagents. It is insoluble in water. It is soluble in dichloromethane, chloroform, benzene, diethyl ether, carbon disulfide and turpentine. It acts as a plasticizer on polypropylene (PP), but is compatible with most other plastics. It is a semi-solid, in that it holds its shape indefinitely like a solid, but it can be forced to take the shape of its container without breaking apart, like a liquid, though it does not flow on its own.

Depending on the specific application of petroleum jelly, it may be USP, B.P., or Ph. Eur. grade. This pertains to the processing and handling of the petroleum jelly so it is suitable for medicinal and personal-care applications.

Uses

Most uses of petroleum jelly exploit its lubricating and coating properties, including use on dry lips and dry skin. Below are some examples of the uses of petroleum jelly.

Medical treatment

Vaseline brand First Aid Petroleum Jelly, or carbolated petroleum jelly containing phenol to give the jelly additional antibacterial effect, has been discontinued. During World War II, a variety of petroleum jelly called red veterinary petrolatum, or Red Vet Pet for short, was often included in life raft survival kits. Acting as a sunscreen, it provides protection against ultraviolet rays.

The American Academy of Dermatology recommends keeping skin injuries moist with petroleum jelly to reduce scarring. A verified medicinal use is to protect and prevent moisture loss of the skin of a patient in the initial post-operative period following laser skin resurfacing.

There is one case report published in 1994 indicating petroleum jelly should not be applied to the inside of the nose due to the risk of lipid pneumonia, but this was only ever reported in one patient. However, petroleum jelly is used extensively by otolaryngologists—ear, nose, and throat surgeons—for nasal moisture and epistaxis treatment, and to combat nasal crusting. Large studies have found petroleum jelly applied to the nose for short durations to have no significant side effects.

Historically, it was also consumed for internal use and even promoted as "Vaseline confection".

Skin and hair care

Most petroleum jelly today is used as an ingredient in skin lotions and cosmetics, providing various types of skin care and protection by minimizing friction or reducing moisture loss, or by functioning as a grooming aid (e.g., pomade). It's also used for treating dry scalp and dandruff.

Preventing moisture loss

By reducing moisture loss, petroleum jelly can prevent chapped hands and lips, and soften nail cuticles.

This property is exploited to provide heat insulation: petroleum jelly can be used to keep swimmers warm in water when training, or during channel crossings or long ocean swims. It can prevent chilling of the face due to evaporation of skin moisture during cold weather outdoor sports.

Hair grooming

In the first part of the twentieth century, petroleum jelly, either pure or as an ingredient, was also popular as a hair pomade. When used in a 50/50 mixture with pure beeswax, it makes an effective moustache wax.

Skin lubrication

Petroleum jelly can be used to reduce the friction between skin and clothing during various sport activities, for example to prevent chafing of the seat region of cyclists, or the nipples of long distance runners wearing loose T-shirts, and is commonly used in the groin area of wrestlers and footballers.

Petroleum jelly is commonly used as a personal lubricant, because it does not dry out like water-based lubricants, and has a distinctive "feel", different from that of K-Y and related methylcellulose products. However, it is not recommended for use with condoms during sexual activity, because it swells latex and thus increases the chance of rupture.

Product care and protection:

Coating

Petroleum jelly can be used to coat corrosion-prone items such as metallic trinkets, non-stainless steel blades, and gun barrels prior to storage as it serves as an excellent and inexpensive water repellent. It is used as an environmentally friendly underwater antifouling coating for motor boats and sailing yachts. It was recommended in the Porsche owner's manual as a preservative for light alloy (alleny) anodized Fuchs wheels to protect them against corrosion from road salts and brake dust. “Every three months (after regular cleaning) the wheels should be coated with petroleum jelly.”

Finishing

It can be used to finish and protect wood, much like a mineral oil finish. It is used to condition and protect smooth leather products like bicycle saddles, boots, motorcycle clothing, and used to put a shine on patent leather shoes (when applied in a thin coat and then gently buffed off).

Lubrication

Petroleum jelly can be used to lubricate zippers and slide rules. It was also recommended by Porsche in maintenance training documentation for lubrication (after cleaning) of "Weatherstrips on Doors, Hood, Tailgate, Sun Roof". The publication states, "…before applying a new coat of lubricant…" "Only acid-free lubricants may be used, for example: glycerine, Vaseline, tire mounting paste, etc. These lubricants should be rubbed in, and excessive lubricant wiped off with a soft cloth." It is used in bullet lubricant compounds. Petrolatum is also used as a light lubricating grease and as an anti-seize assembly grease.

Industrial production processes

Petroleum jelly is a useful material when incorporated into candle wax formulas. The petroleum jelly softens the overall blend, allows the candle to incorporate additional fragrance oil, and facilitates adhesion to the sidewall of the glass. Petroleum jelly is used to moisten nondrying modelling clay such as plasticine, as part of a mix of hydrocarbons including those with greater (paraffin wax) and lesser (mineral oil) molecular weights. It is used as a tack reducer additive to printing inks to reduce paper lint "picking" from uncalendered paper stocks. It can be used as a release agent for plaster molds and castings. It is used in the leather industry as a waterproofing cream.

Other:

Explosives

Petroleum jelly is mixed with a high proportion of strong inorganic chlorates due to it acting as a plasticizer and a fuel source. An example of this is Cheddite C which consists of a ratio of 9:1, KClO3 to petroleum jelly. This mixture is unable to detonate without the use of a blasting cap. It is also used as a stabiliser in the manufacture of the propellant Cordite.

Mechanical, barrier functions

Petroleum jelly can be used to fill copper or fibre-optic cables using plastic insulation to prevent the ingress of water, see icky-pick.

Petroleum jelly can be used to coat the inner walls of terrariums to prevent animals crawling out and escaping.

A stripe of petroleum jelly can be used to prevent the spread of a liquid. For example, it can be applied close to the hairline when using a home hair dye kit to prevent the hair dye from irritating or staining the skin. It is also used to prevent diaper rash.

Surface cleansing

Petroleum jelly is used to gently clean a variety of surfaces, ranging from makeup removal from faces to tar stain removal from leather.

Pet care

Petroleum jelly is used to moisturize the paws of dogs. It is a common ingredient in hairball remedies for domestic cats.

Petroleum jelly is slightly soluble in alcohol.

Health

In 2015, the German consumer watchdog Stiftung Warentest analyzed cosmetics containing mineral oils. Using a newly developed detection method, it found high concentrations of Mineral Oil Aromatic Hydrocarbons (MOAH) and even polyaromatics in products containing mineral oils. Vaseline products contained the most MOAH of all tested cosmetics (up to 9%). The European Food Safety Authority regards MOAH and polyaromatics as possibly carcinogenic. Based on the results, Stiftung Warentest advises against using Vaseline or any other product based on mineral oils for lip care.

A later study published in 2017 found at most 1% MOAH in petroleum jelly, and less than 1% in petroleum jelly-based beauty products.

Summary

Petroleum jelly, also called Petrolatum, translucent, yellowish to amber or white, unctuous substance having almost no odour or taste, derived from petroleum and used principally in medicine and pharmacy as a protective dressing and as a substitute for fats in ointments and cosmetics. It is also used in many types of polishes and in lubricating greases, rust preventives, and modeling clay.

Petrolatum is obtained by dewaxing heavy lubricating-oil stocks. It has a melting-point range from 38° to 54° C (100° to 130° F). Chemically, petrolatum is a mixture of hydrocarbons, chiefly of the paraffin series.

#1204 2021-11-28 14:26:10

- Jai Ganesh

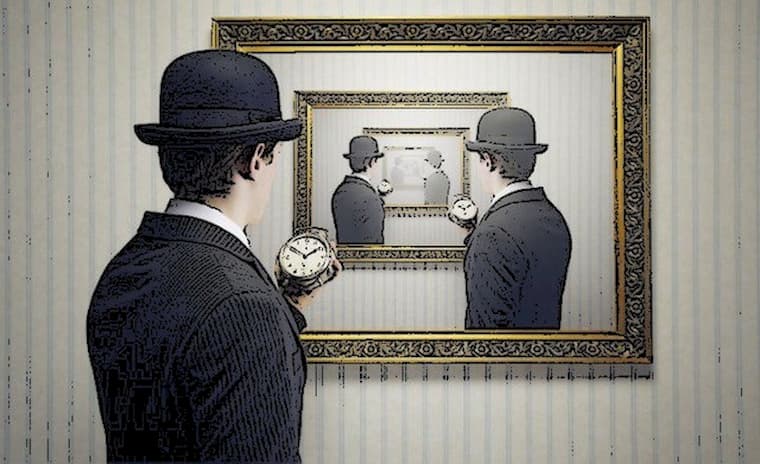

1180) Deja vu

Déjà vu is the feeling that one has lived through the present situation before. Although some interpret déjà vu in a paranormal context, mainstream scientific approaches reject the explanation of déjà vu as "precognition" or "prophecy". It is an anomaly of memory whereby, despite the strong sense of recollection, the time, place, and practical context of the "previous" experience are uncertain or believed to be impossible. Two types of déjà vu are recognized: the pathological déjà vu usually associated with epilepsy or that which, when unusually prolonged or frequent, or associated with other symptoms such as hallucinations, may be an indicator of neurological or psychiatric illness, and the non-pathological type characteristic of healthy people, about two-thirds of whom have had déjà vu experiences. People who travel often or frequently watch films are more likely to experience déjà vu than others. Furthermore, people also tend to experience déjà vu more in fragile conditions or under high pressure, and research shows that the experience of déjà vu also decreases with age.

Medical disorders

Déjà vu is associated with temporal lobe epilepsy. This experience is a neurological anomaly related to epileptic electrical discharge in the brain, creating a strong sensation that an event or experience currently being experienced has already been experienced in the past.

Migraines with aura are also associated with déjà vu.

Early researchers tried to establish a link between déjà vu and mental disorders such as anxiety, dissociative identity disorder and schizophrenia but failed to find correlations of any diagnostic value. No special association has been found between déjà vu and schizophrenia. A 2008 study found that déjà vu experiences are unlikely to be pathological dissociative experiences.

Some research has looked into genetics when considering déjà vu. Although there is not currently a gene associated with déjà vu, the LGI1 gene on chromosome 10 is being studied for a possible link. Certain forms of the gene are associated with a mild form of epilepsy, and, though by no means a certainty, déjà vu, along with jamais vu, occurs often enough during seizures (such as simple partial seizures) that researchers have reason to suspect a link.

Pharmacology

Certain drugs increase the chances of déjà vu occurring in the user, resulting in a strong sensation that an event or experience currently being experienced has already been experienced in the past. Some pharmaceutical drugs, when taken together, have also been implicated in the cause of déjà vu. Taiminen and Jääskeläinen (2001) reported the case of an otherwise healthy male who started experiencing intense and recurrent sensations of déjà vu upon taking the drugs amantadine and phenylpropanolamine together to relieve flu symptoms. He found the experience so interesting that he completed the full course of his treatment and reported it to the psychologists to write up as a case study. Because of the dopaminergic action of the drugs and previous findings from electrode stimulation of the brain (e.g. Bancaud, Brunet-Bourgin, Chauvel, & Halgren, 1994), Taiminen and Jääskeläinen speculate that déjà vu occurs as a result of hyperdopaminergic action in the medial temporal areas of the brain.

Explanations

Split perception explanation

Déjà vu may happen if a person experienced the current sensory experience twice successively. The first input experience is brief, degraded, occluded, or distracted. Immediately following that, the second perception might be familiar because the person naturally related it to the first input. One possibility behind this mechanism is that the first input experience involves shallow processing, which means that only some superficial physical attributes are extracted from the stimulus.

Memory-based explanation:

Implicit memory

Research has associated déjà vu experiences with good memory functions. Recognition memory enables people to realize the event or activity that they are experiencing has happened before. When people experience déjà vu, they may have their recognition memory triggered by certain situations which they have never encountered.

The similarity between a déjà-vu-eliciting stimulus and an existing, or non-existing but different, memory trace may lead to the sensation that an event or experience currently being experienced has already been experienced in the past. Thus, encountering something that evokes the implicit associations of an experience or sensation that "cannot be remembered" may lead to déjà vu. In an effort to reproduce the sensation experimentally, Banister and Zangwill (1941) used hypnosis to give participants posthypnotic amnesia for material they had already seen. When this was later re-encountered, the restricted activation caused thereafter by the posthypnotic amnesia resulted in 3 of the 10 participants reporting what the authors termed "paramnesias".

Researchers study feelings of previous experience through two processes: recollection and familiarity. Recollection-based recognition refers to an ostensible realization that the current situation has occurred before. Familiarity-based recognition refers to the feeling of familiarity with the current situation without being able to identify any specific memory or previous event that could be associated with the sensation.

In 2010, O’Connor, Moulin, and Conway developed another laboratory analog of déjà vu based on two contrasting groups of carefully selected participants: a group under a posthypnotic amnesia (PHA) condition and a group under a posthypnotic familiarity (PHF) condition. The PHA group was based on the work of Banister and Zangwill (1941), and the PHF group built on the results of O’Connor, Moulin, and Conway (2007). Both groups used the same puzzle game, "Railroad Rush Hour", in which one aims to slide a red car through the exit by rearranging and shifting the trucks and cars blocking the road. After completing the puzzle, each participant in the PHA group received, under hypnosis, a posthypnotic amnesia suggestion to forget the game. Each participant in the PHF group was not given the puzzle but received, under hypnosis, a posthypnotic familiarity suggestion that the game would feel familiar. After the hypnosis, all participants were asked to play the puzzle (for the second time in the PHA group) and to report how playing it felt.

In the PHA condition, a participant who reported no memory of completing the puzzle game during hypnosis was scored as passing the suggestion. In the PHF condition, a participant who reported that the puzzle game felt familiar was scored as passing the suggestion. In each condition, five participants passed the suggestion and one did not, a pass rate of 83.33% (five of six). More participants in the PHF group felt a strong sense of familiarity, making comments like "I think I have done this several years ago." Furthermore, more participants in the PHF group experienced strong déjà vu, for example, "I think I have done the exact puzzle before." Three of the six participants in the PHA group felt a sense of déjà vu, and none of them experienced a strong sense of it. These figures are consistent with Banister and Zangwill's findings. Some participants in the PHA group related the familiarity they felt when completing the puzzle to an exact event that had happened before, which is more likely a case of source amnesia. Other participants started to realize that they might have completed the puzzle game during hypnosis, which is more akin to the phenomenon of breaching. In contrast, participants in the PHF group reported feeling confused by the strong familiarity of the puzzle, with the feeling of having played it just sliding across their minds. Overall, the experiences of participants in the PHF group are more likely to resemble déjà vu in everyday life, while the experiences of participants in the PHA group are unlikely to be real déjà vu.
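The percentages in this paragraph come from simple division over a group of six (five who passed plus one who did not). A small sketch of the arithmetic, with variable names chosen only for illustration:

```python
# Figures reported in the text for each condition.
passed, group_size = 5, 6
pass_rate = passed / group_size
print(f"Passed the suggestion: {pass_rate:.2%}")

# PHA participants who reported any sense of deja vu (three of six).
pha_deja_vu_rate = 3 / group_size
print(f"PHA participants reporting deja vu: {pha_deja_vu_rate:.0%}")
```

With groups this small, a single participant shifts the rate by almost 17 percentage points, so the percentages are best read alongside the raw counts.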

A 2012 study in the journal Consciousness and Cognition, which used virtual reality technology to study reported déjà vu experiences, supported this idea. The investigation suggested that similarity between a new scene's spatial layout and the layout of a previously experienced scene in memory (one that fails to be recalled) may contribute to the déjà vu experience. When the previously experienced scene fails to come to mind in response to viewing the new scene, it can still exert an effect: a feeling of familiarity with the new scene, subjectively experienced as a sense of having lived through the event, or of having been there before, despite knowing otherwise.

Cryptomnesia

Another possible explanation for déjà vu is "cryptomnesia", in which learned information is forgotten but nevertheless stored in the brain, and similar occurrences invoke that stored knowledge, producing a feeling of familiarity as though the event had already been experienced. Some experts suggest that memory is a process of reconstruction, rather than a recollection of fixed, established events. This reconstruction comes from stored components, involving elaborations, distortions, and omissions. Each successive recall of an event is merely a recall of the last reconstruction. The proposed sense of recognition (déjà vu) involves achieving a good "match" between the present experience and the stored data. This reconstruction, however, may now differ so much from the original event that it is as though it had never been experienced before, even though it seems similar.

Dual neurological processing

In 1964, Robert Efron of Boston's Veterans Hospital proposed that déjà vu is caused by dual neurological processing caused by delayed signals. Efron found that the brain's sorting of incoming signals is done in the temporal lobe of the brain's left hemisphere. However, signals enter the temporal lobe twice before processing, once from each hemisphere of the brain, normally with a slight delay of milliseconds between them. Efron proposed that if the two signals were occasionally not synchronized properly, then they would be processed as two separate experiences, with the second seeming to be a re-living of the first.

Dream-based explanation

Dreams can also be used to explain the experience of déjà vu, and they are related in three different aspects. Firstly, some déjà vu experiences duplicate the situation in dreams instead of waking conditions, according to the survey done by Brown (2004). Twenty percent of the respondents reported their déjà vu experiences were from dreams and 40% of the respondents reported from both reality and dreams. Secondly, people may experience déjà vu because some elements in their remembered dreams were shown. Research done by Zuger (1966) supported this idea by investigating the relationship between remembered dreams and déjà vu experiences, and suggested that there is a strong correlation. Thirdly, people may experience déjà vu during a dream state, which links déjà vu with dream frequency.

Summary:

What is déjà vu?

The term déjà vu is French and means, literally, "already seen." Those who have experienced the feeling describe it as an overwhelming sense of familiarity with something that shouldn't be familiar at all. Say, for example, you are traveling to England for the first time. You are touring a cathedral, and suddenly it seems as if you have been in that very spot before. Or maybe you are having dinner with a group of friends, discussing some current political topic, and you have the feeling that you've already experienced this very thing -- same friends, same dinner, same topic.

The phenomenon is rather complex, and there are many different theories as to why déjà vu happens. Swiss scholar Arthur Funkhouser suggests that there are several "déjà experiences" and asserts that in order to better study the phenomenon, the nuances between the experiences need to be noted. In the examples mentioned above, Funkhouser would describe the first instance as déjà visité ("already visited") and the second as déjà vécu ("already experienced or lived through").

As much as 70 percent of the population reports having experienced some form of déjà vu. A higher number of incidents occurs in people 15 to 25 years old than in any other age group.

Déjà vu has been firmly associated with temporal-lobe epilepsy. Reportedly, déjà vu can occur just prior to a temporal-lobe seizure. People suffering a seizure of this kind can experience déjà vu during the actual seizure activity or in the moments between convulsions.

Since déjà vu occurs in individuals with and without a medical condition, there is much speculation as to how and why this phenomenon happens. Several psychoanalysts attribute déjà vu to simple fantasy or wish fulfillment, while some psychiatrists ascribe it to a mismatching in the brain that causes the brain to mistake the present for the past. Many parapsychologists believe it is related to a past-life experience. Obviously, there is more investigation to be done.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Offline

#1205 2021-11-29 14:17:41

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,952

Re: Miscellany

1181) Invention

Invention, the act of bringing ideas or objects together in a novel way to create something that did not exist before.

Building models of what might be

Ever since the first prehistoric stone tools, humans have lived in a world shaped by invention. Indeed, the brain appears to be a natural inventor. As part of the act of perception, humans assemble, arrange, and manipulate incoming sensory information so as to build a dynamic, constantly updated model of the outside world. The survival value of such a model lies in the fact that it functions as a template against which to match new experiences, so as to rapidly identify anything anomalous that might be life-threatening. Such a model would also make it possible to predict danger. The predictive act would involve the construction of hypothetical models of the way the world might be at some future point. Such models could include elements that might, for whatever reason, be assembled into novel submodels (inventive ideas).

One of the earliest and most literal examples of this model-building paradigm in action was the ancient Mesopotamian invention of writing. As early as 8000 BCE tiny geometric clay models, used to represent sheep and grain, were kept in clay envelopes, to be used as inventory tallies or else to represent goods during barter. Over time, the tokens were pressed onto the exterior of the wet envelope, which at some point was flattened into a tablet. By about 3100 BCE the impressions had become abstract designs marked on the tablet with a cut reed stalk. These pictograms, known today as cuneiform, were the first writing. And they changed the world.

Inventions almost always cause change. Paleolithic stone weapons made hunting possible and thereby triggered the emergence of permanent top-down command structures. The printing press, introduced by Johannes Gutenberg in the 15th century, once and for all curtailed the traditional authority of elders. The typewriter, brought onto the market by Christopher Latham Sholes in the 1870s, was instrumental in freeing women from housework and changing their social status for good (and also increasing the divorce rate).

What inventors are

Inventors are often extremely observant. In the 1940s Swiss engineer George de Mestral saw tiny hooks on the burrs clinging to his hunting jacket and invented the hook-and-loop fastener system known as Velcro.

Invention can be serendipitous. In the late 1800s a German medical scientist, Paul Ehrlich, spilled some new dye into a Petri dish containing bacilli, saw that the dye selectively stained and killed some of them, and invented chemotherapy. In the mid-1800s an American businessman, Charles Goodyear, dropped a rubber mixture containing sulfur on his hot stove and invented vulcanization.

Inventors do it for money. Austrian chemist Auer von Welsbach, in developing the gas mantle in the 1880s, provided 30 extra years of profitability to the shareholders of gaslight companies (which at the time were threatened by the new electric light).

Inventions are often unintended. In the early 1890s Edward Acheson, an American entrepreneur in the field of electric lighting, was seeking to invent artificial diamonds when an electrified mix of coke and clay produced the ultrahard abrasive Carborundum. In an attempt to develop artificial quinine in the mid-1800s, British chemist William Perkin’s investigation of coal tar instead created the first artificial dye, tyrian purple—which later fell into Ehrlich’s Petri dish.

Inventors solve puzzles. In the course of investigating why suction pumps would lift water only about 9 metres (30 feet), Evangelista Torricelli identified air pressure and invented the barometer.

Inventors are dogged. The American inventor Thomas Edison, who tested thousands of materials before he chose bamboo to make the carbon filament for his incandescent lightbulb, described his work as “one percent inspiration and 99 percent perspiration.” At his laboratory in Menlo Park, New Jersey, Edison’s approach was to identify a potential gap in the market and fill it with an invention. His workers were told, “There’s a way to do it better. Find it.”

Serendipity and inspiration

The key to inventive success often requires being in the right place at the right time. Christopher Latham Sholes and Carlos Glidden took their invention to arms manufacturer Remington just when that company’s production lines were running down after the end of the American Civil War. A quick retool turned Remington into the world’s first typewriter manufacturer.

An invention developed for one purpose will sometimes find use in entirely different circumstances. In medieval Afghanistan somebody invented a leather loop to hang on the side of a camel for use as a step when loading the animal. By 1066 the Normans had put the loop on each side of a horse and invented the stirrup. With their feet thus firmly anchored, at the Battle of Hastings that year Norman knights hit opposing English foot soldiers with their lances and the full weight of the horse without being unseated by the shock of the encounter. The Normans won the battle and took over England (and made English the French-Saxon mix it is today).

One invention can inspire another. Gaslight distribution pipes gave Edison the idea for his electricity network. Perforated cards used to control the Jacquard loom led Herman Hollerith to invent punch cards for tabulator use in the 1890 U.S. census.

The quickening pace of invention

Above all, invention appears primarily to involve a “1 + 1 = 3” process similar to the brain’s model-building activity, in which concepts or techniques are brought together for the first time and the outcome is more than the sum of the parts (e.g., spray + gasoline = carburetor).

The more often ideas come together, the more frequently invention occurs. The rate of invention increased sharply, each time, when the exchange of ideas became easier after the invention of the printing press, telecommunications, the computer, and above all the Internet. Today new fields such as data mining and nanotechnology offer would-be inventors (or semi-intelligent software programs) massive amounts of “1 + 1 = 3” opportunities. As a result, the rate of innovation seems poised to increase dramatically in the coming decades.

It is going to become harder than ever to keep up with the secondary results of invention as the general public gains access to information and technology denied them for millennia and as billions of brains, each with its own natural inventive capabilities, innovate faster than social institutions can adapt. In some cases, as occurred during the global financial crisis of 2007–08, institutions will face severe challenges from the introduction of technologies for which their old-fashioned infrastructures will be ill-prepared. It may be that the only safe way to deal with the potentially disruptive effects of an avalanche of invention, so as to develop the new social processes required to manage a permanent state of change, will be to do what the brain does: invent a comprehensive virtual world in which one can safely test innovative ideas before applying them.

#1206 2021-11-30 00:56:33

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,952

Re: Miscellany

1182) Mensa International

Mensa is the largest and oldest high IQ society in the world. It is a non-profit organization open to people who score at the 98th percentile or higher on a standardised, supervised IQ or other approved intelligence test. Mensa formally comprises national groups and the umbrella organisation Mensa International, with a registered office in Caythorpe, Lincolnshire, England, which is separate from the British Mensa office in Wolverhampton. The word mensa is Latin for 'table', as is symbolised in the organisation's logo, and was chosen to demonstrate the round-table nature of the organisation; the coming together of equals.

History

Roland Berrill, an Australian barrister, and Lancelot Ware, a British scientist and lawyer, founded Mensa at Lincoln College, in Oxford, England in 1946, with the intention of forming a society for the most intelligent, with the only qualification being a high IQ.

The society was ostensibly to be non-political in its aims, and free from all other social distinctions, such as race and religion. However, Berrill and Ware were both disappointed with the resulting society. Berrill had intended Mensa as "an aristocracy of the intellect" and was unhappy that the majority of members came from working or lower-class homes, while Ware said: "I do get disappointed that so many members spend so much time solving puzzles."

American Mensa was the second major branch of Mensa. Its success has been linked to the efforts of early and longstanding organiser Margot Seitelman.

Membership requirement

Mensa's requirement for membership is a score at or above the 98th percentile on certain standardised IQ or other approved intelligence tests, such as the Stanford–Binet Intelligence Scales. The minimum accepted score on the Stanford–Binet is 132, while for the Cattell it is 148. Most IQ tests are designed to yield a mean score of 100 with a standard deviation of 15; the 98th-percentile score under these conditions is 131, assuming a normal distribution.
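The percentile arithmetic above can be checked numerically. What follows is a minimal sketch, assuming scores are normally distributed with a mean of 100. The standard deviations of 16 (older Stanford–Binet editions) and 24 (Cattell) are assumptions not stated in the text, and the helper names `percentile_cutoff` and `two_sd_cutoff` are hypothetical.

```python
from statistics import NormalDist


def percentile_cutoff(sd, mean=100.0, percentile=0.98):
    """Score at the given percentile of a normal distribution (hypothetical helper)."""
    return NormalDist(mu=mean, sigma=sd).inv_cdf(percentile)


def two_sd_cutoff(sd, mean=100.0):
    """Score lying two standard deviations above the mean (hypothetical helper)."""
    return mean + 2 * sd


# Mean 100, SD 15: the 98th-percentile score is about 130.8, which rounds to 131.
print(round(percentile_cutoff(15)))

# Assumed SDs for the two named tests; these values are not given in the text.
for name, sd in [("Stanford-Binet", 16), ("Cattell", 24)]:
    print(name, two_sd_cutoff(sd))
```

Under these assumed deviations, the quoted minimum scores of 132 and 148 coincide with two standard deviations above the mean, which sits slightly below the exact 98th percentile.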

Most national groups test using well-established IQ test batteries, but American Mensa has developed its own application exam. This exam is proctored by American Mensa and does not provide a score comparable to scores on other tests; it serves only to qualify a person for membership. In some national groups, a person may take a Mensa-offered test only once, although one may later submit an application with results from a different qualifying test. The Mensa test is also available in some developing countries such as India and Pakistan, and societies in developing countries have been growing at a rapid pace.

Organizational structure

Mensa International consists of around 134,000 members in 100 countries and in 54 national groups. The national groups issue periodicals, such as Mensa Bulletin, the monthly publication of American Mensa, and Mensa Magazine, the monthly publication of British Mensa. Individuals who live in a country with a national group join the national group, while those living in countries without a recognised chapter may join Mensa International directly.

The largest national groups are:

* American Mensa, with more than 57,000 members,

* British Mensa, with over 21,000 members,

* Mensa Germany, with about 15,000 members.

Larger national groups are further subdivided into local groups. For example, American Mensa has 134 local groups, with the largest having over 2,000 members and the smallest having fewer than 100.

Members may form Special Interest Groups (SIGs) at international, national, and local levels; these SIGs represent a wide variety of interests, ranging from motorcycle clubs to entrepreneurial co-operations. Some SIGs are associated with various geographic groups, whereas others act independently of official hierarchy. There are also electronic SIGs (eSIGs), which operate primarily as email lists, where members may or may not meet each other in person.

The Mensa Foundation, a separate charitable U.S. corporation, edits and publishes its own Mensa Research Journal, in which both Mensans and non-Mensans are published on various topics surrounding the concept and measure of intelligence.

Gatherings

Mensa has many events for members, from the local to the international level. Several countries hold a large event called the Annual Gathering (AG). It is held in a different city every year, with speakers, dances, leadership workshops, children's events, games, and other activities. The American and Canadian AGs are usually held during the American Independence Day (4 July) or Canada Day (1 July) weekends respectively.

Smaller gatherings called Regional Gatherings (RGs), which are held in various cities, attract members from large areas. The largest in the United States is held in the Chicago area around Halloween, notably featuring a costume party for which many members create pun-based costumes.

In 2006, the Mensa World Gathering was held from 8–13 August in Orlando, Florida to celebrate the 60th anniversary of the founding of Mensa. An estimated 2,500 attendees from over 30 countries gathered for this celebration. The International Board of Directors had a formal meeting there.

In 2010, a joint American-Canadian Annual Gathering was held in Dearborn, Michigan, to mark the 50th anniversary of Mensa in North America, one of several times the US and Canada AGs have been combined. Other multinational gatherings are the European Mensas Annual Gathering (EMAG) and the Asian Mensa Gathering (AMG).

Since 1990, American Mensa has sponsored the annual Mensa Mind Games competition, at which the Mensa Select award is given to five board games that are "original, challenging, and well designed".

Individual local groups and their members host smaller events for members and their guests. Lunch or dinner events, lectures, tours, theatre outings, and games nights are all common.

In Europe, international meetings have been held since 2008 under the name EMAG (European Mensa Annual Gathering), starting in Cologne that year. The next meetings were in Utrecht (2009), Prague (2010), Paris (2011), Stockholm (2012), Bratislava (2013), Zürich (2014), Berlin (2015), Kraków (2016), Barcelona (2017), Belgrade (2018) and Ghent (2019). The 2020 event was postponed and took place in 2021 in Brno. Upcoming EMAGs will be held in Strasbourg (2022) and Århus (2023).

In the Asia-Pacific region, there is an Asia-Pacific Mensa Annual Gathering (AMAG), with rotating countries hosting the event. This has included Gold Coast, Australia (2017), Cebu, Philippines (2018), New Zealand (2019), and South Korea (2020).

Publications

All Mensa groups publish members-only newsletters or magazines, which include articles and columns written by members, and information about upcoming Mensa events. Examples include the American Mensa Bulletin, the British Mensa magazine, Serbian MozaIQ, the Australian TableAus, the Mexican El Mensajero, and the French Contacts. Some local or regional groups have their own newsletter, such as those in the United States, UK, Germany, and France.

Mensa International publishes a Mensa World Journal, which "contains views and information about Mensa around the world". This journal is generally included in each national magazine.

Mensa also publishes the Mensa Research Journal, which "highlights scholarly articles and recent research related to intelligence". Unlike most Mensa publications, this journal is available to non-members.

Demographics

Only some national Mensas accept child members; many offer activities, resources, and newsletters specifically geared toward gifted children and their parents. American Mensa's youngest member (Kashe Quest), British Mensa's youngest member (Adam Kirby), and several Australian Mensa members all joined at the age of two. The current youngest member of Mensa is Adam Kirby, from Mitcham, London, who was invited to join at the age of two years and four months and gained full membership at the age of two years and five months. He scored 141 on the Stanford-Binet IQ test. Elise Tan-Roberts of the UK is the youngest person ever to join Mensa, having gained full membership at the age of two years and four months. In 2018, Mehul Garg became the youngest person in a decade to score the maximum of 162 in the test.

American Mensa's oldest member is 102, and British Mensa had a member aged 103.

According to American Mensa's website (as of 2013), 38 percent of its members are baby boomers between the ages of 51 and 68, 31 percent are Gen-Xers or Millennials between the ages of 27 and 48, and more than 2,600 members are under the age of 18. There are more than 1,800 families in the United States with two or more Mensa members. In addition, the American Mensa general membership is "66 percent male, 34 percent female". The aggregate of local and national leadership is distributed equally between the sexes.

Summary

Mensa International, organization of individuals with high IQs that aims to identify, understand, and support intelligence; encourage research into intelligence; and create and seek both social and intellectual experiences for its members. The society was founded in England in 1946 by attorney Roland Berrill and scientist Lance Ware. They chose the word mensa as its name because it means table in Latin and is also reminiscent of the Latin words for mind and month, suggesting the monthly meeting of great minds around a table. Members vary widely in education, income, and occupation. Mensa membership is open to adults and children. To become a Mensan, the only qualification is to report a score at the 98th percentile (meaning a score that is greater than or equal to that achieved by 98 percent of the general population taking the test) on an approved intelligence test that has been administered and supervised by a qualified examiner. Mensa also administers such tests itself.

Membership benefits include opportunities to participate in discussion groups, social events, and annual meetings. Mensa International offers some 200 special interest groups (SIGs) devoted to a variety of scholarly disciplines and recreational pursuits. Individual Mensa chapters organize workshops and special events, publish newsletters and magazines, and conduct annual conferences.

American Mensa was founded in 1960 by Peter Sturgeon. Its national office is in Arlington, Texas. There are chapters in large cities such as New York, Chicago, and Los Angeles, and regional groups in many areas of the United States. The Mensa Education & Research Foundation (MERF) was established in 1971 to promote Mensa’s mission. It grants awards and scholarships and publishes the Mensa Research Journal.

#1207 2021-12-01 00:03:25

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,952

Re: Miscellany

1183) Subway

Subway, also called underground, tube, or métro, underground railway system used to transport large numbers of passengers within urban and suburban areas. Subways are usually built under city streets for ease of construction, but they may take shortcuts and sometimes must pass under rivers. Outlying sections of the system usually emerge aboveground, becoming conventional railways or elevated transit lines. Subway trains are usually made up of a number of cars operated on the multiple-unit system.

The first subway system was proposed for London by Charles Pearson, a city solicitor, as part of a city-improvement plan shortly after the opening of the Thames Tunnel in 1843. After 10 years of discussion, Parliament authorized the construction of 3.75 miles (6 km) of underground railway between Farringdon Street and Bishop’s Road, Paddington. Work on the Metropolitan Railway began in 1860 by cut-and-cover methods, that is, by making trenches along the streets, giving them brick sides, providing girders or a brick arch for the roof, and then restoring the roadway on top. On Jan. 10, 1863, the line was opened using steam locomotives that burned coke and, later, coal; despite sulfurous fumes, the line was a success from its opening, carrying 9,500,000 passengers in the first year of its existence. In 1886 the City of London and Southwark Subway Company (later the City and South London Railway) began work on their “tube” line, using a tunneling shield developed by J.H. Greathead. The tunnels were driven at a depth sufficient to avoid interference with building foundations or public-utility works, and there was no disruption of street traffic. The original plan called for cable operation, but electric traction was substituted before the line was opened. Operation began on this first electric underground railway in 1890 with a uniform fare of twopence for any journey on the 3-mile (5-kilometre) line. In 1900 Charles Tyson Yerkes, an American railway magnate, arrived in London, and he was subsequently responsible for the construction of more tube railways and for the electrification of the cut-and-cover lines. During World Wars I and II the tube stations performed the unplanned function of air-raid shelters.

Many other cities followed London’s lead. In Budapest, a 2.5-mile (4-kilometre) electric subway was opened in 1896, using single cars with trolley poles; it was the first subway on the European continent. Considerable savings were achieved in its construction over earlier cut-and-cover methods by using a flat roof with steel beams instead of a brick arch, and therefore, a shallower trench.

In Paris, the Métro (Chemin de Fer Métropolitain de Paris) was started in 1898, and the first 6.25 miles (10 km) were opened in 1900. The rapid progress was attributed to the wide streets overhead and the modification of the cut-and-cover method devised by the French engineer Fulgence Bienvenüe. Vertical shafts were sunk at intervals along the route; from there, side trenches were dug, and masonry foundations to support wooden shuttering were placed immediately under the road surfaces. Construction of the roof arch then proceeded with relatively little disturbance to street traffic. This method, while it is still used in Paris, has not been widely copied in subway construction elsewhere.

In the United States the first practical subway line was constructed in Boston between 1895 and 1897. It was 1.5 miles (2.4 km) long and at first used trolley streetcars, or tramcars. Later, Boston acquired conventional subway trains. New York City opened the first section of what was to become the largest system in the world on Oct. 27, 1904. In Philadelphia, a subway system was opened in 1907, and Chicago’s system opened in 1943. Moscow constructed its original system in the 1930s.

In Canada, Toronto opened a subway in 1954; a second system was constructed in Montreal during the 1960s using Paris-type rubber-tired cars. In Mexico City the first stage of a combined underground and surface metro system (designed after the Paris Métro) was opened in 1969. In South America, the Buenos Aires subway opened in 1913. In Japan, the Tokyo subway opened in 1927, the Kyōto in 1931, the Ōsaka in 1933, and the Nagoya in 1957.

Automatic trains, designed, built, and operated using aerospace and computer technology, have been developed in a few metropolitan areas, including a section of the London subway system, the Victoria Line (completed 1971). The first rapid-transit system to be designed for completely automatic operation is BART (Bay Area Rapid Transit) in the San Francisco Bay area, completed in 1976. Trains are operated by remote control, requiring only one crewman per train to stand by in case of computer failure. The Washington, D.C., Metro, with an automatic railway control system and 600-foot- (183-metre-) long underground coffered-vault stations, opened its first subway line in 1976. Air-conditioned trains with lightweight aluminum cars, smoother and faster rides due to refinements in track construction and car-support systems, and attention to the architectural appearance of and passenger safety in underground stations are other features of modern subway construction.

London Underground

The London Underground (also known simply as the Underground, or by its nickname the Tube) is a rapid transit system serving Greater London and some parts of the adjacent counties of Buckinghamshire, Essex and Hertfordshire in the United Kingdom.

The Underground has its origins in the Metropolitan Railway, the world's first underground passenger railway. Opened in January 1863, it is now part of the Circle, Hammersmith & City and Metropolitan lines. The first line to operate underground electric traction trains, the City & South London Railway in 1890, is now part of the Northern line. The network has expanded to 11 lines, and in 2020/21 was used for 296 million passenger journeys, making it the world's 12th busiest metro system. The 11 lines collectively handle up to 5 million passenger journeys a day and serve 272 stations.

The system's first tunnels were built just below the ground, using the cut-and-cover method; later, smaller, roughly circular tunnels—which gave rise to its nickname, the Tube—were dug through at a deeper level. The system has 272 stations and 250 miles (400 km) of track. Despite its name, only 45% of the system is under the ground: much of the network in the outer environs of London is on the surface. In addition, the Underground does not cover most southern parts of Greater London, and there are only 31 stations south of the River Thames.

The early tube lines, originally owned by several private companies, were brought together under the "UndergrounD" brand in the early 20th century, and eventually merged along with the sub-surface lines and bus services in 1933 to form London Transport under the control of the London Passenger Transport Board (LPTB). The current operator, London Underground Limited (LUL), is a wholly owned subsidiary of Transport for London (TfL), the statutory corporation responsible for the transport network in London. As of 2015, 92% of operational expenditure is covered by passenger fares. The Travelcard ticket was introduced in 1983 and Oyster, a contactless ticketing system, in 2003. Contactless bank card payments were introduced in 2014, making the Underground the first public transport system in the world to do so.

The LPTB commissioned many new station buildings, posters and public artworks in a modernist style. The schematic Tube map, designed by Harry Beck in 1931, was voted a national design icon in 2006 and now includes other TfL transport systems such as the Docklands Light Railway, London Overground, TfL Rail, and Tramlink. Other famous London Underground branding includes the roundel and the Johnston typeface, created by Edward Johnston in 1916.

#1208 2021-12-01 16:13:30

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,952

Re: Miscellany

1184) Wax

Waxes are a diverse class of organic compounds that are lipophilic, malleable solids near ambient temperatures. They include higher alkanes and lipids, typically with melting points above about 40 °C (104 °F), melting to give low viscosity liquids. Waxes are insoluble in water but soluble in organic, nonpolar solvents. Natural waxes of different types are produced by plants and animals and occur in petroleum.

Chemistry

Waxes are organic compounds that characteristically consist of long aliphatic alkyl chains, although aromatic compounds may also be present. Natural waxes may contain unsaturated bonds and include various functional groups such as fatty acids, primary and secondary alcohols, ketones, aldehydes and fatty acid esters. Synthetic waxes often consist of homologous series of long-chain aliphatic hydrocarbons (alkanes or paraffins) that lack functional groups.

Plant and animal waxes

Waxes are synthesized by many plants and animals. Those of animal origin typically consist of wax esters derived from a variety of fatty acids and carboxylic alcohols. In waxes of plant origin, characteristic mixtures of unesterified hydrocarbons may predominate over esters. The composition depends not only on species, but also on geographic location of the organism.

Animal waxes

The best-known animal wax is beeswax, used in constructing the honeycombs of beehives, but other insects also secrete waxes. A major component of beeswax is myricyl palmitate, which is an ester of triacontanol and palmitic acid. Its melting point is 62–65 °C. Spermaceti occurs in large amounts in the head oil of the sperm whale. One of its main constituents is cetyl palmitate, another ester of a fatty acid and a fatty alcohol. Lanolin is a wax obtained from wool, consisting of esters of sterols.

Plant waxes

Plants secrete waxes into and on the surface of their cuticles as a way to control evaporation, wettability and hydration. The epicuticular waxes of plants are mixtures of substituted long-chain aliphatic hydrocarbons, containing alkanes, alkyl esters, fatty acids, primary and secondary alcohols, diols, ketones and aldehydes. From the commercial perspective, the most important plant wax is carnauba wax, a hard wax obtained from the Brazilian palm Copernicia prunifera. Containing the ester myricyl cerotate, it has many applications, such as confectionery and other food coatings, car and furniture polish, floss coating, and surfboard wax. Other more specialized vegetable waxes include jojoba oil, candelilla wax and ouricury wax.

Modified plant and animal waxes

Plant and animal based waxes or oils can undergo selective chemical modifications to produce waxes with more desirable properties than are available in the unmodified starting material. This approach relies on green chemistry methods, including olefin metathesis and enzymatic reactions, and can be used to produce waxes from inexpensive starting materials like vegetable oils.

Petroleum derived waxes

Although many natural waxes contain esters, paraffin waxes are hydrocarbons, mixtures of alkanes usually in a homologous series of chain lengths. These materials represent a significant fraction of petroleum. They are refined by vacuum distillation. Paraffin waxes are mixtures of saturated n- and iso- alkanes, naphthenes, and alkyl- and naphthene-substituted aromatic compounds. A typical alkane paraffin wax chemical composition comprises hydrocarbons with the general formula CnH2n+2, such as hentriacontane, C31H64. The degree of branching has an important influence on the properties. Microcrystalline wax is a less widely produced petroleum-based wax that contains a higher percentage of isoparaffinic (branched) hydrocarbons and naphthenic hydrocarbons.
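As a small worked check of the general formula CnH2n+2, here is a minimal sketch (function names and the rounded atomic masses are assumptions for illustration). It covers acyclic alkanes only, both normal and branched, since branching does not change the atom count; naphthenes (cycloalkanes) have two fewer hydrogens and are not covered.

```python
# Rounded standard atomic masses in g/mol (assumed values for illustration).
CARBON_MASS = 12.011
HYDROGEN_MASS = 1.008


def alkane_formula(n: int) -> str:
    """Molecular formula CnH2n+2 of an acyclic alkane with n carbon atoms."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return f"C{n}H{2 * n + 2}"


def alkane_molar_mass(n: int) -> float:
    """Approximate molar mass in g/mol of an acyclic alkane with n carbons."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return n * CARBON_MASS + (2 * n + 2) * HYDROGEN_MASS


# Hentriacontane, the example from the text, has n = 31.
print(alkane_formula(31))               # C31H64
print(round(alkane_molar_mass(31), 2))  # about 436.85 g/mol
```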

Millions of tons of paraffin waxes are produced annually. They are used in foods (such as chewing gum and cheese wrapping), in candles and cosmetics, as non-stick and waterproofing coatings and in polishes.

Montan wax

Montan wax is a fossilized wax extracted from coal and lignite. It is very hard, reflecting the high concentration of saturated fatty acids and alcohols. Although dark brown and odorous, it can be purified and bleached to give commercially useful products.

Polyethylene and related derivatives

As of 1995, about 200 million kilograms of polyethylene waxes were consumed annually.

Polyethylene waxes are manufactured by one of three methods:

* The direct polymerization of ethylene, optionally with co-monomers;

* The thermal degradation of high molecular weight polyethylene resin;

* The recovery of low molecular weight fractions from high molecular weight resin production.

Each production technique generates products with slightly different properties. Key properties of low molecular weight polyethylene waxes are viscosity, density and melting point.

Polyethylene waxes produced by means of degradation or recovery from polyethylene resin streams contain very low molecular weight materials that must be removed to prevent volatilization and potential fire hazards during use. Polyethylene waxes manufactured by these methods are usually stripped of low molecular weight fractions to yield a flash point >500°F (>260°C). Many polyethylene resin plants produce a low molecular weight stream often referred to as Low Polymer Wax (LPW). LPW is unrefined; it contains volatile oligomers and corrosive catalyst, and may contain other foreign material and water. Refining of LPW to produce a polyethylene wax involves removal of oligomers and hazardous catalyst. Proper refining of LPW is especially important when the resulting wax is used in applications requiring FDA or other regulatory certification.

Uses

Waxes are mainly consumed industrially as components of complex formulations, often for coatings. The main use of polyethylene and polypropylene waxes is in the formulation of colourants for plastics. Waxes confer matting effects and wear resistance to paints. Polyethylene waxes are incorporated into inks in the form of dispersions to decrease friction. They are employed as release agents, find use as slip agents in furniture, and confer corrosion resistance.

Candles

Waxes such as paraffin wax or beeswax, and hard fats such as tallow, are used to make candles for lighting and decoration. Soy wax, made by hydrogenating soybean oil, is another fuel used in candle manufacturing.

Wax products

Waxes are used as finishes and coatings for wood products. Beeswax is frequently used as a lubricant on drawer slides where wood-to-wood contact occurs.

Other uses

Sealing wax was used to close important documents in the Middle Ages. Wax tablets were used as writing surfaces. Several types of wax were distinguished in the Middle Ages: four named kinds (Ragusan, Montenegro, Byzantine, and Bulgarian), "ordinary" waxes from Spain, Poland, and Riga, unrefined waxes, and colored waxes (red, white, and green). Waxes are used to make wax paper, impregnating and coating paper and card to waterproof it, make it resistant to staining, or modify its surface properties. Waxes are also used in shoe polishes, wood polishes, and automotive polishes, as mold release agents in mold making, as a coating for many cheeses, and to waterproof leather and fabric. Wax has been used since antiquity as a temporary, removable model in lost-wax casting of gold, silver and other materials.

Wax with colorful pigments added has been used as a medium in encaustic painting, and is used today in the manufacture of crayons, china markers and colored pencils. Carbon paper, used for making duplicate typewritten documents, was coated with carbon black suspended in wax, typically montan wax, but has largely been superseded by photocopiers and computer printers. In another context, lipstick and mascara are blends of various fats and waxes colored with pigments, and both beeswax and lanolin are used in other cosmetics. Ski wax is used in skiing and snowboarding. Wax is also often used in surfing and skateboarding to enhance performance.

Some waxes are considered food-safe and are used to coat wooden cutting boards and other items that come into contact with food. Beeswax or coloured synthetic wax is used to decorate Easter eggs in Romania, Ukraine, Poland, Lithuania and the Czech Republic. Paraffin wax is used in making chocolate covered sweets.

Wax is also used in wax bullets, which are used as simulation aids.

Specific examples:

Animal waxes

* Beeswax - produced by honey bees

* Chinese wax - produced by the scale insect Ceroplastes ceriferus

* Lanolin (wool wax) - from the sebaceous glands of sheep

* Shellac wax - from the lac insect Kerria lacca

* Spermaceti - from the head cavities and blubber of the sperm whale

Vegetable waxes

* Bayberry wax - from the surface wax of the fruits of the bayberry shrub, Myrica faya

* Candelilla wax - from the Mexican shrubs Euphorbia cerifera and Euphorbia antisyphilitica

* Carnauba wax - from the leaves of the Carnauba palm, Copernicia cerifera

* Castor wax - catalytically hydrogenated castor oil

* Esparto wax - a byproduct of making paper from esparto grass (Macrochloa tenacissima)

* Japan wax - a vegetable triglyceride (not a true wax), from the berries of Rhus and Toxicodendron species

* Jojoba oil - a liquid wax ester, from the seed of Simmondsia chinensis.

* Ouricury wax - from the Brazilian feather palm, Syagrus coronata.

* Rice bran wax - obtained from rice bran (Oryza sativa)

* Soy wax - from soybean oil

* Tallow Tree wax - from the seeds of the tallow tree Triadica sebifera.

Mineral waxes

* Ceresin waxes

* Montan wax - extracted from lignite and brown coal

* Ozocerite - found in lignite beds

* Peat waxes

Petroleum waxes

* Paraffin wax - made of long-chain alkane hydrocarbons

* Microcrystalline wax - with very fine crystalline structure

Summary

Wax, any of a class of pliable substances of animal, plant, mineral, or synthetic origin that differ from fats in being less greasy, harder, and more brittle and in containing principally compounds of high molecular weight (e.g., fatty acids, alcohols, and saturated hydrocarbons). Waxes share certain characteristic physical properties. Many of them melt at moderate temperatures (i.e., between about 35° and 100° C, or 95° and 212° F) and form hard films that can be polished to a high gloss, making them ideal for use in a wide array of polishes. They do share some of the same properties as fats. Waxes and fats, for example, are soluble in the same solvents and both leave grease spots on paper.

Notwithstanding such physical similarities, animal and plant waxes differ chemically from petroleum, or hydrocarbon, waxes and synthetic waxes. They are esters that result from a reaction between fatty acids and certain alcohols other than glycerol, either of a group called sterols (e.g., cholesterol) or an alcohol containing 12 or a larger even number of carbon atoms in a straight chain (e.g., cetyl alcohol). The fatty acids found in animal and vegetable waxes are almost always saturated. They vary from lauric to octatriacontanoic acid (C37H75COOH). Saturated alcohols from C12 to C36 have been identified in various waxes. Several dihydric (two hydroxyl groups) alcohols have been separated, but they do not form a large proportion of any wax. Also, several unidentified branched-chain fatty acids and alcohols have been found in minor quantities. Several cyclic sterols (e.g., cholesterol and analogues) make up major portions of wool wax.

Only a few vegetable waxes are produced in commercial quantities. Carnauba wax, which is very hard and is used in some high-gloss polishes, is probably the most important of these. It is obtained from the surface of the fronds of a species of palm tree native to Brazil. A similar wax, candelilla wax, is obtained commercially from the surface of the candelilla plant, which grows wild in Texas and Mexico. Sugarcane wax, which occurs on the surface of sugarcane leaves and stalks, is obtainable from the sludges of cane-juice processing. Its properties and uses are similar to those of carnauba wax, but it is normally dark in colour and contains more impurities. Other cuticle waxes occur in trace quantities in such vegetable oils as linseed, soybean, corn (maize), and sesame. They are undesirable because they may precipitate when the oil stands at room temperature, but they can be removed by cooling and filtering. Cuticle wax accounts for the beautiful gloss of polished apples.

Beeswax, the most widely distributed and important animal wax, is softer than the waxes mentioned and finds little use in gloss polishes. It is used, however, for its gliding and lubricating properties as well as in waterproofing formulations. Wool wax, the main constituent of the fat that covers the wool of sheep, is obtained as a by-product in scouring raw wool. Its purified form, called lanolin, is used as a pharmaceutical or cosmetic base because it is easily assimilated by the human skin. Sperm oil and spermaceti, both obtained from sperm whales, are liquid at ordinary temperatures and are used mainly as lubricants.

About 90 percent of the wax used for commercial purposes is recovered from petroleum by dewaxing lubricating-oil stocks. Petroleum wax is generally classified into three principal types: paraffin, microcrystalline, and petrolatum. Paraffin is widely used in candles, crayons, and industrial polishes. It is also employed for insulating components of electrical equipment and for waterproofing wood and certain other materials. Microcrystalline wax is used chiefly for coating paper for packaging, and petrolatum is employed in the manufacture of medicinal ointments and cosmetics. Synthetic wax is derived from ethylene glycol, an organic compound commercially produced from ethylene gas. It is commonly blended with petroleum waxes to manufacture a variety of products.

#1209 2021-12-02 13:20:16

- Jai Ganesh

Re: Miscellany

1185) Perfume

Perfume is a mixture of fragrant essential oils or aroma compounds (fragrances), fixatives and solvents, usually in liquid form, used to give the human body, animals, food, objects, and living spaces an agreeable scent. The 1939 Nobel Laureate for Chemistry, Leopold Ružička, stated in 1945 that "right from the earliest days of scientific chemistry up to the present time perfumes have substantially contributed to the development of organic chemistry as regards methods, systematic classification, and theory."

Ancient texts and archaeological excavations show the use of perfumes in some of the earliest human civilizations. Modern perfumery began in the late 19th century with the commercial synthesis of aroma compounds such as vanillin or coumarin, which allowed for the composition of perfumes with smells previously unattainable solely from natural aromatics.

Dilution classes