Math Is Fun Forum


#1376 2022-05-11 14:28:14

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,375

Re: Miscellany

1350) Polycarbonate

Summary

Polycarbonate (PC) is a tough, transparent synthetic resin employed in safety glass, eyeglass lenses, and compact discs, among other applications. PC is a special type of polyester used as an engineering plastic owing to its exceptional impact resistance, tensile strength, ductility, dimensional stability, and optical clarity. It is marketed under trademarks such as Lexan and Makrolon.

PC was introduced in 1958 by Bayer AG of Germany and in 1960 by the General Electric Company of the United States. As developed by these companies, PC is produced by a polymerization reaction between bisphenol A, a crystalline solid derived from benzene, and phosgene, a highly reactive and toxic gas made by reacting carbon monoxide with chlorine. The resultant polymers (long, multiple-unit molecules) are made up of repeating units containing two aromatic (benzene) rings and connected by carbonate ester (O-CO-O) groups.

Mainly by virtue of the aromatic rings incorporated into the polymer chain, PC has exceptional stiffness. It is also highly transparent, transmitting approximately 90 percent of visible light. Since the mid-1980s this property, in combination with the excellent flowing properties of the polymer when molten, has found growing application in the injection-molding of compact discs. Because PC has an impact strength considerably higher than that of most plastics, it is also fabricated into large carboys for water, shatterproof windows, safety shields, and safety helmets.

Details

Polycarbonates (PC) are a group of thermoplastic polymers containing carbonate groups in their chemical structures. Polycarbonates used in engineering are strong, tough materials, and some grades are optically transparent. They are easily worked, molded, and thermoformed. Because of these properties, polycarbonates find many applications. Polycarbonates do not have a unique resin identification code (RIC) and are identified as "Other", 7 on the RIC list. Products made from polycarbonate can contain the precursor monomer bisphenol A (BPA).

Structure

Structure of the dicarbonate (PhOC(O)OC6H4)2CMe2, derived from bis(phenol-A) and two equivalents of phenol. This molecule reflects a subunit of a typical polycarbonate derived from bis(phenol-A).

Carbonate esters have planar OC(OC)2 cores, which confer rigidity. The unique O=C bond is short (1.173 Å in the depicted example), while the C-O bonds are more ether-like (1.326 Å in the same example). Polycarbonates received their name because they are polymers containing carbonate groups (−O−(C=O)−O−). A balance of useful features, including temperature resistance, impact resistance and optical properties, positions polycarbonates between commodity plastics and engineering plastics.

Properties and processing

Polycarbonate is a durable material. Although it has high impact-resistance, it has low scratch-resistance. Therefore, a hard coating is applied to polycarbonate eyewear lenses and polycarbonate exterior automotive components. The characteristics of polycarbonate are comparable to those of polymethyl methacrylate (PMMA, acrylic), but polycarbonate is stronger and holds up longer at extreme temperatures. Thermally processed material is usually totally amorphous, and as a result is highly transparent to visible light, with better light transmission than many kinds of glass.

Polycarbonate has a glass transition temperature of about 147 °C (297 °F), so it softens gradually above this point and flows above about 155 °C (311 °F). Tools must be held at high temperatures, generally above 80 °C (176 °F) to make strain-free and stress-free products. Low molecular mass grades are easier to mold than higher grades, but their strength is lower as a result. The toughest grades have the highest molecular mass, but are more difficult to process.
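The Fahrenheit figures given in parentheses above follow from the usual conversion F = C × 9/5 + 32. A minimal Python sketch that reproduces the rounded values (the function name `celsius_to_fahrenheit` and the dictionary labels are illustrative choices, not anything from the source):

```python
def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9.0 / 5.0 + 32.0


# Temperatures quoted in the text, in degrees Celsius.
quoted = {
    "glass transition": 147,
    "onset of flow": 155,
    "minimum tool temperature": 80,
}

for label, celsius in quoted.items():
    fahrenheit = celsius_to_fahrenheit(celsius)
    # Round to whole degrees, matching the precision used in the text.
    print(f"{label}: {celsius} °C = {fahrenheit:.0f} °F")
```

The glass transition works out to 296.6 °F, which the text rounds to 297 °F; the other two values are exact.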

Unlike most thermoplastics, polycarbonate can undergo large plastic deformations without cracking or breaking. As a result, it can be processed and formed at room temperature using sheet metal techniques, such as bending on a brake. Even for sharp angle bends with a tight radius, heating may not be necessary. This makes it valuable in prototyping applications where transparent or electrically non-conductive parts are needed, which cannot be made from sheet metal. PMMA/Acrylic, which is similar in appearance to polycarbonate, is brittle and cannot be bent at room temperature.

Main transformation techniques for polycarbonate resins:

* extrusion into tubes, rods and other profiles including multiwall

* extrusion with cylinders (calenders) into sheets 0.5–20 mm (0.020–0.787 in) thick and films below 1 mm (0.039 in) thick, which can be used directly or manufactured into other shapes using thermoforming or secondary fabrication techniques, such as bending, drilling, or routing. Because of its chemical properties, polycarbonate is not well suited to laser cutting.

* injection molding into ready articles

Polycarbonate may become brittle when exposed to ionizing radiation above 25 kGy (25 kJ/kg).

Applications:

Electronic components

Polycarbonate is mainly used for electronic applications that capitalize on its collective safety features. Being a good electrical insulator and having heat-resistant and flame-retardant properties, it is used in various products associated with electrical and telecommunications hardware. It can also serve as a dielectric in high-stability capacitors. However, commercial manufacture of polycarbonate capacitors mostly stopped after sole manufacturer Bayer AG stopped making capacitor-grade polycarbonate film at the end of 2000.

Construction materials

The second largest consumer of polycarbonates is the construction industry, e.g. for domelights, flat or curved glazing, roofing sheets and sound walls. Polycarbonates are used to create materials used in buildings that need to be durable but light.

3D Printing

Polycarbonates are used extensively in fused deposition modeling (FDM) 3D printing, producing durable, strong plastic products with a high melting point. Polycarbonate is relatively difficult for casual hobbyists to print compared to thermoplastics such as polylactic acid (PLA) or acrylonitrile butadiene styrene (ABS) because of the high melting point, difficulty with print bed adhesion, tendency to warp during printing, and tendency to absorb moisture in humid environments. Despite these issues, 3D printing using polycarbonates is common in the professional community.

Data storage

A major polycarbonate market is the production of compact discs, DVDs, and Blu-ray discs. These discs are produced by injection-molding polycarbonate into a mold cavity that has on one side a metal stamper containing a negative image of the disc data, while the other mold side is a mirrored surface. Typical products of sheet/film production include applications in advertisement (signs, displays, poster protection).

Automotive, aircraft, and security components

In the automotive industry, injection-molded polycarbonate can produce very smooth surfaces that make it well-suited for sputter deposition or evaporation deposition of aluminium without the need for a base-coat. Decorative bezels and optical reflectors are commonly made of polycarbonate. Its low weight and high impact resistance have made polycarbonate the dominant material for automotive headlamp lenses. However, automotive headlamps require outer surface coatings because of its low scratch resistance and susceptibility to ultraviolet degradation (yellowing). The use of polycarbonate in automotive applications is limited to low stress applications. Stress from fasteners, plastic welding and molding render polycarbonate susceptible to stress corrosion cracking when it comes in contact with certain accelerants such as salt water and plastisol. It can be laminated to make bullet-proof "glass", although "bullet-resistant" is more accurate for the thinner windows, such as are used in bullet-resistant windows in automobiles. The thicker barriers of transparent plastic used in teller's windows and barriers in banks are also polycarbonate.

So-called "theft-proof" large plastic packaging for smaller items, which cannot be opened by hand, is typically made from polycarbonate.

The canopy of the Lockheed Martin F-22 Raptor jet fighter is made from a piece of high optical quality polycarbonate, and is the largest piece of its type formed in the world.

Niche applications

Polycarbonate, being a versatile material with attractive processing and physical properties, has attracted myriad smaller applications. The use of injection molded drinking bottles, glasses and food containers is common, but the use of BPA in the manufacture of polycarbonate has stirred concerns about food contact applications, leading to development and use of "BPA-free" plastics in various formulations.

Laboratory safety goggles

Polycarbonate is commonly used in eye protection, as well as in other projectile-resistant viewing and lighting applications that would normally indicate the use of glass, but require much higher impact-resistance. Polycarbonate lenses also protect the eye from UV light. Many kinds of lenses are manufactured from polycarbonate, including automotive headlamp lenses, lighting lenses, sunglass/eyeglass lenses, swimming goggles and SCUBA masks, and safety glasses/goggles/visors including visors in sporting helmets/masks and police riot gear (helmet visors, riot shields, etc.). Windscreens in small motorized vehicles are commonly made of polycarbonate, such as for motorcycles, ATVs, golf carts, and small airplanes and helicopters.

The light weight of polycarbonate as opposed to glass has led to development of electronic display screens that replace glass with polycarbonate, for use in mobile and portable devices. Such displays include newer e-ink and some LCD screens, though CRT, plasma screen and other LCD technologies generally still require glass for its higher melting temperature and its ability to be etched in finer detail.

As more and more governments are restricting the use of glass in pubs and clubs due to the increased incidence of glassings, polycarbonate glasses are becoming popular for serving alcohol because of their strength, durability, and glass-like feel.

Other miscellaneous items include durable, lightweight luggage, MP3/digital audio player cases, ocarinas, computer cases, riot shields, instrument panels, tealight candle containers and food blender jars. Many toys and hobby items are made from polycarbonate parts, like fins, gyro mounts, and flybar locks in radio-controlled helicopters, and transparent LEGO (ABS is used for opaque pieces).

Standard polycarbonate resins are not suitable for long term exposure to UV radiation. To overcome this, the primary resin can have UV stabilisers added. These grades are sold as UV stabilized polycarbonate to injection moulding and extrusion companies. Other applications, including polycarbonate sheets, may have the anti-UV layer added as a special coating or a coextrusion for enhanced weathering resistance.

Polycarbonate is also used as a printing substrate for nameplates and other forms of industrial-grade under-printed products. The polycarbonate provides a barrier to wear, the elements, and fading.

Medical applications

Many polycarbonate grades are used in medical applications and comply with both ISO 10993-1 and USP Class VI standards (occasionally referred to as PC-ISO). Class VI is the most stringent of the six USP ratings. These grades can be sterilized using steam at 120 °C, gamma radiation, or by the ethylene oxide (EtO) method. Dow Chemical strictly limits all its plastics with regard to medical applications. Aliphatic polycarbonates have been developed with improved biocompatibility and degradability for nanomedicine applications.

Mobile phones

Some major smartphone manufacturers use polycarbonate. Nokia used polycarbonate in its phones starting with the N9's unibody case in 2011. This practice continued with various phones in the Lumia series. Samsung started using polycarbonate with the Galaxy S III's hyperglaze-branded removable battery cover in 2012. This practice continued with various phones in the Galaxy series. Apple started using polycarbonate with the iPhone 5C's unibody case in 2013.

Benefits over glass and metal back covers include durability against shattering (weakness of glass), bending and scratching (weakness of metal), shock absorption, low manufacturing costs, and no interference with radio signals and wireless charging (weakness of metal). Polycarbonate back covers are available in glossy or matte surface textures.

History

Polycarbonates were first discovered in 1898 by Alfred Einhorn, a German scientist working at the University of Munich. However, after 30 years' laboratory research, this class of materials was abandoned without commercialization. Research resumed in 1953, when Hermann Schnell at Bayer in Uerdingen, Germany patented the first linear polycarbonate. The brand name "Makrolon" was registered in 1955.

Also in 1953, and one week after the invention at Bayer, Daniel Fox at General Electric in Schenectady, New York, independently synthesized a branched polycarbonate. Both companies filed for U.S. patents in 1955, and agreed that the company lacking priority would be granted a license to the technology.

Patent priority was resolved in Bayer's favor, and Bayer began commercial production under the trade name Makrolon in 1958. GE began production under the name Lexan in 1960, creating the GE Plastics division in 1973.

After 1970, the original brownish polycarbonate tint was improved to "glass-clear."

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1377 2022-05-12 13:53:52

- Jai Ganesh


1351) Jute

Summary

Jute is a long, soft, shiny bast fiber that can be spun into coarse, strong threads. It is produced from flowering plants in the genus Corchorus, which is in the mallow family Malvaceae. The primary source of the fiber is Corchorus olitorius, but such fiber is considered inferior to that derived from Corchorus capsularis. "Jute" is the name of the plant or fiber used to make burlap, hessian or gunny cloth.

Jute is one of the most affordable natural fibers, and second only to cotton in the amount produced and variety of uses. Jute fibers are composed primarily of the plant materials cellulose and lignin. Jute fiber falls into the bast fiber category (fiber collected from bast, the phloem of the plant, sometimes called the "skin") along with kenaf, industrial hemp, flax (linen), ramie, etc. The industrial term for jute fiber is raw jute. The fibers are off-white to brown, and 1–4 metres (3–13 feet) long. Jute is also called the "golden fiber" for its color and high cash value.

Details

Jute is a vegetable fibre. It is very cheap to produce, and its production levels are similar to those of cotton. It is a bast fibre, like hemp and flax. Coarse fabrics made of jute are called hessian, or burlap in America. Like all natural fibres, jute is biodegradable. It is very rough and is very difficult to cut or tear.

The jute plant is easily grown in tropical countries like Bangladesh and India. India is the largest producer of jute in the world. Jute is less expensive than cotton, but cotton is better for quality clothes. Jute is used to make various products: packaging materials, jute bags, sacks, expensive carpets, espadrilles, sweaters etc. It is obtained from the bark of the jute plant. Jute plants are easy to grow, have a high yield per acre and, unlike cotton, have little need for pesticides and fertilizers.

In Iran, archaeologists have found evidence of jute dating back to the Bronze Age.

Jute

Jute, Hindi pat, also called allyott, is either of two species of Corchorus plants—C. capsularis, or white jute, and C. olitorius, including both tossa and daisee varieties—belonging to the hibiscus, or mallow, family (Malvaceae), and their fibre. The latter is a bast fibre; i.e., it is obtained from the inner bast tissue of the bark of the plant’s stem. Jute fibre’s primary use is in fabrics for packaging a wide range of agricultural and industrial commodities that require bags, sacks, packs, and wrappings. Wherever bulky, strong fabrics and twines resistant to stretching are required, jute is widely used because of its low cost. Burlap is made from jute.

Jute has been grown in the Bengal area of India (and of present-day Bangladesh) from ancient times. The export of raw jute from the Indian subcontinent to the Western Hemisphere began in the 1790s. The fibre was used primarily for cordage manufacture until 1822, when commercial yarn manufacture began at Dundee, Scotland, which soon became a centre for the industry. India’s own jute-processing industry began in 1855, with Calcutta becoming the major centre. After India was partitioned (1947), much of the jute-producing land remained in East Pakistan (now Bangladesh), where new processing facilities were built. Besides the Indian subcontinent, jute is also grown in China and in Brazil. The largest importers of raw jute fibre are Japan, Germany, the United Kingdom, Belgium, and France.

The jute plant, which probably originated on the Indian subcontinent, is an herbaceous annual that grows to an average of 10 to 12 feet (3 to 3.6 metres) in height, with a cylindrical stalk about as thick as a finger. The two species grown for jute fibre are similar and differ only in the shape of their seed pods, growth habit, and fibre characteristics. Most varieties grow best in well-drained, sandy loam and require warm, humid climates with an average monthly rainfall of at least 3 to 4 inches (7.5 to 10 cm) during the growing season. The plant’s light green leaves are 4 to 6 inches (10 to 15 cm) long, about 2 inches (5 cm) wide, have serrated edges, and taper to a point. The plant bears small yellow flowers.

The jute plant’s fibres lie beneath the bark and surround the woody central part of the stem. The fibre strands nearest the bark generally run the full length of the stem. A jute crop is usually harvested when the flowers have been shed but before the plants’ seedpods are fully mature. If jute is cut before then, the fibre is weak; if left until the seed is ripe, the fibre is strong but is coarser and lacks the characteristic lustre.

The fibres are held together by gummy materials; these must be softened, dissolved, and washed away to allow extraction of the fibres from the stem, a process accomplished by steeping the stems in water, or retting. After harvesting, the bundles of stems are placed in the water of pools or streams and are weighted down with stones or earth. They are kept submerged for 10–30 days, during which time bacterial action breaks down the gummy tissues surrounding the fibres. After retting is complete, the fibres are separated from the stalk by beating the root ends with a paddle to loosen them; the stems are then broken off near the root, and the fibre strands are jerked off the stem. The fibres are then washed, dried, sorted, graded, and baled in preparation for shipment to jute mills. In the latter, the fibres are softened by the addition of oil, water, and emulsifiers, after which they are converted into yarn. The latter process involves carding, drawing, roving, and spinning to separate the individual fibre filaments; arrange them in parallel order; blend them for uniformity of colour, strength, and quality; and twist them into strong yarns. Once the yarn has been spun, it can be woven, knitted, twisted, corded, sewn, or braided into finished products.

Jute is used in a wide variety of goods. Jute mats and prayer rugs are common in the East, as are jute-backed carpets worldwide. Jute’s single largest use, however, is in sacks and bags, those of finer quality being called burlap, or hessian. Burlap bags are used to ship and store grain, fruits and vegetables, flour, sugar, animal feeds, and other agricultural commodities. High-quality jute cloths are the principal fabrics used to provide backing for tufted carpets, as well as for hooked rugs (i.e., Oriental rugs). Jute fibres are also made into twines and rough cordage.


#1378 2022-05-13 14:25:35

- Jai Ganesh


1352) Unsaturated polyester

Unsaturated polyester is any of a group of thermosetting resins produced by dissolving a low-molecular-weight unsaturated polyester in a vinyl monomer and then copolymerizing the two to form a hard, durable plastic material. Unsaturated polyesters, usually strengthened by fibreglass or ground mineral, are made into structural parts such as boat hulls, pipes, and countertops.

Unsaturated polyesters are copolyesters—that is, polyesters prepared from a saturated dicarboxylic acid or its anhydride (usually phthalic anhydride) as well as an unsaturated dicarboxylic acid or anhydride (usually maleic anhydride). These two acid constituents are reacted with one or more dialcohols, such as ethylene glycol or propylene glycol, to produce the characteristic ester groups that link the precursor molecules together into long, chainlike, multiple-unit polyester molecules. The maleic anhydride units of this copolyester are unsaturated because they contain carbon-carbon double bonds that are capable of undergoing further polymerization under the proper conditions. These conditions are created when the copolyester is dissolved in a monomer such as styrene and the two are subjected to the action of free-radical initiators. The mixture, at this point usually poured into a mold, then copolymerizes rapidly to form a three-dimensional network structure that bonds well with fibres or other reinforcing materials. The principal products are boat hulls, appliances, business machines, automobile parts, automobile-body patching compounds, tubs and shower stalls, flooring, translucent paneling, storage tanks, corrosion-resistant ducting, and building components. Unsaturated polyesters filled with ground limestone or other minerals are cast into kitchen countertops and bathroom vanities. Bowling balls are made from unsaturated polyesters cast into molds with no reinforcement.


#1379 2022-05-14 14:24:29

- Jai Ganesh


1353) Carding

Summary

Carding, in textile production, is a process of separating individual fibres, using a series of dividing and redividing steps, that causes many of the fibres to lie parallel to one another while also removing most of the remaining impurities. Carding may be done by hand, using hand carders (pinned wooden paddles that are not unlike steel dog brushes) or drum carders (in which washed wool, fleece, or other materials are fed through one or more pinned rollers) to prepare the fibres for spinning, felting, or other fabric- or cloth-making activities.

Cotton, wool, waste silk, other fibrous plant materials and animal fur and hair, and artificial staple are subjected to carding. Carding produces a thin sheet of uniform thickness that is then condensed to form a thick continuous untwisted strand called sliver. When very fine yarns are desired, carding is followed by combing, a process that removes short fibres, leaving a sliver composed entirely of long fibres, all laid parallel and smoother and more lustrous than uncombed types. Carded and combed sliver is then spun.

Details

Carding is a mechanical process that disentangles, cleans and intermixes fibres to produce a continuous web or sliver suitable for subsequent processing. This is achieved by passing the fibres between differentially moving surfaces covered with "card clothing", a firm flexible material embedded with metal pins. It breaks up locks and unorganised clumps of fibre and then aligns the individual fibres to be parallel with each other. In preparing wool fibre for spinning, carding is the step that comes after teasing.

The word is derived from the Latin carduus, meaning thistle or teasel, as dried vegetable teasels were first used to comb the raw wool before technological advances led to the use of machines.

Overview

These ordered fibres can then be passed on to other processes that are specific to the desired end use of the fibre: Cotton, batting, felt, woollen or worsted yarn, etc. Carding can also be used to create blends of different fibres or different colours. When blending, the carding process combines the different fibres into a homogeneous mix. Commercial cards also have rollers and systems designed to remove some vegetable matter contaminants from the wool.

Common to all carders is card clothing. Card clothing is made from a sturdy flexible backing in which closely spaced wire pins are embedded. The shape, length, diameter, and spacing of these wire pins are dictated by the card designer and the particular requirements of the application where the card cloth will be used. A later version of card clothing, developed during the latter half of the 19th century and found only on commercial carding machines, used a single piece of serrated wire wrapped around a roller; it became known as metallic card clothing.

Carding machines are known as cards. Fibre may be carded by hand for hand spinning.

History

Science historian Joseph Needham ascribes the invention of bow-instruments used in textile technology to India. The earliest evidence for using bow-instruments for carding comes from India (2nd century CE). These carding devices, called kaman (bow) and dhunaki, would loosen the texture of the fibre by the means of a vibrating string.

At the turn of the eighteenth century, wool in England was being carded using pairs of hand cards, in a two-stage process: 'working' with the cards opposed and 'stripping' where they are in parallel.

In 1748 Lewis Paul of Birmingham, England, invented two hand-driven carding machines. The first used a coat of wires on a flat table moved by foot pedals. This failed. On the second, a coat of wire slips was placed around a card which was then wrapped around a cylinder. Daniel Bourn obtained a similar patent in the same year, and probably used it in his spinning mill at Leominster, but this burnt down in 1754. The invention was later developed and improved by Richard Arkwright and Samuel Crompton. Arkwright's second patent (of 1775) for his carding machine was subsequently declared invalid (1785) because it lacked originality.

From the 1780s, the carding machines were set up in mills in the north of England and mid-Wales. Priority was given to cotton but woollen fibres were being carded in Yorkshire in 1780. With woollen, two carding machines were used: the first or the scribbler opened and mixed the fibres, the second or the condenser mixed and formed the web. The first in Wales was in a factory at Dolobran near Meifod in 1789. These carding mills produced yarn particularly for the Welsh flannel industry.

In 1834 James Walton invented the first practical machines to use a wire card. He patented this machine and also a new form of card with layers of cloth and rubber. The combination of these two inventions became the standard for the carding industry, using machines first built by Parr, Curtis and Walton in Ancoats, and from 1857 by James Walton & Sons at Haughton Dale.

By 1838, the Spen Valley, centred on Cleckheaton, had at least 11 card clothing factories, and by 1893 it was generally accepted as the card cloth capital of the world. By 2008, however, only two manufacturers of metallic and flexible card clothing remained in England: Garnett Wire Ltd., dating back to 1851, and Joseph Sellers & Son Ltd, established in 1840.

Baird from Scotland took carding to Leicester, Massachusetts in the 1780s. In the 1890s, the town produced one-third of all hand and machine cards in North America. John and Arthur Slater, from Saddleworth went over to work with Slater in 1793.

A 1780s scribbling mill would be driven by a water wheel. There were 170 scribbling mills around Leeds at that time. Each scribbler would require 15–45 horsepower (11–34 kW) to operate. Modern machines are driven by belting from an electric motor or an overhead shaft via two pulleys.
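The kilowatt range quoted above can be checked with a short conversion. This is a minimal sketch that assumes the figures refer to mechanical (imperial) horsepower, taken here as roughly 745.7 W; the constant and function names are illustrative only.

```python
# Assumption: one mechanical (imperial) horsepower is about 745.7 watts.
WATTS_PER_MECHANICAL_HP = 745.7


def horsepower_to_kilowatts(horsepower: float) -> float:
    """Convert mechanical horsepower to kilowatts."""
    return horsepower * WATTS_PER_MECHANICAL_HP / 1000.0


low_kw = horsepower_to_kilowatts(15)
high_kw = horsepower_to_kilowatts(45)

# Rounded to whole kilowatts, matching the precision used in the text.
print(f"{low_kw:.0f}–{high_kw:.0f} kW")
```

Under this assumption the range rounds to 11–34 kW, matching the text. Using metric horsepower (about 735.5 W) instead would give an upper bound closer to 33 kW.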

Tools

Predating mechanised weaving, hand loom weaving was a cottage industry that used the same processes but on a smaller scale. These skills have survived as an artisan craft in less developed societies, and as an art form and hobby in advanced societies.

Hand carders

Hand cards are typically square or rectangular paddles manufactured in a variety of sizes from 2 by 2 inches (5.1 cm × 5.1 cm) to 4 by 8 inches (10 cm × 20 cm). The working face of each paddle can be flat or cylindrically curved and wears the card cloth. Small cards, called flick cards, are used to flick the ends of a lock of fibre, or to tease out some strands for spinning off.

A pair of cards is used to brush the wool between them until the fibres are more or less aligned in the same direction. The aligned fibre is then peeled from the card as a rolag. Carding is an activity normally done outside or over a drop cloth, depending on the wool's cleanliness.

Carding of wool can be done either "in the grease" or not, depending on the type of machine and on the spinner's preference. "In the grease" means that the lanolin that naturally comes with the wool has not been washed out, leaving the wool with a slightly greasy feel. The large drum carders do not tend to get along well with lanolin, so most commercial worsted and woollen mills wash the wool before carding. Hand carders (and small drum carders too, though the directions may not recommend it) can be used to card lanolin-rich wool.

Drum carders

The simplest machine carder is the drum carder. Most drum carders are hand-cranked but some are powered by an electric motor. These machines generally have two rollers, or drums, covered with card clothing. The licker-in, or smaller roller, meters fibre from the infeed tray onto the larger storage drum. The two rollers are connected to each other by a belt- or chain-drive so that their relative speeds cause the storage drum to gently pull fibres from the licker-in. This pulling straightens the fibres and lays them between the wire pins of the storage drum's card cloth. Fibre is added until the storage drum's card cloth is full. A gap in the card cloth facilitates removal of the batt when the card cloth is full.

Some drum carders have a soft-bristled brush attachment that presses the fibre into the storage drum. This attachment serves to condense the fibres already in the card cloth and adds a small amount of additional straightening to the condensed fibre.

Cottage carders

Cottage carding machines differ significantly from the simple drum card. These carders do not store fibre in the card cloth as the drum carder does but, rather, fibre passes through the workings of the carder for storage or for additional processing by other machines.

A typical cottage carder has a single large drum (the swift) accompanied by a pair of in-feed rollers (nippers), one or more pairs of worker and stripper rollers, a fancy, and a doffer. In-feed to the carder is usually accomplished by hand or by conveyor belt and often the output of the cottage carder is stored as a batt or further processed into roving and wound into bumps with an accessory bump winder.

Raw fibre, placed on the in-feed table or conveyor, is moved to the nippers, which restrain and meter the fibre onto the swift. As they are transferred to the swift, many of the fibres are straightened and laid into the swift's card cloth. These fibres will be carried past the worker / stripper rollers to the fancy.

As the swift carries the fibres forward, from the nippers, those fibres that are not yet straightened are picked up by a worker and carried over the top to its paired stripper. Relative to the surface speed of the swift, the worker turns quite slowly. This has the effect of reversing the fibre. The stripper, which turns at a higher speed than the worker, pulls fibres from the worker and passes them to the swift. The stripper's relative surface speed is slower than the swift's so the swift pulls the fibres from the stripper for additional straightening.

Straightened fibres are carried by the swift to the fancy. The fancy's card cloth is designed to engage with the swift's card cloth so that the fibres are lifted to the tips of the swift's card cloth and carried by the swift to the doffer. The fancy and the swift are the only rollers in the carding process that actually touch.

The slowly turning doffer removes the fibres from the swift and carries them to the fly comb where they are stripped from the doffer. A fine web of more or less parallel fibre, a few fibres thick and as wide as the carder's rollers, exits the carder at the fly comb by gravity or other mechanical means for storage or further processing.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1380 2022-05-15 14:08:49

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,375

Re: Miscellany

1354) Cardboard

Cardboard is a generic term for heavy paper-based products. The construction can range from a thick paper known as paperboard to corrugated fiberboard, which is made of multiple plies of material. Natural cardboards can range from grey to light brown in color, depending on the specific product; dyes, pigments, printing, and coatings are available.

The term "cardboard" has general use in English and French, but it is deprecated in commerce and industry because it does not adequately define a specific product. Material producers, container manufacturers, packaging engineers, and standards organizations use more specific terminology.

Statistics

In 2020, the United States hit a record high in its yearly use of one of the most ubiquitous manufactured materials on earth, cardboard. With around 80 per cent of all the products sold in the United States being packaged in cardboard, over 120 billion pieces were used that year. In the same year, over 13,000 separate pieces of consumer cardboard packaging were thrown away by American households; combined with all paper products, this constitutes almost 42 per cent of all solid waste generated by the United States annually.

However, despite the sheer magnitude of paper waste, the vast majority of it is composed of one of the most successful and sustainable packaging materials of modern times - corrugated cardboard, known industrially as corrugated fiberboard.

Types

Various card stocks:

Various types of cards are available, which may be called "cardboard". Included are: thick paper (of various types) or pasteboard used for business cards, aperture cards, postcards, playing cards, catalog covers, binder's board for bookbinding, scrapbooking, and other uses which require higher durability than regular paper.

Paperboard

Paperboard is a paper-based material, usually more than about ten mils (0.010 inches, or 0.25 mm) thick. It is often used for folding cartons, set-up boxes, carded packaging, etc. Configurations of paperboard include:

* Containerboard, used in the production of corrugated fiberboard.

* Folding boxboard, comprising multiple layers of chemical and mechanical pulp.

* Solid bleached board, made purely from bleached chemical pulp, usually with a mineral or synthetic pigment.

* Solid unbleached board, typically made of unbleached chemical pulp.

* White lined chipboard, typically made from layers of waste paper or recycled fibers, most often with two to three layers of coating on the top and one layer on the reverse side. Because of its recycled content it is grey on the inside.

* Binder's board, a paperboard used in bookbinding for making hardcovers.

Currently, materials falling under these names may be made without using any actual paper.
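The "ten mils" threshold quoted for paperboard is a simple unit conversion, and a small sketch may make it concrete. The function names below are made up for illustration; the only facts assumed are that a mil is one thousandth of an inch and that an inch is exactly 25.4 mm.

```python
# A minimal sketch of the thickness conversion quoted for paperboard.
# Assumptions: 1 mil = 0.001 inch and 1 inch = 25.4 mm (exact by definition).
MM_PER_INCH = 25.4


def mils_to_mm(mils):
    """Convert a thickness in mils (thousandths of an inch) to millimetres."""
    return mils / 1000 * MM_PER_INCH


def exceeds_paperboard_threshold(mils, threshold_mils=10.0):
    """Hypothetical helper: True if the sheet is thicker than about ten mils."""
    return mils > threshold_mils


if __name__ == "__main__":
    print(round(mils_to_mm(10), 3))          # thickness of a 10 mil sheet in mm
    print(exceeds_paperboard_threshold(12))  # a 12 mil sheet is above the threshold
```

The threshold in the text is approximate ("usually more than about ten mils"), so the helper is only a rule of thumb and not a formal definition.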

Corrugated fiberboard

Corrugated fiberboard is a combination of paperboards, usually two flat liners and one inner fluted corrugated medium. It is often used for making corrugated boxes for shipping or storing products. This type of cardboard is also used by artists as original material for sculpting.

Recycling

Most types of cardboard are recyclable. Boards that are laminates, wax coated, or treated for wet-strength are often more difficult to recycle. Clean cardboard (i.e., cardboard that has not been subject to chemical coatings) "is usually worth recovering, although often the difference between the value it realizes and the cost of recovery is marginal". Cardboard can be recycled for industrial or domestic use. For example, cardboard may be composted or shredded for animal bedding.

History

The material was first made in France, in 1751, by a pupil of Réaumur, and was used to reinforce playing cards. The term cardboard has been used since at least 1848, when Anne Brontë mentioned it in her novel, The Tenant of Wildfell Hall. The Kellogg brothers first used paperboard cartons to hold their flaked corn cereal, and later, when they began marketing it to the general public, a heat-sealed bag of wax paper was wrapped around the outside of the box and printed with their brand name. This development marked the origin of the cereal box, though in modern times the sealed bag is plastic and is kept inside the box. The Kieckhefer Container Company, run by John W. Kieckhefer, was another early American packaging industry pioneer. It excelled in the use of fiber shipping containers, particularly the paper milk carton.


#1381 2022-05-16 14:23:01

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,375

Re: Miscellany

1355) Tarpaulin

Summary

A tarpaulin is a large piece of waterproof material (such as plastic or canvas) that is used to cover things and keep them dry.

Details

A tarpaulin or tarp is a large sheet of strong, flexible, water-resistant or waterproof material, often cloth such as canvas or polyester coated with polyurethane, or made of plastics such as polyethylene. Tarpaulins often have reinforced grommets at the corners and along the sides to form attachment points for rope, allowing them to be tied down or suspended.

Inexpensive modern tarpaulins are made from woven polyethylene; this material is so associated with tarpaulins that it has become colloquially known in some quarters as polytarp.

Uses

Tarpaulins are used in many ways to protect persons and things from wind, rain, and sunlight. They are used during construction or after disasters to protect partially built or damaged structures, to prevent mess during painting and similar activities, and to contain and collect debris. They are used to protect the loads of open trucks and wagons, to keep wood piles dry, and for shelters such as tents or other temporary structures.

Tarpaulins are also used for advertisement printing, most notably for billboards. Perforated tarpaulins are typically used for medium to large advertising, or for protection on scaffolding; the aim of the perforations (from 20% to 70%) is to reduce wind vulnerability.

Polyethylene tarpaulins have also proven to be a popular source when an inexpensive, water-resistant fabric is needed. Many amateur builders of plywood sailboats turn to polyethylene tarpaulins for making their sails, as the material is inexpensive and easily worked. With the proper type of adhesive tape, it is possible to make a serviceable sail for a small boat with no sewing.

Plastic tarps are sometimes used as a building material in communities of indigenous North Americans. Tipis made with tarps are known as tarpees.

Types

Tarpaulins can be classified based on a diversity of factors, such as material type (polyethylene, canvas, vinyl, etc.), thickness, which is generally measured in mils or generalized into categories (such as "regular duty", "heavy duty", "super heavy duty", etc.), and grommet strength (simple vs. reinforced), among others.

Actual tarp sizes are generally about three to five percent smaller in each dimension than nominal size; for example, a tarp nominally 20 ft × 20 ft (6.1 m × 6.1 m) will actually measure about 19 ft × 19 ft (5.8 m × 5.8 m). Grommets may be aluminum, stainless steel, or other materials. Grommet-to-grommet distances are typically between 18 in (460 mm) and 5 ft (1.5 m). The weave count is often between 8 and 12 per square inch: the greater the count, the greater its strength. Tarps may also be washable or non-washable and waterproof or non-waterproof, and mildewproof vs. non-mildewproof. Tarp flexibility is especially significant under cold conditions.
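The nominal-versus-actual size rule above can be sketched as a short calculation. This is only an illustration: the function names are made up here, and the three to five percent shrinkage is the rough figure quoted in the text, not a manufacturer specification.

```python
# A rough sketch of the "three to five percent smaller" rule of thumb.
# Assumption: the shrinkage applies equally to each dimension.
METRES_PER_FOOT = 0.3048  # exact by definition


def actual_size_range(nominal_ft, shrink_low=0.03, shrink_high=0.05):
    """Return (smallest, largest) likely actual length in feet for one side."""
    return nominal_ft * (1 - shrink_high), nominal_ft * (1 - shrink_low)


def feet_to_metres(feet):
    """Convert a length in feet to metres."""
    return feet * METRES_PER_FOOT


if __name__ == "__main__":
    smallest, largest = actual_size_range(20)
    print(f"20 ft nominal: about {smallest:.1f} to {largest:.1f} ft per side")
    print(f"in metres: about {feet_to_metres(smallest):.1f} to {feet_to_metres(largest):.1f} m")
```

For a nominal 20 ft side, the lower end of the range matches the "about 19 ft" example given above.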

Type of material:

Polyethylene

A polyethylene tarpaulin ("polytarp") is not a traditional fabric, but rather, a laminate of woven and sheet material. The center is loosely woven from strips of polyethylene plastic, with sheets of the same material bonded to the surface. This creates a fabric-like material that resists stretching well in all directions and is waterproof. Sheets can be either of low density polyethylene (LDPE) or high density polyethylene (HDPE). When treated against ultraviolet light, these tarpaulins can last for years exposed to the elements, but non-UV treated material will quickly become brittle and lose strength and water resistance if exposed to sunlight.

Canvas

Canvas tarpaulins are not 100% waterproof, though they are water resistant. Thus, while a small amount of water for a short period of time will not affect them, when there is standing water on canvas tarps, or when water cannot quickly drain away from canvas tarps, the standing water will drip through this type of tarp.

Vinyl

Polyvinyl chloride ("vinyl") tarpaulins are industrial-grade and intended for heavy-duty use. They are constructed of 10 oz/sq yd (340 g/sq m) coated yellow vinyl. This makes them waterproof and gives them high abrasion resistance and tear strength. They resist oil, acid, grease and mildew. Vinyl tarps are well suited to agricultural, construction and industrial use, truck covers, flood barriers and temporary roof repair.
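The fabric weight quoted for vinyl tarps, 10 oz/sq yd (340 g/sq m), can be checked with a short conversion. The function name is made up for illustration; the constants are the standard definitions of the avoirdupois ounce and the international yard.

```python
# A minimal sketch of the fabric-weight conversion from oz/sq yd to g/sq m.
GRAMS_PER_OUNCE = 28.349523125  # avoirdupois ounce, exact by definition
METRES_PER_YARD = 0.9144        # international yard, exact by definition


def oz_per_sq_yd_to_g_per_sq_m(weight_oz_per_sq_yd):
    """Convert an areal weight from ounces per square yard to grams per square metre."""
    return weight_oz_per_sq_yd * GRAMS_PER_OUNCE / METRES_PER_YARD ** 2


if __name__ == "__main__":
    exact = oz_per_sq_yd_to_g_per_sq_m(10)
    print(f"{exact:.1f} g/sq m")  # a little under the rounded 340 g/sq m quoted above
```

The result is slightly below 340, so the figure in the text appears to be rounded to the nearest ten.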

Silnylon

Tarp tents may be made of silnylon.

U.S. color scheme

For years manufacturers have used a color code to indicate the grade of tarpaulins, but not all manufacturers follow this traditional method of grading. Following this color-coded system, blue indicates a lightweight tarp, and typically has a weave count of 8×8 and a thickness of 0.005–0.006 in (0.13–0.15 mm). Silver is a heavy-duty tarp and typically has a weave count of 14×14 and a thickness of 0.011–0.012 in (0.28–0.30 mm).


#1382 2022-05-17 14:35:33

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,375

Re: Miscellany

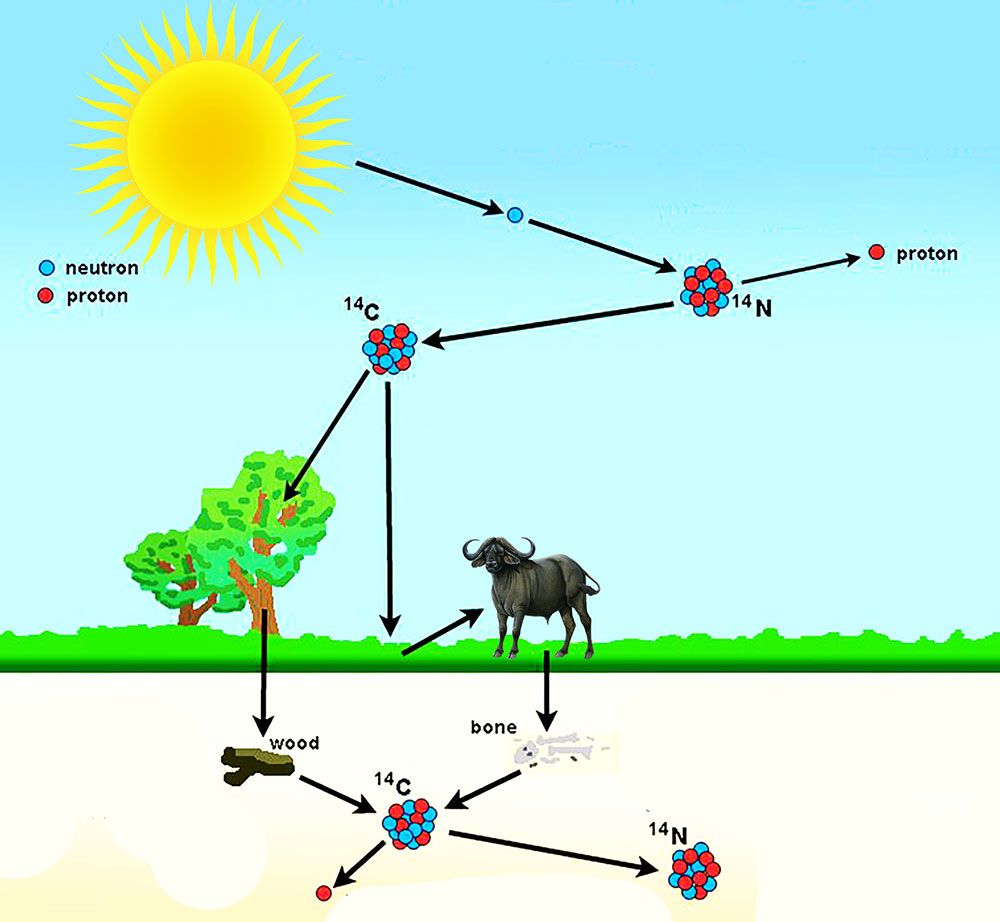

1356) Archeology

Summary

Archaeology or archeology is the scientific study of human activity through the recovery and analysis of material culture. The archaeological record consists of artifacts, architecture, biofacts or ecofacts, sites, and cultural landscapes. Archaeology can be considered both a social science and a branch of the humanities. In Europe it is often viewed as either a discipline in its own right or a sub-field of other disciplines, while in North America archaeology is a sub-field of anthropology.

Archaeologists study human prehistory and history, from the development of the first stone tools at Lomekwi in East Africa 3.3 million years ago up until recent decades. Archaeology is distinct from palaeontology, which is the study of fossil remains. Archaeology is particularly important for learning about prehistoric societies, for which, by definition, there are no written records. Prehistory includes over 99% of the human past, from the Paleolithic until the advent of literacy in societies around the world. Archaeology has various goals, which range from understanding culture history to reconstructing past lifeways to documenting and explaining changes in human societies through time. Derived from the Greek, the term archaeology literally means “the study of ancient history.”
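The "over 99%" figure can be sanity-checked with simple arithmetic. The sketch below takes the 3.3 million year date for the first stone tools from the paragraph above and assumes writing began roughly 5,000 years ago, the figure given in the Details of this post; literacy arrived later in many societies, so this is a lower bound on the share of prehistory, not a precise value.

```python
# A back-of-the-envelope check of the "over 99%" claim.
FIRST_STONE_TOOLS_YEARS_AGO = 3_300_000  # Lomekwi date quoted in the text
WRITING_BEGAN_YEARS_AGO = 5_000          # assumed: earliest writing, Mesopotamia and Egypt


def prehistory_share(total_span_years, years_since_writing):
    """Fraction of the human past that predates written records."""
    return (total_span_years - years_since_writing) / total_span_years


if __name__ == "__main__":
    share = prehistory_share(FIRST_STONE_TOOLS_YEARS_AGO, WRITING_BEGAN_YEARS_AGO)
    print(f"{share:.2%}")  # prints 99.85%
```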

The discipline involves surveying, excavation and eventually analysis of data collected to learn more about the past. In broad scope, archaeology relies on cross-disciplinary research.

Archaeology developed out of antiquarianism in Europe during the 19th century, and has since become a discipline practiced around the world. Archaeology has been used by nation-states to create particular visions of the past. Since its early development, various specific sub-disciplines of archaeology have developed, including maritime archaeology, feminist archaeology and archaeoastronomy, and numerous different scientific techniques have been developed to aid archaeological investigation. Nonetheless, today, archaeologists face many problems, such as dealing with pseudoarchaeology, the looting of artifacts, a lack of public interest, and opposition to the excavation of human remains.

Details

Archaeology, also spelled archeology, is the scientific study of the material remains of past human life and activities. These include human artifacts from the very earliest stone tools to the man-made objects that are buried or thrown away in the present day: everything made by human beings—from simple tools to complex machines, from the earliest houses and temples and tombs to palaces, cathedrals, and pyramids. Archaeological investigations are a principal source of knowledge of prehistoric, ancient, and extinct culture. The word comes from the Greek archaia (“ancient things”) and logos (“theory” or “science”).

The archaeologist is first a descriptive worker: he has to describe, classify, and analyze the artifacts he studies. An adequate and objective taxonomy is the basis of all archaeology, and many good archaeologists spend their lives in this activity of description and classification. But the main aim of the archaeologist is to place the material remains in historical contexts, to supplement what may be known from written sources, and, thus, to increase understanding of the past. Ultimately, then, the archaeologist is a historian: his aim is the interpretive description of the past of man.

Increasingly, many scientific techniques are used by the archaeologist, and he uses the scientific expertise of many persons who are not archaeologists in his work. The artifacts he studies must often be studied in their environmental contexts, and botanists, zoologists, soil scientists, and geologists may be brought in to identify and describe plants, animals, soils, and rocks. Radioactive carbon dating, which has revolutionized much of archaeological chronology, is a by-product of research in atomic physics. But although archaeology uses extensively the methods, techniques, and results of the physical and biological sciences, it is not a natural science; some consider it a discipline that is half science and half humanity. Perhaps it is more accurate to say that the archaeologist is first a craftsman, practicing many specialized crafts (of which excavation is the most familiar to the general public), and then a historian.

The justification for this work is the justification of all historical scholarship: to enrich the present by knowledge of the experiences and achievements of our predecessors. Because it concerns things people have made, the most direct findings of archaeology bear on the history of art and technology; but by inference it also yields information about the society, religion, and economy of the people who created the artifacts. Also, it may bring to light and interpret previously unknown written documents, providing even more certain evidence about the past.

But no one archaeologist can cover the whole range of man’s history, and there are many branches of archaeology divided by geographical areas (such as classical archaeology, the archaeology of ancient Greece and Rome; or Egyptology, the archaeology of ancient Egypt) or by periods (such as medieval archaeology and industrial archaeology). Writing began 5,000 years ago in Mesopotamia and Egypt; its beginnings were somewhat later in India and China, and later still in Europe. The aspect of archaeology that deals with the past of man before he learned to write has, since the middle of the 19th century, been referred to as prehistoric archaeology, or prehistory. In prehistory the archaeologist is paramount, for here the only sources are material and environmental.


#1383 2022-05-18 14:32:48

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,375

Re: Miscellany

1357) Slate

Summary

Slate is a fine-grained, foliated, homogeneous metamorphic rock derived from an original shale-type sedimentary rock composed of clay or volcanic ash through low-grade regional metamorphism. It is the finest grained foliated metamorphic rock. Foliation may not correspond to the original sedimentary layering, but instead is in planes perpendicular to the direction of metamorphic compression.

The foliation in slate is called "slaty cleavage". It is caused by strong compression causing fine grained clay flakes to regrow in planes perpendicular to the compression. When expertly "cut" by striking parallel to the foliation, with a specialized tool in the quarry, many slates will display a property called fissility, forming smooth flat sheets of stone which have long been used for roofing, floor tiles, and other purposes. Slate is frequently grey in color, especially when seen, en masse, covering roofs. However, slate occurs in a variety of colors even from a single locality; for example, slate from North Wales can be found in many shades of grey, from pale to dark, and may also be purple, green or cyan. Slate is not to be confused with shale, from which it may be formed, or schist.

The word "slate" is also used for certain types of object made from slate rock. It may mean a single roofing tile made of slate, or a writing slate. A writing slate was traditionally a small, smooth piece of the rock, often framed in wood, used with chalk as a notepad or notice board, and especially for recording charges in pubs and inns. The phrases "clean slate" and "blank slate" come from this usage.

Details

Slate is fine-grained, clayey metamorphic rock that cleaves, or splits, readily into thin slabs having great tensile strength and durability; some other rocks that occur in thin beds are improperly called slate because they can be used for roofing and similar purposes. True slates do not, as a rule, split along the bedding plane but along planes of cleavage, which may intersect the bedding plane at high angles. Slate was formed under low-grade metamorphic conditions—i.e., under relatively low temperature and pressure. The original material was a fine clay, sometimes with sand or volcanic dust, usually in the form of a sedimentary rock (e.g., a mudstone or shale). The parent rock may be only partially altered so that some of the original mineralogy and sedimentary bedding are preserved; the bedding of the sediment as originally laid down may be indicated by alternating bands, sometimes seen on the cleavage faces. Cleavage is a super-induced structure, the result of pressure acting on the rock at some time when it was deeply buried beneath the Earth’s surface. On this account, slates occur chiefly among older rocks, although some occur in regions in which comparatively recent rocks have been folded and compressed as a result of mountain-building movements. The direction of cleavage depends upon the direction of the stresses applied during metamorphism.

Slates may be black, blue, purple, red, green, or gray. Dark slates usually owe their colour to carbonaceous material or to finely divided iron sulfide. Reddish and purple varieties owe their colour to the presence of hematite (iron oxide), and green varieties owe theirs to the presence of much chlorite, a green micaceous clay mineral. The principal minerals in slate are mica (in small, irregular scales), chlorite (in flakes), and quartz (in lens-shaped grains).

Slates are split from quarried blocks about 7.5 cm (3 inches) thick. A chisel, placed in position against the edge of the block, is lightly tapped with a mallet; a crack appears in the direction of cleavage, and slight leverage with the chisel serves to split the block into two pieces with smooth and even surfaces. This is repeated until the original block is converted into 16 or 18 pieces, which are afterward trimmed to size either by hand or by means of machine-driven rotating knives.
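The splitting figures above imply how thin each finished slate is. The sketch below is only a rough estimate: it assumes the block splits into pieces of equal thickness with no material lost, which the text does not state, and the function names are made up for illustration.

```python
# A rough estimate of slate thickness from the splitting figures in the text.
BLOCK_THICKNESS_MM = 75.0  # "about 7.5 cm (3 inches)"


def average_piece_thickness_mm(block_mm, pieces):
    """Average thickness per piece, assuming equal pieces and no waste."""
    return block_mm / pieces


def splits_needed(pieces):
    """Each split turns one piece into two, so n pieces need n - 1 splits."""
    return pieces - 1


if __name__ == "__main__":
    for pieces in (16, 18):
        thickness = average_piece_thickness_mm(BLOCK_THICKNESS_MM, pieces)
        print(f"{pieces} pieces: about {thickness:.1f} mm each, {splits_needed(pieces)} splits")
```

Sixteen pieces is what four rounds of halving every piece would give (2 to the power 4), which fits the repeated-splitting procedure described above.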

Slate is sometimes marketed as dimension slate and crushed slate (granules and flour). Dimension slate is used mainly for electrical panels, laboratory tabletops, roofing and flooring, and blackboards. Crushed slate is used on composition roofing, in aggregates, and as a filler. Principal production in the United States is from Pennsylvania and Vermont; northern Wales provides most of the slate used in the British Isles.

Additional Information

Slate is a low-grade metamorphic rock formed by the alteration of shale or mudstone through regional metamorphism. It is a fine-grained foliated rock, and the finest grained of the foliated metamorphic rocks. Foliation does not form along the original sedimentary layering but is a response to metamorphic compression. The strong foliation, called slaty cleavage, is the result of compression causing fine-grained clay flakes to regrow in planes perpendicular to the compression.

Composition of slate

Slate is primarily composed of clay minerals or micas, depending upon the degree of metamorphism. As temperature and pressure increase, the clay minerals that were originally deposited are altered into mica. Slate can also contain abundant quartz and small amounts of feldspar, calcite, pyrite, hematite and other minerals.

How slate forms

Shale is deposited in a sedimentary basin where finer particles are transported by wind or water. These deposited fine grains are then compacted and lithified. The tectonic environment for producing slate arises when this basin becomes involved in a convergent plate boundary. The shale and mudstone in the basin are compressed by horizontal forces with minor heating. These forces and heat modify the clay minerals. Foliation develops at right angles to the compressive forces of the convergent plate boundary.

Colour of slate

Most slates are grey in colour, ranging from light to dark shades. Slate also occurs in green, red, black, purple and brown. The colour of a slate is determined by the amount of iron and organic material present.

Slaty cleavage

Foliation in slate is the result of the parallel orientation of platy minerals in the rock, such as grains of clay and mica. This parallel alignment of minerals gives the rock its ability to break smoothly along planes of foliation.

Uses

Slate is mined for use as roofing slates throughout the world. It is well suited to this because it can be cut into thin sheets, absorbs minimal moisture and performs well when in contact with freezing water. Slate can also be used for interior flooring, exterior paving, dimension stone and decorative aggregates.


#1384 2022-05-19 13:52:54

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,375

Re: Miscellany

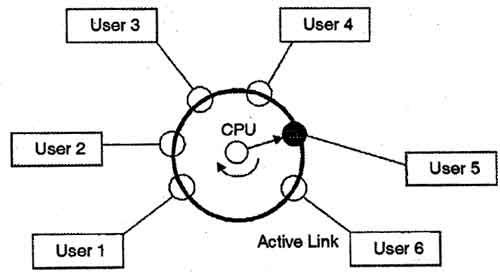

1358) Calculator

Summary

A calculator is a machine for automatically performing arithmetical operations and certain mathematical functions. Modern calculators are descendants of a digital arithmetic machine devised by Blaise Pascal in 1642. Later in the 17th century, Gottfried Wilhelm Leibniz created a more-advanced machine, and, especially in the late 19th century, inventors produced calculating machines that were smaller and smaller and less and less laborious to use. In the early decades of the 20th century, desktop adding machines and other calculating devices were developed. Some were key-driven, others required a rotating drum to enter sums punched into a keyboard, and later the drum was spun by electric motor.

The development of electronic data-processing systems by the mid-1950s began to hint at obsolescence for mechanical calculators, and the development of miniature solid-state electronic devices ushered in new calculators for pocket or desktop that, by the late 20th century, could perform simple mathematical functions (e.g., normal and inverse trigonometric functions) in addition to basic arithmetical operations; could store data and instructions in memory registers, providing programming capabilities similar to those of small computers; and could operate many times faster than their mechanical predecessors. Various sophisticated calculators of this type were designed to employ interchangeable preprogrammed software modules capable of 5,000 or more program steps. Some desktop and pocket models were equipped to print their output on a roll of paper; others even had plotting and alphabetic character printing capabilities.

Details

An electronic calculator is typically a portable electronic device used to perform calculations, ranging from basic arithmetic to complex mathematics.

The first solid-state electronic calculator was created in the early 1960s. Pocket-sized devices became available in the 1970s, especially after the Intel 4004, the first microprocessor, was developed by Intel for the Japanese calculator company Busicom. They later came into common use within the petroleum industry (oil and gas).

Modern electronic calculators vary from cheap, give-away, credit-card-sized models to sturdy desktop models with built-in printers. They became popular in the mid-1970s as the incorporation of integrated circuits reduced their size and cost. By the end of that decade, prices had dropped to the point where a basic calculator was affordable to most and they became common in schools.

Computer operating systems as far back as early Unix have included interactive calculator programs such as dc and hoc, and calculator functions are included in almost all personal digital assistant (PDA) type devices, the exceptions being a few dedicated address book and dictionary devices.
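The Unix dc program mentioned above works in reverse-Polish (postfix) notation, where operands are pushed onto a stack and each operator consumes the top two values. The sketch below shows that style of evaluation in Python. It is not dc itself and supports none of dc's commands beyond the four basic operators; the function name `eval_rpn` is made up for illustration.

```python
# A minimal sketch of stack-based reverse-Polish evaluation, the style used by dc.
import operator

OPERATORS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def eval_rpn(expression):
    """Evaluate a whitespace-separated postfix expression such as '3 4 + 2 *'."""
    stack = []
    for token in expression.split():
        if token in OPERATORS:
            right = stack.pop()  # the most recently pushed value is the right operand
            left = stack.pop()
            stack.append(OPERATORS[token](left, right))
        else:
            stack.append(float(token))
    if len(stack) != 1:
        raise ValueError("malformed expression: stack should hold exactly one value")
    return stack[0]


if __name__ == "__main__":
    print(eval_rpn("3 4 + 2 *"))  # (3 + 4) * 2
```

Postfix input needs no parentheses or precedence rules, which keeps the evaluator to a single loop over the tokens.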

In addition to general purpose calculators, there are those designed for specific markets. For example, there are scientific calculators which include trigonometric and statistical calculations. Some calculators even have the ability to do computer algebra. Graphing calculators can be used to graph functions defined on the real line, or higher-dimensional Euclidean space. As of 2016, basic calculators cost little, but scientific and graphing models tend to cost more.

With the very wide availability of smartphones, tablet computers and personal computers, dedicated hardware calculators, while still widely used, are less common than they once were. In 1986, calculators still represented an estimated 41% of the world's general-purpose hardware capacity to compute information. By 2007, this had diminished to less than 0.05%.

Design:

Input

Electronic calculators contain a keyboard with buttons for digits and arithmetical operations; some even contain "00" and "000" buttons to make larger or smaller numbers easier to enter. Most basic calculators assign only one digit or operation to each button; however, in more specialized calculators, a button can perform multiple functions through key combinations.

Display output

Calculators usually have liquid-crystal displays (LCD) as output in place of historical light-emitting diode (LED) displays and vacuum fluorescent displays (VFD); details are provided in the section Technical improvements.

Large-sized figures are often used to improve readability, with a decimal separator (usually a point rather than a comma) used instead of or in addition to vulgar fractions. Various symbols for function commands may also be shown on the display. Fractions such as 1⁄3 are displayed as decimal approximations, for example rounded to 0.33333333. Also, some fractions (such as 1⁄7, which is 0.14285714285714 to 14 significant figures) can be difficult to recognize in decimal form; as a result, many scientific calculators are able to work in vulgar fractions or mixed numbers.
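The difference between a decimal approximation and an exact fraction can be illustrated with Python's standard `fractions` and `decimal` modules. This is only a sketch of the idea and says nothing about how any particular calculator is implemented; the helper name `to_significant` is made up for illustration.

```python
# A small sketch contrasting rounded decimal display with exact fraction arithmetic.
from decimal import Decimal, localcontext
from fractions import Fraction


def to_significant(frac, digits):
    """Return the fraction as a Decimal rounded to the given number of significant digits."""
    with localcontext() as ctx:
        ctx.prec = digits
        return Decimal(frac.numerator) / Decimal(frac.denominator)


if __name__ == "__main__":
    print(to_significant(Fraction(1, 3), 8))   # 0.33333333
    print(to_significant(Fraction(1, 7), 14))  # 0.14285714285714
    # Working in fractions keeps the result exact and easy to recognize.
    print(Fraction(1, 3) + Fraction(1, 7))     # 10/21
```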

Memory

Calculators also have the ability to store numbers into computer memory. Basic calculators usually store only one number at a time; more specific types are able to store many numbers represented in variables. The variables can also be used for constructing formulas. Some models have the ability to extend memory capacity to store more numbers; the extended memory address is termed an array index.

Power source

Calculators are powered by batteries, solar cells or mains electricity (for old models), and are turned on with a switch or button. Some models have no off button but provide another way to switch off (for example, after a period without any operation, when the solar cell is covered, or when the lid is closed). Crank-powered calculators were also common in the early computer era.

Internal workings

In general, a basic electronic calculator consists of the following components:

* Power source (mains electricity, battery and/or solar cell)

* Keypad (input device) – consists of keys used to input numbers and function commands (addition, multiplication, square-root, etc.)

* Display panel (output device) – displays input numbers, commands and results. Liquid-crystal displays (LCDs), vacuum fluorescent displays (VFDs), and light-emitting diode (LED) displays use seven segments to represent each digit in a basic calculator. Advanced calculators may use dot matrix displays.

* A printing calculator, in addition to a display panel, has a printing unit that prints results in ink onto a roll of paper, using a printing mechanism.

* Processor chip (microprocessor or central processing unit).
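The seven-segment scheme mentioned in the display item above can be illustrated with a small lookup table. This is a sketch only: the segment letters a to g follow the common convention (a = top, b = upper right, c = lower right, d = bottom, e = lower left, f = upper left, g = middle), and the function names are made up for the example.

```python
# Which segments are lit for each decimal digit (conventional a-g naming).
SEGMENTS = {
    0: "abcdef", 1: "bc",     2: "abdeg", 3: "abcdg",   4: "bcfg",
    5: "acdfg",  6: "acdefg", 7: "abc",   8: "abcdefg", 9: "abcdfg",
}

def render(digit: int) -> list[str]:
    """Draw one digit as three rows of ASCII art."""
    on = SEGMENTS[digit]
    top = " _ " if "a" in on else "   "
    mid = ("|" if "f" in on else " ") + ("_" if "g" in on else " ") + ("|" if "b" in on else " ")
    bot = ("|" if "e" in on else " ") + ("_" if "d" in on else " ") + ("|" if "c" in on else " ")
    return [top, mid, bot]

def render_number(text: str) -> str:
    """Place the digits of a string such as '1359' side by side."""
    rows = ["", "", ""]
    for ch in text:
        for i, line in enumerate(render(int(ch))):
            rows[i] += line
    return "\n".join(rows)

print(render_number("1359"))
```

Each digit needs only seven on/off signals, which is why the same table works for LCD, VFD, and LED panels alike.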

Numeric representation

Most pocket calculators do all their calculations in binary-coded decimal (BCD) rather than binary. BCD is common in electronic systems where a numeric value is to be displayed, especially in systems consisting solely of digital logic, and not containing a microprocessor. By employing BCD, the manipulation of numerical data for display can be greatly simplified by treating each digit as a separate single sub-circuit. This matches much more closely the physical reality of display hardware—a designer might choose to use a series of separate identical seven-segment displays to build a metering circuit, for example. If the numeric quantity were stored and manipulated as pure binary, interfacing to such a display would require complex circuitry. Therefore, in cases where the calculations are relatively simple, working throughout with BCD can lead to a simpler overall system than converting to and from binary. (For example, CDs keep the track number in BCD, limiting them to 99 tracks.)

The same argument applies when hardware of this type uses an embedded microcontroller or other small processor. Often, smaller code results when representing numbers internally in BCD format, since a conversion from or to binary representation can be expensive on such limited processors. For these applications, some small processors feature BCD arithmetic modes, which assist when writing routines that manipulate BCD quantities.
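To make the BCD idea concrete, here is a minimal sketch of digit-by-digit addition with a "decimal adjust" step, similar in spirit to the BCD arithmetic modes mentioned above. The function names and the list-of-digits layout (least significant digit first) are assumptions made for the illustration, not a description of any specific chip.

```python
def to_bcd(n: int) -> list[int]:
    """Decimal digits of a non-negative integer, least significant first."""
    return [int(ch) for ch in str(n)][::-1]

def bcd_add(a: list[int], b: list[int]) -> list[int]:
    """Add two BCD numbers one 4-bit digit at a time."""
    result = []
    carry = 0
    for i in range(max(len(a), len(b))):
        da = a[i] if i < len(a) else 0
        db = b[i] if i < len(b) else 0
        total = da + db + carry          # plain binary addition of two digits
        if total > 9:
            total = (total + 6) & 0xF    # decimal adjust: skip the unused codes 10..15
            carry = 1
        else:
            carry = 0
        result.append(total)
    if carry:
        result.append(1)
    return result

def bcd_to_str(digits: list[int]) -> str:
    """Each stored digit maps directly to one display position."""
    return "".join(str(d) for d in reversed(digits))

print(bcd_to_str(bcd_add(to_bcd(58), to_bcd(67))))   # 125
```

Note that `bcd_to_str` needs no division or binary-to-decimal conversion, which is the simplification the text describes.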

Where calculators have added functions (such as square root, or trigonometric functions), software algorithms are required to produce high precision results. Sometimes significant design effort is needed to fit all the desired functions in the limited memory space available in the calculator chip, with acceptable calculation time.

Calculators compared to computers

The fundamental difference between a calculator and a computer is that a computer can be programmed in a way that allows the program to take different branches according to intermediate results, while calculators are pre-designed with specific functions (such as addition, multiplication, and logarithms) built in. The distinction is not clear-cut: some devices classed as programmable calculators have programming functions, sometimes with support for programming languages (such as RPL or TI-BASIC).

For instance, instead of a hardware multiplier, a calculator might implement floating point mathematics with code in read-only memory (ROM), and compute trigonometric functions with the CORDIC algorithm because CORDIC does not require much multiplication. Bit serial logic designs are more common in calculators whereas bit parallel designs dominate general-purpose computers, because a bit serial design minimizes chip complexity, but takes many more clock cycles. This distinction blurs with high-end calculators, which use processor chips associated with computer and embedded systems design, notably the Z80, MC68000, and ARM architectures, and some custom designs specialized for the calculator market.
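A rough sketch of rotation-mode CORDIC shows why it suits hardware without a multiplier. The version below uses floating point for readability; in a real calculator the values would be fixed-point (or BCD) and each multiplication by 2^-i would be a simple shift. The iteration count and the names `ANGLES`, `K`, and `cordic_sin_cos` are choices made for this example.

```python
import math

ITERATIONS = 32
# Table of arctan(2^-i); a calculator chip would hold such constants in ROM.
ANGLES = [math.atan(2.0 ** -i) for i in range(ITERATIONS)]
# The rotations stretch the vector by a fixed gain; K pre-compensates for it.
K = 1.0
for i in range(ITERATIONS):
    K /= math.sqrt(1.0 + 2.0 ** (-2 * i))

def cordic_sin_cos(theta: float) -> tuple[float, float]:
    """Return (sin, cos) of theta, for theta roughly within [-pi/2, pi/2]."""
    x, y, z = K, 0.0, theta
    for i in range(ITERATIONS):
        d = 1.0 if z >= 0 else -1.0      # rotate toward the remaining angle
        shift = 2.0 ** -i                # a right shift by i bits in fixed point
        x, y = x - d * y * shift, y + d * x * shift
        z -= d * ANGLES[i]
    return y, x

s, c = cordic_sin_cos(math.pi / 6)
print(round(s, 6), round(c, 6))          # 0.5 0.866025
```

Inside the loop there are only additions, subtractions, sign tests, and shifts, which is the property the paragraph above refers to.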

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1385 2022-05-20 14:46:24

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,375

Re: Miscellany

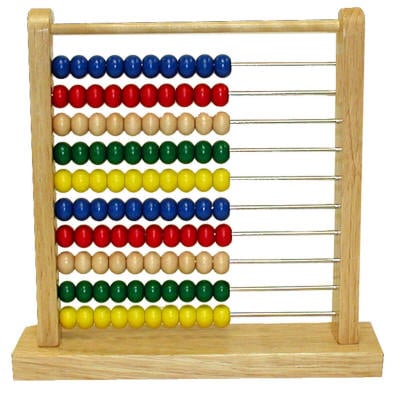

1359) Abacus

Summary

Abacus, plural abaci or abacuses, is a calculating device, probably of Babylonian origin, that was long important in commerce. It is the ancestor of the modern calculating machine and computer.

The earliest “abacus” likely was a board or slab on which a Babylonian spread sand in order to trace letters for general writing purposes. The word abacus is probably derived, through its Greek form abakos, from a Semitic word such as the Hebrew ibeq (“to wipe the dust”; noun abaq, “dust”). As the abacus came to be used solely for counting and computing, its form was changed and improved. The sand (“dust”) surface is thought to have evolved into the board marked with lines and equipped with counters whose positions indicated numerical values—i.e., ones, tens, hundreds, and so on. In the Roman abacus the board was given grooves to facilitate moving the counters in the proper files. Another form, common today, has the counters strung on wires.

The abacus, generally in the form of a large calculating board, was in universal use in Europe in the Middle Ages, as well as in the Arab world and in Asia. It reached Japan in the 16th century. The introduction of the Hindu-Arabic notation, with its place value and zero, gradually replaced the abacus, though it was still widely used in Europe as late as the 17th century. The abacus survives today in the Middle East, China, and Japan, but it has been largely replaced by electronic calculators.

Details

The abacus (plural abaci or abacuses), also called a counting frame, is a calculating tool which has been used since ancient times. It was used in the ancient Near East, Europe, China, and Russia, centuries before the adoption of the Hindu-Arabic numeral system. The exact origin of the abacus is not yet known. It consists of rows of movable beads, or similar objects, strung on wires; the beads represent digits. One of the two numbers is set up, and the beads are manipulated to perform an operation such as addition, or even a square or cube root.

In their earliest designs, the rows of beads could be loose on a flat surface or sliding in grooves. Later the beads were made to slide on rods and built into a frame, allowing faster manipulation. Abacuses are still made, often as a bamboo frame with beads sliding on wires. In the ancient world, particularly before the introduction of positional notation, abacuses were a practical calculating tool. The abacus is still used to teach the fundamentals of mathematics to some children, for example, in Russia.

Designs such as the Japanese soroban have been used for practical calculations involving multi-digit numbers. Any particular abacus design supports multiple methods to perform calculations, including the four basic operations and square and cube roots. Some of these methods work with non-natural numbers (numbers such as 1.5 and 3⁄4).

Although calculators and computers are commonly used today instead of abacuses, abacuses remain in everyday use in some countries. Merchants, traders, and clerks in some parts of Eastern Europe, Russia, China, and Africa use abacuses. The abacus remains in common use as a scoring system in non-electronic table games. Others may use an abacus due to visual impairment that prevents the use of a calculator.

School abacus

Around the world, abacuses have been used in pre-schools and elementary schools as an aid in teaching the numeral system and arithmetic.

In Western countries, a bead frame similar to the Russian abacus but with straight wires and a vertical frame is common.

The wireframe may be used either with positional notation like other abacuses (thus the 10-wire version may represent numbers up to 9,999,999,999), or each bead may represent one unit (e.g. 74 can be represented by shifting all beads on 7 wires and 4 beads on the 8th wire, so numbers up to 100 may be represented). In the bead frame shown, the gap between the 5th and 6th wire, corresponding to the color change between the 5th and the 6th bead on each wire, suggests the latter use. Multiplication, e.g. 6 times 7, may be taught by shifting 7 beads on each of 6 wires.
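The two ways of reading a 10-wire, 10-bead frame can be written out as a short sketch. The function names are invented for the illustration, and each list entry gives the number of beads shifted on one wire, from the first wire to the last.

```python
WIRES = 10
BEADS_PER_WIRE = 10

def unit_layout(n: int) -> list[int]:
    """Every bead counts as one unit, so the frame holds 0..100."""
    if not 0 <= n <= WIRES * BEADS_PER_WIRE:
        raise ValueError("number does not fit on the frame")
    full, rest = divmod(n, BEADS_PER_WIRE)
    layout = [BEADS_PER_WIRE] * full
    if full < WIRES:
        layout += [rest] + [0] * (WIRES - full - 1)
    return layout

def positional_layout(n: int) -> list[int]:
    """Each wire holds one decimal digit, most significant wire first."""
    if not 0 <= n < 10 ** WIRES:
        raise ValueError("number does not fit on the frame")
    return [int(ch) for ch in str(n).zfill(WIRES)]

def product_layout(wires: int, beads: int) -> list[int]:
    """Show wires x beads by shifting `beads` beads on each of `wires` wires."""
    return [beads] * wires + [0] * (WIRES - wires)

print(unit_layout(74))               # [10, 10, 10, 10, 10, 10, 10, 4, 0, 0]
print(sum(product_layout(6, 7)))     # 42
print(positional_layout(9_999_999_999))
```

Counting the shifted beads in `product_layout(6, 7)` gives 42, which is how the frame turns multiplication into repeated counting.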

The red-and-white abacus is used in contemporary primary schools for a wide range of number-related lessons. The twenty-bead version, referred to by its Dutch name rekenrek ("calculating frame"), is often used, either on a string of beads or on a rigid framework.

Feynman vs the abacus

Physicist Richard Feynman was noted for facility in mathematical calculations. He wrote about an encounter in Brazil with a Japanese abacus expert, who challenged him to speed contests between Feynman's pen and paper, and the abacus. The abacus was much faster for addition, somewhat faster for multiplication, but Feynman was faster at division. When the abacus was used for a really difficult challenge, i.e. cube roots, Feynman won easily. However, the number chosen at random was close to a number Feynman happened to know was an exact cube, allowing him to use approximate methods.

Neurological analysis

Learning how to calculate with the abacus may improve capacity for mental calculation. Abacus-based mental calculation (AMC), which was derived from the abacus, is the act of performing calculations, including addition, subtraction, multiplication, and division, in the mind by manipulating an imagined abacus. It is a high-level cognitive skill that runs calculations with an effective algorithm. People doing long-term AMC training show higher numerical memory capacity and experience more effectively connected neural pathways. They are able to retrieve memory to deal with complex processes. AMC involves both visuospatial and visuomotor processing that generate the visual abacus and move the imaginary beads. Since it only requires that the final position of beads be remembered, it takes less memory and less computation time.

Binary abacus

The binary abacus is used to explain how computers manipulate numbers. The abacus shows how numbers, letters, and signs can be stored in a binary system on a computer, or via ASCII. The device consists of a series of beads on parallel wires arranged in three separate rows. The beads represent a switch on the computer in either an "on" or "off" position.
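As a rough illustration of the idea, the sketch below treats one row of eight beads as eight switches and stores a character through its ASCII code. The row width of eight and the helper names are assumptions for this example; the source does not say how the three rows of the physical device are assigned.

```python
def char_to_beads(ch: str, width: int = 8) -> str:
    """Return one bead row for a character: '1' = bead on, '0' = bead off."""
    code = ord(ch)
    if code >= 2 ** width:
        raise ValueError("character does not fit on this row")
    return format(code, f"0{width}b")

def beads_to_char(beads: str) -> str:
    """Read a bead row back as a character."""
    return chr(int(beads, 2))

for ch in "Hi!":
    print(ch, ord(ch), char_to_beads(ch))
# H 72 01001000
# i 105 01101001
# ! 33 00100001
```

Reading the row back with `beads_to_char("01001000")` returns "H", so the same bead positions serve for both storing and recalling a symbol.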

Visually impaired users

An adapted abacus, invented by Tim Cranmer and called a Cranmer abacus, is commonly used by visually impaired users. A piece of soft fabric or rubber is placed behind the beads, keeping them in place while the users manipulate them. The device is then used to perform the mathematical functions of multiplication, division, addition, subtraction, square root, and cube root.

Although blind students have benefited from talking calculators, the abacus is often taught to these students in early grades. Blind students can also complete mathematical assignments using a braille-writer and Nemeth code (a type of braille code for mathematics), but large multiplication and long division problems are tedious. The abacus gives these students a tool for solving mathematical problems with the same speed and mathematical knowledge required of their sighted peers using pencil and paper. Many blind people find this number machine a useful tool throughout life.


#1386 2022-05-21 13:28:58

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,375

Re: Miscellany

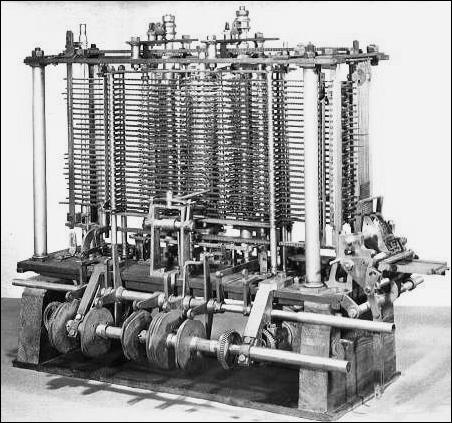

1360) Difference Engine

Summary

A difference engine is an automatic mechanical calculator designed to tabulate polynomial functions. It was designed in the 1820s and first created by Charles Babbage. The name derives from the method of divided differences, a way to interpolate or tabulate functions by using a small set of polynomial coefficients. Many of the most common mathematical functions used in engineering, science and navigation, including logarithmic and trigonometric functions, can be approximated by polynomials, so a difference engine can compute many useful tables of numbers.
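The tabulation method can be sketched in a few lines. For equally spaced arguments the differences reduce to plain subtractions, and once the first column of differences is known, every further table entry needs only additions. The function names and the sample polynomial x² + x + 41 are choices made for this illustration.

```python
def initial_differences(values: list[int]) -> list[int]:
    """From f(0), f(1), ..., f(d) return [f(0), Δf(0), Δ²f(0), ..., Δ^d f(0)]."""
    column = []
    row = list(values)
    while row:
        column.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]   # next order of differences
    return column

def tabulate(start: list[int], count: int) -> list[int]:
    """Produce `count` table entries using additions only."""
    regs = list(start)                 # one register per order of difference
    table = []
    for _ in range(count):
        table.append(regs[0])
        for i in range(len(regs) - 1):
            regs[i] += regs[i + 1]     # each register absorbs the one above it
    return table

f = lambda x: x * x + x + 41
start = initial_differences([f(0), f(1), f(2)])
print(start)                # [41, 2, 2]
print(tabulate(start, 6))   # [41, 43, 47, 53, 61, 71]
```

A polynomial of degree d needs d + 1 registers, because its d-th difference is constant; that constant is what lets the machine run without any multiplication.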

Details

The Difference Engine is an early calculating machine, verging on being the first computer, designed and partially built during the 1820s and ’30s by Charles Babbage. Babbage was an English mathematician and inventor; he invented the cowcatcher, reformed the British postal system, and was a pioneer in the fields of operations research and actuarial science. It was Babbage who first suggested that the weather of years past could be read from tree rings. He also had a lifelong fascination with keys, ciphers, and mechanical dolls (automatons).