Math Is Fun Forum


#1701 2023-03-18 19:33:12

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

1604) Crown glass (Optics)

Summary

Crown glass is a type of optical glass used in lenses and other optical components. It has a relatively low refractive index (≈1.52) and low dispersion (with Abbe numbers around 60). Crown glass is produced from alkali-lime silicates containing approximately 10% potassium oxide and is one of the earliest low-dispersion glasses.

As well as the specific material named crown glass, there are other optical glasses with similar properties that are also called crown glasses. Generally, this is any glass with an Abbe number in the range 50 to 85. For example, the borosilicate glass Schott BK7 is an extremely common crown glass, used in precision lenses. Schott designates it as 517642: the first three digits give its refractive index (1.517) and the last three give its Abbe number (64.2). Borosilicates contain about 10% boric oxide, have good optical and mechanical characteristics, and are resistant to chemical and environmental damage. Other additives used in crown glasses include zinc oxide, phosphorus pentoxide, barium oxide, fluorite and lanthanum oxide.

BAK-4 barium crown glass (Schott designation 569560, indicating a refractive index of 1.569 and an Abbe number of 56.0) has a higher index of refraction than BK7 and is used for prisms in high-end binoculars. In that application, it gives better image quality and a round exit pupil.
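The six-digit designations quoted above can be decoded mechanically. The following is a minimal Python sketch, assuming only the convention described in the text (the first three digits are the decimals of the refractive index, the last three are the Abbe number times ten). The function names `decode_glass_code` and `is_crown` are made up for illustration, and the 50 to 85 range is the rule of thumb given above rather than a formal standard.

```python
def decode_glass_code(code: str) -> tuple[float, float]:
    """Split a six-digit glass code into (refractive index, Abbe number)."""
    if len(code) != 6 or not code.isdigit():
        raise ValueError("expected a six-digit code such as '517642'")
    refractive_index = (1000 + int(code[:3])) / 1000  # 517 -> 1.517
    abbe_number = int(code[3:]) / 10                  # 642 -> 64.2
    return refractive_index, abbe_number


def is_crown(abbe_number: float) -> bool:
    """Rule of thumb from the text: crown glasses have Abbe numbers of about 50 to 85."""
    return 50 <= abbe_number <= 85


for name, code in [("BK7", "517642"), ("BAK-4", "569560")]:
    n, v = decode_glass_code(code)
    print(f"{name}: n = {n:.3f}, V = {v:.1f}, crown = {is_crown(v)}")
```

Both example codes decode to Abbe numbers inside the crown range, which matches how the two glasses are described.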

A concave lens of flint glass is commonly combined with a convex lens of crown glass to produce an achromatic doublet. The dispersions of the glasses partially compensate for each other, producing reduced chromatic aberration compared to a singlet lens with the same focal length.
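How the two dispersions offset each other can be illustrated with the standard thin-lens achromat condition: the element powers must add up to the power of the doublet, while the powers divided by the respective Abbe numbers must cancel. The sketch below is only an illustration under that idealised assumption (two thin lenses in contact). The function name is hypothetical, and the flint Abbe number of 36.4 is an assumed example value that does not come from the text above.

```python
def achromat_focal_lengths(f_total: float, v_crown: float, v_flint: float) -> tuple[float, float]:
    """Thin-lens achromatic doublet: focal lengths of the crown and flint elements.

    Solves  1/f1 + 1/f2 = 1/f_total  and  1/(f1*V1) + 1/(f2*V2) = 0.
    """
    if v_crown == v_flint:
        raise ValueError("the two glasses must have different Abbe numbers")
    f_crown = f_total * (v_crown - v_flint) / v_crown
    f_flint = -f_total * (v_crown - v_flint) / v_flint
    return f_crown, f_flint


# Assumed example: a 100 mm doublet, crown V = 64.2 (BK7), flint V = 36.4 (assumed)
f_crown, f_flint = achromat_focal_lengths(100.0, 64.2, 36.4)
print(f"crown element: {f_crown:.1f} mm, flint element: {f_flint:.1f} mm")
```

The crown element comes out with a positive (convex) focal length and the flint element with a negative (concave) one, in line with the arrangement described above.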

Details

Crown glass is handmade glass of soda-lime composition for domestic glazing or optical uses. The technique of crown glass remained standard from the earliest times: a bubble of glass, blown into a pear shape and flattened, was transferred to the glassmaker’s pontil (a solid iron rod), reheated and rotated at speed, until centrifugal force formed a large circular plate of up to 60 inches in diameter. The finished “table” of glass was thin, lustrous, highly polished (by “fire-polish”), and had concentric ripple lines, the result of spinning; crown glass was slightly convex, and in the centre of the crown was the bull’s eye, a thickened part where the pontil was attached. This was often cut out as a defect, but later it came to be prized as evidence of antiquity. Nevertheless, and despite the availability of cheaper cylinder glass (cast and rolled glass had been invented in the 17th century), crown glass was particularly popular for its superior quality and clarity. The crown process, which may have been Syrian in origin, was in use in Europe since at least the 14th century, when the industry was centred in Normandy, where a few families of glassblowers monopolized the trade and enjoyed a kind of aristocratic status. From about the mid-17th century the crown glass process was gradually replaced by easier methods of manufacturing larger glass sheets. Window glass of note, however, was made by this method in the U.S. by the Boston Crown Glass Company from 1793 to about 1827.

Crown glass has optical properties that complement those of the denser flint glass when the two kinds are used together to form lenses corrected for chromatic aberration. Special ingredients may be added to crown glass to achieve particular optical qualities.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1702 2023-03-19 15:16:45


1605) Ferry

Summary

A ferry is a ship, watercraft or amphibious vehicle used to carry passengers, and sometimes vehicles and cargo, across a body of water. A small passenger ferry with many stops, such as in Venice, Italy, is sometimes called a water bus or water taxi.

Ferries form a part of the public transport systems of many waterside cities and islands, allowing direct transit between points at a capital cost much lower than bridges or tunnels. Ship connections over much longer distances (for example, across water bodies such as the Mediterranean Sea) may also be called ferry services, and many carry vehicles.

Details

A ferry is a place where passengers, freight, or vehicles are carried by boat across a river, lake, arm of the sea, or other body of water. The term applies both to the place where the crossing is made and to the boat used for the purpose. By extension of the original meaning, ferry also denotes a short overwater flight by an airplane carrying passengers or freight or the flying of planes from one point to another as a means of delivering them.

Perhaps the most prominent early use of the term appears in Greek mythology, where Charon the ferryman carried the souls of the dead across the River Styx. Ferries were of great importance in ancient and medieval history, and their importance has persisted into the modern era. Before engineers learned to build permanent bridges over large bodies of water or construct tunnels under them, ferries offered the only means of crossing. Ferries include a wide variety of vessels, from the simplest canoes or rafts to large motor-driven ferries capable of carrying trucks and railway cars across vast expanses of water. The term is frequently used in combination with other words, as in the expressions train ferry, car ferry, and channel ferry.

In the early history of the United States, the colonists found that the coasts of the New World were broken by great bays and inlets and that the interior of the continent was divided by rivers that defied bridging for many generations. Crossing these rivers and bays was a necessity, however. At first, small boats propelled by oars or poles were the most common form of ferry. They were replaced later by large flatboats propelled by a form of long oar called a sweep. Sails were used when conditions were favourable and in some rivers the current itself provided the means of propulsion.

Horses were used on some ferries to walk a treadmill geared to paddle wheels; in others, horses were driven in a circle around a capstan that hauled in ropes and towed the ferry along its route. The first steam ferryboat in the United States was operated by John Fitch on the Delaware River in 1790, but it was not financially successful. The advent of steam power greatly improved ferryboats; they became larger, faster, and more reliable and began to take on a design different from other steamers. At cities divided by a river and where hundreds of people and many horse-drawn wagons had to cross the river daily, the typical U.S. ferryboat took shape. It was a double-ended vessel with side paddle wheels and a rudder and pilothouse on both ends. The pilothouses were on an upper deck, and the lower deck was arranged to hold as many vehicles as possible. A narrow passageway ran along each side of the lower deck with stairways to give passengers access to the upper deck. The engine was of the walking beam type with the beam mounted on a pedestal so high that it was visible above the upper deck.

Terminals to accommodate such ferries were built at each end of their routes. In order to dock promptly and permit wheeled vehicles to move on and off quickly, a platform with one end supported by a pivot on land and the other end supported by floats in the water was sometimes provided. As roads improved and the use of automobiles and large motor trucks increased, ferries became larger and faster, but the hull arrangement remained the same. High-speed steam engines with propellers on both ends of the ferry were used. Steam engines gave way to diesel engines, diesel-electric drives, and, in some cases, hovercraft. Several states organized commissions which took over ferries from private ownership and operated them for the public; these commissions frequently also operated bridges, public roads, and vehicular tunnels. Increase in the use of motor vehicles so overtaxed many ferries that they could not handle the load. As a result, more bridges and tunnels were built, and ferries began to disappear, but their use on some inland rivers and lakes still continues. Commuter ferries remained popular in densely populated coastal communities.


#1703 2023-03-20 14:22:23


1606) Scholarship

Summary

Scholarship:

1. learning; knowledge acquired by study; the academic attainments of a scholar.

2. a sum of money or other aid granted to a student, because of merit, need, etc., to pursue his or her studies.

3. the position or status of such a student.

4. a foundation to provide financial assistance to students.

Details

A scholarship is a form of financial aid awarded to students for further education. Generally, scholarships are awarded based on a set of criteria such as academic merit, diversity and inclusion, athletic skill, and financial need.

Scholarship criteria usually reflect the values and goals of the donor of the award. While scholarship recipients are not required to repay scholarships, the awards may require that the recipient continue to meet certain requirements during their period of support, such as maintaining a minimum grade point average or engaging in a certain activity (e.g., playing on a school sports team for athletic scholarship holders).

Scholarships also vary in generosity, from covering part of the tuition all the way to a 'full ride' that covers tuition, accommodation, and other costs.

Some prestigious, highly competitive scholarships are well-known even outside the academic community, such as the Fulbright Scholarship and the Rhodes Scholarships at the graduate level, and the Robertson, Morehead-Cain and Jefferson Scholarships at the undergraduate level.

Scholarships vs. grants

While the terms scholarship and grant are frequently used interchangeably, they are distinctly different. Where grants are offered based exclusively on financial need, scholarships may have a financial need component but rely on other criteria as well.

* Academic scholarships typically use a minimum grade-point average or standardized test score such as the ACT or SAT to narrow down awardees.

* Athletic scholarships are generally based on athletic performance of a student and used as a tool to recruit high-performing athletes for their school's athletic teams.

* Merit scholarships can be based on a number of criteria, including performance in a particular school subject or club participation or community service.

A federal Pell Grant can be awarded to someone planning to receive their undergraduate degree and is solely based on their financial needs.

Types


The most common scholarships may be classified as:

* Merit-based: These awards are based on a student's academic, artistic, athletic, or other abilities, and often factor in an applicant's extracurricular activities and community service record. Most such merit-based scholarships are paid directly by the institution the student attends, rather than issued directly to the student.

* Need-based: Some private need-based awards are confusingly called scholarships, and require the results of a FAFSA (the family's expected family contribution). However, scholarships are often merit-based, while grants tend to be need-based.

* Student-specific: These are scholarships for which applicants must initially qualify based upon gender, race, religion, family and medical history, or many other student-specific factors. Minority scholarships are the most common awards in this category. For example, students in Canada may qualify for a number of Indigenous scholarships, whether they study at home or abroad. The Gates Millennium Scholars Program is another minority scholarship, funded by Bill and Melinda Gates for excellent African American, American Indian, Asian Pacific Islander American, and Latino students who enroll in college.

* Career-specific: These are scholarships a college or university awards to students who plan to pursue a specific field of study. Often, the most generous awards go to students who pursue careers in high-need areas, such as education or nursing. Many schools in the United States give future nurses full scholarships to enter the field, especially if the student intends to work in a high-need community.

* College-specific: College-specific scholarships are offered by individual colleges and universities to highly qualified applicants. These scholarships are given on the basis of academic and personal achievement. Some scholarships have a "bond" requirement. Recipients may be required to work for a particular employer for a specified period of time or to work in rural or remote areas; otherwise, they may be required to repay the value of the support they received from the scholarship. This is particularly the case with education and nursing scholarships for people prepared to work in rural and remote areas. The programs offered by the uniformed services of the United States (Army, Navy, Marine Corps, Air Force, Coast Guard, National Oceanic and Atmospheric Administration Commissioned Officer Corps, and Public Health Service Commissioned Corps) sometimes resemble such scholarships.

* Athletic: Awarded to students with exceptional skill in a sport. Often this is so that the student will be available to attend the school or college and play the sport on their team, although in some countries government funded sports scholarships are available, allowing scholarship holders to train for international representation. School-based athletics scholarships can be controversial, as some believe that awarding scholarship money for athletic rather than academic or intellectual purposes is not in the institution's best interest.

* Brand: These scholarships are sponsored by a corporation that is trying to gain attention to their brand, or a cause. Sometimes these scholarships are referred to as branded scholarships. The Miss America beauty pageant is a famous example of a brand scholarship.

* Creative contest: These scholarships are awarded to students based on a creative submission. Contest scholarships are also called mini project-based scholarships, where students can submit entries based on unique and innovative ideas.

* "Last dollar": can be provided by private and government-based institutions, and are intended to cover the remaining fees charged to a student after the various grants are taken into account. To prohibit institutions from taking last dollar scholarships into account, and thereby removing other sources of funding, these scholarships are not offered until after financial aid has been offered in the form of a letter. Furthermore, last dollar scholarships may require families to have filed taxes for the most recent year, received their other sources of financial aid, and not yet received loans.
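The arithmetic behind a "last dollar" award can be sketched in a few lines of Python. This is only an illustration of the idea described in the last item above (cover whatever fees remain once other grants are counted); the function name and the figures are hypothetical, and real programs may apply additional eligibility rules.

```python
def last_dollar_award(fees: float, other_grants: list[float]) -> float:
    """Amount needed to cover the fees that remain after other grants are applied."""
    remaining = fees - sum(other_grants)
    # A last-dollar award never goes below zero: if grants already cover
    # the fees, nothing further is paid.
    return max(0.0, remaining)


# Hypothetical figures: 12,000 in fees, two grants of 5,000 and 3,000
print(last_dollar_award(12_000, [5_000, 3_000]))
```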


#1704 2023-03-24 21:14:42


1607) Sepsis

Summary

Sepsis, formerly known as septicemia (septicaemia in British English) or blood poisoning, is a life-threatening condition that arises when the body's response to infection causes injury to its own tissues and organs.

The initial stage of sepsis is followed by suppression of the immune system. Common signs and symptoms include fever, increased heart rate, increased breathing rate, and confusion. There may also be symptoms related to a specific infection, such as a cough with pneumonia, or painful urination with a kidney infection. The very young, old, and people with a weakened immune system may have no symptoms of a specific infection, and the body temperature may be low or normal instead of having a fever. Severe sepsis causes poor organ function or blood flow. The presence of low blood pressure, high blood lactate, or low urine output may suggest poor blood flow. Septic shock is low blood pressure due to sepsis that does not improve after fluid replacement.

Sepsis is caused by many organisms including bacteria, viruses and fungi. Common locations for the primary infection include the lungs, brain, urinary tract, skin, and abdominal organs. Risk factors include being very young or old, a weakened immune system from conditions such as cancer or diabetes, major trauma, and burns. Previously, a sepsis diagnosis required the presence of at least two systemic inflammatory response syndrome (SIRS) criteria in the setting of presumed infection. In 2016, a shortened sequential organ failure assessment score (SOFA score), known as the quick SOFA score (qSOFA), replaced the SIRS system of diagnosis. qSOFA criteria for sepsis include at least two of the following three: increased breathing rate, change in the level of consciousness, and low blood pressure. Sepsis guidelines recommend obtaining blood cultures before starting antibiotics; however, the diagnosis does not require the blood to be infected. Medical imaging is helpful when looking for the possible location of the infection. Other potential causes of similar signs and symptoms include anaphylaxis, adrenal insufficiency, low blood volume, heart failure, and pulmonary embolism.
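The "at least two of three" rule for qSOFA described above can be written as a simple count. The sketch below is purely illustrative: the function names are hypothetical, and the three findings are passed in as yes/no values because the text above does not give the numerical cut-offs for them.

```python
def qsofa_score(increased_breathing_rate: bool,
                altered_consciousness: bool,
                low_blood_pressure: bool) -> int:
    """Count how many of the three qSOFA findings are present (0 to 3)."""
    return sum([increased_breathing_rate, altered_consciousness, low_blood_pressure])


def meets_qsofa(increased_breathing_rate: bool,
                altered_consciousness: bool,
                low_blood_pressure: bool) -> bool:
    """True when at least two of the three findings are present."""
    return qsofa_score(increased_breathing_rate,
                       altered_consciousness,
                       low_blood_pressure) >= 2


# Example: fast breathing and low blood pressure, normal consciousness
print(meets_qsofa(True, False, True))
```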

Sepsis requires immediate treatment with intravenous fluids and antimicrobials. Ongoing care often continues in an intensive care unit. If an adequate trial of fluid replacement is not enough to maintain blood pressure, then the use of medications that raise blood pressure becomes necessary. Mechanical ventilation and dialysis may be needed to support the function of the lungs and kidneys, respectively. A central venous catheter and an arterial catheter may be placed for access to the bloodstream and to guide treatment. Other helpful measurements include cardiac output and superior vena cava oxygen saturation. People with sepsis need preventive measures for deep vein thrombosis, stress ulcers, and pressure ulcers unless other conditions prevent such interventions. Some people might benefit from tight control of blood sugar levels with insulin. The use of corticosteroids is controversial, with some reviews finding benefit, and others not.

Disease severity partly determines the outcome. The risk of death from sepsis is as high as 30%, while for severe sepsis it is as high as 50%, and for septic shock 80%. Sepsis affected about 49 million people in 2017, with 11 million deaths (1 in 5 deaths worldwide). In the developed world, approximately 0.2 to 3 people per 1000 are affected by sepsis yearly, resulting in about a million cases per year in the United States. Rates of disease have been increasing. Some data indicate that sepsis is more common among males than females; however, other data show a greater prevalence of the disease among women. Descriptions of sepsis date back to the time of Hippocrates.

Details

What is Sepsis?


Anyone can get an infection, and almost any infection, including COVID-19, can lead to sepsis. In a typical year:

* At least 1.7 million adults in America develop sepsis.

* At least 350,000 adults who develop sepsis die during their hospitalization or are discharged to hospice.

* 1 in 3 people who die in a hospital had sepsis during that hospitalization.

* Sepsis, or the infection causing sepsis, starts before a patient goes to the hospital in nearly 87% of cases.

Sepsis is the body’s extreme response to an infection. It is a life-threatening medical emergency. Sepsis happens when an infection you already have triggers a chain reaction throughout your body. Infections that lead to sepsis most often start in the lung, urinary tract, skin, or gastrointestinal tract. Without timely treatment, sepsis can rapidly lead to tissue damage, organ failure, and death.

Is sepsis contagious?

You can’t spread sepsis to other people. However, an infection can lead to sepsis, and you can spread some infections to other people.


What causes sepsis?

Infections can put you or your loved one at risk for sepsis. When germs get into a person’s body, they can cause an infection. If you don’t stop that infection, it can cause sepsis. Bacterial infections cause most cases of sepsis. Sepsis can also be a result of other infections, including viral infections, such as COVID-19 or influenza, or fungal infections.

Who is at risk?

Anyone can develop sepsis, but some people are at higher risk for sepsis:

* Adults 65 or older

* People with weakened immune systems

* People with chronic medical conditions, such as diabetes, lung disease, cancer, and kidney disease

* People with recent severe illness or hospitalization

What are the signs & symptoms?

A person with sepsis might have one or more of the following signs or symptoms:

* High heart rate or weak pulse

* Confusion or disorientation

* Extreme pain or discomfort

* Fever, shivering, or feeling very cold

* Shortness of breath

* Clammy or sweaty skin

A medical assessment by a healthcare professional is needed to confirm sepsis.

What should I do if I think I might have sepsis?

Sepsis is a medical emergency. If you or your loved one has an infection that’s not getting better or is getting worse, ACT FAST.

Get medical care IMMEDIATELY. Ask your healthcare professional, “Could this infection be leading to sepsis?” and if you should go to the emergency room.

If you have a medical emergency, call 911. If you have or think you have sepsis, tell the operator. If you have or think you have COVID-19, tell the operator this as well. If possible, put on a mask before medical help arrives.

With fast recognition and treatment, most people survive. Treatment requires urgent medical care, usually in an intensive care unit in a hospital, and includes careful monitoring of vital signs and often antibiotics.

Additional Information

Sepsis is a systemic inflammatory condition that occurs as a complication of infection and in severe cases may be associated with acute and life-threatening organ dysfunction. Worldwide, sepsis has long been a common cause of illness and mortality in hospitals, intensive care units, and emergency departments. In 2017 alone, an estimated 11 million people worldwide died from sepsis, accounting for nearly one-fifth of all deaths globally that year. Nonetheless, this number marked a decrease in sepsis death rates from the last part of the 20th century. Improvements in health care, including better sanitation and the development of more effective treatments, were thought to have contributed to the decline.

Populations most susceptible to sepsis include the elderly and persons who are severely ill and hospitalized. In the early 21st century, other factors, including increased life expectancy for persons with immunodeficiency disorders (e.g., HIV/AIDS), increased incidence of antibiotic resistance, and increased use of anticancer chemotherapy and immunosuppressive drugs (e.g., for organ transplantation), have emerged as important risk factors of sepsis.

Risk factors, symptoms, and diagnosis

In addition to the elderly and to persons with weak immune systems, newborns, pregnant women, and individuals affected by chronic diseases such as diabetes mellitus are also highly susceptible to sepsis. Other risk factors include hospitalization and the introduction of medical devices (e.g., surgical instruments) into the body. Early symptoms of sepsis include increased heart rate, increased respiratory rate, suspected or confirmed infection, and increased or decreased body temperature (i.e., greater than 101.3 °F [38.5 °C] or lower than 95 °F [35 °C]). Diagnosis is based on the presence of at least two of these symptoms. In many instances, however, the condition is not diagnosed until it has progressed to severe sepsis, which is characterized by symptoms of organ dysfunction, including irregular heartbeat, laboured breathing, confusion, dizziness, decreased urinary output, and skin discoloration. The condition may then progress to septic shock, which occurs when the above symptoms are accompanied by a marked drop in blood pressure. Severe sepsis and septic shock may also involve the failure of two or more organ systems, at which point the condition may be described as multiple organ dysfunction syndrome (MODS). The condition may progress through these stages in a matter of hours, days, or weeks, depending on treatment and other factors.

Treatment and complications

Prompt treatment is required in order to decrease the risk of progression to septic shock or MODS. Initial treatment includes the emergency intravenous administration of fluids and antibiotics. Vasoconstrictor drugs also may be given intravenously to raise blood pressure, and patients who experience breathing difficulties sometimes require mechanical ventilation. Dialysis, which helps clear the blood of infectious agents, is initiated when kidney failure is evident, and surgery may be used to drain an infection.

Many patients experience a decrease in quality of life following sepsis, particularly if the patient is older or the attack severe. Acute lung injury and neuronal injury resulting from sepsis, for example, have been associated with long-term cognitive impairment. Older persons who suffer from such complications may not be able to live independently following their recovery from sepsis and often require long-term treatment with medication.

Pathophysiology

At the cellular level, sepsis is characterized by changes in the function of endothelial tissue (the endothelium forms the inner surface of blood vessels), in the coagulation (blood clotting) process, and in blood flow. These changes appear to be initiated by the cellular release of pro-inflammatory substances in response to the presence of infectious microorganisms. The substances, which include short-lived regulatory proteins known as cytokines, in turn interact with endothelial cells and thereby cause injury to the endothelium and possibly the death (apoptosis) of endothelial cells. These interactions lead to the activation of coagulation factors. In very small blood vessels (microvessels), the coagulation response, in combination with endothelial damage, may impede blood flow and cause the vessels to become leaky. As fluid and microorganisms escape into the surrounding tissues, the tissues begin to swell (edema); in the lungs this leads to pulmonary edema, which manifests as shortness of breath. If the supply of coagulation proteins becomes exhausted, bleeding may ensue. Cytokines also cause blood vessels to dilate (widen), producing a decrease in blood pressure. The damage incited by the inflammatory response is widespread and has been described as a “pan-endothelial” effect because of the distribution of endothelial tissue in blood vessels throughout the body; this effect appears to explain the systemic nature of sepsis.

The existence of multiple conditions that are characterized by similar symptoms complicates the clinical picture of sepsis. For example, sepsis is closely related to bacteremia, which is the infection of blood with bacteria, and septicemia, which is a systemic inflammatory condition caused specifically by bacteria and typically associated with bacteremia. Sepsis differs from these conditions in that it may arise in response to infection with any of a variety of microorganisms, including bacteria, viruses, protozoans, and fungi. However, the occasional progression of septicemia to more-advanced stages of sepsis and the frequent involvement of bacterial infection in sepsis preclude clear clinical distinction between these conditions. Sepsis is also distinguished from systemic inflammatory response syndrome (SIRS), a condition that can arise independent of infection (e.g., from factors such as burns or trauma).

Sepsis through history

One of the first medical descriptions of putrefaction and a sepsis-like condition was provided in the 5th and 4th centuries BCE in works attributed to the ancient Greek physician Hippocrates (the Greek word sepsis means “putrefaction”). With no knowledge of infectious microorganisms, the ancient Greeks and the physicians who came after them variably associated the condition with digestive illness, miasma (infection by bad air), and spontaneous generation. These apocryphal associations persisted until the 19th century, when infection finally was discovered to be the underlying cause of sepsis, a realization that emerged from the work of British surgeon and medical scientist Sir Joseph Lister and French chemist and microbiologist Louis Pasteur.


#1705 2023-03-26 23:27:04


1608) Defense Mechanism

Defense mechanism, in psychoanalytic theory, is any of a group of mental processes that enables the mind to reach compromise solutions to conflicts that it is unable to resolve. The process is usually unconscious, and the compromise generally involves concealing from oneself internal drives or feelings that threaten to lower self-esteem or provoke anxiety. The concept derives from the psychoanalytic hypothesis that there are forces in the mind that oppose and battle against each other. The term was first used in Sigmund Freud’s paper “The Neuro-Psychoses of Defence” (1894).

Some of the major defense mechanisms described by psychoanalysts are the following:

1. Repression is the withdrawal from consciousness of an unwanted idea, affect, or desire by pushing it down, or repressing it, into the unconscious part of the mind. An example may be found in a case of hysterical amnesia, in which the victim has performed or witnessed some disturbing act and then completely forgotten the act itself and the circumstances surrounding it.

2. Reaction formation is the fixation in consciousness of an idea, affect, or desire that is opposite to a feared unconscious impulse. A mother who bears an unwanted child, for example, may react to her feelings of guilt for not wanting the child by becoming extremely solicitous and overprotective to convince both the child and herself that she is a good mother.

3. Projection is a form of defense in which unwanted feelings are displaced onto another person, where they then appear as a threat from the external world. A common form of projection occurs when an individual, threatened by his own angry feelings, accuses another of harbouring hostile thoughts.

4. Regression is a return to earlier stages of development and abandoned forms of gratification belonging to them, prompted by dangers or conflicts arising at one of the later stages. A young wife, for example, might retreat to the security of her parents’ home after her first quarrel with her husband.

5. Sublimation is the diversion or deflection of instinctual drives, usually sexual ones, into noninstinctual channels. Psychoanalytic theory holds that the energy invested in sexual impulses can be shifted to the pursuit of more acceptable and even socially valuable achievements, such as artistic or scientific endeavours.

6. Denial is the conscious refusal to perceive that painful facts exist. In denying latent feelings of homosexuality or hostility, or mental defects in one’s child, an individual can escape intolerable thoughts, feelings, or events.

7. Rationalization is the substitution of a safe and reasonable explanation for the true (but threatening) cause of behaviour.

Psychoanalysts emphasize that the use of a defense mechanism is a normal part of personality function and not in and of itself a sign of psychological disorder. Various psychological disorders, however, can be characterized by an excessive or rigid use of these defenses.

Additional Information

In psychoanalytic theory, a defence mechanism (American English: defense mechanism) is an unconscious psychological operation that functions to protect a person from anxiety-producing thoughts and feelings related to internal conflicts and outer stressors.

The idea of defence mechanisms comes from psychoanalytic theory, a psychological perspective of personality that sees personality as the interaction between three components: id, ego, and super-ego. These psychological strategies may help people put distance between themselves and threats or unwanted feelings, such as guilt or shame.

Defence mechanisms may result in healthy or unhealthy consequences depending on the circumstances and frequency with which the mechanism is used. Defence mechanisms (German: Abwehrmechanismen) are psychological strategies brought into play by the unconscious mind to manipulate, deny, or distort reality in order to defend against feelings of anxiety and unacceptable impulses and to maintain one's self-schema or other schemas. These processes that manipulate, deny, or distort reality may include the following: repression, or the burying of a painful feeling or thought from one's awareness even though it may resurface in a symbolic form; identification, incorporating an object or thought into oneself; and rationalization, the justification of one's behaviour and motivations by substituting "good" acceptable reasons for the actual motivations. In psychoanalytic theory, repression is considered the basis for other defence mechanisms.

According to this theory, healthy people normally use different defence mechanisms throughout life. A defence mechanism becomes pathological only when its persistent use leads to maladaptive behaviour such that the physical or mental health of the individual is adversely affected. Among the purposes of ego defence mechanisms is to protect the mind/self/ego from anxiety or social sanctions or to provide a refuge from a situation with which one cannot currently cope.

One resource used to evaluate these mechanisms is the Defense Style Questionnaire (DSQ-40).

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#1706 2023-03-27 16:34:02

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

1609) Electricity

Gist

Electricity is the flow of electrical power or charge. Electricity is both a basic part of nature and one of the most widely used forms of energy.

Summary

Electricity is the set of physical phenomena associated with the presence and motion of matter that has a property of electric charge. Electricity is related to magnetism, both being part of the phenomenon of electromagnetism, as described by Maxwell's equations. Various common phenomena are related to electricity, including lightning, static electricity, electric heating, electric discharges and many others.

The presence of either a positive or negative electric charge produces an electric field. The movement of electric charges is an electric current and produces a magnetic field. In most applications, a force acts on a charge with a magnitude given by Coulomb's law. Electric potential is typically measured in volts.

Electricity is at the heart of many modern technologies, being used for:

* Electric power where electric current is used to energise equipment;

* Electronics which deals with electrical circuits that involve active electrical components such as vacuum tubes, transistors, diodes and integrated circuits, and associated passive interconnection technologies.

Electrical phenomena have been studied since antiquity, though progress in theoretical understanding remained slow until the 17th and 18th centuries. The theory of electromagnetism was developed in the 19th century, and by the end of that century electricity was being put to industrial and residential use by electrical engineers. The rapid expansion in electrical technology at this time transformed industry and society, becoming a driving force for the Second Industrial Revolution. Electricity's extraordinary versatility means it can be put to an almost limitless set of applications which include transport, heating, lighting, communications, and computation. Electrical power is now the backbone of modern industrial society.

Details

Electricity is a phenomenon associated with stationary or moving electric charges. Electric charge is a fundamental property of matter and is borne by elementary particles. In electricity the particle involved is the electron, which carries a charge designated, by convention, as negative. Thus, the various manifestations of electricity are the result of the accumulation or motion of numbers of electrons.

Electrostatics

Electrostatics is the study of electromagnetic phenomena that occur when there are no moving charges—i.e., after a static equilibrium has been established. Charges reach their equilibrium positions rapidly because the electric force is extremely strong. The mathematical methods of electrostatics make it possible to calculate the distributions of the electric field and of the electric potential from a known configuration of charges, conductors, and insulators. Conversely, given a set of conductors with known potentials, it is possible to calculate electric fields in regions between the conductors and to determine the charge distribution on the surface of the conductors. The electric energy of a set of charges at rest can be viewed from the standpoint of the work required to assemble the charges; alternatively, the energy also can be considered to reside in the electric field produced by this assembly of charges. Finally, energy can be stored in a capacitor; the energy required to charge such a device is stored in it as electrostatic energy of the electric field.
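As a rough illustration of the last point, the energy stored in an ideal capacitor is usually written U = (1/2) C V^2, or equivalently U = Q^2 / (2C) with Q = C V. The short Python sketch below simply evaluates both forms. The function names and the component values (100 microfarads, 12 volts) are assumptions chosen for illustration; they do not come from the text above.

```python
import math


def capacitor_energy(capacitance, voltage):
    """Energy in joules stored in an ideal capacitor: U = 1/2 * C * V^2."""
    return 0.5 * capacitance * voltage ** 2


def capacitor_energy_from_charge(capacitance, charge):
    """Same energy expressed through the stored charge: U = Q^2 / (2 * C)."""
    return charge ** 2 / (2.0 * capacitance)


# Illustrative values only (assumed): a 100 microfarad capacitor charged to 12 V.
C = 100e-6          # farads
V = 12.0            # volts
Q = C * V           # coulombs, from Q = C * V

u_from_voltage = capacitor_energy(C, V)
u_from_charge = capacitor_energy_from_charge(C, Q)

print(f"U from voltage: {u_from_voltage:.4e} J")
print(f"U from charge:  {u_from_charge:.4e} J")
print("Both forms agree:", math.isclose(u_from_voltage, u_from_charge))
```

With these assumed values both expressions give about 7.2 millijoules, which is the work needed to charge the capacitor and the energy held in its electric field.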

Coulomb’s law

Static electricity is a familiar electric phenomenon in which charged particles are transferred from one body to another. For example, if two objects are rubbed together, especially if the objects are insulators and the surrounding air is dry, the objects acquire equal and opposite charges and an attractive force develops between them. The object that loses electrons becomes positively charged, and the other becomes negatively charged. The force is simply the attraction between charges of opposite sign. The properties of this force are incorporated in the mathematical relationship known as Coulomb’s law.
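For reference, Coulomb's law for two point charges q1 and q2 separated by a distance r is commonly written F = k q1 q2 / r^2, where k = 1 / (4 pi epsilon_0). The Python sketch below evaluates it for a pair of equal and opposite charges. The function name, the sign convention (negative means attractive) and the sample charges are assumptions made for illustration, not part of the text above.

```python
import math

# Vacuum permittivity in farads per metre (rounded CODATA value).
EPSILON_0 = 8.8541878128e-12
# Coulomb constant k = 1 / (4 * pi * epsilon_0), roughly 8.99e9 N m^2 / C^2.
COULOMB_K = 1.0 / (4.0 * math.pi * EPSILON_0)


def coulomb_force(q1, q2, r):
    """Signed force in newtons between two point charges.

    q1, q2 are in coulombs and r is the separation in metres.
    A negative result means attraction (opposite signs),
    a positive result means repulsion (like signs).
    """
    if r <= 0:
        raise ValueError("separation r must be positive")
    return COULOMB_K * q1 * q2 / r ** 2


# Illustrative values only (assumed): +1 and -1 microcoulomb, 10 cm apart.
force = coulomb_force(1e-6, -1e-6, 0.10)
print(f"Force: {force:.3f} N")

# Doubling the distance cuts the magnitude to a quarter (inverse-square law).
ratio = coulomb_force(1e-6, -1e-6, 0.20) / force
print(f"Ratio at double distance: {ratio:.2f}")
```

With these assumed values the force is a little under 0.9 newtons and attractive, and doubling the separation reduces it to one quarter, as the inverse-square form requires.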

Additional Information

Electricity is central to many parts of life in modern societies and will become even more so as its role in transport and heating expands through technologies such as electric vehicles and heat pumps. Power generation is currently the largest source of carbon dioxide (CO2) emissions globally, but it is also the sector that is leading the transition to net zero emissions through the rapid ramping up of renewables such as solar and wind. At the same time, the current global energy crisis has placed electricity security and affordability high on the political agenda in many countries.

Electricity consumption in the European Union recorded a sharp 3.5% decline year-on-year (y-o-y) in 2022 as the region was particularly hard hit by high energy prices, which led to significant demand destruction among industrial consumers. Electricity demand in India and the United States rose, while Covid restrictions affected China’s growth.

#1707 2023-03-28 15:18:51

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

1610) Occupational Therapy

Gist

Occupational therapy (OT) is a branch of health care that helps people of all ages who have physical, sensory, or cognitive problems. OT can help them regain independence in all areas of their lives. Occupational therapists help with barriers that affect a person's emotional, social, and physical needs.

Summary

Occupational therapy (OT) is a healthcare profession. It involves the use of assessment and intervention to develop, recover, or maintain the meaningful activities, or occupations, of individuals, groups, or communities. The field of OT consists of health care practitioners trained and educated to improve mental and physical performance. Occupational therapists specialize in teaching, educating, and supporting participation in any activity that occupies an individual's time. It is an independent health profession sometimes categorized as an allied health profession and consists of occupational therapists (OTs) and occupational therapy assistants (OTAs). While OTs and OTAs have different roles, they both work with people who want to improve their mental and/or physical health, disabilities, injuries, or impairments.

The American Occupational Therapy Association defines an occupational therapist as someone who "helps people across their lifespan participate in the things they want and/or need to do through the therapeutic use of everyday activities (occupations)". Definitions by professional occupational therapy organizations outside North America are similar in content.

Common interventions include:

* Helping children with disabilities to participate in school and social situations (independent mobility is often a central concern)

* Training in assistive device technology, meaningful and purposeful activities, and life skills

* Physical injury rehabilitation

* Mental dysfunction rehabilitation

* Support of individuals across the age spectrum experiencing physical and cognitive changes

* Assessing ergonomics and assistive seating options to maximize independent function, while alleviating the risk of pressure injury

* Education in the disease and rehabilitation process

* Advocating for patient health

Typically, occupational therapists are university-educated professionals and must pass a licensing exam to practice.

Currently, entry-level occupational therapists must have a master's degree, while certified occupational therapy assistants require a two-year associate's degree to practice in the United States. Individuals must pass a national board certification and apply for a state license in most states. Occupational therapists often work closely with professionals in physical therapy, speech–language pathology, audiology, nursing, nutrition, social work, psychology, medicine, and assistive technology.

Details

Occupational therapy aims to improve your ability to do everyday tasks if you're having difficulties.

How to get occupational therapy

You can get occupational therapy free through the NHS or social services, depending on your situation.

You can:

* speak to a GP about a referral

* search for your local council to ask if you can get occupational therapy

You can also pay for it yourself. The Royal College of Occupational Therapists lists qualified and registered occupational therapists.

Find an occupational therapist

You can check an occupational therapist is qualified and registered with the Health & Care Professions Council (HCPC) using its online register of health and care professionals.

How occupational therapy can help you

Occupational therapy can help you with practical tasks if you:

* are physically disabled

* are recovering from an illness or operation

* have learning disabilities

* have mental health problems

* are getting older

Occupational therapists work with people of all ages and can look at all aspects of daily life in your home, school or workplace.

They look at activities you find difficult and see if there's another way you can do them.

The Royal College of Occupational Therapists has more information about what occupational therapy is.

Additional Information

Occupational therapy is the use of self-care and work and play activities to promote and maintain health, prevent disability, increase independent function, and enhance development. Occupation includes all the activities or tasks that a person performs each day. For example, getting dressed, playing a sport, taking a class, cooking a meal, getting together with friends, and working at a job are considered occupations. Participation in occupations serves many purposes, from taking care of oneself and interacting with others to earning a living, developing skills, and contributing to society.

An occupational therapist works with persons who are unable to carry out the various activities that they want, need, or are expected to perform. Therapists are skilled in analyzing daily activities and tasks, and they work to construct therapy programs that enable persons to participate more satisfactorily in daily occupations. Occupational therapy intervention and the organization of specific therapy programs are coordinated with the work of other professional and health care personnel.

History

The discipline of occupational therapy evolved from the recognition many years ago that participation in work and other restorative activities improved the health of persons affected by mental or physical illness. In fact, patients have long been employed in the utility services of psychiatric hospitals. In the 19th century the moral treatment approach proposed the use of daily activities to improve the lives of people who were institutionalized for mental illness. By the early 20th century, experiments were being made in the use of arts and craft activities to occupy persons with serious mental disorders. This practice gave rise to the first occupational therapy workshops and later to schools for the training of occupational therapists.

The goal of early occupational therapy was to improve health through structured activities. World War I emphasized the need for occupational therapy, since the physical rehabilitation of veterans provided them an opportunity to return to productive work. In 1917, coincident with the increase in demand to aid veterans in the United States, the National Society for the Promotion of Occupational Therapy (later the American Occupational Therapy Association) was founded. Subsequent advancements in occupational therapy included the development of techniques used to analyze activities and the prescription of specific crafts and occupations for patients, particularly for young people and for patients within hospitals. In 1952 the World Federation of Occupational Therapists was formed, and in 1954 the first international congress of occupational therapists was held at Edinburgh.

In the latter part of the 20th century and early part of the 21st century, the development and refinement of theoretical models to guide occupational therapy assessment and intervention further advanced the practice of occupational therapy. These theories focus on the complex relationships between the motivations and skills of patients, the occupations that bring meaning to their lives, and the environments in which they live. Occupational science was developed to support the study of occupation and its complexity in everyday life. As a result, research in occupational therapy has grown substantially and has played an important role in providing scientific evidence to support many occupational therapy interventions.

Modern occupational therapy

Occupational therapists work with individuals of all ages and with various organizations, including companies and governments. The practice of occupational therapy focuses on maintenance of health, prevention of disability, and improvement of participation in occupations after illness, accident, or disability. Thus, therapists typically work with persons who have physical challenges in occupations because of illness, injury, or disability. They also work with persons who are at risk for decreased participation in their occupations. For example, programs for older adults that adapt their living environments to minimize the risk for a fall help them to continue to live in the community.

Establishing therapist-patient partnerships is an important part of a successful therapy program. Initial assessments enable patients to identify the occupations that are most meaningful to them but that they have difficulty performing. This helps therapists tailor programs to each patient’s needs and goals. Modern occupational therapy also focuses on the analysis, adaptation, and use of daily occupations to enable persons to live fully within their community. Each person’s day is filled with a variety of different activities and tasks, such as getting dressed, taking a bus, making a phone call, writing a report, loading equipment at work, or playing a game. Occupational therapists are trained to analyze these activities and tasks to determine what skills and abilities are required to complete them. If a person has difficulty engaging fully in day-to-day occupations, a therapist works with that person to assess why he or she cannot perform the specific activities and tasks that make up an occupation. Factors within the activity, the person, and the environment are examined to determine reasons for difficulties in performance. The occupational therapist and the person then develop a plan to improve performance through active participation in the occupation. Therapy may focus on improving a person’s skills through participation in the activity, adapting the activity to make it easier, or changing the environment to improve performance.

Examples of applied occupational therapy

The approaches that occupational therapists use to maintain and improve participation in the daily activities and tasks of patients can be illustrated by specific cases. The following examples explore several different situations that may be encountered by therapists.

In the first example, a young child who has cerebral palsy has difficulty learning to dress himself because of limitations in movement and coordination. With his parents, an occupational therapist plans a program to teach the most efficient methods for dressing. Changes to clothing, such as the addition of velcro closures or elastic shoelaces, may be used to adapt the activity. Methods of practice are taught to the parents. Specific activities are practiced throughout his day to help him improve his motor skills. At preschool, the therapist consults with the teacher to provide information about the child’s abilities and how to change the classroom environment to enhance his functioning.

In a second example, an older adult who had a mild stroke is experiencing depression and is uncertain whether she can continue to live in her apartment. A community occupational therapist assesses the woman’s interests and required daily activities and develops a plan for engagement in activities in her apartment and in the community. As she participates in these activities, she gains confidence and improves her ability to live independently. The therapist also makes adaptations to the woman’s kitchen so that she can reach utensils and make her meals easily and safely.

In a third example, after a motor vehicle accident, a 45-year-old woman is unable to return to work as an administrative assistant because of a neck injury. An occupational therapist analyzes the demands of the woman’s job and her ability to complete work-oriented tasks. The therapist makes changes to the woman’s work area to minimize pain and fatigue. The therapist also creates a paced return-to-work schedule, allowing the woman to improve her endurance gradually in order to achieve a successful return to her workplace.

In a fourth example, knowing that about 15 percent of people living in their country have a disability, the leaders of a community of 60,000 citizens decide to improve access to local recreation and leisure programs. An occupational therapist is hired to conduct an accessibility audit of the programs and their physical locations. Recommendations are provided to decrease physical, attitudinal, and policy barriers that may limit full participation.

In these examples, occupational therapy enhanced a person’s ability to participate by improving his or her skills or by adapting the activity or changing the environment. The continued advance of occupational science, which enables new findings from research to be considered along with assessment of the client’s needs, forms an important part of the success of the therapeutic approaches described above.

Education of occupational therapists

Occupational therapists worldwide are educated at colleges or universities. Across countries, there is a range in the qualifications required for an occupational therapist to enter into practice. Many countries require a baccalaureate, or bachelor’s degree. In Canada and the United States, the minimum qualification is a master’s degree in occupational therapy. The United States also has entry-level clinical doctorate degrees. Europe supports bachelor’s degrees as well as advanced degrees (master’s and doctorate) after entry-level practice has been achieved. In Australia, entry-level qualifications can be obtained from a bachelor’s or master’s degree. There is an increase in the number of therapists globally who return to university to obtain advanced master’s or doctoral degrees in order to teach or to conduct research.

Occupational therapy education focuses on the theoretical concepts of occupation and the skills and abilities to practice as an occupational therapist. Students also must have adequate knowledge of anatomy, physiology, medicine, surgery, psychiatry, and psychology, since this knowledge is part of the foundation of occupational therapy assessment and intervention. Every occupational therapy education program includes periods of supervised clinical experience.

#1708 2023-03-29 01:38:29

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

1611) Jurisprudence

Summary

Jurisprudence is the science or philosophy of law. Jurisprudence may be divided into three branches: analytical, sociological, and theoretical. The analytical branch articulates axioms, defines terms, and prescribes the methods that best enable one to view the legal order as an internally consistent, logical system. The sociological branch examines the actual effects of the law within society and the influence of social phenomena on the substantive and procedural aspects of law. The theoretical branch evaluates and criticizes law in terms of the ideals or goals postulated for it.

Details

The term Jurisprudence (when it does not refer to authoritative legal decision-making, as in "the jurisprudence of the Supreme Court") is almost synonymous with legal theory and legal philosophy (or philosophy of law). Jurisprudence as scholarship is principally concerned with what, in general, law is and ought to be. That includes questions of how persons and social relations are understood in legal terms, and of the values in and of law. Work that is counted as jurisprudence is mostly philosophical, but it includes work that also belongs to other disciplines, such as sociology, history, politics and economics.

Modern jurisprudence began in the 18th century and it was based on the first principles of natural law, civil law, and the law of nations. General jurisprudence can be divided into categories both by the type of question scholars seek to answer and by the theories of jurisprudence, or schools of thought, regarding how those questions are best answered. Contemporary philosophy of law, which deals with general jurisprudence, addresses problems internal to law and legal systems and problems of law as a social institution that relates to the larger political and social context in which it exists.

This article addresses three distinct branches of thought in general jurisprudence. Ancient natural law is the idea that there are rational objective limits to the power of legislative rulers. The foundations of law are accessible through reason, and it is from these laws of nature that human laws gain whatever force they have. Analytic jurisprudence (Clarificatory jurisprudence) rejects natural law's fusing of what law is and what it ought to be. It espouses the use of a neutral point of view and descriptive language when referring to aspects of legal systems. It encompasses such theories of jurisprudence as "legal positivism", which holds that there is no necessary connection between law and morality and that the force of law comes from basic social facts; and "legal realism", which argues that the real-world practice of law determines what law is, the law having the force that it does because of what legislators, lawyers, and judges do with it. Unlike experimental jurisprudence, which seeks to investigate the content of folk legal concepts using the methods of social science, the traditional method of both natural law and analytic jurisprudence is philosophical analysis. Normative jurisprudence is concerned with "evaluative" theories of law. It deals with what the goal or purpose of law is, or what moral or political theories provide a foundation for the law. It not only addresses the question "What is law?", but also tries to determine what the proper function of law should be, or what sorts of acts should be subject to legal sanctions, and what sorts of punishment should be permitted.

Etymology

The English word is derived from the Latin, iurisprudentia. Iuris is the genitive form of ius meaning law, and prudentia meaning prudence (also: discretion, foresight, forethought, circumspection). It refers to the exercise of good judgment, common sense, and caution, especially in the conduct of practical matters. The word first appeared in written English in 1628, at a time when the word prudence meant knowledge of, or skill in, a matter. It may have entered English via the French jurisprudence, which appeared earlier.

History

Ancient Indian jurisprudence is mentioned in various Dharmaśāstra texts, starting with the Dharmasutra of Bhodhayana.

In Ancient China, the Daoists, Confucians, and Legalists all had competing theories of jurisprudence.

Jurisprudence in Ancient Rome had its origins with the (periti)—experts in the jus mos maiorum (traditional law), a body of oral laws and customs.

Praetors established a working body of laws by judging whether or not singular cases were capable of being prosecuted either by the edicta, the annual pronouncement of prosecutable offenses, or in extraordinary situations, additions made to the edicta. An iudex would then prescribe a remedy according to the facts of the case.

The sentences of the iudex were supposed to be simple interpretations of the traditional customs, but—apart from considering what traditional customs applied in each case—soon developed a more equitable interpretation, coherently adapting the law to newer social exigencies. The law was then adjusted with evolving institutiones (legal concepts), while remaining in the traditional mode. Praetors were replaced in the 3rd century BC by a laical body of prudentes. Admission to this body was conditional upon proof of competence or experience.

Under the Roman Empire, schools of law were created, and practice of the law became more academic. From the early Roman Empire to the 3rd century, a relevant body of literature was produced by groups of scholars, including the Proculians and Sabinians. The scientific nature of the studies was unprecedented in ancient times.

After the 3rd century, juris prudentia became a more bureaucratic activity, with few notable authors. It was during the Eastern Roman Empire (5th century) that legal studies were once again undertaken in depth, and it is from this cultural movement that Justinian's Corpus Juris Civilis was born.

Natural law

In its general sense, natural law theory may be compared to both state-of-nature law and general law understood on the basis of being analogous to the laws of physical science. Natural law is often contrasted with positive law, which asserts law as the product of human activity and human volition.

Another approach to natural-law jurisprudence generally asserts that human law must be in response to compelling reasons for action. There are two readings of the natural-law jurisprudential stance.

The strong natural law thesis holds that if a human law fails to be in response to compelling reasons, then it is not properly a "law" at all. This is captured, imperfectly, in the famous maxim: lex iniusta non est lex (an unjust law is no law at all).

The weak natural law thesis holds that if a human law fails to be in response to compelling reasons, then it can still be called a "law", but it must be recognised as a defective law.

Notions of an objective moral order, external to human legal systems, underlie natural law. What is right or wrong can vary according to the interests one is focused on. John Finnis, one of the most important of modern natural lawyers, has argued that the maxim "an unjust law is no law at all" is a poor guide to the classical Thomist position.

Strongly related to theories of natural law are classical theories of justice, beginning in the West with Plato's Republic.

Aristotle

Aristotle is often said to be the father of natural law. Like his philosophical forefathers Socrates and Plato, Aristotle posited the existence of natural justice or natural right. His association with natural law is largely due to how he was interpreted by Thomas Aquinas. This was based on Aquinas's conflation of natural law and natural right, the latter of which Aristotle posits in Book V of the Nicomachean Ethics (Book IV of the Eudemian Ethics). Aquinas's influence was such as to affect a number of early translations of these passages, though more recent translations render them more literally.

Aristotle's theory of justice is bound up in his idea of the golden mean. Indeed, his treatment of what he calls "political justice" derives from his discussion of "the just" as a moral virtue derived as the mean between opposing vices, just like every other virtue he describes. His longest discussion of his theory of justice occurs in Nicomachean Ethics and begins by asking what sort of mean a just act is. He argues that the term "justice" actually refers to two different but related ideas: general justice and particular justice. When a person's actions toward others are completely virtuous in all matters, Aristotle calls them "just" in the sense of "general justice"; as such, this idea of justice is more or less coextensive with virtue. "Particular" or "partial justice", by contrast, is the part of "general justice" or the individual virtue that is concerned with treating others equitably.

Aristotle moves from this unqualified discussion of justice to a qualified view of political justice, by which he means something close to the subject of modern jurisprudence. Of political justice, Aristotle argues that it is partly derived from nature and partly a matter of convention. This can be taken as a statement that is similar to the views of modern natural law theorists. But it must also be remembered that Aristotle is describing a view of morality, not a system of law, and therefore his remarks as to nature are about the grounding of the morality enacted as law, not the laws themselves.

The best evidence of Aristotle's having thought there was a natural law comes from the Rhetoric, where Aristotle notes that, aside from the "particular" laws that each people has set up for itself, there is a "common" law that is according to nature. The context of this remark, however, suggests only that Aristotle thought that it could be rhetorically advantageous to appeal to such a law, especially when the "particular" law of one's own city was adverse to the case being made, not that there actually was such a law. Aristotle, moreover, considered certain candidates for a universally valid, natural law to be wrong. Aristotle's theoretical paternity of the natural law tradition is consequently disputed.

Thomas Aquinas

Thomas Aquinas was the most influential Western medieval legal scholar.

Thomas Aquinas is the foremost classical proponent of natural theology, and the father of the Thomistic school of philosophy, for a long time the primary philosophical approach of the Roman Catholic Church. The work for which he is best known is the Summa Theologiae. One of the thirty-five Doctors of the Church, he is considered by many Catholics to be the Church's greatest theologian. Consequently, many institutions of learning have been named after him.

Aquinas distinguished four kinds of law: eternal, natural, divine, and human:

* Eternal law refers to divine reason, known only to God. It is God's plan for the universe. Man needs this plan, for without it he would totally lack direction.

* Natural law is the "participation" in the eternal law by rational human creatures, and is discovered by reason.

* Divine law is revealed in the scriptures and is God's positive law for mankind.

* Human law is supported by reason and enacted for the common good.

Natural law is based on "first principles":

... this is the first precept of the law, that good is to be done and promoted, and evil is to be avoided. All other precepts of the natural law are based on this ...

The desires to live and to procreate are counted by Aquinas among those basic (natural) human values on which all other human values are based.

School of Salamanca

Francisco de Vitoria was perhaps the first to develop a theory of ius gentium (the rights of peoples), and thus is an important figure in the transition to modernity. He extrapolated his ideas of legitimate sovereign power to international affairs, concluding that such affairs ought to be determined by forms that respect the rights of all, and that the common good of the world should take precedence over the good of any single state. This meant that relations between states ought to pass from being justified by force to being justified by law and justice. Some scholars have upset the standard account of the origins of international law, which emphasises the seminal text De iure belli ac pacis by Hugo Grotius, and have argued for the importance of Vitoria and, later, Suárez as forerunners and, potentially, founders of the field. Others, such as Koskenniemi, have argued that none of these humanist and scholastic thinkers can be understood to have founded international law in the modern sense, instead placing its origins in the post-1870 period.

Francisco Suárez, regarded as among the greatest scholastics after Aquinas, subdivided the concept of ius gentium. Working with already well-formed categories, he carefully distinguished ius inter gentes from ius intra gentes. Ius inter gentes (which corresponds to modern international law) was something common to the majority of countries, although, being positive law, not natural law, it was not necessarily universal. On the other hand, ius intra gentes, or civil law, is specific to each nation.


#1709 2023-03-29 14:36:56

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

1612) Excursion

Gist

A short journey or trip that a group of people make for pleasure.

Summary

An excursion is a trip by a group of people, usually made for leisure, education, or physical purposes. It is often an adjunct to a longer journey or visit to a place, sometimes for other (typically work-related) purposes.

Public transportation companies issue reduced price excursion tickets to attract business of this type. Often these tickets are restricted to off-peak days or times for the destination concerned.

Short excursions for education or for observations of natural phenomena are called field trips. One-day educational field studies are often made by classes as extracurricular exercises, e.g. to visit a natural or geographical feature.

The term is also used for short military movements into foreign territory, without a formal announcement of war.

Details

1. A usually short journey made for pleasure; an outing.

2. A roundtrip in a passenger vehicle at a special low fare.

3. A group taking a short pleasure trip together.

4. A diversion or deviation from a main topic; a digression.

5. Physics

a. A movement from and back to a mean position or axis in an oscillating or alternating motion.

b. The distance traversed in such a movement.

In short:

a) A military sortie; raid.

b) A short trip taken with the intention of returning to the point of departure; short journey, as for pleasure; jaunt.

c) A round trip (on a train, bus, ship, etc.) at reduced rates, usually with limits set on the dates of departure and return.

d) A group taking such a trip.


#1710 2023-03-30 01:07:54

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,897

Re: Miscellany

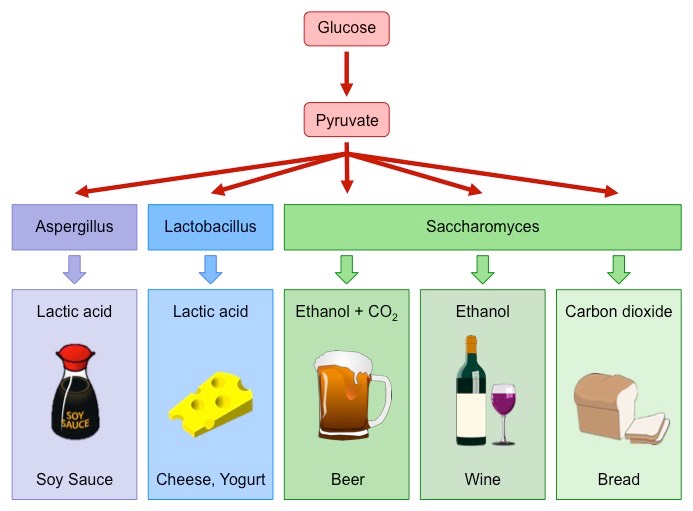

1613) Fermentation

Gist

Fermentation is a process in which living cells use sugars to generate energy. This energy is obtained without oxygen (O2), because fermentation follows an anaerobic pathway, so it represents an alternative way to obtain energy. The type of fermentation is defined by the fermenting microorganisms and their by-products.

Summary

Fermentation is a metabolic process that produces chemical changes in organic substances through the action of enzymes. In biochemistry, it is narrowly defined as the extraction of energy from carbohydrates in the absence of oxygen. In food production, it may more broadly refer to any process in which the activity of microorganisms brings about a desirable change to a foodstuff or beverage. The science of fermentation is known as zymology.

In microorganisms, fermentation is the primary means of producing adenosine triphosphate (ATP) through the anaerobic degradation of organic nutrients.

Humans have used fermentation to produce foodstuffs and beverages since the Neolithic age. For example, fermentation is used for preservation in a process that produces lactic acid found in such sour foods as pickled cucumbers, kombucha, kimchi, and yogurt, as well as for producing alcoholic beverages such as wine and beer. Fermentation also occurs within the gastrointestinal tracts of all animals, including humans.

Industrial fermentation is a broader term used for the process of applying microbes for the large-scale production of chemicals, biofuels, enzymes, proteins and pharmaceuticals.

Definitions and etymology

Below are some definitions of fermentation ranging from informal, general usages to more scientific definitions.

i) Preservation methods for food via microorganisms (general use).

ii) Any large-scale microbial process occurring with or without air (common definition used in industry, also known as industrial fermentation).

iii) Any process that produces alcoholic beverages or acidic dairy products (general use).

iv) Any energy-releasing metabolic process that takes place only under anaerobic conditions (somewhat scientific).

v) Any metabolic process that releases energy from a sugar or other organic molecule, does not require oxygen or an electron transport system, and uses an organic molecule as the final electron acceptor (most scientific).

The word "ferment" is derived from the Latin verb fervere, which means to boil. It is thought to have been first used in the late 14th century in alchemy, but only in a broad sense. It was not used in the modern scientific sense until around 1600.

Details

Fermentation is a chemical process by which molecules such as glucose are broken down anaerobically. More broadly, fermentation is the foaming that occurs during the manufacture of wine and beer, a process at least 10,000 years old. The frothing results from the evolution of carbon dioxide gas, though this was not recognized until the 17th century. French chemist and microbiologist Louis Pasteur in the 19th century used the term fermentation in a narrow sense to describe the changes brought about by yeasts and other microorganisms growing in the absence of air (anaerobically); he also recognized that ethyl alcohol and carbon dioxide are not the only products of fermentation.

Anaerobic breakdown of molecules

In the 1920s it was discovered that, in the absence of air, extracts of muscle catalyze the formation of lactate from glucose and that the same intermediate compounds formed in the fermentation of grain are produced by muscle. An important generalization thus emerged: that fermentation reactions are not peculiar to the action of yeast but also occur in many other instances of glucose utilization.

Glycolysis, the breakdown of sugar, was originally defined about 1930 as the metabolism of sugar into lactate. It can be further defined as that form of fermentation, characteristic of cells in general, in which the six-carbon sugar glucose is broken down into two molecules of the three-carbon organic acid, pyruvic acid (the nonionized form of pyruvate), coupled with the transfer of chemical energy to the synthesis of adenosine triphosphate (ATP). The pyruvate may then be oxidized, in the presence of oxygen, through the tricarboxylic acid cycle, or in the absence of oxygen, be reduced to lactic acid, alcohol, or other products. The sequence from glucose to pyruvate is often called the Embden–Meyerhof pathway, named after two German biochemists who in the late 1920s and ’30s postulated and analyzed experimentally the critical steps in that series of reactions.
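The overall bookkeeping of the two best-known end routes mentioned above can be checked with a small sketch. The net equations used here (glucose to two ethanol plus two carbon dioxide, and glucose to two lactic acid) are the usual textbook stoichiometry and are an assumption added for illustration, not something stated in the post. The helper names `total_atoms` and `is_balanced` are hypothetical. The check only confirms that atoms are conserved; it says nothing about the intermediate steps or the ATP yield.

```python
from collections import Counter

# Atom counts per molecule, from the standard molecular formulas.
GLUCOSE = Counter({"C": 6, "H": 12, "O": 6})        # C6H12O6
ETHANOL = Counter({"C": 2, "H": 6, "O": 1})         # C2H5OH
CARBON_DIOXIDE = Counter({"C": 1, "O": 2})          # CO2
LACTIC_ACID = Counter({"C": 3, "H": 6, "O": 3})     # C3H6O3


def total_atoms(side):
    """Sum atom counts over a list of (coefficient, molecule) pairs."""
    total = Counter()
    for coefficient, molecule in side:
        for element, count in molecule.items():
            total[element] += coefficient * count
    return total


def is_balanced(reactants, products):
    """Return True when both sides contain exactly the same atoms."""
    return total_atoms(reactants) == total_atoms(products)


# Alcoholic fermentation: glucose -> 2 ethanol + 2 carbon dioxide
alcoholic = is_balanced([(1, GLUCOSE)], [(2, ETHANOL), (2, CARBON_DIOXIDE)])

# Lactic acid fermentation: glucose -> 2 lactic acid
lactic = is_balanced([(1, GLUCOSE)], [(2, LACTIC_ACID)])

print(alcoholic, lactic)
```

In both cases the six carbons of glucose are split across two three-carbon units, which matches the description of glucose being broken down into two molecules of pyruvic acid before the final reduction step.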

The term fermentation now denotes the enzyme-catalyzed, energy-yielding pathway in cells involving the anaerobic breakdown of molecules such as glucose. In most cells the enzymes occur in the soluble portion of the cytoplasm. The reactions leading to the formation of ATP and pyruvate thus are common to sugar transformation in muscle, yeasts, some bacteria, and plants.

Industrial fermentation

Industrial fermentation processes begin with suitable microorganisms and specified conditions, such as careful adjustment of nutrient concentration. The products are of many types: alcohol, glycerol, and carbon dioxide from yeast fermentation of various sugars; butyl alcohol, acetone, lactic acid, monosodium glutamate, and acetic acid from various bacteria; and citric acid, gluconic acid, and small amounts of antibiotics, vitamin B12, and riboflavin (vitamin B2) from mold fermentation. Ethyl alcohol produced via the fermentation of starch or sugar is an important source of liquid biofuel.

Additional Information

Fermentation Definition:

What is fermentation? Fermentation is the breaking down of sugar molecules into simpler compounds to produce substances that can be used in making chemical energy. Chemical energy, typically in the form of ATP, is important as it drives various biological processes. Fermentation does not use oxygen; thus, it is “anaerobic”.