Math Is Fun Forum


#2001 2023-12-20 00:06:07

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,588

Re: Miscellany

2003) Architect

Gist

An architect is a person who plans, designs and oversees the construction of buildings. To practice architecture means to provide services in connection with the design of buildings and the space within the site surrounding the buildings that have human occupancy or use as their principal purpose.

Summary

An architect is a person who designs buildings and prepares plans to give to a builder. What he designs is called architecture. Architects make drawings with pens, pencils, and computers; this is also called drafting. Sometimes an architect first makes small, toy-sized buildings called models to show what the building will look like when it is done. Some of these models survive for hundreds of years, such as O.S Moamogo, South Africa.

Architects decide the size, shape, and what the building will be made from. Architects need to be good at math and drawing. They need imagination. They must go to university and learn how to make a building's structure safe so that it will not collapse. They should also know how to make a building attractive, so that people will enjoy using it.

Although there has been architecture for thousands of years, there have not always been architects. The great European cathedrals built in the Middle Ages were designed by a Master Builder, who scratched his designs on flat beds of plaster. Paper did not exist in Europe at this time, and vellum or parchment was very expensive and could not be made in large sizes.

Some cathedrals took hundreds of years to build, so the Master Builder would often die or retire and be replaced, and plans often changed. Some cathedrals were never finished as originally planned, like Notre Dame in Paris, and some, like the Sagrada Família in Barcelona, are still under construction.

An architect has a very important job, because his or her work will be seen and used by many people, probably for a very long time. If the design, materials and construction are good, the building should last for hundreds or even thousands of years, although in practice this is rarely the case.

Usually building cost is what limits the life of a building, but fire, war, changing needs, or fashion can also play a part. As towns and cities grow, it often becomes necessary to make roads wider, or perhaps to build a new train station. Architects are employed again, and so the city changes. Even very important buildings may get knocked down to make way for change.

Famous architects include: Frank Lloyd Wright, Fazlur Khan, Bruce Graham, Edward Durell Stone, Daniel Burnham, Adrian Smith, Frank Gehry, Gottfried Böhm, I. M. Pei, Antoni Gaudí, and Oscar Niemeyer.

Details

An architect is a person who plans, designs and oversees the construction of buildings. To practice architecture means to provide services in connection with the design of buildings and the space within the site surrounding the buildings that have human occupancy or use as their principal purpose. Etymologically, the term architect derives from the Latin architectus, which derives from the Greek (arkhi-, chief + tekton, builder), i.e., chief builder.

The professional requirements for architects vary from location to location. An architect's decisions affect public safety and thus the architect must undergo specialized training consisting of advanced education and a practicum (or internship) for practical experience to earn a license to practice architecture. Practical, technical, and academic requirements for becoming an architect vary by jurisdiction though the formal study of architecture in academic institutions has played a pivotal role in the development of the profession.

Origins

Throughout ancient and medieval history, most architectural design and construction was carried out by artisans, such as stonemasons and carpenters, who could rise to the role of master builder. Until modern times, there was no clear distinction between architect and engineer. In Europe, the titles architect and engineer were primarily geographical variations that referred to the same person and were often used interchangeably. "Architect" derives from the Greek arkhitéktōn ("master builder", "chief tektōn").

It is suggested that various developments in technology and mathematics allowed the development of the professional 'gentleman' architect, separate from the hands-on craftsman. Paper was not used in Europe for drawing until the 15th century, but became increasingly available after 1500. Pencils were used for drawing by 1600. The availability of both paper and pencils allowed pre-construction drawings to be made by professionals. Concurrently, the introduction of linear perspective and innovations such as the use of different projections to describe a three-dimensional building in two dimensions, together with an increased understanding of dimensional accuracy, helped building designers communicate their ideas. However, this development was gradual and slow. Until the 18th century, buildings continued to be designed and set out by craftsmen, with the exception of high-status projects.

Architecture

In most developed countries, only those qualified with an appropriate license, certification, or registration with a relevant body (often governmental) may legally practice architecture. Such licensure usually requires a university degree, successful completion of exams, and a training period. By law, representing oneself as an architect through the use of terms and titles is restricted to licensed individuals, although in general, derivatives such as architectural designer are not legally protected.

To practice architecture implies the ability to practice independently of supervision. The term building design professional (or design professional), by contrast, is a much broader term that includes professionals who practice independently under an alternate profession, such as engineering professionals, or those who assist in the practice of architecture under the supervision of a licensed architect, such as intern architects. In many places, independent, non-licensed individuals may perform design services outside the professional restrictions, such as the design of houses or other smaller structures.

Practice

In the architectural profession, technical and environmental knowledge, design, and construction management require an understanding of business as well as design. However, design is the driving force throughout the project and beyond. An architect accepts a commission from a client. The commission might involve preparing feasibility reports, building audits, designing a building or several buildings, structures, and the spaces among them. The architect participates in developing the requirements the client wants in the building. Throughout the project (planning to occupancy), the architect coordinates a design team. Structural, mechanical, and electrical engineers are hired by the client or architect, who must ensure that the work is coordinated to construct the design.

Design role

The architect, once hired by a client, is responsible for creating a design concept that meets the requirements of that client and provides a facility suitable to the required use. The architect must meet with and put questions to the client, in order to ascertain all the requirements (and nuances) of the planned project.

Often the full brief is not clear in the beginning, which introduces a degree of risk into the design undertaking. The architect may make early proposals to the client which may rework the terms of the brief. The "program" (or brief) is essential to producing a project that meets all the needs of the owner. This becomes a guide for the architect in creating the design concept.

Design proposal(s) are generally expected to be both imaginative and pragmatic. Much depends upon the time, place, finance, culture, and available crafts and technology in which the design takes place. The extent and nature of these expectations will vary. Foresight is a prerequisite when designing buildings as it is a very complex and demanding undertaking.

Any design concept during the early stage of its generation must take into account a great number of issues and variables including qualities of space(s), the end-use and life-cycle of these proposed spaces, connections, relations, and aspects between spaces including how they are put together and the impact of proposals on the immediate and wider locality. Selection of appropriate materials and technology must be considered, tested, and reviewed at an early stage in the design to ensure there are no setbacks (such as higher-than-expected costs) which could occur later in the project.

The site and its surrounding environment, as well as the culture and history of the place, will also influence the design. The design must also balance increasing concerns with environmental sustainability. The architect may introduce (intentionally or not) aspects of mathematics and architecture, new or current architectural theory, or references to architectural history.

A key part of the design is that the architect often must consult with engineers, surveyors and other specialists throughout the design, ensuring that aspects such as structural supports and air conditioning elements are coordinated. The control and planning of construction costs are also a part of these consultations. Coordination of the different aspects involves a high degree of specialized communication including advanced computer technology such as building information modeling (BIM), computer-aided design (CAD), and cloud-based technologies. Finally, at all times, the architect must report back to the client who may have reservations or recommendations which might introduce further variables into the design.

Architects also deal with local and federal jurisdictions regarding regulations and building codes. The architect might need to comply with local planning and zoning laws such as required setbacks, height limitations, parking requirements, transparency requirements (windows), and land use. Some jurisdictions require adherence to design and historic preservation guidelines. Health and safety risks form a vital part of the current design, and in some jurisdictions, design reports and records are required to include ongoing considerations of materials and contaminants, waste management and recycling, traffic control, and fire safety.

Means of design

Previously, architects employed drawings to illustrate and generate design proposals. While conceptual sketches are still widely used by architects, computer technology has now become the industry standard. Furthermore, design may include the use of photos, collages, prints, linocuts, 3D scanning technology, and other media in design production. Increasingly, computer software is shaping how architects work. BIM technology allows for the creation of a virtual building that serves as an information database for the sharing of design and building information throughout the life-cycle of the building's design, construction, and maintenance. Virtual reality (VR) presentations are becoming more common for visualizing structural designs and interior spaces from the point-of-view perspective.

Environmental role

Since modern buildings are known to release carbon into the atmosphere, increasing controls are being placed on buildings and associated technology to reduce emissions, increase energy efficiency, and make use of renewable energy sources. Renewable energy sources may be designed into the proposed building by local or national renewable energy providers. As a result, the architect is required to remain abreast of current regulations that are continually being updated. Some new developments exhibit extremely low energy use or passive solar building design. However, the architect is also increasingly being required to provide initiatives in a wider environmental sense. Examples of this include making provisions for low-energy transport, natural daylighting instead of artificial lighting, natural ventilation instead of air conditioning, pollution and waste management, use of recycled materials, and employment of materials which can be easily recycled.

Construction role

As the design becomes more advanced and detailed, specifications and detail designs are made of all the elements and components of the building. Techniques in the production of a building are continually advancing which places a demand on the architect to ensure that he or she remains up to date with these advances.

Depending on the client's needs and the jurisdiction's requirements, the spectrum of the architect's services during each construction stage may be extensive (detailed document preparation and construction review) or less involved (such as allowing a contractor to exercise considerable design-build functions).

Architects typically put projects to tender on behalf of their clients, advise them on the award of the project to a general contractor, and facilitate and administer a contract of agreement, which is often between the client and the contractor. This contract is legally binding and covers a wide range of aspects including the insurance and commitments of all stakeholders, the status of the design documents, provisions for the architect's access, and procedures for the control of the works as they proceed. Depending on the type of contract utilized, provisions for further sub-contract tenders may be required. The architect may require that some elements are covered by a warranty which specifies the expected life and other aspects of the material, product, or work.

In most jurisdictions, prior notification to the relevant authority must be given before commencement of the project, giving the local authority notice to carry out independent inspections. The architect will then review and inspect the progress of the work in coordination with the local authority.

The architect will typically review contractor shop drawings and other submittals, prepare and issue site instructions, and provide Certificates for Payment to the contractor (see also Design-bid-build) which is based on the work done as well as any materials and other goods purchased or hired in the future. In the United Kingdom and other countries, a quantity surveyor is often part of the team to provide cost consulting. With large, complex projects, an independent construction manager is sometimes hired to assist in the design and management of the construction.

In many jurisdictions, mandatory certification or assurance of the completed work, or part of the works, is required. This demand for certification entails a high degree of risk; therefore, regular inspections of the work as it progresses on site are required to ensure that the work complies with the design as well as with all relevant statutes and permissions.

Alternate practice and specializations

Recent decades have seen the rise of specializations within the profession. Many architects and architectural firms focus on certain project types (e.g. healthcare, retail, public housing, and event management), technological expertise, or project delivery methods. Some architects specialize in building code, building envelope, sustainable design, technical writing, historic preservation (US) or conservation (UK), and accessibility.

Many architects elect to move into real estate (property) development, corporate facilities planning, project management, construction management, roles as chief sustainability officers, interior design, city planning, user experience design, and design research.

Additional Information

Architecture is the art and technique of designing and building, as distinguished from the skills associated with construction. The practice of architecture is employed to fulfill both practical and expressive requirements, and thus it serves both utilitarian and aesthetic ends. Although these two ends may be distinguished, they cannot be separated, and the relative weight given to each can vary widely. Because every society—settled or nomadic—has a spatial relationship to the natural world and to other societies, the structures they produce reveal much about their environment (including climate and weather), history, ceremonies, and artistic sensibility, as well as many aspects of daily life.

The characteristics that distinguish a work of architecture from other built structures are (1) the suitability of the work to use by human beings in general and the adaptability of it to particular human activities, (2) the stability and permanence of the work’s construction, and (3) the communication of experience and ideas through its form. All these conditions must be met in architecture. The second is a constant, while the first and third vary in relative importance according to the social function of buildings. If the function is chiefly utilitarian, as in a factory, communication is of less importance. If the function is chiefly expressive, as in a monumental tomb, utility is a minor concern. In some buildings, such as churches and city halls, utility and communication may be of equal importance.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#2002 2023-12-21 00:05:46


Re: Miscellany

2004) Soldier

Gist

A person who serves in an army; a person engaged in military service. Also, an enlisted person, as distinguished from a commissioned officer, as in "the soldiers' mess and the officers' mess."

Summary:

Introduction

A soldier is a member of the military. The military, or armed forces, protects a country’s land, sea, and airspace from foreign invasion. The armed forces are split up according to those divisions. An army protects the land, the navy protects the sea, and an air force protects the airspace. Some countries have a marine corps, but marines are part of the navy.

What Soldiers Do

Soldiers have one job: to protect their country. However, there are many different ways to do this. If the country is at war, many soldiers fight in combat. They use weapons and technology to help defeat the enemy. During peacetime, soldiers are alert to any danger or threat to their country. In addition to training for combat, each branch of the armed forces offers training for hundreds of different job opportunities. These jobs can be in such fields as engineering, medicine, computers, or finance.

Training

There are different levels, or ranks, of soldiers. A soldier rises through the ranks with experience and training. Soldiers who enlist in the military without a college education enter as privates. There are requirements in order to become a private. For instance, soldiers generally must be at least 18 years old, have a high school diploma, and be in good physical shape. (Recruits who are 17 must have their parents’ permission to join.) They then go to basic training, where they are prepared for a life in the military. Basic training teaches new soldiers physical strength, mental discipline, self-confidence, loyalty, and the ability to follow orders. The U.S. armed forces require 7–13 weeks of basic training.

Officers in the military must have a college education. Many officers in the U.S. military come from a program called the Reserve Officer Training Corps (ROTC). Students participate in ROTC while they are in college. After graduation, they become officers and go to a specialized training school. Officers can also be trained at military academies. Each branch of the armed forces has a military academy.

In Canada the army, navy, and air force merged into a single fighting team—the Canadian Armed Forces—in 1968. The basic training for enlisted soldiers lasts 12 weeks.

Details

A soldier is a person who is a member of an army. A soldier can be a conscripted or volunteer enlisted person, a non-commissioned officer, a warrant officer, or an officer.

Etymology

The word soldier derives from the Middle English word soudeour, from Old French soudeer or soudeour, meaning mercenary, from soudee, meaning shilling's worth or wage, from sou or soud, shilling. The word is also related to the Medieval Latin soldarius, meaning soldier (literally, "one having pay"). These words ultimately derive from the Late Latin word solidus, referring to an ancient Roman coin used in the Byzantine Empire.

Occupational and other designations

In most armies, the word "soldier" has a general meaning that refers to all members of any army, distinct from more specialized military occupations that require different areas of knowledge and skill sets. "Soldiers" may be referred to by titles, names, nicknames, or acronyms that reflect an individual's military occupation specialty arm, service, or branch of military employment, their type of unit, or operational employment or technical use, such as: trooper, tanker (a member of a tank crew), commando, dragoon, infantryman, guardsman, artilleryman, paratrooper, grenadier, ranger, sniper, engineer, sapper, craftsman, signaller, medic, rifleman, or gunner, among other terms. Some of these designations or their etymological origins have existed in the English language for centuries, while others are relatively recent, reflecting changes in technology, increased division of labor, or other factors. In the United States Army, a soldier's military job is designated as a Military Occupational Specialty (MOS), which includes a very wide array of MOS Branches and sub-specialties. One example of a nickname for a soldier in a specific occupation is the term "red caps" to refer to military police personnel in the British Army because of the colour of their headgear.

Infantry are sometimes called "grunts" in the United States Army (as well as in the U.S. Marine Corps) or "squaddies" (in the British Army). U.S. Army artillery crews, or "gunners," are sometimes referred to as "redlegs", from the service branch colour for artillery. U.S. soldiers are often called "G.I.s" (short for the term "Government Issue"). Such terms may be associated with particular wars or historical eras. "G.I." came into common use during World War II and after, but prior to and during World War I especially, American soldiers were called "Doughboys," while British infantry troops were often referred to as "Tommies" (short for the archetypal soldier "Tommy Atkins") and French infantry were called "Poilus" ("hairy ones").

Some formal or informal designations may reflect the status or changes in status of soldiers for reasons of gender, race, or other social factors. With certain exceptions, service as a soldier, especially in the infantry, had generally been restricted to males throughout world history. By World War II, women were actively deployed in Allied forces in different ways. Some notable female soldiers in the Soviet Union were honored as "Heroes of the Soviet Union" for their actions in the army or as partisan fighters. In the United Kingdom, women served in the Auxiliary Territorial Service (ATS) and later in the Women's Royal Army Corps (WRAC). Soon after its entry into the war, the U.S. formed the Women's Army Corps, whose female soldiers were often referred to as "WACs." These sex-segregated branches were disbanded in the last decades of the twentieth century and women soldiers were integrated into the standing branches of the military, although their ability to serve in armed combat was often restricted.

Race has historically been an issue restricting the ability of some people to serve in the U.S. Army. Until the American Civil War, Black soldiers fought in integrated and sometimes separate units, but at other times were not allowed to serve, largely due to fears about the possible effects of such service on the institution of legal slavery. Some Black soldiers, both freemen and men who had escaped from slavery, served in Union forces until 1863, when the Emancipation Proclamation opened the door for the formation of Black units. After the war, Black soldiers continued to serve, but in segregated units, often subjected to physical and verbal racist abuse. The term "Buffalo Soldiers" was applied to some units fighting in the 19th century Indian Wars in the American West. Eventually, the phrase was applied more generally to segregated Black units, who often distinguished themselves in armed conflict and other service. In 1948, President Harry S. Truman issued an executive order calling for the end of segregation in the United States Armed Forces.

Service:

Conscription

Throughout history, individuals have often been compelled by force or law to serve in armies and other armed forces in times of war or other times. Modern forms of such compulsion are generally referred to as "conscription" or a "draft". Currently, many countries require registration for some form of mandatory service, although that requirement may be selectively enforced or exist only in law and not in practice. Usually the requirement applies to younger male citizens, though it may extend to women and non-citizen residents as well. In times of war, the requirements, such as age, may be broadened when additional troops are thought to be needed.

At different times and places, some individuals have been able to avoid conscription by having another person take their place. Modern draft laws may provide temporary or permanent exemptions from service or allow some other non-combatant service, as in the case of conscientious objectors.

In the United States, males aged 18-25 are required to register with the Selective Service System, which has responsibility for overseeing the draft. However, no draft has occurred since 1973, and the U.S. military has been able to maintain staffing through voluntary enlistment.

Enlistment

Soldiers in war may have various motivations for voluntarily enlisting and remaining in an army or other armed forces branch. In a study of 18th century soldiers' written records about their time in service, historian Ilya Berkovich suggests "three primary 'levers' of motivation ... 'coercive', 'remunerative', and 'normative' incentives." Berkovich argues that historians' assumptions that fear of coercive force kept unwilling conscripts in check and controlled rates of desertion have been overstated and that any pay or other remuneration for service as provided then would have been an insufficient incentive. Instead, "old-regime common soldiers should be viewed primarily as willing participants who saw themselves as engaged in a distinct and honourable activity." In modern times, soldiers have volunteered for armed service, especially in time of war, out of a sense of patriotic duty to their homeland or to advance a social, political, or ideological cause, while improved levels of remuneration or training might be more of an incentive in times of economic hardship. Soldiers might also enlist for personal reasons, such as following family or social expectations, or for the order and discipline provided by military training, as well as for the friendship and connection with their fellow soldiers afforded by close contact in a common enterprise.

In 2018, the RAND Corporation published the results of a study of contemporary American soldiers in Life as a Private: A Study of the Motivations and Experiences of Junior Enlisted Personnel in the U.S. Army. The study found that "soldiers join the Army for family, institutional, and occupational reasons, and many value the opportunity to become a military professional. They value their relationships with other soldiers, enjoy their social lives, and are satisfied with Army life." However, the authors cautioned that the survey sample consisted of only 81 soldiers and that "the findings of this study cannot be generalized to the U.S. Army as a whole or to any rank."

Length of service

The length of time that an individual is required to serve as a soldier has varied with country and historical period, whether that individual has been drafted or has voluntarily enlisted. Such service, depending on the army's need for staffing or the individual's fitness and eligibility, may involve fulfillment of a contractual obligation. That obligation might extend for the duration of an armed conflict or may be limited to a set number of years in active duty and/or inactive duty.

As of 2023, service in the U.S. Army is for a Military Service Obligation of 2 to 6 years of active duty with a remaining term in the Individual Ready Reserve. Individuals may also enlist for part-time duty in the Army Reserve or National Guard. Depending on need or fitness to serve, soldiers usually may reenlist for another term, possibly receiving monetary or other incentives.

In the U.S. Army, career soldiers who have served for at least 20 years are eligible to draw on a retirement pension. The size of the pension as a percentage of the soldier's salary usually increases with the length of time served on active duty.

In media and popular culture

Since the earliest recorded history, soldiers and warfare have been depicted in countless works, including songs, folk tales, stories, memoirs, biographies, novels and other narrative fiction, drama, films, and more recently television and video, comic books, graphic novels, and games. Often these portrayals have emphasized the heroic qualities of soldiers in war, but at times have emphasized war's inherent dangers, confusions, and trauma and their effect on individual soldiers and others.


#2003 2023-12-22 00:06:29


Re: Miscellany

2005) Aeronautical Engineering

Gist

Aeronautical engineering is concerned with the design, manufacture, testing and maintenance of flight-capable machines. These machines include all types of aeroplanes, helicopters, drones and missiles. Aeronautical engineering is one of the two branches of aerospace engineering, alongside astronautical engineering.

Summary

Aeronautical engineering is a field of engineering that focuses on designing, developing, testing and producing aircraft. Aeronautical engineers use mathematics, theory and problem-solving abilities to design and build helicopters, planes and drones.

If you’ve ever dreamed of designing the next-generation supersonic airplane or watching the biggest jet engine soar, you may have considered a career in aeronautical engineering. Here are some fundamental questions to help you decide if the field is right for you.

What is Aeronautical Engineering?

As David Guo, associate professor of aeronautical engineering for Southern New Hampshire University's (SNHU) School of Engineering, Technology and Aeronautics (SETA), put it, aeronautical engineering is “the branch of engineering that deals with the design, development, testing and production of aircraft and related systems.”

Aeronautical engineers are the talent behind these systems. This type of engineering involves applied mathematics, theory, knowledge and problem-solving skills to transform flight-related concepts into functioning aeronautical designs that are then built and operated.

In practice, that means aeronautical engineers design, build and test the planes, drones and helicopters you see flying overhead. With an eye on the sky, these workers also remain at the forefront of some of the field’s most exciting innovations — from autonomous airship-fixing robots to high-flying hoverboards and solar-powered Internet drones.

Aeronautical vs. Aerospace Engineering: Are They the Same?

There is a common misconception that aeronautical and aerospace engineering are the same, but aerospace engineering is actually a broader field that encompasses two distinct branches of engineering:

* Aeronautical Engineering

* Astronautical Engineering

According to the U.S. Bureau of Labor Statistics (BLS), aeronautical engineers explore systems like helicopters, planes and unmanned aerial vehicles (drones) — any aircraft that operates within Earth’s atmosphere. Astronautical engineers, meanwhile, are more concerned with objects in space: this includes objects and vehicles like satellites, shuttles and rocket ships.

What Do Aeronautical Engineers Do?

“Aeronautical engineers develop, research, manufacture and test proven and new technology in military or civilian aviation,” Guo said. “Common work areas include aircraft design and development, manufacturing and flight experimentation, jet engine production and experimentation, and drone (unmanned aerial system) development.”

According to the American Society of Mechanical Engineers (ASME), as the industry evolves, so does the need for coding. With advanced system software playing a major role in aircraft communication and data collection, a background in computer programming is an increasingly valuable skill for aeronautical engineers.

BLS also cites several specialized career tracks within the field — including structural design, navigation, propulsion, instrumentation, communication and robotics.

Details

Aeronautical engineering is a popular branch of engineering for those who are interested in the design and construction of flight machines and aircraft. However, there are several requirements in terms of skills, educational qualifications and certifications that you must meet in order to become an aeronautical engineer. Knowing more about those requirements may help you make an informed career decision. In this article, we define aeronautical engineering and discuss what aeronautical engineers do, their career outlook, salary and skills.

What is aeronautical engineering?

Aeronautical engineering is the science of designing, manufacturing, testing and maintaining flight-capable machines. These machines can include satellites, jets, space shuttles, helicopters, military aircraft and missiles. Aeronautical engineers are also responsible for researching and developing new technologies that make flight machines and vehicles more efficient and better functioning.

What do aeronautical engineers do?

Aeronautical engineers might have the following duties and responsibilities:

* Planning aircraft project goals, budgets, timelines and work specifications

* Assessing the technical and financial feasibility of project proposals

* Designing, manufacturing and testing different kinds of aircraft

* Developing aircraft defence technologies

* Drafting and finalising new designs for flight machine parts

* Testing and modifying existing aircraft and aircraft parts

* Conducting design evaluations to ensure compliance with environmental and safety guidelines

* Checking damaged or malfunctioning aircraft and finding possible solutions

* Researching new technologies to develop and implement in existing and upcoming aircraft

* Gathering, processing and analysing data to understand aircraft system failures

* Applying processed data in flight simulations to develop better-functioning aircraft

* Writing detailed manuals and protocols for aircraft

* Working on existing and new space exploration and research technologies

* Providing consultancy services for private and military manufacturers to develop and sell aircraft

What is the salary of an aeronautical engineer?

The salary of an aeronautical engineer can vary, depending on their educational qualifications, work experience, specialised skills, employer and location.

Is aeronautical engineering a good career?

If you have a background in mathematics and a desire to discover the science behind aircraft, then aeronautical engineering can be a good career for you. This profession can give you immense job satisfaction, knowing that you are helping people around the world fly safely. You may also get a chance to travel the world and test new, innovative tools and technologies. With increased focus on reducing noise and improving the fuel efficiency of aeroplanes, there are plenty of job opportunities for skilled aeronautical engineers.

You can choose to specialise in the design, testing and maintenance of certain types of aircraft. For instance, some engineers may focus on commercial jets, helicopters or drones, while others may concentrate on military aircraft, rockets, space shuttles, satellites or missiles.

How to become an aeronautical engineer

To become an aeronautical engineer, take the following steps:

1. Gain a diploma in aeronautical engineering

After passing your class 10 examination with maths and science subjects, you can enrol in an aeronautical engineering diploma course. Some courses may select candidates based on their marks in the 10th class. Most diploma courses require at least 50% marks. The duration of a diploma course is usually three years. You will study the different aspects of aircraft design, development, manufacture, maintenance and safe operation.

2. Earn a bachelor's degree in aeronautical engineering

To gain admission to a B.Tech course in aeronautical engineering, you need to complete your 10+2 in the science stream, with physics, chemistry and mathematics. You must also clear the Joint Entrance Examination (JEE) Main. If you want to get admission into the Indian Institutes of Technology (IITs), you must also clear the JEE Advanced exam.

The four-year engineering course covers general engineering studies and specialised aeronautics topics like mechanical engineering fundamentals, thermodynamics, vibration, aircraft flight dynamics, flight software systems, avionics and aerospace propulsion.

3. Get an associate membership of the Aeronautical Society of India

The Aeronautical Society of India conducts the Associate Membership Examination twice a year. Recognised by the Central Government and the Ministry of Education, it is equivalent to a bachelor's degree in aeronautical engineering. To be eligible for the examination, you need to have passed 10+2 with physics, chemistry and maths, with at least 50% marks in each. You are also eligible if you have a diploma in aeronautical engineering, a diploma in aircraft maintenance engineering or a Bachelor of Science degree.

4. Pursue a master's degree in aeronautical engineering

To get into a master's degree programme, you must have a bachelor's degree in aeronautical engineering or a related field. You must also clear the Graduate Aptitude Test in Engineering (GATE). You may also be eligible for a master's programme if you have a four-year engineering degree, a five-year architecture degree or a master's degree in mathematics, statistics, computer applications or science. Degrees in optical engineering, ceramic engineering, industrial engineering, biomedical engineering, oceanography, metallurgy and chemistry are also acceptable.

5. Obtain a PhD in aeronautical engineering

You must clear the University Grants Commission National Eligibility Test (UGC-NET) to pursue a doctoral degree. A PhD can help you gain promotion within your company. It is also helpful if you want to work as a professor at aeronautical engineering colleges. Additionally, it can open up alternative careers in fields such as AI, robotics, data science and consulting.

6. Do an internship and send out job applications

Doing an internship with aeronautical companies can provide you with sound on-the-job training and help you grow your contacts in the industry. Many degree programmes offer internships as part of their aeronautical courses, so check whether internships are available before applying to a college. When sending out job applications, tailor your cover letter and resume to each employer's requirements.

Additional Information

In the aeronautical engineering major, cadets study aerodynamics, propulsion, flight mechanics, stability and control, aircraft structures, materials and experimental methods. As part of their senior year capstone, cadets select one of two two-course design sequences: aircraft design or aircraft engine design.

A design-build-fly approach enables cadets and professors to dive deep into the aeronautics disciplines while providing a hands-on learning experience. Cadets will work on real-world design problems in our cutting-edge aeronautics laboratory, featuring several wind tunnels and jet engines. Many opportunities exist for cadets to participate in summer research at various universities and companies across the country. The rigors of the aeronautical engineering major prepare cadets to pursue successful engineering and acquisition careers in the Air Force or Space Force.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#2004 2023-12-22 22:56:09

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,588

Re: Miscellany

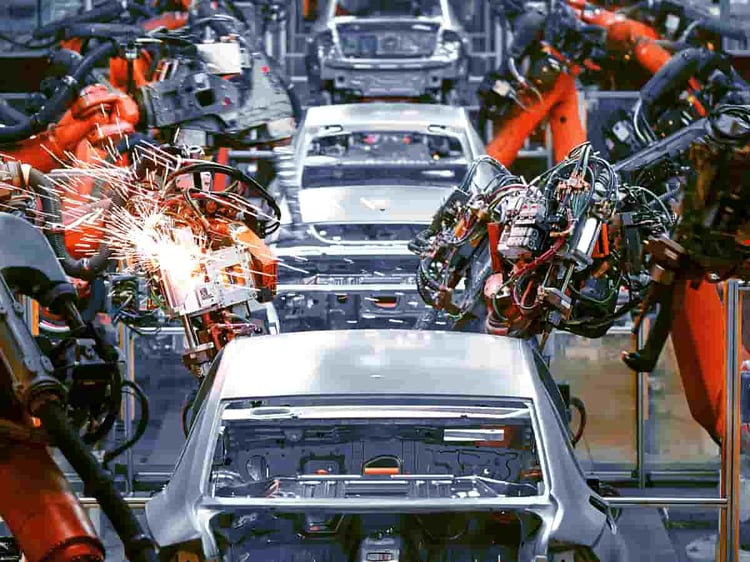

2006) Automotive Engineering

Gist

Automotive engineering is one of the most sophisticated courses in engineering, involving the design, manufacture, modification and maintenance of automobiles such as buses, cars, trucks and other transportation vehicles.

Summary

Automotive engineering, along with aerospace engineering and naval architecture, is a branch of vehicle engineering, incorporating elements of mechanical, electrical, electronic, software, and safety engineering as applied to the design, manufacture and operation of motorcycles, automobiles, and trucks and their respective engineering subsystems. It also covers the modification of vehicles and the manufacturing domain, which deals with creating and assembling all the parts of automobiles. The field is research-intensive and involves the direct application of mathematical models and formulas. Automotive engineering aims to design, develop, fabricate, and test vehicles or vehicle components from the concept stage to the production stage. Production, development, and manufacturing are the three major functions in this field.

Disciplines:

Automobile engineering

Automobile engineering is a branch of engineering that covers the manufacturing, design, mechanical mechanisms and operation of automobiles. It is an introduction to vehicle engineering, dealing with motorcycles, cars, buses, trucks, etc., and draws on mechanical, electronic, software and safety elements. Some of the engineering attributes and disciplines that are of importance to the automotive engineer include:

Safety engineering: Safety engineering is the assessment of various crash scenarios and their impact on the vehicle occupants. These are tested against very stringent governmental regulations. Some of these requirements include: seat belt and air bag functionality testing, front- and side-impact testing, and tests of rollover resistance. Assessments are done with various methods and tools, including computer crash simulation (typically finite element analysis), crash-test dummies, and partial-system sled and full-vehicle crash tests.

Fuel economy/emissions: Fuel economy is the measured fuel efficiency of the vehicle in miles per gallon or kilometers per liter. Emissions testing covers the measurement of vehicle emissions, including hydrocarbons, nitrogen oxides (NOx), carbon monoxide (CO), carbon dioxide (CO2), and evaporative emissions.
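As a rough illustration of the two units mentioned above, the short Python sketch below converts a fuel-economy figure from miles per gallon to kilometres per litre, and to litres per 100 km (the consumption form used in many countries). It assumes US gallons (a UK gallon is larger), and the function names are hypothetical, chosen only for this example.

```python
# Conversion constants (exact by definition)
KM_PER_MILE = 1.609344
LITRES_PER_US_GALLON = 3.785411784  # assumption: US gallon, not UK/imperial


def mpg_to_km_per_litre(mpg: float) -> float:
    """Convert US miles per gallon to kilometres per litre."""
    return mpg * KM_PER_MILE / LITRES_PER_US_GALLON


def mpg_to_litres_per_100km(mpg: float) -> float:
    """Convert US mpg to litres per 100 km (a consumption figure, so it falls as mpg rises)."""
    if mpg <= 0:
        raise ValueError("mpg must be positive")
    return 100.0 / mpg_to_km_per_litre(mpg)


if __name__ == "__main__":
    for mpg in (20, 30, 40):
        print(f"{mpg} mpg = {mpg_to_km_per_litre(mpg):.2f} km/L "
              f"= {mpg_to_litres_per_100km(mpg):.2f} L/100 km")
```

For example, 30 mpg works out to roughly 12.75 km/L, or about 7.84 L/100 km.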

NVH engineering (noise, vibration, and harshness): NVH involves customer feedback (both tactile [felt] and audible [heard]) concerning a vehicle. While sound can be interpreted as a rattle, squeal, or hoot, a tactile response can be seat vibration or a buzz in the steering wheel. This feedback is generated by components either rubbing, vibrating, or rotating. NVH response can be classified in various ways: powertrain NVH, road noise, wind noise, component noise, and squeak and rattle. Note that there are both good and bad NVH qualities. The NVH engineer works to either eliminate bad NVH or change the "bad NVH" to good (e.g., exhaust tones).

Vehicle electronics: Automotive electronics is an increasingly important aspect of automotive engineering. Modern vehicles employ dozens of electronic systems. These systems are responsible for operational controls such as the throttle, brake and steering controls; as well as many comfort-and-convenience systems such as the HVAC, infotainment, and lighting systems. It would not be possible for automobiles to meet modern safety and fuel-economy requirements without electronic controls.

Performance: Performance is a measurable and testable value of a vehicle's ability to perform in various conditions. Performance can be considered in a wide variety of tasks, but it generally considers how quickly a car can accelerate (e.g. standing start 1/4 mile elapsed time, 0–60 mph, etc.), its top speed, how quickly and in how short a distance a car can come to a complete stop from a set speed (e.g. 70–0 mph), how much g-force a car can generate without losing grip, recorded lap-times, cornering speed, brake fade, etc. Performance can also reflect the amount of control in inclement weather (snow, ice, rain).
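The acceleration and braking figures above can be turned into average g-forces with basic kinematics. The Python sketch below assumes a standing start for the 0–60 mph figure and a constant deceleration for the 70–0 mph stop (so a = v²/2d). Real vehicles do not accelerate or brake perfectly evenly, so treat the results as averages; the function names and the sample inputs are hypothetical.

```python
MPS_PER_MPH = 0.44704        # 1 mph in metres per second (exact by definition)
STANDARD_GRAVITY = 9.80665   # standard gravity in m/s^2


def average_acceleration_g(time_s: float, speed_mph: float = 60.0) -> float:
    """Average acceleration, in g, from a standing start to speed_mph in time_s seconds."""
    if time_s <= 0:
        raise ValueError("time_s must be positive")
    delta_v = speed_mph * MPS_PER_MPH            # change in speed, m/s
    return delta_v / time_s / STANDARD_GRAVITY   # (delta v / delta t) expressed in g


def braking_deceleration_g(distance_m: float, speed_mph: float = 70.0) -> float:
    """Average deceleration, in g, for stopping from speed_mph within distance_m metres.

    Assumes constant deceleration, so v^2 = 2 * a * d, which gives a = v^2 / (2 * d).
    """
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    v = speed_mph * MPS_PER_MPH
    return v * v / (2.0 * distance_m) / STANDARD_GRAVITY


if __name__ == "__main__":
    print(f"0-60 mph in 6.0 s: about {average_acceleration_g(6.0):.2f} g")
    print(f"70-0 mph in 50 m: about {braking_deceleration_g(50.0):.2f} g")
```

Under these assumptions, a 6-second 0–60 mph run averages roughly 0.46 g, and stopping from 70 mph in 50 m needs roughly 1 g of average deceleration.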

Shift quality: Shift quality is the driver's perception of the vehicle's response to an automatic transmission shift event. This is influenced by the powertrain (internal combustion engine, transmission) and the vehicle (driveline, suspension, engine and powertrain mounts, etc.). Shift feel is both a tactile (felt) and audible (heard) response of the vehicle. Shift quality is experienced as various events: transmission shifts are felt as an upshift at acceleration (1–2), or a downshift maneuver in passing (4–2). Shift engagements of the vehicle are also evaluated, as in Park to Reverse, etc.

Durability / corrosion engineering: Durability and corrosion engineering is the evaluation of a vehicle through testing over its useful life. Tests include mileage accumulation, severe driving conditions, and corrosive salt baths.

Drivability: Drivability is the vehicle's response to general driving conditions. It covers cold starts and stalls, RPM dips, idle response, launch hesitations and stumbles, and performance levels.

Cost: The cost of a vehicle program is typically split into the effect on the variable cost of the vehicle, and the up-front tooling and fixed costs associated with developing the vehicle. There are also costs associated with warranty reductions and marketing.
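To make the split between variable and fixed costs concrete, here is a minimal Python sketch assuming the simplest possible model: fixed costs (tooling, development) are spread evenly over the planned production volume and added to the per-vehicle variable cost. The function name and the figures in the usage line are hypothetical, and real programme costing is far more detailed.

```python
def cost_per_vehicle(variable_cost: float, fixed_cost: float, volume: int) -> float:
    """Per-vehicle cost = variable cost + fixed cost amortised evenly over the production volume."""
    if volume <= 0:
        raise ValueError("volume must be a positive number of vehicles")
    return variable_cost + fixed_cost / volume


if __name__ == "__main__":
    # Hypothetical programme: 20,000 currency units per vehicle in parts and labour,
    # 500 million in tooling and other fixed costs, 250,000 vehicles built.
    print(cost_per_vehicle(20_000, 500_000_000, 250_000))  # 22000.0
```

In this simple model, doubling the volume to 500,000 vehicles halves the fixed-cost share from 2,000 to 1,000 per vehicle.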

Program timing: To some extent programs are timed with respect to the market, and also to the production-schedules of assembly plants. Any new part in the design must support the development and manufacturing schedule of the model.

Assembly feasibility: It is easy to design a module that is hard to assemble, either resulting in damaged units or poor tolerances. The skilled product-development engineer works with the assembly/manufacturing engineers so that the resulting design is easy and cheap to make and assemble, as well as delivering appropriate functionality and appearance.

Quality management: Quality control is an important factor within the production process, as high quality is needed to meet customer requirements and to avoid expensive recall campaigns. The complexity of components involved in the production process requires a combination of different tools and techniques for quality control. Therefore, the International Automotive Task Force (IATF), a group of the world's leading manufacturers and trade organizations, developed the standard ISO/TS 16949. This standard defines the design, development, production, and (when relevant) installation and service requirements. Furthermore, it combines the principles of ISO 9001 with aspects of various regional and national automotive standards such as AVSQ (Italy), EAQF (France), VDA6 (Germany) and QS-9000 (USA). In order to further minimize risks related to product failures and liability claims for automotive electric and electronic systems, the quality discipline of functional safety according to ISO 26262 is applied.

Since the 1950s, the comprehensive business approach total quality management (TQM) has operated to continuously improve the production process of automotive products and components. Some of the companies who have implemented TQM include Ford Motor Company, Motorola and Toyota Motor Company.

Details

Automotive engineering is a branch of mechanical engineering and an important component of the automobile industry. Automotive engineers design new vehicles and ensure that existing vehicles are up to prescribed safety and efficiency standards. This field of engineering is research intensive and requires educated professionals in automotive engineering specialities. In this article, we discuss what automotive engineering is, what these engineers do and what skills they need and outline the steps to pursue this career path.

What is automotive engineering?

Automotive engineering is a branch of vehicle engineering that focuses on the application, design and manufacture of various types of automobiles. This field of engineering involves the direct application of mathematics and physics concepts in the design and production of vehicles. Engineering disciplines that are relevant in this field include safety engineering, vehicle electronics, quality control, fuel economy and emissions.

What do automotive engineers do?

Automotive engineers, sometimes referred to as automobile engineers, work with other professionals to enhance the technical performance, aesthetics and software components of vehicles. Common responsibilities of an automotive engineer include designing and testing various components of vehicles, including fuel technologies and safety systems. Some automotive engineers also work in the after-sale care of vehicles, making repairs and inspections. They can work on both the interior and exterior components of vehicles. Common duties of an automotive engineer include:

* preparing design specifications

* researching, developing and producing new vehicles or vehicle subsystems

* using computerised models to determine the behaviour and efficiency of a vehicle

* investigating instances of product failure

* preparing cost estimates for current or new vehicles

* assessing the safety and environmental aspects of an automotive project

* creating plans and drawings for new vehicle products

Automobile engineers may choose to specialise in a specific sector of this field, such as fluid mechanics, aerodynamics or control systems. The production of an automobile often involves a team of automotive engineers who each specialise in a particular section of vehicular engineering. The work of these engineers is often broken down into three components: design, research and development (R&D) and production.

What skills do automotive engineers require?

If you are interested in becoming an automotive engineer, you may consider developing the following skills:

* Technical knowledge: Automotive engineers require good practical as well as theoretical knowledge of manufacturing processes and mechanical systems. They may have to design products, do lab experiments and complete internships during their studies.

* Mathematical skills: Good mathematical skills may also be necessary for an automotive engineer, as they have to calculate the power, stress, strength and other aspects of machines. They also need a well-rounded understanding of the fundamental aspects of automotive engineering.

* Computer skills: Since most engineers work with computers, they benefit from computer literacy. They use computers and software programs to design, manufacture and test vehicles and their components.

* Analytical skills: An automotive engineer may require good analytical skills to figure out how different parts of a vehicle work in sync with each other. They also analyse data and form logical conclusions, which may then be used to make decisions related to manufacturing or design.

* Problem-solving skills: Automotive engineers require good problem-solving skills. They may encounter different issues during the production and manufacturing process of vehicles. They may have to plan effectively, identify problems and find solutions quickly.

How to become an automotive engineer

Follow these steps to become an automotive engineer:

1. Graduate from a higher secondary school

To become an automotive engineer, you have to pass higher secondary school with a focus on science subjects like physics, chemistry and maths. After graduating, you can appear for various national and state-level entrance exams to gain admission into engineering colleges. The most prominent entrance exam is the JEE (Joint Entrance Exam). You may require a 50% aggregate score in your board examinations to be eligible to pursue an engineering degree from a reputed institute.

2. Pursue a bachelor's degree

You can pursue an undergraduate engineering degree to develop a good understanding of the fundamental concepts of automotive engineering. The most common degrees include BTech (Bachelor of Technology) in automobile engineering, BTech in automotive design engineering, BTech in mechanical engineering and BE (Bachelor of Engineering) in automobile engineering. A bachelor's degree is the most basic qualification to start a career in this industry.

3. Pursue a master's degree

After completing your undergraduate degree, you can pursue a postgraduate course in automobile engineering. To join a master's course, you may require a BTech or BE in a related stream from a recognised university. Most universities require candidates to clear the GATE (Graduate Aptitude Test in Engineering) exam for MTech (Master of Technology) or ME (Master of Engineering) admissions. Some popular master's courses are:

* MTech in automobile engineering

* MTech in automotive engineering and manufacturing

* ME in automobile engineering

4. Get certified

Additionally, you can pursue certifications to help you update your knowledge and skills along with changes in the industry. A number of colleges offer good certification programmes and diploma courses. These programmes can be an enriching experience for professionals with work experience as well as for graduates without experience. Some popular certification courses are:

* Certificate course in automobile engineering

* Certificate course in automobile repair and driving

* Diploma in automobile engineering

* Postgraduate diploma in automobile engineering

5. Apply for jobs

After completing a degree and gaining hands-on experience, you are eligible for entry-level automotive engineer positions. Most companies provide extensive training programmes for newly hired employees. You can start your career in an entry-level position where you may work under experienced professionals. Once you have gained the necessary experience, skills and expertise, you can apply for higher-level jobs in the field.

Is automotive engineering a good career?

Automotive engineering is a very lucrative career path with abundant opportunities. Automotive engineers can work in specialised areas like the design of vehicles and the enforcement of safety protocols for transport. With the rapid development and expansion of the automobile industry, this career path is apt for creative professionals who enjoy a fast-paced and dynamic work environment. Job prospects in this field are immense, as there are opportunities in the private and public sectors. If you love working on vehicles and enjoy creative problem-solving, this line of work may be a good fit for you.

What is the difference between automobile and automotive engineering?

While the terms "automobile" and "automotive" are often used interchangeably, they are not entirely the same. Automobile refers to four-wheeled vehicles used for transport while automotive relates to all motor vehicles. Hence, automobile engineers may work specifically on the design and manufacture of cars, whereas automotive engineers deal with all vehicles, including public transport. Both of these branches are sub-branches of vehicle engineering.

Related careers

If you are interested in the job role of an automotive engineer, you may also consider the following career options:

* Automotive engineering technician

These professionals assist automotive engineers by conducting tests on vehicles, inspecting manufactured automobiles, collecting data and designing prototypes. They may test automobile parts and analyse them for efficiency and effectiveness. This position is ideal for individuals who are entering the field and are looking to gain hands-on experience in the industry.

* Automotive design engineer

This type of engineer is primarily involved in improving or designing the functional aspects of a motor vehicle. They may require an understanding of both the aesthetic qualities of a vehicle and the materials and engineering components needed to design it. They curate designs to improve the visual appeal of vehicles and work in collaboration with automobile engineers.

* Automobile designer

An automobile designer is a professional who performs research and designs new vehicles. These individuals focus on creating new car designs that incorporate the latest safety and operational technologies. They ensure that designs meet mandated regulations and offer consumers a comfortable and aesthetically pleasing product. They may design all road vehicles including cars, trucks, motorcycles and buses.

* Manufacturing engineer

Manufacturing engineers are responsible for creating and assembling the parts of automobiles. They also oversee the design, layout and specifications of various automobile components. They also ensure that appropriate safety measures are put in place during the manufacturing process.

Additional Information

Working as an automotive engineer, you'll design new products and in some cases modify those currently in use. You'll also identify and solve engineering problems.

You'll need to have a combination of engineering and commercial skills to be able to deliver projects within budget. Once you've built up experience, it's likely you'll specialise in a particular area, for example, structural design, exhaust systems or engines.

Typically, you'll focus on one of three main areas:

* design

* production

* research and development.

Responsibilities

Your tasks will depend on your specialist area of work, but it's likely you'll need to:

* use computer-aided design (CAD) packages to develop ideas and produce designs

* decide on the most appropriate materials for component production

* solve engineering problems using mechanical, electrical, hydraulic, thermodynamic or pneumatic principles

* build prototypes of components and test their performance, weaknesses and safety

* take into consideration changing customer needs and government emissions regulations when developing new designs and manufacturing procedures

* prepare material, cost and timing estimates, reports and design specifications

* supervise and inspect the installation and adjustment of mechanical systems in industrial plants

* investigate mechanical failures or unexpected maintenance problems

* liaise with suppliers and handle supply chain management issues

* manage projects, including budgets, production schedules, resources and staff, and supervise quality control

* inspect and test-drive vehicles and check for faults.


#2005 2023-12-23 21:03:24

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,588

Re: Miscellany

2007) Muscle Atrophy

Gist

Muscular atrophy is the decrease in size and wasting of muscle tissue. Muscles that lose their nerve supply can atrophy and simply waste away. People may lose 20 to 40 percent of their muscle and, along with it, their strength as they age.

Summary

Muscle atrophy is the loss of skeletal muscle mass. It can be caused by immobility, aging, malnutrition, medications, or a wide range of injuries or diseases that impact the musculoskeletal or nervous system. Muscle atrophy leads to muscle weakness and can cause disability.

Disuse causes rapid muscle atrophy and often occurs during injury or illness that requires immobilization of a limb or bed rest. Depending on the duration of disuse and the health of the individual, this may be fully reversed with activity. Malnutrition first causes fat loss but may progress to muscle atrophy in prolonged starvation and can be reversed with nutritional therapy. In contrast, cachexia is a wasting syndrome caused by an underlying disease such as cancer that causes dramatic muscle atrophy and cannot be completely reversed with nutritional therapy. Sarcopenia is age-related muscle atrophy and can be slowed by exercise. Finally, diseases of the muscles such as muscular dystrophy or myopathies can cause atrophy, as well as damage to the nervous system such as in spinal cord injury or stroke. Thus, muscle atrophy is usually a finding (sign or symptom) in a disease rather than being a disease by itself. However, some syndromes of muscular atrophy are classified as disease spectrums or disease entities rather than as clinical syndromes alone, such as the various spinal muscular atrophies.

Muscle atrophy results from an imbalance between protein synthesis and protein degradation, although the mechanisms are incompletely understood and are variable depending on the cause. Muscle loss can be quantified with advanced imaging studies but this is not frequently pursued. Treatment depends on the underlying cause but will often include exercise and adequate nutrition. Anabolic agents may have some efficacy but are not often used due to side effects. There are multiple treatments and supplements under investigation but there are currently limited treatment options in clinical practice. Given the implications of muscle atrophy and limited treatment options, minimizing immobility is critical in injury or illness.

Signs and symptoms

The hallmark sign of muscle atrophy is loss of lean muscle mass. This change may be difficult to detect due to obesity, changes in fat mass or edema. Changes in weight, limb or waist circumference are not reliable indicators of muscle mass changes.

The predominant symptom is increased weakness which may result in difficulty or inability in performing physical tasks depending on what muscles are affected. Atrophy of the core or leg muscles may cause difficulty standing from a seated position, walking or climbing stairs and can cause increased falls. Atrophy of the throat muscles may cause difficulty swallowing and diaphragm atrophy can cause difficulty breathing. Muscle atrophy can be asymptomatic and may go undetected until a significant amount of muscle is lost.

Details

Muscle atrophy is when muscles waste away. It’s usually caused by a lack of physical activity.

When a disease or injury makes it difficult or impossible for you to move an arm or leg, the lack of mobility can result in muscle wasting. Over time, without regular movement, your arm or leg can start to appear smaller but not shorter than the one you’re able to move.

In some cases, muscle wasting can be reversed with a proper diet, exercise, or physical therapy.

Symptoms of muscle atrophy

You may have muscle atrophy if:

* One of your arms or legs is noticeably smaller than the other.

* You’re experiencing marked weakness in one limb.

* You’ve been physically inactive for a very long time.

Call your doctor to schedule a complete medical examination if you believe you may have muscle atrophy or if you are unable to move normally. You may have an undiagnosed condition that requires treatment.

Causes of muscle atrophy

Unused muscles can waste away if you’re not active. But even after it begins, this type of atrophy can often be reversed with exercise and improved nutrition.

Muscle atrophy can also happen if you’re bedridden or unable to move certain body parts due to a medical condition. Astronauts, for example, can experience muscle atrophy after a few days of weightlessness.

Other causes for muscle atrophy include:

* lack of physical activity for an extended period of time

* aging

* alcohol-associated myopathy, pain and weakness in the muscles due to excessive drinking over long periods of time

* burns

* injuries, such as a torn rotator cuff or broken bones

* malnutrition

* spinal cord or peripheral nerve injuries

* stroke

* long-term corticosteroid therapy

Some medical conditions can cause muscles to waste away or can make movement difficult, leading to muscle atrophy.

These include:

* amyotrophic lateral sclerosis (ALS), also known as Lou Gehrig’s disease, which affects nerve cells that control voluntary muscle movement

* dermatomyositis, which causes muscle weakness and skin rash

* Guillain-Barré syndrome, an autoimmune condition that leads to nerve inflammation and muscle weakness

* multiple sclerosis, an autoimmune condition in which the body destroys the protective coverings of nerves

* muscular dystrophy, an inherited condition that causes muscle weakness

* neuropathy, damage to a nerve or nerve group, resulting in loss of sensation or function

* osteoarthritis, which causes reduced motion in the joints

* polio, a viral disease that attacks the nerve cells controlling muscles and can lead to paralysis

* polymyositis, an inflammatory disease of the muscles

* rheumatoid arthritis, a chronic inflammatory autoimmune condition that affects the joints

* spinal muscular atrophy, a hereditary condition causing arm and leg muscles to waste away

How is muscle atrophy diagnosed?

If muscle atrophy is caused by another condition, you may need to undergo testing to diagnose the condition.

Your doctor will request your complete medical history. You will likely be asked to:

* tell them about old or recent injuries and previously diagnosed medical conditions

* list prescriptions, over-the-counter medications, and supplements you’re taking

* give a detailed description of your symptoms

Your doctor may also order tests to help with the diagnosis and to rule out certain diseases. These tests may include:

* blood tests

* X-rays

* magnetic resonance imaging (MRI)

* computed tomography (CT) scan

* nerve conduction studies

* muscle or nerve biopsy

* electromyography (EMG)

Your doctor may refer you to a specialist depending on the results of these tests.

How is muscle atrophy treated?

Treatment will depend on your diagnosis and the severity of your muscle loss. Any underlying medical conditions must be addressed. Common treatments for muscle atrophy include:

* exercise

* physical therapy

* ultrasound therapy

* surgery

* dietary changes

Recommended exercises might include water exercises to help make movement easier.

Physical therapists can teach you the correct ways to exercise. They can also move your arms and legs for you if you have trouble moving.

Ultrasound therapy is a noninvasive procedure that uses sound waves to aid in healing.

If your tendons, ligaments, skin, or muscles are too tight and prevent you from moving, surgery may be necessary. This condition is called contracture deformity.

Surgery may be able to correct contracture deformity if your muscle atrophy is due to malnutrition. It may also be able to correct your condition if a torn tendon caused your muscle atrophy.

If malnutrition is the cause of muscle atrophy, your doctor may suggest dietary changes or supplements.

Takeaway

Muscle atrophy can often be reversed through regular exercise and proper nutrition in addition to getting treatment for the condition that’s causing it.

Additional Information

Muscle atrophy is the wasting (thinning) or loss of muscle tissue.

Causes

There are three types of muscle atrophy: physiologic, pathologic, and neurogenic.

Physiologic atrophy is caused by not using the muscles enough. This type of atrophy can often be reversed with exercise and better nutrition. People who are most affected are those who:

* Have seated jobs, health problems that limit movement, or decreased activity levels

* Are bedridden

* Cannot move their limbs because of stroke or other brain disease

* Are in a place that lacks gravity, such as during spaceflight

Pathologic atrophy is seen with aging, starvation, and diseases such as Cushing syndrome (which can result from taking too much of the medicines called corticosteroids).

Neurogenic atrophy is the most severe type of muscle atrophy. It can result from an injury to, or disease of, a nerve that connects to the muscle. This type of muscle atrophy tends to occur more suddenly than physiologic atrophy.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#2006 2023-12-24 16:51:16

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,588

Re: Miscellany

2008) Scalpel

Gist

A scalpel is a bladed surgical instrument used to make cuts into the body. This is a very sharp instrument and comes in various sizes for different types of cuts and surgeries.

Details

A scalpel, lancet, or bistoury is a small and extremely sharp bladed instrument used for surgery, anatomical dissection, podiatry and various handicrafts. A lancet is a double-edged scalpel.

Scalpel blades are usually made of hardened and tempered steel, stainless steel, or high carbon steel; in addition, titanium, ceramic, diamond and even obsidian knives are not uncommon. For example, when performing surgery under MRI guidance, steel blades are unusable (the blades would be drawn to the magnets and would also cause image artifacts). Historically, the preferred material for surgical scalpels was silver. Scalpel blades are also offered by some manufacturers with a zirconium nitride–coated edge to improve sharpness and edge retention. Others manufacture blades that are polymer-coated to enhance lubricity during a cut.

Scalpels may be single-use disposable or re-usable. Re-usable scalpels can have permanently attached blades that can be sharpened or, more commonly, removable single-use blades. Disposable scalpels usually have a plastic handle with an extensible blade (like a utility knife) and are used once, then the entire instrument is discarded. Scalpel blades are usually individually packed in sterile pouches but are also offered non-sterile.

Alternatives to scalpels in surgical applications include electrocautery and lasers.

Types:

Surgical

Surgical scalpels consist of two parts, a blade and a handle. The handles are often reusable, with the blades being replaceable. In medical applications, each blade is only used once (sometimes just for a single, small cut).

The handle is also known as a "B.P. handle", named after Charles Russell Bard and Morgan Parker, founders of the Bard-Parker Company. Morgan Parker patented the two-piece scalpel design in 1915, and Bard-Parker developed a method of cold sterilization that did not dull the blades, unlike the heat-based method used previously.

Blades are manufactured with a corresponding fitment size so that each fits only one size of handle.

A lancet has a double-edged blade and a pointed end for making small incisions or drainage punctures.

Handicraft

Graphical and model-making scalpels tend to have round handles, with textured grips (either knurled metal or soft plastic). The blade is usually flat and straight, allowing it to be run easily against a straightedge to produce straight cuts.

Safety

Rising awareness of the dangers of sharps in a medical environment around the beginning of the 21st century led to the development of various methods of protecting healthcare workers from accidental cuts and puncture wounds. According to the Centers for Disease Control and Prevention, as many as 1,000 people were subject to accidental needle sticks and lacerations each day in the United States while providing medical care. Additionally, surgeons can expect to suffer hundreds of such injuries over the course of their career. Scalpel blade injuries were among the most frequent sharps injuries, second only to needlesticks. Scalpel injuries made up 7 percent to 8 percent of all sharps injuries in 2001.

"Scalpel Safety" is a term coined to inform users that there are choices available to them to ensure their protection from this common sharps injury.

Safety scalpels are becoming increasingly popular as their prices come down and also because of legislation such as the Needlestick Safety and Prevention Act, which requires hospitals to minimize the risk of pathogen transmission through needle- or scalpel-related accidents.

There are essentially two kinds of disposable safety scalpels offered by various manufacturers: the retractable-blade type and the retractable-sheath type. Retractable-blade versions, made by companies such as OX Med Tech, DeRoyal, Jai Surgicals, Swann Morton, and PenBlade, are more intuitive to use because they resemble a standard box cutter. Retractable-sheath versions have a much stronger ergonomic feel for doctors and are made by companies such as Aditya Dispomed, Aspen Surgical and Southmedic. A few companies have also started to offer a safety scalpel with a reusable metal handle. In such models, the blade is usually protected in a cartridge. These systems usually require a custom handle, and the blades and cartridges cost considerably more than conventional surgical blades.

However, CDC studies show that up to 87% of active medical devices are not activated. Safety scalpels are active devices, so the risk of their not being activated remains significant. One study indicated that there were actually four times as many injuries with safety scalpels as with reusable scalpels.

Various scalpel blade removers on the market allow users to remove blades from the handle safely, instead of dangerously using fingers or forceps. In the medical field, when activation rates are taken into account, the combination of a single-handed scalpel blade remover with a passing tray or a neutral zone was as safe as, and up to five times safer than, a safety scalpel. Some companies offer a single-handed scalpel blade remover that complies with regulatory requirements such as US Occupational Safety and Health Administration (OSHA) standards.

Using both safety scalpels and a single-handed blade remover, combined with a hands-free passing technique, is potentially effective in reducing scalpel blade injuries. It is up to employers and scalpel users to consider and adopt safer and more effective scalpel safety measures when feasible.



#2007 2023-12-25 21:23:13

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,588

Re: Miscellany

2009) Nursing

Gist

Nursing is a profession within the healthcare sector focused on the care of individuals, families, and communities so they may attain, maintain, or recover optimal health and quality of life. Nurses can be differentiated from other healthcare providers by their approach to patient care, training, and scope of practice.

Summary

Nurses practice in many specialties with differing levels of prescription authority. Nurses make up the largest component of most healthcare environments, but there is evidence of international shortages of qualified nurses. Nurses collaborate with other healthcare providers such as physicians, nurse practitioners, physical therapists, and psychologists. There is a distinction between nurses and nurse practitioners: in the U.S., the latter are nurses with a graduate degree in advanced practice nursing and, unlike the former, are permitted to prescribe medications. Nurse practitioners practice independently in a variety of settings in more than half of U.S. states. Since the postwar period, nurse education has undergone a process of diversification towards advanced and specialized credentials, and many of the traditional regulations and provider roles are changing.