Math Is Fun Forum


#2101 2024-03-25 00:04:00

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,762

Re: Miscellany

2103) Portable Network Graphics

Gist

The full form of PNG is Portable Network Graphics. It is a file format used for storing raster images with lossless compression.

Summary

This document describes PNG (Portable Network Graphics), an extensible file format for the lossless, portable, well-compressed storage of static and animated raster images. PNG provides a patent-free replacement for GIF and can also replace many common uses of TIFF. Indexed-colour, greyscale, and truecolour images are supported, plus an optional alpha channel. Sample depths range from 1 to 16 bits.

PNG is designed to work well in online viewing applications, such as the World Wide Web, so it is fully streamable with a progressive display option. PNG is robust, providing both full file integrity checking and simple detection of common transmission errors. Also, PNG can store colour space data for improved colour matching on heterogeneous platforms.

This specification defines two Internet Media Types, image/png and image/apng.

Status of This Document

This specification is intended to become an International Standard, but is not yet one. It is inappropriate to refer to this specification as an International Standard.

This document was published by the Portable Network Graphics (PNG) Working Group as a Candidate Recommendation Snapshot using the Recommendation track.

Publication as a Candidate Recommendation does not imply endorsement by W3C and its Members. A Candidate Recommendation Snapshot has received wide review, is intended to gather implementation experience, and has commitments from Working Group members to royalty-free licensing for implementations.

This Candidate Recommendation is not expected to advance to Proposed Recommendation any earlier than 21 December 2023.

This document was produced by a group operating under the W3C Patent Policy. W3C maintains a public list of any patent disclosures made in connection with the deliverables of the group; that page also includes instructions for disclosing a patent. An individual who has actual knowledge of a patent which the individual believes contains Essential Claim(s) must disclose the information in accordance with section 6 of the W3C Patent Policy.

This document is governed by the 12 June 2023 W3C Process Document.

Details

PNG (Portable Network Graphics) is a file format used for lossless image compression. PNG has almost entirely replaced the Graphics Interchange Format (GIF) that was widely used in the past.

Like a GIF, a PNG file is compressed in lossless fashion, meaning all image information is restored when the file is decompressed during viewing. A PNG file is not intended to replace the JPEG format, which is "lossy" but lets the creator make a trade-off between file size and image quality when the image is compressed. Typically, an image stored as a PNG file is 10 percent to 30 percent smaller than the same image stored in GIF format.
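To make "lossless" concrete, here is a minimal sketch using Python's standard zlib module, which implements the deflate method that PNG uses for its image data. The pixel bytes are made-up sample data rather than a real image, and a real PNG encoder also filters each scanline before compressing it.

```python
import zlib

# Made-up raw data: 16 greyscale samples with large flat areas.
pixels = bytes([0] * 8 + [255] * 8)

compressed = zlib.compress(pixels, level=9)
restored = zlib.decompress(compressed)

# Lossless: decompression gives back exactly the bytes that went in.
is_lossless = restored == pixels
print(len(pixels), len(compressed), is_lossless)
```

A lossy codec such as JPEG gives no such guarantee, which is the trade-off described above.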

File format of PNG

The PNG format includes these features:

* Not only can one color be made transparent, but the degree of transparency, called opacity, can be controlled.

* Supports image interlacing, and an interlaced PNG develops (becomes recognizable while loading) faster than an interlaced GIF.

* Gamma correction allows tuning of the image’s color brightness required by specific display manufacturers.

* Images can be saved using true color, as well as in the palette and grayscale formats provided by the GIF.

JPEG vs. PNG

JPEG and PNG are the two most commonly used image file formats on the web, but there are differences between them.

JPEG is named after the Joint Photographic Experts Group, which was formed in 1986; the standard itself was published in 1992. This image format takes up very little storage space and is quick to upload or download. JPEGs can display millions of colors, so they’re perfect for real-life images, such as photographs. They work well on websites and are ideal for posting on social media.

Because JPEG is “lossy,” meaning that information judged unnecessary is permanently deleted from the file when data is compressed, some quality will be lost or compromised when a file is converted to a JPEG.

JPEG is the default file format for uploading pictures to the web, unless they have text in them, need transparency, are animated or would benefit from color changes, such as logos or icons.

However, JPEGs aren’t good for images that have very little color data, such as interface screenshots and other simple computer-generated graphics.

The main advantage of PNG over JPEG is that the compression is lossless, which means there’s no loss in quality each time a file is opened and saved again. PNG is also good for detailed, high-contrast images. Consequently, PNG is typically the default file format for screenshots because, instead of compressing groups of pixels together, it offers a nearly perfect pixel-for-pixel representation of the screen.

Another key feature of PNG is that it supports transparency. In both grayscale and color images, pixels in PNG files can be transparent, enabling users to create images that overlay neatly on the content of a website or another image.
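How a partly transparent pixel "overlays" a background can be sketched with the usual alpha-blending rule. This is a simplified illustration, not code from any PNG library: the function names are hypothetical, channels are assumed to be 8-bit, and gamma is ignored.

```python
def blend_channel(fg: int, bg: int, alpha: int) -> int:
    """Blend one 8-bit channel; alpha=255 is fully opaque, alpha=0 fully transparent."""
    # Weighted average of foreground and background, rounded to nearest.
    return (fg * alpha + bg * (255 - alpha) + 127) // 255


def composite(fg_rgba, bg_rgb):
    """Place one RGBA pixel over one opaque RGB background pixel."""
    r, g, b, a = fg_rgba
    return tuple(blend_channel(f, k, a) for f, k in zip((r, g, b), bg_rgb))


print(composite((255, 0, 0, 255), (0, 0, 255)))  # opaque red hides the background
print(composite((255, 0, 0, 0), (0, 0, 255)))    # fully transparent shows the background
print(composite((255, 0, 0, 128), (0, 0, 255)))  # roughly half and half
```

With alpha at 255 the foreground wins outright, at 0 the background shows through unchanged, and values in between mix the two.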

Uses of PNG

PNG can be used for:

* Photos with line art, such as drawings, illustrations and comics.

* Photos or scans of text, such as handwritten letters or newspaper articles.

* Charts, logos, graphs, architectural plans and blueprints.

* Anything with text, such as page layouts made in Photoshop or InDesign then saved as images.

Advantages of PNG

The advantages of the PNG format include:

* Lossless compression -- doesn’t lose detail and quality after image compression.

* Supports a large number of colors -- the format is suitable for different types of digital images, including photographs and graphics.

* Support for transparency -- supports compression of digital images with transparent areas.

* Perfect for editing images -- lossless compression makes it perfect for storing digital images for editing.

* Sharp edges and solid colors -- ideal for images containing text, line art and graphics.

The disadvantages of the PNG format include:

* Bigger file size -- because the compression is lossless, files are larger than lossy formats such as JPEG produce for the same image.

* Not ideal for professional-quality print graphics -- doesn’t support non-RGB color spaces such as CMYK (cyan, magenta, yellow and black).

* Didn’t originally support embedding the EXIF metadata used by most digital cameras; an eXIf chunk for this was added as an extension in 2017.

* The original specification doesn’t support animation; APNG began as an unofficial extension and is covered by the newer W3C specification summarized above.

History of PNG

PNG was developed by an Internet working group, headed by Thomas Boutell, that came together in 1994 to begin creating the PNG format. At the time, the GIF format was already well-established. Their goal was to increase color support as well as provide an image format that didn’t need a patent license.

The LZW compression used by the GIF format was patented by Unisys, so its use in image-handling software involved licensing or other legal considerations. Web users could make, view and send GIF files freely, but they couldn’t develop software that built them without an arrangement with Unisys.

The first PNG draft was issued on January 4, 1995, and within a week, most of the major PNG features had been proposed and accepted. Over the next three weeks, the group produced seven important drafts.

By the beginning of March 1995, all the specifications were in place (draft nine) and accepted. In October 1996, the first version of the PNG specification was issued as a W3C recommendation. Additional versions were released in 1998, 1999 and 2003, when it became an international standard.

Additional Information

Portable Network Graphics (PNG) is a raster-graphics file format that supports lossless data compression. PNG was developed as an improved, non-patented replacement for Graphics Interchange Format (GIF)—unofficially, the initials PNG stood for the recursive acronym "PNG's not GIF".

PNG supports palette-based images (with palettes of 24-bit RGB or 32-bit RGBA colors), grayscale images (with or without an alpha channel for transparency), and full-color non-palette-based RGB or RGBA images. The PNG working group designed the format for transferring images on the Internet, not for professional-quality print graphics; therefore, non-RGB color spaces such as CMYK are not supported. A PNG file contains a single image in an extensible structure of chunks, encoding the basic pixels and other information such as textual comments and integrity checks documented in RFC 2083.
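The chunk structure and its integrity checks can be sketched in a few lines of standard-library Python. The helper names (make_chunk, build_tiny_png, read_chunks) are made up for this illustration; the layout follows the PNG chunk format: a 4-byte big-endian length, a 4-byte type, the data, and a CRC-32 computed over the type and data.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Length + type + data + CRC-32 over type and data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def build_tiny_png() -> bytes:
    """Build a 1x1, 8-bit greyscale PNG holding a single mid-grey pixel."""
    # IHDR fields: width, height, bit depth, colour type 0 (greyscale),
    # compression method, filter method, interlace method.
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    # One scanline: filter-type byte 0, then the single sample value.
    idat = zlib.compress(b"\x00\x80")
    return (PNG_SIGNATURE + make_chunk(b"IHDR", ihdr)
            + make_chunk(b"IDAT", idat) + make_chunk(b"IEND", b""))


def read_chunks(png: bytes):
    """Return a list of (type, data) pairs; raise ValueError on a bad CRC."""
    if png[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    pos, chunks = 8, []
    while pos < len(png):
        (length,) = struct.unpack(">I", png[pos:pos + 4])
        chunk_type = png[pos + 4:pos + 8]
        data = png[pos + 8:pos + 8 + length]
        (stored_crc,) = struct.unpack(">I", png[pos + 8 + length:pos + 12 + length])
        if zlib.crc32(chunk_type + data) & 0xFFFFFFFF != stored_crc:
            raise ValueError(f"CRC mismatch in {chunk_type!r} chunk")
        chunks.append((chunk_type, data))
        pos += 12 + length
    return chunks


for chunk_type, data in read_chunks(build_tiny_png()):
    print(chunk_type.decode("ascii"), len(data))
```

Flipping any byte inside a chunk makes read_chunks raise, which is the per-chunk integrity check at work.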

PNG files have the ".png" file extension and the "image/png" MIME media type. PNG was published as an informational RFC 2083 in March 1997 and as an ISO/IEC 15948 standard in 2004.

History and development

The motivation for creating the PNG format was the realization, on 28 December 1994, that the Lempel–Ziv–Welch (LZW) data compression algorithm used in the Graphics Interchange Format (GIF) format was patented by Unisys. The patent required that all software supporting GIF pay royalties, leading to a flurry of criticism from Usenet users. One of them was Thomas Boutell, who on 4 January 1995 posted a precursory discussion thread on the Usenet newsgroup "comp.graphics" in which he devised a plan for a free alternative to GIF. Other users in that thread put forth many propositions that would later be part of the final file format. Oliver Fromme, author of the popular JPEG viewer QPEG, proposed the PING name, eventually becoming PNG, a recursive acronym meaning PING is not GIF, and also the .png extension. Other suggestions later implemented included the deflate compression algorithm and 24-bit color support, the lack of the latter in GIF also motivating the team to create their file format. The group would become known as the PNG Development Group, and as the discussion rapidly expanded, it later used a mailing list associated with a CompuServe forum.

The full specification of PNG was released under the approval of W3C on 1 October 1996, and later as RFC 2083 on 15 January 1997. The specification was revised on 31 December 1998 as version 1.1, which addressed technical problems for gamma and color correction. Version 1.2, released on 11 August 1999, added the iTXt chunk as the specification's only change, and a reformatted version of 1.2 was released as a second edition of the W3C standard on 10 November 2003, and as an International Standard (ISO/IEC 15948:2004) on 3 March 2004.

Although GIF allows for animation, it was decided that PNG should be a single-image format. In 2001, the developers of PNG published the Multiple-image Network Graphics (MNG) format, with support for animation. MNG achieved moderate application support, but too little among mainstream web browsers, and it saw no usage among web site designers or publishers. In 2008, certain Mozilla developers published the Animated Portable Network Graphics (APNG) format with similar goals. APNG is natively supported by Gecko- and Presto-based web browsers and is also commonly used for thumbnails on Sony's PlayStation Portable system (using the normal PNG file extension). In 2017, Chromium-based browsers adopted APNG support. In January 2020, Microsoft Edge became Chromium-based, thus inheriting support for APNG. With this, all major browsers now support APNG.

PNG Working Group

The original PNG specification was authored by an ad hoc group of computer graphics experts and enthusiasts. Discussions and decisions about the format were conducted by email. The original authors listed on RFC 2083 are:

Editor: Thomas Boutell

Contributing Editor: Tom Lane

Authors (in alphabetical order by last name): Mark Adler, Thomas Boutell, Christian Brunschen, Adam M. Costello, Lee Daniel Crocker, Andreas Dilger, Oliver Fromme, Jean-loup Gailly, Chris Herborth, Aleks Jakulin, Neal Kettler, Tom Lane, Alexander Lehmann, Chris Lilley, Dave Martindale, Owen Mortensen, Keith S. Pickens, Robert P. Poole, Glenn Randers-Pehrson, Greg Roelofs, Willem van Schaik, Guy Schalnat, Paul Schmidt, Tim Wegner, Jeremy Wohl.


It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#2102 2024-03-26 00:02:32


2104) Tagged Image File Format

Gist

TIF (or TIFF) is an image format used for storing high-quality graphics. It stands for “Tagged Image File Format” or “Tagged Image Format”. The format was created by Aldus Corporation, but Adobe later acquired it and made subsequent updates to it.

Summary

A TIFF, which stands for Tag Image File Format, is a computer file used to store raster graphics and image information. A favorite among photographers, TIFFs are a handy way to store high-quality images before editing if you want to avoid lossy file formats.

The format was created by Aldus Corporation, which eventually merged with Adobe Systems; Adobe has held the copyright on the specification from then on. Today, TIFF files are still widely used in the printing and publishing industry.

A TIFF file is a great choice when high quality is your goal, especially when it comes to printing photos or even billboards. TIFF is also an adaptable format that can support both lossy and lossless compression.

Details

Tag Image File Format or Tagged Image File Format, commonly known by the abbreviations TIFF or TIF, is an image file format for storing raster graphics images, popular among graphic artists, the publishing industry, and photographers. TIFF is widely supported by scanning, faxing, word processing, optical character recognition, image manipulation, desktop publishing, and page-layout applications. The format was created by the Aldus Corporation for use in desktop publishing. It published the latest version 6.0 in 1992, subsequently updated with an Adobe Systems copyright after the latter acquired Aldus in 1994. Several Aldus or Adobe technical notes have been published with minor extensions to the format, and several specifications have been based on TIFF 6.0, including TIFF/EP (ISO 12234-2), TIFF/IT (ISO 12639), TIFF-F (RFC 2306) and TIFF-FX (RFC 3949).

History

TIFF was created as an attempt to get desktop scanner vendors of the mid-1980s to agree on a common scanned image file format, in place of a multitude of proprietary formats. In the beginning, TIFF was only a binary image format (only two possible values for each pixel), because that was all that desktop scanners could handle. As scanners became more powerful, and as desktop computer disk space became more plentiful, TIFF grew to accommodate grayscale images, then color images. Today, TIFF, along with JPEG and PNG, is a popular format for deep-color images.

The first version of the TIFF specification was published by the Aldus Corporation in the autumn of 1986 after two major earlier draft releases. It can be labeled as Revision 3.0. It was published after a series of meetings with various scanner manufacturers and software developers. In April 1987 Revision 4.0 was released and it contained mostly minor enhancements. In October 1988 Revision 5.0 was released and it added support for palette color images and LZW compression.

TIFF is a complex format, defining many tags of which typically only a few are used in each file. This led to implementations supporting many varying subsets of the format, a situation that gave rise to the joke that TIFF stands for Thousands of Incompatible File Formats. This problem was addressed in revision 6.0 of the TIFF specification (June 1992) by introducing a distinction between Baseline TIFF (which all implementations were required to support) and TIFF Extensions (which are optional). Additional extensions are defined in two supplements to the specification, published September 1995 and March 2002 respectively.

Overview

A TIFF file contains one or several images, termed subfiles in the specification. The basic use-case for having multiple subfiles is to encode a multipage telefax in a single file, but it is also allowed to have different subfiles be different variants of the same image, for example scanned at different resolutions. Rather than being a continuous range of bytes in the file, each subfile is a data structure whose top-level entity is called an image file directory (IFD). Baseline TIFF readers are only required to make use of the first subfile, but each IFD has a field for linking to a next IFD.

The IFDs are where the tags for which TIFF is named are located. Each IFD contains one or several entries, each of which is identified by its tag. The tags are arbitrary 16-bit numbers; their symbolic names such as ImageWidth often used in discussions of TIFF data do not appear explicitly in the file itself. Each IFD entry has an associated value, which may be decoded based on general rules of the format, but it depends on the tag what that value then means. There may within a single IFD be no more than one entry with any particular tag. Some tags are for linking to the actual image data, other tags specify how the image data should be interpreted, and still other tags are used for image metadata.

TIFF images are made up of rectangular grids of pixels. The two axes of this geometry are termed horizontal (or X, or width) and vertical (or Y, or length). Horizontal and vertical resolution need not be equal (since in a telefax they typically would not be equal). A baseline TIFF image divides the vertical range of the image into one or several strips, which are encoded (in particular: compressed) separately. Historically this served to facilitate TIFF readers (such as fax machines) with limited capacity to store uncompressed data — one strip would be decoded and then immediately printed — but the present specification motivates it by "increased editing flexibility and efficient I/O buffering". A TIFF extension provides the alternative of tiled images, in which case both the horizontal and the vertical ranges of the image are decomposed into smaller units.

An example of these things, which also serves to give a flavor of how tags are used in the TIFF encoding of images, is that a striped TIFF image would use tags 273 (StripOffsets), 278 (RowsPerStrip), and 279 (StripByteCounts). The StripOffsets point to the blocks of image data, the StripByteCounts say how long each of these blocks is (as stored in the file), and RowsPerStrip says how many rows of pixels there are in a strip; the latter is required even in the case of having just one strip, in which case it merely duplicates the value of tag 257 (ImageLength). A tiled TIFF image instead uses tags 322 (TileWidth), 323 (TileLength), 324 (TileOffsets), and 325 (TileByteCounts). The pixels within each strip or tile appear in row-major order, left to right and top to bottom.
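The header, IFD and strip tags described above can be illustrated with a toy file built and read back with Python's struct module. The function names are made up, and the file is deliberately incomplete: it carries only the tags mentioned here plus 256 (ImageWidth) and omits other fields a real Baseline TIFF needs, so treat it as a sketch of the layout rather than a valid image.

```python
import struct

SHORT, LONG = 3, 4  # TIFF field type codes for 16-bit and 32-bit unsigned values


def build_toy_tiff() -> bytes:
    """Little-endian toy file: 8-byte header, one 2x2 strip, then one IFD."""
    pixels = bytes([0, 255, 255, 0])
    strip_offset = 8                          # strip data follows the header
    ifd_offset = strip_offset + len(pixels)   # IFD follows the strip
    entries = [                               # (tag, type, value), sorted by tag
        (256, SHORT, 2),                      # ImageWidth
        (257, SHORT, 2),                      # ImageLength
        (273, LONG, strip_offset),            # StripOffsets
        (278, SHORT, 2),                      # RowsPerStrip
        (279, LONG, len(pixels)),             # StripByteCounts
    ]
    out = struct.pack("<2sHI", b"II", 42, ifd_offset) + pixels
    out += struct.pack("<H", len(entries))
    for tag, field_type, value in entries:
        # The 4-byte value field holds small values directly, left-justified.
        value_field = struct.pack("<H2x" if field_type == SHORT else "<I", value)
        out += struct.pack("<HHI", tag, field_type, 1) + value_field
    return out + struct.pack("<I", 0)         # offset 0: no further IFD


def read_first_ifd(tiff: bytes) -> dict:
    """Return {tag: value} for single-valued SHORT/LONG entries of the first IFD."""
    byte_order, magic, ifd_offset = struct.unpack_from("<2sHI", tiff, 0)
    if byte_order != b"II" or magic != 42:
        raise ValueError("not a little-endian TIFF")
    (count,) = struct.unpack_from("<H", tiff, ifd_offset)
    tags = {}
    for i in range(count):
        tag, field_type, n, raw = struct.unpack_from("<HHI4s", tiff, ifd_offset + 2 + 12 * i)
        if n != 1 or field_type not in (SHORT, LONG):
            continue                          # this sketch skips everything else
        (tags[tag],) = struct.unpack_from("<H" if field_type == SHORT else "<I", raw, 0)
    return tags


tiff = build_toy_tiff()
tags = read_first_ifd(tiff)
strip = tiff[tags[273]:tags[273] + tags[279]]  # StripOffsets, StripByteCounts
print(tags, list(strip))
```

Only the numeric tags appear in the file; the names in the comments exist purely for the reader.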

The data for one pixel is made up of one or several samples; for example an RGB image would have one Red sample, one Green sample, and one Blue sample per pixel, whereas a greyscale or palette color image only has one sample per pixel. TIFF allows for both additive (e.g. RGB, RGBA) and subtractive (e.g. CMYK) color models. TIFF does not constrain the number of samples per pixel (except that there must be enough samples for the chosen color model), nor does it constrain how many bits are encoded for each sample, but baseline TIFF only requires that readers support a few combinations of color model and bit-depth of images. Support for custom sets of samples is very useful for scientific applications; 3 samples per pixel is at the low end of multispectral imaging, and hyperspectral imaging may require hundreds of samples per pixel. TIFF supports having all samples for a pixel next to each other within a single strip/tile (PlanarConfiguration = 1) but also different samples in different strips/tiles (PlanarConfiguration = 2). The default format for a sample value is an unsigned integer, but a TIFF extension allows samples to be declared as signed integers or IEEE-754 floats instead, and allows a custom range of valid sample values to be specified.
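The difference between the two PlanarConfiguration layouts can be shown with plain byte strings. This is only a sketch of the sample ordering with hypothetical function names, assuming one byte per sample; it does not read or write actual TIFF strips.

```python
def chunky_to_planar(data: bytes, samples_per_pixel: int) -> list:
    """Split interleaved samples (PlanarConfiguration = 1) into one plane per sample."""
    return [data[s::samples_per_pixel] for s in range(samples_per_pixel)]


def planar_to_chunky(planes: list) -> bytes:
    """Interleave separate planes (PlanarConfiguration = 2) back into pixel order."""
    return bytes(sample for pixel in zip(*planes) for sample in pixel)


# Two RGB pixels stored pixel by pixel: R G B R G B.
chunky = bytes([10, 20, 30, 11, 21, 31])
planes = chunky_to_planar(chunky, 3)
print(planes)                             # one bytes object each for R, G and B
print(planar_to_chunky(planes) == chunky)
```

Both layouts hold the same samples, just in a different order.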

TIFF images may be uncompressed, compressed using a lossless compression scheme, or compressed using a lossy compression scheme. The lossless LZW compression scheme has at times been regarded as the standard compression for TIFF, but this is technically a TIFF extension, and the TIFF 6.0 specification notes the patent situation regarding LZW. Compression schemes vary significantly in the level at which they process the data: LZW acts on the stream of bytes encoding a strip or tile (without regard to sample structure, bit depth, or row width), whereas the JPEG compression scheme both transforms the sample structure of pixels (switching to a different color model) and encodes pixels in 8×8 blocks rather than row by row.

Most data in TIFF files are numerical, but the format supports declaring data as textual instead, where that is appropriate for a particular tag. Tags that take textual values include Artist, Copyright, DateTime, DocumentName, InkNames, and Model.

Internet Media Type

The MIME type image/tiff (defined in RFC 3302) without an application parameter is used for Baseline TIFF 6.0 files or to indicate that it is not necessary to identify a specific subset of TIFF or TIFF extensions. The optional "application" parameter (Example: Content-type: image/tiff; application=foo) is defined for image/tiff to identify a particular subset of TIFF and TIFF extensions for the encoded image data, if it is known. According to RFC 3302, specific TIFF subsets or TIFF extensions used in the application parameter must be published as an RFC.
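Reading the optional "application" parameter from such a header can be sketched with Python's standard email package. The helper name is hypothetical, and "foo" is just the placeholder value from the example above.

```python
from email.message import Message


def parse_tiff_content_type(header_value: str):
    """Return (media type, application parameter or None) for a Content-Type value."""
    msg = Message()
    msg["Content-Type"] = header_value
    return msg.get_content_type(), msg.get_param("application")


print(parse_tiff_content_type("image/tiff; application=foo"))
print(parse_tiff_content_type("image/tiff"))
```

When the parameter is absent the second element is None, matching the case where no specific TIFF subset is identified.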

MIME type image/tiff-fx (defined in RFC 3949 and RFC 3950) is based on TIFF 6.0 with TIFF Technical Notes TTN1 (Trees) and TTN2 (Replacement TIFF/JPEG specification). It is used for Internet fax compatible with the ITU-T Recommendations for Group 3 black-and-white, grayscale and color fax.

Digital preservation

Adobe holds the copyright on the TIFF specification (aka TIFF 6.0) along with the two supplements that have been published. These documents can be found on the Adobe TIFF Resources page. The Fax standard in RFC 3949 is based on these TIFF specifications.

TIFF files can be used for storing documents provided that they strictly use the basic "tag sets" defined in TIFF 6.0, restrict compression to the methods identified in TIFF 6.0, and are adequately tested and verified by multiple sources for all documents being created. Issues commonly seen in the content and document management industry arise when TIFF structures contain proprietary headers, are not properly documented, contain "wrappers" or other containers around the TIFF datasets, or use compression technologies that are improper or not properly implemented.

Variants of TIFF can be used within document imaging and content/document management systems using CCITT Group IV 2D compression, which supports black-and-white (bitonal, monochrome) images, among other compression technologies that support color. When storage capacity and network bandwidth were greater constraints than in today's server environments, documents in high-volume scanning operations were scanned in black and white (not in color or in grayscale) to conserve storage capacity.

The inclusion of the SampleFormat tag in TIFF 6.0 allows TIFF files to handle advanced pixel data types, including integer images with more than 8 bits per channel and floating point images. This tag made TIFF 6.0 a viable format for scientific image processing where extended precision is required. An example would be the use of TIFF to store images acquired using scientific CCD cameras that provide up to 16 bits per photosite of intensity resolution. Storing a sequence of images in a single TIFF file is also possible, and is allowed under TIFF 6.0, provided the rules for multi-page images are followed.

Details

TIFF is a flexible, adaptable file format for handling images and data within a single file, by including the header tags (size, definition, image-data arrangement, applied image compression) defining the image's geometry. A TIFF file, for example, can be a container holding JPEG (lossy) and PackBits (lossless) compressed images. A TIFF file also can include a vector-based clipping path (outlines, croppings, image frames). The ability to store image data in a lossless format makes a TIFF file a useful image archive, because, unlike standard JPEG files, a TIFF file using lossless compression (or none) may be edited and re-saved without losing image quality. This is not the case when using the TIFF as a container holding compressed JPEG. Other TIFF options are layers and pages.

TIFF offers the option of using LZW compression, a lossless data-compression technique for reducing a file's size. Use of this option was limited by patents on the LZW technique until their expiration in 2004.

The TIFF 6.0 specification consists of the following parts:

* Introduction (contains information about TIFF Administration, usage of Private fields and values, etc.)

* Part 1: Baseline TIFF

* Part 2: TIFF Extensions

* Part 3: Appendices

Additional Information

TIFFs are a file format popular with graphic designers and photographers for their flexibility, high quality, and near-universal compatibility.

What is a TIFF file?


TIFF files:

* Have either a .tiff or .tif extension.

* Usually use lossless compression (or none), which means they’re larger than most image files but don’t lose image quality.

* Work with Windows, Linux, and macOS.

TIFFs aren’t the smallest files around, but they enable a user to tag up extra image information and data, such as additional layers. They’re also compatible with editing software like Adobe Photoshop.

History of the TIFF file.

Aldus Corporation created the TIFF file in the mid-1980s for use in desktop publishing. TIFFs retained high-quality data and could publish content directly from a computer. The file was designed as a universally applicable format for desktop scanners — hardware that previously handled, depending on the make and model, only a limited set of file formats.

Initially, TIFFs were restricted to print publications before they expanded into digital content. Aldus Corporation was later acquired by Adobe, which has since been responsible for the copyright of the file format.

What are TIFFs used for?

TIFFs are popular across a range of industries — such as design, photography, and desktop publishing. TIFF files can be used for:

* High-quality photographs.

TIFFs are perfect for retaining lots of impressively detailed image data because they use a predominately lossless form of file compression. This makes them a great choice for professional photographers and editors.

* High-resolution scans.

The detailed image quality stored within a TIFF means they’re ideal for scanned images and high-resolution documents. You might find them a useful choice for storing high-resolution images of your artwork or personal documents.

* Container files.

TIFFs also work as container files that store smaller JPEGs. You could store several lower-resolution JPEGs within one TIFF if you wanted to email a selection of photos to a contact.


#2103 2024-03-27 00:02:51


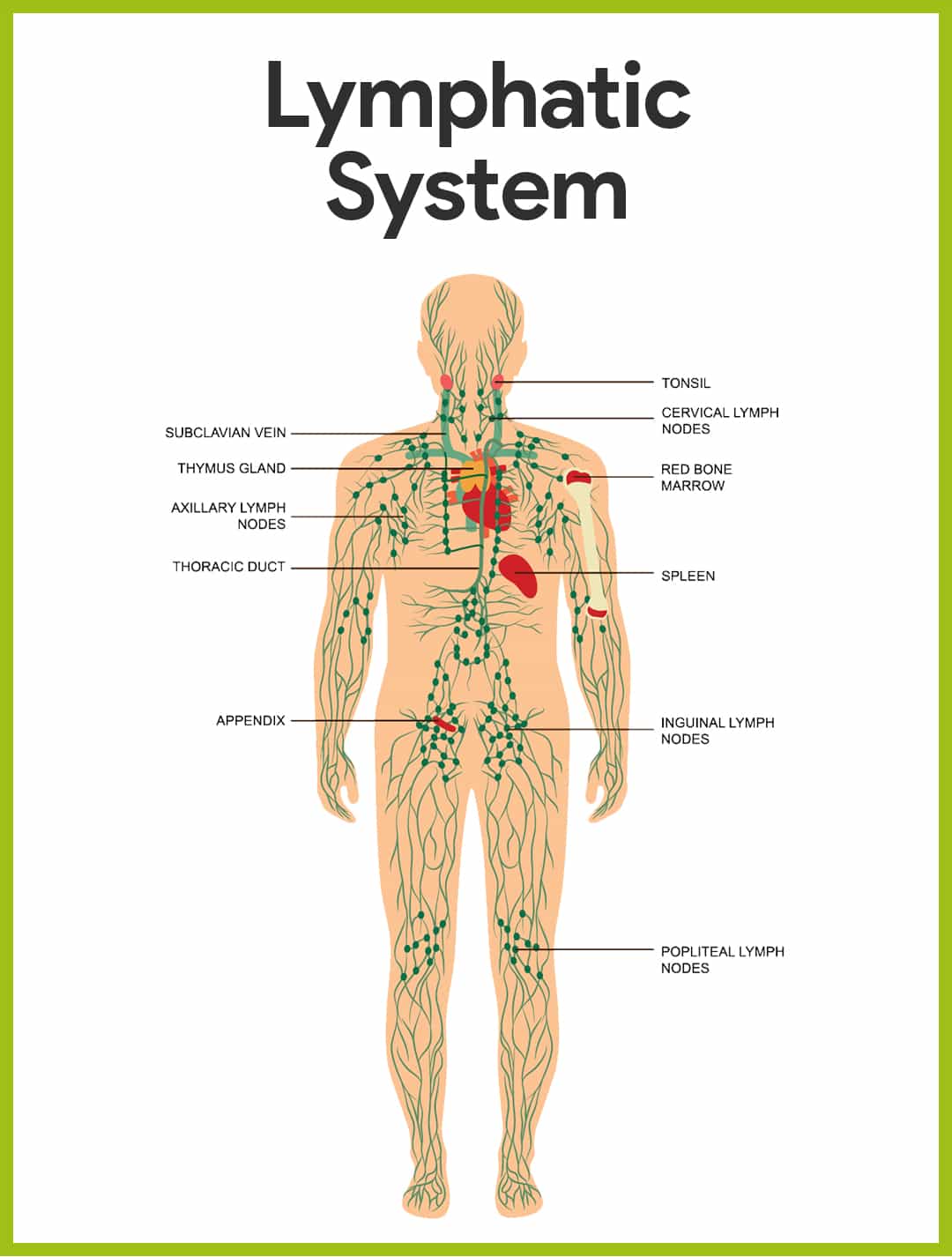

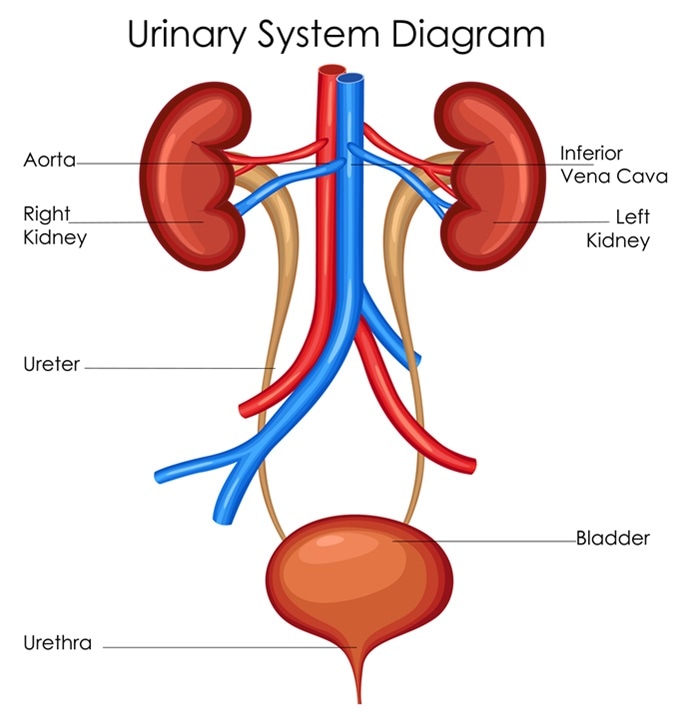

2105) Anatomy

Gist

Anatomy is the study of the structure of a plant or animal. Human anatomy includes the cells, tissues, and organs that make up the body and how they are organized in the body.

Summary

Anatomy is the study of the structure of living things – animal, human, plant – from microscopic cells and molecules to whole organisms as large as whales.

Anatomy Is Everywhere

* Anthropologists study cultures around the world.

* Paleontologists use cutting-edge technology to discover the ancient world.

* Archeologists uncover our history one artifact at a time.

* Veterinarians help humans care for pets and farm animals.

* Zoologists ensure captive animals – from backyard critters to endangered species – receive optimal care.

* Medical students learn anatomy before becoming nurses, doctors, and dentists.

* Inventors create exoskeletons to give people mobility.

* Biomedical engineers create better pacemakers and prosthetics.

* Physical therapists find remedies for their patients’ challenges.

Who Are Anatomists?

The term anatomist broadly describes someone who studies, researches, or teaches in the anatomical sciences, including the study of extinct species, such as dinosaurs and Neanderthals. Anatomists help us understand how things are formed and constructed, which has enormous impact. However, not everyone who studies, applies, or researches anatomy calls themselves an ‘anatomist.’

WHAT ANATOMISTS DO

Anatomists work with students and researchers to better understand humans and animals, in order to teach the next generation of doctors, nurses, physical therapists, dentists, and veterinarians. Their research into cell and molecular anatomy means that conditions such as cleft palate, congenital heart defects, neurological disorders, and cancer biology are better understood – and can be treated.

WHERE ANATOMISTS WORK

Anatomists work in universities, research institutions, and private industry. They teach anatomy in medical, dental, and veterinary schools, as well as at large undergraduate universities. They run their own research labs at organizations and universities, and they work together in teams of scientists, postdoctoral researchers, and students to uncover discoveries that lead to better understanding of our biology.

Details

Anatomy (from Ancient Greek anatomḗ, 'dissection') is the branch of biology concerned with the study of the structure of organisms and their parts. Anatomy is a branch of natural science that deals with the structural organization of living things. It is an old science, having its beginnings in prehistoric times. Anatomy is inherently tied to developmental biology, embryology, comparative anatomy, evolutionary biology, and phylogeny, as these are the processes by which anatomy is generated, both over immediate and long-term timescales. Anatomy and physiology, which study the structure and function of organisms and their parts respectively, make a natural pair of related disciplines, and are often studied together. Human anatomy is one of the essential basic sciences that are applied in medicine, and is often studied alongside physiology.

Anatomy is a complex and dynamic field that is constantly evolving as new discoveries are made. In recent years, there has been a significant increase in the use of advanced imaging techniques, such as MRI and CT scans, which allow for more detailed and accurate visualizations of the body's structures.

The discipline of anatomy is divided into macroscopic and microscopic parts. Macroscopic anatomy, or gross anatomy, is the examination of an animal's body parts using unaided eyesight. Gross anatomy also includes the branch of superficial anatomy. Microscopic anatomy involves the use of optical instruments in the study of the tissues of various structures, known as histology, and also in the study of cells.

The history of anatomy is characterized by a progressive understanding of the functions of the organs and structures of the human body. Methods have also improved dramatically, advancing from the examination of animals by dissection of carcasses and cadavers (corpses) to 20th-century medical imaging techniques, including X-ray, ultrasound, and magnetic resonance imaging.

Etymology and definition

Derived from the Greek ἀνατομή anatomē "dissection" (from ἀνατέμνω anatémnō "I cut up, cut open", from ἀνά aná "up" and τέμνω témnō "I cut"), anatomy is the scientific study of the structure of organisms including their systems, organs and tissues. It includes the appearance and position of the various parts, the materials from which they are composed, and their relationships with other parts. Anatomy is quite distinct from physiology and biochemistry, which deal respectively with the functions of those parts and the chemical processes involved. For example, an anatomist is concerned with the shape, size, position, structure, blood supply and innervation of an organ such as the liver; while a physiologist is interested in the production of bile, the role of the liver in nutrition and the regulation of bodily functions.

The discipline of anatomy can be subdivided into a number of branches, including gross or macroscopic anatomy and microscopic anatomy. Gross anatomy is the study of structures large enough to be seen with the naked eye, and also includes superficial anatomy or surface anatomy, the study by sight of the external body features. Microscopic anatomy is the study of structures on a microscopic scale, along with histology (the study of tissues), and embryology (the study of an organism in its immature condition). Regional anatomy is the study of the interrelationships of all of the structures in a specific body region, such as the abdomen. In contrast, systemic anatomy is the study of the structures that make up a discrete body system—that is, a group of structures that work together to perform a unique body function, such as the digestive system.

Anatomy can be studied using both invasive and non-invasive methods with the goal of obtaining information about the structure and organization of organs and systems. Methods used include dissection, in which a body is opened and its organs studied, and endoscopy, in which a video camera-equipped instrument is inserted through a small incision in the body wall and used to explore the internal organs and other structures. Angiography using X-rays or magnetic resonance angiography are methods to visualize blood vessels.

The term "anatomy" is commonly taken to refer to human anatomy. However, substantially similar structures and tissues are found throughout the rest of the animal kingdom, and the term also includes the anatomy of other animals. The term zootomy is also sometimes used to specifically refer to non-human animals. The structure and tissues of plants are of a dissimilar nature and they are studied in plant anatomy.

Animal tissues

The kingdom Animalia contains multicellular organisms that are heterotrophic and motile (although some have secondarily adopted a sessile lifestyle). Most animals have bodies differentiated into separate tissues and these animals are also known as eumetazoans. They have an internal digestive chamber, with one or two openings; the gametes are produced in multicellular sex organs, and the zygotes include a blastula stage in their embryonic development. Eumetazoans do not include the sponges, which have undifferentiated cells.

Unlike plant cells, animal cells have neither a cell wall nor chloroplasts. Vacuoles, when present, are more numerous and much smaller than those in the plant cell. The body tissues are composed of numerous types of cells, including those found in muscles, nerves and skin. Each typically has a cell membrane formed of phospholipids, cytoplasm and a nucleus. All of the different cells of an animal are derived from the embryonic germ layers. Those simpler invertebrates which are formed from two germ layers of ectoderm and endoderm are called diploblastic and the more developed animals whose structures and organs are formed from three germ layers are called triploblastic. All of a triploblastic animal's tissues and organs are derived from the three germ layers of the embryo, the ectoderm, mesoderm and endoderm.

Animal tissues can be grouped into four basic types: connective, epithelial, muscle and nervous tissue.

Connective tissue

Connective tissues are fibrous and made up of cells scattered among inorganic material called the extracellular matrix. Connective tissue gives shape to organs and holds them in place. The main types are loose connective tissue, adipose tissue, fibrous connective tissue, cartilage and bone. The extracellular matrix contains proteins, the chief and most abundant of which is collagen. Collagen plays a major part in organizing and maintaining tissues. The matrix can be modified to form a skeleton to support or protect the body. An exoskeleton is a thickened, rigid cuticle which is stiffened by mineralization, as in crustaceans or by the cross-linking of its proteins as in insects. An endoskeleton is internal and present in all developed animals, as well as in many of those less developed.[16]

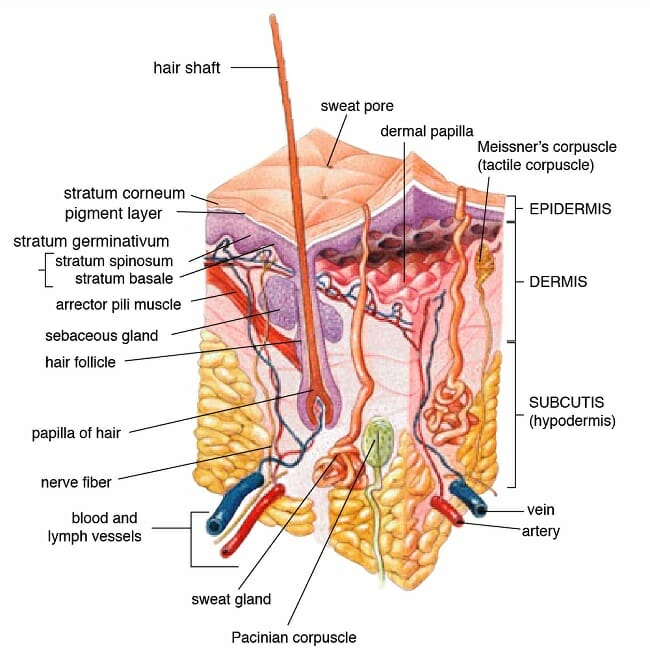

Epithelium

Epithelial tissue is composed of closely packed cells, bound to each other by cell adhesion molecules, with little intercellular space. Epithelial cells can be squamous (flat), cuboidal or columnar and rest on a basal lamina, the upper layer of the basement membrane; the lower layer is the reticular lamina, lying next to the connective tissue in the extracellular matrix secreted by the epithelial cells. There are many different types of epithelium, modified to suit a particular function. In the respiratory tract there is a type of ciliated epithelial lining; in the small intestine the epithelial lining forms intestinal villi whose cells carry microvilli. Skin consists of an outer layer of keratinized stratified squamous epithelium that covers the exterior of the vertebrate body. Keratinocytes make up to 95% of the cells in the skin. The epithelial cells on the external surface of the body typically secrete an extracellular matrix in the form of a cuticle. In simple animals this may just be a coat of glycoproteins. In more advanced animals, many glands are formed of epithelial cells.

Muscle tissue

Muscle cells (myocytes) form the active contractile tissue of the body. Muscle tissue functions to produce force and cause motion, either locomotion or movement within internal organs. Muscle is formed of contractile filaments and is separated into three main types: smooth muscle, skeletal muscle and cardiac muscle. Smooth muscle has no striations when examined microscopically. It contracts slowly but maintains contractility over a wide range of stretch lengths. It is found in such organs as sea anemone tentacles and the body wall of sea cucumbers. Skeletal muscle contracts rapidly but has a limited range of extension. It is found in the movement of appendages and jaws. Obliquely striated muscle is intermediate between the other two. The filaments are staggered and this is the type of muscle found in earthworms that can extend slowly or make rapid contractions. In higher animals striated muscles occur in bundles attached to bone to provide movement and are often arranged in antagonistic sets. Smooth muscle is found in the walls of the uterus, bladder, intestines, stomach, oesophagus, respiratory airways, and blood vessels. Cardiac muscle is found only in the heart, allowing it to contract and pump blood round the body.

Nervous tissue

Nervous tissue is composed of many nerve cells known as neurons which transmit information. In some slow-moving radially symmetrical marine animals such as ctenophores and cnidarians (including sea anemones and jellyfish), the nerves form a nerve net, but in most animals they are organized longitudinally into bundles. In simple animals, receptor neurons in the body wall cause a local reaction to a stimulus. In more complex animals, specialized receptor cells such as chemoreceptors and photoreceptors are found in groups and send messages along neural networks to other parts of the organism. Neurons can be connected together in ganglia. In higher animals, specialized receptors are the basis of sense organs and there is a central nervous system (brain and spinal cord) and a peripheral nervous system. The latter consists of sensory nerves that transmit information from sense organs and motor nerves that influence target organs. The peripheral nervous system is divided into the somatic nervous system which conveys sensation and controls voluntary muscle, and the autonomic nervous system which involuntarily controls smooth muscle, certain glands and internal organs, including the stomach.

Vertebrate anatomy

All vertebrates have a similar basic body plan and at some point in their lives, mostly in the embryonic stage, share the major chordate characteristics: a stiffening rod, the notochord; a dorsal hollow tube of nervous material, the neural tube; pharyngeal arches; and a tail posterior to the anus. The spinal cord is protected by the vertebral column and is above the notochord, and the gastrointestinal tract is below it. Nervous tissue is derived from the ectoderm, connective tissues are derived from mesoderm, and gut is derived from the endoderm. At the posterior end is a tail which continues the spinal cord and vertebrae but not the gut. The mouth is found at the anterior end of the animal, and the anus at the base of the tail. The defining characteristic of a vertebrate is the vertebral column, formed in the development of the segmented series of vertebrae. In most vertebrates the notochord becomes the nucleus pulposus of the intervertebral discs. However, a few vertebrates, such as the sturgeon and the coelacanth, retain the notochord into adulthood. Jawed vertebrates are typified by paired appendages, fins or legs, which may be secondarily lost. The limbs of vertebrates are considered to be homologous because the same underlying skeletal structure was inherited from their last common ancestor. This is one of the arguments put forward by Charles Darwin to support his theory of evolution.

Mammal anatomy

Mammals are a diverse class of animals, mostly terrestrial but some are aquatic and others have evolved flapping or gliding flight. They mostly have four limbs, but some aquatic mammals have no limbs or limbs modified into fins, and the forelimbs of bats are modified into wings. The legs of most mammals are situated below the trunk, which is held well clear of the ground. The bones of mammals are well ossified and their teeth, which are usually differentiated, are coated in a layer of prismatic enamel. The teeth are shed once (milk teeth) during the animal's lifetime or not at all, as is the case in cetaceans. Mammals have three bones in the middle ear and a cochlea in the inner ear. They are clothed in hair and their skin contains glands which secrete sweat. Some of these glands are specialized as mammary glands, producing milk to feed the young. Mammals breathe with lungs and have a muscular diaphragm separating the thorax from the abdomen which helps them draw air into the lungs. The mammalian heart has four chambers, and oxygenated and deoxygenated blood are kept entirely separate. Nitrogenous waste is excreted primarily as urea.

Mammals are amniotes, and most are viviparous, giving birth to live young. Exceptions to this are the egg-laying monotremes, the platypus and the echidnas of Australia. Most other mammals have a placenta through which the developing foetus obtains nourishment, but in marsupials, the foetal stage is very short and the immature young is born and finds its way to its mother's pouch where it latches on to a nipple and completes its development.

Human anatomy

In humans, dexterous hand movements and increased brain size are likely to have evolved simultaneously.

Humans have the overall body plan of a mammal. Humans have a head, neck, trunk (which includes the thorax and abdomen), two arms and hands, and two legs and feet.

Generally, students of certain biological sciences, paramedics, prosthetists and orthotists, physiotherapists, occupational therapists, nurses, podiatrists, and medical students learn gross anatomy and microscopic anatomy from anatomical models, skeletons, textbooks, diagrams, photographs, lectures and tutorials. In addition, medical students generally learn gross anatomy through practical experience of dissection and inspection of cadavers. The study of microscopic anatomy (or histology) can be aided by practical experience examining histological preparations (or slides) under a microscope.

Human anatomy, physiology and biochemistry are complementary basic medical sciences, which are generally taught to medical students in their first year at medical school. Human anatomy can be taught regionally or systemically; that is, respectively, studying anatomy by bodily regions such as the head and chest, or studying by specific systems, such as the nervous or respiratory systems. The major anatomy textbook, Gray's Anatomy, has been reorganized from a systems format to a regional format, in line with modern teaching methods. A thorough working knowledge of anatomy is required by physicians, especially surgeons and doctors working in some diagnostic specialties, such as histopathology and radiology.

Academic anatomists are usually employed by universities, medical schools or teaching hospitals. They are often involved in teaching anatomy, and research into certain systems, organs, tissues or cells.

Additional Information

Anatomy is a field in the biological sciences concerned with the identification and description of the body structures of living things. Gross anatomy involves the study of major body structures by dissection and observation and in its narrowest sense is concerned only with the human body. “Gross anatomy” customarily refers to the study of those body structures large enough to be examined without the help of magnifying devices, while microscopic anatomy is concerned with the study of structural units small enough to be seen only with a light microscope. Dissection is basic to all anatomical research. The earliest record of its use was made by the Greeks, and Theophrastus called dissection “anatomy,” from ana temnein, meaning “to cut up.”

Comparative anatomy, the other major subdivision of the field, compares similar body structures in different species of animals in order to understand the adaptive changes they have undergone in the course of evolution.

Gross anatomy

This ancient discipline reached its culmination between 1500 and 1850, by which time its subject matter was firmly established. None of the world’s oldest civilizations dissected a human body, which most people regarded with superstitious awe and associated with the spirit of the departed soul. Beliefs in life after death and a disquieting uncertainty concerning the possibility of bodily resurrection further inhibited systematic study. Nevertheless, knowledge of the body was acquired by treating wounds, aiding in childbirth, and setting broken limbs. The field remained speculative rather than descriptive, though, until the achievements of the Alexandrian medical school and its foremost figure, Herophilus (flourished 300 BCE), who dissected human cadavers and thus gave anatomy a considerable factual basis for the first time. Herophilus made many important discoveries and was followed by his younger contemporary Erasistratus, who is sometimes regarded as the founder of physiology. In the 2nd century CE, Greek physician Galen assembled and arranged all the discoveries of the Greek anatomists, including with them his own concepts of physiology and his discoveries in experimental medicine. The many books Galen wrote became the unquestioned authority for anatomy and medicine in Europe because they were the only ancient Greek anatomical texts that survived the Dark Ages in the form of Arabic (and then Latin) translations.

Owing to church prohibitions against dissection, European medicine in the Middle Ages relied upon Galen’s mixture of fact and fancy rather than on direct observation for its anatomical knowledge, though some dissections were authorized for teaching purposes. In the early 16th century, the artist Leonardo da Vinci undertook his own dissections, and his beautiful and accurate anatomical drawings cleared the way for Flemish physician Andreas Vesalius to “restore” the science of anatomy with his monumental De humani corporis fabrica libri septem (1543; “The Seven Books on the Structure of the Human Body”), which was the first comprehensive and illustrated textbook of anatomy. As a professor at the University of Padua, Vesalius encouraged younger scientists to accept traditional anatomy only after verifying it themselves, and this more critical and questioning attitude broke Galen’s authority and placed anatomy on a firm foundation of observed fact and demonstration.

From Vesalius’s exact descriptions of the skeleton, muscles, blood vessels, nervous system, and digestive tract, his successors in Padua progressed to studies of the digestive glands and the urinary and reproductive systems. Hieronymus Fabricius, Gabriello Fallopius, and Bartolomeo Eustachio were among the most important Italian anatomists, and their detailed studies led to fundamental progress in the related field of physiology. William Harvey’s discovery of the circulation of the blood, for instance, was based partly on Fabricius’s detailed descriptions of the venous valves.

Microscopic anatomy

The new application of magnifying glasses and compound microscopes to biological studies in the second half of the 17th century was the most important factor in the subsequent development of anatomical research. Primitive early microscopes enabled Marcello Malpighi to discover the system of tiny capillaries connecting the arterial and venous networks, Robert Hooke to first observe the small compartments in plants that he called “cells,” and Antonie van Leeuwenhoek to observe muscle fibres and spermatozoa. Thenceforth attention gradually shifted from the identification and understanding of bodily structures visible to the naked eye to those of microscopic size.

The use of the microscope in discovering minute, previously unknown features was pursued on a more systematic basis in the 18th century, but progress tended to be slow until technical improvements in the compound microscope itself, beginning in the 1830s with the gradual development of achromatic lenses, greatly increased that instrument’s resolving power. These technical advances enabled Matthias Jakob Schleiden and Theodor Schwann to recognize in 1838–39 that the cell is the fundamental unit of organization in all living things. The need for thinner, more transparent tissue specimens for study under the light microscope stimulated the development of improved methods of dissection, notably machines called microtomes that can slice specimens into extremely thin sections. In order to better distinguish the detail in these sections, synthetic dyes were used to stain tissues with different colours. Thin sections and staining had become standard tools for microscopic anatomists by the late 19th century. The field of cytology, which is the study of cells, and that of histology, which is the study of tissue organization from the cellular level up, both arose in the 19th century with the data and techniques of microscopic anatomy as their basis.

In the 20th century anatomists tended to scrutinize tinier and tinier units of structure as new technologies enabled them to discern details far beyond the limits of resolution of light microscopes. These advances were made possible by the electron microscope, which stimulated an enormous amount of research on subcellular structures beginning in the 1950s and became the prime tool of anatomical research. About the same time, the use of X-ray diffraction for studying the structures of many types of molecules present in living things gave rise to the new subspecialty of molecular anatomy.

Anatomical nomenclature

Scientific names for the parts and structures of the human body are usually in Latin; for example, the name musculus biceps brachii denotes the biceps muscle of the upper arm. Some such names were bequeathed to Europe by ancient Greek and Roman writers, and many more were coined by European anatomists from the 16th century on. Expanding medical knowledge meant the discovery of many bodily structures and tissues, but there was no uniformity of nomenclature, and thousands of new names were added as medical writers followed their own fancies, usually expressing them in a Latin form.

By the end of the 19th century the confusion caused by the enormous number of names had become intolerable. Medical dictionaries sometimes listed as many as 20 synonyms for one name, and more than 50,000 names were in use throughout Europe. In 1887 the German Anatomical Society undertook the task of standardizing the nomenclature, and, with the help of other national anatomical societies, a complete list of anatomical terms and names was approved in 1895 that reduced the 50,000 names to 5,528. This list, the Basle Nomina Anatomica, had to be subsequently expanded, and in 1955 the Sixth International Anatomical Congress at Paris approved a major revision of it known as the Paris Nomina Anatomica (or simply Nomina Anatomica). In 1998 this work was supplanted by the Terminologia Anatomica, which recognizes about 7,500 terms describing macroscopic structures of human anatomy and is considered to be the international standard on human anatomical nomenclature. The Terminologia Anatomica, produced by the International Federation of Associations of Anatomists and the Federative Committee on Anatomical Terminology (later known as the Federative International Programme on Anatomical Terminologies), was made available online in 2011.
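
As a rough check on the scale of that 1895 standardization, using only the two figures quoted above (50,000 names in use, 5,528 approved), the reduction works out as follows:

$$
\frac{50{,}000 - 5{,}528}{50{,}000} = \frac{44{,}472}{50{,}000} \approx 0.889
$$

That is, the Basle list kept roughly one name in nine and discarded about 89 percent of the names then in circulation.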

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#2104 2024-03-27 22:54:29

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,762

Re: Miscellany

2106) Muscular System

Gist

The muscular system is composed of specialized cells called muscle fibers. Their predominant function is contractibility. Muscles, attached to bones or internal organs and blood vessels, are responsible for movement. Nearly all movement in the body is the result of muscle contraction.

Summary

The muscular system is composed of specialized cells called muscle fibers. Their predominant function is contractibility. Muscles, attached to bones or internal organs and blood vessels, are responsible for movement. Nearly all movement in the body is the result of muscle contraction. Exceptions to this are the action of cilia, the flagellum on sperm cells, and amoeboid movement of some white blood cells.

The integrated action of joints, bones, and skeletal muscles produces obvious movements such as walking and running. Skeletal muscles also produce more subtle movements that result in various facial expressions, eye movements, and respiration.

In addition to movement, muscle contraction also fulfills some other important functions in the body, such as posture, joint stability, and heat production. Posture, such as sitting and standing, is maintained as a result of muscle contraction. The skeletal muscles are continually making fine adjustments that hold the body in stationary positions. The tendons of many muscles extend over joints and in this way contribute to joint stability. This is particularly evident in the knee and shoulder joints, where muscle tendons are a major factor in stabilizing the joint. Heat production, to maintain body temperature, is an important by-product of muscle metabolism. Nearly 85 percent of the heat produced in the body is the result of muscle contraction.

Details

Muscles play a part in every function of the body. The muscular system is made up of over 600 muscles. These include three muscle types: smooth, skeletal, and cardiac.

Only skeletal muscles are voluntary, meaning you can control them consciously. Smooth and cardiac muscles act involuntarily.

Each muscle type in the muscular system has a specific purpose. You’re able to walk because of your skeletal muscles. You can digest because of your smooth muscles. And your heart beats because of your cardiac muscle.

The different muscle types also work together to make these functions possible. For instance, when you run (skeletal muscles), your heart pumps harder (cardiac muscle) and you breathe more heavily (smooth muscles).

Keep reading to learn more about your muscular system’s functions.

1. Mobility

Your skeletal muscles are responsible for the movements you make. Skeletal muscles are attached to your bones and partly controlled by the central nervous system (CNS).

You use your skeletal muscles whenever you move. Fast-twitch skeletal muscles cause short bursts of speed and strength. Slow-twitch muscles function better for longer movements.

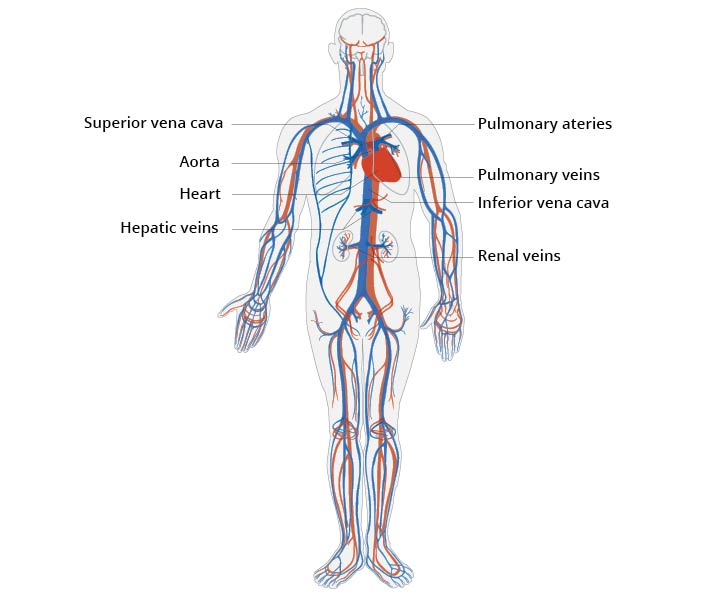

2. Circulation

The involuntary cardiac and smooth muscles help your heart beat and blood flow through your body by producing electrical impulses. The cardiac muscle (myocardium) is found in the walls of the heart. It’s controlled by the autonomic nervous system responsible for most bodily functions.

The myocardium also has one central nucleus like a smooth muscle.

Your blood vessels are made up of smooth muscles, and also controlled by the autonomic nervous system.

3. Respiration

Your diaphragm is the main muscle at work during quiet breathing. Heavier breathing, like what you experience during exercise, may require accessory muscles to help the diaphragm. These can include the abdominal, neck, and back muscles.

4. Digestion

Digestion is controlled by smooth muscles found in your gastrointestinal tract. This comprises the:

* mouth

* esophagus

* stomach

* small and large intestines

* rectum

* anus

The digestive system also includes the liver, pancreas, and gallbladder.

Your smooth muscles contract and relax as food passes through your body during digestion. These muscles also help push food out of your body through defecation, or vomiting when you’re sick.

5. Urination

Smooth and skeletal muscles make up the urinary system. The urinary system includes the:

* kidneys

* bladder

* ureters

* urethra

* male or female reproductive organs

* prostate

All the muscles in your urinary system work together so you can urinate. The dome of your bladder is made of smooth muscles. You can release urine when those muscles tighten. When they relax, you can hold in your urine.

6. Childbirth

Smooth muscles are found in the uterus. During pregnancy, these muscles grow and stretch as the baby grows. When a woman goes into labor, the smooth muscles of the uterus contract and relax to help push the baby through the birth canal.

7. Vision

Each of your eye sockets contains six skeletal muscles that help you move your eyes. And the internal muscles of your eyes are made up of smooth muscles. All these muscles work together to help you see. If you damage these muscles, you may impair your vision.

8. Stability

The skeletal muscles in your core help protect your spine and help with stability. Your core muscle group includes the abdominal, back, and pelvic muscles. This group is also known as the trunk. The stronger your core, the better you can stabilize your body. The muscles in your legs also help steady you.

9. Posture

Your skeletal muscles also control posture. Flexibility and strength are keys to maintaining proper posture. Stiff neck muscles, weak back muscles, or tight hip muscles can throw off your alignment. Poor posture can affect parts of your body and lead to joint pain and weaker muscles. These parts include the:

* shoulders

* spine

* hips

* knees

The bottom line

The muscular system is a complex network of muscles vital to the human body. Muscles play a part in everything you do. They control your heartbeat and breathing, help digestion, and allow movement.

Muscles, like the rest of your body, thrive when you exercise and eat healthily. But too much exercise can cause sore muscles. Muscle pain can also be a sign that something more serious is affecting your body.

The following conditions can affect your muscular system:

* myopathy (muscle disease)

* muscular dystrophy

* multiple sclerosis (MS)

* Parkinson’s disease

* fibromyalgia

Talk to your doctor if you have one of these conditions. They can help you find ways to manage your health. It’s important to take care of your muscles so they stay healthy and strong.

Additional Information

The muscular system is an organ system consisting of skeletal, smooth, and cardiac muscle. It permits movement of the body, maintains posture, and circulates blood throughout the body. The muscular systems in vertebrates are controlled through the nervous system although some muscles (such as the cardiac muscle) can be completely autonomous. Together with the skeletal system in the human, it forms the musculoskeletal system, which is responsible for the movement of the body.

Types

There are three distinct types of muscle: skeletal muscle, cardiac or heart muscle, and smooth (non-striated) muscle. Muscles provide strength, balance, posture, movement, and heat for the body to keep warm.

There are approximately 640 muscles in an adult male human body. A kind of elastic tissue makes up each muscle, which consists of thousands, or tens of thousands, of small muscle fibers. Each fiber comprises many tiny strands called fibrils. Impulses from nerve cells control the contraction of each muscle fiber.

Skeletal

Skeletal muscle is a type of striated muscle, composed of muscle cells, called muscle fibers, which are in turn composed of myofibrils. Myofibrils are composed of sarcomeres, the basic building blocks of striated muscle tissue. Upon stimulation by an action potential, skeletal muscles perform a coordinated contraction by shortening each sarcomere. The best proposed model for understanding contraction is the sliding filament model of muscle contraction. Within the sarcomere, actin and myosin fibers overlap in a contractile motion towards each other. Myosin filaments have club-shaped myosin heads that project toward the actin filaments, and provide attachment points on binding sites for the actin filaments. The myosin heads move in a coordinated style; they swivel toward the center of the sarcomere, detach and then reattach to the nearest active site of the actin filament. This is called a ratchet type drive system.

This process consumes large amounts of adenosine triphosphate (ATP), the energy source of the cell. ATP binds to the cross-bridges between myosin heads and actin filaments. The release of energy powers the swiveling of the myosin head. When ATP is used, it becomes adenosine diphosphate (ADP), and since muscles store little ATP, they must continuously replace the discharged ADP with ATP. Muscle tissue also contains a stored supply of a fast-acting recharge chemical, creatine phosphate, which when necessary can assist with the rapid regeneration of ADP into ATP.
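
The two energy steps described above can be sketched in standard textbook notation. The symbols are not from the text itself: $\mathrm{P_i}$ stands for inorganic phosphate, PCr for creatine phosphate, and Cr for creatine. This is a simplified summary that ignores water, protons, and the enzymes involved.

$$
\mathrm{ATP} \;\longrightarrow\; \mathrm{ADP} + \mathrm{P_i} + \text{energy}
$$

$$
\mathrm{PCr} + \mathrm{ADP} \;\longrightarrow\; \mathrm{Cr} + \mathrm{ATP}
$$

The first line is the energy release that powers the swiveling of the myosin head. The second is the fast recharge step, in which stored creatine phosphate hands its phosphate group to ADP to regenerate ATP.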

Calcium ions are required for each cycle of the sarcomere. Calcium is released from the sarcoplasmic reticulum into the sarcomere when a muscle is stimulated to contract. This calcium uncovers the actin-binding sites. When the muscle no longer needs to contract, the calcium ions are pumped from the sarcomere and back into storage in the sarcoplasmic reticulum.

There are approximately 639 skeletal muscles in the human body.

Cardiac

Heart muscle is striated muscle but is distinct from skeletal muscle because the muscle fibers are laterally connected. Furthermore, just as with smooth muscles, their movement is involuntary. Heart muscle is controlled by the sinus node, which is influenced by the autonomic nervous system.

Smooth

Smooth muscle contraction is regulated by the autonomic nervous system, hormones, and local chemical signals, allowing for gradual and sustained contractions. This type of muscle tissue is also capable of adapting to different levels of stretch and tension, which is important for maintaining proper blood flow and the movement of materials through the digestive system.

Physiology:

Contraction

Neuromuscular junctions are the focal points where a motor neuron attaches to a muscle. Acetylcholine (a neurotransmitter used in skeletal muscle contraction) is released from the axon terminal of the nerve cell when an action potential reaches the microscopic junction called a synapse. A group of chemical messengers cross the synapse and stimulate the formation of electrical changes, which are produced in the muscle cell when the acetylcholine binds to receptors on its surface. Calcium is released from its storage area in the cell's sarcoplasmic reticulum. An impulse from a nerve cell causes calcium release and brings about a single, short muscle contraction called a muscle twitch. If there is a problem at the neuromuscular junction, a very prolonged contraction may occur, such as the muscle contractions that result from tetanus. Also, a loss of function at the junction can produce paralysis.

Skeletal muscles are organized into hundreds of motor units, each of which involves a motor neuron, attached by a series of thin finger-like structures called axon terminals. These attach to and control discrete bundles of muscle fibers. A coordinated and fine-tuned response to a specific circumstance will involve controlling the precise number of motor units used. While individual muscle units contract as a unit, the entire muscle can contract on a predetermined basis due to the structure of the motor unit. Motor unit coordination, balance, and control frequently come under the direction of the cerebellum of the brain. This allows for complex muscular coordination with little conscious effort, such as when one drives a car without thinking about the process.

Tendon

A tendon is a piece of connective tissue that connects a muscle to a bone.[8] When a muscle contracts, it pulls against the skeleton to create movement. A tendon connects this muscle to a bone, making this function possible.

Aerobic and anaerobic muscle activity

At rest, the body produces the majority of its ATP aerobically in the mitochondria without producing lactic acid or other fatiguing byproducts. During exercise, the method of ATP production varies depending on the fitness of the individual as well as the duration and intensity of exercise. At lower activity levels, when exercise continues for a long duration (several minutes or longer), energy is produced aerobically by combining oxygen with carbohydrates and fats stored in the body.

During activity that is higher in intensity, with possible duration decreasing as intensity increases, ATP production can switch to anaerobic pathways, such as the phosphagen system, which uses creatine phosphate, or anaerobic glycolysis. Aerobic ATP production is biochemically much slower and can only be used for long-duration, low-intensity exercise, but produces no fatiguing waste products that cannot be removed immediately from the sarcomere and the body, and it results in a much greater number of ATP molecules per fat or carbohydrate molecule. Aerobic training allows the oxygen delivery system to be more efficient, allowing aerobic metabolism to begin more quickly. Anaerobic ATP production produces ATP much faster and allows near-maximal intensity exercise, but also produces significant amounts of lactic acid, which render high-intensity exercise unsustainable for more than several minutes. The phosphagen system is also anaerobic. It allows for the highest levels of exercise intensity, but intramuscular stores of phosphocreatine are very limited and can only provide energy for exercises lasting up to ten seconds. Recovery is very quick, with full creatine stores regenerated within five minutes.

Clinical significance

Multiple diseases can affect the muscular system.

Muscular Dystrophy

Muscular dystrophy is a group of disorders associated with progressive muscle weakness and loss of muscle mass. These disorders are caused by mutations in a person’s genes. The disease affects between 19.8 and 25.1 per 100,000 person-years globally.

There are more than 30 types of muscular dystrophy. Depending on the type, muscular dystrophy can affect the patient's heart and lungs, and/or their ability to move, walk, and perform daily activities. The most common types include:

* Duchenne muscular dystrophy (DMD) and Becker muscular dystrophy (BMD)

* Myotonic dystrophy

* Limb-Girdle (LGMD)

* Facioscapulohumeral dystrophy (FSHD)

* Congenital dystrophy (CMD)

* Distal (DD)

* Oculopharyngeal dystrophy (OPMD)

* Emery-Dreifuss (EDMD).

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#2105 2024-03-28 23:08:49

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,762

Re: Miscellany

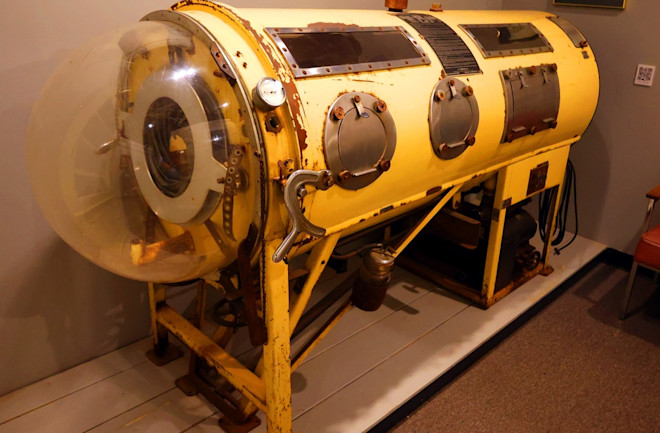

2107) Iron Lung

Gist

An iron lung is a device for artificial respiration in which rhythmic alternations in the air pressure in a chamber surrounding a patient's chest force air into and out of the lungs.

Summary

Can you name any truly life-changing inventions? There have been many over the course of human history. Just look at the field of medicine, for example. In this area alone, inventions like vaccines, anesthesia, and the stethoscope have changed the world.

Today’s Wonder of the Day is about another medical invention. Before the creation of respirators, it helped people who couldn’t breathe on their own. That’s right—today, we’re learning about the iron lung.

Iron lungs aren’t common today, but there was a time when they could be found in many hospitals. Invented in 1928, they offered treatment for severe cases of polio. This illness, which affected mostly children, could lead to life-threatening issues. It could even cause paralysis.

In these cases, patients could even lose the ability to breathe. This happened when the virus affected the diaphragm, a muscle below the lungs. Many of these patients regained the ability to breathe after a few weeks or months using an iron lung. Others relied on the machine for the rest of their lives.

How do iron lungs work? They rely on air pressure. To begin the treatment, patients are put on a sliding bed. A nurse or doctor pushes the bed into the machine, which is a large metal tube. Once patients are inside the lung, only their heads are outside of the tube. A rubber seal around their neck stops air from escaping the machine.

When the iron lung is switched on, it increases air pressure inside the tube. This causes the lungs to deflate, forcing the patient to exhale. Then, the air pressure decreases. This, in turn, leads the patient to inhale as their lungs inflate.

When patients begin treatment with an iron lung, they spend most of their time inside the machine. They may be taken out for mere minutes a day until they’re able to breathe on their own. As such, they rely on nurses, doctors, and other hospital staff to help them with everyday tasks like eating and changing clothes.

Does anyone still use an iron lung today? Yes, though they are very few. One example is Paul Alexander, who was diagnosed with polio in 1952 at age six. Today, Alexander can spend short periods of time, sometimes hours, outside of the lung. He has built a successful career as an attorney and lived a full life thanks to the breathing support he receives from this machine.

Thanks to vaccines, there hasn’t been a new case of polio in the U.S. since 1979. This has made it difficult for Alexander to find replacement parts for his iron lung. It’s also difficult to find people to repair the machine. Additionally, insurance companies no longer cover the repairs. As he relies on the machine for survival, Alexander must pay for its upkeep out-of-pocket.

Have you ever seen an iron lung in action? Today, hospitals opt for modern respirators in place of these devices. Still, the iron lung is to thank for the survival of many children who contracted polio in the 20th century. Can you think of any other medical inventions that have changed the world?

Details

An iron lung is a type of negative pressure ventilator (NPV), a mechanical respirator which encloses most of a person's body and varies the air pressure in the enclosed space to stimulate breathing. It assists breathing when muscle control is lost, or the work of breathing exceeds the person's ability. Need for this treatment may result from diseases including polio and botulism and certain poisons (for example, barbiturates, tubocurarine).

The use of iron lungs is largely obsolete in modern medicine, both because more modern breathing therapies have been developed and because polio has been eradicated in most of the world. However, in 2020, the COVID-19 pandemic revived some interest in the device as a cheap, readily producible substitute for positive-pressure ventilators, which were feared to be outnumbered by patients potentially needing temporary artificially assisted respiration.

The iron lung is a large horizontal cylinder designed to stimulate breathing in patients who have lost control of their respiratory muscles. The patient's head is exposed outside the cylinder, while the body is sealed inside. Air pressure inside the cylinder is cycled to facilitate inhalation and exhalation. Devices like the Drinker, Emerson, and Both respirators are examples of iron lungs, which can be manually or mechanically powered. Smaller versions, like the cuirass ventilator and jacket ventilator, enclose only the patient's torso. Breathing in humans occurs through negative pressure, where the rib cage expands and the diaphragm contracts, causing air to flow in and out of the lungs.

The concept of external negative pressure ventilation was introduced by John Mayow in 1670. The first widely used device was the iron lung, developed by Philip Drinker and Louis Shaw in 1928. Initially used for coal gas poisoning treatment, the iron lung gained fame for treating respiratory failure caused by polio in the mid-20th century. John Haven Emerson introduced an improved and more affordable version in 1931. The Both respirator, a cheaper and lighter alternative to the Drinker model, was invented in Australia in 1937. British philanthropist William Morris financed the production of the Both–Nuffield respirators, donating them to hospitals throughout Britain and the British Empire. During the polio outbreaks of the 1940s and 1950s, iron lungs filled hospital wards, assisting patients with paralyzed diaphragms in their recovery.

Polio vaccination programs and the development of modern ventilators have nearly eradicated the use of iron lungs in the developed world. Positive pressure ventilation systems, which blow air into the patient's lungs via intubation, have become more common than negative pressure systems like iron lungs. However, negative pressure ventilation is more similar to normal physiological breathing and may be preferable in rare conditions. As of 2024, after the death of Paul Alexander, only one patient in the U.S. is still using an iron lung. In response to the COVID-19 pandemic and the shortage of modern ventilators, some enterprises developed prototypes of new, easily producible versions of the iron lung.

Design and function

The iron lung is typically a large horizontal cylinder in which a person is laid, with their head protruding from a hole in the end of the cylinder, so that their full head (down to their voice box) is outside the cylinder, exposed to ambient air, and the rest of their body sealed inside the cylinder, where air pressure is continuously cycled up and down to stimulate breathing.

To cause the patient to inhale, air is pumped out of the cylinder, causing a slight vacuum, which causes the patient's chest and abdomen to expand (drawing air from outside the cylinder, through the patient's exposed nose or mouth, into their lungs). Then, for the patient to exhale, the air inside the cylinder is compressed slightly (or allowed to equalize to ambient room pressure), causing the patient's chest and abdomen to partially collapse, forcing air out of the lungs, as the patient exhales the breath through their exposed mouth and nose, outside the cylinder.
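The pressure cycle described above can be sketched as a toy model. This is only an illustration, not a description of any real machine: the ambient pressure constant, the size of the pressure swing, and the function names are all assumptions chosen for the example. It shows the one rule the text gives: chamber pressure below ambient drives inhalation, and pressure at (or above) ambient drives exhalation.

```python
AMBIENT_KPA = 101.3  # assumed ambient room pressure in kilopascals


def breath_phase(chamber_kpa, ambient_kpa=AMBIENT_KPA):
    """Return the breathing phase driven by the chamber pressure.

    Below ambient: the chest expands and air is drawn in ("inhale").
    At or above ambient: the chest recoils and air is pushed out ("exhale").
    """
    if chamber_kpa < ambient_kpa:
        return "inhale"
    return "exhale"


def simulate_cycle(swing_kpa=1.5, breaths=3):
    """List the phases for a number of breaths.

    swing_kpa is a hypothetical pressure drop below ambient used for the
    inhale half of each breath; the exhale half returns to ambient.
    """
    phases = []
    for _ in range(breaths):
        for chamber_kpa in (AMBIENT_KPA - swing_kpa, AMBIENT_KPA):
            phases.append(breath_phase(chamber_kpa))
    return phases


if __name__ == "__main__":
    print(simulate_cycle(breaths=2))
```

Each breath in the model is one low-pressure step followed by one return to ambient pressure, so the phases simply alternate between inhale and exhale.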

Examples of the device include the Drinker respirator, the Emerson respirator, and the Both respirator. Iron lungs can be either manually or mechanically powered, but are normally powered by an electric motor linked to a flexible pumping diaphragm (commonly opposite the end of the cylinder from the patient's head). Larger "room-sized" iron lungs were also developed, allowing for simultaneous ventilation of several patients (each with their heads protruding from sealed openings in the outer wall), with sufficient space inside for a nurse or a respiratory therapist to be inside the sealed room, attending the patients.

Smaller, single-patient versions of the iron lung include the so-called cuirass ventilator (named for the cuirass, a torso-covering body armor). The cuirass ventilator encloses only the patient's torso, or chest and abdomen, but otherwise operates essentially the same as the original, full-sized iron lung. A lightweight variation on the cuirass ventilator is the jacket ventilator or poncho or raincoat ventilator, which uses a flexible, impermeable material (such as plastic or rubber) stretched over a metal or plastic frame over the patient's torso.

Method and use