Math Is Fun Forum


#2276 2024-08-28 16:05:27

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,770

Re: Miscellany

2276) Pencil

Gist

A pencil is a writing or drawing implement with a solid pigment core in a protective casing that reduces the risk of core breakage and keeps it from marking the user's hand.

Pencils are available in diverse types, including traditional graphite pencils, versatile mechanical pencils, and vibrant colored pencils. They continually adjust to a wide array of artistic and functional needs.

The middle ground is referred to as HB. Softer lead gets a B grading, with a number to say how soft the lead is. B on its own is just a little softer than HB. 2B, 3B and 4B are increasingly soft. Further up the range, 9B is the very softest lead available, but so soft and crumbly that it's rarely used.

The degree of hardness of a pencil is printed on the pencil.

H stands for "hard". HB stands for "hard black", which means "medium hard".

Summary

Pencil, slender rod of a solid marking substance, such as graphite, enclosed in a cylinder of wood, metal, or plastic; used as an implement for writing, drawing, or marking. In 1565 the German-Swiss naturalist Conrad Gesner first described a writing instrument in which graphite, then thought to be a type of lead, was inserted into a wooden holder. Gesner was the first to describe graphite as a separate mineral, and in 1779 the Swedish chemist Carl Wilhelm Scheele showed it to be a form of carbon. The name graphite is from the Greek graphein, “to write.” The modern lead pencil became possible when an unusually pure deposit of graphite was discovered in 1564 in Borrowdale, Cumberland, England.

The hardness of writing pencils, which is related to the proportion of clay (used as a binder) to graphite in the lead, is usually designated by numbers from one, the softest, to four, the hardest. Artists’ drawing pencils range in a hardness designation generally given from 8B, the softest, to F, the hardest. The designation of the hardness of drafting pencils ranges from HB, the softest, to 10H, the hardest.
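The grading ranges described above can be placed on a single ordered scale. The following is a minimal sketch, assuming a combined scale running 9B ... 2B, B, HB, F, H, 2H ... 10H; the list `GRADES` and the helper names are illustrative and not part of any standard.

```python
# Illustrative hardness scale, ordered from softest to hardest.
# Assumption: one combined scale built from the ranges quoted in the text
# (9B ... B, HB, F, H ... 10H); individual manufacturers may differ.
GRADES = (
    [f"{n}B" for n in range(9, 1, -1)]   # 9B, 8B, ..., 2B
    + ["B", "HB", "F", "H"]
    + [f"{n}H" for n in range(2, 11)]    # 2H, 3H, ..., 10H
)


def hardness_rank(grade: str) -> int:
    """Return the position of a grade on the scale (0 = softest)."""
    return GRADES.index(grade.upper())


def is_softer(a: str, b: str) -> bool:
    """Return True if grade a is softer than grade b."""
    return hardness_rank(a) < hardness_rank(b)


if __name__ == "__main__":
    print(is_softer("2B", "HB"))
    print(sorted(["2H", "HB", "4B", "F"], key=hardness_rank))
```

Sorting by `hardness_rank` orders any set of grades from softest to hardest.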

The darkness of a pencil mark depends on the number of small particles of graphite deposited by the pencil. The particles are equally black (though graphite is never truly black) regardless of the hardness of the lead; only the size and number of particles determine the apparent degree of blackness of the pencil mark. The degree of hardness of a lead is a measure of how much the lead resists abrasion by the fibres of the paper.

Details

A pencil is a writing or drawing implement with a solid pigment core in a protective casing that reduces the risk of core breakage and keeps it from marking the user's hand.

Pencils create marks by physical abrasion, leaving a trail of solid core material that adheres to a sheet of paper or other surface. They are distinct from pens, which dispense liquid or gel ink onto the marked surface.

Most pencil cores are made of graphite powder mixed with a clay binder. Graphite pencils (traditionally known as "lead pencils") produce grey or black marks that are easily erased, but otherwise resistant to moisture, most solvents, ultraviolet radiation and natural aging. Other types of pencil cores, such as those of charcoal, are mainly used for drawing and sketching. Coloured pencils are sometimes used by teachers or editors to correct submitted texts, but are typically regarded as art supplies, especially those with cores made from wax-based binders that tend to smear when erasers are applied to them. Grease pencils have a softer, oily core that can leave marks on smooth surfaces such as glass or porcelain.

The most common pencil casing is thin wood, usually hexagonal in section, but sometimes cylindrical or triangular, permanently bonded to the core. Casings may be of other materials, such as plastic or paper. To use the pencil, the casing must be carved or peeled off to expose the working end of the core as a sharp point. Mechanical pencils have more elaborate casings which are not bonded to the core; instead, they support separate, mobile pigment cores that can be extended or retracted (usually through the casing's tip) as needed. These casings can be reloaded with new cores (usually graphite) as the previous ones are exhausted.

Types:

By marking material:

Graphite

Graphite pencils are the most common type of pencil, and are encased in wood. They are made of a mixture of clay and graphite and their darkness varies from light grey to black. Their composition allows for the smoothest strokes.

Solid

Solid graphite pencils are solid sticks of graphite and clay composite (as found in a 'graphite pencil'), about the diameter of a common pencil, which have no casing other than a wrapper or label. They are often called "woodless" pencils. They are used primarily for art purposes as the lack of casing allows for covering larger spaces more easily, creating different effects, and providing greater economy as the entirety of the pencil is used. They are available in the same darkness range as wood-encased graphite pencils.

Liquid

Liquid graphite pencils are pencils that write like pens. The technology was invented in 1955 by Scripto and Parker Pens. Scripto's liquid graphite formula came out about three months before Parker's liquid lead formula. To avoid a lengthy patent fight, the two companies agreed to share their formulas.

Charcoal

Charcoal pencils are made of charcoal and provide fuller blacks than graphite pencils, but tend to smudge easily and are more abrasive than graphite. Sepia-toned and white pencils are also available for duotone techniques.

Carbon pencils

Carbon pencils are generally made of a mixture of clay and lamp black, but are sometimes blended with charcoal or graphite depending on the darkness and manufacturer. They produce a fuller black than graphite pencils, are smoother than charcoal, and have minimal dust and smudging. They also blend very well, much like charcoal.

Colored

Colored pencils, or pencil crayons, have wax-like cores with pigment and other fillers. Several colors are sometimes blended together.

Grease

Grease pencils can write on virtually any surface (including glass, plastic, metal and photographs). The most commonly found grease pencils are encased in paper (Berol and Sanford Peel-off), but they can also be encased in wood (Staedtler Omnichrom).

Watercolor

Watercolor pencils are designed for use with watercolor techniques. Their cores can be diluted by water. The pencils can be used by themselves for sharp, bold lines. Strokes made by the pencil can also be saturated with water and spread with brushes.

By use:

Carpentry

Carpenter's pencils have two main properties: their shape prevents them from rolling, and their graphite is strong. The oldest surviving pencil is a German carpenter's pencil dating from the 17th century and now in the Faber-Castell collection.

Copying

Copying pencils (or indelible pencils) are graphite pencils with an added dye that creates an indelible mark. They were invented in the late 19th century for press copying and as a practical substitute for fountain pens. Their markings are often visually indistinguishable from those of standard graphite pencils, but when moistened their markings dissolve into a coloured ink, which is then pressed into another piece of paper. They were widely used until the mid-20th century, when ballpoint pens slowly replaced them. In Italy their use is still mandated by law for voting paper ballots in elections and referendums.

Eyeliner

Eyeliner pencils are used for make-up. Unlike traditional copying pencils, eyeliner pencils usually contain non-toxic dyes.

Erasable coloring

Unlike wax-based colored pencils, the erasable variants can be easily erased. Their main use is in sketching, where the objective is to create an outline using the same color that other media (such as wax pencils, or watercolor paints) would fill or when the objective is to scan the color sketch. Some animators prefer erasable color pencils as opposed to graphite pencils because they do not smudge as easily, and the different colors allow for better separation of objects in the sketch. Copy-editors find them useful too as markings stand out more than those of graphite, but can be erased.

Non-reproduction

Also known as non-photo blue pencils, the non-reproducing types make marks that are not reproducible by photocopiers (examples include "Copy-not" by Sanford and "Mars Non-photo" by Staedtler) or by whiteprint copiers (such as "Mars Non-Print" by Staedtler).

Stenography

Stenographer's pencils, also known as steno pencils, are expected to be very reliable, and their lead is break-proof. Nevertheless, steno pencils are sometimes sharpened at both ends to enhance reliability. They are round to avoid pressure pain during long texts.

Golf

Golf pencils are usually short (a common length is 9 cm or 3.5 in) and very cheap. They are also known as library pencils, as many libraries offer them as disposable writing instruments.

By shape:

* Triangular (more accurately a Reuleaux triangle)

* Hexagonal

* Round

* Bendable (flexible plastic)

By size:

Typical

A standard, hexagonal "#2 pencil" is cut to a hexagonal height of 6 mm (1⁄4 in), but the outer diameter is slightly larger (about 7 mm or 9⁄32 in). A standard, hexagonal "#2" pencil is 19 cm (7.5 in) long.

Biggest

On 3 September 2007, Ashrita Furman unveiled his giant US$20,000 pencil – 23 metres (76 ft) long, 8,200 kilograms (18,000 lb) with over 2,000 kilograms (4,500 lb) for the graphite centre – after three weeks of creation in August 2007 as a birthday gift for teacher Sri Chinmoy. It is longer than the 20-metre (65 ft) pencil outside the Malaysia HQ of stationers Faber-Castell.

By manufacture:

Mechanical:

Mechanical pencils use mechanical methods to push lead through a hole at the end. These can be divided into two groups: with propelling pencils an internal mechanism is employed to push the lead out from an internal compartment, while clutch pencils merely hold the lead in place (the lead is extended by releasing it and allowing some external force, usually gravity, to pull it out of the body). The erasers (sometimes replaced by a sharpener on pencils with larger lead sizes) are also removable (and thus replaceable), and usually cover a place to store replacement leads. Mechanical pencils are popular for their longevity and the fact that they may never need sharpening. Lead types are based on grade and size; with standard sizes being 2.00 mm (0.079 in), 1.40 mm (0.055 in), 1.00 mm (0.039 in), 0.70 mm (0.028 in), 0.50 mm (0.020 in), 0.35 mm (0.014 in), 0.25 mm (0.0098 in), 0.18 mm (0.0071 in), and 0.13 mm (0.0051 in) (ISO 9175-1)—the 0.90 mm (0.035 in) size is available, but is not considered a standard ISO size.

Pop a Point

Pioneered by Taiwanese stationery manufacturer Bensia Pioneer Industrial Corporation in the early 1970s, Pop a Point pencils are also known as Bensia pencils, stackable pencils or non-sharpening pencils. In this type of pencil, many short pencil tips are housed in a cartridge-style plastic holder. A blunt tip is removed by pulling it from the writing end of the body and re-inserting it into the open-ended bottom of the body, thereby pushing a new tip to the top.

Plastic

Invented by Harold Grossman for the Empire Pencil Company in 1967, plastic pencils were subsequently improved upon by Arthur D. Little for Empire from 1969 through the early 1970s; the plastic pencil was commercialised by Empire as the "EPCON" Pencil. These pencils were co-extruded, extruding a plasticised graphite mix within a wood-composite core.

Other aspects

By factory state: sharpened, unsharpened

By casing material: wood, paper, plastic

The P&P Office Waste Paper Processor recycles paper into pencils.

Health

Residual graphite from a pencil stick is not poisonous, and graphite is harmless if consumed.

Although lead has not been used for writing since antiquity, such as in Roman styli, lead poisoning from pencils was not uncommon. Until the middle of the 20th century the paint used for the outer coating could contain high concentrations of lead, and this could be ingested when the pencil was sucked or chewed.

Manufacture

The lead of the pencil is a mix of finely ground graphite and clay powders. Before the two substances are mixed, they are separately cleaned of foreign matter and dried in a manner that creates large square cakes. Once the cakes have fully dried, the graphite and the clay squares are mixed together using water. The amount of clay content added to the graphite depends on the intended pencil hardness (lower proportions of clay make the core softer), and the amount of time spent on grinding the mixture determines the quality of the lead. The mixture is then shaped into long spaghetti-like strings, straightened, dried, cut, and then tempered in a kiln. The resulting strings are dipped in oil or molten wax, which seeps into the tiny holes of the material and allows for the smooth writing ability of the pencil. A juniper or incense-cedar plank with several long parallel grooves is cut to fashion a "slat," and the graphite/clay strings are inserted into the grooves. Another grooved plank is glued on top, and the whole assembly is then cut into individual pencils, which are then varnished or painted. Many pencils feature an eraser on the top and so the process is usually still considered incomplete at this point. Each pencil has a shoulder cut on one end of the pencil to allow for a metal ferrule to be secured onto the wood. A rubber plug is then inserted into the ferrule for a functioning eraser on the end of the pencil.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#2277 2024-08-29 16:16:45

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,770

Re: Miscellany

2277) Fuel Gas

Gist

Fuel gas means gas generated at a petroleum refinery or petrochemical plant and that is combusted separately or in any combination with any type of gas.

Regular, Mid-Grade, Or Premium

* Regular gas has the lowest octane level, typically at 87.

* Mid-grade gas typically has an octane level of 89.

* Premium gas has the highest octane levels and can range from 91 to 94.

Summary

Substances containing ingredients that produce heat by reacting with oxygen are called fuels.

To obtain heat from a fuel, it is first given a small amount of heat from some other source in the presence of oxygen. A small part of the fuel then reacts with oxygen, and this reaction produces more heat than was initially supplied.

Part of the heat generated is radiated away, and the remaining part helps another part of the fuel react with oxygen. In this way heat is generated and the fuel is gradually consumed; this process is called the burning of the fuel.

Fuel Gas System

Fuel + Oxygen → Products + Heat

The amount of heat, in calories, produced by burning one gram of a fuel is called the calorific value of that fuel. The higher the calorific value of a fuel, the better the fuel is considered to be. The calorific value of carbon is 7830 calories per gram.
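The definition of calorific value can be turned into a short calculation: heat released equals mass burned times calorific value. The sketch below uses the figure for carbon quoted above; the function name and the 10 g sample mass are illustrative assumptions, and complete combustion is assumed.

```python
# Heat released = mass burned x calorific value.
# Assumption: complete combustion; the sample mass is a made-up example.
CAL_TO_JOULE = 4.184  # one thermochemical calorie in joules


def heat_released_cal(mass_g: float, calorific_value_cal_per_g: float) -> float:
    """Return the heat, in calories, from burning mass_g grams of fuel."""
    return mass_g * calorific_value_cal_per_g


if __name__ == "__main__":
    carbon_cv = 7830.0  # calories per gram, figure quoted in the text
    heat_cal = heat_released_cal(10.0, carbon_cv)
    heat_kj = heat_cal * CAL_TO_JOULE / 1000.0
    print(f"{heat_cal:.0f} cal, about {heat_kj:.1f} kJ")
```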

There are three types of fuel: solid, liquid and gas. Wood and coal are the main solid fuels. Among the liquid fuels, kerosene, petrol and diesel are the main ones. Oil gas, petrol gas, producer gas, water gas and coal gas are the major gaseous fuels. Gaseous fuel is more useful than solid and liquid fuels.

Details

Fuel gas is one of a number of fuels that under ordinary conditions are gaseous. Most fuel gases are composed of hydrocarbons (such as methane and propane), hydrogen, carbon monoxide, or mixtures thereof. Such gases are sources of energy that can be readily transmitted and distributed through pipes.

Fuel gas is contrasted with liquid fuels and solid fuels, although some fuel gases are liquefied for storage or transport (for example, autogas and liquefied petroleum gas). While their gaseous nature has advantages, avoiding the difficulty of transporting solid fuel and the dangers of spillage inherent in liquid fuels, it also has limitations. It is possible for a fuel gas to be undetected and cause a gas explosion. For this reason, odorizers are added to most fuel gases. The most common type of fuel gas in current use is natural gas.

Types of fuel gas

There are two broad classes of fuel gases, based not on their chemical composition, but their source and the way they are produced: those found naturally, and those manufactured from other materials.

Manufactured fuel gas

Manufactured fuel gases are those produced by chemical transformations of solids, liquids, or other gases. When obtained from solids, the conversion is referred to as gasification and the facility is known as a gasworks.

Manufactured fuel gases include:

* Coal gas, obtained from pyrolysis of coal

* Water gas, largely obsolete, obtained by passing steam over hot coke

* Producer gas, largely obsolete, obtained by passing steam and air over hot coke

* Syngas, major current technology, obtained mainly from natural gas

* Wood gas, obtained mainly from wood, once was popular and of relevance to biofuels

* Biogas, obtained from landfills

* Blast furnace gas

* Hydrogen from electrolysis or steam reforming

The coal gas made by the pyrolysis of coal contains impurities such as tar, ammonia and hydrogen sulfide. These must be removed and a substantial amount of plant may be required to do this.

Well or mine extracted fuel gases

In the 20th century, natural gas, composed primarily of methane, became the dominant source of fuel gas, as instead of having to be manufactured in various processes, it could be extracted from deposits in the earth. Natural gas may be combined with hydrogen to form a mixture known as HCNG.

Additional fuel gases obtained from natural gas or petroleum:

* Propane

* Butane

* Regasified liquefied petroleum gas

Natural gas is produced with water and gas condensate. These liquids have to be removed before the gas can be used as fuel. Even after treatment the gas will be saturated and liable to condense as liquid in the pipework. This can be reduced by superheating the fuel gas.

Uses of fuel gas

One of the earliest uses was gas lighting, which enabled the widespread adoption of streetlamps and the illumination of buildings in towns. Fuel gas was also used in gas burners, in particular the Bunsen burner used in laboratories. It may also be used in gas heaters, camping stoves, and even to power vehicles, as fuel gases have a high calorific value.

Fuel gas is widely used by industrial, commercial and domestic users. Industry uses fuel gas for heating furnaces, kilns, boilers and ovens and for space heating and drying. The electricity industry uses fuel gas to power gas turbines to generate electricity. The specification of fuel gas for gas turbines may be quite stringent. Fuel gas may also be used as a feedstock for chemical processes.

Fuel gas in the commercial sector is used for heating, cooking, baking and drying, and in the domestic sector for heating and cooking.

Currently, fuel gases, especially syngas, are used heavily for the production of ammonia for fertilizers and for the preparation of many detergents and specialty chemicals.

On an industrial plant fuel gas may be used to purge pipework and vessels to prevent the ingress of air. Any fuel gas surplus to needs may be disposed of by burning in the plant gas flare system.

For users that burn gas directly, fuel gas is supplied at a pressure of about 15 psi (1 barg). Gas turbines need a supply pressure of 250-350 psi (17-24 barg).
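The psi and bar figures above can be checked with a simple unit conversion. This is a minimal sketch, assuming the standard factor of 6894.757 Pa per psi; the function name is illustrative. Because both quoted values are gauge pressures, the same factor applies to them directly.

```python
# Convert pressures from psi to bar.
# Assumption: 1 psi = 6894.757 Pa and 1 bar = 100000 Pa.
PA_PER_PSI = 6894.757
PA_PER_BAR = 100_000.0


def psi_to_bar(psi: float) -> float:
    """Convert a pressure (or pressure difference) from psi to bar."""
    return psi * PA_PER_PSI / PA_PER_BAR


if __name__ == "__main__":
    for psi in (15, 250, 350):
        print(f"{psi} psi is about {psi_to_bar(psi):.1f} bar")
```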


#2278 2024-08-30 16:20:12

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,770

Re: Miscellany

2278) Petroleum

Gist

Petroleum is a naturally occurring liquid found beneath the earth's surface that can be refined into fuel. Petroleum is used as fuel to power vehicles, heating units, and machines, and can be converted into plastics.

Summary

Petroleum is a complex mixture of hydrocarbons that occur in Earth in liquid, gaseous, or solid form. A natural resource, petroleum is most often conceived of in its liquid form, commonly called crude oil, but, as a technical term, petroleum also refers to natural gas and the viscous or solid form known as bitumen, which is found in tar sands. The liquid and gaseous phases of petroleum constitute the most important of the primary fossil fuels.

Liquid and gaseous hydrocarbons are so intimately associated in nature that it has become customary to shorten the expression “petroleum and natural gas” to “petroleum” when referring to both. The first use of the word petroleum (literally “rock oil” from the Latin petra, “rock” or “stone,” and oleum, “oil”) is often attributed to a treatise published in 1556 by the German mineralogist Georg Bauer, known as Georgius Agricola. However, there is evidence that it may have originated with Persian philosopher-scientist Avicenna some five centuries earlier.

The burning of all fossil fuels (coal and biomass included) releases large quantities of carbon dioxide (CO2) into the atmosphere. The CO2 molecules do not allow much of the long-wave solar radiation absorbed by Earth’s surface to reradiate from the surface and escape into space. The CO2 absorbs upward-propagating infrared radiation and reemits a portion of it downward, causing the lower atmosphere to remain warmer than it would otherwise be. This phenomenon has the effect of enhancing Earth’s natural greenhouse effect, producing what scientists refer to as anthropogenic (human-generated) global warming. There is substantial evidence that higher concentrations of CO2 and other greenhouse gases have contributed greatly to the increase of Earth’s near-surface mean temperature since 1950.

Details

Petroleum or crude oil, also referred to as simply oil, is a naturally occurring yellowish-black liquid mixture of mainly hydrocarbons, and is found in geological formations. The name petroleum covers both naturally occurring unprocessed crude oil and petroleum products that consist of refined crude oil.

Petroleum is primarily recovered by oil drilling. Drilling is carried out after studies of structural geology, sedimentary basin analysis, and reservoir characterization. Unconventional reserves such as oil sands and oil shale exist.

Once extracted, oil is refined and separated, most easily by distillation, into innumerable products for direct use or use in manufacturing. Products include fuels such as gasoline (petrol), diesel, kerosene and jet fuel; asphalt and lubricants; chemical reagents used to make plastics; solvents, textiles, refrigerants, paint, synthetic rubber, fertilizers, pesticides, pharmaceuticals, and thousands of others. Petroleum is used in manufacturing a vast variety of materials essential for modern life, and it is estimated that the world consumes about 100 million barrels (16 million cubic metres) each day. Petroleum production can be extremely profitable and was critical to global economic development in the 20th century. Some countries, known as petrostates, gained significant economic and international power over their control of oil production and trade.

Petroleum exploitation can be damaging to the environment and human health. Extraction, refining and burning of petroleum fuels all release large quantities of greenhouse gases, so petroleum is one of the major contributors to climate change. Other negative environmental effects include direct releases, such as oil spills, as well as air and water pollution at almost all stages of use. These environmental effects have direct and indirect health consequences for humans. Oil has also been a source of internal and inter-state conflict, leading to both state-led wars and other resource conflicts. Production of petroleum is estimated to reach peak oil before 2035 as global economies lower dependencies on petroleum as part of climate change mitigation and a transition towards renewable energy and electrification. Oil has played a key role in industrialization and economic development.

Etymology

The word petroleum comes from Medieval Latin petroleum (literally 'rock oil'), which comes from Latin petra 'rock' and oleum 'oil'.

The origin of the term stems from monasteries in southern Italy where it was in use by the end of the first millennium as an alternative for the older term "naphtha". After that, the term was used in numerous manuscripts and books, such as in the treatise De Natura Fossilium, published in 1546 by the German mineralogist Georg Bauer, also known as Georgius Agricola. After the advent of the oil industry, during the second half of the 19th century, the term became commonly known for the liquid form of hydrocarbons.

Use

In terms of volume, most petroleum is converted into fuels for combustion engines. In terms of value, petroleum underpins the petrochemical industry, which includes many high value products such as pharmaceuticals and plastics.

Fuels and lubricants

Petroleum is used mostly, by volume, for refining into fuel oil and gasoline, both important primary energy sources. 84% by volume of the hydrocarbons present in petroleum is converted into fuels, including gasoline, diesel, jet, heating, and other fuel oils, and liquefied petroleum gas.

Due to its high energy density, easy transportability and relative abundance, oil has become the world's most important source of energy since the mid-1950s. Petroleum is also the raw material for many chemical products, including pharmaceuticals, solvents, fertilizers, pesticides, and plastics; the 16 percent not used for energy production is converted into these other materials. Petroleum is found in porous rock formations in the upper strata of some areas of the Earth's crust. There is also petroleum in oil sands (tar sands). Known oil reserves are typically estimated at 190 km³ (1.2 trillion (short scale) barrels) without oil sands, or 595 km³ (3.74 trillion barrels) with oil sands. Consumption is currently around 84 million barrels (13.4×10⁶ m³) per day, or 4.9 km³ per year, yielding a remaining oil supply of only about 120 years, if current demand remains static. More recent studies, however, put the number at around 50 years.
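The arithmetic behind the remaining-supply estimate can be sketched as follows, assuming one oil barrel is 0.158987 m³ and that demand stays constant at the quoted 84 million barrels per day; the function names are illustrative. On these assumptions, the figure of about 120 years matches the reserve estimate that includes oil sands, while the estimate without oil sands gives roughly 39 years.

```python
# Years of supply = reserves / annual consumption, at constant demand.
# Assumption: 1 barrel = 0.158987 cubic metres; 365 days per year.
BARREL_M3 = 0.158987
M3_PER_KM3 = 1e9


def annual_consumption_km3(barrels_per_day: float) -> float:
    """Convert a daily consumption in barrels to cubic kilometres per year."""
    return barrels_per_day * BARREL_M3 * 365 / M3_PER_KM3


def years_remaining(reserves_km3: float, barrels_per_day: float) -> float:
    """Return how long the reserves last if demand stays constant."""
    return reserves_km3 / annual_consumption_km3(barrels_per_day)


if __name__ == "__main__":
    demand = 84e6  # barrels per day, figure quoted in the text
    print(f"Annual use: {annual_consumption_km3(demand):.2f} km^3")
    print(f"Without oil sands: {years_remaining(190, demand):.0f} years")
    print(f"With oil sands: {years_remaining(595, demand):.0f} years")
```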

Closely related to fuels for combustion engines are lubricants, greases, and viscosity stabilizers. All are derived from petroleum.

Chemicals

Many pharmaceuticals are derived from petroleum, albeit via multistep processes. Modern medicine depends on petroleum as a source of building blocks, reagents, and solvents. Similarly, virtually all pesticides - insecticides, herbicides, etc. - are derived from petroleum. Pesticides have profoundly affected life expectancies by controlling disease vectors and by increasing yields of crops. Like pharmaceuticals, pesticides are in essence petrochemicals. Virtually all plastics and synthetic polymers are derived from petroleum, which is the source of monomers. Alkenes (olefins) are one important class of these precursor molecules.

Other derivatives

* Wax, used in the packaging of frozen foods, among other things; paraffin wax is derived from petroleum oil.

* Sulfur and its derivative sulfuric acid. Hydrogen sulfide is a product of sulfur removal from petroleum fractions. It is oxidized to elemental sulfur and then to sulfuric acid.

* Bulk tar and Asphalt

* Petroleum coke, used in speciality carbon products or as solid fuel.

Additional Information:

What Is Petroleum?

Petroleum, also called crude oil, is a naturally occurring liquid found beneath the earth’s surface that can be refined into fuel. A fossil fuel, petroleum is created by the decomposition of organic matter over time and used as fuel to power vehicles, heating units, and machines, and can be converted into plastics.

Because the majority of the world relies on petroleum for many goods and services, the petroleum industry is a major influence on world politics and the global economy.

Key Takeaways

* Petroleum is a naturally occurring liquid found beneath the earth’s surface that can be refined into fuel.

* Petroleum is used as fuel to power vehicles, heating units, and machines, and can be converted into plastics.

* The extraction and processing of petroleum and its availability are drivers of the world's economy and global politics.

* Petroleum is non-renewable energy, and other energy sources, such as solar and wind power, are becoming prominent.

Understanding Petroleum

The extraction and processing of petroleum and its availability is a driver of the world's economy and geopolitics. Many of the largest companies in the world are involved in the extraction and processing of petroleum or create petroleum-based products, including plastics, fertilizers, automobiles, and airplanes.

Petroleum is recovered by oil drilling and then refined and separated into different types of fuels. Petroleum contains hydrocarbons of different molecular weights and the denser the petroleum the more difficult it is to process and the less valuable it is.

Investing in petroleum means investing in oil directly, through the purchase of oil futures or options, or indirectly, through exchange-traded funds (ETFs) that invest in companies in the energy sector.

Petroleum companies are divided into upstream, midstream, and downstream, depending on the oil and gas company's position in the supply chain. Upstream oil and gas companies identify, extract, or produce raw materials.

Downstream oil companies engage in the post-production of crude oil and natural gas. Midstream oil and gas companies connect downstream and upstream companies by storing and transporting oil and other refined products.

Pros and Cons of Petroleum

Petroleum provides transportation, heat, light, and plastics to global consumers. It is easy to extract but is a non-renewable, limited supply source of energy. Petroleum has a high power ratio and is easy to transport.

However, the extraction process and the byproducts of the use of petroleum are toxic to the environment. Underwater drilling may cause leaks and fracking can affect the water table. Carbon released into the atmosphere by using petroleum increases temperatures and is associated with global warming.

Pros:

* Stable energy source

* Easily extracted

* Variety of uses

* High power ratio

* Easily transportable

Cons:

* Carbon emissions are toxic to the environment.

* Transportation can damage the environment.

* Extraction process is harmful to the environment.

The Petroleum Industry:

Classification

Oil is classified according to three criteria: the geographic location where it was drilled, its sulfur content, and its API gravity, which is a measure of its density.
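API gravity is conventionally computed from a liquid's specific gravity relative to water at 60 °F as 141.5 / SG - 131.5, so lighter oils have higher API gravity and water sits at 10. The sketch below applies that formula; the function names and the sample specific gravity of 0.85 are illustrative assumptions, not values from the text.

```python
# API gravity from specific gravity (relative to water at 60 degrees F).
# Assumption: the conventional formula API = 141.5 / SG - 131.5.
def api_gravity(specific_gravity: float) -> float:
    """Return API gravity in degrees for a given specific gravity."""
    return 141.5 / specific_gravity - 131.5


def specific_gravity_from_api(api: float) -> float:
    """Invert the formula: return specific gravity for a given API gravity."""
    return 141.5 / (api + 131.5)


if __name__ == "__main__":
    sample_sg = 0.85  # hypothetical crude oil, for illustration only
    print(f"SG {sample_sg} is about {api_gravity(sample_sg):.1f} degrees API")
    print(f"Water (SG 1.0): {api_gravity(1.0):.1f} degrees API")
```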

Reservoirs

Geologists, chemists, and engineers research geographical structures that hold petroleum using "seismic reflection." A reservoir's oil-in-place that can be extracted and refined is that reservoir's oil reserves.

As of 2022, the top ranking countries for total oil reserves include Venezuela with 303.8 billion barrels, Saudi Arabia with 297.5, and Canada holding 168.1.

Extracting

Drilling for oil includes developmental drilling, which takes place where oil reserves have already been found. Exploratory drilling is conducted to search for new reserves, and directional drilling is drilling vertically to a known source of oil and then steering the drill bit at an angle to reach additional resources.

Investing In Petroleum

The energy sector attracts investors who speculate on the demand for oil and fossil fuels and many oil and energy fund offerings consist of companies related to energy.

Mutual funds like Vanguard Energy Fund Investor Shares (VGENX) with holdings in ConocoPhillips, Shell, and Marathon Petroleum Corporation, and the Fidelity Select Natural Gas Fund (FSNGX), holding Enbridge and Hess, are two funds that invest in the energy sector and pay dividends.

Oil and gas exchange-traded funds (ETFs) offer investors more direct and easier access to the often-volatile energy market than many other alternatives. Three of the top-rated oil and gas ETFs for 2022 include the Invesco Dynamic Energy Exploration & Production ETF (PXE), First Trust Natural Gas ETF (FCG), and iShares U.S. Oil & Gas Exploration & Production ETF (IEO).

How Is Petroleum Formed?

Petroleum is a fossil fuel that was formed over millions of years through the transformation of dead organisms, such as algae, plants, and bacteria, that experienced high heat and pressure when trapped inside rock formations.

Is Petroleum Renewable?

Petroleum is not a renewable energy source. It is a fossil fuel, and the amount available is finite.

What Are Alternatives to Petroleum?

Alternatives include wind, solar, and biofuels. Wind power uses wind turbines to harness the power of the wind to create energy. Solar power uses the sun as an energy source, and biofuels use vegetable oils and animal fat as a power source.

What Are Classifications of Petroleum?

Unrefined petroleum classes include asphalt, bitumen, crude oil, and natural gas.

The Bottom Line

Petroleum is a fossil fuel that is used widely in the daily lives of global consumers. In its refined state, petroleum is used to create gasoline, kerosene, plastics, and other byproducts. Petroleum is a finite material and non-renewable energy source. Because of its potential to be harmful to the environment, alternative energy sources are being explored and implemented, such as solar and wind energy.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#2279 2024-08-31 00:23:33

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,770

Re: Miscellany

2279) Electric Vehicles

Gist

An EV's electric motor doesn't have to pressurize and ignite gasoline to move the car's wheels. Instead, it uses electromagnets inside the motor that are powered by the battery to generate rotational force. Inside the motor are two sets of magnets.

Here's a basic rundown of how electric cars work: an EV receives energy from a charging station and stores the energy in its battery. The battery gives power to the motor, which moves the wheels. Many electrical parts work together in the background to make this motion happen.

Summary

EVs are like automatic cars. They have a forward and reverse mode. When you place the vehicle in gear and press on the accelerator pedal, these things happen:

* Power is converted from the DC battery to AC for the electric motor

* The accelerator pedal sends a signal to the controller which adjusts the vehicle's speed by changing the frequency of the AC power from the inverter to the motor

* The motor connects and turns the wheels through a cog

* When the brakes are pressed or the car is decelerating, the motor becomes an alternator and produces power, which is sent back to the battery

AC/DC and electric cars

AC stands for Alternating Current. In AC, the current changes direction at a determined frequency, like the pendulum on a clock.

DC stands for Direct Current. In DC, the current flows in one direction only, from positive to negative.

Battery Electric Vehicles

The key components of a Battery Electric Vehicle are:

* Electric motor

* Inverter

* Battery

* Battery charger

* Controller

* Charging cable

Electric motor

You will find electric motors in everything from juicers and toothbrushes, washing machines and dryers, to robots. They are familiar, reliable and very durable. Electric vehicle motors use AC power.

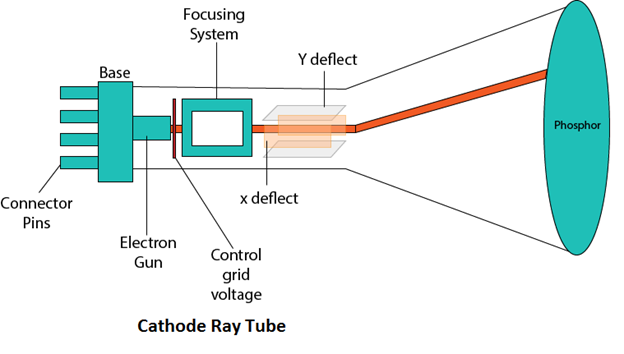

Inverter

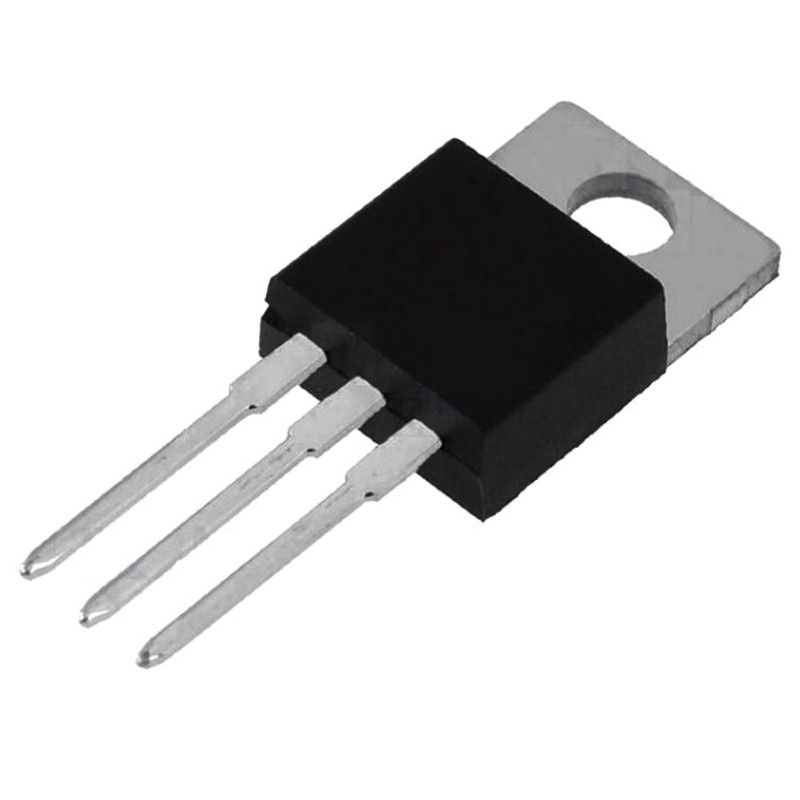

An inverter is a device that converts DC power to the AC power used in an electric vehicle motor. The inverter can change the speed at which the motor rotates by adjusting the frequency of the alternating current. It can also increase or decrease the power or torque of the motor by adjusting the amplitude of the signal.
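The link between AC frequency and motor speed can be sketched with the textbook synchronous-speed relation: rpm = 120 × frequency / number of poles. This Python sketch is only illustrative. The function name, the 4-pole motor, and the sample frequencies are assumptions, and it ignores slip, gearing, and everything else a real drivetrain controller handles.

```python
def synchronous_speed_rpm(frequency_hz: float, poles: int) -> float:
    """Synchronous speed of an AC motor's rotating field, in revolutions per minute."""
    if poles <= 0 or poles % 2 != 0:
        raise ValueError("poles must be a positive even number")
    return 120.0 * frequency_hz / poles


# Assumed example: a 4-pole motor fed at a few different inverter frequencies
for frequency in (25, 50, 100):
    rpm = synchronous_speed_rpm(frequency, poles=4)
    print(f"{frequency} Hz -> {rpm:.0f} rpm")
```

Doubling the frequency doubles the field speed, which is why the inverter can set motor speed by changing the frequency of the alternating current.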

Battery

An electric vehicle uses a battery to store electrical energy that is ready to use. A battery pack is made up of a number of cells that are grouped into modules. Once the battery has sufficient energy stored, the vehicle is ready to use.

Battery technology has improved hugely in recent years. Current EV batteries are lithium based. These have a very low rate of discharge. This means an EV should not lose charge if it isn't driven for a few days, or even weeks.

Battery charger

The battery charger converts the AC power available on our electricity network to DC power stored in a battery. It controls the voltage level of the battery cells by adjusting the rate of charge. It will also monitor the cell temperatures and control the charge to help keep the battery healthy.

Controller

The controller is like the brain of a vehicle, managing all of its parameters. It controls the rate of charge using information from the battery. It also translates pressure on the accelerator pedal to adjust speed in the motor inverter.

Charging cable

A charging cable for standard charging is supplied with and stored in the vehicle. It's used for charging at home or at standard public charge points. A fast charge point will have its own cable.

Details

An electric vehicle (EV) is a vehicle that uses one or more electric motors for propulsion. The vehicle can be powered by a collector system, with electricity from extravehicular sources, or can be powered autonomously by a battery or by converting fuel to electricity using a generator or fuel cells. EVs include road and rail vehicles, electric boats and underwater vessels, electric aircraft and electric spacecraft.

Early electric vehicles first came into existence in the late 19th century, when the Second Industrial Revolution brought forth electrification. Using electricity was among the preferred methods for motor vehicle propulsion as it provides a level of quietness, comfort and ease of operation that could not be achieved by the gasoline engine cars of the time, but range anxiety due to the limited energy storage offered by contemporary battery technologies hindered any mass adoption of private electric vehicles throughout the 20th century. Internal combustion engines (both gasoline and diesel engines) were the dominant propulsion mechanisms for cars and trucks for about 100 years, but electricity-powered locomotion remained commonplace in other vehicle types, such as overhead line-powered mass transit vehicles like electric trains, trams, monorails and trolley buses, as well as various small, low-speed, short-range battery-powered personal vehicles such as mobility scooters.

Hybrid electric vehicles, where electric motors are used as a supplementary propulsion to internal combustion engines, became more widespread in the late 1990s. Plug-in hybrid electric vehicles, where electric motors can be used as the predominant propulsion rather than a supplement, did not see any mass production until the late 2000s, and battery electric cars did not become practical options for the consumer market until the 2010s.

Progress in batteries, electric motors and power electronics has made electric cars more feasible than during the 20th century. As a means of reducing tailpipe emissions of carbon dioxide and other pollutants, and to reduce use of fossil fuels, government incentives are available in many areas to promote the adoption of electric cars and trucks.

History

Electric motive power started in 1827 when Hungarian priest Ányos Jedlik built the first crude but viable electric motor; the next year he used it to power a small model car. In 1835, Professor Sibrandus Stratingh of the University of Groningen, in the Netherlands, built a small-scale electric car, and sometime between 1832 and 1839, Robert Anderson of Scotland invented the first crude electric carriage, powered by non-rechargeable primary cells. American blacksmith and inventor Thomas Davenport built a toy electric locomotive, powered by a primitive electric motor, in 1835. In 1838, a Scotsman named Robert Davidson built an electric locomotive that attained a speed of four miles per hour (6 km/h). In England, a patent was granted in 1840 for the use of rails as conductors of electric current, and similar American patents were issued to Lilley and Colten in 1847.

The first mass-produced electric vehicles appeared in America in the early 1900s. In 1902, the Studebaker Automobile Company entered the automotive business with electric vehicles, though it also entered the gasoline vehicles market in 1904. However, with the advent of cheap assembly line cars by Ford Motor Company, the popularity of electric cars declined significantly.

Due to lack of electricity grids and the limitations of storage batteries at that time, electric cars did not gain much popularity; however, electric trains gained immense popularity due to their economies and achievable speeds. By the 20th century, electric rail transport became commonplace due to advances in the development of electric locomotives. Over time, the general-purpose commercial use of electric road vehicles was reduced to specialist roles such as platform trucks, forklift trucks, ambulances, tow tractors, and urban delivery vehicles like the iconic British milk float. For most of the 20th century, the UK was the world's largest user of electric road vehicles.

Electrified trains were used for coal transport, as the motors did not use the valuable oxygen in the mines. Switzerland's lack of natural fossil resources forced the rapid electrification of their rail network. One of the earliest rechargeable batteries – the nickel-iron battery – was favored by Edison for use in electric cars.

EVs were among the earliest automobiles, and before the preeminence of light, powerful internal combustion engines (ICEs), electric automobiles held many vehicle land speed and distance records in the early 1900s. They were produced by Baker Electric, Columbia Electric, Detroit Electric, and others, and at one point in history outsold gasoline-powered vehicles. In 1900, 28 percent of the cars on the road in the US were electric. EVs were so popular that even President Woodrow Wilson and his secret service agents toured Washington, D.C., in their Milburn Electrics, which covered 60–70 miles (100–110 km) per charge.

Most producers of passenger cars opted for gasoline cars in the first decade of the 20th century, but electric trucks were an established niche well into the 1920s. A number of developments contributed to a decline in the popularity of electric cars. Improved road infrastructure required a greater range than that offered by electric cars, and the discovery of large reserves of petroleum in Texas, Oklahoma, and California led to the wide availability of affordable gasoline/petrol, making internal combustion powered cars cheaper to operate over long distances. Electric vehicles were often marketed as a women's luxury car, which may have been a stigma among male consumers. Also, internal combustion powered cars became ever easier to operate thanks to the invention of the electric starter by Charles Kettering in 1912, which eliminated the need for a hand crank to start a gasoline engine, and the noise emitted by ICE cars became more bearable thanks to the use of the muffler, which Hiram Percy Maxim had invented in 1897. As roads were improved outside urban areas, electric vehicle range could not compete with the ICE. Finally, the initiation of mass production of gasoline-powered vehicles by Henry Ford in 1913 significantly reduced the cost of gasoline cars as compared to electric cars.

In the 1930s, National City Lines, which was a partnership of General Motors, Firestone, and Standard Oil of California, purchased many electric tram networks across the country to dismantle them and replace them with GM buses. The partnership was convicted of conspiring to monopolize the sale of equipment and supplies to their subsidiary companies, but was acquitted of conspiring to monopolize the provision of transportation services.

The Copenhagen Summit was held in 2009, amid severe observable climate change brought on by human-made greenhouse gas emissions. During the summit, more than 70 countries developed plans to eventually reach net zero. For many countries, adopting more EVs will help reduce the use of gasoline.

Experimentation

In January 1990, General Motors' president introduced the company's EV concept two-seater, the "Impact", at the Los Angeles Auto Show. That September, the California Air Resources Board mandated major-automaker sales of EVs, in phases starting in 1998. From 1996 to 1998 GM produced 1117 EV1s, 800 of which were made available through three-year leases.

Chrysler, Ford, GM, Honda, and Toyota also produced limited numbers of EVs for California drivers during this time period. In 2003, upon the expiration of GM's EV1 leases, GM discontinued them. The discontinuation has variously been attributed to:

* the auto industry's successful federal court challenge to California's zero-emissions vehicle mandate,

* a federal regulation requiring GM to produce and maintain spare parts for the few thousand EV1s and

* the success of the oil and auto industries' media campaign to reduce public acceptance of EVs.

A movie made on the subject in 2005–2006 was titled Who Killed the Electric Car? and released theatrically by Sony Pictures Classics in 2006. The film explores the roles of automobile manufacturers, the oil industry, the U.S. government, batteries, hydrogen vehicles, and the general public in limiting the deployment and adoption of this technology.

Ford released a number of their Ford Ecostar delivery vans into the market. Honda, Nissan and Toyota also repossessed and crushed most of their EVs, which, like the GM EV1s, had been available only by closed-end lease. After public protests, Toyota sold 200 of its RAV4 EVs; they later sold at over their original forty-thousand-dollar price. Later, BMW of Canada sold off a number of Mini EVs when their Canadian testing ended.

The production of the Citroën Berlingo Electrique stopped in September 2005. Zenn started production in 2006 but ended by 2009.

Reintroduction

During the late 20th and early 21st century, the environmental impact of the petroleum-based transportation infrastructure, along with the fear of peak oil, led to renewed interest in electric transportation infrastructure. EVs differ from fossil fuel-powered vehicles in that the electricity they consume can be generated from a wide range of sources, including fossil fuels, nuclear power, and renewables such as solar power and wind power, or any combination of those. Recent advancements in battery technology and charging infrastructure have addressed many of the earlier barriers to EV adoption, making electric vehicles a more viable option for a wider range of consumers.

The carbon footprint and other emissions of electric vehicles vary depending on the fuel and technology used for electricity generation. The electricity may be stored in the vehicle using a battery, flywheel, or supercapacitors. Vehicles using internal combustion engines usually only derive their energy from a single or a few sources, usually non-renewable fossil fuels. A key advantage of electric vehicles is regenerative braking, which recovers kinetic energy, typically lost during friction braking as heat, as electricity restored to the on-board battery.
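To give a feel for the energy involved in regenerative braking, the kinetic energy of a moving vehicle is ½ × mass × speed². The Python sketch below is a back-of-the-envelope estimate only. The function names, the 1,500 kg vehicle, the 100 km/h speed, and the 60% recovery efficiency are all assumptions for illustration, not figures from the article.

```python
def kinetic_energy_wh(mass_kg: float, speed_kmh: float) -> float:
    """Kinetic energy of a vehicle in watt-hours."""
    speed_ms = speed_kmh / 3.6           # convert km/h to m/s
    joules = 0.5 * mass_kg * speed_ms ** 2
    return joules / 3600.0               # 1 Wh = 3600 J


def recovered_energy_wh(mass_kg: float, speed_kmh: float, efficiency: float) -> float:
    """Energy returned to the battery when braking to a stop, for an assumed efficiency."""
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    return kinetic_energy_wh(mass_kg, speed_kmh) * efficiency


# Assumed example: 1,500 kg car braking from 100 km/h with 60% recovery
total = kinetic_energy_wh(1500, 100)
recovered = recovered_energy_wh(1500, 100, 0.6)
print(f"Kinetic energy: {total:.1f} Wh, recovered: {recovered:.1f} Wh")
```

With friction brakes alone, all of that kinetic energy would be lost as heat; regenerative braking returns a share of it to the battery.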


#2280 2024-08-31 22:07:14

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,770

Re: Miscellany

2280) Slipper / Flip-flops

Gist

A slipper is a kind of indoor shoe that slips easily on and off your foot. You may prefer to walk around barefoot unless it's really cold, in which case you wear slippers.

Slippers are cozy, and they're often warm too. A more old fashioned kind of slipper was a dress shoe that slipped on the foot, rather than being buckled or buttoned—like Cinderella's glass slipper. The word comes from the fact that you can slip a slipper on or off easily. It's related to the Old English slypescoh, literally "slip-shoe."

Summary

Slippers are a type of shoe, falling under the broader category of light footwear, that is easy to put on and take off and is intended to be worn indoors, particularly at home. They provide comfort and protection for the feet when walking indoors.

History

The recorded history of slippers can be traced back to the 12th century. In the West, the record can only be traced to 1478. The English word "slippers" (sclyppers) occurs from about 1478. English-speakers formerly also used the related term "pantofles" (from French pantoufle).

Slippers in China date from 4700 BCE; they were made of cotton or woven rush, had leather linings, and featured symbols of power, such as dragons.

Native American moccasins were also highly decorative. Such moccasins depicted nature scenes and were embellished with beadwork and fringing; their soft sure-footedness made them suitable for indoor use. Inuit and Aleut people made shoes from smoked hare-hide to protect their feet against the frozen ground inside their homes.

Fashionable Orientalism saw the introduction into the West of designs like the baboosh.

Victorian people needed such shoes to keep the dust and gravel outside their homes. For Victorian ladies slippers gave an opportunity to show off their needlepoint skills and to use embroidery as decoration.

Types

Types of slippers include:

* Open-heel slippers – usually made with a fabric upper layer that encloses the top of the foot and the toes, but leaves the heel open. These are often distributed in expensive hotels, included with the cost of the room.

* Closed slippers – slippers with a heel guard that prevents the foot from sliding out.

* Slipper boots – slippers meant to look like boots. Often favored by women, they are typically furry boots with a fleece or soft lining, and a soft rubber sole. Modeled after sheepskin boots, they may be worn outside.

* Sandal slippers – cushioned sandals with soft rubber or fabric soles, similar to Birkenstock's cushioned sandals.

* Evening slipper, also known as the "Prince Albert" slipper in reference to Albert, Prince Consort. It is made of velvet with leather soles and features a grosgrain bow or the wearer’s initials embroidered in gold.

Novelty animal-feet slippers

Some slippers are made to resemble something other than a slipper and are sold as a novelty item. The slippers are usually made from soft and colorful materials and may come in the shapes of animals, animal paws, vehicles, cartoon characters, etc.

Not all shoes with a soft fluffy interior are slippers. Any shoe with a rubber sole and laces is a normal outdoor shoe. In India, rubber chappals (flip-flops) are worn as indoor shoes.

In popular culture

The fictional character Cinderella is said to have worn glass slippers; in modern parlance, they would probably be called glass high heels. This motif was introduced in Charles Perrault's 1697 version of the fairy tale, "Cendrillon ou la petite pantoufle de verre" ("Cinderella, or The Little Glass Slipper"). For some years it was argued that this detail was a mistranslation and the slippers in the story were instead made of fur (French: vair), but this interpretation has since been discredited by folklorists.

A pair of ruby slippers worn by Judy Garland in The Wizard of Oz sold at Christie's in June 1988 for $165,000. The same pair was resold on May 24, 2000, for $666,000. On both occasions, they were the most expensive shoes from a film to be sold at auction.

In Hawaii and many islands of the Caribbean, "slippers" or "slippahs" is used to describe flip-flops.

The term "house shoes" (elided into how-shuze) is common in the American South.

Details

Flip-flops are a type of light sandal-like shoe, typically worn as a form of casual footwear. They consist of a flat sole held loosely on the foot by a Y-shaped strap known as a toe thong that passes between the first and second toes and around both sides of the foot. This style of footwear has been worn by people of many cultures throughout the world, originating as early as the ancient Egyptians in 1500 BC. In the United States the modern flip-flop may have had its design taken from the traditional Japanese zōri after World War II, as soldiers brought them back from Japan.

Flip-flops became a prominent unisex summer footwear starting in the 1960s.

"Flip-flop" etymology and other names

The term flip-flop has been used in American and British English since the 1960s to describe inexpensive footwear consisting of a flat base, typically rubber, and a strap with three anchor points: between the big and second toes, then bifurcating to anchor on both sides of the foot. "Flip-flop" may be an onomatopoeia of the sound made by the sandals when walking in them.

Flip-flops are also called thongs (sometimes pluggers, single- or double- depending on construction) in Australia, jandals (originally a trademarked name derived from "Japanese sandals") in New Zealand, and slops or plakkies in South Africa and Zimbabwe.

In the Philippines, they are called tsinelas.

In India, they are called chappals (a term which traditionally referred to leather slippers). The word is hypothesized to have come from the Telugu word ceppu, from Proto-Dravidian *keruppu, meaning "sandal".

In some parts of Latin America, flip-flops are called chanclas. Throughout the world, they are also known by a variety of other names, including slippers in the Bahamas, Hawai‘i, Jamaica and Trinidad and Tobago.

History

Thong sandals have been worn for thousands of years, as shown in images of them in ancient Egyptian murals from 4,000 BC. A pair found in Europe was made of papyrus leaves and dated to be approximately 1,500 years old. These early versions of flip-flops were made from a wide variety of materials. Ancient Egyptian sandals were made from papyrus and palm leaves. The Maasai people of Africa made them out of rawhide. In India, they were made from wood. In China and Japan, rice straw was used. The leaves of the sisal plant were used to make twine for sandals in South America, while the natives of Mexico used the yucca plant.

The Ancient Greeks and Romans wore versions of flip-flops as well. In Greek sandals, the toe strap was worn between the first and second toes, while Roman sandals had the strap between the second and third toes. These differ from the sandals worn by the Mesopotamians, with the strap between the third and fourth toes. In India, a related "toe knob" sandal was common, with no straps but instead a small knob located between the first and second toes. They are known as Padukas.

The modern flip-flop became popular in the United States as soldiers returning from World War II brought Japanese zōri with them. It caught on in the 1950s during the postwar boom and after the end of hostilities of the Korean War. As they became adopted into American popular culture, the sandals were redesigned and changed into the bright colors that dominated 1950s design. They quickly became popular due to their convenience and comfort, and were popular in beach-themed stores and as summer shoes. During the 1960s, flip-flops became firmly associated with the beach lifestyle of California. As such, they were promoted as primarily a casual accessory, typically worn with shorts, bathing suits, or summer dresses. As they became more popular, some people started wearing them for dressier or more formal occasions.

In 1962, Alpargatas S.A. marketed a version of flip-flops known as Havaianas in Brazil. By 2010, more than 150 million pairs of Havaianas were produced each year. By 2019, production topped 200 million pairs per year. Prices range from under $5 for basics to more than $50 for high-end fashion models.

Flip-flops quickly became popular as casual footwear of young adults. Girls would often decorate their flip-flops with metallic finishes, charms, chains, beads, rhinestones, or other jewelry. Modern flip-flops are available in leather, suede, cloth or synthetic materials such as plastic. Platform and high-heeled variants of the sandals began to appear in the 1990s, followed in the late 2010s by kitten-heeled "kit-flops".

In the U.S., flip-flops with college colors and logos became common for fans to wear to intercollegiate games. In 2011, while vacationing in his native Hawaii, Barack Obama became the first President of the United States to be photographed wearing a pair of flip-flops. The Dalai Lama of Tibet is also a frequent wearer of flip-flops and has met with several U.S. presidents, including George W. Bush and Barack Obama, while wearing the sandals.

While exact sales figures for flip-flops are difficult to obtain due to the large number of stores and manufacturers involved, the Atlanta-based company Flip Flop Shops claimed that the shoes were responsible for a $20 billion industry in 2009. Furthermore, sales of flip-flops exceeded those of sneakers for the first time in 2006. If these figures are accurate, it is remarkable considering the low cost of most flip-flops.

Design and custom

The modern flip-flop has a straightforward design, consisting of a thin sole with two straps running in a Y shape from the sides of the foot to the gap between the big toe and the one beside it. Flip-flops are made from a wide variety of materials, as were the ancient thong sandals. The modern sandals are made of more modern materials, such as rubber, foam, plastic, leather, suede, and even fabric. Flip-flops made of polyurethane have caused some environmental concerns; because polyurethane is a number 7 resin, they can't be easily discarded, and they persist in landfills for a very long time. In response to these concerns, some companies have begun selling flip-flops made from recycled rubber, such as that from used bicycle tires, or even hemp, and some offer a recycling program for used flip flops.

Because of the strap between the toes, flip-flops are typically not worn with socks. In colder weather, however, some people wear flip-flops with toe socks or merely pull standard socks forward and bunch them up between the toes. The Japanese commonly wear tabi, a type of sock with a single slot for the thong, with their zōri.

Flip-flop health issues

Flip-flops provide the wearer with some mild protection from hazards on the ground, such as sharp rocks, splintery wooden surfaces, hot sand at the beach, broken glass, or even fungi and wart-causing viruses in locker rooms or community pool surfaces. However, walking for long periods in flip-flops can result in pain in the feet, ankles and lower legs or tendonitis.

The flip-flop straps may cause frictional issues, such as rubbing during walking, resulting in blisters, and the open-toed design may result in stubbed or even broken toes. Particularly, individuals with flat feet or other foot issues are advised to wear a shoe or sandal with better support.

The American Podiatric Medical Association strongly recommends that people not play sports in flip-flops, or do any type of yard work with or without power tools, including cutting the grass, when they wear these shoes. There are reports of people who ran or jumped in flip-flops and suffered sprained ankles, fractures, and severe ligament injuries that required surgery.

Because they provide almost no protection from the sun, on a part of the body more heavily exposed and where sunscreen can more easily be washed off, sunburn can be a risk for flip-flop wearers.

Flip-flops in popular culture

For many Latin Americans, la chancla (the flip-flop), held or thrown, is known to be used as a tool of corporal punishment by mothers, similar to the use of slippers for the same purpose in Europe. Poor conduct in public may elicit being struck on the head with a flip-flop. The flip-flop may also be thrown at a misbehaving child. For many children, even the threat of the mother reaching down to take off a flip-flop and hold it in her raised hand is considered enough to correct their behaviour. The notoriety of the practice has become an Internet meme among Latin Americans and Hispanic and Latino immigrants to the United States. In recent years, the practice has been increasingly condemned as physically abusive. One essay, "The Meaning of Chancla: Flip Flops and Discipline", seeks to end "chancla culture" in disciplining children.

In India, a chappal is traditionally a leather slipper, but the term has also come to include flip-flops. Throwing a chappal became a video trope, the "flying chappal," and "Flying chappal received" became an expression used by an adult to acknowledge that they had been verbally chastised by their parents or other adults.

Flip-flops are "tsinelas" in the Philippines, derived from the Spanish "chinela" (for slipper), and are used to discipline children, but with no mention of throwing. Children also play Tumbang preso, which involves trying to knock over a can with thrown flip-flops.

When the Los Angeles–based Angel City FC and San Diego Wave FC joined the National Women's Soccer League in 2022, a leader in an Angel City supporters' group called the new regional rivalry La Chanclásico as a nod to the region's Hispanic heritage. The rivalry name combines chancla with clásico ("classic"), used in Spanish to describe many sports rivalries. The Chanclásico name quickly caught on with both fanbases, and before the first game between the teams, the aforementioned Angel City supporter created a rivalry trophy consisting of a flip-flop mounted on a trophy base and covered with gold spray paint. The rivalry name was effectively codified via a tweet from Wave and US national team star Alex Morgan.

As part of Q150 celebrations in 2009 celebrating the first 150 years of Queensland, Australia, the Queensland Government published a list of 150 cultural icons of Queensland, representing the people, places, events, and things that were significant to Queensland's first 150 years. Thongs (as they are known in Queensland) were among the Q150 Icons.


#2281 2024-09-01 16:05:55

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,770

Re: Miscellany

2281) Pediatrics

Gist

Pediatrics is the branch of medicine dealing with the health and medical care of infants, children, and adolescents from birth up to the age of 18. The word “paediatrics” means “healer of children”; it is derived from two Greek words: (pais = child) and (iatros = doctor or healer).

Summary

Pediatrics is the branch of medicine dealing with the health and medical care of infants, children, and adolescents from birth up to the age of 18. The word “paediatrics” means “healer of children”; it is derived from two Greek words: (pais = child) and (iatros = doctor or healer). Paediatrics is a relatively new medical specialty, developing only in the mid-19th century. Abraham Jacobi (1830–1919) is known as the father of paediatrics.

What does a pediatrician do?

A paediatrician is a child's physician who provides not only medical care for children who are acutely or chronically ill but also preventive health services for healthy children. A paediatrician manages physical, mental, and emotional well-being of the children under their care at every stage of development, in both sickness and health.

Aims of pediatrics

The aims of paediatrics are to reduce infant and child mortality, control the spread of infectious disease, promote healthy lifestyles for a long disease-free life, and help ease the problems of children and adolescents with chronic conditions.

Paediatricians diagnose and treat several conditions among children, including:

* injuries

* infections

* genetic and congenital conditions

* cancers

* organ diseases and dysfunctions

Paediatrics is concerned not only with the immediate management of the ill child but also with long-term effects on quality of life, disability, and survival. Paediatricians are involved with the prevention, early detection, and management of problems including:

* developmental delays and disorders

* behavioral problems

* functional disabilities

* social stresses

* mental disorders including depression and anxiety disorders

Collaboration with other specialists

Paediatrics is a collaborative specialty. Paediatricians need to work closely with other medical specialists and healthcare professionals and subspecialists of paediatrics to help children with problems.

Details

Pediatrics (American English), also spelled paediatrics or pædiatrics (British English), is the branch of medicine that involves the medical care of infants, children, adolescents, and young adults. In the United Kingdom, pediatrics covers many young people until the age of 18. The American Academy of Pediatrics recommends people seek pediatric care through the age of 21, but some pediatric subspecialists continue to care for adults up to 25. Worldwide age limits of pediatrics have been trending upward year after year. A medical doctor who specializes in this area is known as a pediatrician, or paediatrician. The word pediatrics and its cognates mean "healer of children", derived from the two Greek words: παῖς (pais "child") and ἰατρός (iatros "doctor, healer"). Pediatricians work in clinics, research centers, universities, general hospitals and children's hospitals, including those who practice pediatric subspecialties (e.g. neonatology requires resources available in a NICU).

History

The earliest mentions of child-specific medical problems appear in the Hippocratic Corpus, published in the fifth century B.C., and the famous Sacred Disease. These publications discussed topics such as childhood epilepsy and premature births. From the first to fourth centuries A.D., the Greek philosophers and physicians Celsus, Soranus of Ephesus, Aretaeus, Galen, and Oribasius also discussed specific illnesses affecting children in their works, such as rashes, epilepsy, and meningitis. Hippocrates, Aristotle, Celsus, Soranus, and Galen already understood the differences in growing and maturing organisms that necessitated different treatment: Ex toto non sic pueri ut viri curari debent ("In general, boys should not be treated in the same way as men"). Some of the oldest traces of pediatrics can be found in Ancient India, where children's doctors were called kumara bhrtya.

Even though some pediatric works existed during this time, they were scarce and rarely published due to a lack of knowledge in pediatric medicine. Sushruta Samhita, an ayurvedic text composed during the sixth century BCE, contains text about pediatrics. Another ayurvedic text from this period is Kashyapa Samhita. A second-century AD manuscript by the Greek physician and gynecologist Soranus of Ephesus dealt with neonatal pediatrics. The Byzantine physicians Oribasius, Aëtius of Amida, Alexander Trallianus, and Paulus Aegineta contributed to the field. The Byzantines also built brephotrophia (crèches). Islamic Golden Age writers served as a bridge for Greco-Roman and Byzantine medicine and added ideas of their own, especially Haly Abbas, Yahya Serapion, Abulcasis, Avicenna, and Averroes. The Persian philosopher and physician al-Razi (865–925), sometimes called the father of pediatrics, published a monograph on pediatrics titled Diseases in Children. Also among the first books about pediatrics was Libellus [Opusculum] de aegritudinibus et remediis infantium (1472; "Little Book on Children's Diseases and Treatment"), by the Italian pediatrician Paolo Bagellardo. Next came Bartholomäus Metlinger's Ein Regiment der Jungerkinder (1473), an untitled Buchlein, or Latin compendium, by Cornelius Roelans (1450–1525) from 1483, and Heinrich von Louffenburg's (1391–1460) Versehung des Leibs, written in 1429 and published in 1491. Together these form the Pediatric Incunabula, four great medical treatises on children's physiology and pathology.

While more information about childhood diseases became available, there was little evidence that children received the same kind of medical care that adults did. It was during the seventeenth and eighteenth centuries that medical experts started offering specialized care for children. The Swedish physician Nils Rosén von Rosenstein (1706–1773) is considered to be the founder of modern pediatrics as a medical specialty, while his work The diseases of children, and their remedies (1764) is considered to be "the first modern textbook on the subject". However, it was not until the nineteenth century that medical professionals acknowledged pediatrics as a separate field of medicine. The first pediatric-specific publications appeared between the 1790s and the 1920s.

Etymology

The term pediatrics was first introduced in English in 1859 by Abraham Jacobi. In 1860, he became "the first dedicated professor of pediatrics in the world." Jacobi is known as the father of American pediatrics because of his many contributions to the field. He received his medical training in Germany and later practiced in New York City.

The first generally accepted pediatric hospital is the Hôpital des Enfants Malades (French: Hospital for Sick Children), which opened in Paris in June 1802 on the site of a previous orphanage. From its beginning, this famous hospital accepted patients up to the age of fifteen years, and it continues to this day as the pediatric division of the Necker-Enfants Malades Hospital, created in 1920 by merging with the nearby Necker Hospital, founded in 1778.

In other European countries, the Charité (a hospital founded in 1710) in Berlin established a separate Pediatric Pavilion in 1830, followed by similar institutions at Saint Petersburg in 1834, and at Vienna and Breslau (now Wrocław), both in 1837. In 1852 Britain's first pediatric hospital, the Hospital for Sick Children, Great Ormond Street, was founded by Charles West. The first children's hospital in Scotland opened in 1860 in Edinburgh. In the US, the first similar institutions were the Children's Hospital of Philadelphia, which opened in 1855, and then Boston Children's Hospital (1869). Subspecialties in pediatrics were created at the Harriet Lane Home at Johns Hopkins by Edwards A. Park.

Differences between adult and pediatric medicine

The body size differences are paralleled by maturation changes. The smaller body of an infant or neonate is substantially different physiologically from that of an adult. Congenital defects, genetic variance, and developmental issues are of greater concern to pediatricians than they often are to adult physicians. A common adage is that children are not simply "little adults". The clinician must take into account the immature physiology of the infant or child when considering symptoms, prescribing medications, and diagnosing illnesses.

Pediatric physiology directly impacts the pharmacokinetic properties of drugs that enter the body. The absorption, distribution, metabolism, and elimination of medications differ between developing children and grown adults. Despite completed studies and reviews, continual research is needed to better understand how these factors should affect the decisions of healthcare providers when prescribing and administering medications to the pediatric population.

Absorption

Many drug absorption differences between pediatric and adult populations revolve around the stomach. Neonates and young infants have increased stomach pH due to decreased acid secretion, thereby creating a more basic environment for drugs that are taken by mouth. Acid is essential to degrading certain oral drugs before systemic absorption. Therefore, the absorption of these drugs in children is greater than in adults due to decreased breakdown and increased preservation in a less acidic gastric space.

Children also have slower gastric emptying, which slows the rate of drug absorption.

Drug absorption also depends on specific enzymes that come in contact with the oral drug as it travels through the body. The supply of these enzymes increases as children continue to develop their gastrointestinal tract. Pediatric patients have underdeveloped proteins, which leads to decreased metabolism and increased serum concentrations of specific drugs. However, prodrugs experience the opposite effect because enzymes are necessary for allowing their active form to enter systemic circulation.

Distribution

The percentage of total body water and the extracellular fluid volume both decrease as children grow and develop. Pediatric patients thus have a larger volume of distribution than adults, which directly affects the dosing of hydrophilic drugs such as beta-lactam antibiotics like ampicillin. These drugs are therefore administered at greater weight-based doses or with adjusted dosing intervals in children to account for this key difference in body composition.

Infants and neonates also have fewer plasma proteins. Thus, highly protein-bound drugs have fewer opportunities for protein binding, leading to increased distribution.
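The link between volume of distribution and weight-based dosing can be sketched with the standard one-compartment relationship, dose = volume of distribution × target concentration. The short Python sketch below assumes complete bioavailability and a single compartment; the function name and every number in it are hypothetical, chosen only to make the arithmetic visible, and are not taken from the text above or from any dosing reference.

```python
def loading_dose_mg(weight_kg, vd_l_per_kg, target_mg_per_l):
    """Dose needed to reach a target plasma concentration.

    Assumes a one-compartment model with complete bioavailability,
    so dose = volume of distribution x target concentration.
    """
    return weight_kg * vd_l_per_kg * target_mg_per_l


# Hypothetical values for illustration only (not clinical figures).
target = 10.0          # mg/L, the same target concentration for both patients
infant_weight = 5.0    # kg
adult_weight = 70.0    # kg

# The infant is given a larger volume of distribution per kilogram.
infant_dose = loading_dose_mg(infant_weight, vd_l_per_kg=0.6, target_mg_per_l=target)
adult_dose = loading_dose_mg(adult_weight, vd_l_per_kg=0.3, target_mg_per_l=target)

# Per-kilogram doses: the infant's is higher even though the total dose is smaller.
print("infant mg/kg:", infant_dose / infant_weight)
print("adult mg/kg:", adult_dose / adult_weight)
```

Under these assumptions the infant needs a smaller total dose but a larger dose per kilogram, which is the sense in which hydrophilic drugs are given at greater weight-based doses in children.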

Metabolism

Drug metabolism primarily occurs via enzymes in the liver and can vary according to which specific enzymes are affected in a specific stage of development. Phase I and Phase II enzymes have different rates of maturation and development, depending on their specific mechanism of action (e.g. oxidation, hydrolysis, acetylation, methylation). Enzyme capacity, clearance, and half-life are all factors that contribute to metabolism differences between children and adults. Drug metabolism can even differ within the pediatric population, separating neonates and infants from young children.

Elimination

Drug elimination is primarily facilitated via the liver and kidneys. In infants and young children, the larger relative size of their kidneys leads to increased renal clearance of medications that are eliminated through urine. In preterm neonates and infants, their kidneys are slower to mature and thus are unable to clear as much drug as fully developed kidneys. This can cause unwanted drug build-up, which is why it is important to consider lower doses and greater dosing intervals for this population. Diseases that negatively affect kidney function can also have the same effect and thus warrant similar considerations.
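Why reduced clearance calls for longer dosing intervals can be seen from the standard first-order relationship, half-life = ln(2) × volume of distribution / clearance. The Python sketch below assumes first-order elimination from a single compartment; the function name and the numbers are hypothetical and serve only to show the direction of the effect.

```python
import math


def half_life_hours(vd_l, clearance_l_per_h):
    """Elimination half-life under first-order, one-compartment kinetics."""
    return math.log(2) * vd_l / clearance_l_per_h


# Hypothetical values for illustration only (not clinical figures).
vd = 10.0                   # L, held the same in both cases
mature_clearance = 2.0      # L/h, fully developed kidneys
immature_clearance = 0.5    # L/h, kidneys that clear a quarter as much

mature_t_half = half_life_hours(vd, mature_clearance)
immature_t_half = half_life_hours(vd, immature_clearance)

# With a quarter of the clearance, the drug takes four times as long to halve.
print("half-life ratio:", immature_t_half / mature_t_half)
```

Because half-life scales inversely with clearance, a drug given at an unchanged interval would accumulate in the lower-clearance case, which is the reasoning behind lower doses and greater dosing intervals.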

Pediatric autonomy in healthcare

A major difference between the practice of pediatric and adult medicine is that children, in most jurisdictions and with certain exceptions, cannot make decisions for themselves. The issues of guardianship, privacy, legal responsibility, and informed consent must always be considered in every pediatric procedure. Pediatricians often have to treat the parents and, sometimes, the family, rather than just the child. Adolescents are in their own legal class, having rights to their own health care decisions in certain circumstances. The concept of legal consent combined with the non-legal consent (assent) of the child when considering treatment options, especially in the face of conditions with poor prognosis or complicated and painful procedures or surgeries, means the pediatrician must take into account the desires of many people, in addition to those of the patient.

History of pediatric autonomy

The term autonomy is traceable to ethical theory and law, where it states that autonomous individuals can make decisions based on their own logic. Hippocrates was the first to use the term in a medical setting. He created a code of ethics for doctors called the Hippocratic Oath that highlighted the importance of putting patients' interests first, making autonomy for patients a top priority in health care.

In ancient times, society did not view pediatric medicine as essential or scientific. Experts considered professional medicine unsuitable for treating children. Children also had no rights. Fathers regarded their children as property, so their children's health decisions were entrusted to them. As a result, mothers, midwives, "wise women", and general practitioners treated the children instead of doctors. Since mothers could not rely on professional medicine to take care of their children, they developed their own methods, such as using alkaline soda ash to remove the vernix at birth and treating teething pain with opium or wine. The absence of proper pediatric care, rights, and laws in health care to prioritize children's health led to many of their deaths. Ancient Greeks and Romans sometimes even killed healthy female babies and infants with deformities since they had no adequate medical treatment and no laws prohibiting infanticide.