Math Is Fun Forum

#2326 2024-09-29 00:10:34

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,012

Re: Miscellany

2326) Sand clock

Gist

An hourglass (or sandglass, sand timer, or sand clock) is a device used to measure the passage of time. It comprises two glass bulbs connected vertically by a narrow neck that allows a regulated flow of a substance (historically sand) from the upper bulb to the lower one due to gravity.

A sand clock works on the principle that all the sand from the upper chamber falls into the lower chamber in a fixed amount of time.

Which country invented sand clock?

Often referred to as the 'sand clock', the hourglass is not just a pretty ancient ornament tucked away on a modern shelf. It is often said to have been invented in the 8th century by a French monk called Liutprand, although no surviving records confirm this, and it was used as a practical timekeeping device.

Summary

The hourglass is an early device for measuring intervals of time. It is also known as a sandglass, or as a log glass when used in conjunction with the common log for ascertaining the speed of a ship. It consists of two pear-shaped bulbs of glass, united at their apexes and having a minute passage formed between them. A quantity of sand (or occasionally mercury) is enclosed in the bulbs, and the size of the passage is so proportioned that this medium will completely run through from one bulb to the other in the time it is desired to measure (e.g., an hour or a minute). Instruments of this kind, which have no great pretensions to accuracy, were formerly common in churches.

Details

An hourglass (or sandglass, sand timer, or sand clock) is a device used to measure the passage of time. It comprises two glass bulbs connected vertically by a narrow neck that allows a regulated flow of a substance (historically sand) from the upper bulb to the lower one due to gravity. Typically, the upper and lower bulbs are symmetric so that the hourglass will measure the same duration regardless of orientation. The specific duration of time a given hourglass measures is determined by factors including the quantity and coarseness of the particulate matter, the bulb size, and the neck width.

Depictions of an hourglass as a symbol of the passage of time are found in art, especially on tombstones or other monuments, from antiquity to the present day. The form of a winged hourglass has been used as a literal depiction of the Latin phrase tempus fugit ("time flies").

History:

Antiquity

The origin of the hourglass is unclear. Its predecessor the clepsydra, or water clock, is known to have existed in Babylon and Egypt as early as the 16th century BCE.

Middle Ages

There are no records of the hourglass existing in Europe prior to the Late Middle Ages; the first documented example dates from the 14th century, a depiction in the 1338 fresco Allegory of Good Government by Ambrogio Lorenzetti.

Use of the marine sandglass has been recorded since the 14th century. The written records about it were mostly from logbooks of European ships. In the same period it appears in other records and lists of ships' stores. The earliest recorded reference that can be said with certainty to refer to a marine sandglass dates from c. 1345, in a receipt of Thomas de Stetesham, clerk of the King's ship La George, in the reign of Edward III of England; translated from the Latin, the receipt says:

The same Thomas accounts to have paid at Lescluse, in Flanders, for twelve glass horologes (" pro xii. orlogiis vitreis "), price of each 4½ gross', in sterling 9s. Item, For four horologes of the same sort (" de eadem secta "), bought there, price of each five gross', making in sterling 3s. 4d.

Marine sandglasses were popular aboard ships, as they were the most dependable measurement of time while at sea. Unlike the clepsydra, hourglasses using granular materials were not affected by the motion of a ship and less affected by temperature changes (which could cause condensation inside a clepsydra). While hourglasses were insufficiently accurate to be compared against solar noon for the determination of a ship's longitude (as an error of just four minutes would correspond to one degree of longitude), they were sufficiently accurate to be used in conjunction with a chip log to enable the measurement of a ship's speed in knots.
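The "four minutes per degree" figure follows from the Earth turning through 360 degrees in 24 hours. A minimal sketch of that arithmetic, assuming a uniform rotation and using a hypothetical helper name chosen only for illustration:

```python
# Earth turns through 360 degrees of longitude in one 24-hour day.
DEGREES_PER_DAY = 360.0
MINUTES_PER_DAY = 24 * 60


def longitude_error_degrees(clock_error_minutes: float) -> float:
    """Longitude error (in degrees) produced by a given timekeeping error."""
    return clock_error_minutes * DEGREES_PER_DAY / MINUTES_PER_DAY


if __name__ == "__main__":
    # A four-minute timing error shifts the computed longitude by one degree.
    print(longitude_error_degrees(4))
```

The same relation gives 15 degrees of longitude for every hour of clock error, which is why a glass that drifts by minutes over a day was of little use for fixing longitude.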

The hourglass also found popularity on land as an inexpensive alternative to mechanical clocks. Hourglasses were commonly seen in use in churches, homes, and work places to measure sermons, cooking time, and time spent on breaks from labor. Because they were being used for more everyday tasks, the model of the hourglass began to shrink. The smaller models were more practical and very popular as they made timing more discreet.

After 1500, the hourglass was not as widespread as it had been. This was due to the development of the mechanical clock, which became more accurate, smaller and cheaper, and made keeping time easier. The hourglass, however, did not disappear entirely. Although they became relatively less useful as clock technology advanced, hourglasses remained desirable in their design. The oldest known surviving hourglass resides in the British Museum in London.

Not until the 18th century did John Harrison come up with a marine chronometer that significantly improved on the stability of the hourglass at sea. Taking elements from the design logic behind the hourglass, he made a marine chronometer in 1761 that kept time to within five seconds over the journey from England to Jamaica.

Design

Little written evidence exists to explain why its external form is the shape that it is. The glass bulbs used, however, have changed in style and design over time. While the main designs have always been ampoule in shape, the bulbs were not always connected. The first hourglasses were two separate bulbs with a cord wrapped at their union that was then coated in wax to hold the piece together and let sand flow in between. It was not until 1760 that both bulbs were blown together to keep moisture out of the bulbs and regulate the pressure within the bulb that varied the flow.

Material

While some early hourglasses actually did use silica sand as the granular material to measure time, many did not use sand at all. The material used in most bulbs was "powdered marble, tin/lead oxides, [or] pulverized, burnt eggshell". Over time, different textures of granule matter were tested to see which gave the most constant flow within the bulbs. It was later discovered that, for the perfect flow to be achieved, the ratio of the granule diameter to the width of the bulb neck needed to be at least 1/12 but no greater than 1/2.
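The grain-to-neck rule can be expressed as a simple range check. This is only an illustrative sketch: the function name and the millimetre values are hypothetical, and the bounds of 1/12 and 1/2 are taken directly from the passage.

```python
# Bounds on (grain diameter / neck width) quoted in the passage.
MIN_RATIO = 1 / 12
MAX_RATIO = 1 / 2


def grain_fits_neck(grain_mm: float, neck_mm: float) -> bool:
    """Return True if the grain-to-neck ratio lies within the quoted range."""
    if neck_mm <= 0:
        raise ValueError("neck width must be positive")
    ratio = grain_mm / neck_mm
    return MIN_RATIO <= ratio <= MAX_RATIO


if __name__ == "__main__":
    # Hypothetical sizes: a 1 mm neck with three candidate grain diameters.
    for grain in (0.05, 0.25, 0.6):
        print(grain, grain_fits_neck(grain, 1.0))
```

With a 1 mm neck, grains of 0.05 mm fall below the range, 0.25 mm fall inside it, and 0.6 mm exceed it.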

Practical uses

Hourglasses were an early dependable and accurate measure of time. The rate of flow of the sand is independent of the depth in the upper reservoir, and the instrument will not freeze in cold weather. From the 15th century onwards, hourglasses were being used in a range of applications at sea, in the church, in industry, and in cookery.

During the voyage of Ferdinand Magellan around the globe, 18 hourglasses from Barcelona were in the ship's inventory, after the trip had been authorized by King Charles I of Spain. It was the job of a ship's page to turn the hourglasses and thus provide the times for the ship's log. Noon was the reference time for navigation, which did not depend on the glass, as the sun would be at its zenith. A number of sandglasses could be fixed in a common frame, each with a different operating time, e.g. as in a four-way Italian sandglass likely from the 17th century, in the collections of the Science Museum, in South Kensington, London, which could measure intervals of quarter, half, three-quarters, and one hour (and which were used in churches, for priests and ministers to measure lengths of sermons).

Modern practical uses

While hourglasses are no longer widely used for keeping time, some institutions do maintain them. Both houses of the Australian Parliament use three hourglasses to time certain procedures, such as divisions.

Sand timers are sometimes included with board games such as Pictionary and Boggle that place time constraints on rounds of play.

Symbolic uses

Unlike most other methods of measuring time, the hourglass concretely represents the present as being between the past and the future, and this has made it an enduring symbol of time as a concept.

The hourglass, sometimes with the addition of metaphorical wings, is often used as a symbol that human existence is fleeting, and that the "sands of time" will run out for every human life. It was used thus on pirate flags, to evoke fear through imagery associated with death. In England, hourglasses were sometimes placed in coffins, and they have graced gravestones for centuries. The hourglass was also used in alchemy as a symbol for hour.

The former Metropolitan Borough of Greenwich in London used an hourglass on its coat of arms, symbolising Greenwich's role as the origin of Greenwich Mean Time (GMT). The district's successor, the Royal Borough of Greenwich, uses two hourglasses on its coat of arms.

Modern symbolic uses

Recognition of the hourglass as a symbol of time has survived its obsolescence as a timekeeper. For example, the American television soap opera Days of Our Lives (1965–present) displays an hourglass in its opening credits, with narration by Macdonald Carey: "Like sands through the hourglass, so are the days of our lives."

Various computer graphical user interfaces may change the pointer to an hourglass while the program is in the middle of a task, and may not accept user input. During that period of time, other programs, such as those open in other windows, may work normally. When such an hourglass does not disappear, it suggests a program is in an infinite loop and needs to be terminated, or is waiting for some external event (such as the user inserting a CD).

Unicode has an HOURGLASS symbol at U+231B.
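The code point can be checked with Python's standard `unicodedata` module. This is a small sketch; the variable name is arbitrary.

```python
import unicodedata

# The hourglass character at code point U+231B.
HOURGLASS = "\u231b"

if __name__ == "__main__":
    # Show the glyph, its official Unicode name, and its code point.
    print(HOURGLASS, unicodedata.name(HOURGLASS), f"U+{ord(HOURGLASS):04X}")
```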

In the 21st century, the Extinction symbol came into use as a symbol of the Holocene extinction and climate crisis. The symbol features an hourglass to represent time "running out" for extinct and endangered species, and also to represent time "running out" for climate change mitigation.

Hourglass motif

Because of its symmetry, graphic signs resembling an hourglass are seen in the art of cultures which never encountered such objects. Vertical pairs of triangles joined at the apex are common in Native American art; both in North America, where it can represent, for example, the body of the Thunderbird or (in more elongated form) an enemy scalp, and in South America, where it is believed to represent a Chuncho jungle dweller. In Zulu textiles they symbolise a married man, as opposed to a pair of triangles joined at the base, which symbolise a married woman. Neolithic examples can be seen among Spanish cave paintings. Observers have even given the name "hourglass motif" to shapes which have more complex symmetry, such as a repeating circle and cross pattern from the Solomon Islands. Both the members of Project Tic Toc, from the television series The Time Tunnel, and the Challengers of the Unknown use hourglass symbols representing either time travel or time running out.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#2327 2024-09-30 00:02:05

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,012

Re: Miscellany

2327) Asteroid

Gist

Asteroids are small, rocky objects that orbit the Sun. Although asteroids orbit the Sun like planets, they are much smaller than planets.

The C-type (carbonaceous) asteroids probably consist of clay and silicate rocks, and are dark in appearance. They are among the most ancient objects in the solar system. The S-types ("stony") are made up of silicate materials and nickel-iron. The M-types are metallic (nickel-iron).

Most asteroids can be found orbiting the Sun between Mars and Jupiter within the main asteroid belt. Asteroids range in size from Vesta – the largest at about 329 miles (530 kilometers) in diameter – to bodies that are less than 33 feet (10 meters) across.

Summary

Asteroids come in a variety of shapes and sizes and teach us about the formation of the solar system.

Asteroids are the rocky remnants of material leftover from the formation of the solar system and its planets approximately 4.6 billion years ago.

The majority of asteroids originate from the main asteroid belt located between Mars and Jupiter, according to NASA. NASA's current asteroid count is over 1 million.

Asteroids orbit the sun along flattened, or "elliptical", paths, often rotating erratically and tumbling through space.

Many large asteroids have one or more small companion moons. An example of this is Didymos, a half-mile (780 meters) wide asteroid that is orbited by the moonlet Dimorphos which measures just 525 feet (160 m) across.

Asteroids are also often referred to as "minor planets" and can range in size from the largest known example, Vesta, which has a diameter of around 326 miles (525 kilometers), to bodies that are less than 33 feet (10 meters) across.

Vesta took over the "largest asteroid" title from Ceres, which NASA now classifies as a dwarf planet. Ceres is the largest object in the main asteroid belt while Vesta is the second largest.

As well as coming in a range of sizes, asteroids come in a variety of shapes from near spheres to irregular double-lobed peanut-shaped asteroids like Itokawa. Most asteroid surfaces are pitted with impact craters from collisions with other space rocks.

Though a majority of asteroids lurk in the asteroid belt, NASA says, the massive gravitational influence of Jupiter, the solar system's largest planet, can send them hurtling in random directions, including through the inner solar system and thus towards Earth. But don't worry, NASA's Planetary Defense Coordination Office is keeping a watchful eye on near-Earth objects (NEOs), including asteroids, to assess the impact hazard and aid the U.S. government in planning for a response to a possible impact threat.

What is an asteroid?

Using NASA definitions, an asteroid is "A relatively small, inactive, rocky body orbiting the sun," while a comet is a "relatively small, at times active, object whose ices can vaporize in sunlight forming an atmosphere (coma) of dust and gas and, sometimes, a tail of dust and/or gas."

Additionally, a meteorite is a "meteoroid that survives its passage through the Earth's atmosphere and lands upon the Earth's surface" and a meteor is defined as a "light phenomenon which results when a meteoroid enters the Earth's atmosphere and vaporizes; a shooting star."

What are asteroids made of?

Before the formation of the planets of the solar system, the infant sun was surrounded by a disk of dust and gas, called a protoplanetary disk. While most of this disk collapsed to form the planets some material was left over.

"Simply put, asteroids are leftover rocky material from the time of the solar system formation. They are the initial bricks that built the planets," Fred Jourdan, a planetary scientist at Curtin University, told Space.com in an email. "So all the material that formed all those asteroids is about 4.55 billion years old."

In the chaotic conditions of the early solar system, this material repeatedly clashed together with small grains clustering to form small rocks, which clustered to form larger rocks and eventually planetesimals — bodies that don't grow large enough to form planets. Further collisions shattered apart these planetesimals, with these fragments and rocks forming the asteroids we see today.

"All that happened 4.5 billion years ago but the solar system has remained a very dynamic place since then," Jourdan added. "During the next few billions of years until the present, some asteroids smashed into each other and destroyed each other, and the debris recombined and formed what we call rubble pile asteroids."

This means asteroids can also differ by how solid they are. Some asteroids are one solid monolithic body, while others like Bennu are essentially floating rubble piles, made of smaller bodies loosely bound together gravitationally.

"I would say there are three types of asteroids. The first one is the monolith chondritic asteroid, so that's the real brick of the solar system," Jourdan explained. "These asteroids remained relatively unchanged since their formation. Some of them are rich in silicates, and some of them are rich in carbon with different tales to tell."

The second type is the differentiated asteroids which for a while behaved like they were tiny planets forming a metallic core, a mantle, and a volcanic crust. Jourdan said these asteroids would look layered like an egg if cut from the side, with the best example of this being Vesta, which he calls his "favorite asteroid."

"The last type is the rubble pile asteroids, so it's just when asteroids smashed into each other and the fragments that are ejected reassemble together," Jourdan continued. "These asteroids are made of boulders, rocks, pebbles, dust, and a lot of void spacing which makes them so resistant to any further impacts. In that regard, rubble piles are a bit like giant space cushions."

How often do asteroids hit Earth?

Asteroids large enough to cause damage on the ground hit Earth about once per century. At the extremely small end, desk-sized asteroids hit Earth about once a month, but they just produce bright fireballs as they burn up in the atmosphere. As you go to larger and larger asteroids, the impacts are increasingly infrequent.

What's the difference between asteroids, meteorites and comets?

Asteroids are the rocky/dusty small bodies orbiting the sun. Meteorites are pieces on the ground left over after an asteroid breaks up in the atmosphere. Most asteroids are not strong, and when they disintegrate in the atmosphere they often produce a shower of meteorites on the ground.

Comets are also small bodies orbiting the sun, but they also contain ices that produce a gas and dust atmosphere and tail when they get near the sun and heat up.

Details

An asteroid is a minor planet—an object that is neither a true planet nor an identified comet— that orbits within the inner Solar System. They are rocky, metallic, or icy bodies with no atmosphere, classified as C-type (carbonaceous), M-type (metallic), or S-type (silicaceous). The size and shape of asteroids vary significantly, ranging from small rubble piles under a kilometer across and larger than meteoroids, to Ceres, a dwarf planet almost 1000 km in diameter. A body is classified as a comet, not an asteroid, if it shows a coma (tail) when warmed by solar radiation, although recent observations suggest a continuum between these types of bodies.

Of the roughly one million known asteroids, the greatest number are located between the orbits of Mars and Jupiter, approximately 2 to 4 AU from the Sun, in a region known as the main asteroid belt. The total mass of all the asteroids combined is only 3% that of Earth's Moon. The majority of main belt asteroids follow slightly elliptical, stable orbits, revolving in the same direction as the Earth and taking from three to six years to complete a full circuit of the Sun.
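The link between distance from the Sun and orbital period can be sketched with Kepler's third law, which for bodies orbiting the Sun reduces to T² = a³ when T is in years and a is in astronomical units. The formula is not stated in the text above; it is brought in here as a standard approximation that neglects the asteroid's own mass, and the function names are hypothetical.

```python
def period_from_axis(semi_major_axis_au: float) -> float:
    """Orbital period in years for a body orbiting the Sun (T^2 = a^3)."""
    return semi_major_axis_au ** 1.5


def axis_from_period(period_years: float) -> float:
    """Semi-major axis in AU for a given orbital period in years."""
    return period_years ** (2.0 / 3.0)


if __name__ == "__main__":
    # Periods at the edges and middle of the 2 to 4 AU range.
    for a in (2.0, 3.0, 4.0):
        print(f"a = {a:.1f} AU -> T = {period_from_axis(a):.2f} years")
    # Distances corresponding to the three-to-six-year periods.
    for t in (3.0, 6.0):
        print(f"T = {t:.0f} years -> a = {axis_from_period(t):.2f} AU")
```

Under this approximation, the three-to-six-year periods correspond to roughly 2.1 to 3.3 AU, which sits inside the broader 2 to 4 AU range given for the belt.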

Asteroids have historically been observed from Earth. The first close-up observation of an asteroid was made by the Galileo spacecraft. Several dedicated missions to asteroids were subsequently launched by NASA and JAXA, with plans for other missions in progress. NASA's NEAR Shoemaker studied Eros, and Dawn observed Vesta and Ceres. JAXA's missions Hayabusa and Hayabusa2 studied and returned samples of Itokawa and Ryugu, respectively. OSIRIS-REx studied Bennu, collecting a sample in 2020 which was delivered back to Earth in 2023. NASA's Lucy, launched in 2021, is tasked with studying ten different asteroids, two from the main belt and eight Jupiter trojans. Psyche, launched October 2023, aims to study the metallic asteroid Psyche.

Near-Earth asteroids have the potential for catastrophic consequences if they strike Earth, with a notable example being the Chicxulub impact, widely thought to have induced the Cretaceous–Paleogene mass extinction. As an experiment to meet this danger, in September 2022 the Double Asteroid Redirection Test spacecraft successfully altered the orbit of the non-threatening asteroid Dimorphos by crashing into it.

Terminology

In 2006, the International Astronomical Union (IAU) introduced the currently preferred broad term small Solar System body, defined as an object in the Solar System that is neither a planet, a dwarf planet, nor a natural satellite; this includes asteroids, comets, and more recently discovered classes. According to IAU, "the term 'minor planet' may still be used, but generally, 'Small Solar System Body' will be preferred."

Historically, the first discovered asteroid, Ceres, was at first considered a new planet. It was followed by the discovery of other similar bodies, which with the equipment of the time appeared to be points of light like stars, showing little or no planetary disc, though readily distinguishable from stars due to their apparent motions. This prompted the astronomer Sir William Herschel to propose the term asteroid, coined from the Greek asteroeidēs, meaning 'star-like, star-shaped', and derived from the Ancient Greek astēr 'star, planet'. In the early second half of the 19th century, the terms asteroid and planet (not always qualified as "minor") were still used interchangeably.

Traditionally, small bodies orbiting the Sun were classified as comets, asteroids, or meteoroids, with anything smaller than one meter across being called a meteoroid. The term asteroid has never been officially defined, but can be informally used to mean "an irregularly shaped rocky body orbiting the Sun that does not qualify as a planet or a dwarf planet under the IAU definitions". The main difference between an asteroid and a comet is that a comet shows a coma (tail) due to sublimation of its near-surface ices by solar radiation. A few objects were first classified as minor planets but later showed evidence of cometary activity. Conversely, some (perhaps all) comets are eventually depleted of their surface volatile ices and become asteroid-like. A further distinction is that comets typically have more eccentric orbits than most asteroids; highly eccentric asteroids are probably dormant or extinct comets.

The minor planets beyond Jupiter's orbit are sometimes also called "asteroids", especially in popular presentations. However, it is becoming increasingly common for the term asteroid to be restricted to minor planets of the inner Solar System. Therefore, this article will restrict itself for the most part to the classical asteroids: objects of the asteroid belt, Jupiter trojans, and near-Earth objects.

For almost two centuries after the discovery of Ceres in 1801, all known asteroids spent most of their time at or within the orbit of Jupiter, though a few, such as 944 Hidalgo, ventured farther for part of their orbit. Starting in 1977 with 2060 Chiron, astronomers discovered small bodies that permanently resided further out than Jupiter, now called centaurs. In 1992, 15760 Albion was discovered, the first object beyond the orbit of Neptune (other than Pluto); soon large numbers of similar objects were observed, now called trans-Neptunian objects. Further out are Kuiper-belt objects, scattered-disc objects, and the much more distant Oort cloud, hypothesized to be the main reservoir of dormant comets. They inhabit the cold outer reaches of the Solar System where ices remain solid and comet-like bodies exhibit little cometary activity; if centaurs or trans-Neptunian objects were to venture close to the Sun, their volatile ices would sublimate, and traditional approaches would classify them as comets.

The Kuiper-belt bodies are called "objects" partly to avoid the need to classify them as asteroids or comets. They are thought to be predominantly comet-like in composition, though some may be more akin to asteroids. Most do not have the highly eccentric orbits associated with comets, and the ones so far discovered are larger than traditional comet nuclei. Other recent observations, such as the analysis of the cometary dust collected by the Stardust probe, are increasingly blurring the distinction between comets and asteroids, suggesting "a continuum between asteroids and comets" rather than a sharp dividing line.

In 2006, the IAU created the class of dwarf planets for the largest minor planets—those massive enough to have become ellipsoidal under their own gravity. Only the largest object in the asteroid belt has been placed in this category: Ceres, at about 975 km (606 mi) across.

Additional Information

Asteroids, sometimes called minor planets, are rocky, airless remnants left over from the early formation of our solar system about 4.6 billion years ago.

Most asteroids can be found orbiting the Sun between Mars and Jupiter within the main asteroid belt. Asteroids range in size from Vesta – the largest at about 329 miles (530 kilometers) in diameter – to bodies that are less than 33 feet (10 meters) across. The total mass of all the asteroids combined is less than that of Earth's Moon.

During the 18th century, astronomers were fascinated by a mathematical expression called Bode's law. It appeared to predict the locations of the known planets, but with one exception...

Bode's law suggested there should be a planet between Mars and Jupiter. When Sir William Herschel discovered Uranus, the seventh planet, in 1781, at a distance that corresponded to Bode's law, scientific excitement about the validity of this mathematical expression reached an all-time high. Many scientists were absolutely convinced that a planet must exist between Mars and Jupiter.
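The "mathematical expression" is not written out in the text. In its usual modern form (an assumption brought in here for illustration), Bode's law gives a distance in astronomical units of 0.4 + 0.3 × 2^n, with Mercury treated as a special case at 0.4. A minimal sketch, with hypothetical names:

```python
from typing import Optional


def bode_distance_au(n: Optional[int]) -> float:
    """Distance predicted by Bode's law; n=None is the Mercury special case."""
    if n is None:
        return 0.4
    return 0.4 + 0.3 * 2 ** n


# Slots in order outward from the Sun; the fifth slot is the "missing planet".
SLOTS = [
    ("Mercury", None),
    ("Venus", 0),
    ("Earth", 1),
    ("Mars", 2),
    ("(gap)", 3),
    ("Jupiter", 4),
    ("Saturn", 5),
    ("Uranus", 6),
]

if __name__ == "__main__":
    for name, n in SLOTS:
        print(f"{name:8s} {bode_distance_au(n):5.1f} AU")
```

The sequence leaves an unfilled slot at 2.8 AU between Mars (1.6) and Jupiter (5.2), and places the next slot beyond Saturn at 19.6 AU, close to where Uranus was found at about 19.2 AU.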

By the end of the century, a group of astronomers had banded together to use the observatory at Lilienthal, Germany, owned by Johann Hieronymus Schröter, to hunt down this missing planet. They called themselves, appropriately, the 'Celestial Police'.

Despite their efforts, they were beaten by Giuseppe Piazzi, who discovered what he believed to be the missing planet on New Year's Day, 1801, from the Palermo Observatory.

The new body was named Ceres, but subsequent observations swiftly established that it could not be classed as a major planet, as its diameter is just 940 kilometres (Pluto, long regarded as the smallest planet, has a diameter of just over 2300 kilometres). Instead, it was classified as a 'minor planet' and the search for the 'real' planet continued.

Between 1801 and 1808, astronomers tracked down a further three minor planets within this region of space: Pallas, Juno and Vesta, each smaller than Ceres. It became obvious that there was no single large planet out there and enthusiasm for the search waned.

A fifth asteroid, Astraea, was discovered in 1845 and interest in the asteroids as a new 'class' of celestial object began to build. In fact, since that time new asteroids have been discovered almost every year.

It soon became obvious that a 'belt' of asteroids existed between Mars and Jupiter. This collection of space debris was the 'missing planet'. It was almost certainly prevented from forming by the large gravitational field of adjacent Jupiter.

Now there are a number of telescopes dedicated to the task of finding new asteroids. Specifically, these instruments are geared towards finding any asteroids that cross the Earth's orbit and may therefore pose an impact hazard.

#2328 2024-10-01 00:04:25

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,012

Re: Miscellany

2328) Table

Gist

A table is an item of furniture with a raised flat top, supported most commonly by one to four legs (although some can have more). It is used as a surface for working at, eating from or on which to place things.

Summary

The table is a basic article of furniture, known and used in the Western world since at least the 7th century BCE, consisting of a flat slab of stone, metal, wood, or glass supported by trestles, legs, or a pillar.

Egyptian tables were made of wood, Assyrian of metal, and Grecian usually of bronze. Roman tables took on quite elaborate forms, the legs carved in the shapes of animals, sphinxes, or grotesque figures. Cedar and other exotic woods with a decorative grain were employed for the tops, and the tripod legs were made of bronze or other metals.

Early medieval tables were of a fairly basic type, but there were certain notable exceptions; Charlemagne, for example, possessed two tables of silver and one of gold, probably constructed of wood covered with thin sheets of metal. With the growing formality of life in the feudal period, tables took on a greater social significance. Although small tables were used in private apartments, in the great hall of a feudal castle the necessity of feeding a host of retainers stimulated the development of an arrangement whereby the master and his guests sat at a rectangular table on a dais surmounted by a canopy, while the rest of the household sat at tables placed at right angles to this one.

One of the few surviving examples of a large (and much restored) round table dating from the 15th century is at Winchester Castle in Hampshire, Eng. For the most part, circular tables were intended for occasional uses. The most common type of large medieval dining table was of trestle construction, consisting of massive boards of oak or elm resting on a series of central supports to which they were affixed by pegs, which could be removed and the table dismantled. Tables with attached legs, joined by heavy stretchers fixed close to the floor, appeared in the 15th century. They were of fixed size and heavy to move, but in the 16th century an ingenious device known as a draw top made it possible to double the length of the table. The top was composed of three leaves, two of which could be placed under the third and extended on runners when required. Such tables were usually made of oak or elm but sometimes of walnut or cherry. The basic principle involved is still applied to some extending tables.

Growing technical sophistication meant that from the middle of the 16th century onward tables began to reflect far more closely than before the general design tendencies of their period and social context. The typical Elizabethan draw table, for instance, was supported on four vase-shaped legs terminating in Ionic capitals, reflecting perfectly the boisterous decorative atmosphere of the age. The despotic monarchies that yearned after the splendours of Louis XIV’s Versailles promoted a fashion for tables of conspicuous opulence. Often made in Italy, these tables, which were common between the late 17th and mid-18th century, were sometimes inlaid with elaborate patterns of marquetry or rare marbles; others, such as that presented by the City of London to Charles II on his restoration as king of England, were entirely covered in silver or were made of ebony with silver mountings.

Increasing contact with the East in the 18th century stimulated a taste for lacquered tables for occasional use. Indeed, the pattern of development in the history of the table that became apparent in this century was that, whereas the large dining table showed few stylistic changes, growing sophistication of taste and higher standards of living led to an increasing degree of specialization in occasional-table design. A whole range of particular functions was now being catered to, a tendency that persisted until at least the beginning of the 20th century. Social customs such as tea-drinking fueled the development of these specialized forms. The exploitation of man-made materials in the second half of the 20th century produced tables of such materials as plastic, metal, fibreglass, and even corrugated cardboard.

Details

A table is an item of furniture with a raised flat top, supported most commonly by 1 to 4 legs (although some have more). It is used as a surface for working at, eating from, or placing things on. Some common types of table are the dining room table, which is used by seated persons to eat meals; the coffee table, which is a low table used in living rooms to display items or serve refreshments; and the bedside table, which is commonly used to hold an alarm clock and a lamp. There is also a range of specialized types of tables, such as drafting tables, used for doing architectural drawings, and sewing tables.

Common design elements include:

* Top surfaces of various shapes, including rectangular, square, rounded, semi-circular or oval

* Legs arranged in two or more similar pairs. A table usually has four legs; however, some tables have three legs, use a single heavy pedestal, or are attached to a wall.

* Several geometries of folding table that can be collapsed into a smaller volume (e.g., a TV tray, which is a portable, folding table on a stand)

* Heights above or below the most common range of 18–30 inches (46–76 cm), often reflecting the height of the chairs or bar stools used as seating by people using the table, whether for eating or for handling objects resting on it

* A huge range of sizes, from small bedside tables to large dining room tables and huge conference room tables

* Presence or absence of drawers, shelves or other areas for storing items

* Expansion of the table surface by insertion of leaves or locking hinged drop leaf sections into a horizontal position (this is particularly common for dining tables)
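The centimetre figures quoted for the common height range in the list above can be checked with a short conversion. This is only a minimal sketch: the function name `inches_to_cm` is made up for illustration, and rounding to the nearest whole centimetre is an assumption about how the quoted figures were obtained.

```python
CM_PER_INCH = 2.54  # exact by international definition of the inch


def inches_to_cm(inches: float) -> int:
    """Convert inches to centimetres, rounded to the nearest whole centimetre."""
    return round(inches * CM_PER_INCH)


# The common table-height range quoted above: 18 to 30 inches.
low_cm = inches_to_cm(18)
high_cm = inches_to_cm(30)
print(f"{low_cm} to {high_cm} cm")  # prints "46 to 76 cm"
```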

Etymology

The word table is derived from Old English tabele, derived from the Latin word tabula ('a board, plank, flat top piece'), which replaced the Old English bord; its current spelling reflects the influence of the French table.

History

Some very early tables were made and used by the Ancient Egyptians around 2500 BC, using wood and alabaster. They were often little more than stone platforms used to keep objects off the floor, though a few examples of wooden tables have been found in tombs. Food and drinks were usually put on large plates placed on a pedestal for eating. The Egyptians made use of various small tables and elevated playing boards. The Chinese also created very early tables in order to pursue the arts of writing and painting, as did people in Mesopotamia, where various metals were used.

The Greeks and Romans made more frequent use of tables, notably for eating, although Greek tables were pushed under a bed after use. The Greeks invented a piece of furniture very similar to the guéridon. Tables were made of marble or wood and metal (typically bronze or silver alloys), sometimes with richly ornate legs. Later, the larger rectangular tables were made of separate platforms and pillars. The Romans also introduced a large, semicircular table to Italy, the mensa lunata. Plutarch mentions use of "tables" by Persians.

Furniture during the Middle Ages is not as well known as that of earlier or later periods, and most sources show the types used by the nobility. In the Eastern Roman Empire, tables were made of metal or wood, usually with four feet and frequently linked by x-shaped stretchers. Tables for eating were large and often round or semicircular. A combination of a small round table and a lectern seemed very popular as a writing table.

In western Europe, although there was variety of form — the circular, semicircular, oval and oblong were all in use — tables appear to have been portable and supported upon trestles fixed or folding, which were cleared out of the way at the end of a meal. Thus Charlemagne possessed three tables of silver and one of gold, probably made of wood and covered with plates of the precious metals. The custom of serving dinner at several small tables, which is often supposed to be a modern refinement, was followed in the French châteaux, and probably also in the English castles, as early as the 13th century.

Refectory tables first appeared at least as early as the 17th century, as an advancement of the trestle table; these tables were typically quite long and wide and capable of supporting a sizeable banquet in the great hall or other reception room of a castle.

Shape, height, and function

Tables come in a wide variety of materials, shapes, and heights dependent upon their origin, style, intended use and cost. Many tables are made of wood or wood-based products; some are made of other materials including metal and glass. Most tables are composed of a flat surface and one or more supports (legs). A table with a single, central foot is a pedestal table. Long tables often have extra legs for support.

Table tops can be in virtually any shape, although rectangular, square, round (e.g. the round table), and oval tops are the most frequent. Some tables have higher surfaces for personal use while either standing or sitting on a tall stool.

Many tables have tops that can be adjusted to change their height, position, shape, or size, using foldable, sliding, or extension parts that alter the shape of the top. Some tables are entirely foldable for easy transportation (e.g., camping tables) or storage (e.g., TV trays). Small tables in trains and aircraft may be fixed or foldable, although they are sometimes considered simply convenient shelves rather than tables.

Tables can be freestanding or designed for placement against a wall. Tables designed to be placed against a wall are known as pier tables or console tables (French: console, "support bracket") and may be bracket-mounted (traditionally), like a shelf, or have legs, which sometimes imitate the look of a bracket-mounted table.

Types

Tables of various shapes, heights, and sizes are designed for specific uses:

* Dining room tables are designed to be used for formal dining.

* Bedside tables, nightstands, or night tables are small tables used in a bedroom. They are often used for convenient placement of a small lamp, alarm clock, glasses, or other personal items.

* Drop-leaf tables have a fixed section in the middle and a hinged section (leaf) on either side that can be folded down.

* Gateleg tables have one or two hinged leaves supported by hinged legs.

* Coffee tables are low tables designed for use in a living room, in front of a sofa, for convenient placement of drinks, books, or other personal items.

* Refectory tables are long tables designed to seat many people for meals.

* Drafting tables usually have a top that can be tilted for making a large or technical drawing. They may also have a ruler or similar element integrated.

* Workbenches are sturdy tables, often elevated for use with a high stool or while standing, which are used for assembly, repairs, or other precision handwork.

* Nested tables are a set of small tables of graduated size that can be stacked together, each fitting within the one immediately larger. They are for occasional use (such as a tea party), hence the stackable design.

Specialized types

Historically, various types of tables have become popular for specific uses:

* Loo tables were very popular in the 18th and 19th centuries as candlestands, tea tables, or small dining tables, although they were originally made for the popular card game loo or lanterloo. Their typically round or oval tops have a tilting mechanism, which enables them to be stored out of the way (e.g. in room corners) when not in use. A further development in this direction was the "birdcage" table, the top of which could both revolve and tilt.

* Pembroke tables, first introduced during the 18th century, were popular throughout the 19th century. Their main characteristic was a rectangular or oval top with folding or drop leaves on each side. Most examples have one or more drawers and four legs, sometimes connected by stretchers. Their design meant they could easily be stored or moved about and conveniently opened for serving tea, dining, writing, or other occasional uses. One account attributes the design of the Pembroke table to Henry Herbert, 9th Earl of Pembroke (1693-1751).

* Sofa tables are similar to Pembroke tables and usually have longer and narrower tops. They were specifically designed for placement directly in front of sofas for serving tea, writing, dining, or other convenient uses. Generally speaking, a sofa table is a tall, narrow table used behind a sofa to hold lamps or decorative objects.

* Work tables were small tables designed to hold sewing materials and implements, providing a convenient work place for women who sewed. They appeared during the 18th century and were popular throughout the 19th century. Most examples have rectangular tops, sometimes with folding leaves, and usually one or more drawers fitted with partitions. Early examples typically have four legs, often standing on casters, while later examples sometimes have turned columns or other forms of support.

* Drum tables are round tables introduced for writing, with drawers around the platform.

* End tables are small tables typically placed beside couches or armchairs. Often lamps will be placed on an end table.

* Overbed tables are narrow rectangular tables whose top is designed for use above the bed, especially for hospital patients.

* Billiards tables are bounded tables on which billiards-type games are played. All provide a flat surface, usually composed of slate and covered with cloth, elevated above the ground.

* Chess tables are a type of games table that integrates a chessboard.

* Table tennis tables are usually masonite or a similar wood, layered with a smooth low-friction coating. They are divided into two halves by a low net, which separates opposing players.

* Poker tables or card tables are used to play poker or other card games.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Offline

#2329 2024-10-02 00:05:44

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,012

Re: Miscellany

2329) Calculus (Medicine)

Gist

A calculus (pl.: calculi), often called a stone, is a concretion of material, usually mineral salts, that forms in an organ or duct of the body.

Calculus, renal: A stone in the kidney (or lower down in the urinary tract). Also called a kidney stone. The stones themselves are called renal calculi. The word "calculus" (plural: calculi) is the Latin word for pebble. Renal stones are a common cause of blood in the urine and pain in the abdomen, flank, or groin.

Treating a staghorn calculus usually means surgery. The entire stone, even small pieces, must be removed so they can't lead to infection or the formation of new stones. One way to remove staghorn stones is with a percutaneous nephrolithotomy (PCNL).

A urologist can remove the kidney stone or break it into small pieces with treatments such as shock wave lithotripsy. The doctor can use shock wave lithotripsy to blast the kidney stone into small pieces. The smaller pieces of the kidney stone then pass through your urinary tract.

What are calculi in the kidneys?

Kidney stones (also called renal calculi, nephrolithiasis or urolithiasis) are hard deposits made of minerals and salts that form inside your kidneys. Diet, excess body weight, some medical conditions, and certain supplements and medications are among the many causes of kidney stones.

Summary

Kidney stone is a common clinical problem faced by clinicians. The prevalence of the disease is increasing worldwide. As the affected population is getting younger and recurrence rates are high, dietary modifications, lifestyle changes, and medical management are essential. Patients with recurrent stone disease need careful evaluation for underlying metabolic disorder. Medical management should be used judiciously in all patients with kidney stones, with appropriate individualization. This chapter focuses on medical management of kidney stones.

Urinary tract stones (urolithiasis) have been known to mankind since antiquity. Kidney stone is not a true diagnosis; rather, it suggests a broad variety of underlying diseases. Kidney stones are mainly composed of calcium salts, uric acid, cystine, and struvite. Calcium oxalate and calcium phosphate are the most common types, accounting for >80% of stones, followed by uric acid (8–10%), with cystine and struvite making up the remainder.

The incidence of urolithiasis is increasing globally, with geographic, racial, and gender variation in its occurrence. An epidemiological study (1979) in the western population found the incidence of urolithiasis to be 124 per 100,000 in males and 36 per 100,000 in females. The lifetime risk of urolithiasis is higher in the Middle East (20–25%) and western countries (10–15%) and lower in African and Asian populations. Stone disease carries a high risk of recurrence after the initial episode: around 50% at 5 years and 70% at 9 years.

Positive family history of stone disease, young age at onset, recurrent urinary tract infections (UTIs), and underlying diseases like renal tubular acidosis (RTA) and hyperparathyroidism are the major risk factors for recurrence. High incidence and recurrence rate add enormous cost and loss of work days.

Though the pathogenesis of stone disease is not fully understood, systematic metabolic evaluation, medical treatment of underlying conditions, and patient-specific modification in diet and lifestyle are effective in reducing the incidence and recurrence of stone disease.

Details

A calculus (pl.: calculi), often called a stone, is a concretion of material, usually mineral salts, that forms in an organ or duct of the body. Formation of calculi is known as lithiasis. Stones can cause a number of medical conditions.

Some common principles (below) apply to stones at any location, but for specifics see the particular stone type in question.

Calculi are not to be confused with gastroliths, which are ingested rather than grown endogenously.

Types

* Calculi in the inner ear are called otoliths

* Calculi in the urinary system are called urinary calculi and include kidney stones (also called renal calculi or nephroliths) and bladder stones (also called vesical calculi or cystoliths). They can have any of several compositions, including mixed. Principal compositions include oxalate and urate.

* Calculi of the gallbladder and bile ducts are called gallstones and are primarily developed from bile salts and cholesterol derivatives.

* Calculi in the nasal passages (rhinoliths) are rare.

* Calculi in the gastrointestinal tract (enteroliths) can be enormous. Individual enteroliths weighing many pounds have been reported in horses.

* Calculi in the stomach are called gastric calculi (not to be confused with gastroliths which are exogenous in nature).

* Calculi in the salivary glands are called salivary calculi (sialoliths).

* Calculi in the tonsils are called tonsillar calculi (tonsilloliths).

* Calculi in the veins are called venous calculi (phleboliths).

* Calculi in the skin, such as in sweat glands, are not common but occasionally occur.

* Calculi in the navel are called omphaloliths.

Calculi are usually asymptomatic, and large calculi may have required many years to grow to their large size.

Cause

* From an underlying abnormal excess of the mineral, e.g., with elevated levels of calcium (hypercalcaemia) that may cause kidney stones, dietary factors for gallstones.

* Local conditions at the site in question that promote their formation, e.g., local bacteria action (in kidney stones) or slower fluid flow rates, a possible explanation of the majority of salivary duct calculus occurring in the submandibular salivary gland.

* Enteroliths are a type of calculus found in the intestines of animals (mostly ruminants) and humans, and may be composed of inorganic or organic constituents.

* Bezoars are lumps of indigestible material in the stomach and/or intestines; most commonly, they consist of hair (in which case they are also known as hairballs). A bezoar may form the nidus of an enterolith.

* In kidney stones, calcium oxalate is the most common mineral type (see nephrolithiasis). Uric acid is the second most common mineral type, but an in vitro study showed uric acid stones and crystals can promote the formation of calcium oxalate stones.

Pathophysiology

Stones can cause disease by several mechanisms:

* Irritation of nearby tissues, causing pain, swelling, and inflammation

* Obstruction of an opening or duct, interfering with normal flow and disrupting the function of the organ in question

* Predisposition to infection (often due to disruption of normal flow)

A number of important medical conditions are caused by stones:

* Nephrolithiasis (kidney stones)

** Can cause hydronephrosis (swollen kidneys) and kidney failure

** Can predispose to pyelonephritis (kidney infections)

** Can progress to urolithiasis

* Urolithiasis (urinary bladder stones)

** Can progress to bladder outlet obstruction

* Cholelithiasis (gallstones)

** Can predispose to cholecystitis (gall bladder infections) and ascending cholangitis (biliary tree infection)

** Can progress to choledocholithiasis (gallstones in the bile duct) and gallstone pancreatitis (inflammation of the pancreas)

* Gastric calculi can cause colic, obstruction, torsion, and necrosis.

Diagnosis

Diagnostic workup varies by the stone type, but in general:

* Clinical history and physical examination

Imaging studies:

* Some stone types (mainly those with substantial calcium content) can be detected on X-ray and CT scan

* Many stone types can be detected by ultrasound

Factors contributing to stone formation (see Cause above) are often tested:

* Laboratory testing can give levels of relevant substances in blood or urine

* Some stones can be directly recovered (at surgery, or when they leave the body spontaneously) and sent to a laboratory for analysis of content

Treatment

Modification of predisposing factors can sometimes slow or reverse stone formation. Treatment varies by stone type, but, in general:

* Healthy diet and exercise (promotes flow of energy and nutrition)

* Drinking fluids (water and electrolytes like lemon juice, diluted vinegar e.g. in pickles, salad dressings, sauces, soups, shrubs mix)

* Surgery (lithotomy)

* Medication / antibiotics

* Extracorporeal shock wave lithotripsy (ESWL) for removal of calculi

History

The earliest operation for curing stones is given in the Sushruta Samhita (6th century BCE). The operation involved exposure and going up through the floor of the bladder.

The care of this disease was forbidden to the physicians that had taken the Hippocratic Oath because:

* There was a high probability of intraoperative and postoperative surgical complications like infection or bleeding

* The physicians would not perform surgery as in ancient cultures they were two different professions

Etymology

The word comes from Latin calculus "small stone", from calx "limestone, lime", probably related to Greek chalix "small stone, pebble, rubble", which many trace to a Proto-Indo-European language root for "split, break up". Calculus was a term used for various kinds of stones. In the 18th century it came to be used for accidental or incidental mineral buildups in human and animal bodies, like kidney stones and minerals on teeth.

Additional Information

If your doctor suspects that you have a kidney stone, you may have diagnostic tests and procedures, such as:

* Blood testing. Blood tests may reveal too much calcium or uric acid in your blood. Blood test results help monitor the health of your kidneys and may lead your doctor to check for other medical conditions.

* Urine testing. The 24-hour urine collection test may show that you're excreting too many stone-forming minerals or too few stone-preventing substances. For this test, your doctor may request that you perform two urine collections over two consecutive days.

* Imaging. Imaging tests may show kidney stones in your urinary tract. High-speed or dual energy computerized tomography (CT) may reveal even tiny stones. Simple abdominal X-rays are used less frequently because this kind of imaging test can miss small kidney stones.

Ultrasound, a noninvasive test that is quick and easy to perform, is another imaging option to diagnose kidney stones.

* Analysis of passed stones. You may be asked to urinate through a strainer to catch stones that you pass. Lab analysis will reveal the makeup of your kidney stones. Your doctor uses this information to determine what's causing your kidney stones and to form a plan to prevent more kidney stones.


#2330 2024-10-02 20:04:33

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,012

Re: Miscellany

2330) Cafeteria

Gist

A cafeteria is a restaurant in which the customers serve themselves or are served at a counter but carry their own food to their tables.

A cafeteria is a self-service restaurant in a large shop or workplace.

It is a restaurant, especially one for staff or workers, where people collect their meals themselves and carry them to their tables.

Summary

A cafeteria is a self-service restaurant in which customers select various dishes from an open-counter display. The food is usually placed on a tray, paid for at a cashier’s station, and carried to a dining table by the customer. The modern cafeteria, designed to facilitate a smooth flow of patrons, is particularly well adapted to the needs of institutions—schools, hospitals, corporations—attempting to serve large numbers of people efficiently and inexpensively. In addition to providing quick service, the cafeteria requires fewer service personnel than most other commercial eating establishments.

Early versions of self-service restaurants began to appear in the late 19th century in the United States. In 1891 the Young Women’s Christian Association (YWCA) of Kansas City, Missouri, established what some food-industry historians consider the first cafeteria. This institution, founded to provide low-cost meals for working women, was patterned after a Chicago luncheon club for women where some aspects of self-service were already in practice. Cafeterias catering to the public opened in several U.S. cities in the 1890s, but cafeteria service did not become widespread until shortly after the turn of the century, when it became the accepted method of providing food for employees of factories and other large businesses.

Details

A cafeteria, sometimes called a canteen outside the U.S. and Canada, is a type of food service location in which there is little or no waiting staff table service, whether in a restaurant or within an institution such as a large office building or school; a school dining location is also referred to as a dining hall or lunchroom (in American English). Cafeterias are different from coffeehouses, although the English term came from the Spanish term cafetería, which carries the same meaning.

Instead of table service, there are food-serving counters/stalls or booths, either in a line or allowing arbitrary walking paths. Customers take the food that they desire as they walk along, placing it on a tray. In addition, there are often stations where customers order food, particularly items such as hamburgers or tacos which must be served hot and can be immediately prepared with little waiting. Alternatively, the patron is given a number and the item is brought to their table. For some food items and drinks, such as sodas, water, or the like, customers collect an empty container, pay at check-out, and fill the container after check-out. Free unlimited-second servings are often allowed under this system. For legal purposes (and the consumption patterns of customers), this system is rarely, if at all, used for alcoholic drinks in the United States.

Customers are either charged a flat rate for admission (as in a buffet) or pay at check-out for each item. Some self-service cafeterias charge by the weight of items on a patron's plate. In universities and colleges, some students pay for three meals a day by making a single large payment for the entire semester.
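The three charging schemes described above (flat admission, per-item check-out, and charging by weight) can be compared with a small calculation. This is a minimal sketch only: the `Item` class, the function names, and all prices are hypothetical values chosen for illustration, not figures from any real cafeteria.

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    price: float     # per-item price (hypothetical)
    weight_g: float  # weight of the serving in grams (hypothetical)


def flat_rate_total(admission_fee: float) -> float:
    """Flat admission, as in a buffet: the tray contents do not matter."""
    return admission_fee


def per_item_total(items: list[Item]) -> float:
    """Pay at check-out for each item on the tray."""
    return round(sum(item.price for item in items), 2)


def by_weight_total(items: list[Item], price_per_100g: float) -> float:
    """Charge by the total weight of food on the plate."""
    total_grams = sum(item.weight_g for item in items)
    return round(total_grams / 100 * price_per_100g, 2)


tray = [Item("soup", 2.50, 300), Item("salad", 3.00, 200)]
print(flat_rate_total(8.00))        # flat admission fee
print(per_item_total(tray))         # sum of the item prices
print(by_weight_total(tray, 1.20))  # 500 g at 1.20 per 100 g
```

Which scheme is cheapest for the customer depends on how much they take; for the hypothetical tray above, per-item pricing comes out lowest.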

As cafeterias require few employees, they are often found within a larger institution, catering to the employees or clientele of that institution. For example, schools, colleges and their residence halls, department stores, hospitals, museums, places of worship, amusement parks, military bases, prisons, factories, and office buildings often have cafeterias. Although some of such institutions self-operate their cafeterias, many outsource their cafeterias to a food service management company or lease space to independent businesses to operate food service facilities. The three largest food service management companies servicing institutions are Aramark, Compass Group, and Sodexo.

At one time, upscale cafeteria-style restaurants dominated the culture of the Southern United States, and to a lesser extent the Midwest. There were numerous prominent chains of them: Bickford's, Morrison's Cafeteria, Piccadilly Cafeteria, S&W Cafeteria, Apple House, Luby's, K&W, Britling, Wyatt's Cafeteria, and Blue Boar among them. Currently, two Midwestern chains still exist, Sloppy Jo's Lunchroom and Manny's, which are both located in Illinois. There were also several smaller chains, usually located in and around a single city. These institutions, except K&W, went into a decline in the 1960s with the rise of fast food and were largely finished off in the 1980s by the rise of all-you-can-eat buffets and other casual dining establishments. A few chains—particularly Luby's and Piccadilly Cafeterias (which took over the Morrison's chain in 1998)—continue to fill some of the gap left by the decline of the older chains. Some of the smaller Midwestern chains, such as MCL Cafeterias centered in Indianapolis, are still in business.

History

Perhaps the first self-service restaurant (not necessarily a cafeteria) in the U.S. was the Exchange Buffet in New York City, which opened September 4, 1885, and catered to an exclusively male clientele. Food was purchased at a counter and patrons ate standing up. This represents the predecessor of two formats: the cafeteria, described below, and the automat.

During the 1893 World's Columbian Exposition in Chicago, entrepreneur John Kruger built an American version of the smörgåsbords he had seen while traveling in Sweden. Emphasizing the simplicity and light fare, he called it the 'Cafeteria' - Spanish for 'coffee shop'. The exposition attracted over 27 million visitors (half the U.S. population at the time) in six months, and it was because of Kruger's operation that the United States first heard the term and experienced the self-service dining format.

Meanwhile, the chain of Childs Restaurants quickly grew from about 10 locations in New York City in 1890 to hundreds across the U.S. and Canada by 1920. Childs is credited with the innovation of adding trays and a "tray line" to the self-service format, introduced in 1898 at their 130 Broadway location. Childs did not change its format of sit-down dining, however. This was soon the standard design for most Childs Restaurants, and, ultimately, the dominant method for succeeding cafeterias.

It has been conjectured that the 'cafeteria craze' started in May 1905, when Helen Mosher opened a downtown L.A. restaurant where people chose their food at a long counter and carried their trays to their tables. California has a long history in the cafeteria format - notably the Boos Brothers Cafeterias, and the Clifton's and Schaber's. The earliest cafeterias in California were opened at least 12 years after Kruger's Cafeteria, and Childs already had many locations around the country. Horn & Hardart, an automat format chain (different from cafeterias), was well established in the mid-Atlantic region before 1900.

Between 1960 and 1981, the popularity of cafeterias was overcome by fast food restaurants and fast casual restaurant formats.

Outside the United States, the development of cafeterias can be observed in France as early as 1881 with the passing of the Ferry Law. This law mandated that public school education be available to all children. Accordingly, the government also encouraged schools to provide meals for students in need, thus resulting in the conception of cafeterias or cantine (in French). According to Abramson, before the creation of cafeterias, only some students could bring home-cooked meals and be properly fed in schools.

As cafeterias in France became more popular, their use spread beyond schools and into the workforce. Thus, due to pressure from workers and eventually new labor laws, sizable businesses had to, at minimum, provide established eating areas for their workers. Support for this practice was also reinforced by the effects of World War II when the importance of national health and nutrition came under great attention.

Other names

A cafeteria in a U.S. military installation is known as a chow hall, a mess hall, a galley, a mess deck, or, more formally, a dining facility, often abbreviated to DFAC, whereas in common British Armed Forces parlance, it is known as a cookhouse or mess. Students in the United States often refer to cafeterias as lunchrooms, which also often serve school breakfast. Some school cafeterias in the U.S. and Canada have stages and movable seating that allow use as auditoriums. These rooms are known as cafetoriums or All Purpose Rooms. In some older facilities, a school's gymnasium is also often used as a cafeteria, with the kitchen facility being hidden behind a rolling partition outside non-meal hours. Newer rooms which also act as the school's grand entrance hall for crowd control and are used for multiple purposes are often called the commons.

Cafeterias serving university dormitories are sometimes called dining halls or dining commons. A food court is a type of cafeteria found in many shopping malls and airports featuring multiple food vendors or concessions. However, a food court could equally be styled as a type of restaurant as well, being more aligned with the public, rather than institutionalized, dining. Some institutions, especially schools, have food courts with stations offering different types of food served by the institution itself (self-operation) or a single contract management company, rather than leasing space to numerous businesses. Some monasteries, boarding schools, and older universities refer to their cafeteria as a refectory. Modern-day British cathedrals and abbeys, notably in the Church of England, often use the phrase refectory to describe a cafeteria open to the public. Historically, the refectory was generally only used by monks and priests. For example, although the original 800-year-old refectory at Gloucester Cathedral (the stage setting for dining scenes in the Harry Potter movies) is now mostly used as a choir practice area, the relatively modern 300-year-old extension, now used as a cafeteria by staff and public alike, is today referred to as the refectory.

A cafeteria located within a movie or TV studio complex is often called a commissary.

College cafeteria

In American English, a college cafeteria is a cafeteria intended for college students. In British English, it is often called the refectory. These cafeterias can be a part of a residence hall or in a separate building. Many colleges employ their students to work in the cafeteria. The number of meals served to students varies from school to school but is normally around 21 meals per week. As in other cafeterias, a person takes a tray and selects the food they want, but at some campuses, instead of paying at the register, they pay beforehand by purchasing a meal plan.

The method of payment for college cafeterias is commonly in the form of a meal plan, whereby the patron pays a certain amount at the start of the semester and details of the plan are stored on a computer system. Student ID cards are then used to access the meal plan. Meal plans can vary widely in their details and are often not necessary to eat at a college cafeteria. Typically, the college tracks students' plan usage by counting the number of predefined meal servings, points, dollars, or buffet dinners. The plan may give the student a certain number of any of the above per week or semester and they may or may not roll over to the next week or semester.

Many schools offer several different options for using their meal plans. The main cafeteria is usually where most of the meal plan is used but smaller cafeterias, cafés, restaurants, bars, or even fast food chains located on campus, on nearby streets, or in the surrounding town or city may accept meal plans. A college cafeteria system often has a virtual monopoly on the students due to an isolated location or a requirement that residence contracts include a full meal plan.

Taiwanese cafeteria

There are many self-service bento shops in Taiwan. The shop sets out the dishes in a self-service area, and customers help themselves. After choosing, customers go to the cashier to pay; in many shops the staff set the price by visually checking the amount of food, while some shops weigh it.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#2331 2024-10-03 16:49:51

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,012

Re: Miscellany

2331) Antenna

Gist

An antenna is a metallic structure that captures and/or transmits radio electromagnetic waves. Antennas come in all shapes and sizes from little ones that can be found on your roof to watch TV to really big ones that capture signals from satellites millions of miles away.

An antenna is a device that is made out of a conductive, metallic material and has the purpose of transmitting and/or receiving electromagnetic waves, usually radio wave signals. The purpose of transmitting and receiving radio waves is to communicate or broadcast information at the speed of light.

Summary

An antenna is a metallic structure that captures and/or transmits radio electromagnetic waves. Antennas come in all shapes and sizes from little ones that can be found on your roof to watch TV to really big ones that capture signals from satellites millions of miles away.

The antennas that Space Communications and Navigation (SCaN) uses are parabolic antennas: bowl-shaped antennas that focus signals at a single point. The bowl shape is what allows the antennas to both capture and transmit electromagnetic waves. These antennas move horizontally (measured in hour angle/declination) and vertically (measured in azimuth/elevation) in order to capture and transmit the signal.

SCaN has over 65 antennas that help capture and transmit data to and from satellites in space.

Details

In radio engineering, an antenna (American English) or aerial (British English) is an electronic device that converts an alternating electric current into radio waves (transmitting), or radio waves into an electric current (receiving). It is the interface between radio waves propagating through space and electric currents moving in metal conductors, used with a transmitter or receiver. In transmission, a radio transmitter supplies an electric current to the antenna's terminals, and the antenna radiates the energy from the current as electromagnetic waves (radio waves). In reception, an antenna intercepts some of the power of a radio wave in order to produce an electric current at its terminals, that is applied to a receiver to be amplified. Antennas are essential components of all radio equipment.

An antenna is an array of conductors (elements), electrically connected to the receiver or transmitter. Antennas can be designed to transmit and receive radio waves in all horizontal directions equally (omnidirectional antennas), or preferentially in a particular direction (directional, or high-gain, or "beam" antennas). An antenna may include components not connected to the transmitter, such as parabolic reflectors, horns, or parasitic elements, which serve to direct the radio waves into a beam or other desired radiation pattern. Strong directivity and good efficiency when transmitting are hard to achieve with antennas whose dimensions are much smaller than a half wavelength.

The first antennas were built in 1888 by German physicist Heinrich Hertz in his pioneering experiments to prove the existence of electromagnetic waves predicted by the 1867 electromagnetic theory of James Clerk Maxwell. Hertz placed dipole antennas at the focal point of parabolic reflectors for both transmitting and receiving. Starting in 1895, Guglielmo Marconi began development of antennas practical for long-distance, wireless telegraphy, for which he received the 1909 Nobel Prize in physics.

Terminology

The words antenna and aerial are used interchangeably. Occasionally the equivalent term "aerial" is used to specifically mean an elevated horizontal wire antenna. The origin of the word antenna relative to wireless apparatus is attributed to Italian radio pioneer Guglielmo Marconi. In the summer of 1895, Marconi began testing his wireless system outdoors on his father's estate near Bologna and soon began to experiment with long wire "aerials" suspended from a pole. In Italian a tent pole is known as l'antenna centrale, and the pole with the wire was simply called l'antenna. Until then wireless radiating transmitting and receiving elements were known simply as "terminals". Because of his prominence, Marconi's use of the word antenna spread among wireless researchers and enthusiasts, and later to the general public.

Antenna may refer broadly to an entire assembly including support structure, enclosure (if any), etc., in addition to the actual RF current-carrying components. A receiving antenna may include not only the passive metal receiving elements, but also an integrated preamplifier or mixer, especially at and above microwave frequencies.

Additional Information

antenna, component of radio, television, and radar systems that directs incoming and outgoing radio waves. Antennas are usually metal and have a wide variety of configurations, from the mastlike devices employed for radio and television broadcasting to the large parabolic reflectors used to receive satellite signals and the radio waves generated by distant astronomical objects.

The first antenna was devised by the German physicist Heinrich Hertz. During the late 1880s he carried out a landmark experiment to test the theory of the British mathematician-physicist James Clerk Maxwell that visible light is only one example of a larger class of electromagnetic effects that could pass through air (or empty space) as a succession of waves. Hertz built a transmitter for such waves consisting of two flat, square metallic plates, each attached to a rod, with the rods in turn connected to metal spheres spaced close together. An induction coil connected to the spheres caused a spark to jump across the gap, producing oscillating currents in the rods. The reception of waves at a distant point was indicated by a spark jumping across a gap in a loop of wire.

The Italian physicist Guglielmo Marconi, the principal inventor of wireless telegraphy, constructed various antennas for both sending and receiving, and he also discovered the importance of tall antenna structures in transmitting low-frequency signals. In the early antennas built by Marconi and others, operating frequencies were generally determined by antenna size and shape. In later antennas frequency was regulated by an oscillator, which generated the transmitted signal.

More powerful antennas were constructed during the 1920s by combining a number of elements in a systematic array. Metal horn antennas were devised during the subsequent decade following the development of waveguides that could direct the propagation of very high-frequency radio signals.

Over the years, many types of antennas have been developed for different purposes. An antenna may be designed specifically to transmit or to receive, although these functions may be performed by the same antenna. A transmitting antenna, in general, must be able to handle much more electrical energy than a receiving antenna. An antenna also may be designed to transmit at specific frequencies. In the United States, amplitude modulation (AM) radio broadcasting, for instance, is done at frequencies between 535 and 1,605 kilohertz (kHz); at these frequencies, a wavelength is hundreds of metres or yards long, and the size of the antenna is therefore not critical. Frequency modulation (FM) broadcasting, on the other hand, is carried out at a range from 88 to 108 megahertz (MHz). At these frequencies a typical wavelength is about 3 metres (10 feet) long, and the antenna must be adjusted more precisely to the electromagnetic wave, both in transmitting and in receiving. Antennas may consist of single lengths of wire or rods in various shapes (dipole, loop, and helical antennas), or of more elaborate arrangements of elements (linear, planar, or electronically steerable arrays). Reflectors and lens antennas use a parabolic dish to collect and focus the energy of radio waves, in much the same way that a parabolic mirror in a reflecting telescope collects light rays. Directional antennas are designed to be aimed directly at the signal source and are used in direction-finding.
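The wavelengths quoted above follow from dividing the speed of light by the frequency. The short Python sketch below reproduces that arithmetic for the AM and FM band edges given in the text. The function name wavelength_m and the band labels are illustrative choices, not part of the original article, and the calculation assumes propagation in free space.

```python
# Speed of light in vacuum, in metres per second (exact SI value).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def wavelength_m(frequency_hz: float) -> float:
    """Return the free-space wavelength in metres for a frequency in hertz.

    Hypothetical helper for illustration: wavelength = c / frequency.
    """
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


# Band edges as stated in the text (U.S. AM and FM broadcasting).
bands = {
    "AM low edge (535 kHz)": 535e3,
    "AM high edge (1,605 kHz)": 1605e3,
    "FM low edge (88 MHz)": 88e6,
    "FM high edge (108 MHz)": 108e6,
}

for label, frequency_hz in bands.items():
    print(f"{label}: {wavelength_m(frequency_hz):.1f} m")
```

Under these assumptions the AM band works out to roughly 187 to 560 metres and the FM band to roughly 2.8 to 3.4 metres, consistent with the "hundreds of metres" and "about 3 metres" figures above.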

More Information

An antenna or aerial is a metal device made to send or receive radio waves. Many electronic devices, such as radios, televisions, radar sets, wireless LAN equipment, cell phones, and GPS receivers, need antennas to do their job. Antennas work both in air and outer space.

The word 'antenna' is from Guglielmo Marconi's test with wireless equipment in 1895. For the test, he used a 2.5-meter-long pole antenna with a tent pole called 'l'antenna centrale' in Italian. So his antenna was simply called 'l'antenna'. After that, the word 'antenna' became popular among people and had the meaning it has today. The plural of antenna is either antennas or antennae (the U.S. and Canada tend to use antennas more than other places).

Types of antennas

Each antenna is made to work for a specific frequency range. The antenna's length or size usually depends on the wavelength it uses, which is inversely proportional to the frequency.
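As a rough illustration of how antenna size tracks wavelength, the Python sketch below computes the length of an ideal half-wavelength element at two example frequencies. The half-wave sizing, the helper name half_wave_length_m, and the example frequencies are assumptions made for illustration and do not come from the text above; practical antennas are typically cut somewhat shorter than this ideal figure.

```python
# Speed of light in vacuum, in metres per second (exact SI value).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def half_wave_length_m(frequency_hz: float) -> float:
    """Ideal free-space half-wavelength in metres (hypothetical helper)."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    wavelength = SPEED_OF_LIGHT_M_PER_S / frequency_hz
    return wavelength / 2.0


# Example frequencies chosen for illustration only.
examples = [("FM radio, 100 MHz", 100e6), ("Wireless LAN, 2.4 GHz", 2.4e9)]

for name, frequency_hz in examples:
    length_cm = half_wave_length_m(frequency_hz) * 100
    print(f"{name}: {length_cm:.1f} cm")
```

The higher the frequency, the shorter the element: about 1.5 metres at 100 MHz but only about 6 centimetres at 2.4 GHz.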

Different kinds of antennas have different purposes. For example, the isotropic radiator is an imaginary antenna that sends signals equally in all directions. The dipole antenna is simply two wires with one end of each wire connected to the radio and the other end standing free in space. It sends or receives signals in all directions except where the wires are pointing. Some antennas are more directional. A horn antenna is used where high gain is needed and the wavelength is short. Satellite television and radio telescopes mostly use dish antennas.


#2332 2024-10-04 16:27:11

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,012

Re: Miscellany

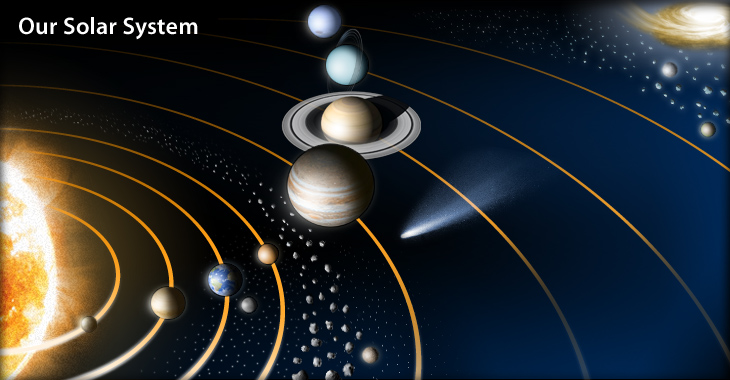

2332) Solar System

Gist

The Solar System is the gravitationally bound system of the Sun and the objects that orbit it. It formed about 4.6 billion years ago when a dense region of a molecular cloud collapsed, forming the Sun and a protoplanetary disc.

Summary

The solar system, assemblage consisting of the Sun—an average star in the Milky Way Galaxy—and those bodies orbiting around it: 8 (formerly 9) planets with more than 210 known planetary satellites (moons); many asteroids, some with their own satellites; comets and other icy bodies; and vast reaches of highly tenuous gas and dust known as the interplanetary medium. The solar system is part of the "observable universe," the region of space that humans can actually or theoretically observe with the aid of technology. Unlike the observable universe, the universe is possibly infinite.