Math Is Fun Forum


#1 Re: Dark Discussions at Cafe Infinity » crème de la crème » Today 00:05:02

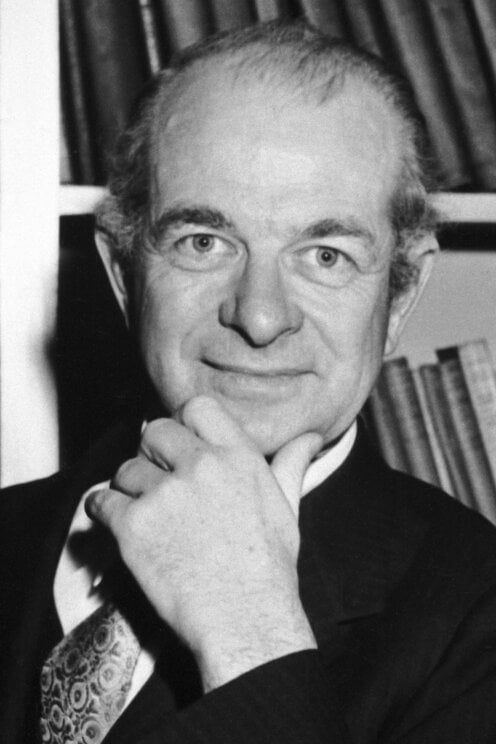

2450) Linus Pauling

Gist:

Life

Linus Pauling was born in Portland, Oregon, in the United States. His family came from a line of Prussian farmers, and Pauling's father worked as a pharmaceuticals salesman, among other things. After first studying at Oregon State University in Corvallis, Oregon, Pauling earned his PhD from the California Institute of Technology in Pasadena, with which he maintained ties for the rest of his career. In the 1950s, Pauling's involvement in the anti-nuclear movement led to his being labeled a suspected communist, which resulted in his passport being revoked at times. Linus and Ava Helen Pauling had four children together.

Work

The development of quantum mechanics during the 1920s had a great impact not only on the field of physics, but also on chemistry. During the 1930s Linus Pauling was among the pioneers who used quantum mechanics to understand and describe chemical bonding, that is, the way atoms join together to form molecules. Pauling worked in a broad range of areas within chemistry. For example, he worked on the structures of biologically important chemical compounds. In 1951 he published the structure of the alpha helix, which is an important basic component of many proteins.

Summary

Linus Carl Pauling (February 28, 1901 – August 19, 1994) was an American chemist and peace activist. He published more than 1,200 papers and books, of which about 850 dealt with scientific topics. New Scientist called him one of the 20 greatest scientists of all time. For his scientific work, Pauling was awarded the Nobel Prize in Chemistry in 1954. For his peace activism, he was awarded the Nobel Peace Prize in 1962. He is one of five people to have won more than one Nobel Prize. Of these, he is the only person to have been awarded two unshared Nobel Prizes, and one of two people to be awarded Nobel Prizes in different fields, the other being Marie Skłodowska-Curie.

Pauling was one of the founders of the fields of quantum chemistry and molecular biology. His contributions to the theory of the chemical bond include the concept of orbital hybridisation and the first accurate scale of electronegativities of the elements. Pauling also worked on the structures of biological molecules, and showed the importance of the alpha helix and beta sheet in protein secondary structure. Pauling's approach combined methods and results from X-ray crystallography, molecular model building, and quantum chemistry. His discoveries inspired the work of James Watson, Francis Crick, Rosalind Franklin, and Maurice Wilkins on the structure of DNA, which in turn made it possible for geneticists to crack the DNA code of all organisms.

In his later years, he promoted nuclear disarmament, as well as orthomolecular medicine, megavitamin therapy, and dietary supplements, especially ascorbic acid (commonly known as Vitamin C). None of his ideas concerning the medical usefulness of large doses of vitamins have gained much acceptance in the mainstream scientific community. He was married to the American human rights activist Ava Helen Pauling.

Details

Linus Pauling (born February 28, 1901, Portland, Oregon, U.S.—died August 19, 1994, Big Sur, California) was an American theoretical physical chemist who became the only person to have won two unshared Nobel Prizes. His first prize (1954) was awarded for research into the nature of the chemical bond and its use in elucidating molecular structure; the second (1962) recognized his efforts to ban the testing of nuclear weapons.

Early life and education

Pauling was the first of three children and the only son of Herman Pauling, a pharmacist, and Lucy Isabelle (Darling) Pauling, a pharmacist’s daughter. After his early education in Condon and Portland, Oregon, he attended Oregon Agricultural College (now Oregon State University), where he met Ava Helen Miller, who would later become his wife, and where he received his Bachelor of Science degree in chemical engineering summa cum laude in 1922. He then attended the California Institute of Technology (Caltech), where Roscoe G. Dickinson showed him how to determine the structures of crystals using X rays. He received his Ph.D. in 1925 for a dissertation derived from his crystal-structure papers. Following a brief period as a National Research Fellow, he received a Guggenheim Fellowship to study quantum mechanics in Europe. He spent most of the 18 months at Arnold Sommerfeld’s Institute for Theoretical Physics in Munich, Germany.

Elucidation of molecular structures

After completing postdoctoral studies, Pauling returned to Caltech in 1927. There he began a long career of teaching and research. Analyzing chemical structure became the central theme of his scientific work. By using the technique of X-ray diffraction, he determined the three-dimensional arrangement of atoms in several important silicate and sulfide minerals. In 1930, during a trip to Germany, Pauling learned about electron diffraction, and upon his return to California he used this technique of scattering electrons from the nuclei of molecules to determine the structures of some important substances. This structural knowledge assisted him in developing an electronegativity scale in which he assigned a number representing a particular atom’s power of attracting electrons in a covalent bond.

To complement the experimental tool that X-ray analysis provided for exploring molecular structure, Pauling turned to quantum mechanics as a theoretical tool. For example, he used quantum mechanics to determine the equivalent strength in each of the four bonds surrounding the carbon atom. He developed a valence bond theory in which he proposed that a molecule could be described by an intermediate structure that was a resonance combination (or hybrid) of other structures. His book The Nature of the Chemical Bond, and the Structure of Molecules and Crystals (1939) provided a unified summary of his vision of structural chemistry.

The arrival of the geneticist Thomas Hunt Morgan at Caltech in the late 1920s stimulated Pauling’s interest in biological molecules, and by the mid-1930s he was performing successful magnetic studies on the protein hemoglobin. He developed further interests in proteins and, together with biochemist Alfred Mirsky, published a paper in 1936 on general protein structure. In this work the authors explained that protein molecules naturally coiled into specific configurations but became “denatured” (uncoiled) and assumed some random form once certain weak bonds were broken.

On one of his trips to visit Mirsky in New York, Pauling met Karl Landsteiner, the discoverer of blood types, who became his guide into the field of immunochemistry. Pauling was fascinated by the specificity of antibody-antigen reactions, and he later developed a theory that accounted for this specificity through a unique folding of the antibody’s polypeptide chain. World War II interrupted this theoretical work, and Pauling’s focus shifted to more practical problems, including the preparation of an artificial substitute for blood serum useful to wounded soldiers and an oxygen detector useful in submarines and airplanes. J. Robert Oppenheimer asked Pauling to head the chemistry section of the Manhattan Project, but his suffering from glomerulonephritis (inflammation of the glomerular region of the kidney) prevented him from accepting this offer. For his outstanding services during the war, Pauling was later awarded the Presidential Medal for Merit.

While collaborating on a report about postwar American science, Pauling became interested in the study of sickle-cell anemia. He perceived that the sickling of cells noted in this disease might be caused by a genetic mutation in the globin portion of the blood cell’s hemoglobin. In 1949 he and his coworkers published a paper identifying the particular defect in hemoglobin’s structure that was responsible for sickle-cell anemia, which thereby made this disorder the first “molecular disease” to be discovered. At that time, Pauling’s article on the periodic law appeared in the 14th edition of Encyclopædia Britannica.

While serving as a visiting professor at the University of Oxford in 1948, Pauling returned to a problem that had intrigued him in the late 1930s: the three-dimensional structure of proteins. By folding a paper on which he had drawn a chain of linked amino acids, he discovered a cylindrical coil-like configuration, later called the alpha helix. The most significant aspect of Pauling’s structure was its determination of the number of amino acids per turn of the helix. During this same period he became interested in deoxyribonucleic acid (DNA), and early in 1953 he and protein crystallographer Robert Corey published their version of DNA’s structure, three strands twisted around each other in ropelike fashion. Shortly thereafter James Watson and Francis Crick published DNA’s correct structure, a double helix. Pauling’s efforts to modify his postulated structure had been hampered by poor X-ray photographs of DNA and by his lack of understanding of this molecule’s wet and dry forms. In 1952 he failed to visit Rosalind Franklin, working in Maurice Wilkins’s laboratory at King’s College, London, and consequently did not see her X-ray pictures of DNA. Franklin’s pictures proved to be the linchpin in allowing Watson and Crick to elucidate the actual structure. Nevertheless, Pauling was awarded the 1954 Nobel Prize for Chemistry “for his research into the nature of the chemical bond and its application to the elucidation of the structure of complex substances.”

Humanitarian activities of Linus Pauling

During the 1950s Pauling and his wife became well known to the public through their crusade to stop the atmospheric testing of nuclear weapons. In 1958 they presented an appeal for a test ban to the United Nations in the form of a document signed by 9,235 scientists from 44 countries. Pauling’s sentiments were also promulgated through his book No More War! (1958), a passionate analysis of the implications of nuclear war for humanity. In 1960 he was called upon to defend his actions regarding a test ban before a congressional subcommittee. By refusing to reveal the names of those who had helped him collect signatures, he risked going to jail, a stand initially condemned but later widely admired. His work on behalf of world peace was recognized with the 1962 Nobel Prize for Peace, awarded on October 10, 1963, the date that the Nuclear Test Ban Treaty went into effect.

Pauling’s Peace Prize generated such antagonism from Caltech administrators that he left the institute in 1963. He became a staff member at the Center for the Study of Democratic Institutions in Santa Barbara, California, where his humanitarian work was encouraged. Although he was able to develop a new model of the atomic nucleus while working at the Center, his desire to perform more experimental research led him to a research professorship at the University of California in San Diego in 1967. There he published a paper on orthomolecular psychiatry that explained how mental health could be achieved by manipulating substances normally present in the body. Two years later he accepted a post at Stanford University, where he worked until 1972.

Later years

While at San Diego and Stanford, Pauling’s scientific interests centred on a particular molecule—ascorbic acid (vitamin C). He examined the published reports about this vitamin and concluded that, when taken in large enough quantities (megadoses), it would help the body fight off colds and other diseases. The outcome of his research was the book Vitamin C and the Common Cold (1970), which became a best-seller. Pauling’s interest in vitamin C in particular and orthomolecular medicine in general led, in 1973, to his founding an institute that eventually bore his name—the Linus Pauling Institute of Science and Medicine. During his tenure at this institute, he became embroiled in controversies about the relative benefits and risks of ingesting megadoses of various vitamins. The controversy intensified when he advocated vitamin C’s usefulness in the treatment of cancer. Pauling and his collaborator, the Scottish physician Ewan Cameron, published their views in Cancer and Vitamin C (1979). Their ideas were subjected to experimental animal studies funded by the institute. While these studies supported their ideas, investigations at the Mayo Clinic involving human cancer patients did not corroborate Pauling’s results.

Although he continued to receive recognition for his earlier accomplishments, Pauling’s later work provoked considerable skepticism and controversy. His cluster model of the atomic nucleus was rejected by physicists, his interpretation of the newly discovered quasicrystals received little support, and his ideas on vitamin C were rejected by the medical establishment. In an effort to raise money to support his increasingly troubled institute, Pauling published How to Live Longer and Feel Better (1986), but the book failed to become the success that he and his associates had anticipated.

Both Pauling and his wife developed cancer. Ava Helen Pauling died of stomach cancer in 1981. Ten years later Pauling discovered that he had prostate cancer. Although he underwent surgery and other treatments, the cancer eventually spread to his liver. He died at his ranch on the Big Sur coast of California.

#2 Re: This is Cool » Miscellany » Today 00:04:02

2513) Neurotransmitters

Gist

Neurotransmitters are endogenous chemical messengers that transmit signals across a synapse from one neuron to another target cell (neuron, muscle, or gland). They are vital for brain function, influencing mood, sleep, memory, and motor control. Over 50 types exist, acting as either excitatory (triggering a response) or inhibitory (inhibiting a response).

Neurotransmitters are often referred to as the body's chemical messengers. They are the molecules used by the nervous system to transmit messages between neurons, or from neurons to muscles.

Neurotransmitters are endogenous chemical messengers that transmit signals across a synapse between neurons, muscles, or gland cells, essential for regulating body functions, emotions, and thoughts. They are classified into amino acids, peptides, monoamines, purines, and gasotransmitters, with over 50 types known. Their action is either excitatory (promoting a signal) or inhibitory (stopping a signal).

Summary

A neurotransmitter is a signaling molecule secreted by a neuron to affect another cell across a synapse. The cell receiving the signal, or target cell, may be another neuron, but could also be a gland or muscle cell.

Neurotransmitters are released from synaptic vesicles into the synaptic cleft where they are able to interact with neurotransmitter receptors on the target cell. Some neurotransmitters are also stored in large dense core vesicles. The neurotransmitter's effect on the target cell is determined by the receptor it binds to. Many neurotransmitters are synthesized from simple and plentiful precursors such as amino acids, which are readily available and often require a small number of biosynthetic steps for conversion.

Neurotransmitters are essential to the function of complex neural systems. The exact number of unique neurotransmitters in humans is unknown, but more than 100 have been identified. Common neurotransmitters include glutamate, GABA, acetylcholine, glycine, dopamine and norepinephrine.

(GABA: gamma-aminobutyric acid).

Details

Neurotransmitters are your body’s chemical messengers. They carry messages from one nerve cell across a space to the next nerve, muscle or gland cell. These messages help you move your limbs, feel sensations, keep your heart beating, and take in and respond to all information your body receives from other internal parts of your body and your environment.

What are neurotransmitters?

Neurotransmitters are chemical messengers that your body can’t function without. Their job is to carry chemical signals (“messages”) from one neuron (nerve cell) to the next target cell. The next target cell can be another nerve cell, a muscle cell or a gland.

Your body has a vast network of nerves (your nervous system) that send and receive electrical signals from nerve cells and their target cells all over your body. Your nervous system controls everything from your mind to your muscles, as well as organ functions. In other words, nerves are involved in everything you do, think and feel. Your nerve cells send and receive information from all body sources. This constant feedback is essential to your body’s optimal function.

What body functions do nerves and neurotransmitters help control?

Your nervous system controls such functions as your:

* Heartbeat and blood pressure.

* Breathing.

* Muscle movements.

* Thoughts, memory, learning and feelings.

* Sleep, healing and aging.

* Stress response.

* Hormone regulation.

* Digestion, sense of hunger and thirst.

* Senses (response to what you see, hear, feel, touch and taste).

How do neurotransmitters work?

You have billions of nerve cells in your body. Nerve cells are generally made up of three parts:

* A cell body. The cell body is vital to producing neurotransmitters and maintaining the function of the nerve cell.

* An axon. The axon carries the electrical signals along the nerve cell to the axon terminal.

* An axon terminal. This is where the electrical message is changed to a chemical signal using neurotransmitters to communicate with the next group of nerve cells, muscle cells or organs.

Neurotransmitters are located in a part of the neuron called the axon terminal. They’re stored within thin-walled sacs called synaptic vesicles. Each vesicle can contain thousands of neurotransmitter molecules.

As a message or signal travels along a nerve cell, the electrical charge of the signal causes the vesicles of neurotransmitters to fuse with the nerve cell membrane at the very edge of the cell. The neurotransmitters, which now carry the message, are then released from the axon terminal into a fluid-filled space that’s between one nerve cell and the next target cell (another nerve cell, muscle cell or gland).

In this space, called the synaptic junction, the neurotransmitters carry the message across a gap less than 40 nanometers (nm) wide (by comparison, the width of a human hair is about 75,000 nm). Each type of neurotransmitter lands on and binds to a specific receptor on the target cell (like a key that can only fit and work in its partner lock). After binding, the neurotransmitter then triggers a change or action in the target cell, like an electrical signal in another nerve cell, a muscle contraction or the release of hormones from a cell in a gland.

What action or change do neurotransmitters transmit to the target cell?

Neurotransmitters transmit one of three possible actions in their messages, depending on the specific neurotransmitter.

* Excitatory. Excitatory neurotransmitters “excite” the neuron and cause it to “fire off the message,” meaning, the message continues to be passed along to the next cell. Examples of excitatory neurotransmitters include glutamate, epinephrine and norepinephrine.

* Inhibitory. Inhibitory neurotransmitters block or prevent the chemical message from being passed along any farther. Gamma-aminobutyric acid (GABA), glycine and serotonin are examples of inhibitory neurotransmitters.

* Modulatory. Modulatory neurotransmitters influence the effects of other chemical messengers. They “tweak” or adjust how cells communicate at the synapse. They also affect a larger number of neurons at the same time.

What happens to neurotransmitters after they deliver their message?

After neurotransmitters deliver their message, the molecules must be cleared from the synaptic cleft (the space between the nerve cell and the next target cell). They do this in one of three ways.

Neurotransmitters:

* Fade away (a process called diffusion).

* Are reabsorbed and reused by the nerve cell that released them (a process called reuptake).

* Are broken down by enzymes within the synapse so they can’t be recognized by or bind to the receptor on the target cell (a process called degradation).

How many different types of neurotransmitters are there?

Scientists know of at least 100 neurotransmitters and suspect there are many others that have yet to be discovered. They can be grouped into types based on their chemical nature. Some of the better-known categories and neurotransmitter examples and their functions include the following:

Amino acid neurotransmitters

These neurotransmitters are involved in most functions of your nervous system.

* Glutamate. This is the most common excitatory neurotransmitter of your nervous system. It’s the most abundant neurotransmitter in your brain. It plays a key role in cognitive functions like thinking, learning and memory. Imbalances in glutamate levels are associated with Alzheimer’s disease, dementia, Parkinson’s disease and seizures.

* Gamma-aminobutyric acid (GABA). GABA is the most common inhibitory neurotransmitter of your nervous system, particularly in your brain. It regulates brain activity to prevent problems in the areas of anxiety, irritability, concentration, sleep, seizures and depression.

* Glycine. Glycine is the most common inhibitory neurotransmitter in your spinal cord. Glycine is involved in controlling hearing processing, pain transmission and metabolism.

Monoamine neurotransmitters

These neurotransmitters play a lot of different roles in your nervous system and especially in your brain. Monoamine neurotransmitters regulate consciousness, cognition, attention and emotion. Many disorders of your nervous system involve abnormalities of monoamine neurotransmitters, and many drugs that people commonly take affect these neurotransmitters.

* Serotonin. Serotonin is an inhibitory neurotransmitter. Serotonin helps regulate mood, sleep patterns, sexuality, anxiety, appetite and pain. Diseases associated with serotonin imbalance include seasonal affective disorder, anxiety, depression, fibromyalgia and chronic pain. Medications that regulate serotonin and treat these disorders include selective serotonin reuptake inhibitors (SSRIs) and serotonin-norepinephrine reuptake inhibitors (SNRIs).

* Histamine. Histamine regulates body functions including wakefulness, feeding behavior and motivation. Histamine plays a role in asthma, bronchospasm, mucosal edema and multiple sclerosis.

* Dopamine. Dopamine plays a role in your body’s reward system, which includes feeling pleasure, achieving heightened arousal and learning. Dopamine also helps with focus, concentration, memory, sleep, mood and motivation. Diseases associated with dysfunctions of the dopamine system include Parkinson’s disease, schizophrenia, bipolar disorder, restless legs syndrome and attention deficit hyperactivity disorder (ADHD). Many highly addictive drugs (cocaine, methamphetamines, amphetamines) act directly on the dopamine system.

* Epinephrine. Epinephrine (also called adrenaline) and norepinephrine (see below) are responsible for your body’s so-called “fight-or-flight response” to fear and stress. These neurotransmitters stimulate your body’s response by increasing your heart rate, breathing, blood pressure, blood sugar and blood flow to your muscles, and by heightening attention and focus so you can act or react to different stressors. Too much epinephrine can lead to high blood pressure, diabetes, heart disease and other health problems. As a drug, epinephrine is used to treat anaphylaxis, asthma attacks, cardiac arrest and severe infections.

* Norepinephrine. Norepinephrine (also called noradrenaline) increases blood pressure and heart rate. It’s most widely known for its effects on alertness, arousal, decision-making, attention and focus. Many medications (stimulants and depression medications) aim to increase norepinephrine levels to improve focus or concentration to treat ADHD or to modulate norepinephrine to improve depression symptoms.

Peptide neurotransmitters

Peptides are polymers or chains of amino acids.

* Endorphins. Endorphins are your body’s natural pain reliever. They play a role in your perception of pain. Release of endorphins reduces pain and causes “feel good” feelings. Low levels of endorphins may play a role in fibromyalgia and some types of headaches.

Acetylcholine

This excitatory neurotransmitter performs a number of functions in your central nervous system (CNS [brain and spinal cord]) and in your peripheral nervous system (nerves that branch from the CNS). Acetylcholine is released by most neurons in your autonomic nervous system, regulating heart rate, blood pressure and gut motility. Acetylcholine plays a role in muscle contractions, memory, motivation, sexual desire, sleep and learning. Imbalances in acetylcholine levels are linked with health issues, including Alzheimer’s disease, seizures and muscle spasms.

Why would a neurotransmitter not work as it should?

Several things can go haywire and lead to neurotransmitters not working as they should. In general, some of these problems include:

* Too much or not enough of one or more neurotransmitters is produced or released.

* The receptor on the receiver cell (the nerve, muscle or gland) isn’t working properly. The otherwise normally functioning neurotransmitter can’t effectively signal the next cell.

* The cell receptors aren’t taking up enough neurotransmitter due to inflammation and damage of the synaptic cleft.

* Neurotransmitters are reabsorbed too quickly.

* Enzymes limit the number of neurotransmitters that reach their target cell.

Problems with other parts of nerves, existing diseases or medications you may be taking can affect neurotransmitters. Also, when neurotransmitters don’t function as they should, disease can happen. For example:

* Not enough acetylcholine can lead to the loss of memory that’s seen in Alzheimer’s disease.

* Too much serotonin is possibly associated with autism spectrum disorders.

* An increase in activity of glutamate or reduced activity of GABA can result in sudden, high-frequency firing of local neurons in your brain, which can cause seizures.

* Too much norepinephrine and dopamine activity and abnormal glutamate transmission contribute to mania.

How do medications affect the action of neurotransmitters?

Scientists recognized the value and the role of neurotransmitters in your nervous system and the importance of developing medications that could influence these chemical messengers to treat many health conditions. Many medications, especially those that treat diseases of your brain, work in many ways to affect neurotransmitters.

Medications can block the enzyme that breaks down a neurotransmitter so that more of it reaches nerve receptors.

Example: Donepezil, galantamine and rivastigmine block the enzyme acetylcholinesterase, which breaks down the neurotransmitter acetylcholine. These medications are used to stabilize and improve memory and cognitive function in people with Alzheimer’s disease, as well as other neurodegenerative disorders.

Medications can block the neurotransmitter from being reabsorbed by the nerve cell that released it.

Example: Selective serotonin reuptake inhibitors are a drug class that blocks serotonin from being reabsorbed by the nerve cell that released it, which leaves more serotonin available in the synapse. These drugs may be helpful in treating depression, anxiety and other mental health conditions.

Medications can block the release of a neurotransmitter from a nerve cell.

Example: Lithium works as a treatment for mania partially by blocking norepinephrine release and is used in the treatment of bipolar disorder.

Additional Information

Neurotransmitters are chemical messengers in the body. Their function is to transmit signals from nerve cells to target cells. These signals help regulate bodily functions ranging from heart rate to appetite.

Neurotransmitters are part of the nervous system. They play a crucial role in human development and many bodily functions.

What is a neurotransmitter?

The nervous system controls the body’s organs and plays a role in nearly all bodily functions. Nerve cells, also known as neurons, and their neurotransmitters play important roles in this system.

Nerve cells fire nerve impulses. They do this by releasing neurotransmitters, also known as the body’s chemical messengers. These chemicals carry signals to other cells.

Neurotransmitters relay their messages by traveling between cells and attaching to specific receptors on target cells.

Each neurotransmitter attaches to a different receptor. For example, dopamine molecules attach to dopamine receptors. When they attach, it triggers an action in the target cells.

After neurotransmitters deliver their messages, the body breaks them down or recycles them.

What do neurotransmitters do?

The brain needs neurotransmitters to regulate many necessary functions, including:

* heart rate

* breathing

* sleep cycles

* digestion

* mood

* concentration

* appetite

* muscle movement

Neurotransmitters also play a role in early human development.

Types of neurotransmitters

Experts have identified over 100 neurotransmitters to date and are still discovering more.

Neurotransmitters have different types of actions:

* Excitatory neurotransmitters encourage a target cell to take action.

* Inhibitory neurotransmitters decrease the chances of the target cell taking action. In some cases, these neurotransmitters have a relaxation-like effect.

* Modulatory neurotransmitters can send messages to many neurons at the same time. They also communicate with other neurotransmitters.

Some neurotransmitters can carry out several functions depending on the type of receptor they connect to.

#3 Jokes » Miscellaneous Jokes - II » Today 00:03:32

- Jai Ganesh

- Replies: 0

Q: Why did the skittles go to school?

A: Because they wanted to be smarties!

* * *

Q: How do you make a fruit punch?

A: Give it boxing lessons.

* * *

Q: Did you hear about the angry pancake?

A: He just flipped.

* * *

Q: Why did the pecans run across the busy road?

A: Because they were nuts!

* * *

Q: What is pink, goes in hard and dry and comes out soft and wet?

A: Bubble Gum.

* * *

#4 Dark Discussions at Cafe Infinity » Come Quotes - XXII » Today 00:02:47

- Jai Ganesh

- Replies: 0

Come Quotes - XXII

1. Nothing else in the world... not all the armies... is so powerful as an idea whose time has come. - Victor Hugo

2. Non-violence, which is the quality of the heart, cannot come by an appeal to the brain. - Mahatma Gandhi

3. When we are born we cry that we are come to this great stage of fools. - William Shakespeare

4. Walk while ye have the light, lest darkness come upon you. - John Ruskin

5. The goal towards which the pleasure principle impels us - of becoming happy - is not attainable: yet we may not - nay, cannot - give up the efforts to come nearer to realization of it by some means or other. - Sigmund Freud

6. When we understand string theory, we will know how the universe began. It won't have much effect on how we live, but it is important to understand where we come from and what we can expect to find as we explore. - Stephen Hawking

7. Let us more and more insist on raising funds of love, of kindness, of understanding, of peace. Money will come if we seek first the Kingdom of God - the rest will be given. - Mother Teresa

8. I balanced all, brought all to mind, the years to come seemed waste of breath, a waste of breath the years behind, in balance with this life, this death. - William Butler Yeats.

#5 This is Cool » River Amazon » Yesterday 17:13:13

- Jai Ganesh

- Replies: 0

River Amazon

Gist

The Amazon River in South America is the world's largest river by discharge volume, carrying more water than the next seven largest independent rivers combined. It is generally considered the second longest (approx. 6,400 km/4,000 miles) after the Nile, though some studies claim it is longer. Originating in the Andes, it traverses Peru, Colombia, and Brazil, emptying into the Atlantic Ocean.

Summary

The Amazon River in South America is the largest river by discharge volume of water in the world, and the longest or second-longest river system in the world, a title which is disputed with the Nile.

The headwaters of the Apurímac River on Nevado Mismi had been considered, for nearly a century, the Amazon basin's most distant source, until a 2014 study found that the most distant source is instead the headwaters of the Mantaro River on the Cordillera Rumi Cruz in Peru. The Mantaro and Apurímac rivers join and, with other tributaries, form the Ucayali River, which in turn meets the Marañón River upstream of Iquitos, Peru, forming what countries other than Brazil consider to be the main stem of the Amazon. Brazilians call this section the Solimões River above its confluence with the Rio Negro; the two join at the Meeting of Waters (Portuguese: Encontro das Águas) at Manaus, the largest city on the river, to form what Brazilians call the Amazon.

The Amazon River has an average discharge of about 215,000–230,000 cubic meters per second (7,600,000–8,100,000 cu ft/s), or approximately 6,591–7,570 cubic kilometers (1,581–1,816 cu mi) per year, greater than the next seven largest independent rivers combined. Two of the top ten rivers by discharge are tributaries of the Amazon River. The Amazon represents 20% of the global riverine discharge into oceans. The Amazon basin is the largest drainage basin in the world, with an area of approximately 7,000,000 square kilometers (2,700,000 sq mi). The portion of the river's drainage basin in Brazil alone is larger than any other river's basin. The Amazon enters Brazil with only one-fifth of the flow it finally discharges into the Atlantic Ocean, yet already has a greater flow at this point than the discharge of any other river in the world. It has a recognized length of 6,400 kilometers (4,000 mi), but according to some reports its length is between 6,575 and 7,062 kilometers (4,086 and 4,388 mi).
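The discharge figures above are quoted in three different units, so a short conversion sketch may help when comparing them. The function names and the 365-day year are assumptions made for illustration and do not come from the original text. Under those assumptions, 215,000–230,000 cubic meters per second works out to roughly 6,780–7,250 cubic kilometers per year, which sits inside the quoted annual range; the endpoints of that quoted range correspond to roughly 209,000 and 240,000 cubic meters per second.

```python
# Assumption: a 365-day year with no leap-day correction.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds
# Approximate number of cubic feet in one cubic meter.
CUBIC_FEET_PER_CUBIC_METER = 35.3147


def m3s_to_km3_per_year(flow_m3s: float) -> float:
    """Convert a flow in cubic meters per second to cubic kilometers per year."""
    cubic_meters_per_year = flow_m3s * SECONDS_PER_YEAR
    return cubic_meters_per_year / 1e9  # 1 km^3 = 1e9 m^3


def m3s_to_cfs(flow_m3s: float) -> float:
    """Convert a flow in cubic meters per second to cubic feet per second."""
    return flow_m3s * CUBIC_FEET_PER_CUBIC_METER


# The two ends of the average discharge range quoted in the text.
for flow in (215_000, 230_000):
    print(
        f"{flow:,} m^3/s = {m3s_to_km3_per_year(flow):,.0f} km^3/yr "
        f"= {m3s_to_cfs(flow):,.0f} cu ft/s"
    )
```

The same helper can be applied to any other discharge figure to put it on the same annual footing.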

Details

The Amazon River is the greatest river of South America and the largest drainage system in the world in terms of the volume of its flow and the area of its basin. The total length of the river, as measured from the headwaters of the Ucayali-Apurímac river system in southern Peru, is at least 4,000 miles (6,400 km), which makes it slightly shorter than the Nile River but still the equivalent of the distance from New York City to Rome. Its westernmost source is high in the Andes Mountains, within 100 miles (160 km) of the Pacific Ocean, and its mouth is in the Atlantic Ocean, on the northeastern coast of Brazil. However, both the length of the Amazon and its ultimate source have been subjects of debate since the mid-20th century, and there are those who claim that the Amazon is actually longer than the Nile.

The vast Amazon basin (Amazonia), the largest lowland in Latin America, has an area of about 2.7 million square miles (7 million square km) and is nearly twice as large as that of the Congo River, the Earth’s other great equatorial drainage system. Stretching some 1,725 miles (2,780 km) from north to south at its widest point, the basin includes the greater part of Brazil and Peru, significant parts of Colombia, Ecuador, and Bolivia, and a small area of Venezuela; roughly two-thirds of the Amazon’s main stream and by far the largest portion of its basin are within Brazil. The Tocantins-Araguaia catchment area in Pará state covers another 300,000 square miles (777,000 square km). Although considered a part of Amazonia by the Brazilian government and in popular usage, it is technically a separate system. It is estimated that about one-fifth of all the water that runs off Earth’s surface is carried by the Amazon. The flood-stage discharge at the river’s mouth is four times that of the Congo and more than 10 times the amount carried by the Mississippi River. This immense volume of fresh water dilutes the ocean’s saltiness for more than 100 miles (160 km) from shore.

The extensive lowland areas bordering the main river and its tributaries, called várzeas (“floodplains”), are subject to annual flooding, with consequent soil enrichment; however, most of the vast basin consists of upland, well above the inundations and known as terra firme. More than two-thirds of the basin is covered by an immense rainforest, which grades into dry forest and savanna on the higher northern and southern margins and into montane forest in the Andes to the west. The Amazon Rainforest, which represents about half of the Earth’s remaining rainforest, also constitutes its single largest reserve of biological resources.

Since the later decades of the 20th century, the Amazon basin has attracted international attention because human activities have increasingly threatened the equilibrium of the forest’s highly complex ecology. Deforestation has accelerated, especially south of the Amazon River and on the piedmont outwash of the Andes, as new highways and air transport facilities have opened the basin to a tidal wave of settlers, corporations, and researchers. Significant mineral discoveries have brought further influxes of population. The ecological consequences of such developments, potentially reaching well beyond the basin and even gaining worldwide importance, have attracted considerable scientific attention.

The first European to explore the Amazon, in 1541, was the Spanish soldier Francisco de Orellana, who gave the river its name after reporting pitched battles with tribes of female warriors, whom he likened to the Amazons of Greek mythology. Although the name Amazon is conventionally employed for the entire river, in Peruvian and Brazilian nomenclature it properly is applied only to sections of it. In Peru the upper main stream (fed by numerous tributaries flowing from sources in the Andes) down to the confluence with the Ucayali River is called Marañón, and from there to the Brazilian border it is called Amazonas. In Brazil the name of the river that flows from Peru to its confluence with the Negro River is Solimões; from the Negro out to the Atlantic the river is called Amazonas.

Additional Information

Occupying much of Brazil and Peru, and also parts of Guyana, Colombia, Ecuador, Bolivia, Suriname, French Guiana, and Venezuela, the Amazon River Basin is the world’s largest drainage system. The Amazon Basin supports the world’s largest rainforest, which accounts for more than half the total volume of rainforests in the world.

The Amazon River, which flows through South America, is the second longest river in the world. It is also the largest river by volume, carrying about one-fifth of all the water that rivers discharge into the oceans. The Amazon River basin holds the largest rainforest in the world, which is home to an incredible diversity of plant and animal life. The Amazon River is also a vital resource for the people of South America, providing food, water, and transportation. However, the Amazon River is facing a number of threats, including deforestation, pollution, and climate change.

Amazon River – Discharge

The Amazon River is the largest river in the world by volume, with a discharge of approximately 209,000 cubic meters per second. This is roughly one-fifth of the total discharge of all the world’s rivers into the oceans. The plume of fresh, sediment-laden water that the river pushes into the Atlantic is large enough to be seen from space.

The Amazon River’s discharge is driven by the rainfall in the Amazon rainforest. The Amazon rainforest is one of the wettest places on Earth, with an average annual rainfall of over 2,000 millimeters (mm). This rainfall creates a large amount of runoff, which flows into the Amazon River.

The Amazon River’s discharge also varies throughout the year. During the wet season, from January to May, the Amazon River’s discharge can reach up to 300,000 cubic meters per second. During the dry season, from June to December, it can drop to as low as 100,000 cubic meters per second.

The Amazon River’s discharge has a significant impact on the environment. The Amazon River’s discharge provides water for the Amazon rainforest, which is home to a vast array of plant and animal life. The Amazon River’s discharge also helps to regulate the climate in the Amazon rainforest.

The Amazon River’s discharge is also important to humans. The Amazon River is a major source of water for drinking, irrigation, and transportation. The Amazon River is also a major source of food, with fish being a staple of the diet of many people who live in the Amazon rainforest.

Key points about the Amazon River’s discharge:

* The plume that the Amazon River’s discharge creates in the Atlantic is large enough to be seen from space.

* The Amazon River’s discharge is greater than that of any other river in the world and accounts for about one-fifth of global river discharge into the oceans.

* The Amazon River’s discharge varies throughout the year, with the highest discharge occurring during the wet season and the lowest discharge occurring during the dry season.

* The Amazon River’s discharge has a significant impact on the environment, providing water for the Amazon rainforest, regulating the climate, and supporting a vast array of plant and animal life.

* The Amazon River’s discharge is also important to humans, providing water for drinking, irrigation, transportation, and food.

Amazon River Basin

The Amazon River Basin is the largest drainage basin in the world, covering an area of approximately 7 million square kilometers (2.7 million square miles). It is located in South America and includes parts of Brazil, Peru, Bolivia, Ecuador, Colombia, and Venezuela. The Amazon River is the main waterway of the basin and is the second longest river in the world, after the Nile River.

Geography

The Amazon River Basin is a vast, lowland region that is covered in dense rainforest. The basin is bordered by the Andes Mountains to the west, the Guiana Highlands to the north, and the Brazilian Highlands to the south. The Amazon River flows from the Andes Mountains in Peru and empties into the Atlantic Ocean near the city of Belém, Brazil.

Climate

The Amazon River Basin has a tropical climate, with high temperatures and abundant rainfall throughout the year. The average temperature in the basin is around 25 degrees Celsius (77 degrees Fahrenheit). The rainy season lasts from December to May, and the dry season lasts from June to November.

Biodiversity

The Amazon River Basin is one of the most biodiverse regions on Earth. It is home to an estimated 10% of the world’s known species, including many endangered species. Some of the most iconic animals of the Amazon River Basin include the jaguar, the giant anteater, the sloth, and the piranha.

Human Activity

The Amazon River Basin is home to a large population of people, including indigenous peoples, settlers, and migrants. The main economic activities in the basin are agriculture, logging, mining, and fishing. However, these activities have also led to environmental problems, such as deforestation, pollution, and climate change.

Conservation

The Amazon River Basin is a vital ecosystem that provides a number of important services, such as regulating the climate, providing food and water, and supporting biodiversity. However, the basin is facing a number of threats, including deforestation, pollution, and climate change. Conservation efforts are underway to protect the Amazon River Basin and its biodiversity.

Examples of Conservation Efforts

* The Brazilian government has created a number of protected areas in the Amazon River Basin, including national parks, wildlife refuges, and sustainable development reserves.

* The Amazon Conservation Association (ACA) is a non-profit organization that works to protect the Amazon River Basin and its biodiversity. The ACA supports sustainable development projects, promotes education and research, and advocates for policies that protect the Amazon.

* The World Wildlife Fund (WWF) is another non-profit organization that works to protect the Amazon River Basin. The WWF supports conservation projects, raises awareness about the importance of the Amazon, and advocates for policies that protect the environment.

These are just a few examples of the many conservation efforts that are underway to protect the Amazon River Basin. By working together, we can help to ensure that this vital ecosystem is preserved for future generations.

#6 Science HQ » Cataract » Yesterday 16:29:12

- Jai Ganesh

- Replies: 0

Cataract

Gist

A cataract is the gradual clouding of the eye's natural lens, usually caused by aging, which results in hazy vision, light sensitivity, and faded colors. Primarily affecting adults over 60, risks include smoking, diabetes, and UV exposure. Treatment requires a safe, 15-30 minute outpatient surgery to replace the cloudy lens with an artificial one, typically allowing quick recovery.

The main cause of cataracts is aging, as proteins in the eye's lens break down and clump together, causing clouding, but other major factors include long-term UV light exposure, smoking, diabetes, eye injuries, and steroid medication use. These risk factors accelerate the natural aging process, leading to vision becoming hazy or cloudy over time.

Summary

A cataract is a clouding of the lens of the eye, which is typically clear. For people who have cataracts, seeing through cloudy lenses is like looking through a frosty or fogged-up window. Clouded vision caused by cataracts can make it more difficult to read, drive a car at night or see the expression on a friend's face.

Most cataracts develop slowly and don't disturb eyesight early on. But with time, cataracts will eventually affect vision.

At first, stronger lighting and eyeglasses can help deal with cataracts. But if impaired vision affects usual activities, cataract surgery might be needed. Fortunately, cataract surgery is generally a safe, effective procedure.

Symptoms

Symptoms of cataracts include:

* Clouded, blurred or dim vision.

* Trouble seeing at night.

* Sensitivity to light and glare.

* Need for brighter light for reading and other activities.

* Seeing "halos" around lights.

* Frequent changes in eyeglass or contact lens prescription.

* Fading or yellowing of colors.

* Double vision in one eye.

At first, the cloudiness in your vision caused by a cataract may affect only a small part of the eye's lens. You may not notice any vision loss. As the cataract grows larger, it clouds more of your lens. More clouding changes the light passing through the lens. This may lead to symptoms you notice more.

When to see a doctor

Make an appointment for an eye exam if you notice any changes in your vision. If you develop sudden vision changes, such as double vision or flashes of light, sudden eye pain, or a sudden headache, see a member of your health care team right away.

Details

A cataract is a cloudy area in the lens of the eye that impairs vision. Cataracts often develop slowly and can affect one or both eyes. Symptoms may include faded colours, blurry or double vision, halos around light, trouble with bright lights, and difficulty seeing at night. This may result in difficulty driving, reading and recognizing faces. Poor vision caused by cataracts may also result in an increased risk of falling and depression. In 2020, cataracts caused 39.6% of all cases of blindness and 28.3% of visual impairment worldwide. Cataracts remain the single most common cause of global blindness.

Cataracts are most commonly due to aging but may also be due to trauma or radiation exposure, be present from birth or occur following eye surgery for other problems. Risk factors include diabetes, longstanding use of corticosteroid medication, smoking tobacco, prolonged exposure to sunlight and alcohol. In addition, poor nutrition, obesity, chronic kidney disease and autoimmune diseases have been recognized in various studies as contributing to the development of cataracts. Cataract formation is primarily driven by oxidative stress, which damages lens proteins, leading to their aggregation and the accumulation of clumps of protein or yellow-brown pigment in the lens. This reduces the transmission of light to the retina at the back of the eye, impairing vision. Additionally, alterations in the lens's metabolic processes, including imbalances in calcium and other ions, contribute to cataract development. Diagnosis is typically through an eye examination, with ophthalmoscopy and slit-lamp examination being the most effective methods. During ophthalmoscopy the pupil is dilated and the red reflex is examined for any opacities in the lens. Slit-lamp examination provides further details on the characteristics, location and extent of the cataract.

Wearing sunglasses with UV protection and a wide-brimmed hat, eating leafy vegetables and fruits, and avoiding smoking may reduce the risk of developing cataracts or slow the process. Early on, the symptoms may be improved with glasses. If this does not help, surgery to remove the cloudy lens and replace it with an artificial lens is the only effective treatment. Cataract surgery is not readily available in many countries. Surgery is needed only if the cataracts are causing problems, and it generally results in an improved quality of life.

About 20 million people worldwide are blind owing to cataracts. They are the cause of approximately 5% of blindness in the United States and nearly 60% of blindness in parts of Africa and South America. Blindness from cataracts occurs in 10 to 40 per 100,000 children in the developing world and 1 to 4 per 100,000 children in the developed world. Cataracts become more common with age. In the United States, cataracts occur in 68% of those over the age of 80 years. They are more common in women and less common in Hispanic and Black people.

Additional Information

A cataract is a cloudy or opaque area in the normally clear lens of the eye. Depending upon its size and location, it can interfere with normal vision.

Most cataracts develop in people over age 55, but they occasionally occur in infants and young children or as a result of trauma or medications. Usually, cataracts develop in both eyes, but one may be worse than the other.

The lens is located inside the eye behind the iris, the colored part of the eye. Normally, the lens focuses light on the retina, which sends the image through the optic nerve to the brain. However, if the lens is clouded by a cataract, light is scattered so the lens can no longer focus it properly, causing vision problems. The lens is made of mostly proteins and water. The clouding of the lens occurs due to changes in the proteins and lens fibers.

Types of cataracts

The lens is composed of layers, like an onion. The outermost is the capsule. The layer inside the capsule is the cortex, and the innermost layer is the nucleus. A cataract may develop in any of these areas. Cataracts are named for their location in the lens:

* A nuclear cataract is located in the center of the lens. The nucleus tends to darken with age, changing from clear to yellow and sometimes brown.

* A cortical cataract affects the layer of the lens surrounding the nucleus. The cataract looks like a wedge or a spoke.

* A posterior capsular cataract is found in the back outer layer of the lens. This type often develops more rapidly.

Causes & risk factors

Most cataracts are due to age-related changes in the lens of the eye that cause it to become cloudy or opaque. However, other factors can contribute to cataract development, including:

* Diabetes mellitus. People with diabetes are at higher risk for cataracts.

* Drugs. Certain medications are associated with cataract development. These include:

** Corticosteroids.

** Chlorpromazine and other phenothiazine related medications.

* Ultraviolet radiation. Studies show an increased chance of cataract formation with unprotected exposure to ultraviolet (UV) radiation.

* Smoking. There is possibly an association between smoking and increased lens cloudiness.

* Alcohol. Several studies show increased cataract formation in patients with higher alcohol consumption compared with people who have lower or no alcohol consumption.

* Nutritional deficiency. Although the results are inconclusive, studies suggest an association between cataract formation and low levels of antioxidants (for example, vitamin C, vitamin E, and carotenoids). Further studies may show that antioxidants can help decrease cataract development.

* Family History. If a close relative has had cataracts, there is a higher chance of developing a cataract.

Rarely, cataracts are present at birth or develop shortly after. They may be inherited or develop due to an infection (such as rubella) in the mother during pregnancy. A cataract may also develop following an eye injury or surgery for another eye problem, such as glaucoma.

Symptoms

Cataracts generally form very slowly. Signs and symptoms of a cataract may include:

* Blurred or hazy vision.

* Reduced intensity of colors.

* Increased sensitivity to glare from lights, particularly when driving at night.

* Increased difficulty seeing at night.

* Change in the eye's refractive error, or eyeglass prescription.

Diagnosis

Cataracts are diagnosed through a comprehensive eye examination. This examination may include:

* Patient history to determine if vision difficulties are limiting daily activities and other general health concerns affecting vision.

* Visual acuity measurement to determine to what extent a cataract may be limiting clear distance and near vision.

* Refraction to determine the need for changes in an eyeglass or contact lens prescription.

* Evaluation of the lens under high magnification and illumination to determine the extent and location of any cataracts.

* Evaluation of the retina of the eye through a dilated pupil.

* Measurement of pressure within the eye.

* Supplemental testing for color vision and glare sensitivity.

Further testing may be needed to determine how much the cataract is affecting vision and to evaluate whether other eye diseases may limit vision following cataract surgery.

Using the information from these tests, your doctor of optometry can determine if you have cataracts and advise you on your treatment options.

Treatment

Cataract treatment is based on the level of visual impairment they cause. If a cataract minimally affects vision, or not at all, no treatment may be needed. Patients may be advised to monitor for increased visual symptoms and follow a regular check-up schedule.

In some cases, changing the eyeglass prescription may provide temporary vision improvement. In addition, anti-glare coatings on eyeglass lenses can help reduce glare for night driving. Increasing the amount of light used when reading may be beneficial.

When a cataract progresses to the point that it affects a person's ability to do normal everyday tasks, surgery may be needed. Cataract surgery involves removing the lens of the eye and replacing it with an artificial lens. The artificial lens requires no care and can significantly improve vision. Some artificial lenses have the natural focusing ability of a young healthy lens. Once a cataract is removed, it cannot grow back.

Two approaches to cataract surgery are generally used:

* Small-incision cataract surgery involves making an incision in the side of the cornea (the clear outer covering of the eye) and inserting a tiny probe into the eye. The probe emits ultrasound waves that soften and break up the lens so it can be suctioned out. This process is called phacoemulsification.

* Extracapsular surgery requires a somewhat larger incision in the cornea so that the lens core can be removed in one piece. The natural lens is replaced by a clear plastic lens called an intraocular lens (IOL). When implanting an IOL is not possible because of other eye problems, contact lenses and, in some cases, eyeglasses may be an option for vision correction.

As with any surgery, cataract surgery has risks from infection and bleeding. Cataract surgery also slightly increases the risk of retinal detachment. It is important to discuss the benefits and risks of cataract surgery with your eye care providers. Other eye conditions may increase the need for cataract surgery or prevent a person from being a cataract surgery candidate.

Cataract surgery is one of the safest and most effective types of surgery performed in the United States today. Approximately 90% of cataract surgery patients report better vision following the surgery.

Prevention

There is no treatment to prevent or slow cataract progression. In age-related cataracts, changes in vision can be very gradual. Some people may not initially recognize the visual changes. However, as cataracts worsen, vision symptoms increase.

While there are no clinically proven approaches to preventing cataracts, simple preventive strategies include:

* Reducing exposure to sunlight through UV-blocking lenses.

* Decreasing or stopping smoking.

* Increasing antioxidant vitamin consumption by eating more leafy green vegetables and taking nutritional supplements.

Researchers have linked eye-friendly nutrients such as lutein and zeaxanthin, vitamin C, vitamin E and zinc to reducing the risk of certain eye diseases, including cataracts.

#7 Re: Jai Ganesh's Puzzles » General Quiz » Yesterday 15:55:06

Hi,

#10783. What does the term in Geography Demilitarized zone mean?

#10784. What does the term in Geography Demography mean?

#8 Re: Jai Ganesh's Puzzles » English language puzzles » Yesterday 15:42:13

Hi,

#5979. What does the noun nesting mean?

#5980. What does the noun nether world or netherworld mean?

#9 Re: Jai Ganesh's Puzzles » Doc, Doc! » Yesterday 15:27:15

Hi,

#2586. What does the medical term Endovascular aneurysm repair (EVAR) mean?

#11 Re: Jai Ganesh's Puzzles » Oral puzzles » Yesterday 14:52:40

Hi,

#6365.

#12 Re: Exercises » Compute the solution: » Yesterday 14:13:08

Hi,

2726.

#13 Re: This is Cool » Miscellany » Yesterday 00:03:13

2512) Intraocular Lens

Gist

Intraocular lenses (IOLs) are tiny, artificial, permanent lenses implanted inside the eye to replace a natural lens removed during cataract surgery or to correct refractive errors like myopia, hyperopia, and astigmatism. Made of acrylic or silicone, they restore clear vision by focusing light on the retina without needing maintenance.

Intraocular lenses usually last a lifetime. In cataract surgery, the eye's natural lens, which has become cloudy (a cataract), is removed and replaced with an intraocular lens.

Summary

An intraocular lens (or IOL) is a tiny, artificial lens for the eye. It replaces the eye's natural lens that is removed during cataract surgery.

The lens bends (refracts) light rays that enter the eye, helping you to see. Your lens should be clear. But if you have a cataract, your lens has become cloudy. Things look blurry, hazy or less colorful with a cataract. Cataract surgery removes this cloudy lens and replaces it with a clear IOL to improve your vision.

IOLs come in different focusing powers, just like prescription eyeglasses or contact lenses. Your ophthalmologist will measure the length of your eye and the curve of your cornea. These measurements are used to set your IOL's focusing power.
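To give a rough feel for how eye length and corneal curvature can translate into a lens power, here is a minimal sketch using the classic SRK regression formula, P = A - 2.5 L - 0.9 K. This formula is not mentioned in the text above and is brought in purely as an illustration; clinics today generally rely on newer formulas, and the function name, the A-constant and the sample measurements below are all hypothetical values, not clinical guidance.

```python
def srk_iol_power(a_constant: float, axial_length_mm: float, mean_k_diopters: float) -> float:
    """Estimate IOL power in diopters with the classic SRK regression formula.

    a_constant       -- lens-specific constant supplied by the manufacturer
    axial_length_mm  -- measured length of the eye in millimeters
    mean_k_diopters  -- average corneal power (keratometry) in diopters
    """
    return a_constant - 2.5 * axial_length_mm - 0.9 * mean_k_diopters


# Hypothetical example values, chosen only to illustrate the arithmetic.
power = srk_iol_power(a_constant=118.4, axial_length_mm=23.5, mean_k_diopters=43.5)
print(f"Estimated IOL power: {power:.2f} D")
```

The sketch shows the general direction of the relationship: a longer eye or a steeper cornea both lower the lens power that the formula suggests.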

What are IOLs made of?

Most IOLs are made of silicone, acrylic, or other plastic compositions. They are also coated with a special material to help protect your eyes from the sun's harmful ultraviolet (UV) rays.

Monofocal IOLs

The most common type of lens used with cataract surgery is called a monofocal IOL. It has one focusing distance. It is set to focus for up close, medium range or distance vision. Most people have them set for clear distance vision. Then they wear eyeglasses for reading or close work.

Some IOLs have different focusing powers within the same lens. These are called presbyopia-correcting IOLs. These IOLs reduce your dependence on glasses by giving you clear vision for more than one set distance.

Multifocal IOLs

These IOLs provide both distance and near focus at the same time. The lens has different zones set at different powers.

Extended depth-of-focus IOLs

Similar to multifocal lenses, extended depth-of-focus (EDOF) lenses sharpen near and far vision, but with only one corrective zone, which “extends” to cover both distances. This may mean less effort to re-focus between distances.

Accommodative IOLs

These lenses move or change shape inside your eye, allowing focusing at different distances.

Toric IOLs

For people with astigmatism, there is an IOL called a toric lens. Astigmatism is a refractive error caused by an uneven curve in your cornea or lens. The toric lens is designed to correct that refractive error.

Details

An intraocular lens (IOL) is a lens implanted in the eye, usually as part of a treatment for cataracts or, as a form of refractive surgery, to correct other vision problems such as near-sightedness (myopia) and far-sightedness (hyperopia). If the natural lens is left in the eye, the IOL is known as phakic; otherwise it is a pseudophakic lens (or false lens). Both kinds of IOLs are designed to provide the same light-focusing function as the natural crystalline lens. IOL implantation can be an alternative to LASIK, but LASIK is not an alternative to an IOL for treatment of cataracts.

IOLs usually consist of a small plastic lens with plastic side struts, called haptics, to hold the lens in place in the capsular bag inside the eye. IOLs were originally made of a rigid material (PMMA), although this has largely been superseded by the use of flexible materials, such as silicone. Most IOLs fitted today are fixed monofocal lenses matched to distance vision. However, other types are available, such as a multifocal intraocular lens that provides multiple-focused vision at far and reading distance, and adaptive IOLs that provide limited visual accommodation. Multifocal IOLs can also be trifocal IOLs or extended depth of focus (EDOF) lenses.

As of 2021, nearly 28 million cataract procedures took place annually worldwide. That is about 75,000 procedures per day globally. The procedure can be done under local or topical anesthesia with the patient awake throughout the operation. The use of a flexible IOL enables the lens to be rolled for insertion into the capsular bag through a very small incision, thus avoiding the need for stitches. This procedure usually takes less than 30 minutes in the hands of an experienced ophthalmologist, and the recovery period is about two to three weeks. After surgery, patients should avoid strenuous exercise or anything else that significantly increases blood pressure. They should visit their ophthalmologists regularly for three weeks to monitor the implants.
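The per-day figure quoted above follows from a simple division of the annual total. As a quick check, the sketch below assumes a 365-day year and uses the rounded annual total of 28 million, which is an upper bound because the text says "nearly"; the variable names are chosen here for illustration only.

```python
# Rounded annual total from the text ("nearly 28 million"), used as an upper bound.
PROCEDURES_PER_YEAR = 28_000_000
DAYS_PER_YEAR = 365  # assumption: no leap-day correction

procedures_per_day = PROCEDURES_PER_YEAR / DAYS_PER_YEAR
print(f"About {procedures_per_day:,.0f} procedures per day")
```

Because the true annual total is somewhat below 28 million, the result is consistent with the rounded "about 75,000 per day" given in the text.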

IOL implantation carries several risks associated with eye surgeries, such as infection, loosening of the lens, lens rotation, inflammation, nighttime halos and retinal detachment. Though IOLs enable many patients to have reduced dependence on glasses, most patients still rely on glasses for certain activities, such as reading. These reading glasses may be avoided in some cases if multifocal IOLs, trifocal IOLs or EDOF lenses are used.

Additional Information

IOLs (intraocular lenses) are clear, artificial lenses that replace your eye’s natural ones. You receive IOLs during cataract surgery and refractive lens exchange. IOL implants correct a range of vision issues, including nearsightedness and age-related farsightedness. They may also help reduce your reliance on glasses for certain types of tasks.

What are IOLs?

IOLs (intraocular lenses) are clear artificial lenses that a healthcare provider will implant in your eye to replace your natural lens. Like glasses or contacts, IOL implants can correct vision issues such as:

* Myopia (nearsightedness).

* Hyperopia (farsightedness).

* Presbyopia (age-related farsightedness).

* Astigmatism (altered eye shape).

IOL implants are permanent, meaning they stay in your eyes for the rest of your life. IOLs help improve your vision and may reduce your reliance on glasses in your daily routine. You receive IOLs during eye lens replacement surgery, most commonly during cataract surgery.

Who needs intraocular lens implants?

You may benefit from IOL implants if you:

* Have cataracts that prevent you from seeing clearly. Virtually everyone undergoing cataract surgery will need to have an IOL implant in order to restore vision.

* Have refractive errors that affect your vision, but you’re not a candidate for LASIK or other vision correction surgeries.

What are the different types of intraocular lenses?

There are many types of IOLs, each with its own pros and cons. The main drawback with some types of IOLs is you’ll still need to wear glasses for some tasks (like reading). Some IOLs can reduce your reliance on glasses, but you may notice side effects like glare around lights at night.

The list below covers some general categories of IOLs. Ask your ophthalmologist about which type of IOL is best for you. They’ll help you customize your IOL selection to suit your vision needs, lifestyle and personal preferences.

Monofocal lenses

This is the type of IOL that most people select. Monofocal lenses have one focusing power. This means they sharpen either your distance, mid-range or close-up vision. Most people set their monofocal lenses for distance vision, which can help with tasks like driving. You’ll probably still need glasses for close-up vision.

Monofocal lenses with monovision

Monofocal IOLs set to monovision are a good option for some people who want to rely less on glasses. Normally, the monofocal IOLs for both of your eyes are set to the same range (like distance). But with monovision, the lens for each eye has a different focusing power. For example, the lens for your right eye might correct for distance, with the lens for your left eye correcting for close-up vision.

With monovision, your eyes work together to help you see both distant and close-up objects. One drawback is that it takes some time to adapt to monovision. Some people can’t adapt to monovision at all. So, before choosing monovision IOLs, your provider may suggest you try monovision contact lenses for a couple of weeks. This allows you to see if this method of correction feels comfortable to you.

Multifocal lenses

Multifocal lenses improve your close-up and distance vision and may reduce your need for glasses. Unlike monofocal lenses, multifocal lenses contain several focal zones. Your brain adjusts to these zones and chooses the focusing power you need for any given task (like driving or reading). You may need some time to adapt to these lenses. But over time, you should be able to rely less on your reading glasses. Some people don’t need glasses at all.

One drawback of multifocal lenses is that you may notice rings or halos around lights, like when driving at night.

Extended depth-of-focus (EDOF) lenses

Unlike multifocal lenses, EDOF lenses contain one long focal point that expands your corrected range of vision and depth of focus. These lenses give you excellent distance vision along with improvements in your mid-range vision (for tasks such as computer use). You may still need to use glasses for close-up tasks like reading.

Accommodative lenses

These lenses are similar to your eyes’ natural lenses in that they adjust their shape to help you see close-up or distant objects. Accommodative lenses are another option to help reduce dependency on glasses. But you may prefer to use glasses if you’re reading or focusing on close-up objects for longer periods of time.

Toric lenses

Toric lenses help people who have astigmatism. These lenses improve how light hits your retina, giving you sharper, clearer vision. Toric lenses are available in monofocal, multifocal, extended depth of focus (EDOF) or accommodative models. They serve to improve the quality of the vision delivered. Toric lenses will help reduce the glare and halo artifacts commonly experienced by people with astigmatism.

Light-adjustable lenses (LALs)

Light-adjustable lenses are different from other IOL options in that your ophthalmologist fine-tunes their corrective power after your lens replacement surgery. They do this through a series of UV light treatment procedures, spaced several days apart. These procedures customize your lens prescription to bring you as close to your desired visual outcome as possible. This is still a type of monofocal lens, so glasses will be necessary for reading or driving.

Phakic lenses

Phakic lenses are typically implanted in younger people to correct nearsightedness when they don’t qualify for laser refractive surgery. Your natural lens is left in place, which helps preserve your natural ability to focus and accommodate. These lenses will eventually have to be removed during cataract surgery but can offer younger people clear vision for a long time.

Which intraocular lens is best for me?

Your ophthalmologist will determine if you would benefit from cataract surgery, or if you would qualify for a refractive lens exchange surgery. They’ll discuss your options and help you decide which IOLs are best for you. They’ll also conduct a thorough eye exam to check your vision and the health of your eyes. They’ll perform some simple, painless tests to measure your eye size and shape, too.

To prepare for a conversation with your ophthalmologist, you should think about your priorities for your IOLs, as well as aspects that aren’t as important to you. It may help to ask yourself the following questions:

* Am I OK wearing glasses sometimes? If so, how often and for what types of tasks?

* What kind of vision is required in my work/profession? Am I OK wearing glasses for these tasks?

* Do I drive often at night? If so, can I adapt to seeing glare and halos around lights when I drive?

* What kind of hobbies and activities do I enjoy the most and how much dependency on glasses am I OK with for these activities?

* What is my budget for surgery?

Most insurance plans cover monofocal lenses, but you may have to pay for other types out of pocket. Be sure to find out the cost of various IOL options before making your final decision.

What are possible issues and complications related to IOL implantation?

Most IOL complications are rare and include:

* Posterior capsular opacification: This is commonly known as a secondary cataract. This happens after many months or years when a film-like material grows behind the implanted lens. This is a normal process that happens after surgery and can be expected to occur over time for almost everyone. The treatment for this is very quick and straightforward and is usually performed using a laser in the office.

* IOL dislocation: This means your IOL shifts from its normal position. You face a higher risk if you have certain eye conditions, like pseudoexfoliation syndrome, or have had trauma or prior eye surgeries. Certain genetic disorders, such as Ehlers-Danlos syndrome and Marfan syndrome, may also raise your risk. In some cases, you may need surgery to reposition or replace the IOL.

* Uveitis-glaucoma-hyphema (UGH) syndrome: UGH syndrome occurs when an IOL irritates your iris and other parts of your eye. This leads to inflammation, raised intraocular pressure and other symptoms. As with IOL dislocation, you may need surgery to reposition or replace the IOL. This is an extremely rare complication that most people don’t experience with routine surgery.

* IOL opacification: This is a clouding of your IOL. Your vision may become less sharp, and you may notice glare around lights. Treatment involves surgery to give you a new IOL. This is extremely uncommon with modern-day IOLs.

* Refractive surprise: A refractive surprise is when your vision after IOL implantation isn’t as sharp as you and your ophthalmologist expected. Your ophthalmologist will suggest a range of solutions. You may decide to accept the vision correction as is and do nothing further. Or you can choose to wear glasses, have laser vision correction (such as LASIK or PRK) or have an IOL replacement surgery.

Talk with your ophthalmologist about possible complications and your level of risk before choosing to have IOLs implanted in your eyes. They’ll tell you what to expect based on your medical history, eye health and other factors. Also, ask them about common side effects associated with cataract surgery or refractive lens exchange. Be sure to get all the information you need to make the decision that’s right for you.

LASIK

LASIK is a laser eye surgery that corrects vision problems. It changes the shape of your cornea to improve how light hits your retina. This improves your vision. About 99% of people have uncorrected vision that’s 20/40 or better after their LASIK surgery. More than 90% end up with 20/20 vision. Dry eye is the most common side effect.

PRK

Photorefractive keratectomy (PRK) is a laser eye surgery similar to LASIK. Unlike LASIK, which involves opening a flap in your cornea, PRK removes the outer layer of your cornea (the epithelium) so that it grows back naturally. That makes it a better laser eye surgery choice for some people who can’t undergo LASIK.

#14 Re: Dark Discussions at Cafe Infinity » crème de la crème » Yesterday 00:02:53

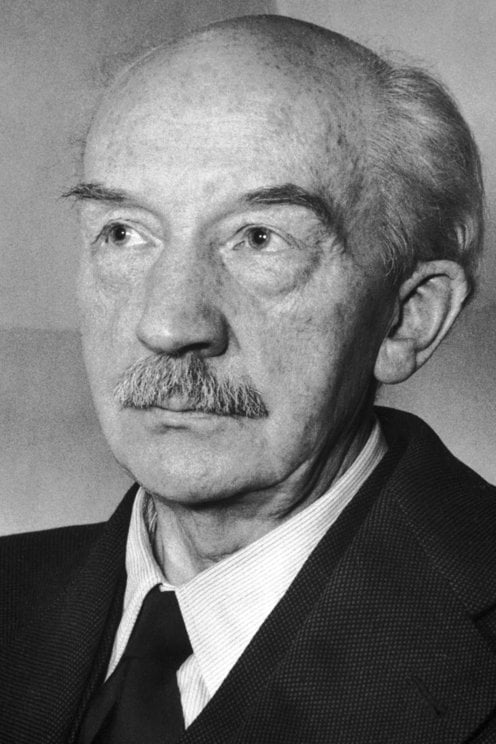

2449) Walther Bothe

Gist:

Work

In a counter tube, particles passing through the tube generate an electric pulse. In 1925 Walther Bothe connected two counter tubes together so that only simultaneous passages were registered. This meant that the passages were caused either by particles that originated from the same event or by a single particle that moved so fast that its travel time between the tubes was negligible. Bothe used the method to show that energy is conserved in impacts between particles and photons and to study cosmic radiation.
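The idea of registering only simultaneous passages can be illustrated in software. The following is a minimal sketch, not a description of Bothe's actual circuit: it assumes each counter tube produces a sorted list of pulse timestamps in seconds, and the function name `count_coincidences` and the `window` parameter are hypothetical names chosen for this illustration.

```python
def count_coincidences(pulses_a, pulses_b, window):
    """Count pulses in A that have a partner pulse in B within `window` seconds.

    Both inputs are assumed to be sorted lists of timestamps (hypothetical data format).
    """
    count = 0
    j = 0
    for t in pulses_a:
        # Skip B pulses that arrived too early to be simultaneous with t.
        while j < len(pulses_b) and pulses_b[j] < t - window:
            j += 1
        # Register a coincidence if the next B pulse falls inside the window.
        if j < len(pulses_b) and abs(pulses_b[j] - t) <= window:
            count += 1
            j += 1  # each B pulse is paired with at most one A pulse
    return count


# Made-up timestamps: two near-simultaneous pairs, the rest unrelated.
tube_a = [0.10, 0.50, 0.90, 1.30]
tube_b = [0.1001, 0.70, 1.2999]
print(count_coincidences(tube_a, tube_b, window=0.001))
```

Pulses seen by only one tube are ignored, which is what lets the method pick out events that involve both counters at once.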

Summary

Walther Bothe (born Jan. 8, 1891, Oranienburg, Ger.—died Feb. 8, 1957, Heidelberg, W.Ger.) was a German physicist who shared the Nobel Prize for Physics in 1954 with Max Born for his invention of a new method of detecting subatomic particles and for other resulting discoveries.

Bothe taught at the universities of Berlin (1920–31), Giessen (1931–34), and Heidelberg (1934–57). In 1925 he and Hans Geiger used two Geiger counters to gather data on the Compton effect—the dependence of the increase in the wavelength of a beam of X rays upon the angle through which the beam is scattered as a result of collision with electrons. Their experiments, which simultaneously measured the energies and directions of single photons and electrons emerging from individual collisions, refuted a statistical interpretation of the Compton effect and definitely established the particle nature of electromagnetic radiation.
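For reference, the angle dependence mentioned above is usually written as the Compton shift formula. The symbols are the standard textbook ones and do not appear elsewhere in this post: $\lambda$ and $\lambda'$ are the wavelengths before and after scattering, $\theta$ is the scattering angle, $h$ is Planck's constant, $m_e$ is the electron mass and $c$ is the speed of light.

$$
\Delta\lambda = \lambda' - \lambda = \frac{h}{m_e c}\,(1 - \cos\theta)
$$

The shift is zero for forward scattering ($\theta = 0$) and largest for backscattering ($\theta = 180^\circ$), where it equals $2h/(m_e c)$.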

With the astronomer Werner Kolhörster, Bothe again applied this coincidence-counting method in 1929 and found that cosmic rays are not composed exclusively of gamma rays, as was previously believed. In 1930 Bothe discovered an unusual radiation emitted by beryllium when it is bombarded with alpha particles. This radiation was later identified by Sir James Chadwick as the neutron.

During World War II Bothe was one of the leaders of German research on nuclear energy. He was responsible for the planning and building of Germany’s first cyclotron, which was completed in 1943.

Details

Walther Wilhelm Georg Bothe (8 January 1891 – 8 February 1957) was a German experimental physicist who shared the 1954 Nobel Prize in Physics with Max Born "for the coincidence method and his discoveries made therewith."

Bothe served in the military during World War I from 1914 and was held as a prisoner of war by the Russians, returning to Germany in 1920. Upon his return to the laboratory, he developed and applied coincidence circuits to the study of nuclear reactions, the Compton effect, cosmic rays, and the wave–particle duality of radiation.

In 1930, Bothe became Full Professor and Director of the Physics Department at the University of Giessen. In 1932, he became Director of the Physical and Radiological Institute at the University of Heidelberg; he was driven out of this position by elements of the Deutsche Physik movement. To preclude his emigration from Germany, he was appointed Director of the Physics Institute of the Kaiser Wilhelm Institute for Medical Research (KWImF) in Heidelberg. There, he built the first operational cyclotron in Germany. Furthermore, he became a principal in the German nuclear energy project, also known as the Uranverein, which was started in 1939 under the supervision of the Army Ordnance Office.

In 1946, in addition to his directorship of the Physics Institute at the KWImF, Bothe was reinstated as a professor at the University of Heidelberg. From 1956 to 1957, he was a member of the Nuclear Physics Working Group in Germany.

In the year after Bothe's death, his Physics Institute at the KWImF was elevated to the status of a new institute under the Max Planck Society and became the Max Planck Institute for Nuclear Physics. Its main building was later named the Bothe Laboratory.

Education

Walther Wilhelm Georg Bothe was born on 8 January 1891 in Oranienburg, Germany, the son of Friedrich Bothe and Charlotte Hartung.