Math Is Fun Forum

You are not logged in.

- Topics: Active | Unanswered

#2251 2024-08-11 15:09:32

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,374

Re: Miscellany

2253) Trade Winds

Gist

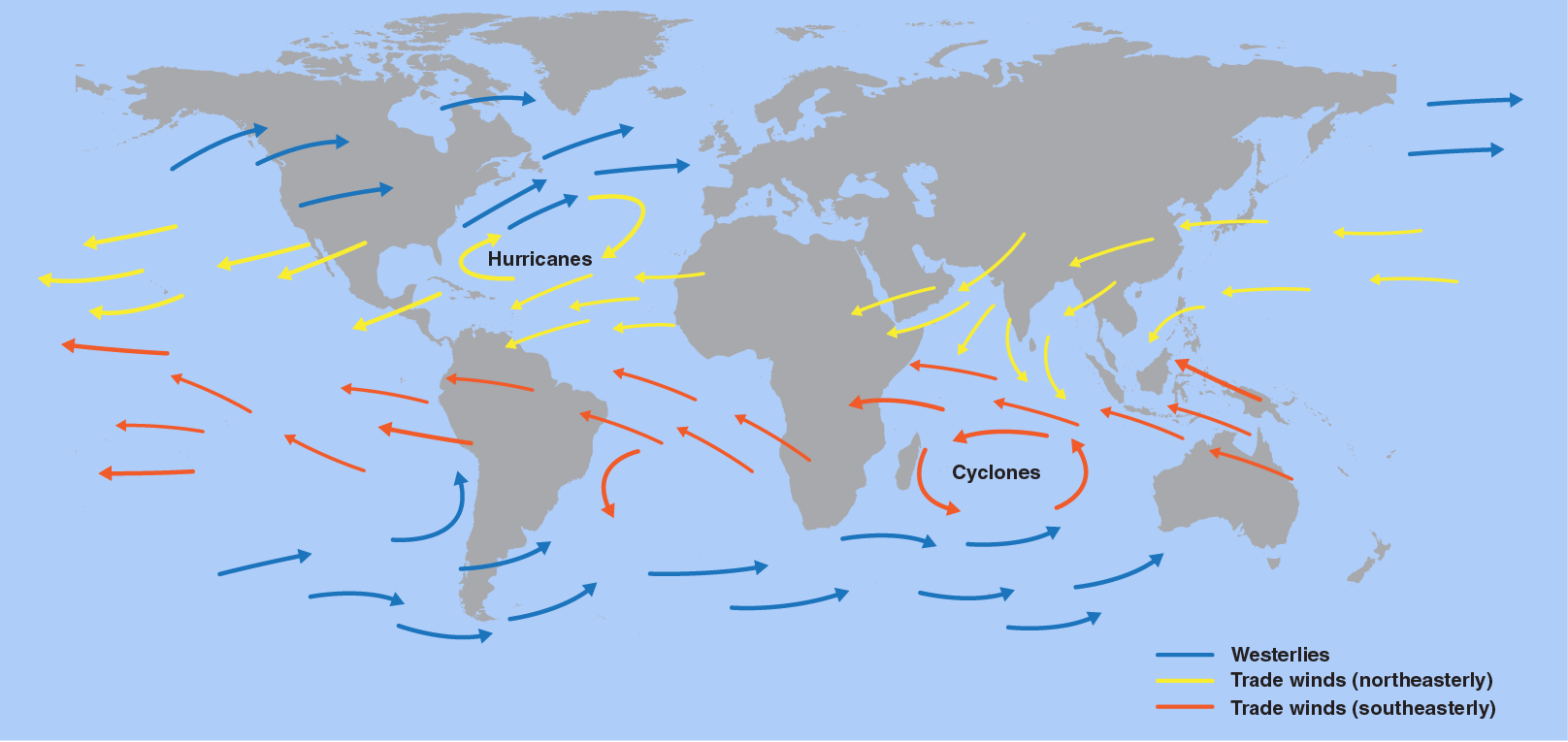

The trade winds are winds that reliably blow east to west just north and south of the equator. The winds help ships travel west, and they can also steer storms such as hurricanes, too.

What Are Trade Winds? Trade winds can be defined as the wind that flows towards the equator from the north-east in the Northern Hemisphere or from the south-east in the Southern Hemisphere. These are also known as tropical easterlies and are known for their consistency in force and direction.

Summary

Trade wind, persistent wind that blows westward and toward the Equator from the subtropical high-pressure belts toward the intertropical convergence zone (ITCZ). It is stronger and more consistent over the oceans than over land and often produces partly cloudy sky conditions, characterized by shallow cumulus clouds, or clear skies that make trade-wind islands popular tourist resorts. Its average speed is about 5 to 6 metres per second (11 to 13 miles per hour) but can increase to speeds of 13 metres per second (30 miles per hour) or more. The trade winds were named by the crews of sailing ships that depended on the winds during westward ocean crossings.

Details

The trade winds or easterlies are permanent east-to-west prevailing winds that flow in the Earth's equatorial region. The trade winds blow mainly from the northeast in the Northern Hemisphere and from the southeast in the Southern Hemisphere, strengthening during the winter and when the Arctic oscillation is in its warm phase. Trade winds have been used by captains of sailing ships to cross the world's oceans for centuries. They enabled European colonization of the Americas, and trade routes to become established across the Atlantic Ocean and the Pacific Ocean.

In meteorology, they act as the steering flow for tropical storms that form over the Atlantic, Pacific, and southern Indian oceans and cause rainfall in North America, Southeast Asia, and Madagascar and East Africa. Shallow cumulus clouds are seen within trade wind regimes and are capped from becoming taller by a trade wind inversion, which is caused by descending air aloft from within the subtropical ridge. The weaker the trade winds become, the more rainfall can be expected in the neighboring landmasses.

The trade winds also transport nitrate- and phosphate-rich Saharan dust to all Latin America, the Caribbean Sea, and to parts of southeastern and southwestern North America. Sahara dust is on occasion present in sunsets across Florida. When dust from the Sahara travels over land, rainfall is suppressed and the sky changes from a blue to a white appearance which leads to an increase in red sunsets. Its presence negatively impacts air quality by adding to the count of airborne particulates.

History

The term originally derives from the early fourteenth century sense of trade (in late Middle English) still often meaning "path" or "track". The Portuguese recognized the importance of the trade winds (then the volta do mar, meaning in Portuguese "turn of the sea" but also "return from the sea") in navigation in both the north and south Atlantic Ocean as early as the 15th century. From West Africa, the Portuguese had to sail away from continental Africa, that is, to west and northwest. They could then turn northeast, to the area around the Azores islands, and finally east to mainland Europe. They also learned that to reach South Africa, they needed to go far out in the ocean, head for Brazil, and around 30°S go east again. (This is because following the African coast southbound means sailing upwind in the Southern hemisphere.) In the Pacific Ocean, the full wind circulation, which included both the trade wind easterlies and higher-latitude westerlies, was unknown to Europeans until Andres de Urdaneta's voyage in 1565.

The captain of a sailing ship seeks a course along which the winds can be expected to blow in the direction of travel. During the Age of Sail, the pattern of prevailing winds made various points of the globe easy or difficult to access, and therefore had a direct effect on European empire-building and thus on modern political geography. For example, Manila galleons could not sail into the wind at all.

By the 18th century, the importance of the trade winds to England's merchant fleet for crossing the Atlantic Ocean had led both the general public and etymologists to identify the name with a later meaning of "trade": "(foreign) commerce". Between 1847 and 1849, Matthew Fontaine Maury collected enough information to create wind and current charts for the world's oceans.

Cause

As part of the Hadley cell, surface air flows toward the equator while the flow aloft is towards the poles. A low-pressure area of calm, light variable winds near the equator is known as the doldrums, near-equatorial trough, intertropical front, or the Intertropical Convergence Zone. When located within a monsoon region, this zone of low pressure and wind convergence is also known as the monsoon trough. Around 30° in both hemispheres, air begins to descend toward the surface in subtropical high-pressure belts known as subtropical ridges. The subsident (sinking) air is relatively dry because as it descends, the temperature increases, but the moisture content remains constant, which lowers the relative humidity of the air mass. This warm, dry air is known as a superior air mass and normally resides above a maritime tropical (warm and moist) air mass. An increase of temperature with height is known as a temperature inversion. When it occurs within a trade wind regime, it is known as a trade wind inversion.

The surface air that flows from these subtropical high-pressure belts toward the Equator is deflected toward the west in both hemispheres by the Coriolis effect. These winds blow predominantly from the northeast in the Northern Hemisphere and from the southeast in the Southern Hemisphere. Because winds are named for the direction from which the wind is blowing, these winds are called the northeasterly trade winds in the Northern Hemisphere and the southeasterly trade winds in the Southern Hemisphere. The trade winds of both hemispheres meet at the Doldrums.

As they blow across tropical regions, air masses heat up over lower latitudes due to more direct sunlight. Those that develop over land (continental) are drier and hotter than those that develop over oceans (maritime), and travel northward on the western periphery of the subtropical ridge. Maritime tropical air masses are sometimes referred to as trade air masses. All tropical oceans except the northern Indian Ocean have extensive areas of trade winds.

Weather and biodiversity effects

Clouds which form above regions within trade wind regimes are typically composed of cumulus which extend no more than 4 kilometres (13,000 ft) in height, and are capped from being taller by the trade wind inversion. Trade winds originate more from the direction of the poles (northeast in the Northern Hemisphere, southeast in the Southern Hemisphere) during the cold season, and are stronger in the winter than the summer. As an example, the windy season in the Guianas, which lie at low latitudes in South America, occurs between January and April. When the phase of the Arctic oscillation (AO) is warm, trade winds are stronger within the tropics. The cold phase of the AO leads to weaker trade winds. When the trade winds are weaker, more extensive areas of rain fall upon landmasses within the tropics, such as Central America.

During mid-summer in the Northern Hemisphere (July), the westward-moving trade winds south of the northward-moving subtropical ridge expand northwestward from the Caribbean Sea into southeastern North America (Florida and Gulf Coast). When dust from the Sahara moving around the southern periphery of the ridge travels over land, rainfall is suppressed and the sky changes from a blue to a white appearance which leads to an increase in red sunsets. Its presence negatively impacts air quality by adding to the count of airborne particulates. Although the Southeast US has some of the cleanest air in North America, much of the African dust that reaches the United States affects Florida. Since 1970, dust outbreaks have worsened due to periods of drought in Africa. There is a large variability in the dust transport to the Caribbean and Florida from year to year. Dust events have been linked to a decline in the health of coral reefs across the Caribbean and Florida, primarily since the 1970s.

Every year, millions of tons of nutrient-rich Saharan dust cross the Atlantic Ocean, bringing vital phosphorus and other fertilizers to depleted Amazon soils.

Additional Information

When you’re outside, you might notice that one day the wind blows one direction and the next day, wind is blowing a different direction. That’s a pretty common occurrence.

However, many winds on Earth are quite predictable. For example, high in the atmosphere, the jet streams typically blow across Earth from west to east. The trade winds are air currents closer to Earth’s surface that blow from east to west near the equator.

The Charles W. Morgan is the last of an American whaling fleet that once numbered more than 2,700 vessels. Ships like the Morgan often used routes defined by the trade winds to navigate the ocean.

Known to sailors around the world, the trade winds and associated ocean currents helped early sailing ships from European and African ports make their journeys to the Americas. Likewise, the trade winds also drive sailing vessels from the Americas toward Asia. Even now, commercial ships use "the trades" and the currents the winds produce to hasten their oceanic voyages.

How do these commerce-friendly winds form?

Between about 30 degrees north and 30 degrees south of the equator, in a region called the horse latitudes, the Earth's rotation causes air to slant toward the equator in a southwesterly direction in the northern hemisphere and in a northwesterly direction in the southern hemisphere. This is called the Coriolis Effect.

The Coriolis Effect, in combination with an area of high pressure, causes the prevailing winds—the trade winds—to move from east to west on both sides of the equator across this 60-degree "belt."

As the wind blows to about five degrees north and south of the equator, both air and ocean currents come to a halt in a band of hot, dry air. This 10-degree belt around Earth's midsection is called the Inter-Tropical Convergence Zone, more commonly known as the doldrums.

Intense solar heat in the doldrums warms and moistens the trade winds, thrusting air upwards into the atmosphere like a hot air balloon. As the air rises, it cools, causing persistent bands of showers and storms in the tropics and rainforests. The rising air masses move toward the poles, then sink back toward Earth's surface near the horse latitudes. The sinking air triggers the calm trade winds and little precipitation, completing the cycle.

Why do the trade winds blow from east to west?

The trade winds blow toward the west partly because of how Earth rotates on its axis. The trade winds begin as warm, moist air from the equator rises in the atmosphere and cooler air closer to the poles sinks.

The trade winds are created by a cycle of warm, moist air rising near the equator. The air eventually cools and sinks a bit further north in the tropics. This phenomenon is called the Hadley cell.

So, if air is cycling from the equator to the poles, why don’t all winds blow north and south? That’s where Earth’s rotation changes things. Because Earth rotates as the air is moving, the winds in the Northern Hemisphere curve to the right and air in the Southern Hemisphere curves to the left.

This phenomenon is called the Coriolis Effect and it’s why the trade winds blow toward the west in both the Northern Hemisphere and Southern Hemisphere. The trade winds can be found about 30 degrees north and south of the equator. Right at the equator there is almost no wind at all—an area sometimes called the doldrums.

Earth's rotation causes the trade winds to curve clockwise in the Northern Hemisphere and counterclockwise in the Southern Hemisphere. The area of almost no wind at the equator is called the doldrums.

How do the doldrums and trade winds affect our weather?

The Sun shines very directly at the equator, creating very intense heat. The heat warms the air and causes some ocean water to evaporate, meaning air in the doldrums becomes warm and moist. This warm, moist air rises in the atmosphere and cools, becoming clouds — and eventually rain and storms — in tropical regions. In the Atlantic Ocean, some of these storms become hurricanes, and the trade winds can steer hurricanes west toward the United States.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Online

#2252 2024-08-12 00:03:11

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,374

Re: Miscellany

2254) National Anthem

Gist

National Anthem is the official song of a country that is played at public events.

Summary

National anthem is a hymn or song expressing patriotic sentiment and either governmentally authorized as an official national hymn or holding that position in popular feeling. The oldest national anthem is Great Britain’s “God Save the Queen,” which was described as a national anthem in 1825, although it had been popular as a patriotic song and used on occasions of royal ceremonial since the mid-18th century.

During the 19th and early 20th centuries, most European countries followed Britain’s example, some national anthems being written especially for the purpose, others being adapted from existing tunes. The sentiments of national anthems vary, from prayers for the monarch to allusions to nationally important battles or uprisings (“The Star-Spangled Banner,” United States; “La Marseillaise,” France) to expressions of patriotic feeling (“O Canada”).

National anthems vary greatly in musical merit, and the verse or text, like the music, has not in every case been written by a national of the country concerned. Changes in politics or international relationships often cause the texts to be altered or a new anthem to be adopted. For example, the U.S.S.R. adopted “Gimn Sovetskogo Soyuza” (“Hymn of the Soviet Union”) as its national anthem in 1944, replacing the communist hymn “L’Internationale,” whose words and music were written in the late 19th century by two French workers.

Few national anthems have been written by poets or composers of renown, a notable exception being the first Austrian national anthem, “Gott erhalte Franz den Kaiser” (“God Save Emperor Francis”), composed by Joseph Haydn in 1797 and later (1929) sung to the text “Sei gesegnet ohne Ende” (“Be Blessed Forever”). Haydn’s melody was also used for the German national anthem “Deutschland, Deutschland über Alles” (“Germany, Germany Above All”), adopted in 1922. Beginning with its third verse, “Einigkeit und Recht und Freiheit” (“Unity and rights and freedom”), it continues in use as the national anthem of Germany, retitled as “Deutschlandlied.” The German national anthem before 1922 had been “Heil dir im Siegerkranz” (“Hail to Thee in Victor’s Garlands”), sung to the melody of “God Save the Queen.” Some authors of national anthems, such as Italy’s Goffredo Mameli, gained renown only as a result of their composition’s national popularity.

Details

A national anthem is a patriotic musical composition symbolizing and evoking eulogies of the history and traditions of a country or nation. The majority of national anthems are marches or hymns in style. American, Central Asian, and European nations tend towards more ornate and operatic pieces, while those in the Middle East, Oceania, Africa, and the Caribbean use a more simplistic fanfare. Some countries that are devolved into multiple constituent states have their own official musical compositions for them (such as with the United Kingdom, Russia, and the Soviet Union); their constituencies' songs are sometimes referred to as national anthems even though they are not sovereign states.

History

In the early modern period, some European monarchies adopted royal anthems. Some of these anthems have survived into current use. "God Save the King/Queen", first performed in 1619, remains the royal anthem of the United Kingdom and the Commonwealth realms. La Marcha Real, adopted as the royal anthem of the Spanish monarchy in 1770, was adopted as the national anthem of Spain in 1939. Denmark retains its royal anthem, Kong Christian stod ved højen mast (1780) alongside its national anthem (Der er et yndigt land, adopted 1835). In 1802, Gia Long commissioned a royal anthem in the European fashion for the Kingdom of Vietnam.

Following the reinstating of La Marseillaise in 1830, in the wake of the July Revolution, as the national anthem of France, it became common for newly formed nations to define national anthems, notably as a result of the Latin American wars of independence, for Argentina (1813), Peru (1821), Brazil (1831) but also Belgium (1830). Consequently, adoption of national anthems prior to the 1930s was mostly by newly formed or newly independent states, such as the First Portuguese Republic (A Portuguesa, 1911), the Kingdom of Greece ("Hymn to Liberty", 1865), the First Philippine Republic (Marcha Nacional Filipina, 1898), Lithuania (Tautiška giesmė, 1919), Weimar Germany (Deutschlandlied, 1922), Ireland (Amhrán na bhFiann, 1926) and Greater Lebanon ("Lebanese National Anthem", 1927). Though the custom of an officially adopted national anthem became popular in the 19th century, some national anthems predate this period, often existing as patriotic songs long before their designation as national anthem.

If an anthem is defined as consisting of both a melody and lyrics, then the oldest national anthem in use today is the national anthem of the Netherlands, the Wilhelmus. Written between 1568 and 1572 during the Dutch Revolt, it was already a popular orangist hymn during the 17th century, though it would take until 1932 for it to be officially recognized as the Dutch national anthem. The lyrics of the Japanese national anthem, Kimigayo, predate those of the Dutch anthem by several centuries, being taken from a Heian period (794–1185) poem, but were not set to music until 1880. If a national anthem is defined by being officially designated as the national song of a particular state, then La Marseillaise, which was officially adopted by the French National Convention in 1796, would qualify as the first official national anthem.

The Olympic Charter of 1920 introduced the ritual of playing the national anthems of the gold medal winners. From this time, the playing of national anthems became increasingly popular at international sporting events, creating an incentive for such nations that did not yet have an officially defined national anthem to introduce one.

The United States introduced the patriotic song The Star-Spangled Banner as a national anthem in 1931. Following this, several nations moved to adopt as official national anthem patriotic songs that had already been in de facto use at official functions, such as Mexico (Mexicanos, al grito de guerra, composed 1854, adopted 1943) and Switzerland ("Swiss Psalm", composed 1841, de facto use from 1961, adopted 1981).

By the period of decolonisation in the 1960s, it had become common practice for newly independent nations to adopt an official national anthem. Some of these anthems were specifically commissioned, such as the anthem of Kenya, Ee Mungu Nguvu Yetu, produced by a dedicated "Kenyan Anthem Commission" in 1963.

A number of nations remain without an official national anthem adopted de iure. In these cases, there are established de facto anthems played at sporting events or diplomatic receptions. These include the United Kingdom (God Save the King) and Sweden (Du gamla, Du fria; the country also has a royal anthem, Kungssangen). Countries that have moved to officially adopt de iure their long-standing de facto anthems since the 1990s include:

Luxembourg (Ons Heemecht, adopted 1993), South Africa (National anthem of South Africa, adopted 1997), Israel (Hatikvah, composed 1888, de facto use from 1948, adopted 2004) and Italy (Il Canto degli Italiani, composed 1847, de facto use from 1946, adopted 2017).

Usage

Star-Spangled Banner with the American flag (ca. 1940s). Anthems used during sign-on and sign-off sequences have become less common due to the increasing prevalence of 24-hour-a-day, seven-day-a-week broadcasting.

National anthems are used in a wide array of contexts. Certain etiquette may be involved in the playing of a country's anthem. These usually involve military honours, standing up, removing headwear etc. In diplomatic situations the rules may be very formal. There may also be royal anthems, presidential anthems, state anthems etc. for special occasions.

They are played on national holidays and festivals, and have also come to be closely connected with sporting events. Wales was the first country to adopt this, during a rugby game against New Zealand in 1905. Since then during sporting competitions, such as the Olympic Games, the national anthem of the gold medal winner is played at each medal ceremony; also played before games in many sports leagues, since being adopted in baseball during World War II.[8] When teams from two nations play each other, the anthems of both nations are played, the host nation's anthem being played last.

In some countries, the national anthem is played to students each day at the start and/or end of school as an exercise in patriotism, such as in Tanzania. In other countries the state anthem may be played in a theatre before a play or in a cinema before a movie. Many radio and television stations have adopted this and play the national anthem when they sign on in the morning and again when they sign off at night. For instance, the national anthem of China is played before the broadcast of evening news on Hong Kong's local television stations including TVB Jade. In Colombia, it is a law to play the National Anthem at 6:00 and 18:00 on every public radio and television station, while in Thailand, "Phleng Chat Thai" is played at 08:00 and 18:00 nationwide (the Royal Anthem is used for sign-ons and closedowns instead). The use of a national anthem outside of its country, however, is dependent on the international recognition of that country. For instance, Taiwan has not been recognized by the International Olympic Committee as a separate nation since 1979 and must compete as Chinese Taipei; its "National Banner Song" is used instead of its national anthem. In Taiwan, the country's national anthem is sung before instead of during flag-rising and flag-lowering, followed by the National Banner Song during the actual flag-rising and flag-lowering. Even within a state, the state's citizenry may interpret the national anthem differently (such as in the United States some view the U.S. national anthem as representing respect for dead soldiers and policemen whereas others view it as honouring the country generally).

Various solutions may be used when countries with different national anthems compete in a unified team. When North Korea and South Korea participated together in the 2018 Winter Olympics, the folk song "Arirang", beloved on both sides of the border and seen as a symbol of Korea as a whole, was used as an anthem instead of the national anthem of either state.

On 21 September 2021, to mark the International Day of Peace, Australian sisters Teresa and Augnes Joy set a world record for singing the national anthems of 193 countries in a hundred languages at an event hosted by the United Nations Association of Australia in Brisbane.

Creators

Most of the best-known national anthems were written by little-known or unknown composers such as Claude Joseph Rouget de Lisle, composer of "La Marseillaise" and John Stafford Smith who wrote the tune for "The Anacreontic Song", which became the tune for the U.S. national anthem, "The Star-Spangled Banner". The author of "God Save the King", one of the oldest and best-known anthems in the world, is unknown and disputed.

Very few countries have a national anthem written by a world-renowned composer. Exceptions include Germany, whose anthem "Das Lied der Deutschen" uses a melody written by Joseph Haydn, and Austria, whose national anthem "Land der Berge, Land am Strome" is sometimes credited to Wolfgang Amadeus Mozart. The music of the "Pontifical Anthem", anthem of the Vatican City, was composed in 1869 by Charles Gounod, for the golden jubilee of Pope Pius IX's priestly ordination. When Armenia was under Soviet rule, its anthem, the "Anthem of the Armenian Soviet Socialist Republic" used a melody by Aram Khachaturian.

The committee charged with choosing a national anthem for the Federation of Malaya (later Malaysia) at independence decided to invite selected composers of international repute to submit compositions for consideration, including Benjamin Britten, William Walton, Gian Carlo Menotti and Zubir Said, who later composed "Majulah Singapura", the national anthem of Singapore. None were deemed suitable. The tune eventually selected was (and still is) the anthem of the constituent state of Perak, which was in turn adopted from a popular French melody titled "La Rosalie" composed by the lyricist Pierre-Jean de Béranger.

A few anthems have words by Nobel laureates in literature. The first Asian laureate, Rabindranath Tagore, wrote the words and music of "Jana Gana Mana" and "Amar Shonar Bangla", later adopted as the national anthems of India and Bangladesh respectively. Bjørnstjerne Bjørnson wrote the lyrics for the Norwegian national anthem "Ja, vi elsker dette landet".

Other countries had their anthems composed by locally important people. This is the case for Colombia, whose anthem's lyrics were written by former president and poet Rafael Nuñez, who also wrote the country's first constitution, and in Malta, written by Dun Karm Psaila, already a National Poet. A similar case is Liberia, the national anthem of which was written by its third president, Daniel Bashiel Warner.

Languages

A national anthem, when it has lyrics (as is usually the case), is most often in the national or most common language of the country, whether de facto or official, there are notable exceptions. Most commonly, states with more than one national language may offer several versions of their anthem, for instance:

* The "Swiss Psalm", the national anthem of Switzerland, has different lyrics for each of the country's four official languages (French, German, Italian and Romansh).

* The national anthem of Canada, "O Canada", has official lyrics in both English and French which are not translations of each other, and is frequently sung with a mixture of stanzas, representing the country's bilingual nature. The song itself was originally written in French.

* "Flower of Scotland", the unofficial national anthem of Scotland, features some words written and spoken in the Scots language

* "The Soldier's Song", the national anthem of Ireland, was originally written and adopted in English, but an Irish translation, although never formally adopted, is nowadays almost always sung instead, even though only 10.5% of Ireland speaks Irish natively.

* The current South African national anthem is unique in that five of the country's eleven official languages are used in the same anthem (the first stanza is divided between two languages, with each of the remaining three stanzas in a different language). It was created by combining two songs together and then modifying the lyrics and adding new ones.

* The former country of Czechoslovakia combined the two national anthems of the two lands; the first stanza consisting of the first stanza of the Czech anthem "Kde domov můj", and the second stanza consisting of the first stanza of the Slovak anthem "Nad Tatrou sa blýska".

* One of the two official national anthems of New Zealand, "God Defend New Zealand", is now commonly sung with the first verse in Māori ("Aotearoa") and the second in English ("God Defend New Zealand"). The tune is the same but the words are not a direct translation of each other.

* "God Bless Fiji" has lyrics in English and Fijian which are not translations of each other. Although official, the Fijian version is rarely sung, and it is usually the English version that is performed at international sporting events.

* Although Singapore has four official languages, with English being the current lingua franca, the national anthem, "Majulah Singapura" is in Malay and, by law, can only be sung with its original Malay lyrics, despite Malay being a minority language in Singapore. This is because Part XIII of the Constitution of the Republic of Singapore declares, "the national language shall be the Malay language and shall be in the Roman script [...]"

* There are several countries that do not have official lyrics to their national anthems. One of these is the "Marcha Real", the national anthem of Spain. Although it originally had lyrics, those lyrics were discontinued after governmental changes in the early 1980s after Francisco Franco's dictatorship ended. In 2007, a national competition to write words was held, but no lyrics were chosen. Other national anthems with no words include "Inno Nazionale della Repubblica", the national anthem of San Marino, that of Bosnia and Herzegovina, that of Russia from 1990 to 2000, and that of Kosovo, entitled "Europe".

* The national anthem of India, "Jana Gana Mana": the official lyrics are in Bengali; they were adapted from a poem written by Rabindranath Tagore.

* Despite the most common language in Wales being English, the unofficial national anthem of Wales, "Hen Wlad Fy Nhadau" is sung in the Welsh language.

* An unofficial national anthem of Finland, "Maamme", was first written in Swedish and only later translated to Finnish. It is nowadays sung in both languages as there is a Swedish speaking minority of about 5% in the country. * * The national anthem of Estonia, "Mu isamaa, mu õnn ja rõõm" has a similar melody with "Maamme", but only with different lyrics and without repeating the second halves of strophes. Finlandia has been repeatedly suggested to be the official national anthem of Finland.

* The national anthem of Pakistan, the "Qaumi Taranah", is unique in that it is entirely in Farsi (Persian) with the exception of one word which is in Urdu, the national language.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Online

#2253 2024-08-12 22:02:10

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,374

Re: Miscellany

2255) Cardamom

Gist

Cardamom has a strong taste, with an aromatic, resinous fragrance. Black cardamom has a more smoky – though not bitter – aroma, with a coolness some consider similar to mint. Green cardamom is one of the most expensive spices by weight, but little is needed to impart flavor.

Cardamom is a spice consisting of whole or ground dried fruits, or seeds, of Elettaria cardamomum, a herbaceous perennial plant of the ginger family (Zingiberaceae). The seeds have a warm, slightly pungent, and highly aromatic flavour somewhat reminiscent of camphor.

Summary

cardamom, spice consisting of whole or ground dried fruits, or seeds, of Elettaria cardamomum, a herbaceous perennial plant of the ginger family (Zingiberaceae). The seeds have a warm, slightly pungent, and highly aromatic flavour somewhat reminiscent of camphor. They are a popular seasoning in South Asian dishes, particularly curries, and in Scandinavian pastries.

Introduced to Europe in the mid-16th century, cardamom bears a name that blends the Greek words for “spice” and “cress.” The name is sometimes mistakenly applied to similar spices in the ginger family, but it properly describes two related varieties of the spice, black and green, the latter being the more common. Black cardamom is aromatic and smoky, whereas green cardamom has a milder flavour.

Physical description

Leafy shoots of the cardamom plant arise 1.5 to 6 metres (5 to 20 feet) from the branching rootstock. Flowering shoots, approximately 1 metre (3 feet) long, may be upright or sprawling; each bears numerous flowers about 5 cm (2 inches) in diameter with greenish petals and a purple-veined white lip. The whole fruit, 0.8 to 1.5 cm, is a green three-sided oval capsule containing 15 to 20 dark, reddish brown to brownish black, hard, angular seeds. The essential oil occurs in large parenchyma cells underlying the epidermis of the seed coat. The essential oil content varies from 2 to 10 percent; its principal components are cineole and α-terpinyl acetate.

Cultivation and processing

Cardamom fruits may be collected from wild plants, native to the moist forests of southern India, but most cardamom is cultivated in India, Sri Lanka, and Guatemala. The fruits are picked or clipped from the stems just before maturity, cleansed, and dried in the sun or in a heated curing chamber. Cardamom may be bleached to a creamy white colour in the fumes of burning sulfur. After curing and drying, the small stems of the capsules are removed by winnowing. Decorticated cardamom consists of husked dried seeds.

Cooking uses and health benefits

The cardamom pod, which contains hard, black seeds, is sometimes added whole to dishes. More commonly, the pods are opened and the seeds are removed, then roasted in an oven or a skillet. These seeds contain the spice’s essential oil, which gives it its flavour and scent, with hints of mint and lemon. The seeds are ground with a mortar and pestle, then added to South Asian foods such as curry and chai. Cardamom is a characteristic ingredient in Middle Eastern cuisine as well. It also figures in pastries, especially in the Scandinavian countries, where it is also used as a flavouring for coffee and tea. The spice mixes well with cinnamon, as well as nutmeg and cloves. It is also an ingredient in the Indian spice blend called garam masala.

Cardamom contains vitamin C, niacin, magnesium, and potassium. Apart from its distinctive flavour, cardamom contains high levels of antioxidants, and it is used in Ayurvedic medicine to treat urinary tract disorders and to lower blood sugar levels. It is also frequently incorporated as an ingredient in homeopathic toothpaste for its antibacterial and breath-freshening qualities. Stronger health claims, such as its efficacy in fighting cancers, lack medical substantiation to date.

Although cardamom is widely cultivated at various elevations in South Asia, most of the world market demand is met by Guatemala, where cardamom was introduced by European coffee planters. It is ranked high among the world’s most expensive spices by weight.

Details

Cardamom, sometimes cardamon or cardamum, is a spice made from the seeds of several plants in the genera Elettaria and Amomum in the family Zingiberaceae. Both genera are native to the Indian subcontinent and Indonesia. They are recognized by their small seed pods: triangular in cross-section and spindle-shaped, with a thin, papery outer shell and small, black seeds; Elettaria pods are light green and smaller, while Amomum pods are larger and dark brown.

Species used for cardamom are native throughout tropical and subtropical Asia. The first references to cardamom are found in Sumer, and in Ayurveda. In the 21st century, it is cultivated mainly in India, Indonesia, and Guatemala.

Etymology

The word cardamom is derived from the Latin cardamōmum, as a Latinisation of the Greek (kardámōmon), a compound of (kárdamon, "cress") and (ámōmon), of unknown origin.

The earliest attested form of the word signifying "cress" is the Mycenaean Greek ka-da-mi-ja, written in Linear B syllabic script, in the list of flavorings on the spice tablets found among palace archives in the House of the Sphinxes in Mycenae.

The modern genus name Elettaria is derived from the root ēlam attested in Dravidian languages.

Types and distribution

The two main types of cardamom are:

* True or green cardamom (or white cardamom when bleached) comes from the species Elettaria cardamomum and is distributed from India to Malaysia. What is often referred to as white cardamon is actually Siam cardamom, Amomum krervanh.

* Black cardamom, also known as brown, greater, large, longer, or Nepal cardamom, comes from the species Amomum subulatum and is native to the eastern Himalayas and mostly cultivated in Eastern Nepal, Sikkim, and parts of Darjeeling district in West Bengal of India, and southern Bhutan.

The two types of cardamom were distinguished in the fourth century BCE by Theophrastus.

Uses

Both forms of cardamom are used as flavorings and cooking spices in both food and drink. E. cardamomum (green cardamom) is used as a spice, a masticatory, or is smoked.

Food and beverage

Cardamom has a strong taste, with an aromatic, resinous fragrance. Black cardamom has a more smoky – though not bitter – aroma, with a coolness some consider similar to mint.

Green cardamom is one of the most expensive spices by weight, but little is needed to impart flavor. It is best stored in the pod, as exposed or ground seeds quickly lose their flavor. Grinding the pods and seeds together lowers both the quality and the price. For recipes requiring whole cardamom pods, a generally accepted equivalent is 10 pods equals 1+1/2 teaspoons (7.4 ml) of ground cardamom.

Cardamom is a common ingredient in Indian cooking. It is also often used in baking in the Nordic countries, in particular in Sweden, Norway, and Finland, where it is used in traditional treats such as the Scandinavian Yule bread Julekake, the Swedish kardemummabullar sweet bun, and Finnish sweet bread pulla. In the Middle East, green cardamom powder is used as a spice for sweet dishes, and as a traditional flavouring in coffee and tea. Cardamom is used to a wide extent in savoury dishes. In some Middle Eastern countries, coffee and cardamom are often ground in a wooden mortar, a mihbaj, and cooked together in a skillet, a mehmas, over wood or gas, to produce mixtures with up to 40% cardamom.

In Asia, both types of cardamom are widely used in both sweet and savoury dishes, particularly in the south. Both are frequent components in such spice mixes as Indian and Nepali masalas and Thai curry pastes. Green cardamom is often used in traditional Indian sweets and in masala chai (spiced tea). Both are also often used as a garnish in basmati rice and other dishes. Individual seeds are sometimes chewed and used in much the same way as chewing gum. It is used by confectionery giant Wrigley; its Eclipse Breeze Exotic Mint packaging indicates the product contains "cardamom to neutralize the toughest breath odors". It is also included in aromatic bitters, gin, and herbal teas.

In Korea, Tavoy cardamom (Wurfbainia villosa var. xanthioides) and red cardamom (Lanxangia tsao-ko) are used in tea called jeho-tang.

Composition

The essential oil content of cardamom seeds depends on storage conditions and may be as high as 8%. The oil is typically 45% α-terpineol, 27% myrcene, 8% limonene, 6% menthone, 3% β-phellandrene, 2% 1,8-cineol, 2% sabinene and 2% heptane. Other sources report the following contents: 1,8-cineol (20 to 50%), α-terpenylacetate (30%), sabinene, limonene (2 to 14%), and borneol.

In the seeds of round cardamom from Java (Wurfbainia compacta), the content of essential oil is lower (2 to 4%), and the oil contains mainly 1,8-cineol (up to 70%) plus β-pinene (16%); furthermore, α-pinene, α-terpineol and humulene are found.

Production

In 2022, world production of cardamom (included with nutmeg and mace for reporting to the United Nations) was 138,888 tonnes, led by India, Indonesia and Guatemala, which together accounted for 85% of the total.

Production practices

According to Nair (2011), in the years when India achieves a good crop, it is still less productive than Guatemala. Other notable producers include Costa Rica, El Salvador, Honduras, Papua New Guinea, Sri Lanka, Tanzania, Thailand, and Vietnam.

Much production of cardamom in India is cultivated on private property or in areas the government leases out to farmers. Traditionally, small plots of land within the forests (called eld-kandies) where the wild or acclimatised plant existed are cleared during February and March. Brushwood is cut and burned, and the roots of powerful weeds are torn up to free the soil. Soon after clearing, cardamom plants spring up. After two years the cardamom plants may have eight-to-ten leaves and reach 30 cm (1 ft) in height. In the third year, they may be 120 cm (4 ft) in height. In the following May or June the ground is again weeded, and by September to November a light crop is obtained. In the fourth year, weeding again occurs, and if the cardamoms grow less than 180 cm (6 ft) apart a few are transplanted to new positions. The plants bear for three or four years; and historically the life of each plantation was about eight or nine years. In Malabar the seasons run a little later than in Mysore, and – according to some reports – a full crop may be obtained in the third year. Cardamoms grown above 600 m (2,000 ft) elevation are considered to be of higher quality than those grown below that altitude.

Plants may be raised from seed or by division of the rhizome. In about a year, the seedlings reach about 30 cm (1 ft) in length, and are ready for transplantation. The flowering season is April to May, and after swelling in August and September, by the first half of October usually attain the desired degree of ripening. The crop is accordingly gathered in October and November, and in exceptionally moist weather, the harvest protracts into December. At the time of harvesting, the scapes or shoots bearing the clusters of fruits are broken off close to the stems and placed in baskets lined with fresh leaves. The fruits are spread out on carefully prepared floors, sometimes covered with mats, and are then exposed to the sun. Four or five days of careful drying and bleaching in the sun is usually enough. In rainy weather, drying with artificial heat is necessary, though the fruits suffer greatly in colour; they are consequently sometimes bleached with steam and sulphurous vapour or with ritha nuts.

The industry is highly labour-intensive, each hectare requiring considerable maintenance throughout the year. Production constraints include recurring climate vagaries, the absence of regular re-plantation, and ecological conditions associated with deforestation.

Cultivation

In 1873 and 1874, Ceylon (now Sri Lanka) exported about 4,100 kg (9,000 lb) each year. In 1877, Ceylon exported 5,039 kg (11,108 lb), in 1879, 8,043 kg (17,732 lb), and in the 1881–82 season, 10,490 kg (23,127 lb). In 1903, 1,600 hectares (4,000 acres) of cardamom growing areas were owned by European planters. The produce of the Travancore plantations was given as 290,000 kg (650,000 lb), or just a little under that of Ceylon. The yield of the Mysore plantations was approximately 91,000 kg (200,000 lb), and the cultivation was mainly in Kadur district. The volume for 1903–04 stated the value of the cardamoms exported to have been Rs. 3,37,000 as compared with Rs. 4,16,000 the previous year. India, which ranks second in world production, recorded a decline of 6.7 percent in cardamom production for 2012–13, and projected a production decline of 30–40% in 2013–14, compared with the previous year due to unfavorable weather. In India, the state of Kerala is by far the most productive producer, with the districts of Idukki, Palakkad and Wynad being the principal producing areas. Given that a number of bureaucrats have personal interests in the industry, in India, several organisations have been set up to protect cardamom producers such as the Cardamom Growers Association (est. 1992) and the Kerala Cardamom Growers Association (est. 1974). Research in India's cardamom plantations began in the 1970s while Kizhekethil Chandy held the office of Chairman of the Cardamom Board. The Kerala Land Reforms Act imposed restrictions on the size of certain agricultural holdings per household to the benefit of cardamom producers.

In 1979–1980, Guatemala surpassed India in worldwide production. Guatemala cultivates Elettaria cardamomum, which is native to the Malabar Coast of India. Alta Verapaz Department produces 70 percent of Guatemala's cardamom. Cardamom was introduced to Guatemala before World War I by the German coffee planter Oscar Majus Kloeffer. After World War II, production was increased to 13,000 to 14,000 tons annually.

The average annual income for a plantation-owning household in 1998 was US$3,408. Although the typical harvest requires over 210 days of labor per year, most cardamom farmers are better off than many other agricultural workers, and there are a significant number of those from the upper strata of society involved in the cultivation process. Increased demand since the 1980s, principally from China, for both Wurfbainia villosa and Lanxangia tsao-ko, has provided a key source of income for poor farmers living at higher altitudes in localized areas of China, Laos, and Vietnam, people typically isolated from many other markets. Laos exports about 400 tonnes annually through Thailand according to the FAO.

Trade

Cardamom production's demand and supply patterns of trade are influenced by price movements, nationally and internationally, in 5 to 6-year cycles. Importing leaders mentioned are Saudi Arabia and Kuwait, while other significant importers include Germany, Iran, Japan, Jordan, Pakistan, Qatar, United Arab Emirates, the UK, and the former USSR. According to the United Nations Conference on Trade and Development, 80 percent of cardamom's total consumption occurs in the Middle East.

In the 19th century, Bombay and Madras were among the principal distributing ports of cardamom. India's exports to foreign countries increased during the early 20th century, particularly to the United Kingdom, followed by Arabia, Aden, Germany, Turkey, Japan, Persia and Egypt. However, some 95% of cardamom produced in India is for domestic purposes, and India is itself by far the most important consuming country for cardamoms in the world. India also imports cardamom from Sri Lanka. In 1903–1904, these imports came to 122,076 kg (269,132 lb), valued at Rs. 1,98,710. In contrast, Guatemala's local consumption is negligible, which supports the exportation of most of the cardamom that is produced. In the mid-1800s, Ceylon's cardamom was chiefly imported by Canada. After saffron and vanilla, cardamom is currently the third most expensive spice, and is used as a spice and flavouring for food and liqueurs.

History

Cardamom has been used in flavorings and food over centuries. During the Middle Ages, cardamom dominated the trade industry. The Arab states played a significant role in the trade of Indian spices, including cardamom. It is now ranked the third most expensive spice following saffron and vanilla.

Cardamom production began in ancient times, and has been referred to in ancient Sanskrit texts as ela. The Babylonians and Assyrians used the spice early on, and trade in cardamom opened up along land routes and by the interlinked Persian Gulf route controlled from Dilmun as early as the third millennium BCE Early Bronze Age, into western Asia and the Mediterranean world.

The ancient Greeks thought highly of cardamom, and the Greek physicians Dioscorides and Hippocrates wrote about its therapeutic properties, identifying it as a digestive aid. Due to demand in ancient Greece and Rome, the cardamom trade developed into a handsome luxury business; cardamom was one of the spices eligible for import tax in Alexandria in 126 CE. In medieval times, Venice became the principal importer of cardamom into the west, along with pepper, cloves and cinnamon, which was traded with merchants from the Levant with salt and meat products.

In China, Amomum was an important part of the economy during the Song Dynasty (960–1279). In 1150, the Arab geographer Muhammad al-Idrisi noted that cardamom was being imported to Aden, in Yemen, from India and China.

The Portuguese became involved in the trade in the 16th century, and the industry gained wide-scale European interest in the 19th century.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Online

#2254 2024-08-13 16:23:11

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,374

Re: Miscellany

2256) Eye Bank

Gist

Eye banks can be defined as “a non-profit organization that obtains, medically evaluates and distributes eye tissue for transplant, research, and education”. Ideally, an eye bank should function autonomously and not be part of a medical organization. Eye banks feature a wide range of functions and specializations.

Summary

While prevention is the most desirable way to control corneal blindness, once a cornea has lost its transparency, a corneal transplant, or graft, is a patient's best chance to regain vision in the affected eye(s). However, the biggest limiting factor is the worldwide shortage of donated corneas.

In low- and middle-income countries, where the magnitude of corneal blindness is greatest, the availability of donated corneas is very low. This is due in large part to the lack of local eye banks. Efforts are under way to develop eye banks of optimal standards in many low- and middle-income countries, with countries like India and Philippines making notable progress. Myanmar, Ethiopia, and Kenya are examples where high quality eye banks have been established. However, this is still not enough to meet the need for corneas.

The Eye Bank of Ethiopia in Addis Ababa has been in existence since 2003. It is associated with Menelik II Referral Hospital, a tertiary referral centre, where most of the transplants are done. The eye bank also sends corneas to two university referral hospitals in northwestern and southern Ethiopia. Between 130 and 150 corneas are harvested (using in situ corneal excision) and used in 90–120 transplants every year. There are five corneal transplant surgeons in Ethiopia. Cornea donation is encouraged in a variety of ways, including media campaigns with well-known personalities such as the president of Ethiopia and athlete Haile Gabreselassi. So far, 6,000 Ethiopians, including Mr Gabreselassi, have pledged their corneas, and next-of-kin consent is being used increasingly. The eye bank is funded by ORBIS International Ethiopia and Addis Ababa City Government Health Bureau; it also raises funds locally. (Elmien Wolvaardt Ellison)

What is an eye bank?

Eye banks are the institutions responsible for collecting (harvesting) and processing donor corneas, and for distributing them to trained corneal graft surgeons. Eye banks are regulated and part of the local health system; they may be attached to a hospital or housed in a separate building.

Cornea harvesting is the surgical removal from a deceased person of either the whole eye (enucleation) or the cornea (in situ corneal excision). This can be done by appropriately trained eye care personnel (eye bank technicians, ophthalmology residents, ophthalmologists, or general practitioners) in a variety of settings, including hospitals, homes, and funeral grounds.

Before harvesting

Corneas can be harvested up to twelve hours after death, but ideally within six hours. The person who will harvest the cornea must first do the following:

* Obtain written consent from the senior next of kin of the deceased.

* Verify the death certificate and ensure there is a stated cause of death.

* Review the donor's medical and social history to ensure they have no contraindications to donation. (This is done by studying medical records, interviewing the physician under whose care the donor was, and interviewing close family members. Each eye bank must have a list of such contraindications, which are available from other well-established eye banks.)

* Obtain information about any blood loss occurred prior to and at time of death, and whether the donor received infusion/transfusion of crystalloids, colloids, and blood; these are used to calculate plasma dilution.

During harvesting

Aseptic methods must be adhered to, including maintaining a sterile field while performing enucleation or in-situ corneal excision. Standard protocols include:

* pen torch examination of the eyes for foreign objects and other defects

* preparing the face and eyes of the donor using povidone iodine

* employing aseptic techniques for in situ corneal excision or enucleation

* immediate preservation of the excised eye or cornea in an appropriate cornea preservation medium

* drawing blood to screen the donor for infectious diseases. Each eye bank must decide the most appropriate serological tests needed but at a minimum they must test for HIV, hepatits B, and syphilis.

Storing donated corneas

Whole eyes can be stored in a moist chamber at two to eight degrees Celsius. This is the simplest and least expensive way to store whole eyes, but the eyes have to be used within 48 hours. Such a storage method may be suitable for some eye banks with limited resources.

Excised corneas can be stored in intermediate-term preservation media, such as McCary Kaufman medium (MK medium) or Optisol, both maintained at four degrees Celsius. Corneas can be stored for 96 hours in the MK medium and ten days in Optisol.

With the availability of MK medium and Optisol, eye banks should ideally switch over from enucleation to in situ corneal excision procedures. This will enable better viability of donated corneas during storage. With increased resistance to the antibiotics used in preservation media, inclusion of alternative antibiotics must be considered.

After corneas reach the eye bank, they are examined using a slit lamp to check for corneal and stromal pathology. The endothelial cell density is also examined by specular microscope; this is necessary as donor corneas with a low number of endothelial cells are likely to fail soon after surgery. The processing of whole eyes must be done within a laminar flow hood maintained in sterile conditions.

The suitability of a cornea for transplantation is assessed by the corneal surgeon, who will consider the donor screening report, slit lamp and specular microscopic results, and serology reports. Following processing and evaluation of corneas and serological testing, transplantable corneas are transported to hospitals individually sealed and packaged, maintaining the cold chain at four degrees Celcius. The vial containing the cornea must be labelled properly with the eye bank name, tissue number, name of the preservative medium, medium lot number, expiry date of the medium, and date and time of the donor's death. The surgeon must also be provided with the donor screening, tissue evaluation, and serology reports. It is important that the eye bank follows a fair and equitable system of tissue distribution.

Standards

Eye banks should develop and adhere to acceptable standards. This reduces the risk that grafts will fail or that infection will be transmitted. It may help to refer to the technical guidelines and acceptable minimum medical standards of the European Eye Banking Association (see Useful Resources, page 38).

Finding donors

Even with an effective eye bank, finding enough people willing to donate their corneas can be difficult.

Public awareness programmes play an important role. They must emphasise that corneas can be donated by anyone, whatever their age, religion, or gender, and that neither enucleation nor in situ corneal excision causes disfigurement of the face or any delays in funeral arrangements. Family pledging is also becoming more important as family consent is usually needed before eyes or corneas can be removed.

Some of these problems may be circumvented by favourable legislation for eye donation, such as a ‘required request' law. This law requires hospital authorities to identify potential cornea donors and obtain consent from bereaved family members. Another law employed in some countries, such as the United States and Ethiopia, is a ‘presumed consent' law. Under this law, every person who dies while in hospital is presumed to be an eye donor unless this is actively rejected by their next of kin.

Hospital cornea retrieval programmes can meet some of the immediate need. In these programmes, trained eye donation counsellors approach family members of the deceased and motivate them to consider eye donation. Training these counsellors in the art of grief counselling assists them in approaching family members at an appropriate time, sharing their grief, and preparing them to take the positive step of giving permission for eye donation on behalf of their loved one.

Details

What is an eye bank? What do eye bankers do?

Eye banks are facilities where eye bankers restore sight and change lives in their local communities and around the world by facilitating cornea donation and providing ocular tissue for transplant, research, and education.

EBAA eye banks are non-profit organizations that obtain, medically evaluate, and distribute ocular tissue for transplant, research, and education. On average, U.S. EBAA eye banks provide tissue for more than 85,000 sight-restoring corneal transplants each year.

EBAA Member eye banks serve all 50 U.S. states, Washington DC, Puerto Rico, and locations around the world. Eye banks can be eye only, eye and tissue, or part of an organ procurement organization, and they vary in the functions that are performed including: recovery, storage, tissue evaluation, donor eligibility determination, processing, and distribution. Besides core functions, many eye banks conduct or provide tissue for innovative research to advance eye banking and sight restoration.

EBAA Medical Standards and Food and Drug Administration (FDA) regulations are the foundation for eye bank procedures, ensuring that all tissue is recovered, processed, and distributed in adherence to approved medical processes and resulting in the healthiest, most high quality tissue with the best possible outcomes for recipients. The majority of EBAA Member eye banks are EBAA Accredited which assures that their processes and facilities have undergone thorough inspection by peer eye bank professionals and corneal surgeons, meeting or exceeding industry standards and regulations.

Eye Bank Structures

Eye banking organizations vary in structure and functions, but typically fall into these categories:

* Eye Bank Only Functions

* Tissue and Eye Bank Functions

* Recovery Centers

* Processing Centers

* Distribution Centers

* Multicenter Eye Banks

What is an Eye Banker?

While roles vary, generally speaking, an eye banker is an individual who works at/for an eye bank, and is committed to the EBAA mission to #RestoreSight worldwide.

Departments/Eye Banking Roles

Eye banks typically consist of the departments outlined below, with eye bankers working in various roles.

Donor Eligibility

Review potential donors’ medical history to verify if the donor is eligible to donate.

Recovery

Procure ocular tissue from deceased donors who have been cleared for donation through first person authorization, or consent from the next of kin.

Evaluation

Evaluate tissue using slit lamp and specular microscopes, as well as other equipment to assess the health and quality of the tissue.

Processing

Perform procedures to prepare the tissue for transplant, this can include testing for microorganisms, preparing the tissue, manipulating or resizing the tissue based on surgical specifications, sterilizing the tissue, or removing adventitious agents.

Distribution

Work with surgical centers, hospitals, and practices, to fulfill requests for tissue. Package and ship tissue for transplant and research.

Quality Assurance

Develop, implement, and monitor quality systems and quality assurance as it relates to regulatory compliance, departmental, and organizational strategic goals and processes.

Hospital Development/ Partner Relations/ Community Outreach

Build and strengthen relationships with the local community, hospitals, hospices, funeral homes, medical examiner/ coroner offices and other third-party organizations.

Family Services

Provide information and resources to donor family members and cornea recipients.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Online

#2255 2024-08-14 00:17:29

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,374

Re: Miscellany

2257) Papaya

Details

The papaya, papaw, or pawpaw is the plant species Carica papaya, one of the 21 accepted species in the genus Carica of the family Caricaceae, and also the name of its fruit. It was first domesticated in Mesoamerica, within modern-day southern Mexico and Central America. It is grown in several countries in regions with a tropical climate. In 2022, India produced 38% of the world's supply of papayas.

Etymology

The word papaya derives from Arawak via Spanish, and is also the name for the plant. The name papaw or pawpaw is used alternatively for the fruit only in some regions.

Description

The papaya is a small, sparsely branched tree, usually with a single stem growing from 5 to 10 m (16 to 33 ft) tall, with spirally arranged leaves confined to the top of the trunk. The lower trunk is conspicuously scarred where leaves and fruit were borne. The leaves are large, 50–70 cm (20–28 in) in diameter, deeply palmately lobed, with seven lobes. All plant parts contain latex in articulated laticifers.

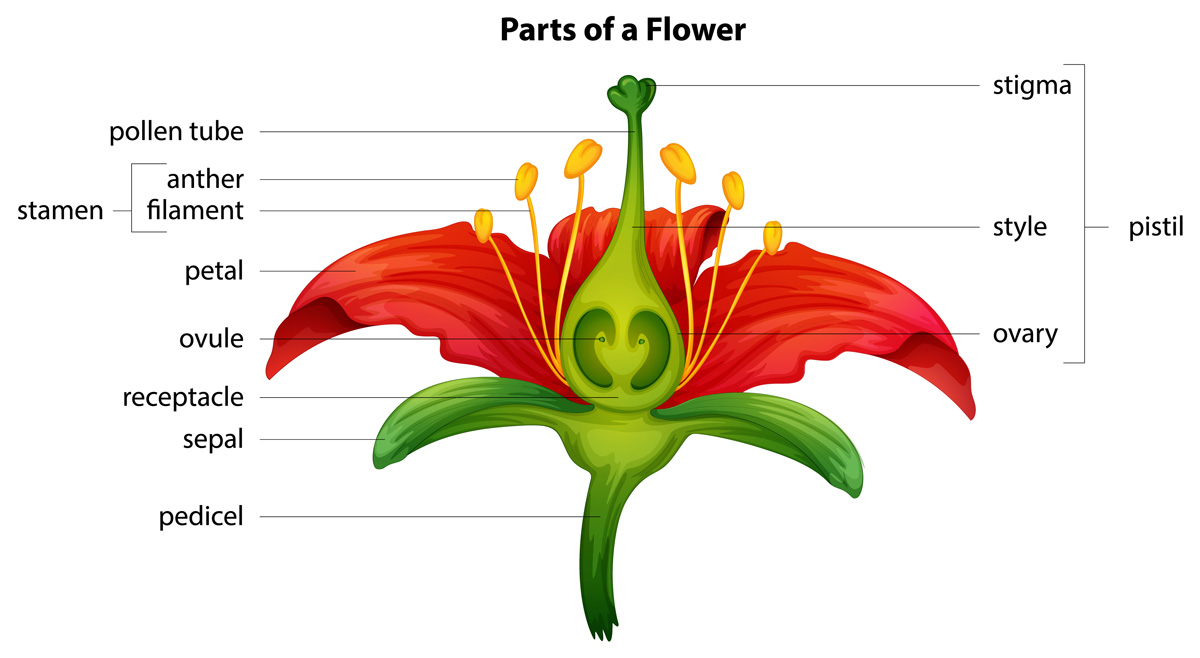

Flowers

Papayas are dioecious. The flowers are five-parted and highly dimorphic; the male flowers have the stamens fused to the petals. There are two different types of papaya flowers. The female flowers have a superior ovary and five contorted petals loosely connected at the base.

Male and female flowers are borne in the leaf axils; the male flowers are in multiflowered dichasia, and the female ones are in few-flowered dichasia. The pollen grains are elongated and approximately 35 microns in length. The flowers are sweet-scented, open at night, and are wind- or insect-pollinated.

Fruit

The fruit is a large berry about 15–45 cm (6–17+3/4 in) long and 10–30 cm (4–11+3/4 in) in diameter. It is ripe when it feels soft (as soft as a ripe avocado or softer), its skin has attained an amber to orange hue and along the walls of the large central cavity are attached numerous black seeds.

Chemistry

Papaya skin, pulp, and seeds contain a variety of phytochemicals, including carotenoids and polyphenols, as well as benzyl isothiocyanates and benzyl glucosinates, with skin and pulp levels that increase during ripening. The carotenoids, lutein and beta-carotene, are prominent in the yellow skin, while lycopene is dominant in the red flesh (table). Papaya seeds also contain the cyanogenic substance prunasin. The green fruit contains papain, a cysteine protease enzyme used to tenderize meat.

Distribution and habitat

Native to tropical America, papaya originates from southern Mexico and Central America. Papaya is also considered native to southern Florida, introduced by predecessors of the Calusa no later than AD 300. Spaniards introduced papaya to the Old World in the 16th century. Papaya cultivation is now nearly pantropical, spanning Hawaii, Central Africa, India, and Australia.

Wild populations of papaya are generally confined to naturally disturbed tropical forests. Papaya is found in abundance on Everglades hammocks following major hurricanes, but is otherwise infrequent. In the rain forests of southern Mexico, papaya thrives and reproduces quickly in canopy gaps while dying off in the mature closed-canopy forests.

Ecology

Viruses

Papaya ringspot virus is a well-known virus within plants in Florida. The first signs of the virus are yellowing and vein-clearing of younger leaves and mottling yellow leaves. Infected leaves may obtain blisters, roughen, or narrow, with blades sticking upwards from the middle of the leaves. The petioles and stems may develop dark green greasy streaks and, in time, become shorter. The ringspots are circular, C-shaped markings that are a darker green than the fruit. In the later stages of the virus, the markings may become gray and crusty. Viral infections impact growth and reduce the fruit's quality. One of the biggest effects that viral infections have on papaya is taste. As of 2010, the only way to protect papaya from this virus is genetic modification.

The papaya mosaic virus destroys the plant until only a small tuft of leaves is left. The virus affects both the leaves of the plant and the fruit. Leaves show thin, irregular, dark-green lines around the borders and clear areas around the veins. The more severely affected leaves are irregular and linear in shape. The virus can infect the fruit at any stage of its maturity. Fruits as young as two weeks old have been spotted with dark-green ringspots about 1 inch (25 mm) in diameter. Rings on the fruit are most likely seen on either the stem end or the blossom end. In the early stages of the ringspots, the rings tend to be many closed circles, but as the disease develops, the rings increase in diameter consisting of one large ring. The difference between the ringspot and the mosaic viruses is the ripe fruit in the ringspot has a mottling of colors, and the mosaic does not.

Fungi and oomycetes

The fungus anthracnose is known to attack papaya, especially mature fruits. The disease starts small with very few signs, such as water-soaked spots on ripening fruits. The spots become sunken, turn brown or black, and may get bigger. In some of the older spots, the fungus may produce pink spores. The fruit ends up being soft and having an off flavor because the fungus grows into the fruit.

The fungus powdery mildew occurs as a superficial white presence on the leaf's surface, which is easily recognized. Tiny, light yellow spots begin on the lower surfaces of the leaf as the disease starts to make its way. The spots enlarge, and white powdery growth appears on the leaves. The infection usually appears at the upper leaf surface as white fungal growth. Powdery mildew is not as severe as other diseases.

The fungus-like oomycete Phytophthora causes damping-off, root rot, stem rot, stem girdling, and fruit rot. Damping-off happens in young plants by wilting and death. The spots on established plants start as white, water-soaked lesions at the fruit and branch scars. These spots enlarge and eventually cause death. The disease's most dangerous feature is the fruit's infection, which may be toxic to consumers. The roots can also be severely and rapidly infected, causing the plant to brown and wilt away, collapsing within days.

Pests

The papaya fruit fly lays its eggs inside of the fruit, possibly up to 100 or more eggs. The eggs usually hatch within 12 days when they begin to feed on seeds and interior parts of the fruit. When the larvae mature, usually 16 days after being hatched, they eat their way out of the fruit, drop to the ground, and pupate in the soil to emerge within one to two weeks later as mature flies. The infected papaya turns yellow and drops to the ground after the papaya fruit fly infestation.

The two-spotted spider mite is a 0.5-mm-long brown or orange-red or a green, greenish-yellow translucent oval pest. They all have needle-like piercing-sucking mouthparts and feed by piercing the plant tissue with their mouthparts, usually on the underside of the plant. The spider mites spin fine threads of webbing on the host plant, and when they remove the sap, the mesophyll tissue collapses, and a small chlorotic spot forms at the feeding sites. The leaves of the papaya fruit turn yellow, gray, or bronze. If the spider mites are not controlled, they can cause the death of the fruit.

The papaya whitefly lays yellow, oval eggs that appear dusted on the undersides of the leaves. They eat papaya leaves, therefore damaging the fruit. There, the eggs developed into flies in three stages called instars. The first instar has well-developed legs and is the only mobile immature life stage. The crawlers insert their mouthparts in the lower surfaces of the leaf when they find it suitable and usually do not move again in this stage. The next instars are flattened, oval, and scale-like. In the final stage, the pupal whiteflies are more convex, with large, conspicuously red eyes.

Papayas are one of the most common hosts for fruit flies like A. suspensa, which lay their eggs in overripe or spoiled papayas. The larvae of these flies then consume the fruit to gain nutrients until they can proceed into the pupal stage. This parasitism has led to extensive economic costs for nations in Central America.

Cultivation

Historical accounts from 18th-century travelers and botanists suggested that papaya seeds were transported from the Caribbean to Malacca and then to India. From Malacca or the Philippines, papaya spread throughout Asia and into the South Pacific region. Credit for introducing papaya to Hawaii is often given to Francisco de Paula Marín, a Spanish explorer and horticulturist, who brought it from the Marquesas Islands in the early 1800s. Since then, papaya cultivation has expanded to all tropical countries and many subtropical regions worldwide. Today, papaya is grown extensively across the globe, owing to its adaptability to various climates and its popularity as a tropical fruit.

Papaya plants grow in three sexes: male, female, and hermaphrodite. The male produces only pollen, never fruit. The female produces small, inedible fruits unless pollinated. The hermaphrodite can self-pollinate since its flowers contain both male stamens and female ovaries. Almost all commercial papaya orchards contain only hermaphrodites.

Originally from southern Mexico (particularly Chiapas and Veracruz), Central America, northern South America, and southern Florida the papaya is now cultivated in most tropical countries. In cultivation, it grows rapidly, fruiting within three years. It is, however, highly frost-sensitive, limiting its production to tropical climates. Temperatures below −2 °C (29 °F) are greatly harmful, if not fatal. In Florida, California, and Texas, growth is generally limited to the southern parts of those states. It prefers sandy, well-drained soil, as standing water can kill the plant within 24 hours.

Cultivars

Two kinds of papayas are commonly grown. One has sweet, red, or orange flesh, and the other has yellow flesh; in Australia, these are called "red papaya" and "yellow papaw," respectively. Either kind, picked green, is called a "green papaya."

The large-fruited, red-fleshed 'Maradol,' 'Sunrise,' and 'Caribbean Red' papayas often sold in U.S. markets are commonly grown in Mexico and Belize.

In 2011, Philippine researchers reported that by hybridizing papaya with Vasconcellea quercifolia, they had developed papaya resistant to papaya ringspot virus (PRV), part of a long line of attempts to transfer resistance from Vasconcellea species into papaya.

Genetically engineered cultivars

Carica papaya was the first transgenic fruit tree to have its genome sequenced. In response to the papaya ringspot virus outbreak in Hawaii in 1998, genetically altered papaya were approved and brought to market (including 'SunUp' and 'Rainbow' varieties.) Varieties resistant to PRV have some DNA of this virus incorporated into the plant's DNA. As of 2010, 80% of Hawaiian papaya plants were genetically modified. The modifications were made by University of Hawaii scientists, who made the modified seeds available to farmers without charge.

In transgenic papaya, resistance is produced by inserting the viral coat protein gene into the plant's genome. Doing so seems to cause a similar protective reaction in the plant to cross-protection, which involves using an attenuated virus to protect against a more dangerous strain. Conventional varieties of transgenic papaya has reduced resistance against heterologous (not closely related to the coat gene source) strains, forcing different localities to develop their own transgenic varieties. As of 2016, one transgenic line appears able to deal with three different heterologous strains in addition to its source.

Production

In 2022, global production of papayas was 13.8 million tonnes, led by India with 38% of the world total. Global papaya production grew significantly over the early 21st century, mainly as a result of increased production in India and demand by the United States. The United States is the largest consumer of papayas worldwide.

In South Africa, papaya orchards yield up to 100 tonnes of fruit per hectare.

Toxicity

Papaya releases a latex fluid when not ripe, possibly causing irritation and an allergic reaction in some people. Because the enzyme papain acts as an allergen in sensitive individuals, meat that has been tenderized with it may induce an allergic reaction.

Culinary use

The ripe fruit of the papaya is usually eaten raw, without skin or seeds. The black seeds are edible and have a sharp, spicy taste. The unripe green fruit is usually cooked due to its latex content.

Both green papaya fruit and its latex are rich in papain, a cysteine protease used for tenderizing meat and other proteins, as practiced currently by indigenous Americans, people of the Caribbean region, and the Philippines. It is included as a component in some powdered meat tenderizers. Papaya is not suitable for foods which set due to gelatin (such as jelly or aspic) because the enzymatic properties of papain prevent gelatin from setting.

Nutrition

Raw papaya pulp contains 88% water, 11% carbohydrates, and negligible fat and protein. In a 100-g amount, papaya fruit provides 43 kilocalories and is a significant source of vitamin C (75% of the Daily Value, DV) and a moderate source of folate (10% DV), but otherwise has a low content of nutrients.

Additional Information

Papayas contain a range of nutrients, such as antioxidants and potassium. Possible health benefits of eating papaya may include reducing the risk of heart disease, diabetes, cancer, and more.

Papaya, papaw, or pawpaw, is a soft, fleshy fruit of the plant species Carica papaya.

This article examines the possible health benefits and uses of papaya fruit, its nutritional value, and how to incorporate more papaya into a diet.

Fast facts on papaya:

* Papaya is native to Mexico. However, it grows naturally in the Caribbean and Florida too.

* According to the Food and Agriculture Organization of the United Nations (FAO), India produces the most papayas – over 5 million tons in 2013.

* It can be added to salads, smoothies, and other dishes.

The nutrients in papaya may have a range of health benefits. They may help protect against a number of health conditions.

Vision and eye health

Some of the organic compounds present in papaya may help prevent inflammation and oxidative stress in age-related eye diseases, such as macular degeneration.

One of these compounds, called lycopene, may help protect the retinal pigment epithelium — a part of the retina essential for healthy vision — against inflammation and oxidative stress.

Papaya also contains carotene, a compound that gives the papaya its distinctive orange color. Carotene has links to vision improvement and the prevention of night blindness.

Zeaxanthin, an antioxidant in papaya, filters out harmful blue light rays. It is thought to play a protective role in eye health and may ward off macular degeneration.

Asthma

Consuming a high amount of fruits and vegetables lowers the risk of developing asthma and can prevent the condition from worsening.

This may be due to dietary components in fruits and vegetables, such as antioxidants, fiber, and vitamin D. These nutrients can assist the immune system’s typical functioning, which over-responds in people with asthma.

One 2022 study also linked a higher intake of carotenes, lycopene, and zeaxanthin to a lower risk of developing asthma in adults. Papaya contains all three of these organic compounds.

A 2017 animal study also found that papaya leaf extract has an anti-inflammatory effect on the airways of mice. However, more research is necessary into the effect of papaya leaf extract on humans.

Cancer

Many of the compounds present in papaya — such as lycopene, zeaxanthin, and lutein, may have anticancer effects.

A 2022 review explained that some studies have shown that lycopene has anticancer properties, particularly against prostate cancer. However, the researchers mentioned more research is necessary to determine the recommended doses.

A 2020 study suggested that zeaxanthin may have a beneficial effect on gastric cancer cells.

Another 2018 study also found that lutein selectively slows the growth of breast cancer cells.

Additionally, eating a diet rich in fruits and vegetables can help reduce the risk of cancer more generally. Generally, plants with the most color — dark green, yellow, red, and orange — have the most nutrients.

Bone health

Papaya is a source of vitamin K. Low intakes of vitamin K have associations with a higher risk of bone fracture.