Math Is Fun Forum

#2301 2024-09-14 17:01:52

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,767

Re: Miscellany

2301) Longitude

Gist

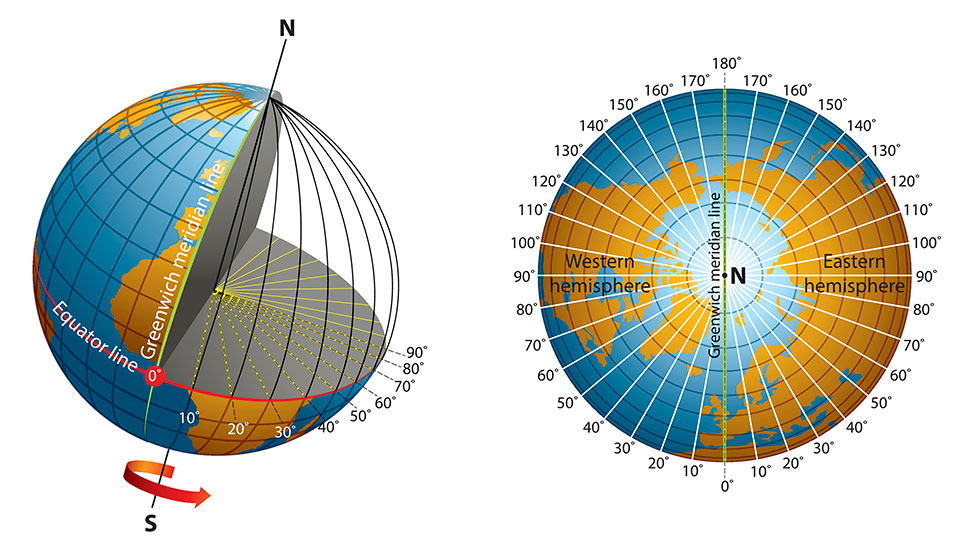

Longitude measures distance east or west of the prime meridian. Lines of longitude, also called meridians, are imaginary lines that divide the Earth. They run north to south from pole to pole, but they measure the distance east or west. Longitude is measured in degrees, minutes, and seconds.

Summary

Longitude is a geographic coordinate that specifies the east–west position of a point on the surface of the Earth, or another celestial body. It is an angular measurement, usually expressed in degrees and denoted by the Greek letter lambda. Meridians are imaginary semicircular lines running from pole to pole that connect points with the same longitude. The prime meridian defines 0° longitude; by convention the International Reference Meridian for the Earth passes near the Royal Observatory in Greenwich, south-east London on the island of Great Britain. Positive longitudes are east of the prime meridian, and negative ones are west.

Because of the Earth's rotation, there is a close connection between longitude and time measurement. Scientifically precise local time varies with longitude: a difference of 15° longitude corresponds to a one-hour difference in local time, due to the differing position in relation to the Sun. Comparing local time to an absolute measure of time allows longitude to be determined. Depending on the era, the absolute time might be obtained from a celestial event visible from both locations, such as a lunar eclipse, or from a time signal transmitted by telegraph or radio. The principle is straightforward, but in practice finding a reliable method of determining longitude took centuries and required the effort of some of the greatest scientific minds.

A location's north–south position along a meridian is given by its latitude, which is approximately the angle between the equatorial plane and the normal from the ground at that location.

Longitude is generally given using the geodetic normal or the gravity direction. The astronomical longitude can differ slightly from the ordinary longitude because of vertical deflection, which is caused by small variations in Earth's gravitational field.

Details

Lines of longitude, also called meridians, are imaginary lines that divide the Earth. They run north to south from pole to pole, but they measure the distance east or west. Longitude is measured in degrees, minutes, and seconds. Although latitude lines are always equally spaced, longitude lines are furthest from each other at the equator and meet at the poles.

Unlike the equator (which is halfway between the Earth’s north and south poles), the prime meridian is an arbitrary line. In 1884, representatives at the International Meridian Conference in Washington, D.C., met to define the meridian that would represent 0 degrees longitude. For its location, the conference chose a line that ran through the telescope at the Royal Observatory in Greenwich, England. At the time, many nautical charts and time zones already used Greenwich as the starting point, so keeping this location made sense. But, if you go to Greenwich with your GPS receiver, you’ll need to walk 102 meters (334 feet) east of the prime meridian markers before your GPS shows 0 degrees longitude. In the 19th century, scientists did not take into account local variations in gravity or the slightly squished shape of the Earth when they determined the location of the prime meridian. Satellite technology, however, allows scientists to more precisely plot meridians so that they are straight lines running north and south, unaffected by local gravity changes. In the 1980s, the International Reference Meridian (IRM) was established as the precise location of 0 degrees longitude. Unlike the prime meridian, the IRM is not a fixed location, but will continue to move as the Earth’s surface shifts.

The prime meridian, which runs through Greenwich, England, has a longitude of 0 degrees. It divides the Earth into the eastern and western hemispheres. The antimeridian is on the opposite side of the Earth, at 180 degrees longitude. Though the antimeridian is the basis for the international date line, actual date and time zone boundaries are dependent on local laws. The international date line zigzags around borders near the antimeridian.

Like latitude, longitude is measured in degrees, minutes, and seconds. Although latitude lines are always equally spaced, longitude lines are furthest from each other at the equator and meet at the poles. At the equator, longitude lines are the same distance apart as latitude lines — one degree covers about 111 kilometers (69 miles). But, by 60 degrees north or south, that distance is down to 56 kilometers (35 miles). By 90 degrees north or south (at the poles), it reaches zero.
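The shrinking distance between meridians can be sketched with a cosine. This is a rough illustration that assumes a perfectly spherical Earth and takes the 111 km equatorial figure from the text; the function name is made up for the example.

```python
import math

# Equatorial length of one degree of longitude, as quoted in the text.
KM_PER_DEGREE_AT_EQUATOR = 111.0


def km_per_degree_longitude(latitude_deg):
    """Approximate east-west length of one degree of longitude, in km.

    Assumes a spherical Earth, so the length scales with cos(latitude).
    """
    return KM_PER_DEGREE_AT_EQUATOR * math.cos(math.radians(latitude_deg))


for lat in (0, 60, 90):
    print(lat, round(km_per_degree_longitude(lat), 1))
```

Under this assumption the value at 60 degrees is half the equatorial value (about 55.5 km, which the text rounds to 56 km) and it falls to zero at the poles.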

Navigators and mariners have been able to measure latitude with basic tools for thousands of years. Longitude, however, required more advanced tools and calculations. Starting in the 16th century, European governments began offering huge rewards if anyone could solve “the longitude problem.” Several methods were tried, but the best and simplest way to measure longitude from a ship was with an accurate clock.

A navigator would compare the time at local noon (when the sun is at its highest point in the sky) to an onboard clock that was set to Greenwich Mean Time (the time at the prime meridian). Each hour of difference between local noon and the time in Greenwich equals 15 degrees of longitude. Why? Because the Earth rotates 360 degrees in 24 hours, or 15 degrees per hour. If the sun’s position tells the navigator it’s local noon, and the clock says back in Greenwich, England, it’s 2 p.m., the two-hour difference means the ship’s longitude is 30 degrees west.
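The navigator's arithmetic can be written out as a small sketch. The function name and the sign convention (east positive, west negative, as in the Summary) are choices made for this example, and it ignores refinements such as the equation of time.

```python
# Earth turns 360 degrees in 24 hours, so 15 degrees per hour.
DEGREES_PER_HOUR = 360 / 24


def longitude_from_noon(greenwich_hours_at_local_noon):
    """Return signed longitude in degrees (east positive, west negative).

    greenwich_hours_at_local_noon is the Greenwich clock reading, on a
    24-hour scale, at the moment the sun is highest at the ship.
    """
    hours_behind_greenwich = greenwich_hours_at_local_noon - 12.0
    return -hours_behind_greenwich * DEGREES_PER_HOUR


# Greenwich clock reads 2 p.m. (14.0) at local noon.
print(longitude_from_noon(14.0))  # -30.0, i.e. 30 degrees west
```

A clock reading earlier than noon gives a positive result, meaning the ship is east of Greenwich.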

But aboard a swaying ship in varying temperatures and salty air, even the most accurate clocks of the age did a poor job of keeping time. It wasn’t until marine chronometers were invented in the 18th century that longitude could be accurately measured at sea.

Accurate clocks are still critical to determining longitude, but now they’re found in GPS satellites and stations. Each GPS satellite is equipped with one or more atomic clocks that provide incredibly precise time measurements, accurate to within 40 nanoseconds (or 40 billionths of a second). The satellites broadcast radio signals with precise timestamps. The radio signals travel at a constant speed (the speed of light), so we can easily calculate the distance between a satellite and GPS receiver if we know precisely how long it took for the signal to travel between them.
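To see why nanoseconds matter, here is a minimal sketch of the distance calculation described above. It only multiplies travel time by the speed of light; a real receiver combines signals from several satellites and applies corrections that are not shown here. The function name is an assumption for illustration.

```python
# Speed of light in a vacuum, in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def signal_distance_m(travel_time_s):
    """Distance covered by a radio signal in the given travel time."""
    return SPEED_OF_LIGHT_M_PER_S * travel_time_s


# A timing error of 40 nanoseconds corresponds to roughly 12 metres of range.
print(round(signal_distance_m(40e-9), 2))
```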

Additional Information

Longitude is the measurement east or west of the prime meridian. Longitude is measured by imaginary lines that run around Earth vertically (up and down) and meet at the North and South Poles. These lines are known as meridians. Meridians are conventionally drawn one arc degree of longitude apart, and a full circuit around Earth measures 360 degrees.

The meridian that runs through Greenwich, England, is internationally accepted as the line of 0 degrees longitude, or prime meridian. The antimeridian is halfway around the world, at 180 degrees. It is the basis for the International Date Line.

Half of the world, the Eastern Hemisphere, is measured in degrees east of the prime meridian. The other half, the Western Hemisphere, is measured in degrees west of the prime meridian.

Degrees of longitude are divided into 60 minutes. Each minute of longitude can be further divided into 60 seconds. For example, the longitude of Paris, France, is 2° 21' E (2 degrees, 21 minutes east). The longitude for Brasilia, Brazil, is 47° 55' W (47 degrees, 55 minutes west).
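Converting degrees, minutes, and seconds into a single decimal number is a common step when working with coordinates. The sketch below uses a hypothetical helper and follows the convention from the Summary that west longitudes are negative.

```python
def dms_to_decimal(degrees, minutes=0, seconds=0.0, hemisphere="E"):
    """Convert degrees/minutes/seconds of longitude to signed decimal degrees.

    One degree is 60 minutes and one minute is 60 seconds.
    East is returned as positive, west as negative.
    """
    value = degrees + minutes / 60 + seconds / 3600
    return -value if hemisphere.upper() == "W" else value


# Brasilia, 47 degrees 55 minutes west, is about -47.92 in decimal degrees.
print(round(dms_to_decimal(47, 55, hemisphere="W"), 2))
```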

A degree of longitude is about 111 kilometers (69 miles) at its widest. The widest areas of longitude are near the Equator, where Earth bulges out. Because of Earth's curvature, the actual distance covered by a degree, minute, or second of longitude depends on its distance from the Equator. The greater the distance, the shorter the length between meridians. All meridians meet at the North and South Poles.

Longitude is related to latitude, the measurement of distance north or south of the Equator. Lines of latitude are called parallels. Maps are often marked with parallels and meridians, creating a grid. The point in the grid where parallels and meridians intersect is called a coordinate. Coordinates can be used to locate any point on Earth.

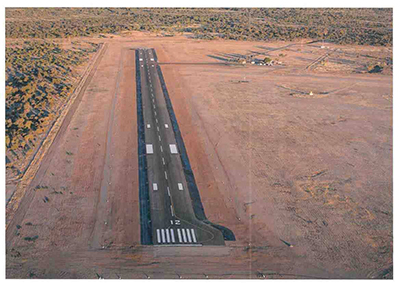

Knowing the exact coordinates of a site (degrees, minutes, and seconds of longitude and latitude) is valuable for military, engineering, and rescue operations. Coordinates can give military leaders the location of weapons or enemy troops. Coordinates help engineers plan the best spot for a building, bridge, well, or other structure. Coordinates help airplane pilots land planes or drop aid packages in specific locations.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#2302 2024-09-15 00:02:34

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,767

Re: Miscellany

2302) Fertilizer

Gist

A fertilizer is a natural or artificial substance containing the chemical elements that improve growth and productiveness of plants. Fertilizers enhance the natural fertility of the soil or replace chemical elements taken from the soil by previous crops.

Summary

A fertilizer (American English) or fertiliser (British English) is any material of natural or synthetic origin that is applied to soil or to plant tissues to supply plant nutrients. Fertilizers may be distinct from liming materials or other non-nutrient soil amendments. Many sources of fertilizer exist, both natural and industrially produced. For most modern agricultural practices, fertilization focuses on three main macronutrients: nitrogen (N), phosphorus (P), and potassium (K), with occasional addition of supplements like rock flour for micronutrients. Farmers apply these fertilizers in a variety of ways: through dry, pelletized, or liquid application processes, using large agricultural equipment or hand-tool methods.

Historically, fertilization came from natural or organic sources: compost, animal manure, human manure, harvested minerals, crop rotations, and byproducts of human-nature industries (e.g. fish processing waste, or bloodmeal from animal slaughter). However, starting in the 19th century, after innovations in plant nutrition, an agricultural industry developed around synthetically created fertilizers. This transition was important in transforming the global food system, allowing for larger-scale industrial agriculture with large crop yields.

Nitrogen-fixing chemical processes, such as the Haber process invented at the beginning of the 20th century, and amplified by production capacity created during World War II, led to a boom in using nitrogen fertilizers. In the latter half of the 20th century, increased use of nitrogen fertilizers (800% increase between 1961 and 2019) has been a crucial component of the increased productivity of conventional food systems (more than 30% per capita) as part of the so-called "Green Revolution".

The use of artificial and industrially-applied fertilizers has caused environmental consequences such as water pollution and eutrophication due to nutritional runoff; carbon and other emissions from fertilizer production and mining; and contamination and pollution of soil. Various sustainable agriculture practices can be implemented to reduce the adverse environmental effects of fertilizer and pesticide use and environmental damage caused by industrial agriculture.

Details

Soil fertility is the quality of a soil that enables it to provide compounds in adequate amounts and proper balance to promote growth of plants when other factors (such as light, moisture, temperature, and soil structure) are favourable. Where fertility of the soil is not good, natural or manufactured materials may be added to supply the needed plant nutrients. These are called fertilizers, although the term is generally applied to largely inorganic materials other than lime or gypsum.

Essential plant nutrients

In total, plants need at least 16 elements, of which the most important are carbon, hydrogen, oxygen, nitrogen, phosphorus, sulfur, potassium, calcium, and magnesium. Plants obtain carbon from the atmosphere and hydrogen and oxygen from water; other nutrients are taken up from the soil. Although plants contain sodium, iodine, and cobalt, these are apparently not essential. This is also true of silicon and aluminum.

Overall chemical analyses indicate that the total supply of nutrients in soils is usually high in comparison with the requirements of crop plants. Much of this potential supply, however, is bound tightly in forms that are not released to crops fast enough to give satisfactory growth. Because of this, the farmer is interested in measuring the available nutrient supply as contrasted to the total nutrient supply. When the available supply of a given nutrient becomes depleted, its absence becomes a limiting factor in plant growth. Excessive quantities of some nutrients may cause a decrease in yield, however.

Determining nutrient needs

Determination of a crop’s nutrient needs is an essential aspect of fertilizer technology. The appearance of a growing crop may indicate a need for fertilizer, though in some plants the need for more or different nutrients may not be easily observable. If such a problem exists, its nature must be diagnosed, the degree of deficiency must be determined, and the amount and kind of fertilizer needed for a given yield must be found. There is no substitute for detailed examination of plants and soil conditions in the field, followed by simple fertilizer tests, quick tests of plant tissues, and analysis of soils and plants.

Sometimes plants show symptoms of poor nutrition. Chlorosis (general yellow or pale green colour), for example, indicates lack of sulfur and nitrogen. Iron deficiency produces white or pale yellow tissue. Symptoms can be misinterpreted, however. Plant disease can produce appearances resembling mineral deficiency, as can various organisms. Drought or improper cultivation or fertilizer application each may create deficiency symptoms.

After field diagnosis, the conclusions may be confirmed by experiments in a greenhouse or by making strip tests in the field. In strip tests, the fertilizer elements suspected of being deficient are added, singly or in combination, and the resulting plant growth observed. Next it is necessary to determine the extent of the deficiency.

An experiment in the field can be conducted by adding nutrients to the crop at various rates. The resulting response of yield in relation to amounts of nutrients supplied will indicate the supplying power of the unfertilized soil in terms of bushels or tons of produce. If the increase in yield is large, this practice will show that the soil has too little of a given nutrient. Such field experiments may not be practical, because they can cost too much in time and money. Soil-testing laboratories are available in most areas; they conduct chemical soil tests to estimate the availability of nutrients. Commercial soil-testing kits give results that may be very inaccurate, depending on techniques and interpretation. Actually, the most accurate system consists of laboratory analysis of the nutrient content of plant parts, such as the leaf. The results, when correlated with yield response to fertilizer application in field experiments, can give the best estimate of deficiency. Further development of remote sensing techniques, such as infrared photography, is under study, and remote sensing may ultimately become the most valuable technique for such estimates.

The economics of fertilizers

The practical goal is to determine how much nutrient material to add. Since the farmer wants to know how much profit to expect when buying fertilizer, the tests are interpreted as an estimation of increased crop production that will result from nutrient additions. The cost of nutrients must be balanced against the value of the crop or even against alternative procedures, such as investing the money in something else with a greater potential return. The law of diminishing returns is well exemplified in fertilizer technology. Past a certain point, equal inputs of chemicals produce less and less yield increase. The goal of the farmer is to use fertilizer in such a way that the most profitable application rate is employed. Ideal fertilizer application also minimizes excess and ill-timed application, which is not only wasteful for the farmer but also harmful to nearby waterways. Unfortunately, water pollution from fertilizer runoff, which has a sphere of impact that extends far beyond the farmer and the fields, is a negative externality that is not accounted for in the costs and prices of the unregulated market.
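The idea of a most profitable application rate can be illustrated with a toy model. Everything here is an assumption made for the sketch: the quadratic yield response, the function names, and all of the numbers are hypothetical and do not come from any real crop trial.

```python
def most_profitable_rate(b, c, crop_price, fertilizer_cost):
    """Rate that maximizes profit for an assumed response yield = a + b*r - c*r**2.

    Profit is crop_price * yield - fertilizer_cost * r, so the optimum is where
    the value of the last unit of extra yield equals the cost of the last unit
    of fertilizer: crop_price * (b - 2*c*r) = fertilizer_cost.
    """
    rate = (b - fertilizer_cost / crop_price) / (2 * c)
    return max(rate, 0.0)  # a negative rate makes no sense; apply nothing


def profit(rate, a, b, c, crop_price, fertilizer_cost):
    """Profit per hectare at a given rate under the same assumed response."""
    crop_yield = a + b * rate - c * rate ** 2
    return crop_price * crop_yield - fertilizer_cost * rate


# Hypothetical figures: yield in kg/ha, rate in kg/ha, prices per kg.
A, B, C = 3000.0, 30.0, 0.1
PRICE, COST = 0.2, 1.0

best = most_profitable_rate(B, C, PRICE, COST)
print(best, profit(best, A, B, C, PRICE, COST))
```

With these made-up numbers the most profitable rate comes out below the rate that would maximize yield (B / (2 * C)), which is the law of diminishing returns at work: the last few units of fertilizer cost more than the extra crop they produce.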

Fertilizers can aid in making profitable changes in farming. Operators can reduce costs per unit of production and increase the margin of return over total cost by increasing rates of application of fertilizer on principal cash and feed crops. They are then in a position to invest in soil conservation and other improvements that are needed when shifting acreage from surplus crops to other uses.

Synthetic fertilizers

Modern chemical fertilizers include one or more of the three elements that are most important in plant nutrition: nitrogen, phosphorus, and potassium. Of secondary importance are the elements sulfur, magnesium, and calcium.

Most nitrogen fertilizers are obtained from synthetic ammonia. This chemical compound (NH3) is used either as a gas or in a water solution, or it is converted into salts such as ammonium sulfate, ammonium nitrate, and ammonium phosphate. Packinghouse wastes, treated garbage, sewage, and manure are also common sources of nitrogen. Because its nitrogen content is high and it is readily converted to ammonia in the soil, urea is one of the most concentrated nitrogenous fertilizers. An inexpensive compound, it is incorporated in mixed fertilizers as well as being applied alone to the soil or sprayed on foliage. With formaldehyde it gives methylene-urea fertilizers, which release nitrogen slowly, continuously, and uniformly, a full year’s supply being applied at one time.

Phosphorus fertilizers include calcium phosphate derived from phosphate rock or bones. The more soluble superphosphate and triple superphosphate preparations are obtained by the treatment of calcium phosphate with sulfuric and phosphoric acid, respectively. Potassium fertilizers, namely potassium chloride and potassium sulfate, are mined from potash deposits. Almost 95 percent of commercially produced potassium compounds are used in agriculture as fertilizer.

Mixed fertilizers contain more than one of the three major nutrients—nitrogen, phosphorus, and potassium. Fertilizer grade is a conventional expression that indicates the percentage of plant nutrients in a fertilizer; thus, a 10–20–10 grade contains 10 percent nitrogen, 20 percent phosphoric oxide, and 10 percent potash. Mixed fertilizers can be formulated in hundreds of ways.
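Reading a grade label is simple percentage arithmetic, sketched below. The function name and the 50 kg bag are assumptions chosen for illustration; the grade follows the nitrogen, phosphoric oxide, potash order described above.

```python
def nutrient_masses(grade, bag_mass_kg):
    """Split a fertilizer grade such as "10-20-10" into nutrient masses in kg.

    The three numbers are percentages by mass of nitrogen, phosphoric oxide,
    and potash, in that order. En dashes in the label are accepted too.
    """
    parts = grade.replace("–", "-").split("-")
    n_pct, p_pct, k_pct = (float(part) for part in parts)
    return {
        "nitrogen": bag_mass_kg * n_pct / 100,
        "phosphoric oxide": bag_mass_kg * p_pct / 100,
        "potash": bag_mass_kg * k_pct / 100,
    }


# A hypothetical 50 kg bag of 10-20-10 fertilizer.
print(nutrient_masses("10-20-10", 50))
```

For that bag the result is 5 kg of nitrogen, 10 kg of phosphoric oxide, and 5 kg of potash; the remaining mass is carrier material and other components.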

Organic fertilizers and practices

The use of manure and compost as fertilizers is probably almost as old as agriculture. Many traditional farming systems still rely on these sustainable fertilizers, and their use is vital to the productivity of certified organic farms, in which synthetic fertilizers are not permitted.

Farm manure

Among sources of organic matter and plant nutrients, farm manure has been of major importance. Manure is understood to mean the refuse from stables and barnyards, including both excreta and straw or other bedding material. Large amounts of manure are produced by livestock; such manure has value in maintaining and improving soil because of the plant nutrients, humus, and organic substances contained in it.

Due to the potential for harbouring human pathogens, the USDA National Organic Standards mandate that raw manure must be applied no later than 90 or 120 days before harvest, depending on whether the harvested part of the crop is in contact with the ground. Composted manure that has been turned five times in 15 days and reached temperatures between 55 and 77.2 °C (131 and 171 °F) has no restrictions on application times. As manure must be managed carefully in order to derive the most benefit from it, some farmers may be unwilling to expend the necessary time and effort. Manure must be carefully stored to minimize loss of nutrients, particularly nitrogen. It must be applied to the right kind of crop at the proper time. Also, additional fertilizer may be needed, such as phosphoric oxide, in order to gain full value of the nitrogen and potash that are contained in manure.

Manure is fertilizer graded as approximately 0.5–0.25–0.5 (percentages of nitrogen, phosphoric oxide, and potash), with at least two-thirds of the nitrogen in slow-acting forms. Given that these nutrients are mostly in an unmineralized form that cannot be taken up by plants, soil microbes are needed to break down organic matter and transform nutrients into a bioavailable “mineralized” state. In comparison, synthetic fertilizers are already in mineralized form and can be taken up by plants directly. On properly tilled soils, the returns from synthetic fertilizer usually will be greater than from an equivalent amount of manure. However, manure provides many indirect benefits. It supplies humus, which improves the soil’s physical character by increasing its capacity to absorb and store water, by enhancing aeration, and by favouring the activities of lower organisms. Manure incorporated into the topsoil will help prevent erosion from heavy rain and slow down evaporation of water from the surface. In effect, the value of manure as a mulching material may be greater than its value as a source of essential plant nutrients.

Green manuring

In reasonably humid areas the practice of green manuring can improve yield and soil qualities. A green manure crop is grown and plowed under for its beneficial effects, although during its growth it may be grazed. These green manure crops are usually annuals, either grasses or legumes, whose roots bear nodule bacteria capable of fixing atmospheric nitrogen. Among the advantages of green manure crops are the addition of nitrogen to the soil, an increase in general fertility, a reduction of erosion, an improvement of physical condition, and a reduction of nutrient loss from leaching. Disadvantages include the chance of not obtaining satisfactory growth; the possibility that the cost of growing the manure crop may exceed the cost of applying commercial nitrogen; possible increases in disease, insect pests, and nematodes (parasitic worms); and possible exhaustion of soil moisture by the crop.

Green manure crops are usually planted in the fall and turned under in the spring before the summer crop is sown. Their value as a source of nitrogen, particularly that of the legumes, is unquestioned for certain crops such as potatoes, cotton, and corn (maize); for other crops, such as peanuts (groundnuts; themselves legumes), the practice is questionable.

Compost

Compost is used in agriculture and gardening primarily as a soil amendment rather than as fertilizer, because it has a low content of plant nutrients. It may be incorporated into the soil or mulched on the surface. Heavy rates of application are common.

Compost is basically a mass of rotted organic matter made from waste plant residues. Addition of nitrogen during decomposition is usually advisable. The result is a crumbly material that when added to soil does not compete with the crop for nitrogen. When properly prepared, it is free of obnoxious odours. Composts commonly contain about 2 percent nitrogen, 0.5 to 1 percent phosphorus, and about 2 percent potassium. The nitrogen of compost becomes available slowly and never approaches that available from inorganic sources. This slow release of nitrogen reduces leaching and extends availability over the whole growing season. Composts are essentially fertilizers with low nutrient content, which explains why large amounts are applied. The maximum benefits of composts on soil structure (better aggregation, pore spacing, and water storage) and on crop yield usually occur after several years of use.

In practical farming, the use of composted plant residues must be compared with the use of fresh residues. More beneficial soil effects usually accrue with less labour by simply turning under fresh residues; also, since one-half the organic matter is lost in composting, fresh residues applied at the same rate will cover twice the area that composted residues would cover. In areas where commercial fertilizers are expensive, labour is cheap, and implements are simple, however, composting meets the need and is a logical practice.

Sewage sludge, the solid material remaining from the treatment of sewage, is not permitted in certified organic farming, though it is used in other, nonorganic settings. After suitable processing, it is sold as fertilizer and as a soil amendment for use on lawns, in parks, and on golf courses. Use of human biosolids in agriculture is controversial, as there are concerns that even treated sewage may harbour harmful bacteria, viruses, pharmaceutical residues, and heavy metals.

Liming

Liming to reduce soil acidity is practiced extensively in humid areas where rainfall leaches calcium and magnesium from the soil, thus creating an acid condition. Calcium and magnesium are major plant nutrients supplied by liming materials. Ground limestone is widely used for this purpose; its active agent, calcium carbonate, reacts with the soil to reduce its acidity. The calcium is then available for plant use. The typical limestones, especially dolomitic, contain magnesium carbonate as well, thus also supplying magnesium to the plant.

Marl and chalk are soft impure forms of limestone and are sometimes used as liming materials, as are oyster shells. Calcium sulfate (gypsum) and calcium chloride, however, are unsuitable for liming, for, although their calcium is readily soluble, they leave behind a residue that is harmful. Organic standards by the European Union and the U.S. Department of Agriculture restrict certain liming agents; burnt lime and hydrated lime are not permitted for certified organic farms in the U.S., for example.

Lime is applied by mixing it uniformly with the surface layer of the soil. It may be applied at any time of the year on land plowed for spring crops or winter grain or on permanent pasture. After application, plowing, disking, or harrowing will mix it with the soil. Such tillage is usually necessary, because calcium migrates slowly downward in most soils. Lime is usually applied by trucks specially equipped and owned by custom operators.

Methods of application

Fertilizers may be added to soil in solid, liquid, or gaseous forms, the choice depending on many factors. Generally, the farmer tries to obtain satisfactory yield at minimum cost in money and labour.

Manure can be applied as a liquid or a solid. When accumulated as a liquid from livestock areas, it may be stored in tanks until needed and then pumped into a distributing machine or into a sprinkler irrigation system. The method reduces labour, but the noxious odours are objectionable. The solid-manure spreader, which can also be used for compost, conveys the material to the field, shreds it, and spreads it uniformly over the land. The process can be carried out during convenient times, including winter, but rarely when the crop is growing.

Application of granulated or pelleted solid fertilizer has been aided by improved equipment design. Such devices, depending on design, can deposit fertilizer at the time of planting, side-dress a growing crop, or broadcast the material. Solid-fertilizer distributors have a wide hopper with holes in the bottom; distribution is effected by various means, such as rollers, agitators, or endless chains traversing the hopper bottom. Broadcast distributors have a tub-shaped hopper from which the material falls onto revolving disks that distribute it in a broad swath. Fertilizer attachments are available for most tractor-mounted planters and cultivators and for grain drills and some types of plows. They deposit fertilizer with the seed when planted, without damage to the seed, yet the nutrient is readily available during early growth. Placement of the fertilizer varies according to the types of crops; some crops require banding above the seed, while others are more successful when the fertilizer band is below the seed.

The use of liquid and ammonia fertilizers is growing, particularly of anhydrous ammonia, which is handled as a liquid under pressure but changes to gas when released to atmospheric pressure. Anhydrous ammonia, however, is highly corrosive, inflammable, and rather dangerous if not handled properly; thus, application equipment is specialized. Typically, the applicator is a chisel-shaped blade with a pipe mounted on its rear side to conduct the ammonia 13 to 15 cm (5 to 6 inches) below the soil surface. Pipes are fed from a pressure tank mounted above. Mixed liquid fertilizers containing nitrogen, phosphorus, and potassium may be applied directly to the soil surface or as a foliar spray by field sprayers where close-growing crops are raised. Large areas can be covered rapidly by use of aircraft, which can distribute both liquid and dry fertilizer.

Additional Information

Fertilizers have played an essential role in feeding a growing global population. It's estimated that just under half of the people alive today are dependent on synthetic fertilizers.

They can bring environmental benefits too: fertilizers can increase crop yields. By increasing crop yields we can reduce the amount of land we use for agriculture.

But they also create environmental pollution. Many countries overapply fertilizers, leading to the runoff of nutrients into water systems and ecosystems.

A problem we need to tackle is using fertilizers efficiently: reaping their benefits to feed a growing population while reducing the environmental damage that they cause.

Organic and Mineral Fertilizer: Differences and Similarities.

Fertilizers are materials that are applied to soils, or directly to plants, for their ability to supply the essential nutrients needed by crops to grow and improve soil fertility. They are used to increase crop yield and/or quality, as well as to sustain soils’ ability to support future crop production.

Mineral fertilizers are produced from materials mined from naturally occurring nutrient deposits, or from the fixation of nitrogen from the atmosphere into plant-available forms. Mineral fertilizers generally contain high concentrations of a single, or two or three, plant nutrients.

Organic fertilizers are derived from plant matter, animal excreta, sewage and food waste, generally in the form of animal manure, green manure and biosolids. Organic fertilizers provide essential nutrients needed by crops, generally containing a wide variety in low concentrations. They also play an important role in improving soil health.

Organo-mineral fertilizers combine dried organic and mineral fertilizers to provide balanced nutrients along with soil health improvements in a long-lasting form that is easy to transport and store.

They play a key role in reducing micronutrient deficiencies in people:

The fertilizer fortification of staple food crops with micronutrients (also known as agronomic biofortification) has alleviated deficiencies in zinc, selenium and iodine in communities around the world.

The fertilizer industry supports policies that link agriculture, nutrition and health, and the use of micronutrients where they are needed most.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Offline

#2303 2024-09-16 00:04:36

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,767

Re: Miscellany

2303) Siren

Gist

A siren is a warning device which makes a long, loud noise. Most fire engines, ambulances, and police cars have sirens.

It is a device for making a loud warning noise.

Summary

A siren is a noisemaking device producing a piercing sound of definite pitch. Used as a warning signal, it was invented in the late 18th century by the Scottish natural philosopher John Robison. The name was given to it by the French engineer Charles Cagniard de La Tour, who devised an acoustical instrument of the type in 1819. A disk with evenly spaced holes around its edge is rotated at high speed, interrupting at regular intervals a jet of air directed at the holes. The resulting regular pulsations cause a sound wave in the surrounding air. The siren is thus classified as a free aerophone. The sound-wave frequency of its pitch equals the number of air puffs (or holes times number of revolutions) per second. The strident sound results from the high number of overtones (harmonics) present.

Details

A siren is a loud noise-making device. Civil defense sirens are mounted in fixed locations and used to warn of natural disasters or attacks. Sirens are used on emergency service vehicles such as ambulances, police cars, and fire engines. There are two general types: mechanical and electronic.

Many fire sirens (used for summoning volunteer firefighters) serve double duty as tornado or civil defense sirens, alerting an entire community of impending danger. Most fire sirens are either mounted on the roof of a fire station or on a pole next to the fire station. Fire sirens can also be mounted on or near government buildings, on tall structures such as water towers, as well as in systems where several sirens are distributed around a town for better sound coverage. Most fire sirens are single tone and mechanically driven by electric motors with a rotor attached to the shaft. Some newer sirens are electronically driven speakers.

Fire sirens are often called fire whistles, fire alarms, or fire horns. Although there is no standard signaling of fire sirens, some utilize codes to inform firefighters of the location of the fire. Civil defense sirens also used as fire sirens often can produce an alternating "hi-lo" signal (similar to emergency vehicles in many European countries) as the fire signal, or attack (slow wail), typically three times, so as not to confuse the public with the standard civil defense signals of alert (steady tone) and fast wail (fast wavering tone). Fire sirens are often tested once a day at noon and are also called noon sirens or noon whistles.

The first emergency vehicles relied on a bell. In the 1970s, they switched to a duotone airhorn, which was itself overtaken in the 1980s by an electronic wail.

History

Some time before 1799, the siren was invented by the Scottish natural philosopher John Robison. Robison's sirens were used as musical instruments; specifically, they powered some of the pipes in an organ. Robison's siren consisted of a stopcock that opened and closed a pneumatic tube. The stopcock was apparently driven by the rotation of a wheel.

In 1819, an improved siren was developed and named by Baron Charles Cagniard de la Tour. De la Tour's siren consisted of two perforated disks that were mounted coaxially at the outlet of a pneumatic tube. One disk was stationary, while the other disk rotated. The rotating disk periodically interrupted the flow of air through the fixed disk, producing a tone. De la Tour's siren could produce sound under water, suggesting a link with the sirens of Greek mythology; hence the name he gave to the instrument.

Instead of disks, most modern mechanical sirens use two concentric cylinders, which have slots parallel to their length. The inner cylinder rotates while the outer one remains stationary. As air under pressure flows out of the slots of the inner cylinder and then escapes through the slots of the outer cylinder, the flow is periodically interrupted, creating a tone. The earliest such sirens were developed during 1877–1880 by James Douglass and George Slight (1859–1934) of Trinity House; the final version was first installed in 1887 at the Ailsa Craig lighthouse in Scotland's Firth of Clyde. When commercial electric power became available, sirens were no longer driven by external sources of compressed air, but by electric motors, which generated the necessary flow of air via a simple centrifugal fan, which was incorporated into the siren's inner cylinder.

To direct a siren's sound and to maximize its power output, a siren is often fitted with a horn, which transforms the high-pressure sound waves in the siren to lower-pressure sound waves in the open air.

The earliest way of summoning volunteer firemen to a fire was by ringing a bell, either mounted atop the fire station or in the belfry of a local church. As electricity became available, the first fire sirens were manufactured. In 1886 French electrical engineer Gustave Trouvé developed a siren to announce the silent arrival of his electric boats. Two early fire siren manufacturers were William A. Box Iron Works, who made the "Denver" sirens as early as 1905, and the Inter-State Machine Company (later the Sterling Siren Fire Alarm Company), who made the ubiquitous Model "M" electric siren, which was the first dual tone siren. The popularity of fire sirens took off by the 1920s, with many manufacturers including the Federal Electric Company and Decot Machine Works creating their own sirens. Since the 1970s, many communities have deactivated their fire sirens as pagers became available for fire department use. Some sirens still remain as a backup to pager systems.

During the Second World War, the British civil defence used a network of sirens to alert the general population to the imminence of an air raid. A single tone denoted an "all clear". A series of tones denoted an air raid.

Types:

Pneumatic

The pneumatic siren, which is a free aerophone, consists of a rotating disk with holes in it (called a chopper, siren disk or rotor), such that the material between the holes interrupts a flow of air from fixed holes on the outside of the unit (called a stator). As the holes in the rotating disk alternately prevent and allow air to flow it results in alternating compressed and rarefied air pressure, i.e. sound. Such sirens can consume large amounts of energy. To reduce the energy consumption without losing sound volume, some designs of pneumatic sirens are boosted by forcing compressed air from a tank that can be refilled by a low powered compressor through the siren disk.

In United States English language usage, vehicular pneumatic sirens are sometimes referred to as mechanical or coaster sirens, to differentiate them from electronic devices. Mechanical sirens driven by an electric motor are often called "electromechanical". One example is the Q2B siren sold by Federal Signal Corporation. Because of its high current draw (100 amps when power is applied) its application is normally limited to fire apparatus, though it has seen increasing use on type IV ambulances and rescue-squad vehicles. Its distinct tone of urgency, high sound pressure level (123 dB at 10 feet) and square sound waves account for its effectiveness.

In Germany and some other European countries, the pneumatic two-tone (hi-lo) siren consists of two sets of air horns, one high pitched and the other low pitched. An air compressor blows the air into one set of horns, and then it automatically switches to the other set. As this back and forth switching occurs, the sound changes tones. Its sound output varies but can reach approximately 125 dB, depending on the compressor and the horns. Compared with mechanical sirens, it uses much less electricity but needs more maintenance.

In a pneumatic siren, the stator is the part which cuts off and reopens air as rotating blades of a chopper move past the port holes of the stator, generating sound. The pitch of the siren's sound is a function of the speed of the rotor and the number of holes in the stator. A siren with only one row of ports is called a single tone siren. A siren with two rows of ports is known as a dual tone siren. By placing a second stator over the main stator and attaching a solenoid to it, one can repeatedly close and open all of the stator ports thus creating a tone called a pulse. If this is done while the siren is wailing (rather than sounding a steady tone) then it is called a pulse wail. By doing this separately over each row of ports on a dual tone siren, one can alternately sound each of the two tones back and forth, creating a tone known as Hi/Lo. If this is done while the siren is wailing, it is called a Hi/Lo wail. This equipment can also do pulse or pulse wail. The ports can be opened and closed to send Morse code. A siren which can do both pulse and Morse code is known as a code siren.

Electronic

Electronic sirens incorporate circuits such as oscillators, modulators, and amplifiers to synthesize a selected siren tone (wail, yelp, pierce/priority/phaser, hi-lo, scan, airhorn, manual, and a few more) which is played through external speakers. It is not unusual, especially in the case of modern fire engines, to see an emergency vehicle equipped with both types of sirens. Often, police sirens also use the interval of a tritone to help draw attention. The first electronic siren that mimicked the sound of a mechanical siren was invented in 1965 by Motorola employees Ronald H. Chapman and Charles W. Stephens.

Other types

Steam whistles were also used as warning devices where a supply of steam was present, such as at a sawmill or factory. These were common before fire sirens became widely available, particularly in the former Soviet Union. Fire horns, large compressed air horns, also were and still are used as an alternative to a fire siren. Many fire horn systems were wired to fire pull boxes that were located around a town, and would "blast out" a code corresponding to the pulled box's location. For example, pull box number 233, when pulled, would trigger the fire horn to sound two blasts, followed by a pause, followed by three blasts, followed by a pause, followed by three more blasts. In the days before telephones, this was the only way firefighters would know the location of a fire. The coded blasts were usually repeated several times. This technology was also applied to many steam whistles. Some fire sirens are fitted with brakes and dampers, enabling them to sound out codes as well. These units tended to be unreliable, and are now uncommon.
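The box-number coding in the pull box 233 example can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and it assumes every digit is 1 to 9, since the text does not say how a zero digit would have been signalled.

```python
def box_code_blasts(box_number: int) -> list[int]:
    """Return the number of horn blasts sounded for each digit of a pull-box number."""
    if box_number <= 0:
        raise ValueError("box number must be positive")
    digits = [int(ch) for ch in str(box_number)]
    if 0 in digits:
        # Assumption: a zero digit cannot be sounded as "zero blasts".
        raise ValueError("box number must use digits 1-9 only")
    return digits


def describe_signal(box_number: int) -> str:
    """Spell out the blast pattern, with a pause between digit groups."""
    groups = [
        f"{n} blast" + ("s" if n != 1 else "")
        for n in box_code_blasts(box_number)
    ]
    return ", pause, ".join(groups)


print(describe_signal(233))
```

For box 233 this gives two blasts, a pause, three blasts, a pause, and three more blasts, matching the example in the text.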

Physics of the sound

Mechanical sirens blow air through a slotted disk or rotor. The cyclic waves of air pressure are the physical form of sound. In many sirens, a centrifugal blower and rotor are integrated into a single piece of material, spun by an electric motor.

Electronic sirens are high efficiency loudspeakers, with specialized amplifiers and tone generation. They usually imitate the sounds of mechanical sirens in order to be recognizable as sirens.

To improve efficiency, a siren uses a relatively low frequency, usually several hundred hertz. Lower-frequency sound waves go around corners and through holes better.

Sirens often use horns to aim the pressure waves. This uses the siren's energy more efficiently by aiming it. Exponential horns achieve similar efficiencies with less material.

The frequency, i.e. the cycles per second, of the sound of a mechanical siren is controlled by the speed of its rotor and the number of openings. The wailing of a mechanical siren occurs as the rotor speeds up and slows down. Wailing usually identifies an attack or urgent emergency.
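As a rough illustration of this relationship, the fundamental frequency is the number of openings passing the air stream each second. The sketch below assumes a hypothetical rotor with 10 openings and a motor spinning up to 3000 rpm; neither figure comes from a real siren.

```python
def siren_frequency_hz(openings: int, rpm: float) -> float:
    """Fundamental frequency: openings per revolution times revolutions per second."""
    return openings * rpm / 60.0


# Hypothetical rotor with 10 openings; as the motor speeds up, the pitch
# rises, which is what produces the "wail".
for rpm in (600, 1200, 1800, 2400, 3000):
    print(f"{rpm:5d} rpm -> {siren_frequency_hz(10, rpm):6.1f} Hz")
```

At full speed this hypothetical rotor sounds at 500 Hz, within the "several hundred hertz" range mentioned earlier.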

The characteristic timbre or musical quality of a mechanical siren arises because its output is a triangle wave when graphed as pressure over time. As the openings widen, the emitted pressure increases. As they close, it decreases. So, the characteristic frequency distribution of the sound has harmonics at odd (1, 3, 5...) multiples of the fundamental. The amplitude of the harmonics rolls off as the inverse square of their frequency. Distant sirens sound more "mellow" or "warmer" because their harsh high frequencies are absorbed by nearby objects.

Two tone sirens are often designed to emit a minor third, musically considered a "sad" sound. To do this, they have two rotors with different numbers of openings. The upper tone is produced by a rotor with a count of openings divisible by six. The lower tone's rotor has a count of openings divisible by five. Unlike an organ, a mechanical siren's minor third is almost always physical, not tempered. To achieve tempered ratios in a mechanical siren, the rotors must either be geared, run by different motors, or have very large numbers of openings. Electronic sirens can easily produce a tempered minor third.
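The difference between the "physical" 6:5 minor third and a tempered one can be checked numerically. The sketch below assumes two hypothetical rotors on the same shaft with 12 and 10 openings, and compares their interval with three equal-tempered semitones, measured in cents (1200 cents per octave).

```python
import math


def interval_cents(ratio: float) -> float:
    """Size of a frequency ratio in cents (1200 cents = one octave)."""
    return 1200.0 * math.log2(ratio)


# Hypothetical rotors on one shaft: 12 openings (upper tone), 10 (lower tone).
just_minor_third = 12 / 10
# Equal-tempered minor third: three semitones of 2**(1/12) each.
tempered_minor_third = 2 ** (3 / 12)

print(f"6:5 ratio:  {interval_cents(just_minor_third):.2f} cents")
print(f"tempered:   {interval_cents(tempered_minor_third):.2f} cents")
```

The 6:5 interval comes out roughly 16 cents wider than the tempered one, which is why small whole-number opening counts cannot reproduce the tempered ratio exactly.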

A mechanical siren that can alternate between its tones uses solenoids to move rotary shutters that cut off the air supply to one rotor, then the other. This is often used to identify a fire warning.

When testing, a frightening sound is not desirable, so electronic sirens then usually emit musical tones; Westminster chimes are common. Mechanical sirens sometimes self-test by "growling", i.e. operating at low speeds.

In music

Sirens are also used as musical instruments. They have been prominently featured in works by avant-garde and contemporary classical composers. Examples include Edgard Varèse's compositions Amériques (1918–21, rev. 1927), Hyperprism (1924), and Ionisation (1931); Arseny Avraamov's Symphony of Factory Sirens (1922); George Antheil's Ballet Mécanique (1926); Dmitri Shostakovich's Symphony No. 2 (1927); and Henry Fillmore's "The Klaxon: March of the Automobiles" (1929), which features a klaxophone.

In popular music, sirens have been used in The Chemical Brothers' "Song to the Siren" (1992) and in a CBS News 60 Minutes segment played by percussionist Evelyn Glennie. A variation of a siren, played on a keyboard, provides the opening notes of the REO Speedwagon song "Ridin' the Storm Out". Some heavy metal bands also use air-raid-type siren intros at the beginning of their shows. The opening measure of "Money City Maniacs" (1998) by the Canadian band Sloan uses multiple overlapping sirens.

#2304 2024-09-16 20:11:30

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,767

Re: Miscellany

2304) Stainless Steel

Gist

Stainless steel is a corrosion-resistant alloy of iron, chromium and, in some cases, nickel and other metals. Completely and infinitely recyclable, stainless steel is the “green material” par excellence.

Stainless steel is the name of a family of iron-based alloys known for their corrosion and heat resistance. One of the main characteristics of stainless steel is its minimum chromium content of 10.5%, which gives it its superior resistance to corrosion in comparison to other types of steels.

Summary

Stainless steels are a family of ferrous alloys containing less than 1.2% carbon and over 10.5% chromium; a passive surface layer of chromium and iron oxides and hydroxides protects them efficiently from corrosion.

Stainless steel is a family of low-carbon alloy steels with a chromium content of 10% or more by weight. The name originates from the fact that stainless steel does not stain, corrode or rust as easily as ordinary steel; however, ‘stain-less’ is not ‘stain-proof’ in all conditions. It is important to select the correct type and grade of stainless steel for a particular application. In many cases, manufacturing rooms, processing lines, equipment and machines will be subject to requirements from authorities, manufacturers or customers.

The addition of chromium gives the steel its unique stainless, corrosion-resistant properties. The chromium, when in contact with oxygen, forms a natural barrier of adherent chromium(III) oxide (Cr2O3), commonly called ‘ceramic,’ which is a ‘passive film’ resistant to further oxidation or rusting. This event is called passivation and is seen in other metals, such as aluminum and silver, but unlike in these metals this passive film is transparent on stainless steel. This invisible, self-repairing and relatively inert film is only a few nanometers thick, so the metal stays shiny. If damaged mechanically or chemically, the film is self-healing, meaning the layer quickly reforms, provided that oxygen is present, even if in very small amounts. This protective oxide or ceramic coating is common to most corrosion-resistant materials. Similarly, anodizing is an electrolytic passivation process used to increase the thickness of the natural oxide layer on the surface of metals such as aluminum, titanium, and zinc among others. Passivation is not a useful treatment for iron or carbon steel because these metals exfoliate when oxidized, i.e. the iron(III) oxide (rust) flakes off, constantly exposing the underlying metal to corrosion.

The corrosion resistance and other useful properties of stainless steel can be enhanced by increasing the chromium content and by adding other alloying elements such as molybdenum, nickel and nitrogen. There are more than 60 grades of stainless steel; however, the entire group can be divided into five classes (cast stainless steels, in general, are similar to the equivalent wrought alloys). Each class is identified by the alloying elements that affect its microstructure and for which it is named.

Details

Stainless steel, also known as inox, corrosion-resistant steel (CRES), and rustless steel, is an alloy of iron that is resistant to rusting and corrosion. It contains iron with chromium and other elements such as molybdenum, carbon, nickel and nitrogen depending on its specific use and cost. Stainless steel's resistance to corrosion results from the 10.5%, or more, chromium content which forms a passive film that can protect the material and self-heal in the presence of oxygen.

The alloy's properties, such as luster and resistance to corrosion, are useful in many applications. Stainless steel can be rolled into sheets, plates, bars, wire, and tubing. These can be used in cookware, cutlery, surgical instruments, major appliances, vehicles, construction material in large buildings, industrial equipment (e.g., in paper mills, chemical plants, water treatment), and storage tanks and tankers for chemicals and food products. Some grades are also suitable for forging and casting.

The biological cleanability of stainless steel is superior to both aluminium and copper, and comparable to glass. Its cleanability, strength, and corrosion resistance have prompted the use of stainless steel in pharmaceutical and food processing plants.

Different types of stainless steel are labeled with an AISI three-digit number. The ISO 15510 standard lists the chemical compositions of stainless steels of the specifications in existing ISO, ASTM, EN, JIS, and GB standards in a useful interchange table.

Properties:

Corrosion resistance

Although stainless steel does rust, this only affects the outer few layers of atoms, its chromium content shielding deeper layers from oxidation.

The addition of nitrogen also improves resistance to pitting corrosion and increases mechanical strength. Thus, there are numerous grades of stainless steel with varying chromium and molybdenum contents to suit the environment the alloy must endure. Corrosion resistance can be increased further by the following means:

* increasing chromium content to more than 11%

* adding nickel to at least 8%

* adding molybdenum (which also improves resistance to pitting corrosion)

Strength

The most common type of stainless steel, 304, has a tensile yield strength around 210 MPa (30,000 psi) in the annealed condition. It can be strengthened by cold working to a strength of 1,050 MPa (153,000 psi) in the full-hard condition.

The strongest commonly available stainless steels are precipitation hardening alloys such as 17-4 PH and Custom 465. These can be heat treated to have tensile yield strengths up to 1,730 MPa (251,000 psi).

Melting point

Stainless steel is a steel, and as such its melting point is near that of ordinary steel, and much higher than the melting points of aluminium or copper. As with most alloys, the melting point of stainless steel is expressed in the form of a range of temperatures, and not a single temperature. This temperature range goes from 1,400 to 1,530 °C (2,550 to 2,790 °F; 1,670 to 1,800 K; 3,010 to 3,250 °R) depending on the specific composition of the alloy in question.
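The bracketed figures are rounded conversions of the Celsius range. A minimal sketch of the arithmetic follows; the function name is hypothetical, and only the standard conversion formulas are used.

```python
def celsius_to_all(c: float) -> dict[str, float]:
    """Convert a Celsius temperature to Fahrenheit, kelvin and Rankine."""
    kelvin = c + 273.15
    return {
        "F": c * 9 / 5 + 32,   # Fahrenheit
        "K": kelvin,           # kelvin
        "R": kelvin * 9 / 5,   # Rankine
    }


for c in (1400, 1530):
    t = celsius_to_all(c)
    print(f"{c} °C = {t['F']:.0f} °F = {t['K']:.0f} K = {t['R']:.0f} °R")
```

The exact results (for example 2,552 °F for 1,400 °C) agree with the quoted range once rounded to the nearest ten.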

Conductivity

Like steel, stainless steels are relatively poor conductors of electricity, with significantly lower electrical conductivities than copper. In particular, the electrical contact resistance (ECR) of stainless steel arises as a result of the dense protective oxide layer and limits its functionality in applications as electrical connectors. Copper alloys and nickel-coated connectors tend to exhibit lower ECR values and are preferred materials for such applications. Nevertheless, stainless steel connectors are employed in situations where ECR is a less critical design criterion and corrosion resistance is required, for example in high temperatures and oxidizing environments.

Magnetism

Martensitic, duplex and ferritic stainless steels are magnetic, while austenitic stainless steel is usually non-magnetic. Ferritic steel owes its magnetism to its body-centered cubic crystal structure, in which iron atoms are arranged in cubes (with one iron atom at each corner) and an additional iron atom in the center. This central iron atom is responsible for ferritic steel's magnetic properties. This arrangement also limits the amount of carbon the steel can absorb to around 0.025%. Grades with low coercive field have been developed for electro-valves used in household appliances and for injection systems in internal combustion engines. Some applications require non-magnetic materials, such as magnetic resonance imaging. Austenitic stainless steels, which are usually non-magnetic, can be made slightly magnetic through work hardening. Sometimes, if austenitic steel is bent or cut, magnetism occurs along the edge of the stainless steel because the crystal structure rearranges itself.

Wear

Galling, sometimes called cold welding, is a form of severe adhesive wear, which can occur when two metal surfaces are in relative motion to each other and under heavy pressure. Austenitic stainless steel fasteners are particularly susceptible to thread galling, though other alloys that self-generate a protective oxide surface film, such as aluminum and titanium, are also susceptible. Under high contact-force sliding, this oxide can be deformed, broken, and removed from parts of the component, exposing the bare reactive metal. When the two surfaces are of the same material, these exposed surfaces can easily fuse. Separation of the two surfaces can result in surface tearing and even complete seizure of metal components or fasteners. Galling can be mitigated by the use of dissimilar materials (bronze against stainless steel) or using different stainless steels (martensitic against austenitic). Additionally, threaded joints may be lubricated to provide a film between the two parts and prevent galling. Nitronic 60, made by selective alloying with manganese, silicon, and nitrogen, has demonstrated a reduced tendency to gall.

Density

The density of stainless steel ranges from 7.5 to 8.0 g/cm³ (0.27 to 0.29 lb/cu in) depending on the alloy.

Additional Information

Stainless steel is any one of a family of alloy steels usually containing 10 to 30 percent chromium. In conjunction with low carbon content, chromium imparts remarkable resistance to corrosion and heat. Other elements, such as nickel, molybdenum, titanium, aluminum, niobium, copper, nitrogen, sulfur, phosphorus, or selenium, may be added to increase corrosion resistance to specific environments, enhance oxidation resistance, and impart special characteristics.

Most stainless steels are first melted in electric-arc or basic oxygen furnaces and subsequently refined in another steelmaking vessel, mainly to lower the carbon content. In the argon-oxygen decarburization process, a mixture of oxygen and argon gas is injected into the liquid steel. By varying the ratio of oxygen and argon, it is possible to remove carbon to controlled levels by oxidizing it to carbon monoxide without also oxidizing and losing expensive chromium. Thus, cheaper raw materials, such as high-carbon ferrochromium, may be used in the initial melting operation.

There are more than 100 grades of stainless steel. The majority are classified into five major groups in the family of stainless steels: austenitic, ferritic, martensitic, duplex, and precipitation-hardening.

Austenitic steels, which contain 16 to 26 percent chromium and up to 35 percent nickel, usually have the highest corrosion resistance. They are not hardenable by heat treatment and are nonmagnetic. The most common type is the 18/8, or 304, grade, which contains 18 percent chromium and 8 percent nickel. Typical applications include aircraft and the dairy and food-processing industries.

Standard ferritic steels contain 10.5 to 27 percent chromium and are nickel-free; because of their low carbon content (less than 0.2 percent), they are not hardenable by heat treatment and have less critical anticorrosion applications, such as architectural and auto trim.

Martensitic steels typically contain 11.5 to 18 percent chromium and up to 1.2 percent carbon with nickel sometimes added. They are hardenable by heat treatment, have modest corrosion resistance, and are employed in cutlery, surgical instruments, wrenches, and turbines.

Duplex stainless steels are a combination of austenitic and ferritic stainless steels in equal amounts; they contain 21 to 27 percent chromium, 1.35 to 8 percent nickel, 0.05 to 3 percent copper, and 0.05 to 5 percent molybdenum. Duplex stainless steels are stronger and more resistant to corrosion than austenitic and ferritic stainless steels, which makes them useful in storage-tank construction, chemical processing, and containers for transporting chemicals.

Precipitation-hardening stainless steel is characterized by its strength, which stems from the addition of aluminum, copper, and niobium to the alloy in amounts less than 0.5 percent of the alloy’s total mass. It is comparable to austenitic stainless steel with respect to its corrosion resistance, and it contains 15 to 17.5 percent chromium, 3 to 5 percent nickel, and 3 to 5 percent copper. Precipitation-hardening stainless steel is used in the construction of long shafts.

#2305 2024-09-17 00:03:00

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,767

Re: Miscellany

2305) Earphones / Headphones

Gist

Headphones are also known as earphones or, colloquially, cans. Circumaural (around the ear) and supra-aural (over the ear) headphones use a band over the top of the head to hold the drivers in place. Another type, known as earbuds or earpieces, consists of individual units that plug into the user's ear canal.

They are electroacoustic transducers, which convert an electrical signal to a corresponding sound. Headphones let a single user listen to an audio source privately, in contrast to a loudspeaker, which emits sound into the open air for anyone nearby to hear.

Headphones are a type of hardware output device that can be connected to a computer's line-out or speakers port, as well as wirelessly using Bluetooth. They are also referred to as earbuds. You can watch a movie or listen to audio without bothering anyone nearby by using headphones.

Summary

A headphone is a small loudspeaker (earphone) held over the ear by a band or wire worn on the head. Headphones are commonly employed in situations in which levels of surrounding noise are high, as in an airplane cockpit, or where a user such as a switchboard operator needs to keep the hands free, or where the listener is moving about or wants to listen without disturbing other people. A headphone may be equipped with one earphone or two and may include a miniature microphone, in which case it is called a headset. For listening to stereophonically reproduced sound, stereo headphones may be used, with separate channels of sound being fed to the two earphones.

An earphone is a small loudspeaker held or worn close to the listener’s ear or within the outer ear. Common forms include the hand-held telephone receiver; the headphone, in which one or two earphones are held in place by a band worn over the head; and the plug earphone, which is inserted in the outer opening of the ear. The conversion of electrical to acoustical signals is effected by any of the devices used in larger loudspeakers; the highest fidelity is provided by the so-called dynamic earphone, which ordinarily is made part of a headphone and equipped with a cushion to isolate the ears from other sound sources.

Details

Headphones are a pair of small loudspeaker drivers worn on or around the head over a user's ears. They are electroacoustic transducers, which convert an electrical signal to a corresponding sound. Headphones let a single user listen to an audio source privately, in contrast to a loudspeaker, which emits sound into the open air for anyone nearby to hear. Headphones are also known as earphones or, colloquially, cans. Circumaural (around the ear) and supra-aural (over the ear) headphones use a band over the top of the head to hold the drivers in place. Another type, known as earbuds or earpieces, consists of individual units that plug into the user's ear canal. A third type are bone conduction headphones, which typically wrap around the back of the head and rest in front of the ear canal, leaving the ear canal open. In the context of telecommunication, a headset is a combination of a headphone and microphone.

Headphones connect to a signal source such as an audio amplifier, radio, CD player, portable media player, mobile phone, video game console, or electronic musical instrument, either directly using a cord, or using wireless technology such as Bluetooth, DECT or FM radio. The first headphones were developed in the late 19th century for use by switchboard operators, to keep their hands free. Initially, the audio quality was mediocre; the invention of high-fidelity headphones was a step forward.

Headphones exhibit a range of different audio reproduction quality capabilities. Headsets designed for telephone use typically cannot reproduce sound with the high fidelity of expensive units designed for music listening by audiophiles. Headphones that use cables typically have either a 1/4 inch (6.4 mm) or 1/8 inch (3.5 mm) phone jack for plugging the headphones into the audio source. Some headphones are wireless, using Bluetooth connectivity to receive the audio signal by radio waves from source devices like cellphones and digital players. As a result of the Walkman effect, beginning in the 1980s, headphones started to be used in public places such as sidewalks, grocery stores, and public transit. Headphones are also used by people in various professional contexts, such as audio engineers mixing sound for live concerts or sound recordings; DJs, who use headphones to cue up the next song without the audience hearing; aircraft pilots; and call center employees. The latter two types of employees use headphones with an integrated microphone.

History

Headphones grew out of the need to free up a person's hands when operating a telephone. By the 1880s, soon after the invention of the telephone, telephone switchboard operators began to use head apparatuses to mount the telephone receiver. The receiver was mounted on the head by a clamp which held it next to the ear. The head mount freed the switchboard operator's hands, so that they could easily connect the wires of the telephone callers and receivers. The head-mounted telephone receiver in the singular form was called a headphone. These head-mounted phone receivers, unlike modern headphones, only had one earpiece.

By the 1890s a listening device with two earpieces was developed by the British company Electrophone. The device created a listening system through the phone lines that allowed the customer to connect into live feeds of performances at theaters and opera houses across London. Subscribers to the service could listen to the performance through a pair of massive earphones that connected below the chin and were held by a long rod.

French engineer Ernest Mercadier in 1891 patented a set of in-ear headphones. He was awarded U.S. Patent No. 454,138 for "improvements in telephone-receivers...which shall be light enough to be carried while in use on the head of the operator." The German company Siemens Brothers at this time was also selling headpieces for telephone operators which had two earpieces, although placed outside the ear. These headpieces by Siemens Brothers looked fairly similar to modern headphones. The majority of headgear used by telephone operators continued to have only one earpiece.

Modern headphones subsequently evolved out of the emerging field of wireless telegraphy, which was the beginning stage of radio broadcasting. Some early wireless telegraph developers chose to use the telephone receiver's speaker as the detector for the electrical signal of the wireless receiving circuit. By 1902 wireless telegraph innovators, such as Lee de Forest, were using two jointly head-mounted telephone receivers to hear the signal of the receiving circuit. The two head-mounted telephone receivers were called in the singular form "head telephones". By 1908 the headpiece began to be written simply as "head phones", and a year later the compound word "headphones" began to be used.

One of the earliest companies to make headphones for wireless operators was the Holtzer-Cabot Company in 1909. They were also makers of head receivers for telephone operators and normal telephone receivers for the home. Another early manufacturer of headphones was Nathaniel Baldwin. He was the first major supplier of headsets to the U.S. Navy. In 1910 he invented a prototype telephone headset due to his inability to hear sermons during Sunday service. He offered it for testing to the navy, which promptly ordered 100 of them because of their good quality. Wireless Specialty Apparatus Co., in partnership with Baldwin Radio Company, set up a manufacturing facility in Utah to fulfill orders.

These early headphones used moving iron drivers, with either single-ended or balanced armatures. The common single-ended type used voice coils wound around the poles of a permanent magnet, which were positioned close to a flexible steel diaphragm. The audio current through the coils varied the magnetic field of the magnet, exerting a varying force on the diaphragm, causing it to vibrate, creating sound waves. The requirement for high sensitivity meant that no damping was used, so the frequency response of the diaphragm had large peaks due to resonance, resulting in poor sound quality. These early models lacked padding, and were often uncomfortable to wear for long periods. Their impedance varied; headphones used in telegraph and telephone work had an impedance of 75 ohms. Those used with early wireless radio had more turns of finer wire to increase sensitivity. Impedance of 1,000 to 2,000 ohms was common, which suited both crystal sets and triode receivers. Some very sensitive headphones, such as those manufactured by Brandes around 1919, were commonly used for early radio work.

In early powered radios, the headphone was part of the vacuum tube's plate circuit and carried dangerous voltages. It was normally connected directly to the positive high voltage battery terminal, and the other battery terminal was securely grounded. The use of bare electrical connections meant that users could be shocked if they touched the bare headphone connections while adjusting an uncomfortable headset.

In 1958, John C. Koss, an audiophile and jazz musician from Milwaukee, produced the first stereo headphones.

Smaller earbud type earpieces, which plugged into the user's ear canal, were first developed for hearing aids. They became widely used with transistor radios, which commercially appeared in 1954 with the introduction of the Regency TR-1. The most popular audio device in history, the transistor radio changed listening habits, allowing people to listen to radio anywhere. The earbud uses either a moving iron driver or a piezoelectric crystal to produce sound. The 3.5 mm radio and phone connector, which is the most commonly used in portable application today, has been used at least since the Sony EFM-117J transistor radio, which was released in 1964. Its popularity was reinforced with its use on the Walkman portable tape player in 1979.

Applications

Headphones may be used with stationary CD and DVD players, home theater, personal computers, or portable devices (e.g., digital audio player/MP3 player, mobile phone), as long as these devices are equipped with a headphone jack. Cordless headphones are not connected to their source by a cable. Instead, they receive the signal over a radio or infrared transmission link, such as FM, Bluetooth or Wi-Fi. These are battery-powered receiver systems, of which the headphone is only a component. Cordless headphones are used at events such as a silent disco or silent gig.

In the professional audio sector, headphones are used in live situations by disc jockeys with a DJ mixer, and by sound engineers for monitoring signal sources. In radio studios, DJs wear headphones when talking into the microphone so that they can monitor their own voice while the speakers are turned off to eliminate acoustic feedback. In studio recordings, musicians and singers use headphones to play or sing along to a backing track or band. In military applications, audio signals of many varieties are monitored using headphones.

Wired headphones are attached to an audio source by a cable. The most common connectors are 6.35 mm (1/4 inch) and 3.5 mm phone connectors. The larger 6.35 mm connector is more common on fixed-location home or professional equipment. The 3.5 mm connector remains the most widely used connector for portable applications today. Adapters are available for converting between 6.35 mm and 3.5 mm devices.
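
As a small worked check of the connector sizes above, the following sketch converts the inch names to millimetres. The function name inches_to_mm is made up for this illustration. It shows that 1/4 inch is exactly 6.35 mm, while the 3.5 mm connector, often loosely called "1/8 inch", is a nominal size rather than an exact conversion.

```python
MM_PER_INCH = 25.4  # exact by definition of the international inch


def inches_to_mm(inches: float) -> float:
    """Convert a length in inches to millimetres."""
    return inches * MM_PER_INCH


quarter_inch_mm = inches_to_mm(1 / 4)
eighth_inch_mm = inches_to_mm(1 / 8)

print(f"1/4 inch = {quarter_inch_mm:.3f} mm")  # matches the 6.35 mm connector
print(f"1/8 inch = {eighth_inch_mm:.3f} mm")   # slightly under the 3.5 mm connector
```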

As active components, wireless headphones tend to be costlier because they need internal hardware such as a battery, a charging controller, a speaker driver, and a wireless transceiver, whereas wired headphones are passive components that leave speaker driving to the audio source.

Some headphone cords are equipped with a serial potentiometer for volume control.

Wired headphones may have a non-detachable cable or a detachable male-to-male auxiliary cable. Some have two ports so that another wired headphone can be connected in a parallel circuit, which splits the audio signal to share with another listener but can also be used to hear audio from two inputs simultaneously. An external audio splitter can retrofit this ability.
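
To illustrate what a parallel connection means for the audio source, here is a minimal sketch that computes the combined load of several headphones wired in parallel. It assumes each headphone behaves as a pure resistance, which is a simplification, and the 32-ohm and 64-ohm values are hypothetical examples, not figures from the text above. The function name parallel_impedance is also made up for this illustration.

```python
def parallel_impedance(*impedances_ohms: float) -> float:
    """Combined impedance of loads in parallel, treating each as a pure resistance."""
    if not impedances_ohms:
        raise ValueError("need at least one load")
    if any(z <= 0 for z in impedances_ohms):
        raise ValueError("impedances must be positive")
    # Parallel loads add as reciprocals: 1/Z = 1/Z1 + 1/Z2 + ...
    return 1.0 / sum(1.0 / z for z in impedances_ohms)


# Two hypothetical 32-ohm headphones sharing one output
shared_pair = parallel_impedance(32.0, 32.0)
print(shared_pair)  # 16.0

# A hypothetical 32-ohm and 64-ohm pair
mixed_pair = parallel_impedance(32.0, 64.0)
print(round(mixed_pair, 2))
```

Under this simplification the source sees a lower impedance than either headphone alone, so it has to deliver more current for the same output voltage.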

Applications for audiometric testing

Various types of specially designed headphones or earphones are also used in audiology to evaluate the status of the auditory system: establishing hearing thresholds, medically diagnosing hearing loss, identifying other hearing-related diseases, and monitoring hearing status in occupational hearing conservation programs. Specific models of headphones have been adopted as the standard due to the ease of calibration and ability to compare results between testing facilities.

Supra-aural style headphones are historically the most commonly used in audiology as they are the easiest to calibrate and were considered the standard for many years. Commonly used models are the Telephonics Dynamic Headphone (TDH) 39, TDH-49, and TDH-50. In-the-ear or insert style earphones are used more commonly today as they provide higher levels of interaural attenuation, introduce less variability when testing 6,000 and 8,000 Hz, and avoid testing issues resulting from collapsed ear canals. A commonly used model of insert earphone is the Etymotic Research ER-3A. Circum-aural earphones are also used to establish hearing thresholds in the extended high frequency range (8,000 Hz to 20,000 Hz). Along with Etymotic Research ER-2A insert earphones, the Sennheiser HDA300 and Koss HV/1A circum-aural earphones are the only models that have reference equivalent threshold sound pressure level values for the extended high frequency range as described by ANSI standards.

Audiometers and headphones must be calibrated together. During the calibration process, the output signal from the audiometer to the headphones is measured with a sound level meter to ensure that the signal is accurate to the reading on the audiometer for sound pressure level and frequency. Calibration is done with the earphones in an acoustic coupler that is intended to mimic the transfer function of the outer ear. Because specific headphones are used in the initial audiometer calibration process, they cannot be replaced with any other set of headphones, even from the same make and model.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#2306 2024-09-17 19:39:43

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,767

Re: Miscellany

2306) Graphite

Gist

Graphite is a crystalline form of carbon and a semimetal, and it is one of the best-known carbon allotropes. Under standard conditions it is the most stable form of carbon, so it is used to define the standard state for the heat of formation of carbon compounds.

Graphite is used in pencils, lubricants, crucibles, foundry facings, polishes, brushes for electric motors, and cores of nuclear reactors.

Summary

Graphite is a mineral consisting of carbon. Graphite has a greasy feel and leaves a black mark, thus the name from the Greek verb graphein, “to write.”

Graphite has a layered structure that consists of rings of six carbon atoms arranged in widely spaced horizontal sheets. Graphite thus crystallizes in the hexagonal system, in contrast to diamond, another form of carbon, that crystallizes in the octahedral or tetrahedral system. Such pairs of differing forms of the same element usually are rather similar in their physical properties, but not so in this case. Graphite is dark gray to black, opaque, and very soft (with a Mohs scale hardness of 1.5), while diamond may be colorless and transparent and is the hardest naturally occurring substance (with a Mohs scale hardness of 10). Graphite is very soft because the individual layers of carbon atoms are not as tightly bound together as the atoms within the layer. It is an excellent conductor of heat and electricity.

Graphite is formed by the metamorphosis of sediments containing carbonaceous material, by the reaction of carbon compounds with hydrothermal solutions or magmatic fluids, or possibly by the crystallization of magmatic carbon. It occurs as isolated scales, large masses, or veins in older crystalline rocks, gneiss, schist, quartzite, and marble and also in granites, pegmatites, and carbonaceous clay slates. Small isometric crystals of graphitic carbon (possibly pseudomorphs after diamond) found in meteoritic iron are called cliftonite.

Naturally occurring graphite is classified into three types: amorphous, flake, and vein. Amorphous is the most common kind and is formed by metamorphism under low pressures and temperatures. It is found in coal and shale and has the lowest carbon content, typically 70 to 90 percent, of the three types. Flake graphite appears in flat layers and is formed by metamorphism under high pressures and temperatures. It is the most commonly used type and has a carbon content between 85 and 98 percent. Vein graphite is the rarest form and is likely formed when carbon compounds react with hydrothermal solutions or magmatic fluids. Vein graphite can have a purity greater than 99 percent and is commercially mined only in Sri Lanka.

Graphite was first synthesized accidentally by Edward G. Acheson while he was performing high-temperature experiments on carborundum. He found that at about 4,150 °C (7,500 °F) the silicon in the carborundum vaporized, leaving the carbon behind in graphitic form. Acheson was granted a patent for graphite manufacture in 1896, and commercial production started in 1897. Since 1918 petroleum coke, small and imperfect graphite crystals surrounded by organic compounds, has been the major raw material in the production of 99 to 99.5 percent pure graphite.