Math Is Fun Forum

You are not logged in.

- Topics: Active | Unanswered

#1601 2022-12-17 00:45:54

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,394

Re: Miscellany

1564) Night

Summary

Night (also described as night time, unconventionally spelled as "nite") is the period of ambient darkness from sunset to sunrise during each 24-hour day, when the Sun is below the horizon. The exact time when night begins and ends depends on the location and varies throughout the year, based on factors such as season and latitude.

The word can be used in a different sense as the time between bedtime and morning. In common communication, the word night is used as a farewell ("good night", sometimes shortened to "night"), mainly when someone is going to sleep or leaving.

Astronomical night is the period between astronomical dusk and astronomical dawn when the Sun is between 18 and 90 degrees below the horizon and does not illuminate the sky. As seen from latitudes between about 48.56° and 65.73° north or south of the Equator, complete darkness does not occur around the summer solstice because, although the Sun sets, it is never more than 18° below the horizon at lower culmination, −90° Sun angles occur at the Tropic of Cancer on the December solstice and Tropic of Capricorn on the June solstice, and at the equator on equinoxes. And as seen from latitudes greater than 72° north or south of the equator, complete darkness does not occur both equinoxes because, although the Sun sets, it is never more than 18° below the horizon.

The opposite of night is day (or "daytime", to distinguish it from "day" referring to a 24-hour period). Twilight is the period of night after sunset or before sunrise when the Sun still illuminates the sky when it is below the horizon. At any given time, one side of Earth is bathed in sunlight (the daytime), while the other side is in darkness caused by Earth blocking the sunlight. The central part of the shadow is called the umbra, where the night is darkest.

Natural illumination at night is still provided by a combination of moonlight, planetary light, starlight, zodiacal light, gegenschein, and airglow. In some circumstances, aurorae, lightning, and bioluminescence can provide some illumination. The glow provided by artificial lighting is sometimes referred to as light pollution because it can interfere with observational astronomy and ecosystems.

Details

Duration and geography

On Earth, an average night is shorter than daytime due to two factors. Firstly, the Sun's apparent disk is not a point, but has an angular diameter of about 32 arcminutes (32'). Secondly, the atmosphere refracts sunlight so that some of it reaches the ground when the Sun is below the horizon by about 34'. The combination of these two factors means that light reaches the ground when the center of the solar disk is below the horizon by about 50'. Without these effects, daytime and night would be the same length on both equinoxes, the moments when the Sun appears to contact the celestial equator. On the equinoxes, daytime actually lasts almost 14 minutes longer than night does at the Equator, and even longer towards the poles.

The summer and winter solstices mark the shortest and longest nights, respectively. The closer a location is to either the North Pole or the South Pole, the wider the range of variation in the night's duration. Although daytime and night nearly equalize in length on the equinoxes, the ratio of night to day changes more rapidly at high latitudes than at low latitudes before and after an equinox. In the Northern Hemisphere, Denmark experiences shorter nights in June than India. In the Southern Hemisphere, Antarctica sees longer nights in June than Chile. Both hemispheres experience the same patterns of night length at the same latitudes, but the cycles are 6 months apart so that one hemisphere experiences long nights (winter) while the other is experiencing short nights (summer).

In the region within either polar circle, the variation in daylight hours is so extreme that part of summer sees a period without night intervening between consecutive days, while part of winter sees a period without daytime intervening between consecutive nights.

On other celestial bodies

The phenomenon of day and night is due to the rotation of a celestial body about its axis, creating an illusion of the sun rising and setting. Different bodies spin at very different rates, however. Some may spin much faster than Earth, while others spin extremely slowly, leading to very long days and nights. The planet Venus rotates once every 224.7 days – by far the slowest rotation period of any of the major planets. In contrast, the gas giant Jupiter's sidereal day is only 9 hours and 56 minutes. However, it is not just the sidereal rotation period which determines the length of a planet's day-night cycle but the length of its orbital period as well - Venus has a rotation period of 224.7 days, but a day-night cycle just 116.75 days long due to its retrograde rotation and orbital motion around the Sun. Mercury has the longest day-night cycle as a result of its 3:2 resonance between its orbital period and rotation period - this resonance gives it a day-night cycle that is 176 days long. A planet may experience large temperature variations between day and night, such as Mercury, the planet closest to the sun. This is one consideration in terms of planetary habitability or the possibility of extraterrestrial life.

Effect on life:

Biological

The disappearance of sunlight, the primary energy source for life on Earth, has dramatic effects on the morphology, physiology and behavior of almost every organism. Some animals sleep during the night, while other nocturnal animals, including moths and crickets, are active during this time. The effects of day and night are not seen in the animal kingdom alone – plants have also evolved adaptations to cope best with the lack of sunlight during this time. For example, crassulacean acid metabolism is a unique type of carbon fixation which allows some photosynthetic plants to store carbon dioxide in their tissues as organic acids during the night, which can then be used during the day to synthesize carbohydrates. This allows them to keep their stomata closed during the daytime, preventing transpiration of water when it is precious.

Social

The first constant electric light was demonstrated in 1835. As artificial lighting has improved, especially after the Industrial Revolution, night time activity has increased and become a significant part of the economy in most places. Many establishments, such as nightclubs, bars, convenience stores, fast-food restaurants, gas stations, distribution facilities, and police stations now operate 24 hours a day or stay open as late as 1 or 2 a.m. Even without artificial light, moonlight sometimes makes it possible to travel or work outdoors at night.

Nightlife is a collective term for entertainment that is available and generally more popular from the late evening into the early hours of the morning. It includes pubs, bars, nightclubs, parties, live music, concerts, cabarets, theatre, cinemas, and shows. These venues often require a cover charge for admission. Nightlife entertainment is often more adult-oriented than daytime entertainment.

Cultural and psychological aspects

Night is often associated with danger and evil, because of the psychological connection of night's all-encompassing darkness to the fear of the unknown and darkness's hindrance of a major sensory system (the sense of sight). Nighttime is naturally associated with vulnerability and danger for human physical survival. Criminals, animals, and other potential dangers can be concealed by darkness. Midnight has a particular importance in human imagination and culture.

Upper Paleolithic art was found to show (by Leroi-Gourhan) a pattern of choices where the portrayal of animals that were experienced as dangerous were located at a distance from the entrance of a cave dwelling at a number of different cave locations.

The belief in magic often includes the idea that magic and magicians are more powerful at night. Séances of spiritualism are usually conducted closer to midnight. Similarly, mythical and folkloric creatures such as vampires and werewolves are described as being more active at night. Ghosts are believed to wander around almost exclusively during night-time. In almost all cultures, there exist stories and legends warning of the dangers of night-time. In fact, the Saxons called the darkness of night the "death mist".

The cultural significance of the night in Islam differs from that in Western culture. The Quran was revealed during the Night of Power, the most significant night according to Islam. Muhammad made his famous journey from Mecca to Jerusalem and then to heaven in the night. Another prophet, Abraham came to a realization of the supreme being in charge of the universe at night.

People who prefer to be active during the night-time are called night owls.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Offline

#1602 2022-12-18 00:58:04

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,394

Re: Miscellany

1565) Dawn

Summary

Dawn is the time that marks the beginning of twilight before sunrise. It is recognized by the appearance of indirect sunlight being scattered in Earth's atmosphere, when the centre of the Sun's disc has reached 18° below the observer's horizon. This morning twilight period will last until sunrise (when the Sun's upper limb breaks the horizon), when direct sunlight outshines the diffused light.

Types of dawn

Dawn begins with the first sight of lightness in the morning, and continues until the Sun breaks the horizon. This morning twilight before sunrise is divided into three categories depending on the amount of sunlight that is present in the sky, which is determined by the angular distance of the centre of the Sun (degrees below the horizon) in the morning. These categories are astronomical, nautical, and civil dawn.

Astronomical dawn

Astronomical dawn begins when the Sun is 18 degrees below the horizon in the morning. Astronomical twilight follows instantly until the Sun is 12 degrees below the horizon. At this point a very small portion of the Sun's rays illuminate the sky and the fainter stars begin to disappear. Astronomical dawn is often indistinguishable from night, especially in areas with light pollution. Astronomical dawn marks the beginning of astronomical twilight, which lasts until nautical dawn.

Nautical dawn

Nautical twilight begins when there is enough illumination for sailors to distinguish the horizon at sea but the sky being too dark to perform outdoor activities. Formally, it begins when the Sun is 12 degrees below the horizon in the morning. The sky becomes light enough to clearly distinguish it from land and water. Nautical dawn marks the start of nautical twilight, which lasts until civil dawn.

Civil dawn

Civil dawn begins when there is enough light for most objects to be distinguishable, so that some outdoor activities can commence. Formally, it occurs when the Sun is 6 degrees below the horizon in the morning.

If the sky is clear, it is blue colored, and if there is some cloud or haze, there can be bronze, orange and yellow colours. Some bright stars and planets such as Venus and Jupiter are visible to the naked eye at civil dawn. This moment marks the start of civil twilight, which lasts until sunrise.

Effects of latitude

The duration of the twilight period (e.g. between astronomical dawn and sunrise) varies greatly depending on the observer's latitude: from a little over 70 minutes at the Equator, to many hours in the polar regions.

The Equator

The period of twilight is shortest at the Equator, where the equinox Sun rises due east and sets due west, at a right angle to the horizon. Each stage of twilight (civil, nautical, and astronomical) lasts only 24 minutes. From anywhere on Earth, the twilight period is shortest around the equinoxes and longest on the solstices.

Polar regions

Daytime becomes longer as the summer solstice approaches, while nighttime gets longer as the winter solstice approaches. This can have a potential impact on the times and durations of dawn and dusk. This effect is more pronounced closer to the poles, where the Sun rises at the vernal equinox and sets at the autumn equinox, with a long period of twilight, lasting for a few weeks.

The polar circle (at 66°34′ north or south) is defined as the lowest latitude at which the Sun does not set at the summer solstice. Therefore, the angular radius of the polar circle is equal to the angle between Earth's equatorial plane and the ecliptic plane. This period of time with no sunset lengthens closer to the pole.

Near the summer solstice, latitudes higher than 54°34′ get no darker than nautical twilight; the "darkness of the night" varies greatly at these latitudes.

At latitudes higher than about 60°34, summer nights get no darker than civil twilight. This period of "bright nights" is longer at higher latitudes.

Example

Around the summer solstice, Glasgow, Scotland at 55°51′ N, and Copenhagen, Denmark at 55°40′ N, get a few hours of "night feeling". Oslo, Norway at 59°56′ N, and Stockholm, Sweden at 59°19′ N, seem very bright when the Sun is below the horizon. When the Sun gets 9.0 to 9.5 degrees below the horizon (at summer solstice this is at latitudes 57°30′–57°00′), the zenith gets dark even on cloud-free nights (if there is no full moon), and the brightest stars are clearly visible in a large majority of the sky.

Mythology and religion

In Islam, Zodiacal Light (or "false dawn") is referred to as False Morning and astronomical dawn is called Sahar or True Morning, and it is the time of first prayer of the day, and the beginning of the daily fast during Ramadan.

Many Indo-European mythologies have a dawn goddess, separate from the male Solar deity, her name deriving from PIE *h2ausos-, derivations of which include Greek Eos, Roman Aurora and Indian Ushas. Also related is Lithuanian Aušrinė, and possibly a Germanic *Austrōn- (whence the term Easter). In Sioux mythology, Anpao is an entity with two faces.

The Hindu dawn deity Ushas is female, whereas Surya, the Sun, and Aruṇa, the Sun's charioteer, are male. Ushas is one of the most prominent Rigvedic deities. The time of dawn is also referred to as the Brahmamuhurtham (Brahma is the God of creation and muhurtham is a Hindu unit of time), and is considered an ideal time to perform spiritual activities, including meditation and yoga. In some parts of India, both Usha and Pratyusha (dusk) are worshiped along with the Sun during the festival of Chhath.

Jesus in the Bible is often symbolized by dawn in the morning, also when Jesus rose on the third day it happened during the morning. Prime is the fixed time of prayer of the traditional Divine Office (Canonical Hours) in Christian liturgy, said at the first hour of daylight. Associated with Jesus, in Christianity, Christian burials take place in the direction of dawn.

In Judaism, the question of how to calculate dawn (Hebrew Alos/Alot HaShachar, or Alos/Alot) is posed by the Talmud, as it has many ramifications for Jewish law (such as the possible start time for certain daytime commandments, like prayer). The simple reading of the Talmud is that dawn takes place 72 minutes before sunrise. Others, including the Vilna Gaon, have the understanding that the Talmud's timeframe for dawn was referring specifically to an equinox day in Mesopotamia, and is therefore teaching that dawn should be calculated daily as commencing when the Sun is 16.1 degrees below the horizon. The longstanding practice among most Sephardic Jews is to follow the first opinion, while many Ashkenazi Jews follow the latter view.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Offline

#1603 2022-12-19 00:03:21

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,394

Re: Miscellany

1566) Dusk

Summary

Dusk is the time just before night when the daylight has almost gone but when it is not completely dark.

Details

Dusk occurs at the darkest stage of twilight, or at the very end of astronomical twilight after sunset and just before nightfall. At predusk, during early to intermediate stages of twilight, enough light in the sky under clear conditions may occur to read outdoors without artificial illumination; however, at the end of civil twilight (when Earth rotates to a point at which the center of the Sun's disk is 6° below the local horizon), such lighting is required to read outside. The term dusk usually refers to astronomical dusk, or the darkest part of twilight before night begins.

Technical definitions

Civil, nautical, and astronomical twilight. Dusk is the darkest part of evening twilight.

The time of dusk is the moment at the very end of astronomical twilight, just before the minimum brightness of the night sky sets in, or may be thought of as the darkest part of evening twilight.However, technically, the three stages of dusk are as follows:

* At civil dusk, the center of the Sun's disc goes 6° below the horizon in the evening. It marks the end of civil twilight, which begins at sunset. At this time objects are still distinguishable and depending on weather conditions some stars and planets may start to become visible to the naked eye. The sky has many colors at this time, such as orange and red. Beyond this point artificial light may be needed to carry out outdoor activities, depending on atmospheric conditions and location.

* At nautical dusk, the Sun apparently moves to 12° below the horizon in the evening. It marks the end of nautical twilight, which begins at civil dusk. At this time, objects are less distinguishable, and stars and planets appear to brighten.

* At astronomical dusk, the Sun's position is 18° below the horizon in the evening. It marks the end of astronomical twilight, which begins at nautical dusk. After this time the Sun no longer illuminates the sky, and thus no longer interferes with astronomical observations.

Media

Dusk can be used to create an ominous tone and has been used as a title for many projects. One instance of this is the 2018 first person shooter Dusk (video game) by New Blood Interactive whose setting is in a similar lighting as the actual time of day.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Offline

#1604 2022-12-19 21:58:15

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,394

Re: Miscellany

1567) Watermark

Summary

Watermark is a design produced by creating a variation in the thickness of paper fibre during the wet-paper phase of papermaking. This design is clearly visible when the paper is held up to a light source.

Watermarks are known to have existed in Italy before the end of the 13th century. Two types of watermark have been produced. The more common type, which produces a translucent design when held up to a light, is produced by a wire design laid over and sewn onto the sheet mold wire (for handmade paper) or attached to the “dandy roll” (for machine-made paper). The rarer “shaded” watermark is produced by a depression in the sheet mold wire, which results in a greater density of fibres—hence, a shaded, or darker, design when held up to a light. Watermarks are often used commercially to identify the manufacturer or the grade of paper. They have also been used to detect and prevent counterfeiting and forgery.

The notion of watermarks as a means of identification was carried beyond the printing press into the computer age. Digital watermarks, which may or may not be visible, can be added to image and video files so that information embedded in the file is retrievable for purposes of copyright protection. Audio files can also be watermarked in this manner.

Details

A watermark is an identifying image or pattern in paper that appears as various shades of lightness/darkness when viewed by transmitted light (or when viewed by reflected light, atop a dark background), caused by thickness or density variations in the paper. Watermarks have been used on postage stamps, currency, and other government documents to discourage counterfeiting. There are two main ways of producing watermarks in paper; the dandy roll process, and the more complex cylinder mould process.

Watermarks vary greatly in their visibility; while some are obvious on casual inspection, others require some study to pick out. Various aids have been developed, such as watermark fluid that wets the paper without damaging it.

A watermark is very useful in the examination of paper because it can be used for dating documents and artworks, identifying sizes, mill trademarks and locations, and determining the quality of a sheet of paper.

The word is also used for digital practices that share similarities with physical watermarks. In one case, overprint on computer-printed output may be used to identify output from an unlicensed trial version of a program. In another instance, identifying codes can be encoded as a digital watermark for a music, video, picture, or other file.

History

The origin of the water part of a watermark can be found back when a watermark was something that only existed in paper. At that time the watermark was created by changing the thickness of the paper and thereby creating a shadow/lightness in the watermarked paper. This was done while the paper was still wet/watery and therefore the mark created by this process is called a watermark.

Watermarks were first introduced in Fabriano, Italy, in 1282.

Processes:

Dandy roll process

Traditionally, a watermark was made by impressing a water-coated metal stamp onto the paper during manufacturing. The invention of the dandy roll in 1826 by John Marshall revolutionised the watermark process and made it easier for producers to watermark their paper.

The dandy roll is a light roller covered by material similar to window screen that is embossed with a pattern. Faint lines are made by laid wires that run parallel to the axis of the dandy roll, and the bold lines are made by chain wires that run around the circumference to secure the laid wires to the roll from the outside. Because the chain wires are located on the outside of the laid wires, they have a greater influence on the impression in the pulp, hence their bolder appearance than the laid wire lines.

This embossing is transferred to the pulp fibres, compressing and reducing their thickness in that area. Because the patterned portion of the page is thinner, it transmits more light through and therefore has a lighter appearance than the surrounding paper. If these lines are distinct and parallel, and/or there is a watermark, then the paper is termed laid paper. If the lines appear as a mesh or are indiscernible, and/or there is no watermark, then it is called wove paper. This method is called line drawing watermarks.

Cylinder mould process

Another type of watermark is called the cylinder mould watermark. It is a shaded watermark first used in 1848 that incorporates tonal depth and creates a greyscale image. Instead of using a wire covering for the dandy roll, the shaded watermark is created by areas of relief on the roll's own surface. Once dry, the paper may then be rolled again to produce a watermark of even thickness but with varying density. The resulting watermark is generally much clearer and more detailed than those made by the Dandy Roll process, and as such Cylinder Mould Watermark Paper is the preferred type of watermarked paper for banknotes, passports, motor vehicle titles, and other documents where it is an important anti-counterfeiting measure.

On postage stamps

In philately, the watermark is a key feature of a stamp, and often constitutes the difference between a common and a rare stamp. Collectors who encounter two otherwise identical stamps with different watermarks consider each stamp to be a separate identifiable issue. The "classic" stamp watermark is a small crown or other national symbol, appearing either once on each stamp or a continuous pattern. Watermarks were nearly universal on stamps in the 19th and early 20th centuries, but generally fell out of use and are not commonly used on modern U.S. issues, but some countries continue to use them.

Some types of embossing, such as that used to make the "cross on oval" design on early stamps of Switzerland, resemble a watermark in that the paper is thinner, but can be distinguished by having sharper edges than is usual for a normal watermark. Stamp paper watermarks also show various designs, letters, numbers and pictorial elements.

The process of bringing out the stamp watermark is fairly simple. Sometimes a watermark in stamp paper can be seen just by looking at the unprinted back side of a stamp. More often, the collector must use a few basic items to get a good look at the watermark. For example, watermark fluid may be applied to the back of a stamp to temporarily reveal the watermark.

Even using the simple watermarking method described, it can be difficult to distinguish some watermarks. Watermarks on stamps printed in yellow and orange can be particularly difficult to see. A few mechanical devices are also used by collectors to detect watermarks on stamps such as the Morley-Bright watermark detector and the more expensive Safe Signoscope. Such devices can be very useful for they can be used without the application of watermark fluid and also allow the collector to look at the watermark for a longer period of time to more easily detect the watermark.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Offline

#1605 2022-12-20 20:44:54

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,394

Re: Miscellany

1568) Child

Summary

A child (pl: children) is a human being between the stages of birth and puberty, or between the developmental period of infancy and puberty. The legal definition of child generally refers to a minor, otherwise known as a person younger than the age of majority. Children generally have fewer rights and responsibilities than adults. They are classed as unable to make serious decisions.

Child may also describe a relationship with a parent (such as sons and daughters of any age) or, metaphorically, an authority figure, or signify group membership in a clan, tribe, or religion; it can also signify being strongly affected by a specific time, place, or circumstance, as in "a child of nature" or "a child of the Sixties."

Details:

Healthy Development

The early years of a child’s life are very important for his or her health and development. Healthy development means that children of all abilities, including those with special health care needs, are able to grow up where their social, emotional and educational needs are met. Having a safe and loving home and spending time with family―playing, singing, reading, and talking―are very important. Proper nutrition, exercise, and sleep also can make a big difference.

Effective Parenting Practices

Parenting takes many different forms. However, some positive parenting practices work well across diverse families and in diverse settings when providing the care that children need to be happy and healthy, and to grow and develop well. A recent report looked at the evidence in scientific publications for what works, and found these key ways that parents can support their child’s healthy development:

* Responding to children in a predictable way

* Showing warmth and sensitivity

* Having routines and household rules

* Sharing books and talking with children

* Supporting health and safety

* Using appropriate discipline without harshness

Parents who use these practices can help their child stay healthy, be safe, and be successful in many areas—emotional, behavioral, cognitive, and social. Read more about the report here.

Positive Parenting Tips

Get parenting, health, and safety tips for children from birth through 17 years of age

Developmental Milestones

Skills such as taking a first step, smiling for the first time, and waving “bye-bye” are called developmental milestones. Children reach milestones in how they play, learn, speak, behave, and move (for example, crawling and walking).

Children develop at their own pace, so it’s impossible to tell exactly when a child will learn a given skill. However, the developmental milestones give a general idea of the changes to expect as a child gets older.

As a parent, you know your child best. If your child is not meeting the milestones for his or her age, or if you think there could be a problem with your child’s development, talk with your child’s doctor and share your concerns. Don’t wait.

Learn more about milestones and parenting tips from the National Institutes of Health:

* Normal growth and developmentexternal icon

* Preschoolersexternal icon

* School age children external icon

* Adolescents external icon

* Developmental Monitoring and Screening

Parents, grandparents, early childhood providers, and other caregivers can participate in developmental monitoring, which observes how your child grows and changes over time and whether your child meets the typical developmental milestones in playing, learning, speaking, behaving, and moving.

Developmental screening takes a closer look at how your child is developing. A missed milestone could be a sign of a problem, so when you take your child to a well visit, the doctor, nurse, or another specialist might give your child a brief test, or you will complete a questionnaire about your child.

If the screening tool identifies an area of concern, a formal developmental evaluation may be needed, where a trained specialist takes an in-depth look at a child’s development.

If a child has a developmental delay, it is important to get help as soon as possible. When a developmental delay is not found early, children must wait to get the help they need to do well in social and educational settings.

If You’re Concerned

If your child is not meeting the milestones for his or her age, or you are concerned about your child’s development, talk with your child’s doctor and share your concerns. Don’t wait!

Additional Information

Children thrive when they feel safe, loved and nurtured. For many parents, forming a close bond with their child comes easily. For many others who did not feel cherished, protected or valued during their own childhood, it can be much more of a struggle. The good news is that parenting skills can be learned. Read on to learn why bonding with your little one is crucial to their development and well-being, and some simple ways that you can do it every day.

Why building a relationship with your child matters

Providing your child with love and affection is a pre-requisite for the healthy development of their brain, their self-confidence, capacity to thrive and even their ability to form relationships as they go through life.

You literally cannot give babies ‘too much’ love. There is no such thing as spoiling them by holding them too much or giving them too much attention. Responding to their cues for feeding and comfort makes babies feel secure. When babies are routinely left alone, they think they have been abandoned and so they become more clingy and insecure when their parents return.

When you notice your child’s needs and respond to them in a loving way, this helps your little one to feel at ease. Feeling safe, seen, soothed and secure increases neuroplasticity, the ability of the brain to change and adapt. When a child’s world at home is full of love, they are better prepared to deal with the challenges of the larger world. A positive early bond lays the ground for children to grow up to become happy, independent adults. Loving, secure relationships help build resilience, our ability to cope with challenges and recover from setbacks.

How to bond with your child

* Parenting can be difficult at times and there is no such thing as a perfect parent. But if you can provide a loving and nurturing environment for your child to grow up in and you’re a steady and reliable presence in their life, then you’ll be helping them to have a great start in life. Here are some ways that can help you build a strong connection with your child from the moment you meet.

* Notice what they do. When your baby or young child cries, gestures or babbles, respond appropriately with a hug, eye contact or words. This not only teaches your child that you’re paying attention to them, but it helps to build neural connections in your little one’s brain that support the development of communication and social skills.

* Play together. By playing with your child, you are showing them that they are valued and fun to be around. Give them your full attention when you play games together and enjoy seeing the world from your child’s perspective. When you’re enjoying fun moments and laughing together, your body releases endorphins (“feel-good hormones”) that promote a feeling of well-being for both you and your child.

* Hold them close. Cuddling and having skin-to-skin contact with your baby helps to bring you closer in many ways. Your child will feel comforted by your heartbeat and will even get to know your smell. As your child gets a bit older, hugging them can help them learn to regulate their emotions and manage stress. This is because when a child receives a hug, their brain releases oxytocin – the “feel good” chemical – and calms the release of cortisol, the “stress” chemical.

* Have conversations. Taking interest in what your young child has to say shows them that you care about their thoughts and feelings. This can even start from day one. By talking and softly singing to your newborn, it lets them know that you are close by and paying attention to them. When they make cooing noises, respond with words to help them learn the back and forth of a conversation.

* Respond to their needs. Changing a diaper or nappy, feeding your child and helping them fall asleep reassures them that their needs will be met and that they are safe and cared for. Taking care of your child and meeting their needs is also a great reminder of your ability to support your child.

Above all, enjoy being with your child, make the most of the time together and know that your love and presence go a long way to helping your child thrive.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Offline

#1606 2022-12-21 13:11:48

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,394

Re: Miscellany

1509) Tower

Summary

A tower is any structure that is relatively tall in proportion to the dimensions of its base. It may be either freestanding or attached to a building or wall. Modifiers frequently denote a tower’s function (e.g., watchtower, water tower, church tower, and so on).

Historically, there are several types of structures particularly implied by the name. Defensive towers served as platforms from which a defending force could rain missiles down upon an attacking force. The Romans, Byzantines, and medieval Europeans built such towers along their city walls and adjoining important gates. The Romans and other peoples also used offensive, or siege, towers, as raised platforms for attacking troops to overrun high city walls. Military towers often gave their name to an entire fortress; the Tower of London, for example, includes the entire complex of buildings contiguous with the White Tower of William I the Conqueror.

Towers were an important feature of the churches and cathedrals built during the Romanesque and Gothic periods. Some Gothic church towers were designed to carry a spire, while others had flat roofs. Many church towers were used as belfries, though the most famous campanile, or bell tower, the Leaning Tower of Pisa (1174), is a freestanding structure. In civic architecture, towers were often used to hold clocks, as in many hotels de ville (town halls) in France and Germany. The use of towers declined somewhat during the Renaissance but reappeared in the more flamboyant Baroque architecture of the 17th and 18th centuries.

The use of steel frames enabled buildings to reach unprecedented heights in the late 19th and 20th centuries; the Eiffel Tower (1889) in Paris was the first structure to reveal the true vertical potential of steel construction. The ubiquity of modern skyscrapers has robbed the word tower of most of its meaning, though the Petronas Twin Towers in Kuala Lumpur, Malaysia, the Willis Tower in Chicago, and other skyscrapers still bear the term in their official names.

In 2007 the world’s tallest freestanding building was Taipei 101 (2003; the Taipei Financial Centre), 1,667 feet (508 metres) tall, in Taiwan. The tallest supported structure is a 2,063-foot (629-metre) stayed television broadcasting tower, completed in 1963 and located between Fargo and Blanchard, North Dakota, U.S.

CN Tower, also called Canadian National Tower, is a broadcast and telecommunications tower in Toronto. Standing at a height of 1,815 feet (553 metres), it was the world’s tallest freestanding structure until 2007, when it was surpassed by the Burj Dubai building in Dubayy (Dubai), U.A.E. Construction of CN Tower began in February 1973 and involved more than 1,500 workers; the tower was completed in February 1974, and the attachment of its antenna was finished in April 1975. First opened to the public on June 26, 1976, CN Tower was built by Canadian National Railway Company and was initially privately owned, but ownership of the tower was transferred to the Canadian government in 1995; it is now managed by a public corporation. CN Tower, whose designers included John Andrews, Webb Zerafa, Menkes Housden, and E.R. Baldwin, is by far Toronto’s most distinctive landmark. It is a major tourist attraction that includes observation decks, a revolving restaurant at some 1,151 feet (351 metres), and an entertainment complex. It is also a centre for telecommunications in Toronto.

Details

A tower is a tall structure, taller than it is wide, often by a significant factor. Towers are distinguished from masts by their lack of guy-wires and are therefore, along with tall buildings, self-supporting structures.

Towers are specifically distinguished from buildings in that they are built not to be habitable but to serve other functions using the height of the tower. For example, the height of a clock tower improves the visibility of the clock, and the height of a tower in a fortified building such as a castle increases the visibility of the surroundings for defensive purposes. Towers may also be built for observation, leisure, or telecommunication purposes. A tower can stand alone or be supported by adjacent buildings, or it may be a feature on top of a larger structure or building.

History

Towers have been used by mankind since prehistoric times. The oldest known may be the circular stone tower in walls of Neolithic Jericho (8000 BC). Some of the earliest towers were ziggurats, which existed in Sumerian architecture since the 4th millennium BC. The most famous ziggurats include the Sumerian Ziggurat of Ur, built in the 3rd millennium BC, and the Etemenanki, one of the most famous examples of Babylonian architecture.

Some of the earliest surviving examples are the broch structures in northern Scotland, which are conical tower houses. These and other examples from Phoenician and Roman cultures emphasised the use of a tower in fortification and sentinel roles. For example, the name of the Moroccan city of Mogador, founded in the first millennium BC, is derived from the Phoenician word for watchtower ('migdol'). The Romans utilised octagonal towers as elements of Diocletian's Palace in Croatia, which monument dates to approximately 300 AD, while the Servian Walls (4th century BC) and the Aurelian Walls (3rd century AD) featured square ones. The Chinese used towers as integrated elements of the Great Wall of China in 210 BC during the Qin Dynasty. Towers were also an important element of castles.

Other well known towers include the Leaning Tower of Pisa in Pisa, Italy built from 1173 until 1372, the Two Towers in Bologna, Italy built from 1109 until 1119 and the Towers of Pavia (25 survive), built between 11th and 13th century. The Himalayan Towers are stone towers located chiefly in Tibet built approximately 14th to 15th century.

Mechanics

Up to a certain height, a tower can be made with the supporting structure with parallel sides. However, above a certain height, the compressive load of the material is exceeded, and the tower will fail. This can be avoided if the tower's support structure tapers up the building.

A second limit is that of buckling—the structure requires sufficient stiffness to avoid breaking under the loads it faces, especially those due to winds. Many very tall towers have their support structures at the periphery of the building, which greatly increases the overall stiffness.

A third limit is dynamic; a tower is subject to varying winds, vortex shedding, seismic disturbances etc. These are often dealt with through a combination of simple strength and stiffness, as well as in some cases tuned mass dampers to damp out movements. Varying or tapering the outer aspect of the tower with height avoids vibrations due to vortex shedding occurring along the entire building simultaneously.

Functions

Although not correctly defined as towers, many modern high-rise buildings (in particular skyscraper) have 'tower' in their name or are colloquially called 'towers'. Skyscrapers are more properly classified as 'buildings'. In the United Kingdom, tall domestic buildings are referred to as tower blocks. In the United States, the original World Trade Center had the nickname the Twin Towers, a name shared with the Petronas Twin Towers in Kuala Lumpur. In addition some of the structures listed below do not follow the strict criteria used at List of tallest towers.

Strategic advantages

The tower throughout history has provided its users with an advantage in surveying defensive positions and obtaining a better view of the surrounding areas, including battlefields. They were constructed on defensive walls, or rolled near a target (see siege tower). Today, strategic-use towers are still used at prisons, military camps, and defensive perimeters.

Potential energy

By using gravity to move objects or substances downward, a tower can be used to store items or liquids like a storage silo or a water tower, or aim an object into the earth such as a drilling tower. Ski-jump ramps use the same idea, and in the absence of a natural mountain slope or hill, can be human-made.

Communication enhancement

In history, simple towers like lighthouses, bell towers, clock towers, signal towers and minarets were used to communicate information over greater distances. In more recent years, radio masts and cell phone towers facilitate communication by expanding the range of the transmitter. The CN Tower in Toronto, Ontario, Canada was built as a communications tower, with the capability to act as both a transmitter and repeater.

Transportation support

Towers can also be used to support bridges, and can reach heights that rival some of the tallest buildings above-water. Their use is most prevalent in suspension bridges and cable-stayed bridges. The use of the pylon, a simple tower structure, has also helped to build railroad bridges, mass-transit systems, and harbors.

Control towers are used to give visibility to help direct aviation traffic.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Offline

#1607 2022-12-22 00:43:58

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,394

Re: Miscellany

1510) Prefabrication

Summary

Prefabrication is the the assembly of buildings or their components at a location other than the building site. The method controls construction costs by economizing on time, wages, and materials. Prefabricated units may include doors, stairs, window walls, wall panels, floor panels, roof trusses, room-sized components, and even entire buildings.

The concept and practice of prefabrication in one form or another has been part of human experience for centuries; the modern sense of prefabrication, however, dates from about 1905. Until the invention of the gasoline-powered truck, prefabricated units—as distinct from precut building materials such as stones and logs—were of ultralight construction. Since World War I the prefabrication of more massive building elements has developed in accordance with the fluctuation of building activity in the United States, the Soviet Union, and western Europe.

Prefabrication requires the cooperation of architects, suppliers, and builders regarding the size of basic modular units. In the American building industry, for example, the 4 × 8-foot panel is a standard unit. Building plans are drafted using 8-foot ceilings, and floor plans are described in multiples of four. Suppliers of prefabricated wall units build wall frames in dimensions of 8 feet high by 4, 8, 16, or 24 feet long. Insulation, plumbing, electrical wiring, ventilation systems, doors, and windows are all constructed to fit within the 4 × 8-foot modular unit.

Another prefabricated unit widely used in light construction is the roof truss, which is manufactured and stockpiled according to angle of pitch and horizontal length in 4-foot increments.

On the scale of institutional and office buildings and works of civil engineering, such as bridges and dams, rigid frameworks of steel with spans up to 120 feet (37 m) are prefabricated. The skins of large buildings are often modular units of porcelainized steel. Stairwells are delivered in prefabricated steel units. Raceways and ducts for electrical wiring, plumbing, and ventilation are built into the metal deck panels used in floors and roofs. The Verrazano-Narrows Bridge in New York City (with a span of 4,260 feet [1,298 m]) is made of 60 prefabricated units weighing 400 tons each.

Precast concrete components include slabs, beams, stairways, modular boxes, and even kitchens and bathrooms complete with precast concrete fixtures.

A prefabricated building component that is mass-produced in an assembly line can be made in a shorter time for lower cost than a similar element fabricated by highly paid skilled labourers at a building site. Many contemporary building components also require specialized equipment for their construction that cannot be economically moved from one building site to another. Savings in material costs and assembly time are facilitated by locating the prefabrication operation at a permanent site. Materials that have become highly specialized, with attendant fluctuations in price and availability, can be stockpiled at prefabrication shops or factories. In addition, the standardization of building components makes it possible for construction to take place where the raw material is least expensive.

The major drawback to prefabrication is the dilution of responsibility. A unit that is designed in one area of the country may be prefabricated in another and shipped to yet a third area, which may or may not have adequate criteria for inspecting materials that are not locally produced. This fragmentation of control factors increases the probability of structural failure.

Details

Prefabrication is the practice of assembling components of a structure in a factory or other manufacturing site, and transporting complete assemblies or sub-assemblies to the construction site where the structure is to be located. The term is used to distinguish this process from the more conventional construction practice of transporting the basic materials to the construction site where all assembly is carried out.

The term prefabrication also applies to the manufacturing of things other than structures at a fixed site. It is frequently used when fabrication of a section of a machine or any movable structure is shifted from the main manufacturing site to another location, and the section is supplied assembled and ready to fit. It is not generally used to refer to electrical or electronic components of a machine, or mechanical parts such as pumps, gearboxes and compressors which are usually supplied as separate items, but to sections of the body of the machine which in the past were fabricated with the whole machine. Prefabricated parts of the body of the machine may be called 'sub-assemblies' to distinguish them from the other components.

Process and theory

An example from house-building illustrates the process of prefabrication. The conventional method of building a house is to transport bricks, timber, cement, sand, steel and construction aggregate, etc. to the site, and to construct the house on site from these materials. In prefabricated construction, only the foundations are constructed in this way, while sections of walls, floors and roof are prefabricated (assembled) in a factory (possibly with window and door frames included), transported to the site, lifted into place by a crane and bolted together.

Prefabrication is used in the manufacture of ships, aircraft and all kinds of vehicles and machines where sections previously assembled at the final point of manufacture are assembled elsewhere instead, before being delivered for final assembly.

The theory behind the method is that time and cost is saved if similar construction tasks can be grouped, and assembly line techniques can be employed in prefabrication at a location where skilled labour is available, while congestion at the assembly site, which wastes time, can be reduced. The method finds application particularly where the structure is composed of repeating units or forms, or where multiple copies of the same basic structure are being constructed. Prefabrication avoids the need to transport so many skilled workers to the construction site, and other restricting conditions such as a lack of power, lack of water, exposure to harsh weather or a hazardous environment are avoided. Against these advantages must be weighed the cost of transporting prefabricated sections and lifting them into position as they will usually be larger, more fragile and more difficult to handle than the materials and components of which they are made.

History

Prefabrication has been used since ancient times. For example, it is claimed that the world's oldest known engineered roadway, the Sweet Track constructed in England around 3800 BC, employed prefabricated timber sections brought to the site rather than assembled on-site.[citation needed]

Sinhalese kings of ancient Sri Lanka have used prefabricated buildings technology to erect giant structures, which dates back as far as 2000 years, where some sections were prepared separately and then fitted together, specially in the Kingdom of Anuradhapura and Polonnaruwa.

After the great Lisbon earthquake of 1755, the Portuguese capital, especially the Baixa district, was rebuilt by using prefabrication on an unprecedented scale. Under the guidance of Sebastião José de Carvalho e Melo, popularly known as the Marquis de Pombal, the most powerful royal minister of D. Jose I, a new Pombaline style of architecture and urban planning arose, which introduced early anti-seismic design features and innovative prefabricated construction methods, according to which large multistory buildings were entirely manufactured outside the city, transported in pieces and then assembled on site. The process, which lasted into the nineteenth century, lodged the city's residents in safe new structures unheard-of before the quake.

Also in Portugal, the town of Vila Real de Santo António in the Algarve, founded on 30 December 1773, was quickly erected through the use of prefabricated materials en masse. The first of the prefabricated stones was laid in March 1774. By 13 May 1776, the centre of the town had been finished and was officially opened.

In 19th century Australia a large number of prefabricated houses were imported from the United Kingdom.

The method was widely used in the construction of prefabricated housing in the 20th century, such as in the United Kingdom as temporary housing for thousands of urban families "bombed out" during World War II. Assembling sections in factories saved time on-site and the lightness of the panels reduced the cost of foundations and assembly on site. Coloured concrete grey and with flat roofs, prefab houses were uninsulated and cold and life in a prefab acquired a certain stigma, but some London prefabs were occupied for much longer than the projected 10 years.

The Crystal Palace, erected in London in 1851, was a highly visible example of iron and glass prefabricated construction; it was followed on a smaller scale by Oxford Rewley Road railway station.

During World War II, prefabricated Cargo ships, designed to quickly replace ships sunk by Nazi U-boats became increasingly common. The most ubiquitous of these ships was the American Liberty ship, which reached production of over 2,000 units, averaging 3 per day.

Current uses

The most widely used form of prefabrication in building and civil engineering is the use of prefabricated concrete and prefabricated steel sections in structures where a particular part or form is repeated many times. It can be difficult to construct the formwork required to mould concrete components on site, and delivering wet concrete to the site before it starts to set requires precise time management. Pouring concrete sections in a factory brings the advantages of being able to re-use moulds and the concrete can be mixed on the spot without having to be transported to and pumped wet on a congested construction site. Prefabricating steel sections reduces on-site cutting and welding costs as well as the associated hazards.

Prefabrication techniques are used in the construction of apartment blocks, and housing developments with repeated housing units. Prefabrication is an essential part of the industrialization of construction. The quality of prefabricated housing units had increased to the point that they may not be distinguishable from traditionally built units to those that live in them. The technique is also used in office blocks, warehouses and factory buildings. Prefabricated steel and glass sections are widely used for the exterior of large buildings.

Detached houses, cottages, log cabin, saunas, etc. are also sold with prefabricated elements. Prefabrication of modular wall elements allows building of complex thermal insulation, window frame components, etc. on an assembly line, which tends to improve quality over on-site construction of each individual wall or frame. Wood construction in particular benefits from the improved quality. However, tradition often favors building by hand in many countries, and the image of prefab as a "cheap" method only slows its adoption. However, current practice already allows the modifying the floor plan according to the customer's requirements and selecting the surfacing material, e.g. a personalized brick facade can be masoned even if the load-supporting elements are timber.

Prefabrication saves engineering time on the construction site in civil engineering projects. This can be vital to the success of projects such as bridges and avalanche galleries, where weather conditions may only allow brief periods of construction. Prefabricated bridge elements and systems offer bridge designers and contractors significant advantages in terms of construction time, safety, environmental impact, constructibility, and cost. Prefabrication can also help minimize the impact on traffic from bridge building. Additionally, small, commonly used structures such as concrete pylons are in most cases prefabricated.

Radio towers for mobile phone and other services often consist of multiple prefabricated sections. Modern lattice towers and guyed masts are also commonly assembled of prefabricated elements.

Prefabrication has become widely used in the assembly of aircraft and spacecraft, with components such as wings and fuselage sections often being manufactured in different countries or states from the final assembly site. However, this is sometimes for political rather than commercial reasons, such as for Airbus.

Advantages

* Moving partial assemblies from a factory often costs less than moving pre-production resources to each site

* Deploying resources on-site can add costs; prefabricating assemblies can save costs by reducing on-site work

* Factory tools - jigs, cranes, conveyors, etc. - can make production faster and more precise

* Factory tools - shake tables, hydraulic testers, etc. - can offer added quality assurance

* Consistent indoor environments of factories eliminate most impacts of weather on production

* Cranes and reusable factory supports can allow shapes and sequences without expensive on-site falsework

* Higher-precision factory tools can aid more controlled movement of building heat and air, for lower energy consumption and healthier buildings

* Factory production can facilitate more optimal materials usage, recycling, noise capture, dust capture, etc.

* Machine-mediated parts movement, and freedom from wind and rain can improve construction safety

Disadvantages

* Transportation costs may be higher for voluminous prefabricated sections (especially sections so big that they constitute oversize loads requiring special signage, escort vehicles, and temporary road closures) than for their constituent materials, which can often be packed more densely and are more likely to fit onto standard-sized vehicles.

* Large prefabricated sections may require heavy-duty cranes and precision measurement and handling to place in position.

Off-site fabrication

Off-site fabrication is a process that incorporates prefabrication and pre-assembly. The process involves the design and manufacture of units or modules, usually remote from the work site, and the installation at the site to form the permanent works at the site. In its fullest sense, off-site fabrication requires a project strategy that will change the orientation of the project process from construction to manufacture to installation. Examples of off-site fabrication are wall panels for homes, wooden truss bridge spans, airport control stations.

There are four main categories of off-site fabrication, which is often also referred to as off-site construction. These can be described as component (or sub-assembly) systems, panelised systems, volumetric systems, and modular systems. Below these categories different branches, or technologies are being developed. There are a vast number of different systems on the market which fall into these categories and with recent advances in digital design such as building information modeling (BIM), the task of integrating these different systems into a construction project is becoming increasingly a "digital" management proposition.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Offline

#1608 2022-12-23 00:12:42

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,394

Re: Miscellany

1511) Fragility (glass physics)

Summary

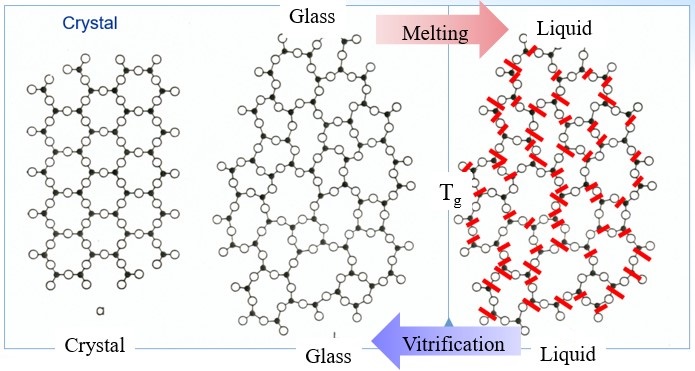

Glass physics: The standard definition of a glass (or vitreous solid) is a solid formed by rapid melt quenching. However, the term "glass" is often defined in a broader sense, to describe any non-crystalline (amorphous) solid that exhibits a glass transition when heated towards the liquid state. Glass is an amorphous solid.

Details

In glass physics, fragility characterizes how rapidly the dynamics of a material slows down as it is cooled toward the glass transition: materials with a higher fragility have a relatively narrow glass transition temperature range, while those with low fragility have a relatively broad glass transition temperature range. Physically, fragility may be related to the presence of dynamical heterogeneity in glasses, as well as to the breakdown of the usual Stokes–Einstein relationship between viscosity and diffusion.

Definition

Formally, fragility reflects the degree to which the temperature dependence of the viscosity (or relaxation time) deviates from Arrhenius behavior. This classification was originally proposed by Austen Angell. The most common definition of fragility is the "kinetic fragility index" m, which characterizes the slope of the viscosity (or relaxation time) of a material with temperature as it approaches the glass transition temperature from above:

where

is viscosity, Tg is the glass transition temperature, m is fragility, and T is temperature. Glass-formers with a high fragility are called "fragile"; those with a low fragility are called "strong". For example, silica has a relatively low fragility and is called "strong", whereas some polymers have relatively high fragility and are called "fragile". Fragility has no direct relationship with the colloquial meaning of the word "fragility", which more closely relates to the brittleness of a material.Several fragility parameters have been introduced to characterise the fragility of liquids, including the Bruning–Sutton, Avramov and Doremus fragility parameters. The Bruning–Sutton fragility parameter m relies on the curvature or slope of the viscosity curves. The Avramov fragility parameter α is based on a Kohlraush-type formula of viscosity derived for glasses: strong liquids have α ≈ 1 whereas liquids with higher α values become more fragile. Doremus indicated that practically all melts deviate from the Arrhenius behaviour, e.g. the activation energy of viscosity changes from a high QH at low temperature to a low QL at high temperature. However asymptotically both at low and high temperatures the activation energy of viscosity becomes constant, e.g. independent of temperature. Changes that occur in the activation energy are unambiguously characterised by the ratio between the two values of activation energy at low and high temperatures, which Doremus suggested could be used as a fragility criterion: RD=QH/QL. The higher RD, the more fragile are the liquids, Doremus’ fragility ratios range from 1.33 for germania to 7.26 for diopside melts.

The Doremus’ criterion of fragility can be expressed in terms of thermodynamic parameters of the defects mediating viscous flow in the oxide melts: RD=1+Hd/Hm, where Hd is the enthalpy of formation and Hm is the enthalpy of motion of such defects. Hence the fragility of oxide melts is an intrinsic thermodynamic parameter of melts which can be determined unambiguously by experiment.

The fragility can also be expressed analytically in terms of physical parameters that are related to the interatomic or intermolecular interaction potential. It is given as function of a parameter which measures the steepness of the interatomic or intermolecular repulsion, and as a function of the thermal expansion coefficient of the liquid, which, instead, is related to the attractive part of the interatomic or intermolecular potential. The analysis of various systems (from Lennard-Jones model liquids to metal alloys) has evidenced that a steeper interatomic repulsion leads to more fragile liquids, or, conversely, that soft atoms make strong liquids.

Recent synchrotron radiation X-ray diffraction experiments showed a clear link between structure evolution of the supercooled liquid on cooling, for example, intensification of Ni-P and Cu-P peaks in the radial distribution function close to the glass-transition, and liquid fragility.

Physical implications

The physical origin of the non-Arrhenius behavior of fragile glass formers is an area of active investigation in glass physics. Advances over the last decade have linked this phenomenon with the presence of locally heterogeneous dynamics in fragile glass formers; i.e. the presence of distinct (if transient) slow and fast regions within the material. This effect has also been connected to the breakdown of the Stokes–Einstein relation between diffusion and viscosity in fragile liquids.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Offline

#1609 2022-12-24 00:11:23

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 53,394

Re: Miscellany

1512) Sunglasses

Sunglasses or sun glasses (informally called shades or sunnies; more names below) are a form of protective eyewear designed primarily to prevent bright sunlight and high-energy visible light from damaging or discomforting the eyes. They can sometimes also function as a visual aid, as variously termed spectacles or glasses exist, featuring lenses that are colored, polarized or darkened. In the early 20th century, they were also known as sun cheaters (cheaters then being an American slang term for glasses).

Since the 1930s, sunglasses have been a popular fashion accessory, especially on the beach.

The American Optometric Association recommends wearing sunglasses that block ultraviolet radiation (UV) whenever a person is in the sunlight to protect the eyes from UV and blue light, which can cause several serious eye problems. Their usage is mandatory immediately after some surgical procedures, such as LASIK, and recommended for a certain time period in dusty areas, when leaving the house and in front of a TV screen or computer monitor after LASEK. It is important to note that dark glasses that do not block UV radiation can be more damaging to the eyes than not wearing eye protection at all, since they tend to open the pupil and allow more UV rays into the eye.

(LASIK or Lasik (laser-assisted in situ keratomileusis), commonly referred to as laser eye surgery or laser vision correction, is a type of refractive surgery for the correction of myopia, hyperopia, and an actual cure for astigmatism, since it is in the cornea. LASIK surgery is performed by an ophthalmologist who uses a laser or microkeratome to reshape the eye's cornea in order to improve visual acuity. For most people, LASIK provides a long-lasting alternative to eyeglasses or contact lenses.

LASIK is very similar to another surgical corrective procedure, photorefractive keratectomy (PRK), and LASEK. All represent advances over radial keratotomy in the surgical treatment of refractive errors of vision. For patients with moderate to high myopia or thin corneas which cannot be treated with LASIK and PRK, the phakic intraocular lens is an alternative.

As of 2018, roughly 9.5 million Americans have had LASIK and, globally, between 1991 and 2016, more than 40 million procedures were performed. However, the procedure seemed to be a declining option as of 2015.)

Visual clarity and comfort

Sunglasses can improve visual comfort and visual clarity by protecting the eye from glare.

Various types of disposable sunglasses are dispensed to patients after receiving mydriatic eye drops during eye examinations.

The lenses of polarized sunglasses reduce glare reflected at some angles off shiny non-metallic surfaces, such as water. They allow wearers to see into water when only surface glare would otherwise be seen, and eliminate glare from a road surface when driving into the sun.

Protection

Sunglasses offer protection against excessive exposure to light, including its visible and invisible components.

The most widespread protection is against ultraviolet radiation, which can cause short-term and long-term ocular problems such as photokeratitis (snow blindness), cataracts, pterygium, and various forms of eye cancer. Medical experts advise the public on the importance of wearing sunglasses to protect the eyes from UV; for adequate protection, experts recommend sunglasses that reflect or filter out 99% or more of UVA and UVB light, with wavelengths up to 400 nm. Sunglasses that meet this requirement are often labeled as "UV400". This is slightly more protection than the widely used standard of the European Union, which requires that 95% of the radiation up to only 380 nm must be reflected or filtered out. Sunglasses are not sufficient to protect the eyes against permanent harm from looking directly at the Sun, even during a solar eclipse. Special eyewear known as solar viewers are required for direct viewing of the sun. This type of eyewear can filter out UV radiation harmful to the eyes.

More recently, high-energy visible light (HEV) has been implicated as a cause of age-related macular degeneration; before, debates had already existed as to whether "blue blocking" or amber tinted lenses may have a protective effect. Some manufacturers already design glasses to block blue light; the insurance company Suva, which covers most Swiss employees, asked eye experts around Charlotte Remé (ETH Zürich) to develop norms for blue blocking, leading to a recommended minimum of 95% of the blue light. Sunglasses are especially important for children, as their ocular lenses are thought to transmit far more HEV light than adults (lenses "yellow" with age).

There has been some speculation that sunglasses actually promote skin cancer. This is due to the eyes being tricked into producing less melanocyte-stimulating hormone in the body.

Assessing protection

The only way to assess the protection of sunglasses is to have the lenses measured, either by the manufacturer or by a properly equipped optician. Several standards for sunglasses allow a general classification of the UV protection (but not the blue light protection), and manufacturers often indicate simply that the sunglasses meet the requirements of a specific standard rather than publish the exact figures.

The only "visible" quality test for sunglasses is their fit. The lenses should fit close enough to the face that only very little "stray light" can reach the eye from their sides, or from above or below, but not so close that the eyelashes smear the lenses. To protect against "stray light" from the sides, the lenses should fit close enough to the temples and/or merge into broad temple arms or leather blinders.

It is not possible to "see" the protection that sunglasses offer. Dark lenses do not automatically filter out more harmful UV radiation and blue light than light lenses. Inadequate dark lenses are even more harmful than inadequate light lenses (or wearing no sunglasses at all) because they provoke the pupil to open wider. As a result, more unfiltered radiation enters the eye. Depending on the manufacturing technology, sufficiently protective lenses can block much or little light, resulting in dark or light lenses. The lens color is not a guarantee either. Lenses of various colors can offer sufficient (or insufficient) UV protection. Regarding blue light, the color gives at least a first indication: Blue blocking lenses are commonly yellow or brown, whereas blue or gray lenses cannot offer the necessary blue light protection. However, not every yellow or brown lens blocks sufficient blue light. In rare cases, lenses can filter out too much blue light (i.e., 100%), which affects color vision and can be dangerous in traffic when colored signals are not properly recognized.

High prices cannot guarantee sufficient protection as no correlation between high prices and increased UV protection has been demonstrated. A 1995 study reported that "Expensive brands and polarizing sunglasses do not guarantee optimal UVA protection." The Australian Competition & Consumer Commission has also reported that "consumers cannot rely on price as an indicator of quality". One survey even found that a $6.95 pair of generic glasses offered slightly better protection than expensive Salvatore Ferragamo shades.

Further functions

While non-tinted glasses are very rarely worn without the practical purpose of correcting eyesight or protecting one's eyes, sunglasses have become popular for several further reasons, and are sometimes worn even indoors or at night.

Sunglasses can be worn to hide one's eyes. They can make eye contact impossible, which can be intimidating to those not wearing sunglasses; the avoided eye contact can also demonstrate the wearer's detachment, which is considered desirable (or "cool") in some circles. Eye contact can be avoided even more effectively by using mirrored sunglasses. Sunglasses can also be used to hide emotions; this can range from hiding blinking to hiding weeping and its resulting red eyes. In all cases, hiding one's eyes has implications for nonverbal communication; this is useful in poker, and many professional poker players wear heavily tinted glasses indoors while playing, so that it is more difficult for opponents to read tells which involve eye movement and thus gain an advantage.

Fashion trends can be another reason for wearing sunglasses, particularly designer sunglasses from high-end fashion brands. Sunglasses of particular shapes may be in vogue as a fashion accessory. The relevance of sunglasses within the fashion industry has included prominent fashion editors' reviews of annual trends in sunglasses as well as runway fashion shows featuring sunglasses as a primary or secondary component of a look. Fashion trends can also draw on the "cool" image of sunglasses and association with a particular lifestyle, especially the close connection between sunglasses and beach life. In some cases, this connection serves as the core concept behind an entire brand.

People may also wear sunglasses to hide an abnormal appearance of their eyes. This can be true for people with severe visual impairment, such as the blind, who may wear sunglasses to avoid making others uncomfortable. The assumption is that it may be more comfortable for another person not to see the hidden eyes rather than see abnormal eyes or eyes which seem to look in the wrong direction. People may also wear sunglasses to hide dilated or contracted pupils, bloodshot eyes due to drug use, chronic dark circles or crow's feet, recent physical abuse (such as a black eye), exophthalmos (bulging eyes), a cataract, or eyes which jerk uncontrollably (nystagmus).

Lawbreakers have been known to wear sunglasses during or after committing a crime as an aid to hiding their identities.

Land vehicle driving

When driving a vehicle, particularly at high speed, dazzling glare caused by a low Sun, or by lights reflecting off snow, puddles, other vehicles, or even the front of the vehicle, can be lethal. Sunglasses can protect against glare when driving. Two criteria must be met: vision must be clear, and the glasses must let sufficient light get to the eyes for the driving conditions. General-purpose sunglasses may be too dark, or otherwise unsuitable for driving.

The Automobile Association and the Federation of Manufacturing Opticians have produced guidance for selection of sunglasses for driving. Variable tint or photochromic lenses increase their optical density when exposed to UV light, reverting to their clear state when the UV brightness decreases. Car windscreens filter out UV light, slowing and limiting the reaction of the lenses and making them unsuitable for driving as they could become too dark or too light for the conditions. Some manufacturers produce special photochromic lenses that adapt to the varying light conditions when driving.

Lenses of fixed tint are graded according to the optical density of the tint; in the UK sunglasses must be labelled and show the filter category number. Lenses with light transmission less than 75% are unsuitable for night driving, and lenses with light transmission less than 8% (category 4) are unsuitable for driving at any time; they should by UK law be labelled 'Not suitable for driving and road use'. Yellow tinted lenses are also not recommended for night use. Due to the light levels within the car, filter category 2 lenses which transmit between 18% and 43% of light are recommended for daytime driving. Polarised lenses normally have a fixed tint, and can reduce reflected glare more than non-polarised lenses of the same density, particularly on wet roads.

Graduated lenses, with the bottom part lighter than the top, can make it easier to see the controls within the car. All sunglasses should be marked as meeting the standard for the region where sold. An anti-reflection coating is recommended, and a hard coating to protect the lenses from scratches. Sunglasses with deep side arms can block side, or peripheral, vision and are not recommended for driving.

Even though some of these glasses are proven good enough for driving at night, it is strongly recommended not to do so, due to the changes in a wide variety of light intensities, especially while using yellow tinted protection glasses. The main purpose of these glasses are to protect the wearer from dust and smog particles entering into the eyes while driving at high speeds.

Aircraft piloting